Abstract

High dexterity is required in tasks that involve contact between objects, such as surface conditioning (wiping, polishing, scuffing, sanding, etc.), especially when the location of the objects involved is unknown or highly inaccurate because they are moving, like a car body on an automotive production line. These applications require both human adaptability and robot accuracy. However, sharing the same workspace is not possible in most cases due to safety issues. Hence, a multi-modal teleoperation system combining haptics and an inertial motion capture system is introduced in this work. The human operator gets the sense of touch thanks to haptic feedback, whereas the motion capture device allows more natural movements. Visual feedback assistance is also introduced to enhance immersion. A Baxter dual-arm robot is used to offer more flexibility and manoeuvrability, allowing two independent operations to be performed simultaneously. Several tests have been carried out to assess the proposed system. As shown by the experimental results, the task duration is reduced and the overall performance improves thanks to the proposed teleoperation method.

Similar content being viewed by others

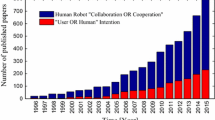

1 Introduction

Robotic manipulators are traditionally used to execute fixed and repetitive tasks [1]. However, some tasks are difficult to program beforehand, because they require fine dexterity in manipulation, are context dependent or change over time.

To overcome these challenges, a human agent may be used to perform the task via operation of the robotic arm. This can be used to cope with a few non-ordinary jobs that need to be executed by the robot. Alternatively, and if the appropriate sensors are in place, the human operations may be recorded and used as demonstrations to teach the robot how to automate the task execution.

Because of the redundant degrees of freedom of most robotic arms and the difficulty in precisely controlling the end-effector’s pose over time, a common approach for human operation of robotic manipulators is to physically hold the arm and move it in the desired way. This is usually facilitated by a “zero-gravity mode”, in which, once the effects of gravity are compensated, the arm offers no resistance to externally applied forces.

However, this direct manipulation of the robotic arm is not always an option. Situations including hazardous or inaccessible environments, robots with overly large workspaces, ergonomic issues, and difficulties with dual-arm simultaneous movement may render physical manipulation impractical or even impossible to the human operator. In these cases, robot teleoperation is a viable alternative [2]. Sensors placed on the robot or near it are used to give the human operator a correct understanding of the working scene and available actions. Dedicated interfaces are then used to remotely control the robot and achieve the desired goals.

Within the area of teleoperation, many applications pose a significant challenge for the human operator, and can potentially benefit from the use of multimodal interfaces. In [3], for example, force feedback cues were employed to assist the teleoperation control of multiple unmanned aerial vehicles. In [4], when controlling a telepresence robot, the operator observes the remote scene through a video feed, but is also aided by haptic feedback applied on their feet. [5] shows how touch and tangible interfaces could be used to facilitate the remote control of robots in hazardous situations. And [6] showed how multimodal interfaces based on gaze estimation, SLAM, and haptic feedback can be integrated to help a remote assistant infer the intention of a wheelchair driver.

Surface treatment is one area where multimodal interfaces can prove beneficial. Tasks such as wiping, polishing, sanding, and scuffing require a detailed representation of the working surface, precise movements and control of the interaction force. To satisfy all these requirements, a dedicated teleoperation platform needs to be configured. To this end, this work proposes a teleoperation system that integrates different modes of actuation, combining motion capture with haptic and visual feedback to enhance the remote control of a dual-arm robot with two redundant 7R robotic manipulators, offering a highly flexible and manoeuvrable robotic cell. This setup allows operations to be performed more quickly, as the human operator is able to use both arms simultaneously. Moreover, in order to validate the proposed system, several experiments have been conducted with multiple subjects performing a surface-conditioning test task: erasing a hand-held whiteboard. Several benchmark metrics are analysed, and the results indicate that the use of combined modalities improved the performance on the task, as measured by time to complete the task, accuracy of movements and erasing coverage.

This paper is organised as follows: Sect. 2 discusses previous related works on the field, including the topics of teleoperation, haptics, general applications and surface conditioning. Section 3 introduces some preliminaries about robot kinematics, dynamics and control. Section 4 defines materials and methods, and introduces the proposed multimodal teleoperation system to remotely control a dual-arm collaborative robot. In Sect. 5, the multimodal human–robot interfaces used in the proposed teleoperation architecture are explained. Section 6 describes our experimental methodology, including the tests implemented and the metrics used, whilst Sect. 7 discusses the results obtained from these experiments. Finally, the main conclusions of the paper and further work are presented in Sect. 8.

2 Related works

2.1 Teleoperation and interfaces

The main reasons to operate robotic systems remotely are: safety, when dangerous tasks or the manipulation of hazardous materials prevent the user and the robot from sharing the same workspace [7,8,9]; ergonomic issues [10, 11]; and situations in which access to the working scenario is impossible or hard for the human operator [12, 13]. Robot teleoperation can also be useful, for instance, in situations where the robot is performing a task autonomously but the operator takes control of it, either to show the robot how to do it properly or to perform it manually [14]. Indeed, teleoperation has been used extensively in several applications, such as: remote surgery [10]; spacecraft manipulation and landing [12]; remotely operated vehicles (ROVs), especially drones [3, 9, 15] and underwater vehicles [13]; and remote control of industrial machinery, particularly in hazardous environments [7, 8], among others.

Some remotely controlled robots have supervised autonomy, where the operator only gives instructions on what the next step is and the remote machine performs the task semi-autonomously [16]. In these cases, there is no real-time interaction between the local and the remote workspaces. But in telepresence, a more sophisticated form of teleoperation, the human operator has a sense of being in the remote location [9, 10]. For example, a remotely controlled system can be equipped with sensors that capture images, sounds, or tactile sensations (e.g. pressure, texture, temperature). Then, specialised transducers can reproduce this information to the human operator, so that the experience resembles virtual reality (VR). Additionally, in telerobotics [17] users are given the ability to affect the remote location. In this case, the user’s position, movements, actions, voice, etc. may be sensed, transmitted and duplicated by the robot in the remote location to bring about this effect. The form in which the human’s actions are registered can have an important impact on the overall operation and is usually application dependent.

2.1.1 Motion capture

Many options are available for capturing the motion commands of the teleoperator, the most common one being the use of an articulated joystick [18]. This kind of interface has the advantage of accurately measuring the end-effector’s pose which can then be sent as a goal to the robot. Alternatively, if enough degrees of freedom are available, the joystick joints can directly be mapped to the robot’s joints.

The main disadvantage of using joysticks for telemanipulation is constraining the operator to a fixed location and a potentially reduced workspace. As an alternative, it is possible to capture the natural arm movements of the teleoperator. This pose estimation can be done using video [19], depth cameras [20], Inertial Measurement Units (IMUs) attached to links in the body of the operator [21, 22], or a combination of these [23]. The human’s pose can then be used to control the robot through forward or inverse kinematics [24, 25]. If more accuracy is needed in positioning the robot’s end effector, infrared reflections may be used to track the pose of markers attached to the operator’s body or of objects that they hold in their hands. An example of this is the use of Virtual Reality joysticks for teleoperation [26, 27].

2.1.2 Haptics

Haptics is “the science and technology of experiencing and creating touch sensations in human operators” [28]. This kind of technology is gaining widespread acceptance as a key part of virtual reality systems, adding the sense of touch to previously visual-only interfaces [29]. The majority of consumer electronics offering haptic feedback use vibrations. The intensity of the shaking and also the pattern of vibration (continuous, intermittent, increasing, etc.) is what is normally used to give extra information to the user. However, this kind of haptic feedback can only convey a limited amount of information, so there are applications where it might be difficult for the user to understand the meaning of such feedback. To overcome this issue, more advanced haptic devices use electromechanic systems to interact with the human operator through force-feedback [30, 31]. These are commonly used in video games simulating automobile driving or aircraft piloting. In these cases, forces are applied to the steering wheel [32] or to the joystick [33], to simulate the sensations experienced on real vehicles.

Haptic feedback can also be used in upper limb motor therapy to improve performance and lower the mental workload [34]. Force feedback has also been used to warn drivers of a potential risk in advanced driver assistance systems. To avoid collisions, a haptic device can be added either in the pedals [35] or in both pedals and steering wheel [36, 37] to interact with the driver.

Thanks to their characteristics, haptic devices have become an important alternative for medical training, adding a tactile sense to virtual surgeries [38]. Previous articles in the field state that haptic devices enhance the learning of surgeons compared to current training environments used in medical schools (corpses, animals, or synthetic skin and organs) [39]. Consequently, virtual environments use haptic devices to improve realism. For instance, haptic interfaces for medical simulation are being developed for training in minimally invasive procedures such as stitching, palpation, laparoscopy and interventional radiology, and for training dental students [40, 41]. Haptic technology has also enabled expert surgeons to operate on patients remotely. When making an incision during telepresence surgery, the surgeon feels a resisting force, as if working locally on the patient [10].

Regarding teleoperation for manipulation tasks, force feedback can be obtained using either grounded [42] or wearable [43] devices. Grounded devices, those which have a fixed base attached to a world frame, are capable of naturally conveying the direction and intensity of force feedback, but limit the operator’s range of motion. Wearable devices, on the other hand, do not limit the operator’s workspace or their agility. But, not being grounded to a fixed frame, these devices require an alternative way to map forces measured on the robot to the haptic feeling presented to the operator. In [43], for example, normal forces measured on the robot’s end effector are translated to squeezing motions on the wearable device.

2.2 Dual-arm manipulation

Most surface treatment applications assume that the object is still and the robot is moving the tool [44], or the other way around [45]. Notwithstanding, certain applications require movement from both object and tool, so that the surface treatment can be applied to all sides of the object. In these cases, dual-arm manipulation [46] is usually more appropriate to perform the task. Despite the increased manipulability stemming from the use of two robotic arms, this scenario also poses additional challenges, since both object and tool are moving while in contact. Surface friction and slippage are likely to impose stronger effects, leading to higher inaccuracies in both perception and positioning, thus making automatic control more difficult [47].

Although these are significant challenges for automatic control, human operators are naturally able to perceive when trajectories need to be replanned and to execute the new plan. This leads to the field of bimanual robot teleoperation [48], which in itself presents the challenge of how to allow the human to intuitively operate the robot’s arms from a remote location [49]. To this end, many different types of interfaces have been used before, such as motion capture, pose-tracking, articulated joysticks, etc. However, successful dual-arm manipulation for surface treatment applications requires the teleoperation platform to present a combination of features: dexterity, agility, a sufficiently large workspace and touch/force perception. While many of the interfaces traditionally used can convey one or some of these desired characteristics, we are not aware of any that could achieve all of them.

2.3 Contact-driven manipulation

A typical robot assembly operation involves contact with the parts of the product to be assembled and consequently requires knowledge of not only the position and orientation trajectories but also the accompanying force-torque profiles for successful performance. Furthermore, position uncertainty is inevitable in many force-guided robotic assembly tasks. Such uncertainty can cause a significant delay and extra energy expenditure, and may even result in damage to the mated parts or the robot itself. In [50], the authors suggested a strategy for identifying the accurate hole position in force-guided peg-in-hole tasks by observing only the forces and torques on the robotic manipulator. An Expectation Maximisation-based Gaussian Mixtures Model is employed to estimate the Contact-State expected when the peg matches the hole position. The assembly process starts from free space and, as soon as the peg touches the target surface but misses the hole, a spiral search path is followed to survey the entire surface. When the estimated Contact-State of the peg-on-hole is detected, the hole position is identified.

In [51], the authors proposed a guidance algorithm for fitting complex-shaped parts in peg-in-hole tasks. This guidance algorithm is inspired by the study of human motion patterns; that is, the assembly direction selection process and the maximum force threshold are determined through the observation of humans performing similar actions. In order to carry out assembly tasks, an assembly direction is chosen using the spatial arrangement and geometric information of complex-shaped parts, and the required force is decided by kinaesthetic teaching with a Gaussian mixture model. The performance of the proposed assembly strategy was evaluated by experiments using arbitrarily complex-shaped parts with different initial situations.

To learn the execution of assembly operations when even the geometry of the product varies across task executions, the robot needs to be able to adapt its motion based on a parametric description of the current task condition, which is usually provided by geometrical properties of the parts involved in the assembly. In [52], the authors proposed a complete methodology to generalise positional and orientational trajectories and the accompanying force-torque profiles to compute the necessary control policy for a given condition of the assembly task. The method is based on statistical generalisation of successfully recorded executions at different task conditions, which are acquired by kinaesthetic guiding.

Surface conditioning tasks (polishing, sanding, scuffing, deburring, profiling, etc.) can be even harder for a robot, as they require good accuracy and adaptability to variable requirements of position, orientation and pressure. Additional task-specific constraints can also be present, such as keeping the tool normal to the surface and holding the pressure within bounds in order to improve the overall performance and surface finishing quality. To cope with these challenges, several works invested in developing dedicated low-level control techniques. Some authors have developed hybrid position/force control algorithms for robotic manipulation. For instance, in [53] an adaptive position/force control was designed for robot manipulators in contact with rigid surface with uncertain parameters, whilst in [54] robot manipulators were in contact with flexible environments. A different approach is the development of sliding mode control (SMC) algorithms for sanding or polishing tasks, which allow the tool to move along a surface while keeping constant pressure [44]. A system where a human and a robot have to cooperate for robust surface treatment using non-conventional SMC was developed in [55]. The authors of [56] designed and implemented an adaptive fuzzy SMC to allow robotic arms to manipulate objects in uncertain environments. Admittance control has also been used for contact-driven robotic surface conditioning [57].

Fig. 1 Two different methods to perform surface conditioning tasks (see Video 1 https://media.upv.es/player/?id=a1a83cc0-33c6-11ea-8310-f5d74c1b22b6 for the complete demo)

On the other hand, some works sought to mechanically solve the problem of potential inaccuracies by designing polishing tools that are able to absorb mechanical vibrations, thus easing the contact between surfaces [58]. The authors of [59] developed a smart end-effector for robotic polishing which granted improved force control and vibration suppression, whereas in [60] an electrochemical mechanical polishing end-effector for robotic polishing applications was manufactured using a synergistic integrated design.

3 Preliminaries

3.1 Robot kinematics

A robotic manipulator is a kinematic chain of links and joints, where the joint configuration \({\mathbf {q}}=[q_1,\ldots ,q_n]\) determines the pose of its end-effector \({\mathbf {p}}=[x,y,z,\alpha ,\beta ,\gamma ]^T\) (where the orientation is defined in Z-Y-X Euler angles: yaw \(\alpha \), pitch \(\beta \), roll \(\gamma \)). Note that the pose \({\mathbf {p}}\) of the robot’s end-effector can be readily computed from the robot configuration \({\mathbf {q}}\) using, for instance, the well-known Denavit-Hartenberg (DH) convention; see [61] for further details.
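As an illustration of how the pose can be obtained from the joint configuration, the following minimal sketch chains standard DH link transforms and extracts the Z-Y-X Euler angles from the result. It is not the implementation used in this work: the function names and the DH table passed to `forward_kinematics` are hypothetical, and Baxter's actual DH parameters are not reproduced here.

```python
import math

def dh_transform(theta, d, a, alpha):
    """Homogeneous transform of one link (standard DH convention)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]

def matmul4(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def forward_kinematics(q, dh_rows):
    """Chain the link transforms; dh_rows holds (d, a, alpha) per joint."""
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for theta, (d, a, alpha) in zip(q, dh_rows):
        T = matmul4(T, dh_transform(theta, d, a, alpha))
    return T

def pose_from_transform(T):
    """Return [x, y, z, yaw, pitch, roll] using Z-Y-X Euler angles."""
    yaw = math.atan2(T[1][0], T[0][0])
    pitch = math.atan2(-T[2][0], math.hypot(T[0][0], T[1][0]))
    roll = math.atan2(T[2][1], T[2][2])
    return [T[0][3], T[1][3], T[2][3], yaw, pitch, roll]
```

For example, calling `forward_kinematics` with a two-row table of unit-length planar links and then `pose_from_transform` on the result gives the tip position and heading of a planar two-link arm.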

In industry, point-to-point control is widespread, for instance in pick and place applications. In such cases, an a priori computation of the robot forward and inverse kinematics might be enough to solve the problem, because the point-to-point trajectory is computed once (either in the joint space or in the Cartesian space) and, theoretically, there is no risk of collision. However, in human–robot collaborative tasks, where human and robot interact directly by sharing the same workspace, it might be useful to solve the robot forward or inverse kinematics problem dynamically. In such a case, for a serial-chain manipulator, given the positions and the rates of motion of all the joints, the goal is to compute the velocity (and acceleration) of the robot’s end-effector. Therefore, using the robot’s kinematic model and its derivatives, the velocities (and accelerations) of the robot’s end-effector can be computed from the velocities (and accelerations) of the joints, or the other way around [61]. The robot controller guarantees that a proper input torque \({\varvec{\tau }}\) is applied to the joints, so that a particular desired reference (position, velocity and acceleration) can be followed accurately with negligible errors.
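The relation between joint and end-effector velocities described above can be written compactly with the Jacobian matrix \({\mathbf {J}}\) of the manipulator. The expressions below are the standard textbook form, given as a reminder and not as the specific formulation implemented in this work. The pseudo-inverse \({\mathbf {J}}^{\dagger }\) is one common choice for redundant arms and is an assumption here; \({\mathbf {J}}\) should be read as the analytical Jacobian when \({\dot{\mathbf {p}}}\) contains Euler-angle rates.

\[
{\dot{\mathbf {p}}} = {\mathbf {J}}({\mathbf {q}})\,{\dot{\mathbf {q}}}, \qquad
{\ddot{\mathbf {p}}} = {\mathbf {J}}({\mathbf {q}})\,{\ddot{\mathbf {q}}} + {\dot{\mathbf {J}}}({\mathbf {q}},{\dot{\mathbf {q}}})\,{\dot{\mathbf {q}}}, \qquad
{\dot{\mathbf {q}}} = {\mathbf {J}}^{\dagger }({\mathbf {q}})\,{\dot{\mathbf {p}}}
\]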

3.2 Impedance control

Impedance control is a dynamic control approach that relates force and position [62]. It is often used in applications where not only the position of a manipulator is of concern but also the force it applies. Mechanical impedance is the ratio between force output and motion input. The impedance control of a mechanism means controlling the resistance force of the mechanism to external motions imposed by the environment.

Fig. 2 Baxter’s dual-arm teleoperation scheme, using haptics to control the right arm and mocap-based control for the left arm. A GUI is used as visual feedback, whereas an F/T sensor takes measurements for the force feedback assistance. \({\mathbf {J}}\) is the so-called Jacobian matrix of the robot [61], which defines the kinematic model and relates velocities between joint and task spaces

Fig. 3 Baxter’s dual-arm teleoperation for the whiteboard wiping task, using two different combinations of control inputs (see Video 2 https://media.upv.es/player/?id=b7f9d220-33c8-11ea-8310-f5d74c1b22b6 for more details)

Impedance control has been extensively applied to human–robot interaction due to all its benefits, such as the ability to modulate impact forces, acting as a mass-spring-damper system. For instance, a guidance algorithm based on an impedance controller was implemented in [51] to achieve stable contact motion for a position control-based industrial robot to solve a peg-in-hole task with complex-shaped parts. On the other hand, a novel impedance control for haptic teleoperation was introduced in [63], whereas the authors of [64] developed an adaptive impedance control to improve transparency under time-delay.
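To make the mass-spring-damper analogy concrete, the sketch below integrates a one-dimensional impedance relation, \(M\ddot{e} + D\dot{e} + Ke = f_{ext}\), where \(e\) is the deviation from the reference position. It is only an illustrative model: the function name, the parameter values and the integration scheme are assumptions and do not come from the controllers cited above.

```python
def simulate_impedance(mass, damping, stiffness, f_ext, dt=0.001, steps=5000):
    """Integrate M*e'' + D*e' + K*e = f_ext and return the final deviation e.

    e is the deviation from the reference position caused by a constant
    external force f_ext. All values are illustrative, in SI units.
    """
    e, v = 0.0, 0.0
    for _ in range(steps):
        a = (f_ext - damping * v - stiffness * e) / mass
        v += a * dt  # semi-implicit Euler: update the velocity first
        e += v * dt  # then the deviation, using the new velocity
    return e
```

With a constant external force the deviation settles at `f_ext / stiffness`, so lowering the stiffness makes the simulated arm more compliant, while the damping term shapes how quickly it settles.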

4 Methodology

4.1 Multimodal teleoperation

The task tackled in this work consists of wiping a whiteboard with an eraser, although other surface conditioning and manipulation tasks could be also carried out using the same architecture proposed in this paper. Surface conditioning can be very diverse in nature, from just wiping or polishing the surface of an object, which does not require subtracting material, to sanding or deburring tasks, where the tool modifies the structure of the object treated. However, all of them share the same principles: two surfaces in contact (tool and object) where applied force and angle of attack must be kept within certain limits.

Assuming that doing the task autonomously is not an option due to the difficulty of programming a trajectory when the object is moving and its location is unknown or inaccurate, these and other similar tasks are usually done either manually by the human worker, or using kinaesthetic manipulation with the robot in zero gravity mode. Figure 1 shows examples of the same surface treatment task done using these two different methods.

The manual option is not always possible: carrying out the task might be hard for the human operator due to the manipulation of heavy objects, dangerous because of the use of chemical and toxic products, or even forbidden when operating with sharp tools or in environments with hazardous materials. Regarding the second method, kinaesthetic manipulation is affected by the same issues as the manual option, plus the fact that manipulating a robot, even in zero gravity mode, can be really uncomfortable and is not recommended from an ergonomic point of view. Besides, the time spent to complete the task can be extremely long in some situations.

For that reason, as an alternative to the previous approaches, the multimodal teleoperation scheme shown in Fig. 2 is proposed in this work, where the operator does not even need to be in the same workspace as the robot. To avoid manual or kinaesthetic manipulation, the proposal consists of remotely operating the dual-arm robot by combining two different control methods: an inertial motion capture system (abbreviated as mocap) and a haptic device with force-feedback (denoted as haptic henceforward). Figure 3 shows the two combinations of teleoperation inputs, where motion capture is used for the left arm, whilst for the right arm both mocap-based and haptic-based teleoperation methods are considered. The initial hypothesis of this work, which has to be verified experimentally, is that the use of haptics with force-feedback assistance will improve the performance of the dual-arm teleoperation compared to remote control using only inertial motion capture systems. So, the goal is to compare the conditions shown in Fig. 3, i.e., both arms with inertial motion capture teleoperation (a) against left arm with mocap and right arm with haptic teleoperation (b).

4.2 Dual-arm manipulator

Baxter, a dual-arm collaborative robot, was used for the experimentation conducted in this work. Each arm has 7 degrees of freedom, corresponding to two redundant kinematic chains with 7 rotational joints. Figure 4 shows the local frames and joints associated with both of Baxter’s arms, as well as the local coordinate system \({\mathbf {T}}_{0}\), the whiteboard’s frame \({\mathbf {T}}_{Wb}\) and the eraser’s frame \({\mathbf {T}}_{Er}\). Blue arrows in the frames of Fig. 4 represent the rotational joints, which rotate about the z-axis of their local frames. Smoother and more accurate manoeuvres with singularity-free movements can be produced thanks to this joint configuration [65].

The robot has its own computer, running an Ubuntu 14.04 distribution, to perform low-level control of both arms. For communications and data visualisation, the Indigo version of the Robot Operating System (ROS) is installed. Baxter’s local computer can also take measurements from all the sensors mounted on the robot, such as cameras, accelerometers, ultrasound sensors, etc. The position and orientation of both arms can be easily obtained from the robot forward kinematics, since the angular position, velocity and effort are measured for each joint.

A laptop with Xubuntu 16.04 as OS and ROS Kinetic distribution is used as external computer to control the Baxter robot. This remote computer uses the inputs from the haptic device and the motion capture system for the teleoperation. This computer also processes and streams all the video feeds in the Graphical User Interface (GUI). It can also be used as a data-logger to record all the signals published in the network.

In order to perform the wiping task, two different tools are attached to the end-effectors of both arms. On the one hand, a 6-axis force-torque sensor (F/T sensor) is mounted on the right arm, aligned with an eraser, as shown in Fig. 5a. Using this design, when the whiteboard’s surface is being cleaned by the eraser, the applied force can be measured by the F/T sensor and used to provide feedback through the haptic device and assist the remote operator (further details about the force-feedback aid are given in Sect. 5.2).

On the other hand, a magnetic whiteboard is used as a tool for the left arm, see Fig. 5b. Seven neodymium magnets are used to keep the whiteboard attached to the robotic manipulator during the wiping task, but it can detach and fall when a torque above 2 Nm is applied to it (which approximately corresponds to a force of 20 N applied on the edges, perpendicular to the whiteboard’s surface). The goal is to emulate delicate surfaces that cannot support too much pressure, as they would deform or even break into pieces. Hence, the developed tool increases the difficulty of the task and gives some feedback about the performance. As depicted in Fig. 5b, a zig-zag pattern is painted on the whiteboard’s surface, centred and covering approximately a quarter of the overall area. The goal is to use the eraser to clean the drawing on the whiteboard’s surface.
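As a quick consistency check on the detachment threshold quoted above, and assuming the force acts at a single point perpendicular to the surface, the two values imply an effective lever arm of about 0.1 m between the magnetic mount and the whiteboard edge. This distance is inferred from the quoted torque and force only; it is not a dimension stated in the text.

\[
\tau = F\,r \quad \Rightarrow \quad r = \frac{\tau }{F} \approx \frac{2~\text {Nm}}{20~\text {N}} = 0.1~\text {m}
\]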

5 Multimodal human–robot interfaces

5.1 Kinaesthetic (contactless)

To remotely operate the robot using kinaesthetics with no contact, an inertial motion capture system is used. Notice that this contactless control method differs from the one introduced in Fig. 1b, in which there is physical contact between the human and the robot system, because the human operator moves the robotic manipulator by grabbing its arms in gravity compensation control mode.

An Xsens MTw Awinda motion capture system with 8 wireless inertial measurement units (IMUs) is used to send information about the human operator’s posture. Figure 6 shows the IMUs of the mocap system placed on the human upper body. As observed in Fig. 7, there are two symmetric kinematic chains, left (L) and right (R), composed of 4 links and 3 joints each: the links are back (B), L/R arm (A), L/R forearm (F) and L/R hand (H), whereas the joints are placed in the shoulders, elbows and wrists of both arms. All these joints are considered spherical, so they have three degrees of freedom each, i.e., three rotations. Therefore, each kinematic chain has a total of 9 degrees of freedom (DoF).

The head IMU is not used in this study, whilst the back IMU is used as a reference for the remaining IMUs located on both arms, so that rotations and translations of each link are related to this reference frame \({\mathbf {T}}_B\) (see Fig. 7). The orientation of each section of the upper limbs (arm, forearm, hand) is determined by the IMU attached to that section. The global orientation given by each IMU is converted to a local orientation with respect to the frame of the previous link.
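The conversion from global to local orientation can be sketched as follows, assuming each IMU orientation is available as a 3x3 rotation matrix expressed in a common global frame. The helper names are hypothetical, and the actual mocap software may work with quaternions instead.

```python
import math

def rot_z(angle):
    """Rotation matrix about the z-axis (used here only to build examples)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def transpose(R):
    """Transpose of a 3x3 matrix; for a rotation this is also its inverse."""
    return [list(row) for row in zip(*R)]

def matmul3(A, B):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def local_orientation(R_parent_global, R_child_global):
    """Orientation of the child link expressed in the parent link frame."""
    return matmul3(transpose(R_parent_global), R_child_global)
```

For instance, if the back IMU reads a 30 degree rotation about z and the arm IMU reads 75 degrees about the same axis, the local shoulder rotation obtained this way is 45 degrees about z.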

In Fig. 7, the location of the human joints and their related frames is shown, as well as the parameters to define the kinematic model of each arm. Parameters \(h_S\) and \(l_S\) refer to shoulder height and width measured from the back reference frame, respectively. Parameters \(l_A\) and \(l_F\) represent the length of arm and forearm, respectively, measured from joint to joint. Finally, \(l_H\) is the distance from the wrist joint to the centre of the hand palm, whose coordinate frame is \({\mathbf {T}}_H^R\) for the right hand and \({\mathbf {T}}_H^L\) for the left hand. It is assumed that humans are symmetric so that both upper limbs have the same length and range of movements.

Parameters must be measured for each human operator in order to calibrate the system and have accurate kinematic models of their upper body. Assuming that all these parameters are known, it is possible to compute the position and orientation of left and right hands using standard methods for the computation of robot forward kinematics [61].

In the notation used for the resulting homogeneous transforms, superscripts on the right refer to the arm side (left/right), superscripts on the left represent the reference frame (or parent) of the local transform, and subscripts on the right represent the target frame (or child) of the local transform.
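The following sketch shows one way the hand pose could be computed from the parameters \(h_S\), \(l_S\), \(l_A\), \(l_F\) and \(l_H\) and the local joint rotations. The axis convention is an assumption made for illustration only (back frame with x forward, y to the left and z up, and each limb segment pointing along the negative z-axis of its own frame when the arm hangs down); the function names are hypothetical and this is not the authors' implementation.

```python
import math

def matmul3(A, B):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def matvec3(R, v):
    """Product of a 3x3 matrix and a 3-vector."""
    return [sum(R[i][k] * v[k] for k in range(3)) for i in range(3)]

def rot_y(angle):
    """Rotation matrix about the y-axis (used here only to build examples)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def hand_pose(side, R_A, R_F, R_H, h_S, l_S, l_A, l_F, l_H):
    """Return (position, orientation) of the hand frame in the back frame.

    R_A, R_F, R_H are the local rotations of the shoulder, elbow and wrist.
    side is "L" or "R"; the left shoulder is assumed to lie on the +y side.
    """
    sign = 1.0 if side == "L" else -1.0
    p = [0.0, sign * l_S, h_S]  # shoulder joint position
    R = R_A                     # orientation of the upper arm
    for R_next, length in ((R_F, l_A), (R_H, l_F), (None, l_H)):
        step = matvec3(R, [0.0, 0.0, -length])  # move along the segment
        p = [p[i] + step[i] for i in range(3)]
        if R_next is not None:
            R = matmul3(R, R_next)  # accumulate the next joint rotation
    return p, R
```

With all joint rotations set to the identity the hand ends up directly below the shoulder, and flexing only the elbow by 90 degrees swings the forearm and hand forward, which is a quick way to sanity-check the chosen sign conventions.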

These configurations in the local workspace are mapped to the remote workspace and used as a reference posture for the end-effectors of both robotic arms. In this particular case the scaling used is unitary, because this way the movements performed by the human operator are more realistic and natural.

To remotely control the dual-arm robot the forward kinematic model of the human operator is used to compute the reference position and orientation. Then, PID controllers are used to close the loop (see [66] for further details) in order to set the linear and angular commanded velocities \({\dot{\mathbf {p}}}^L_c\) in the task space. Then, the robot kinematics is used to compute the commanded angular velocities \({\dot{\mathbf {q}}}^L_c\) in joint space, so that the robotic manipulator follows the reference trying to reduce the tracking error \({\varvec{e}}^L_p\), as depicted in Fig. 2.
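The control loop described above can be sketched on a much simpler system. The example below uses a planar two-link arm as a stand-in for one of Baxter's 7-DoF arms: a PID controller per task-space axis produces the commanded velocity, and the inverse of the Jacobian converts it into joint velocities. The link lengths, gains and function names are illustrative assumptions. A redundant arm would need a pseudo-inverse in place of the 2x2 inverse, and singular configurations are not handled.

```python
import math

L1, L2 = 0.4, 0.3  # hypothetical link lengths of a planar 2R arm [m]

def fk(q):
    """End-effector position (x, y) of the planar arm."""
    return (L1 * math.cos(q[0]) + L2 * math.cos(q[0] + q[1]),
            L1 * math.sin(q[0]) + L2 * math.sin(q[0] + q[1]))

def jacobian(q):
    """2x2 Jacobian relating joint velocities to task-space velocities."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return [[-L1 * s1 - L2 * s12, -L2 * s12],
            [L1 * c1 + L2 * c12, L2 * c12]]

class PID:
    """Textbook discrete PID acting on a scalar error."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # avoid a derivative kick on the first sample
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

def track(q, p_ref, dt=0.01, steps=500):
    """Drive the arm towards p_ref and return the final joint angles."""
    q = list(q)
    pids = [PID(6.0, 9.0, 0.05), PID(6.0, 9.0, 0.05)]  # illustrative gains
    for _ in range(steps):
        p = fk(q)
        # Commanded task-space velocity from the tracking error
        v = [pid.update(r - x, dt) for pid, r, x in zip(pids, p_ref, p)]
        (a, b), (c, d) = jacobian(q)
        det = a * d - b * c  # zero at a singularity; not handled here
        # Commanded joint velocity: inverse Jacobian times task velocity
        q_dot = [(d * v[0] - b * v[1]) / det, (-c * v[0] + a * v[1]) / det]
        q = [qi + qdi * dt for qi, qdi in zip(q, q_dot)]
    return q
```

Calling `track([0.3, 1.2], (0.35, 0.35))` moves the simulated end-effector to the reference point; in the real system the reference would instead be updated continuously from the operator's hand pose.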

Finally, note that for the robotic manipulator holding the whiteboard (left arm in the proposed setup) the mocap based teleoperation is the only one tested in the experiments, as explained in Sect. 4.1. However, for the arm with the eraser (right arm) mocap teleoperation is compared against haptic teleoperation in order to assess which one is better.

5.2 Haptic

A Phantom Omni haptic device [30] is used as an alternative for the teleoperation of the robot’s right hand. The system is a small robotic arm with 6 rotational joints in a standard configuration: hip-shoulder-elbow and in-line wrist. This configuration allows 6 DoF (3 positions and 3 orientations), as can be seen in Fig. 8. The first three joints (\(J1\!-\!J3\)) position the wrist, whereas the last three joints (\(J4\!-\!J6\)) determine the orientation of the stylus, like a spherical wrist. Therefore, thanks to its design, both the position and the orientation of the robot’s end-effector can be defined simultaneously. The Phantom haptic device also provides 3D force feedback, but it cannot control the torque applied to the handle. Thus, the first three joints are active (can be actuated), whilst the remaining three are passive (cannot be actuated). The stylus incorporates 2 buttons: the light grey one is used for safety as a deadman button, so the remote robotic manipulator cannot move unless it is pressed, whereas the dark grey button opens and closes the gripper, although it is not used in the experiments carried out in this work.

A joint space mapping is used to control Baxter’s right arm. The Phantom Omni is a 6-joint device, whereas each arm of the Baxter is a redundant 7-joint robotic manipulator, so the third joint of Baxter’s right arm (\(q_3^R\) in Fig. 4) was fixed in order to map the other six to the Phantom’s joints (see Fig. 8). Under such conditions, two equivalent 6R kinematic chains are obtained, with slight differences, such that their DH parameters are not proportional and, therefore, neither are their forward/inverse kinematic models. However, the discrepancies did not affect the performance of the teleoperation and were neglected. Indeed, preliminary tests showed that the teleoperation was quite intuitive because the configuration of both arms (haptic device and robotic manipulator) was the same after fixing the joint \(q_3^R\) of Baxter’s arm (i.e., both had the standard configuration: hip-shoulder-elbow and in-line wrist).

For the right arm, joint-space control was chosen rather than Cartesian-space control because it performed better. In particular, in order to associate the local and remote joint spaces, an affine transformation \(f: {\mathbb {R}}^6 \rightarrow {\mathbb {R}}^7\) is defined as follows:

where \({\mathbf {q}}^R_h\) is a vector in the local space (representing the joint configuration in \({\mathbb {R}}^6\) of the Phantom haptic device), \({\mathbf {q}}^R_d\) is a vector in the remote space (representing the desired robot’s joint positions in \({\mathbb {R}}^7\)), \({\mathbf {h}}\) is also a vector (representing a translation in the robot’s joint space, so it belongs to \({\mathbb {R}}^7\)), and G is a matrix of dimension \(7\times 6\). The workspace mapping in the proposed control scheme can be computed using the following matrix G and vector \({\mathbf {h}}\):

where rows are associated with joints in the remote space and columns with joints in the local space. Notice that all the elements in the third row are zero, as this row corresponds to joint \(q_3^R\) of Baxter’s right arm, which is kept fixed. For each joint-pair mapping \(\{i,j\}\), the corresponding ij-element of matrix G is either \(+1\) or \(-1\), depending on whether the rotation sign is the same in the local and remote joint spaces or opposite. So, matrix G determines which joint of the haptic device (local space) commands each joint of the robot’s right arm (remote space) and whether their rotations have the same or opposite sign. Vector \({\mathbf {h}}\), in turn, sets an offset angle (in radians) of the remote joints with respect to the local joints.
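The structure of this affine joint mapping can be illustrated with a short sketch. The joint pairs, signs and offsets below are hypothetical placeholders and not the values used in this work; only the shape of the problem is taken from the text, i.e., a \(7\times 6\) matrix G with entries \(\pm 1\), a zero third row, and an offset vector \({\mathbf {h}}\) in \({\mathbb {R}}^7\).

```python
def build_mapping(pairs, n_remote=7, n_local=6):
    """Build G from (remote_index, local_index, sign) triples (0-based)."""
    g = [[0] * n_local for _ in range(n_remote)]
    for remote, local, sign in pairs:
        g[remote][local] = sign
    return g

def map_joints(g, h, q_h):
    """Affine map q_d = G q_h + h from haptic joints to robot joints."""
    return [sum(g_ij * q for g_ij, q in zip(row, q_h)) + h_i
            for row, h_i in zip(g, h)]

# Hypothetical pairing: remote joint index 2 (q_3) has no local counterpart.
PAIRS = [(0, 0, +1), (1, 1, -1), (3, 2, +1), (4, 3, -1), (5, 4, +1), (6, 5, -1)]
G = build_mapping(PAIRS)
# Hypothetical offsets in radians; H[2] is the fixed value of the third joint.
H = [0.0, -0.5, 0.0, 1.0, 0.0, 0.5, 0.0]

q_h = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]   # haptic device joint angles
q_d = map_joints(G, H, q_h)            # desired robot joint angles
```

Because the third row of G is zero, the third desired joint angle always equals its offset, whatever the haptic device configuration is.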

Open-loop force control is implemented as haptic feedback: the motion of the user is measured as input and the force applied in the remote space is fed back to the user. As can be observed in Fig. 2, the user commands the Phantom Omni haptic device, giving inputs in joint space that are mapped to the robot’s joint references. Then, PID controllers for each joint ensure that the angular position error of the robotic joints is minimised by computing a control action that represents the commanded angular velocity \({\dot{\mathbf {q}}}^R_c\). As already stated in Sect. 3.1, the low-level internal dynamic control of the robotic manipulator, which is responsible for computing and applying the corresponding torques \({\varvec{\tau }}\), is assumed to guarantee that the reference trajectory is followed properly.

Finally, the force \({\mathbf {F}}_s^R\) applied to the whiteboard is sensed using the F/T sensor placed in the wrist of Baxter’s right arm, as shown in Figs. 2 and 5a. The measured force is filtered using a low-pass discrete filter to reduce noise and it can also be scaled to adapt its sensitivity when necessary. The mapping between both workspaces, local and remote, makes the user feel the force \({\mathbf {F}}_h^R\) as if the haptic device was the robotic arm itself. That is because the force is measured by the F/T sensor as a 3D vector (direction and magnitude) with respect to the right arm’s end-effector. Then, such force is applied onto the local operator’s workspace using the mapping between both spaces. Hence, the user can feel in the haptic device a force proportional and in the same direction as the one measured in the robot right arm. In this manner, haptic feedback could allow the user to intuitively react and adapt their teleoperation commands.
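The filtering and scaling of the measured force can be sketched as below. The text only states that a discrete low-pass filter and an optional scaling are used, so the first-order exponential filter, the coefficient `alpha`, the gain `scale` and the saturation `max_force` are all assumptions made for illustration.

```python
import math

class ForceFeedback:
    """Low-pass filter, scale and saturate a measured 3D force."""

    def __init__(self, alpha=0.2, scale=0.1, max_force=3.0):
        self.alpha = alpha          # assumed smoothing factor in (0, 1]
        self.scale = scale          # assumed sensitivity gain
        self.max_force = max_force  # assumed device force limit in N
        self.filtered = None

    def update(self, measured):
        """Return the force to render on the haptic device for one sample."""
        if self.filtered is None:
            self.filtered = list(measured)  # initialise on the first sample
        else:
            self.filtered = [self.alpha * m + (1.0 - self.alpha) * f
                             for m, f in zip(measured, self.filtered)]
        command = [self.scale * f for f in self.filtered]
        norm = math.sqrt(sum(c * c for c in command))
        if norm > self.max_force:
            # Keep the direction of the force and limit only its magnitude.
            command = [c * self.max_force / norm for c in command]
        return command

feedback = ForceFeedback()
first = feedback.update([0.0, 0.0, -10.0])
second = feedback.update([0.0, 0.0, -20.0])
```

The saturation preserves the direction of the measured force, so the user still feels a force along the same direction as the one sensed at the robot’s wrist.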

5.3 Visual

A screen with a Graphical User Interface (GUI) is used to show data from the remote workspace in order to assist the operator in the teleoperation task. The GUI is split in 5 sections, as shown in Fig. 9. The structure of the visual feedback is similar to the one used in surgery teleoperation [10, 11], but apart from the real-time video streaming of the robot’s environment, some extra information has been added to improve the visual assistance.

Two video feeds are shown in the top left area of the GUI: one from a front camera placed on the robot’s chest (red box) and one from Baxter’s right hand camera, which is aligned longitudinally with the eraser tool (yellow box). The first camera allows the user to visualise the robot’s workspace, whilst the second one helps the user to see where the eraser is pointing and to check whether the whiteboard is completely wiped or not (see details in Fig. 2).

A third image is shown in the bottom left corner of the GUI (green box). It consists of a top-view perspective of the robot’s 3D virtual model, which is intended as an aid to see both arms simultaneously in a first-person view and also for depth perception in the remote workspace. This display can be considered the main view, since it allows a more immersive experience, as in telepresence applications [9, 10], so that the users feel as if they were the robot itself. For this reason, the virtual model is displayed at a larger size, as the preliminary tests showed this to be the best configuration for achieving accurate teleoperated movements.

Finally, in the bottom right corner there is a graph showing the longitudinal force applied by the eraser (black box). Notice that, alongside the force measurement (red line), two horizontal lines are also plotted in this graph to represent the bounds of the applied force (negative values imply compression, whilst positive values mean stretching). These bounds are used as visual references to help apply the correct force. The lower bound in magnitude (cyan line) is the force at which the eraser starts to wipe the whiteboard (approximately 5 N), whilst the upper bound (blue line) is the maximum safe force (approximately 20 N as explained in Sect. 4.2, but a more conservative 15 N bound was set to reduce the risk), since higher values (in magnitude) might detach the whiteboard from the magnets and make it fall. A tool for online parameter reconfiguration also appears in the top right region (blue box), although it has not been used in the experiments.

Note that the image of the virtual model was placed at the bottom center-left because it is essential for the accuracy of the movements, as mentioned above. Then, the two cameras were placed on top and the graph on the right in order to avoid distracting too much when the operators were performing the remote control, but close enough to give assistance when necessary.

Some subjects using different input methods to control remotely the Baxter’s arms while performing the whiteboard wiping task (Video 3 https://media.upv.es/player/?id=199d1730-33c9-11ea-8310-f5d74c1b22b6 shows some users performing the teleoperation with each input method)

6 Dual-arm teleoperation benchmarking

6.1 Methodology

In order to assess the proposed teleoperation architecture and to establish which is the best combination of control inputs, some specific tests have been designed for the benchmarking. Due to its complexity, the overall task of wiping a whiteboard with an eraser is split in two simpler sub-tasks in order to evaluate the performance of the proposed method.

6.1.1 Preliminary tests

In this work, the authors wanted to carry out the study with inexperienced users. Preliminary tests showed that performing the full dual-arm task was hard for them, so the authors decided to split the complete task into two independent sub-tasks. This also makes sense because it is the best way to isolate both modalities (haptics and kinaesthetics with mocap), so that the overall performance is not blurred by unknown effects. When both tasks are performed together, a poor performance in the left-arm task may be caused by a poor performance of the right hand, and vice versa. Splitting the task therefore made the assessment much easier.

In the preliminary tests, it was also found (based on users’ feedback) that motion capture was more intuitive to teleoperate the left arm as holding the whiteboard was a much simpler task, so the authors of this work discarded a comparison between mocap and haptics for such arm. Thus, the comparison in this work is mainly focused on the right arm considering haptic and mocap input modalities. It is also worth noting that the users did not complain in the preliminary tests about having different methods for controlling both arms of the robot.

6.1.2 Task 1: left arm teleoperation

The first task (T1) consists of moving the whiteboard on the remote workspace, from its initial position to a reference pose (position and orientation) trying to minimise the error with respect to the reference. For this task, the users have to control the Baxter’s left arm with the inertial motion capture system, as depicted in Fig. 10a. In order to help during the teleoperation, a virtual whiteboard placed in the target pose is introduced in the GUI as a visual feedback (green box in Fig. 9).

6.1.3 Task 2: right arm teleoperation

For the second task (T2), the goal is to remotely manipulate the eraser attached to the end-effector of the Baxter’s right arm in order to completely wipe the whiteboard in the shortest possible time. The whiteboard is already placed in the optimal configuration for cleaning (the reference pose used in task T1). To perform the task properly, the users have to erase the surface without making the whiteboard fall over. So, they need to keep the applied force within the bounds, i.e., push hard enough to wipe the surface (absolute force above 5 N to start cleaning), but without pressing too much for safety (absolute force below 15 N to avoid pulling the whiteboard apart from the magnets). The operators were asked to use two different control modes: on the one hand, as shown in Fig. 10b, the inertial motion capture system also used in task T1; on the other hand, the Phantom haptic device, as can be observed in Fig. 10c. As a visual aid, the GUI includes a graph showing the axial force applied and the upper/lower bounds (black box in Fig. 9).

6.2 Setup

Tests were conducted on 22 subjects, 19 males and 3 females (Footnote 2). The age range was between 22 and 34 years, with an average age and standard deviation of \(28.5\pm 3.2\) years. Most users were undergraduate and PhD students, but their backgrounds were diverse and the majority had no previous experience in robotics, haptics, teleoperation, or virtual reality. 19 users were right-handed, whilst 3 were ambidextrous. No left-handed people were considered because the eraser and whiteboard configurations were optimised for right-handed users, although the results can be easily generalised to people with any kind of handedness due to symmetry.

In a preliminary stage, the instructor supervising the experiments explained the goal of this study to each user, introduced them to the dual-arm robot, and detailed how to use both the motion capture system and the haptic device. They were allowed to practise with both teleoperation systems for as long as necessary, until they knew how to use both systems properly and were comfortable enough to carry out the tests.

The users were instructed to do each task individually (i.e., one at a time), as shown in Fig. 10. That way, both sub-tasks (left and right) and input methods (haptics and mocap) were isolated, so that the overall task performance was not affected by cross-effects and the assessment was not blurred by unknown artifacts. The tests were carried out in counterbalanced order to avoid any learning bias. The maximum time to carry out any trial was set to 150 seconds, as it was long enough to finish the tasks successfully under regular conditions.

6.3 Metrics

The following metrics are used to quantitatively assess the performance of the tests carried out in the benchmarking (Footnote 3):

- Duration [s]: elapsed time in seconds to perform the task, computed as the difference between initial time \(t_i\) and final time \(t_f\). In both tasks the test started when the user pressed the deadman button to begin the teleoperation and finished when the whiteboard was placed in the reference configuration (in task T1), when the whiteboard was wiped completely (in task T2), or when the maximum time \(t_{max}\!=\!150\) seconds was reached (in both tasks).

$$\begin{aligned} \varDelta t = \min (t_f-t_i,t_{max}) \end{aligned}$$(5)

- Position error [m]: distance in meters between the target position \(p_t\!=\![x_t\ y_t\ z_t]^T\) and the whiteboard position \(p_w(t)\!=\![x_w(t)\ y_w(t)\ z_w(t)]^T\) at the final time instant \(t\!=\!t_f\) (only in T1).

$$\begin{aligned} e_p(t) = \sqrt{(x_t-x_w(t))^2+(y_t-y_w(t))^2+(z_t-z_w(t))^2} \end{aligned}$$(6)

where subscript t refers to the target and subscript w is related to the whiteboard. Notice that the metric used for the benchmark is the final position error, which is computed at the final time instant as \(e_p(t_f)\).

- Orientation error [rad]: distance in radians between the target orientation \(o_t\!=\![\phi _t\ \theta _t\ \psi _t]^T\) and the whiteboard orientation \(o_w(t)\!=\![\phi _w(t)\ \theta _w(t)\ \psi _w(t)]^T\) (only in T1).

$$\begin{aligned} e_o(t) = \sqrt{(\phi _t-\phi _w(t))^2+(\theta _t-\theta _w(t))^2+(\psi _t-\psi _w(t))^2} \end{aligned}$$(7)

where \(\phi \), \(\theta \), and \(\psi \) represent the orientation defined by Euler angles roll, pitch and yaw, respectively. Note that these angles represent a global orientation obtained by three consecutive local rotations: \(\psi \) around z axis, \(\theta \) around y axis and \(\phi \) around x axis. It is also necessary to remark that the metric used for the benchmark is the final orientation error, computed at the final time instant as \(e_o(t_f)\).

- Max linear velocity [m/s]: maximum value of the 2-norm of the tool’s linear velocity vector (only in T2).

$$\begin{aligned} \begin{aligned} v_{max}&= \max {|{\mathbf {v}}(t)|} \\&= \max {\sqrt{v_x^2(t)+v_y^2(t)+v_z^2(t)}}, \forall t\in [t_i,t_f] \end{aligned} \end{aligned}$$(8)

- Max linear acceleration [\(\text {m/s}^2\)]: maximum value of the 2-norm of the tool’s linear acceleration vector (only in T2).

$$\begin{aligned} \begin{aligned} a_{max}&= \max {|{\mathbf {a}}(t)|} \\&= \max {\sqrt{a_x^2(t)+a_y^2(t)+a_z^2(t)}}, \forall t\in [t_i,t_f] \end{aligned} \end{aligned}$$(9)

- Mean linear velocity [m/s]: average value of the eraser velocity (only in T2).

$$\begin{aligned} v_{mean} = \frac{\sum \limits _{t=t_i}^{t_f}{|{\mathbf {v}}(t)|}}{N} = \frac{\sum \limits _{t=t_i}^{t_f}{\sqrt{v_x(t)^2+v_y(t)^2+v_z(t)^2}}}{N} \end{aligned}$$(10)

being \(|{\mathbf {v}}(t)|\) the magnitude or 2-norm of the velocity vector \({\mathbf {v}}(t)\) and N the number of samples recorded in \([t_i,t_f]\).

- Mean linear acceleration [\(\text {m/s}^2\)]: average value of the eraser acceleration (only in T2).

$$\begin{aligned} a_{mean} = \frac{\sum \limits _{t=t_i}^{t_f}{|{\mathbf {a}}(t)|}}{N} = \frac{\sum \limits _{t=t_i}^{t_f}{\sqrt{a_x(t)^2+a_y(t)^2+a_z(t)^2}}}{N} \end{aligned}$$(11)

being \(|{\mathbf {a}}(t)|\) the magnitude or 2-norm of the acceleration vector \({\mathbf {a}}(t)\).

- Wiped surface [%]: percentage of wiped surface (only in T2). This metric was estimated by the person conducting the task assessment. Depending on the area wiped at the end of the task, it was graded in the range \(S\in [0,100]\)% with 5% increments. Although it was not possible to draw exactly the same pattern (see Fig. 5b) for all the users and trials, the drawing covered approximately the same area, so that differences were negligible and this metric could be computed quantitatively and in a fair way.

- Time of bounded alignment [%]: percentage of time in which the surfaces of the eraser and the whiteboard are parallel, which means that their corresponding normal vectors are aligned within certain bounds (only in T2). First, the dot product of the binormal vectors (z-axis in local frames), defined by the orientation of the whiteboard and the eraser, is computed for each experiment

$$\begin{aligned} A(t) = {\mathbf {B}}_{Wb}(t)\cdot {\mathbf {B}}_{Er}(t) \end{aligned}$$(12)

where \({\mathbf {B}}\) corresponds to the third column of the rotation matrix \({\mathbf {R}}\!=\!\left[ {\mathbf {T}}\ {\mathbf {N}}\ {\mathbf {B}}\right] \) defining the orientation of the whiteboard (subscript Wb) and eraser (subscript Er). If they are completely parallel, with the eraser pointing towards the whiteboard, their dot product is \(A\!=\!-1\), as their binormal vectors are defined so that they have opposite directions. On the contrary, if both devices are orthogonal, i.e., they are perpendicular to each other, their dot product is \(A\!=\!0\). Finally, considering that \(t_A\) is the overall time in which both surfaces are approximately parallel, which is considered to be the case when \(A(t)<-0.95\), the percentage of time of bounded alignment can be obtained as follows

$$\begin{aligned} t_A^\% = \frac{t_A}{\varDelta t}100 \end{aligned}$$(13)

- Time of bounded force [%]: percentage of time in which the force applied by the eraser to the whiteboard is properly bounded, which happens when \(F(t)\in [-15,0]\) N (only in T2). So, considering that \(t_B\) is the overall time in which force is within bounds, the percentage of time with bounded force can be obtained as follows

$$\begin{aligned} t_B^\% = \frac{t_B}{\varDelta t}100 \end{aligned}$$(14)

- Max force [N]: maximum force applied by the eraser to the whiteboard during the test (only in T2).

$$\begin{aligned} F_{max} = \max {|{\mathbf {F}}(t)|}, \forall t\in [t_i,t_f] \end{aligned}$$(15)

being \(|{\mathbf {F}}(t)|\) the magnitude of the force measured by the F/T sensor after gravity compensation (whose computation is omitted for brevity).

- Max torque [Nm]: maximum torque applied by the eraser to the whiteboard during the test (only in T2).

$$\begin{aligned} \tau _{max} = \max {|{\varvec{\tau }}(t)|}, \forall t\in [t_i,t_f] \end{aligned}$$(16)

being \(|{\varvec{\tau }}(t)|\) the magnitude of the torque measured by the F/T sensor.
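Several of the metrics above can be computed from logged samples with a few lines of code. The sketch below is only illustrative: the function names are hypothetical, and it assumes uniformly sampled signals, so that a percentage of time equals a percentage of samples.

```python
import math

def duration(t_i, t_f, t_max=150.0):
    """Eq. (5): elapsed time capped at the maximum trial time."""
    return min(t_f - t_i, t_max)

def euclidean_error(target, actual):
    """Eqs. (6)-(7): 2-norm of the difference between two 3-vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(target, actual)))

def norm_stats(vectors):
    """Eqs. (8)-(11), (15), (16): max and mean of the 2-norm over all samples."""
    norms = [math.sqrt(sum(c * c for c in v)) for v in vectors]
    return max(norms), sum(norms) / len(norms)

def percentage(flags):
    """Share of samples (in %) for which a condition holds."""
    return 100.0 * sum(flags) / len(flags)

def bounded_alignment(b_wb, b_er, threshold=-0.95):
    """Eqs. (12)-(13): % of samples with A(t) = B_Wb . B_Er below threshold."""
    dots = [sum(w * e for w, e in zip(bw, be)) for bw, be in zip(b_wb, b_er)]
    return percentage([a < threshold for a in dots])

def bounded_force(forces, lower=-15.0, upper=0.0):
    """Eq. (14): % of samples with the axial force inside [lower, upper] N."""
    return percentage([lower <= f <= upper for f in forces])

# Made-up samples, used only to exercise the functions.
v_max, v_mean = norm_stats([(3.0, 4.0, 0.0), (0.0, 0.0, 0.0)])
t_b = bounded_force([-5.0, -20.0, -10.0, 2.0])
```

If the sampling period is not constant, the sample counts would have to be replaced by sums of the corresponding time intervals.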

Performance metrics of left arm teleoperation: box-plots on top with the median (red line), average (green dot) and outliers (red cross), and histograms with their corresponding probability distributions at the bottom. An overall good performance is shown, although 5 individuals took longer than expected

6.4 Questionnaire

Two questionnaires were given to the participants, before and after the experiments performed in this study. The questions in the initial questionnaire collected personal information to characterize the type of user and were related to their previous experience in various areas related to the experiment: teleoperation, robotics, haptics, and so on. As for the questionnaire after taking the tests, the questions sought to obtain a subjective answer to assess which method was most appropriate for the task to be solved. The questions were related to the following items: usability, accuracy and stability.

7 Results and discussion

This section analyses and discusses in detail the main results from our experiments.

7.1 Task 1: left arm teleoperation

For the left arm teleoperation only the motion capture system is used, since after some preliminary tests the authors found that it was clearly the best option for this sub-task. After the first test, the results show that users were able to finish the task in \(\varDelta t=38.91\pm 16.88\) s (average value ± std value), with a position accuracy of \(e_p=0.0757\pm 0.0537\) m and an orientation accuracy of \(e_o=0.3354\pm 0.2106\) rad.

Figure 11 shows the histogram and the distribution of the three performance metrics. As can be observed, for the position and orientation errors, the distributions are approximately Gaussian with small positive skewness (right-skewed distributions), which is expected as the errors are absolute values near zero. However, the distribution of the duration is not only highly skewed but also multimodal. After analysing the data, it was found that this effect is mainly produced by 4 users who moved very slowly during the whole teleoperation process and one operator who had some difficulty with the fine adjustment of the whiteboard orientation near the target. Nevertheless, the results show that all human operators were able to carry out the task with good accuracy.

7.2 Task 2: right arm teleoperation

The second test explored whether haptic teleoperation with force-feedback assistance could improve performance on the wiping task. As shown in Fig. 12a, the average time spent on this task went from \(\varDelta t\!=\!128.25\pm 27.76\) s with motion capture input to \(\varDelta t\!=\!89.45\pm 23.77\) s when using the haptic device. A one-way ANOVA indicates that the difference between both datasets is statistically significant, with \(p\!=\!2.91\cdot 10^{-5}\). This can also be observed in Fig. 12a, where the \(95\%\) confidence intervals of both groups do not overlap.
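For reference, the F statistic of a one-way ANOVA can be computed as in the following sketch. The durations in the example are made-up values and not the data of this study, and the function name is hypothetical; the code only illustrates the ratio between the between-group and within-group mean squares.

```python
def one_way_anova(*groups):
    """Return the F statistic and its degrees of freedom for k groups."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variability explained by the group means.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Residual variability inside each group.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical task durations in seconds for the two input methods.
mocap = [131.0, 150.0, 98.0, 142.0, 120.0]
haptic = [92.0, 75.0, 110.0, 88.0, 81.0]
f_stat, df_b, df_w = one_way_anova(mocap, haptic)
```

The p-value follows from the upper tail of the F distribution with the returned degrees of freedom; statistical packages provide it directly, for example `scipy.stats.f_oneway` in SciPy.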

Box-plots with the median (red line), average (green dot) and outliers (red cross), related to some performance metrics of right arm teleoperation using motion capture (M) and haptics (H): a duration, b maximum velocity, c mean velocity, d maximum acceleration, e mean acceleration, f wiped surface, g time of bounded alignment, h time of bounded force, i maximum force, and j maximum torque. All results show significant difference, meaning that the haptic teleoperation is better than the one using motion capture

Regarding the overall area cleaned when performing task T2, the haptic feedback improves the performance with respect to the mocap-based teleoperation, as shown in Fig. 12f. In fact, the percentage of wiped surface rose from \(S\!=\!51.75\pm 43.96\%\) with mocap to \(S\!=\!93.75\pm 5.82\%\) when using haptics with force feedback. This difference is statistically significant (\(p\!=\!1.39\cdot 10^{-4}\) in the one-way ANOVA carried out) and can therefore be attributed to the haptic teleoperation system. Furthermore, it is worth remarking that, among all 20 cases analysed, 8 people were unable to wipe the surface with motion capture teleoperation, as the whiteboard was pulled off the magnets after too much pressure was applied with the eraser. However, all the users could finish the second task with haptic assistance; that is, no user made the whiteboard fall to the ground.

Figure 12h shows that the applied force is kept within bounds most of the time when the haptic device is used, while the percentage of time of bounded force is much lower with motion capture teleoperation. The difference is statistically significant, with \(p\!=\!8.22\cdot 10^{-5}\), going from an average time of bounded force of \(t_B^\%\!=\!90.33\pm 7.23\) % with mocap teleoperation to \(t_B^\%\!=\!98.22\pm 3.43\) % using haptics with force-feedback assistance.

Moreover, the alignment is also improved when using haptics compared to remote control through motion capture, as shown in Fig. 12g. With mocap teleoperation the percentage of time with bounded alignment was \(t_A^\%\!=\!51.69\pm 27.82\) %, whereas with haptics it increased to \(t_A^\%\!=\!91.78\pm 5.99\) %. The difference observed is statistically significant, since \(p\!=\!2.2\cdot 10^{-7}\).

Regarding the applied force and torque, as shown in Fig. 12i, haptic feedback considerably reduces the maximum force applied onto the whiteboard (statistical significance of \(p\!=\!3.13\cdot 10^{-5}\)). In particular, there is a reduction of approximately 13.5 N, as \(F_{max}\!=\!29.82\pm 9.35\) N with the mocap system and \(F_{max}\!=\!16.25\pm 8.82\) N with the haptic device. Figure 12j shows the distributions of the maximum torque applied to the whiteboard, using both control methods. It can be observed that the haptic teleoperation improves the performance with a statistical significance of \(p\!=\!2.92\cdot 10^{-5}\), as the average maximum value is reduced from \(\tau _{max}\!=\!1.27\pm 0.41\) Nm using contactless kinaesthetics to \(\tau _{max}\!=\!0.72\pm 0.31\) Nm using haptics.

Next, kinematic variables are evaluated regarding the right arm’s end-effector. The maximum linear velocity and acceleration of the tool during the task are reduced when using haptic teleoperation, as shown in Fig. 12b and d respectively. This result implies increased safety during task execution, avoiding sharp movements and shakiness, with smoother and more accurate manoeuvres. With motion capture guidance the maximum linear velocity was \(v_{max}\!=\!0.509\pm 0.229\) m/s, whilst with the haptic input it was \(v_{max}\!=\!0.387\pm 0.114\) m/s. This difference is statistically significant, since \(p\!=\!0.0402\). Regarding the maximum linear acceleration, it was reduced from \(a_{max}\!=\!18.266\pm 6.428 \text { m/s}^2\) with mocap teleoperation to \(a_{max}\!=\!13.865\pm 2.104 \text { m/s}^2\) using haptics (with statistical significance of \(p\!=\!0.006\)).

Focusing on the average linear velocity \(v_{mean}\), it is increased when using the haptic teleoperation method (statistical significance of \(p\!=\!7.25\cdot 10^{-4}\)), as depicted in Fig. 12c. This result was expected as the overall duration \(\varDelta t\) was reduced. However, using haptics is a win-win strategy, because there is no tradeoff to pay. Indeed, the increment of linear velocity produced by the haptic teleoperation is achieved by performing smoother movements, since the maximum values of linear velocity and acceleration are reduced. Finally, Fig. 12e shows a reduction in the mean value of linear acceleration when using haptic teleoperation. Such difference has a statistical significance of \(p\!=\!7.23\cdot 10^{-13}\).

To conclude the benchmarking evaluation of both control modes, Fig. 13 shows two examples of different users performing task T2 with both teleoperation methods, where the axial force \(F_z\) applied on the whiteboard and the alignment A over time are depicted. The difference between the haptic device with force-feedback assistance (red line) and the motion capture system (blue-dotted line) for the teleoperation is clearly observable. In fact, with the kinaesthetic remote control, the eraser has an oscillating behaviour, exceeding the force bound of \(-15\) N quite a few times, as observed in the top graphs of Fig. 13. This is because both users were unable to stabilise the eraser, which requires exerting a limited force while keeping contact with the whiteboard. However, this instability is minimised and the performance is improved with haptic assistance (red line), since the force is bounded most of the time and the overall duration of the task is reduced. Notice, however, that the force limits are only used to give feedback to the human operator. These limits can still be exceeded, as the assistance system is not designed to guarantee that constraints are not violated. Therefore, at all times the user retains full control of the task.

Furthermore, the alignment between the eraser’s axial vector and the whiteboard’s surface normal vector is also kept bounded. Indeed, as shown in the bottom graphs of Fig. 13, the dot product defining the alignment tends to \(A\rightarrow -1\), because both vectors are parallel with opposite directions (see whiteboard and eraser’s frames, \({\mathbf {T}}_{Wb}\) and \({\mathbf {T}}_{Er}\) respectively, in Fig. 4). The rate at which the alignment metric is reduced is similar in both control methods, but the alignment is better and kept within bounds most of the time when using haptics. That effect does not occur with the kinaesthetic teleoperation using the mocap system, as both tools are misaligned several times during the test.

The results obtained in this section confirm the hypothesis made in Sect. 4.1, showing that the proposed haptic teleoperation with force feedback helps to improve the performance of the wiping task in comparison to kinaesthetic teleoperation based on inertial motion capture systems. In particular, haptics reduces the task duration and increases the percentage of wiped surface, while keeping the applied force bounded and reducing the maximum values of force and torque. Besides, the alignment between eraser and whiteboard is also improved by haptics, although this is an indirect consequence, because there was no torque feedback and the torque was not controlled in the remote manipulator.

7.3 Questionnaire

The results of the final questionnaire are discussed next. After performing the left-arm task, most of the users declared that using motion capture was appropriate for moving and holding the whiteboard, since this teleoperation method was very intuitive, accurate and stable.

Regarding the right-arm task, i.e., erasing the whiteboard, the users rated the motion capture teleoperation slightly better than the haptic one in terms of usability, because using their own body was very intuitive. However, in their opinion, the accuracy and stability were better in the haptic teleoperation thanks to the force-feedback assistance. In fact, most of the users said that the haptic feedback was helpful to improve stability, whilst wiping the whiteboard with the mocap teleoperation was not that easy, since they did not feel the force applied onto the surface.

Finally, some users reported some fatigue when doing the remote control with the motion capture system, because they had to keep the arm in the air while doing the task. In contrast, teleoperation with haptic feedback was far more comfortable than using the motion capture device. However, a couple of users complained about the force feedback, as apparently they did not like feeling the force and found this assistance unnecessary and a bit annoying. Indeed, these users were the ones that performed the worst in the haptic teleoperation. We assume that this feeling and poor performance are mainly due to the fact that they did not adapt to the haptic device in such a short time. Using it for a longer time would probably make them more comfortable and improve their performance.

7.4 Improvement of GUI

It is worth noting that, at some instants of the experiments, the user did not have a perfect view of the drawing on the whiteboard. The view from the main camera (red box) allowed the user to see the area to be wiped quite well in real time (a 22” monitor was big enough to see it properly). The camera in region 2 of Fig. 9 (yellow box) was intended to help the user check the state of the wiping. However, since it provided a perspective view, the user sometimes (not always) had to lift the eraser off the whiteboard to see the remaining ink to be wiped.

As further work, we intend to add an extra camera so that the user can switch between both views and decide which one is better at any time depending on their needs. In order to cover the whole working area without occlusions or blind spots, the new camera could be placed overhead to provide a top view. An alternative location for the camera would be the robot’s right arm, but at the elbow instead of the wrist, to allow a better view of the whiteboard and display the ink to be wiped more clearly.

8 Conclusions

Based on the results obtained in the experimentation carried out in this work, it can be concluded that the proposed dual-arm robot teleoperation system using inertial motion capture with haptic feedback assistance can be useful for surface conditioning tasks. After analysing several metrics, it was found that the force-feedback assistance can reduce the time taken to complete the wiping task, increase the whiteboard’s wiped area, and facilitate pressure control, reducing the peaks of force and torque during the task. The proposed system also helped users to maximise the contact surface by keeping a better alignment between tools (whiteboard and eraser). Furthermore, all these benefits were obtained with smoother and more accurate manoeuvres. The results confirmed the initial assumption that the haptic assistance system with force and visual feedback would improve the overall performance by increasing the user’s immersion.

This work brings new insights to collaborative human–robot interaction. It has been demonstrated that the proposed architecture improves the overall performance of contact-driven tasks, but it could also be used in other scenarios, such as grasping or object manipulation. To our knowledge, this is the first study combining these three modalities (haptics, kinaesthetics and visual feedback) and the results are promising. Indeed, the proposed framework could be implemented as a multimodal human–computer interaction system to interact with virtual agents, not only robots. Currently, there are many systems using either haptics or mocap as inputs to interface with computers, but they are used independently rather than exploiting the potential they have when combined, as proposed in this work.

Further research can be done to improve the proposed control system in several ways. A first approach would be to develop a shared-control architecture, where human and robot work in cooperation. In this new human-in-the-loop architecture, the whiteboard and eraser would be moved around freely until the tool makes contact with some object. Then, a low-level controller would enter the loop to regulate the force applied by the manipulator, as was done in [67]. This would guarantee that certain constraints are not violated, such as the upper/lower bounds of the applied force or the alignment between both surfaces in contact.
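The switching logic of such a shared-control scheme can be sketched as follows. This is only a minimal illustration under stated assumptions, not the controller used in [67]: the class name `ForceBoundSupervisor`, the force bounds, the contact threshold and the proportional gain are all hypothetical values chosen for the example, and only the velocity along the surface normal is considered.

```python
from dataclasses import dataclass


@dataclass
class ForceBoundSupervisor:
    """Hypothetical supervisor for the velocity along the surface normal.

    All numeric defaults are illustrative assumptions, not values from the paper.
    """
    f_min: float = 2.0              # lower bound of the applied force [N]
    f_max: float = 10.0             # upper bound of the applied force [N]
    contact_threshold: float = 0.5  # force above which contact is assumed [N]
    gain: float = 0.002             # proportional gain [m/s per N]

    def step(self, v_operator: float, f_measured: float) -> float:
        """Return the normal velocity command [m/s]; positive pushes into the surface."""
        if f_measured < self.contact_threshold:
            # Free motion: the operator moves the tool without intervention.
            return v_operator
        if f_measured > self.f_max:
            # Force too high: override the operator and retreat from the surface.
            return -self.gain * (f_measured - self.f_max)
        if f_measured < self.f_min:
            # In contact but force too low: override and push into the surface.
            return self.gain * (self.f_min - f_measured)
        # Force within bounds: the operator command passes through unchanged.
        return v_operator


supervisor = ForceBoundSupervisor()
print(supervisor.step(v_operator=0.01, f_measured=0.0))   # free motion
print(supervisor.step(v_operator=0.01, f_measured=12.0))  # controller retreats
```

A purely proportional override is used here for brevity; an actual low-level force controller would typically add integral action and handle the alignment constraint as well.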

Finally, the authors also plan to enhance the immersive experience by adding audiovisual feedback using Mixed Reality. The remote environment will be reconstructed using depth cameras or any similar device, in order to collect 3D data with texture. Then, in the local workspace, the user will wear a Head-Up Display (either AR or VR) in which a virtual world including the model of the robot will be rendered together with some real objects. It is expected that the combination of haptic and visual feedback with depth information will improve the accuracy, reduce the overall duration and produce more natural manoeuvres with lower mental workload for the human operator. Regarding the audio feedback, we aim to encode the applied force (or any other source of information) into a sound, so that this signal sets the pitch (frequency) of a tone that helps the user perform the teleoperation. Something similar can also be done with the visual feedback. For instance, instead of using an independent graph to show the applied force, this signal could be shown in the virtual environment using a colour map proportional to the force. In this way, the operator would not be distracted from the primary task (surface conditioning) when trying to perform the secondary task (keeping the constraints within bounds).
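As an illustration of how the applied force could be encoded into a tone and a colour, the following minimal sketch maps a force reading to a frequency and to an RGB value. The function names, the force range (0 to 10 N), the frequency range (220 to 880 Hz) and the green-to-red colour ramp are assumptions made for this example only; they are not taken from the proposed system.

```python
def _normalise(force: float, f_low: float, f_high: float) -> float:
    """Map a force to [0, 1], saturating outside the assumed range."""
    ratio = (force - f_low) / (f_high - f_low)
    return min(max(ratio, 0.0), 1.0)


def force_to_frequency(force: float, f_low: float = 0.0, f_high: float = 10.0,
                       hz_low: float = 220.0, hz_high: float = 880.0) -> float:
    """Return a tone frequency [Hz] for a given force [N].

    Interpolation is logarithmic, so equal force increments produce
    equal musical intervals (the assumed range spans two octaves).
    """
    ratio = _normalise(force, f_low, f_high)
    return hz_low * (hz_high / hz_low) ** ratio


def force_to_rgb(force: float, f_low: float = 0.0,
                 f_high: float = 10.0) -> tuple[int, int, int]:
    """Return an (R, G, B) colour that goes from green (low force) to red (high force)."""
    ratio = _normalise(force, f_low, f_high)
    return (round(255 * ratio), round(255 * (1.0 - ratio)), 0)


for f in (0.0, 5.0, 10.0):
    print(f, force_to_frequency(f), force_to_rgb(f))
```

A logarithmic pitch scale is chosen because pitch perception is roughly logarithmic in frequency; a linear mapping would work equally well as a first prototype.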

Notes

Magnets were added for safety (to avoid breaking the tool in case of very high forces) and also to visualize whether the task was performed well or not (i.e., if the whiteboard drops, the task was not performed properly).

Only 20 subjects are considered in Task 2, since two were discarded because they were unable to perform the task with any of the teleoperation methods.

Metrics are calculated for each participant and generated from their individual tests, but in order to assess the proposed method the results analysed in Sect. 7 compare the metrics for all participants combined.

References

Hägele M, Nilsson K, Pires JN, Bischoff R (2016) Industrial robotics. Springer, Cham, pp 1385–1422. https://doi.org/10.1007/978-3-319-32552-1_54

Hokayem PF, Spong MW (2006) Bilateral teleoperation: an historical survey. Automatica 42(12):2035–2057. https://doi.org/10.1016/j.automatica.2006.06.027

Son HI (2019) The contribution of force feedback to human performance in the teleoperation of multiple unmanned aerial vehicles. J Multimodal User Interfaces 13(4):335–342. https://doi.org/10.1007/s12193-019-00292-0

Jones B, Maiero J, Mogharrab A, Aguliar IA, Adhikari A, Riecke BE, Kruijff E, Neustaedter C, Lindeman RW (2020) Feetback: augmenting robotic telepresence with haptic feedback on the feet. In: Proceedings of the 2020 international conference on multimodal interaction, pp 194–203

Merrad W, Héloir A, Kolski C, Krüger A (2021) RFID-based tangible and touch tabletop for dual reality in crisis management context. J Multimodal User Interfaces. https://doi.org/10.1007/s12193-021-00370-2

Schettino V, Demiris Y (2019) Inference of user-intention in remote robot wheelchair assistance using multimodal interfaces. In: 2019 IEEE/RSJ international conference on intelligent robots and systems (IROS). IEEE, pp 4600–4606

Casper J, Murphy RR (2003) Human–robot interactions during the robot-assisted urban search and rescue response at the world trade center. IEEE Trans Syst Man Cybern Part B (Cybern) 33(3):367–385. https://doi.org/10.1109/TSMCB.2003.811794

Chen JY (2010) UAV-guided navigation for ground robot tele-operation in a military reconnaissance environment. Ergonomics 53(8):940–950. https://doi.org/10.1080/00140139.2010.500404 (PMID: 20658388)

Aleotti J, Micconi G, Caselli S, Benassi G, Zambelli N, Bettelli M, Calestani D, Zappettini A (2019) Haptic teleoperation of UAV equipped with gamma-ray spectrometer for detection and identification of radio-active materials in industrial plants. In: Tolio T, Copani G, Terkaj W (eds) Factories of the future: the Italian flagship initiative. Springer, Cham, pp 197–214. https://doi.org/10.1007/978-3-319-94358-9_9

Santos Carreras L (2012) Increasing haptic fidelity and ergonomics in teleoperated surgery. PhD Thesis, EPFL, Lausanne, pp 1–188. https://doi.org/10.5075/epfl-thesis-5412

Hatzfeld C, Neupert C, Matich S, Braun M, Bilz J, Johannink J, Miller J, Pott PP, Schlaak HF, Kupnik M, Werthschützky R, Kirschniak A (2017) A teleoperated platform for transanal single-port surgery: ergonomics and workspace aspects. In: IEEE world haptics conference (WHC), pp 1–6. https://doi.org/10.1109/WHC.2017.7989847

Burns JO, Mellinkoff B, Spydell M, Fong T, Kring DA, Pratt WD, Cichan T, Edwards CM (2019) Science on the lunar surface facilitated by low latency telerobotics from a lunar orbital platform-gateway. Acta Astronaut 154:195–203. https://doi.org/10.1016/j.actaastro.2018.04.031

Sivčev S, Coleman J, Omerdić E, Dooly G, Toal D (2018) Underwater manipulators: a review. Ocean Eng 163:431–450. https://doi.org/10.1016/j.oceaneng.2018.06.018

Abich J, Barber DJ (2017) The impact of human–robot multimodal communication on mental workload, usability preference, and expectations of robot behavior. J Multimodal User Interfaces 11(2):211–225. https://doi.org/10.1007/s12193-016-0237-4

Hong A, Lee DG, Bülthoff HH, Son HI (2017) Multimodal feedback for teleoperation of multiple mobile robots in an outdoor environment. J Multimodal User Interfaces 11(1):67–80. https://doi.org/10.1007/s12193-016-0230-y

Katyal KD, Brown CY, Hechtman SA, Para MP, McGee TG, Wolfe KC, Murphy RJ, Kutzer MDM, Tunstel EW, McLoughlin MP, Johannes MS (2014) Approaches to robotic teleoperation in a disaster scenario: from supervised autonomy to direct control. In: IEEE/RSJ international conference on intelligent robots and systems, pp 1874–1881. https://doi.org/10.1109/IROS.2014.6942809

Niemeyer G, Preusche C, Stramigioli S, Lee D (2016) Telerobotics. Springer, Cham, pp 1085–1108. https://doi.org/10.1007/978-3-319-32552-1_43

Li J, Li Z, Hauser K (2017) A study of bidirectionally telepresent tele-action during robot-mediated handover. In: Proceedings—IEEE international conference on robotics and automation, pp 2890–2896. https://doi.org/10.1109/ICRA.2017.7989335

Peng XB, Kanazawa A, Malik J, Abbeel P, Levine S (2018) SFV: reinforcement learning of physical skills from videos. ACM Trans Graph 37(6):178:1–178:14. https://doi.org/10.1145/3272127.3275014

Coleca F, State A, Klement S, Barth E, Martinetz T (2015) Self-organizing maps for hand and full body tracking. Neurocomputing 147:174–184 (special issue: Advances in self-organizing maps, selected papers from the workshop on self-organizing maps 2012, WSOM 2012). https://doi.org/10.1016/j.neucom.2013.10.041

Von Marcard T, Rosenhahn B, Black MJ, Pons-Moll G (2017) Sparse inertial poser: automatic 3D human pose estimation from sparse IMUs. In: Computer graphics forum, vol 36. Wiley, pp 349–360

Zhao J (2018) A review of wearable IMU (inertial-measurement-unit)-based pose estimation and drift reduction technologies. J Phys Conf Ser 1087:042003. https://doi.org/10.1088/1742-6596/1087/4/042003

Malleson C, Gilbert A, Trumble M, Collomosse J, Hilton A, Volino M (2018) Real-time full-body motion capture from video and IMUs. In: Proceedings—2017 international conference on 3D vision, 3DV 2017 (September), pp 449–457. https://doi.org/10.1109/3DV.2017.00058

Du G, Zhang P, Mai J, Li Z (2012) Markerless Kinect-based hand tracking for robot teleoperation. Int J Adv Robot Syst 9(2):36. https://doi.org/10.5772/50093

Çoban M, Gelen G (2018) Wireless teleoperation of an industrial robot by using myo arm band. In: International conference on artificial intelligence and data processing (IDAP), pp 1–6. https://doi.org/10.1109/IDAP.2018.8620789

Lipton JI, Fay AJ, Rus D (2018) Baxter’s homunculus: virtual reality spaces for teleoperation in manufacturing. IEEE Robot Autom Lett 3(1):179–186. https://doi.org/10.1109/LRA.2017.2737046

Zhang T, McCarthy Z, Jow O, Lee D, Chen X, Goldberg K, Abbeel P (2018) Deep imitation learning for complex manipulation tasks from virtual reality teleoperation. In: IEEE international conference on robotics and automation (ICRA), pp 5628–5635. https://doi.org/10.1109/ICRA.2018.8461249

Hannaford B, Okamura AM (2016) Haptics. Springer, Cham, pp 1063–1084. https://doi.org/10.1007/978-3-319-32552-1_42

Rodríguez J-L, Velàzquez R (2012) Haptic rendering of virtual shapes with the Novint Falcon. Proc Technol 3:132–138. https://doi.org/10.1016/J.PROTCY.2012.03.014

Teklemariam HG, Das AK (2017) A case study of phantom omni force feedback device for virtual product design. Int J Interact Des Manuf (IJIDeM) 11(4):881–892. https://doi.org/10.1007/s12008-015-0274-3

Karbasizadeh N, Zarei M, Aflakian A, Masouleh MT, Kalhor A (2018) Experimental dynamic identification and model feed-forward control of Novint Falcon haptic device. Mechatronics 51:19–30. https://doi.org/10.1016/j.mechatronics.2018.02.013

Georgiou T, Demiris Y (2017) Adaptive user modelling in car racing games using behavioural and physiological data. User Model User-Adapted Interact 27(2):267–311. https://doi.org/10.1007/s11257-017-9192-3

Son HI (2019) The contribution of force feedback to human performance in the teleoperation of multiple unmanned aerial vehicles. J Multimodal User Interfaces 13(4):335–342. https://doi.org/10.1007/s12193-019-00292-0

Ramírez-Fernández C, Morán AL, García-Canseco E (2015) Haptic feedback in motor hand virtual therapy increases precision and generates less mental workload. In: 2015 9th international conference on pervasive computing technologies for healthcare (PervasiveHealth), pp 280–286. https://doi.org/10.4108/icst.pervasivehealth.2015.260242

Saito Y, Raksincharoensak P (2019) Effect of risk-predictive haptic guidance in one-pedal driving mode. Cognit Technol Work 21(4):671–684. https://doi.org/10.1007/s10111-019-00558-3

Girbés V, Armesto L, Dols J, Tornero J (2016) Haptic feedback to assist bus drivers for pedestrian safety at low speed. IEEE Trans Haptics 9(3):345–357. https://doi.org/10.1109/TOH.2016.2531686

Girbés V, Armesto L, Dols J, Tornero J (2017) An active safety system for low-speed bus braking assistance. IEEE Trans Intell Transp Syst 18(2):377–387. https://doi.org/10.1109/TITS.2016.2573921

Escobar-Castillejos D, Noguez J, Neri L, Magana A, Benes B (2016) A review of simulators with haptic devices for medical training. J Med Syst 40(4):104. https://doi.org/10.1007/s10916-016-0459-8

Coles TR, Meglan D, John NW (2011) The role of haptics in medical training simulators: a survey of the state of the art. IEEE Trans Haptics 4(1):51–66. https://doi.org/10.1109/TOH.2010.19