Abstract

In this paper, we study an important real-life scheduling problem that can be formulated as an unrelated parallel machine scheduling problem with sequence-dependent setup times, due dates, and machine eligibility constraints. The objective is to minimise total tardiness and makespan. We adapt and extend a mathematical model to find optimal solutions for small instances. Additionally, we propose several variants of simulated annealing to solve very large-scale instances as they appear in practice. We utilise several different search neighbourhoods and additionally investigate the use of innovative heuristic move selection strategies. Further, we provide a set of real-life problem instances as well as a random instance generator that we use to generate a large number of test instances. We perform a thorough evaluation of the proposed techniques and analyse their performance. We also apply our metaheuristics to approach a similar problem from the literature. Experimental results show that our methods are able to improve the results produced with state-of-the-art approaches for a large number of instances.

Similar content being viewed by others

1 Introduction

Finding optimised machine schedules in manufacturing is an important and challenging task, as a large number of jobs need to be processed every day. While a manual approach performed by human experts can be used to deal with a small number of jobs, the large-scale requirements of modern factories introduce the need for efficient automated scheduling techniques. In the literature, such scheduling problems that deal with the assignment of jobs to multiple machines which operate in parallel have previously been described as Parallel Machine Scheduling Problems (PMSPs, e.g. Allahverdi et al. 2008; Allahverdi 2015).

Several types of machines can perform different sets of operations in the industry, so that each job can be assigned to a specified set of eligible machines, and varying machine efficiency has to be considered. PMSPs that consider eligible machines are well-known in the literature and have been studied in several publications (e.g. Afzalirad and Rezaeian 2016; Perez-Gonzalez et al. 2019). Similarly, problems with varying machine efficiency have been previously described as Unrelated PMSP (UPMSP) (e.g. Vallada and Ruiz 2011; Avalos-Rosales et al. 2015; Allahverdi 2015). After a job has reached its completion on a machine, in practice it is often required to perform a change of the tooling or a machine maintenance before the next job can be processed. The corresponding changeover times between jobs have been referred to as sequence-dependent setup times in previous publications (e.g. Vallada and Ruiz 2011; Perez-Gonzalez et al. 2019).

In the problem, we investigate in this paper, which emerges from a company in the packaging industry, each job corresponds to a customer order with an associated due date. Therefore, the problem’s objective function aims to minimise the total tardiness of all jobs in addition to the total makespan.

In summary, the real-life problem we investigate in this paper can be characterised as UPMSP with sequence-dependent setup times and eligible machines that aims to minimise both tardiness and makespan. Although a large number of different variants of UPMSP that feature all of the mentioned attributes to some extent have been described in the literature, efficient metaheuristic and exact solution methods that consider both tardiness and makespan objectives at the same time remain to be investigated.

We adapt existing mathematical models for related problems and compare several variations for our problem. However, the evaluated exact approaches can solve in reasonable time only small instances. To solve large practical instances, we deeply investigate several variants of Simulated Annealing (SA, Kirkpatrick et al. 1983). The variants we investigate include different cooling schemes such as a dynamic cooling rate and a reheating mechanism. We investigate different search neighbourhoods based on shift and swap moves, which are commonly used in the context of PMSP. Additionally, we propose a novel neighbourhood operator for UPMSP that operates on blocks of consecutively scheduled jobs. To increase the effectiveness of the move generation procedure, we guide the search towards more promising regions of the search space by incorporating domain knowledge into the neighbourhood move selection strategy.

We provide real problem instances which are based on real-life scheduling scenarios that have been provided to us by an industrial partner. Furthermore, we propose a random instance generator to generate diverse datasets that together with the real-world instances form a large pool of instances which we use in our experimental evaluation. Experimental results show that the Simulated Annealing approach is able to obtain high-quality solutions for both randomly generated and real-life instances.

To show the robustness of our method we compare to the approach from Perez-Gonzalez et al. (2019) that was proposed recently for a similar problem. The problem specification provided by Perez-Gonzalez et al. (2019) uses the same set of constraints of the problem that we investigate in this paper and also aims to minimise total tardiness. However, the authors do not consider the minimisation of the total makespan. As the minimisation of total tardiness is incomparably more important than the makespan in our problem specification, we can easily use our solution methods to approach the problem instances provided by Perez-Gonzalez et al. (2019). Our comparison on a huge set of instances that have been provided by Perez-Gonzalez et al. (2019) shows that our approach produces improved results for the majority of the instances.

The structure of the paper is as follows: In Sect. 2, we describe our problem and give an overview of the existing related literature. We provide eight different mixed-integer programming (MIP) formulation variants for the problem in Sect. 3. Our metaheuristic algorithms are presented in Sect. 4. Our instance generator and our datasets are described in Sect. 5. The results of our computational experiments are presented in Sect. 6. Finally, conclusions and remarks on possible future research are given in Sect. 7.

2 Problem statement and literature review

The problem we investigate in this paper can be characterised as an Unrelated Parallel Machine Scheduling Problem with Sequence-Dependent Setup Times and Machine Eligibility Constraints with the objective of minimising both total tardiness as well as makespan. A Parallel Machine Scheduling Problem (PMSP) aims to assign jobs to a given number of machines to create an optimised production schedule, where every machine is continuously available and can process one job at a time. The execution of jobs cannot be interrupted and resumed later, and there are no precedence constraints between jobs. Furthermore, every machine is assumed to start immediately the execution of its assigned schedule without any break or interruption.

Instances of the PMSP are stated using a set of machines M and a set of jobs J. For every job \(j \in J\), we have a due date \(d_j\) denoting its latest acceptable completion time and a set of eligible machines \(M_j \subseteq M\) on which the job can be processed. The processing time \(p_{jk}\) of each job \(j \in J\) depends on the machine \(k \in M\) on which it is executed. Further, setup times \(s_{ijk}\) are defined for any pair of jobs i and j that are consecutively scheduled on a machine k. Additionally, an initial setup time \(s_{0jk}\) is required before the execution of job j can begin when it is scheduled as the first job on machine k. Analogously, a clearing time \(s_{j0k}\) is required after the execution of job j, if it is the last scheduled job on machine k.

A solution to a PMSP assigns a schedule for every machine k, which is represented by a permutation of a subset of all jobs J. If a job i occurs directly before another job j in the schedule for machine k, then i is called the predecessor of j (and j is the successor of i). Since the solution representation of the schedule is sequence-based, the completion time \(C_j\) of a job j is the sum of the predecessor’s completion time \(C_i\), the appropriate setup time \(s_{ijk}\), and the job’s processing time \(p_{jk}\). If a job is the first in its schedule, its completion time is defined by the initial setup time \(s_{0jk}\) plus its own processing time \(p_{jk}\). Furthermore, the tardiness of a job is defined to be the difference between its completion time and due date (\(T_j := \max (0, C_j - d_j)\)). The machine span \(O_k\) for a machine k is set to the completion time of the job which is scheduled last plus the final clearing time \(s_{j0k}\). Note that the final clearing times only affect the machine spans, but not the completion times of jobs. Finally, the makespan \(C_{\max }\) is defined to be equal to the maximum of all machine spans.

Graham et al. (1979) proposes a three-field \(\alpha | \beta | \gamma \) notation to categorise machine scheduling problems, where \(\alpha \), \(\beta \) and \(\gamma \) describe the machine environment, additional constraints and the solution objectives, respectively. The machine environment for PMSPs typically consists of identical machines (\(P_m\)) or unrelated machines (\(R_m\)). Given that we are dealing with an environment consisting of unrelated machines, the problem we investigate can be characterised as \(R_m | s_{ijk}, M_j | Lex(\varSigma _j (T_j), C_{\max })\). The objective function \(Lex(\varSigma _j (T_j), C_{\max })\) in this case means that both tardiness and makespan should be minimised, with the tardiness being incomparably more important than the makespan (i.e. the objectives are lexicographically ordered). This particular problem variant can be seen as a generalisation of the basic PMSP with identical machines (\(P_m | | C_{\max }\)), which has been shown to be NP-hard even with only two machines (see Garey and Johnson 1979). Furthermore, even the minimisation of only tardiness has been shown to be NP-hard by Du and Leung (1990).

PMSPs have been the subject of thorough research in the past, and two surveys by Allahverdi et al. (2008) and Allahverdi (2015) give an overview of the related literature. Sequence-dependent setup times on unrelated machines have been described for many problems that have been studied in the literature. A well-known problem in this area is for example the Unrelated Parallel Machine Scheduling Problem with Sequence-Dependent Setup Times, aiming to minimise the makespan (\(R_m | s_{ijk} | C_{\max }\)). Among the first to investigate this problem variant were Al-Salem (2004), who propose a Partitioning Heuristic as solution method and Rabadi et al. (2006) who tackle the problem using a novel technique called Meta-Heuristic for Randomised Priority Search. Arnaout et al. (2010) proposed an Ant Colony Optimisation algorithm, which they further improved later (Arnaout et al. 2014). Vallada and Ruiz (2011) propose Genetic Algorithms for the problem and create a set of benchmark instances for their experiments. Avalos-Rosales et al. (2015) propose two new mathematical formulations and a Variable Neighbourhood Descent metaheuristic. They show that their proposed MIP models outperform existing models on the set of benchmark instances provided by Vallada and Ruiz (2011). More recent contributions include Santos et al. (2019) who use Stochastic Local Searches on Vallada’s instances. They find that Simulated Annealing offers good performance over all evaluated instance sizes. Tran et al. (2016) apply both Logic-based Benders Decomposition and Branch and Check as exact methods for solving the problem. Gedik et al. (2018) propose a Constraint Programming formulation of the problem, leveraging the benefits of interval variables. Fanjul-Peyro et al. (2019) propose a new MIP model for the problem and an algorithm based on mathematical programming. They replace the sub-tour elimination constraints in the MIP model from Avalos-Rosales et al. (2015) by constraints adapted from previous traveling salesperson problem formulations. This results in a more efficient mathematical formulation for the problem which does not compute all of the jobs’ completion times. Other contributions for this problem variant include a Tabu Search approach (Helal et al. 2006) and a Simulated Annealing approach (Ying et al. 2012).

PMSPs that include machine eligibility constraints have been considered several times in the literature. Rambod and Rezaeian (2014) consider a PMSP with sequence-dependent setup times and machine eligibility constraints that focuses on minimising the makespan (\(R_m | s_{ijk}, M_j | C_{\max }\)). Additionally, they include the likeliness of manufacturing defects in their objective function. Afzalirad and Rezaeian (2017) minimise a bi-criterion objective consisting of mean weighted tardiness (MWT) and mean weighted flow time (MWFT) in a PMSP with sequence-dependent setup times and machine eligibility. They assume different release times of jobs (\(r_j\)) and precedence constraints (prec) among jobs

(\(R_m | s_{ijk}, M_j, r_j, prec | MWT, MWFT\)).

Afzalirad and Rezaeian (2016) try to minimise the makespan for a similar problem where the execution of jobs requires additional resources (res) with limited availability (\(R_m | s_{ijk}, M_j, r_j, prec, res | C_{\max }\)). Bektur and Saraç (2019) consider a problem variant similar to the one we investigate in this paper, where they minimise the total weighted tardiness. Additionally, they require the availability of a single server (\(S_1\)) to perform the setups between jobs (\(R_m | s_{ijk}, M_j, S_1 | \varSigma _j (w_j \cdot T_j)\)). Chen (2006) considers a problem with machine eligibility, where fixed setup times are only required if two consecutive jobs produce different product families. Their objective is to minimise the maximum tardiness of all jobs. Afzalirad and Shafipour (2018) try to minimise the makespan in a PMSP with machine eligibility and resource restrictions and assume that setup times are included in the processing times.

The problem statements most closely resembling the problem considered in this paper are studied by Caniyilmaz et al. (2015), Adan et al. (2018) and Perez-Gonzalez et al. (2019). All three papers use sequence-dependent setup times, due dates and machine eligibility constraints. However, each of these papers focuses on the minimisation of a slightly different objective function.

Caniyilmaz et al. (2015) try to minimise the sum of makespan and cumulative tardiness (\(C_{\max } + \varSigma _j T_j\)). They implement an Artificial Bee Colony algorithm and compare its performance against a Genetic Algorithm on a real-life instance originating from a quilting work centre. This most closely resembles our objective of minimising tardiness as primary target and makespan as secondary target. Adan et al. (2018) try to minimise a three-part objective function, consisting of a weighted sum of total tardiness, setup times and processing times (\(\alpha \cdot \varSigma _j T_j + \beta \cdot \varSigma _{jk} p_{jk} + \gamma \cdot \varSigma _{ijk} s_{ijk}\)), where \(\alpha \), \(\beta \) and \(\gamma \) are weights. This objective function coincides with our objective function when there is only a single machine available and the weights are chosen appropriately. They implement a Genetic Algorithm very similar to the one described by Vallada and Ruiz (2011) and apply it to three real-life datasets. Perez-Gonzalez et al. (2019) also consider a problem that is similar to ours, but they only take the tardiness of jobs into consideration and disregard the makespan. Furthermore, they propose a MIP model for their problem which is based on the mathematical formulation from Vallada and Ruiz (2011), along with five different constructive heuristics and an immune-based metaheuristic. They are the first to create a sizeable dataset that is available for other researchers.

In summary, we can see that a large variety of PMSPs have been studied in the past. However, to the best of our knowledge, efficient metaheuristic and exact solution methods for the particular problem variant that considers a lexicographically ordered minimisation of total tardiness and makespan under machine eligibility constraints and sequence-dependent setup times remains to be investigated.

3 Mixed-integer programming

In their work, Perez-Gonzalez et al. (2019) propose a mathematical formulation for a similar PMSP that is based on the model described by Vallada and Ruiz (2011). As their objective function does not consider the makespan, their model does not include corresponding constraints. We therefore extend their proposed model by constraint sets (5) and (8), to calculate the makespan (which includes the clearing times). The variables used in the formulation are described in Table 1. The set \(J_0\) includes a dummy job (0), that represents the start and end points of each machine schedule. The predecessor of the first job assigned to each machine is set to be the dummy job. Similarly, the successor of the last job assigned to each machine is set to be the dummy job. \(X_{i,j,m}\) are binary decision variables which are set to 1 if and only if job j is scheduled directly after job i on machine m (and 0 otherwise). \(C_{j,m}\) denotes the completion time of job j on machine m and variables \(T_{j}\) represent the tardiness of job j. \(C_{\max }\) is set to the total makespan which includes the clearing times.

The resulting model M1 can be stated as follows:

Constraint set (1) binds the tardiness of each job. Constraint set (2) ensures that every job has exactly one predecessor and is scheduled on one machine, while constraint set (3) restricts every job to have only one successor. They are not instantiated for the dummy job, because it is shared over all machines and thus can have multiple predecessors (successors) in the solution. Constraint set (4) restricts every machine to schedule at most one job at position one. Constraint set (5) forces each job (except for the dummy job) to have a single predecessor and successor on the machine where it is scheduled. Constraint set (6) checks that if a job j is scheduled after another job i on the same machine, then i has at least one predecessor as well. Constraint set (7) calculates the completion time \(C_{j,m}\) for every job j on machine m. Note that \(V \cdot (1 - X_{i,j,m})\) evaluates to 0, if job i is the predecessor of job j on some machine. Otherwise, it evaluates to V and thereby fulfils the inequality. Given that every job has a processing time greater than zero on every machine, constraint set (7) also enforces sub-tour elimination regarding the predecessor relations. Constraint set (8) calculates the makespan which includes the clearing time after the final job. Constraint set (9) forces the completion time of the dummy job to be 0 on every machine. Constraint sets (10) to (12) restrict the domains of the decision variables.

Note that constraint set (2) does not ensure that jobs are scheduled on one of their eligible machines. Instead, M1 sets the processing times of each job on its ineligible machines to V (an upper bound to the makespan) so that the minimisation will implicitly avoid assignment of jobs on ineligible machines. This way of implicitly modelling the machine eligibility constraints was used in the parallel machine scheduling model proposed by Perez-Gonzalez et al. (2019).

We additionally investigate in this paper an alternative model M2 that simply models machine eligibility explicitly by constraint sets (13) and (14) that replace constraint set (2).

Constraint set (13) ensures that every job is scheduled on exactly one of its eligible machines, while constraint set (14) prohibits jobs from being scheduled on any other machine.

Avalos-Rosales et al. (2015) propose new mathematical formulations for the problem described by Vallada and Ruiz (2011). Most notably, they include a new set of binary decision variables \(Y_{j,m}\) to describe whether or not job j is scheduled on machine m and they additionally introduce the notion of machine spans. Further, they replace the decision variables for the completion time \(C_{j,m}\) of job j on machine m by the variables \(C_{j}\).

In addition to the above-mentioned models M1 and M2, we extend the model proposed by Avalos-Rosales et al. (2015) to our problem statement to derive alternative mathematical formulations. These extensions include machine eligibility, due dates and the incorporation of final clearing times.

The resulting model M3 can be stated as:

Constraint set (15) ensures that every job is scheduled on exactly one machine. Constraint sets (16), (17) ensure that every job has predecessors and successors on a machine if and only if the job is scheduled on this machine. Constraint set (18) connects the completion time for each job to its predecessors. Constraint set (19) ensures that there is at most one first job on each machine. Constraint set (20) binds the machine span for each machine by summing up the setup times between its scheduled jobs and their processing times. Constraint sets (21) and (22) involve the tardiness of each job and force it to be nonnegative. Constraint (23) sets the dummy job’s completion time to 0. Constraint (24) and (25) enforce that variables \(X_{i,j,m}\) and \(Y_{i,m}\) have a binary domain.

The model M3 incorporates machine eligibility via penalisation of the corresponding processing times similar as it is done in M1, and therefore also sets the processing time of each job on its ineligible machines to V. We investigate in this paper an alternative model M4 that is based on M3 but explicitly models the machine eligibility constraints using hard constraints. M4 therefore replaces constraint set (15) with constraint sets (26) and (27).

Constraint set (26) ensures that every job is scheduled on exactly one of its eligible machines, while constraint set (27) prohibits jobs from being scheduled on any ineligible machines.

Helal et al. (2006) use a different formulation for constraint set (18) which aggregates the machines via sums instead of instantiating the constraints for every machine. Replacing constraint set (18) in models M3 and M4 by constraint set (28) results in models M5 and M6.

We additionally experimented with an alternative variant for constraint set (5), which removes the set of machines from the summation and instead includes it in the \(\forall \) quantifier. We therefore introduce constraint set (29):

Replacing constraint set (5) in models M1 and M2 by constraint set (29) results in models M7 and M8.

The implementation of models M1–M8 and their effectiveness will be discussed in Sect. 6.1.

4 Metaheuristic approaches

In addition to the MIP formulations, we propose several Simulated Annealing variants to quickly find high-quality solutions for large instances as they appear in the real world. We first describe how initial solutions for local search can be generated in Sect. 4.1. Afterwards, we propose neighbourhood operators for the PMSP in Sect. 4.2, and finally, we describe three Simulated Annealing variants in Sect. 4.3.

4.1 Constructing initial solutions

One way to create an initial solution for local search is to randomly assign jobs to machines. We can do this by selecting one of the eligible machines for each job randomly and then scheduling all jobs in random order on the selected machines.

An alternative to using a random construction of initial solutions is to greedily build an initial schedule. In our case, we propose a constructive greedy heuristic which aims to minimise both tardiness and makespan as follows: first, we sort the set of jobs in ascending order by the due dates. Afterwards, we process the ordered jobs and schedule one job after the other on one of its eligible machines. To decide which machine should be selected for a job, the greedy heuristic compares the total machine spans that would be caused by each of the feasible machine assignment and finally selects the assignment that leads to the lowest machine span (ties are broken randomly). If multiple jobs exist that have exactly the same due date, we compare possible machine assignments for all of these jobs in a single step instead of processing them in random order. In such a case we then also select the job to machine assignment that leads to the lowest increase in machine span. The detailed pseudo-code for this heuristic can be seen in Algorithm 1.

4.2 Search neighbourhoods

In this section, we introduce the neighbourhood relations that we use in our search method. We begin with the atomic neighbourhoods Shift and Swap, and then we describe the more complex block moves, called BlockShift and BlockSwap. Finally, we discuss the general notion of guidance used to bias the random selection toward promising moves.

4.2.1 Shift neighbourhood

A Shift move is configured to shift a given job j onto machine m at position p. In other words, the job j is first removed from its original location in the current solution. Any successor on the associated machine is then shifted by one position towards the front of the schedule. Finally, job j is re-inserted into the solution at its target position p in the schedule of machine m. Any job that is present on the target schedule at a later or equal position is shifted towards the end of the schedule.

We call a shift move an intra-machine shift move if job j is already scheduled on machine m in the current solution. Otherwise, if j is currently assigned to a machine different to m, it is called an inter-machine shift move. An example of an inter-machine shift move is visualised in Fig. 1. The figure shows a schedule for two machines, before and after job 3 is moved from machine 1 to machine 2. Note that the length of the arrows between two consecutive jobs indicates the length of the required setup time.

Shift moves are feasibility-preserving as long as the target machine m is eligible for the selected job j.

4.2.2 Swap neighbourhood

A Swap move swaps the position of two distinct jobs \(j_1\) and \(j_2\). If both jobs are scheduled on the same machine, we refer to such a move as an intra-machine swap. Otherwise, if jobs are scheduled on different machines, we call it an inter-machine swap. An example of an inter-machine swap move is visualised in Fig. 2. The figure shows a schedule for two machines, before and after job 3 is swapped with job 11 between machine 1 and machine 2.

Note that in the case of inter-machine swaps it is likely that the processing times and associated setup times for both jobs will change. Furthermore, completion times of all jobs that are scheduled on the affected machines after the swapped jobs need to be updated. When performing intra-machine swaps, the processing times of the swapped jobs do not change. However, the completion times of other jobs on the same schedule still need to be updated.

To preserve feasibility for swap moves, it has to be ensured that the first job’s machine has to be eligible for the second job and vice versa.

4.2.3 Block moves

We introduce the notion of block moves, as a variant of the basic shift and swap neighbourhoods: A block is defined as a set of jobs that are scheduled consecutively on a single machine. Therefore, a block move operates on a set of jobs instead of a single job. This concept can be applied to both Swap and Shift moves, leading to two new neighbourhoods that we call BlockSwap and BlockShift, respectively. BlockShift moves are similar to regular shift moves, but include an additional parameter l determining the length of the block. In turn, every BlockSwap move uses two additional attributes \(l_1\) and \(l_2\) representing the length of the blocks.

Block moves are motivated by our real-life application, where usually several jobs process the same material type and thus are in the best case scheduled consecutively to avoid unnecessary setup times. Therefore, moving blocks of jobs at once can be beneficial to preserve low setup times when searching for neighbourhood solutions. To the best of our knowledge, using such block moves to approach parallel machine scheduling problems has not been considered in the literature before.

To be feasibility-preserving, the target machine of a BlockShift move has to be included in the intersection of all eligible machine sets of the affected blocks. For intra-machine BlockSwap moves, the blocks may not overlap and the second block’s machine has to be contained in the intersection of the eligible machine sets of all jobs in the first block and vice versa.

4.2.4 Guidance in random move generation

As customary for many practical scheduling problems, the size of the search neighbourhood becomes tremendously large for real-world instances. Therefore, it might be infeasible to explore all possible neighbourhood moves in reasonable time and the probability of randomly guessing an improving move usually is very low. Thus, it can be beneficial to introduce problem-specific strategies that guide exploration towards promising areas in the search neighbourhood. The general idea is to preferably select moves affecting jobs and machines that contribute to the cost of the solution.

Santos et al. (2019) propose a guidance strategy for their Simulated Annealing approach that focuses on reducing the makespan. They point out that a move can only improve the makespan if it involves the machine with the longest machine span. For this reason, they generate moves such that at least one of the involved machines is fixed to be this machine, in addition to randomly generated moves.

Similarly, in our problem the tardiness of a solution can only be improved if a tardy job is either rescheduled at an earlier time, or its predecessors are shifted to other machines or later positions. Therefore, we propose a move selection strategy that is biased towards moves that shift tardy jobs to earlier positions in the schedule as follows: Whenever a tardy job exists in the schedule, either the job itself or one of its predecessors is selected randomly. Otherwise, if no tardy job exists, any random job is chosen. Additionally, in case of an intra-machine (block) shift move we restrict the target position to be earlier than the source position. The detailed procedure to select a job that can improve tardiness is described in Algorithm 2.

To generate the moves we include, in addition to a completely random move generation, both the makespan guidance and the tardiness guidance strategies. One of the mentioned generation strategies is chosen during a search iteration based on random probabilities that are configured by parameters.

4.3 Simulated annealing

Simulated Annealing is a metaheuristic procedure which is inspired by the cooling processes appearing in metallurgy and has first been proposed by Kirkpatrick et al. (1983). The main idea is to generate random neighbourhood moves and determine the probability of move acceptance based on the change in solution quality caused by the move. Moves that lead to an improved objective function or no change in solution cost are accepted in any case. To determine whether or not a move that weakens the solution quality should be accepted, the notion of temperature is used. Simply put, the higher the temperature, the higher is the probability to accept also worsening moves. As the search goes on, the temperature lowers its value according to some cooling scheme and eventually reaches values close to zero. Towards the end of search Simulated Annealing therefore evolves into a Hill-Climber as only improving moves are accepted.

Pseudo-code for the basic procedure of Simulated Annealing can be seen in Algorithm 3, where \(T_{\max }\), \(N_s\) and \(\alpha \) are the initial temperature, the number of samples per temperature, and the cooling rate, respectively.

In the remainder of this section, we propose three different variants of Simulated Annealing with different cooling schemes.

4.3.1 Reheating simulated annealing (SA-R)

This uses a geometric cooling scheme, i.e. \(t_{i+1} := t_{i} \cdot \alpha \), where the cooling rate \(\alpha \) is a predefined constant. At each temperature, a number of moves \(N_{s}\) are generated before the next cooling step is applied. To determine the neighbourhood from which to sample the next move, we use a set of hierarchical probabilities (\(p_{I}\), \(p_{S}\), and \(p_{B}\)). When the next move is determined to be a block move, we determine its size by uniformly sampling from the set \(\{2, ..., B_{\max }\}\). Further options for move generation are whether or not to enable guidance towards minimising tardiness or makespan. This is again handled by corresponding probabilities, \(p_{T}\) and \(p_{M}\).

If the generated move improves the solution, it is accepted immediately. The probability of accepting a worsening move is calculated via Eq. (30) where \(\delta \) denotes the cumulative weighted delta cost introduced by the move and \(t_c\) is the current temperature.

In order to compute \(\delta \), we define the weighted cost of a solution S as C(S) Eq. (31), where C(S) is the weighted sum of total tardiness and the makespan.

W should be a constant that is sufficiently large to ensure the lexicographic order of the objectives (in our experiments we set \(W := 10^5\)). Given a current solution S and a solution \(S'\), we then set \(\delta := C(S') - C(S)\).

Since improving moves are accepted unconditionally, only positive values for \(\delta \) can occur in this formula. It should be noted that higher values for \(\delta \) lead to lower values in the exponent and thus lower acceptance probabilities.

When the algorithm reaches its minimum temperature \(T_{\min }\), a reheat occurs and its temperature is set to the initial temperature \(T_{\max }\). Execution stops when a time limit is reached.

The parameters for this variant of Simulated Annealing are the following:

-

\(T_{\max }\): Initial Temperature

-

\(T_{\min }\): Minimum Temperature

-

\(N_{s}\): Samples per Temperature

-

\(\alpha \): Cooling Rate

-

\(p_{I}\): Probability of generating inter-machine moves (as opposed to intra-machine moves)

-

\(p_{S}\): Probability of generating shift moves (as opposed to swap moves)

-

\(p_{B}\): Probability of generating block moves (as opposed to single-job moves)

-

\(p_{T}\): Probability of applying tardiness guidance in the move generation

-

\(p_{M}\): Probability of applying makespan guidance in the move generation

-

\(B_{\max }\): Maximum size of blocks

4.3.2 Simulated annealing with dynamic cooling (SA-C)

This variant tries to continually adapt its cooling rate in such a way that the minimum temperature is reached at the end of the algorithm’s time limit. Therefore, an estimate of how many iterations can still be done within the time limit is calculated after each iteration. Before every cooling step, the current cooling rate \(\alpha _i\) is computed according to Eq. (32), where x is the number of cooling steps left, \(T_{\min }\) and \(t_c\) are the minimum and current temperature, respectively. Pseudo-code for this dynamic cooling procedure can be seen in Algorithm 4.

In order to minimise the time spent in high temperatures, we further apply a cut-off mechanic. This counts the number of accepted moves at every temperature and forces an immediate cooling step if a specified threshold \(N_{a}\) is exceeded. This threshold is often specified as ratio \(\rho = \frac{N_{a}}{N_{s}}\) and a typical value is 0.05. Overall, SA-C requires the same set of parameters as SA-R, except for \(\alpha \), which is replaced by \(\rho \).

4.3.3 Simulated annealing with iteration budget (SA-I)

This variant uses a constant cooling rate to determine the temperature for each iteration. In addition to a time limit, it uses a fixed iteration budget \(\mathcal {I}\) to limit its run time. The idea is to choose the value for \(\mathcal {I}\) is to estimate the possible number of iterations within the given time limit, based on the execution speed of sample iterations on the benchmark machine (in our experiments, we set \(\mathcal {I}\) to \(185 \cdot L\), where L is the time limit in milliseconds). The provided iteration budget is split evenly over the temperatures, such that the minimum temperature is reached when its iteration budget is exhausted. As such, the number of samples per temperature is defined as the value of a function depending on the maximum and minimum temperature, which can be seen in Eq. (33).

We apply the same cut-off mechanism as in SA-C. Since the cooling rate is adjusted differently than for SA-C, this may cause the temperature to fall below the actual minimum temperature. Due to \(N_{s}\) being calculated directly by SA-I, it does not have to be provided as a parameter. However, the cooling rate \(\alpha \) has to be stated as a parameter for SA-I.

5 Instances

To evaluate the proposed approaches, we perform a large number of experiments with a set of randomly generated instances, a set of real-life instances, and instances on a related PMSP from the literature. In Sect. 5.1, we provide information on our instance generator, then we describe the set of randomly generated instances in Sect. 5.2. Later in Sect. 5.3, we present the real-life instances that have been provided to us by our industrial partner. Additional information on the problem instances from the literature are given in Sect. 5.4.

5.1 Instance generator

To describe the generation of instances we use the notion of materials, as our real-life data determines the setup times using materials instead of jobs. As every job processes a single material, converting a given material-based setup time matrix into a job-based setup time matrix is a simple preprocessing step. The main reason for this kind of specification of the setup times is to reduce space requirements of the instance files. A similar instance format has also been used previously in the literature (e.g. Caniyilmaz et al. 2015).

To create a large pool of instances we implement a random instance generator which is configured by parameters that specify the desired instance size (i.e. the number of machines, materials and jobs) as well as a random seed. This instance generator is based on a similar one proposed by Vallada and Ruiz (2011), but we extend it to include also the generation of machine eligibility constraints and due dates. The detailed pseudo-code is provided in Algorithm 5 (where parameters \(NoMach, NoMat, NoJbs\) are the number of machines, the number of materials, and the number of jobs).

The processing time of each job on each machine and the setup time between every pair of materials are drawn from uniform distributions [1, 100) and [1, 125), respectively. Setup times between two jobs sharing the same material are set to zero.

Exactly one material is assigned to each job as follows: At first, one job after the other gets matched to an unused material until every material has been assigned exactly once. Afterwards, a randomly selected material is assigned to any job that has not been matched to a material yet. Thus, no two jobs will process the same material if the number of jobs is lower or equal to the number of materials.

For every job, the corresponding set of eligible machines is determined in the following way: First, the eligible machine count m is sampled from a uniform distribution [1, M], where M is the total number of machines. Then, the set of available machines is sampled m times without replacement to determine the eligible machines for the job.

We further use three different procedures to assign due dates randomly to create different sets of benchmark instances.

In the first two procedures, the due dates are determined by constructing a reference solution for the problem and afterwards setting the scheduled completion time of each job as the corresponding due date. Thus, it is ensured by construction that a feasible schedule exists for every generated problem instance even though the generated reference solution may not be optimal with respect to makespan. The construction of such a reference solution consists of the following steps (see Algorithm 5, lines 35–46): First, we determine a random order of jobs in which we will schedule them one after the other. Then, for every job we randomly select one of its eligible machines according to an independently specified selection strategy. The job is then appended to the selected machine’s schedule and the job’s due date is set to its completion time based on the current schedule.

Our first due date generation procedure (S-style) constructs a solution as described above with the use of a random machine selection strategy.

The second due date generation procedure (T-style) also constructs a reference solution but aims to obtain tighter due dates through the use of an alternative greedy machine selection heuristic (see Algorithm 6). This alternative strategy greedily selects the machine that causes the lowest setup time when the corresponding job is scheduled.

The third due date generation procedure (P-style) does not rely on constructing a reference solution but assigns random due dates by sampling the values from a uniform distribution

\([\widetilde{C}_{\max } \cdot (1 - \tau - R / 2), \widetilde{C}_{\max } \cdot (1 - \tau + R / 2)]\). The variables \(\tau \) and R in this case are parameters determining the tightness and variance of the generated due dates. For the calculation of the approximate makespan \(\widetilde{C}_{\max }\), we use the formula suggested by Perez-Gonzalez et al. (2019) (see Eq. (34)). Similar approaches to randomly sample due dates have been used by Potts and Wassenhove (1982), Chen (2006) and Lee et al. (2013).

5.2 Generated instances

Using the previously described instance generator, we generated 560 instances that can be separated into six different categories. The instances of each category have been generated with a differently configured random instance generator (see Table 2).

When every job in an instance is assigned to a different material (i.e. no pair of jobs use the same material), then the instance is classified as using Unique Materials. In this case, between any pair of jobs, the setup time is greater than zero. This is the case commonly found in the literature.

Instances with less materials than jobs are classified as using Shared Materials, because at least one material is shared between multiple jobs. As mentioned earlier, in such a case the setup time between pairs of jobs is zero, because no change in tooling is required. This reflects the structure observed in our real-life instances.

For the due date generation procedure that does not use a reference solution, we set the parameters \(\tau = 0.25\) and \(R = 0.5\), to generate instances that are unlikely to have zero-cost solutions with respect to tardiness.

We sample the input parameters for our instance generator (i.e. the number of jobs, machines and materials) from the uniform distributions described in Table 3. For instances with shared materials, both the number of jobs and the number of materials are sampled separately from a uniform distribution. For instances with unique materials, the number of materials is set to be equal to the number of jobs. The bounds for the value ranges have been chosen to generate instances with realistic size.

As our metaheuristic approaches rely on a number of parameters, we use the automated parameter configuration tool SMAC to find efficient parameter configurations. To avoid over-fitting of the tuned parameters on the instances we use in our experiments, we split the 560 randomly generated instances into a training set for parameter tuning and a validation set that is used in our final experimental evaluation. To create our training set we uniformly sampled 90 S-style instances with shared materials and 90 S-style instances with unique materials. Similarly, we further sampled two sets of 90 instances from the T-style ones. Finally, we also sampled 40 P-style instances with shared materials and another 40 P-style ones with unique materials. Our training set therefore consists of 440 instances in total, while the validation set consists of the remaining 120 instances. Further details on the parameter tuning are given in Sect. 6.2.1.

The generated instances are publicly available at https://doi.org/10.5281/zenodo.4118241, and the instance generator is available on request.

5.3 Real-life instances

For testing purposes, our industrial partners provided us with three real-life instances (A, B and C) that represent planning scenarios from industrial production sites. The characteristics of these instances can be seen in Table 4.

We further created four additional instances by scaling up instance C (up to 16 times the original size). Additionally, we include a new instance, called C-assigned, that uses the same set of jobs and machines as instance C, but predetermines a machine assignment for each job. Similar to instance C, we scaled up instance C-assigned to create another four instances. The set of all real-life based instances used in our benchmark experiments can be seen in the first column of Table 13.

5.4 Instances from the literature

We evaluate the performance of our metaheuristics on instances for the PMSP variant that has been described by Perez-Gonzalez et al. (2019).Footnote 1 The only difference to the problem we investigate is that their objective function only considers the minimisation of total tardiness and does not include the makespan. Since the makespan is the secondary objective in our problem and thus incomparably less important than total tardiness, we can directly use our metaheuristics to approach the problem from Perez-Gonzalez et al. (2019) by simply ignoring the makespan.

6 Computational results

To evaluate our approaches we performed a large number of experiments based on the sets of instances described in Sect. 5.1.

6.1 Comparison of mixed-integer programming formulations

We implemented the MIP models described in Sect. 3 with Gurobi 8.1.1. Experiments with the MIP models were performed on a computer with an AMD Ryzen 2700X Eight-Core CPU and 16 GB RAM.

All eight models were evaluated on the 25 smallest generated instances from the validation set under a time limit of 1800 s per instance. Table 5 summarises the results (tardiness/makespan) for each instance.

It can be seen that the models that account for the machine eligibility requirements by a set of hard constraints (M2, M4, M6, and M8) are able to provide solutions for more instances than their counterparts (M1, M3, M5, M7) that set ineligible machines processing times to a makespan upper bound. In fact, models M2 and M6 are able to provide solutions for all 25 randomly generated instances, while M4 and M8 can provide solutions for all instances but one. Interestingly, the solutions found by M1 exhibit often higher quality than the corresponding solutions found by M2, the same is true for M7 and M8 which are similar to M1 and M2, but differ only in the formulation of constraint set (29). If we compare the results produced by M1 and M7, we see that the solution quality is only slightly different for most instances. However, it seems that M1 reaches overall best results more often than M7. Similarly, we can see that M2 produces slightly more feasible results than its counterpart M8, although M8 produces a better solution quality for some instances and can reach overall best results for four instances. If we compare results produced by models M3 and M5 with their counterparts M4 and M6 we can clearly see that M4 and M6 produce better results for the majority of the instances. The novel model adaptation that we described in Sect. 3 (M6) is able to find the best solutions for 14 of the randomly generated instances. Nine of these instances are P-style, four are S-style and one is a T-style instance. M4 provides the best solution two times for P-style instances, three times for S-style ones and four times for T-style ones. Further, M4 is able to prove optimality for two solutions, while M6 is able to find one optimal solution. Overall, M4 and M6 provide the best solutions for 22 randomly generated instances.

Tables 6 and 7 show initial and final dual bounds obtained by the MIP solver for each instance. We can see M4 and M6 produce the best bounds for most instances, indicating that these two formulations often lead to a tighter linear relaxation. However, other formulations are able to provide better bounds in some cases.

To calculate the relative performance, we use the Relative Percentage Deviation (RPD) for every instance I and solution S as defined in Eq. (35). RPD values have also been used as a performance measure in publications on related problems (e.g. Vallada and Ruiz 2011).

Figure 3 visualises the RPD values of each model in the form of box plots. In Fig. 3a, results for all models except for M3 are presented. Figure 3b shows RPD values of models with explicit machine eligibility constraints and Fig. 3c shows RPD values for models M4, M5 and M6. Model M3 is excluded from the comparisons, as it was unable to solve most instances. Only those instances that could be solved by all compared models within the time limit are included in the plots to avoid missing values.

Overall, we conclude that models M4 and M6 provide the best results for the majority of instances in our experiments. The best model depends on the instance characteristics: For T-style instances, M4 shows the best performance, while M6 produces the best results for P-style instances.

6.2 Comparison of metaheuristic approaches

Both parameter tuning and benchmark experiments for the metaheuristics have been executed on a computer with an Intel Xeon E5-2650 v4 12-Core processor that has 24 logical cores and 252 gigabytes of RAM.

6.2.1 Parameter tuning

As our methods include various parameters which have an impact on the final results, we performed automated parameter tuning using Sequential Model-based Algorithm Configuration (SMAC) as proposed by Hutter et al. (2011).

For every Simulated Annealing variant, we started 24 parallel SMAC runs with a wallclock time budget of 18 h per run. The initial configurations used for the starting point of SMAC are based on intuition about plausible parameter values. Table 8 shows the value ranges for all parameters as well as their initial values. The tuned parameters proposed by SMAC are listed in Table 9.

To investigate the robustness of the SA variants against different configurations, we conducted additional experiments for the so-called incumbent configurations (i.e. the best found configurations) of each parallel SMAC run. We let every SA variant run 20 times with every incumbent configuration on the validation set. Box plots for the resulting RPD values can be seen in Fig. 4. It can be seen that SA-I and SA-R (on the left and right thirds of the plot, respectively) are much more robust against changes in the configuration than SA-C. Figure 5 shows the RPD values for all incumbents, grouped per SA variant for a clearer visual representation. Inspection of the plot scales shows that SA-C and SA-R are the least and most robust of the compared SA variants, respectively. Figure 5a, c shows that most configurations result in a very similar performance for both SA-I and SA-R. However, some configurations lead to significantly better results than the others. This suggests that both SA-I and SA-R are largely robust against changes in the configuration, but still have the potential for some optimisation.

6.2.2 Results on randomly generated instances

In this section, we present the results produced by the proposed Constructive Heuristic (Algorithm 1) and Simulated Annealing variants. For each of the investigated Simulated Annealing variants, we performed experiments with a randomly generated initial solution (R) and initial solutions produced by our Constructive Heuristic (CH). All experiments were performed within a time limit of 60 s per run regardless of the instance size. For SA-I, we set an iteration budget of 11, 100, 000 iterations, as this corresponds to the average number of iterations that the other Simulated Annealing variants performed within the 60 seconds time limit.

Tables 10, 11 and 12 show the detailed experimental results for all SA variants. Fig. 6 further visualises an overview of the relative algorithm performances on the entire validation set as box plots. As expected, all Simulated Annealing variants are able to significantly improve the quality of their initial solution (Algorithm 1). Further, we can see in Fig. 6b that SA starting from a good initial solution produces significantly lower mean RPDs than SA starting from a random initial solution. Figure 6c shows box plots over the median RPD values per instance for each algorithm. The fact that the median RPD values are not notably different from the mean RPD values for SA (CH) indicates its robustness.

Comparing SA variants with random initial solution, we conclude that SA-R shows a slightly better performance compared to the other two SA variants. We further observe that all SA variants produce similar results when starting from a greedily constructed initial solution.

6.2.3 Results on real-life instances

To evaluate our approaches on the real-life instances, we use the same computational environment and time limits as in Sects. 6.1 and 6.2. Table 13 shows the best solution costs obtained by MIP (across all models) and the median absolute solution costs provided by each SA variant. All methods obtained the same solution costs for instances A and C-assigned, which also could be solved to optimality by MIP. For the rest of the instances, SA produced better results than MIP in our experiments. All SA variants achieved the same result in all 20 runs for four instances (A, B, C-restricted and C-restricted x2). Only for the larger instances we observe varying solution qualities. SA-R exhibits a slightly better performance on the larger instances (C-x8, C-x16 and C-assigned-x8), while SA-I performs best on smaller instances (C-x2 and C-x4). The box plots in Fig. 7 show that all SA variants perform very similarly, with RPDs close to zero.

6.2.4 Influence of the features

To investigate the influence of block moves and guidance strategies on the performance of the metaheuristics, we performed additional experiments with different configurations on the validation set. We created further configurations for each SA variant by changing the value for a single feature in the configuration while leaving everything else fixed. The derived configurations are the following:

-

Standard The configuration proposed by SMAC

-

X% Blocks The probability for generating block moves \(p_{B}\) is set to 0%, 10%, 20%, and 30%, corresponding to \(p_B = 0.0\), 0.1, 0.2, and 0.3, respectively.

-

G-T The probability for applying guidance towards minimising makespan \(p_{M}\) is set to zero

-

G-M The probability for applying guidance towards minimising tardiness \(p_{T}\) is set to zero

-

G-None Both guidance probabilities (\(p_M\) and \(p_T\)) are set to zero

The RPD values for all three SA variants over the validation instances can be seen in Fig. 8. For SA-I, any modification of the standard configuration leads to performance degradation as can be seen in Fig. 8a. For SA-C and SA-R, the results are not as clear (see Fig. 8b, c). In the case of SA-C, block moves apparently have the most negative impact, as increases in block move probabilities lead to increases in RPD values. SA-R loses some performance when the guidance is reduced in any way but does not perform significantly different with higher probabilities for block moves.

Overall, there is no configuration for any of the SA variants that outperforms the standard configuration. Thus, we conclude that a limited amount of guidance towards minimisation of both makespan as well as tardiness is favourable over a purely unguided search. The block moves in their current implementation, however, do not provide a significant benefit and, if selected to often, are detrimental to the performance of SA-I and SA-C.

To further analyse the influence of block moves on the instance structure, we can look on only the results for the set of instances which use unique materials without including the results for shared materials instances and vice versa.

Figures 9, 10, and 11 show the results produced by different block move configurations using SA-I, SA-C, and SA-R separately for unique material instances and shared material instances.

For SA-I we can see that using no block moves still produces the best results for both unique material and shared material instances. However, for SA-C and SA-R the results show that for the unique material instances the 10% Blocks configuration produced the best results, whereas for shared material instances again the 0% Blocks configuration performs best.

6.2.5 Comparison to results from the literature

To assess the quality of our methods, we compare them to the state of the art approach that was proposed recently for the problem provided by Perez-Gonzalez et al. (2019).

Since the optimal solution for the majority of these instances has an objective function value of zero, we use the Relative Deviation Index (RDI, Eq. (36)) as performance measure instead of the RPD. Similar performance measures have been used in previous publications (e.g. Perez-Gonzalez et al. 2019).

We ran each of our SA variants 20 times on every instance with a time limit that is based on the run time formula used by Perez-Gonzalez et al. (2019). The parameters for each of our metaheuristics are set to the ones determined by SMAC.

The results for the Clonal Selection Algorithms

(CSA_t and CSA_f) described by Perez-Gonzalez et al. (2019) have been kindly provided to us by the authors. Publicly available CPU benchmarksFootnote 2 show that the CPU used in our experiments is roughly 1.5 times faster than the Intel i7 7700 processor that was used by Perez-Gonzalez et al. (2019) with respect to single-thread performance. Therefore, we used a time limit of \(M \cdot N \cdot \frac{20}{2}\) milliseconds per instance, where M is the number of machines and N is the number of jobs (Perez-Gonzalez et al. (2019) used a time limit of \(M \cdot N \cdot \frac{30}{2}\) in their experiments).

For the majority of the instances (2469 small, 5058 medium and 5909 big), all runs of the Simulated Annealing variants and all runs of the Clonal Selection Algorithms produced solutions with the exact same solution cost. Figure 13 shows box plots for all evaluated algorithms on the remaining 1371 small, 702 medium and 91 big instances, respectively. In the aggregated results it can be seen that all SA variants outperform the CSA algorithms regarding solution quality for most of the instances. This observation is further supported by Mann–Whitney–Wilcoxon tests using a confidence level of 0.95 which show that the proposed SA variants produce significantly improved results than both CSA_t and CSA_f in our experiments.

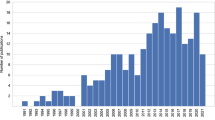

When looking at the best results per instance for all SA and both CSA variants, respectively, we observe that in 3291 small, 5548 medium and 5979 big instances, the best SA result has the same cost as the best CSA result. For 506 small, 208 medium and 21 big instances, the best SA result is better than the best CSA result. On the other hand, for 43 small and 4 medium instances, the best CSA result is better than the best SA result. Figure 12 shows the number of instances where CSA outperforms SA, grouped by the number of jobs per instance. We observe that the number of instances where CSA outperforms SA declines with instance size, which indicates that SA scales better with increasing instance size.

Detailed results of all our experiments are publicly available for download at https://doi.org/10.5281/zenodo.4284100.

7 Conclusions

In this paper, we have investigated several solution approaches for a novel variant of the Unrelated Parallel Machine Scheduling Problem. To approach the problem, we have proposed several Simulated Annealing-based metaheuristics that utilise different neighbourhood operators and have investigated variations of a mathematical formulation.

Based on a set of randomly generated and real-life instances, we performed a thorough evaluation of all investigated methods. Furthermore, we evaluated the performance of the proposed metaheuristics on a related problem from the literature, and compared the results to the state-of-the-art on a set of existing benchmark instances. The experimental results show that Simulated Annealing is able to provide high-quality solutions within short run times and outperforms other approaches for the large majority of instances. Using our approach, we were further able to provide previously unknown upper bounds for a large number of benchmark instances from the literature.

For future work, it may be interesting to select Block Moves based on solution-specific information. For instance, a more sophisticated heuristic for the selection of job blocks could be employed by defining a distance measure between jobs and building blocks based on this measure. Additionally, it would be interesting to investigate new neighbourhood operators and hybrid algorithms for this problem.

Notes

The instances we use in our experiments have been kindly provided to us by the authors.

References

Adan, J., Adan, I. J. B. F., Akcay, A., Van den Dobbelsteen, R., & Stokkermans, J. (2018). A hybrid genetic algorithm for parallel machine scheduling at semiconductor back-end production. In ICAPS (pp. 298–302). AAAI Press.

Afzalirad, M., & Rezaeian, J. (2016). Resource-constrained unrelated parallel machine scheduling problem with sequence dependent setup times, precedence constraints and machine eligibility restrictions. Computers & Industrial Engineering, 98, 40–52.

Afzalirad, M., & Rezaeian, J. (2017). A realistic variant of bi-objective unrelated parallel machine scheduling problem: NSGA-II and MOACO approaches. Applied Soft Computing, 50, 109–123.

Afzalirad, M., & Shafipour, M. (2018). Design of an efficient genetic algorithm for resource-constrained unrelated parallel machine scheduling problem with machine eligibility restrictions. Journal of Intelligent Manufacturing, 29(2), 423–437.

Allahverdi, A. (2015). The third comprehensive survey on scheduling problems with setup times/costs. European Journal of Operational Research, 246(2), 345–378.

Allahverdi, A., Ng, C. T., Cheng, T. C. E., & Kovalyov, M. Y. (2008). A survey of scheduling problems with setup times or costs. European Journal of Operational Research, 187(3), 985–1032.

Al-Salem, A. (2004). Scheduling to minimize makespan on unrelated parallel machines with sequence dependent setup times. Engineering Journal of the University of Qatar, 17(1), 177–187.

Arnaout, J.-P., Musa, R., & Rabadi, G. (2014). A two-stage ant colony optimization algorithm to minimize the makespan on unrelated parallel machines—Part II: Enhancements and experimentations. Journal of Intelligent Manufacturing, 25(1), 43–53.

Arnaout, J.-P., Rabadi, G., & Musa, R. (2010). A two-stage ant colony optimization algorithm to minimize the makespan on unrelated parallel machines with sequence-dependent setup times. Journal of Intelligent Manufacturing, 21(6), 693–701.

Avalos-Rosales, O., Angel-Bello, F., & Alvarez, A. (2015). Efficient metaheuristic algorithm and re-formulations for the unrelated parallel machine scheduling problem with sequence and machine-dependent setup times. The International Journal of Advanced Manufacturing Technology, 76(9–12), 1705–1718.

Bektur, G., & Saraç, T. (2019). A mathematical model and heuristic algorithms for an unrelated parallel machine scheduling problem with sequence-dependent setup times, machine eligibility restrictions and a common server. Computers & OR, 103, 46–63.

Caniyilmaz, E., Benli, B., & Ilkay, M. S. (2015). An artificial bee colony algorithm approach for unrelated parallel machine scheduling with processing set restrictions, job sequence-dependent setup times, and due date. The International Journal of Advanced Manufacturing Technology, 77(9), 2105–2115.

Chen, J.-F. (2006). Minimization of maximum tardiness on unrelated parallel machines with process restrictions and setups. The International Journal of Advanced Manufacturing Technology, 29(5), 557–563.

Du, J., & Leung, J.Y.-T. (1990). Minimizing total tardiness on one machine is NP-hard. Math. Oper. Res., 15(3), 483–495.

Fanjul-Peyro, L., Ruiz, R., & Perea, F. (2019). Reformulations and an exact algorithm for unrelated parallel machine scheduling problems with setup times. Computers & OR, 101, 173–182.

Garey, M. R., & Johnson, D. S. (1979). Computers and intractability: A guide to the theory of NP-completeness. San Francisco: W. H. Freeman.

Gedik, R., Kalathia, D., Egilmez, G., & Kirac, E. (2018). A constraint programming approach for solving unrelated parallel machine scheduling problem. Computers & Industrial Engineering, 121, 139–149.

Graham, R. L., Lawler, Lenstra, E. L. J. K., & Rinnooy Kan, A. H. G. (1979). Optimization and approximation in deterministic sequencing and scheduling: A survey. In Annals of discrete mathematics (Vol. 5, pp. 287–326). Elsevier.

Helal, M., Rabadi, G., & Al-Salem, A. (2006). A tabu search algorithm to minimize the makespan for the unrelated parallel machines scheduling problem with setup times. International Journal of Operations Research, 3(3), 182–192.

Hutter, F., Hoos, H. H., & Leyton-Brown, K. (2011). Sequential model-based optimization for general algorithm configuration. In LION, volume 6683 of Lecture Notes in Computer Science (pp. 507–523). Springer.

Kirkpatrick, S., Gelatt, C. D., & Vecchi, M. P. (1983). Optimization by simulated annealing. Science, 220(4598), 671–680.

Lee, J.-H., Jae-Min, Yu., & Lee, D.-H. (2013). A tabu search algorithm for unrelated parallel machine scheduling with sequence-and machine-dependent setups: minimizing total tardiness. The International Journal of Advanced Manufacturing Technology, 69(9–12), 2081–2089.

Perez-Gonzalez, P., Fernandez-Viagas, V., García, M. Z., & Framiñan, J. M. (2019). Constructive heuristics for the unrelated parallel machines scheduling problem with machine eligibility and setup times. Computers & Industrial Engineering, 131, 131–145.

Potts, C. N., & Van Wassenhove, L. N. (1982). A decomposition algorithm for the single machine total tardiness problem. Operations Research Letters, 1(5), 177–181.

Rabadi, G., Moraga, R. J., & Al-Salem, A. (2006). Heuristics for the unrelated parallel machine scheduling problem with setup times. Journal of Intelligent Manufacturing, 17(1), 85–97.

Rambod, M., & Rezaeian, J. (2014). Robust meta-heuristics implementation for unrelated parallel machines scheduling problem with rework processes and machine eligibility restrictions. Computers & Industrial Engineering, 77, 15–28.

Santos, H. G., Toffolo, T. A. M., Silva, C. L. T. F., & Berghe, G. V. (2019). Analysis of stochastic local search methods for the unrelated parallel machine scheduling problem. ITOR, 26(2), 707–724.

Tran, T. T., Araujo, A., & Beck, J. C. (2016). Decomposition methods for the parallel machine scheduling problem with setups. INFORMS Journal on Computing, 28(1), 83–95.

Vallada, E., & Ruiz, R. (2011). A genetic algorithm for the unrelated parallel machine scheduling problem with sequence dependent setup times. European Journal of Operational Research, 211(3), 612–622.

Ying, K.-C., Lee, Z.-J., & Lin, S.-W. (2012). Makespan minimization for scheduling unrelated parallel machines with setup times. Journal of Intelligent Manufacturing, 23(5), 1795–1803.

Acknowledgements

The financial support by the Austrian Federal Ministry for Digital and Economic Affairs, the National Foundation for Research, Technology and Development and the Christian Doppler Research Association is gratefully acknowledged. Further, we would like to thank Paz Perez-Gonzalez for providing us with their problem instances and results for comparison.

Funding

Open access funding provided by TU Wien (TUW).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Moser, M., Musliu, N., Schaerf, A. et al. Exact and metaheuristic approaches for unrelated parallel machine scheduling. J Sched 25, 507–534 (2022). https://doi.org/10.1007/s10951-021-00714-6

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10951-021-00714-6