Abstract

Between June 2019 and March 2020, thousands of wildfires spread devastation across Australia at the tragic cost of many lives, vast areas of burnt forest, and estimated economic losses upward of AU$100 billion. Exceptionally hot and dry weather conditions, and preceding years of severe drought across Australia, contributed to the severity of the wildfires. Here we present analysis of a very large ensemble of initialized climate simulations to assess the likelihood of the concurrent drought and fire-weather conditions experienced at that time. We focus on a large region in southeast Australia where these fires were most widespread and define two indices to quantify the susceptibility to fire from drought and fire weather. Both indices were unprecedented in the observed record in 2019. We find that the likelihood of experiencing such extreme susceptibility to fire in the current climate was 0.5%, equivalent to a 200 year return period. The conditional probability is many times higher than this when we account for the states of key climate modes that impact Australian weather and climate. Drought and fire-weather conditions more extreme than those experienced in 2019 are also possible in the current climate.

Similar content being viewed by others

Introduction

The 2019–2020 wildfire season in Australia was among the most catastrophic in recorded history, causing severe social, environmental, ecological and economic impacts across the continent. An area larger than the size of the United Kingdom was burned (estimates range from 24 to 34 million hectares1), including at least 21 percent of Australia’s temperate forests2,3 and over 3000 homes1. Thirty-three deaths occurred as a direct result of the fires4. Hundreds more deaths, and thousands of hospital and emergency-department admissions, have been attributed to the extreme levels of air pollution resulting from the wildfire smoke5,6. The estimated death toll for animals is in the billions1, with fears that some species have been driven to extinction7,8. Recent estimates of the total economic loss to Australia resulting from the 2019–2020 wildfires are in the order of AU$100 billion9,10.

Two key factors have been linked to the severity of the 2019–2020 wildfires. First, the exceptionally dry conditions in the years and months leading up to the fire season produced very low fuel-moisture content, especially in eastern Australia11,12,13,14. The widespread drought conditions have been connected to the states of the El Niño Southern Oscillation (ENSO) and Indian Ocean Dipole (IOD) in the years and months preceding 202015,16,17. Second, the extremely hot and dry weather conditions experienced across Australia during the 2019–2020 summer were particularly favorable to fire ignition and spread11,18,19,20,21. A number of extreme weather records were broken over this period, which included Australia’s highest national daily-averaged temperature (41.9 oC)15 and record-high values of the Forest Fire Danger Index (FFDI) in areas of all Australian States and Territories19. The Southern Annular Mode (SAM), which was strongly in its negative phase during the spring and summer of 2019, has been implicated in the unusually hot and dry conditions across eastern Australia22.

Many studies have investigated how climate and weather conditions favorable to wildfires in Australia have changed historically and how they will continue to change into the future. Paleoclimate records indicate an increase in the last century in the occurrence of the fire-promoting phases of both ENSO23 and the IOD15,24. These increases may continue in the coming decades25,26. Observed records since the mid-twentieth century show a trend towards more dangerous fire-weather conditions for much of Australia27,28,29 and a corresponding reduction in the time between major wildfires30. Future projections of Australian fire weather are strongly region- and model-dependent, but generally indicate increased severity in southeast Australia31,32,33,34.

Estimates of the likelihoods of increased susceptibility to fire from extreme climate and weather are essential for policy makers, contingency planners, and insurers. However, such likelihoods are difficult to quantify from observed records, which are limited to approximately the past century and thus provide few samples of extremely susceptible conditions in a given region28,35,36. There was, for example, no direct observational precedent for the high values of FFDI nor the low annual accumulated rainfall total experienced in southeast Australia in 201915. Further, assessment of likelihoods is compounded by nonstationarity in the observed record, resulting, for example, from climate change. Even if past likelihoods could be well determined from the observed record, they may not be representative of current wildfire susceptibility.

Climate models can provide large samples of plausible conditions over short time periods that can be used to reduce uncertainties in quantifying risk. Previous studies have used ensemble seasonal and weather prediction systems to estimate return periods of surge levels in the Netherlands37,38 and of significant wind and wave heights globally39,40,41,42. More recently, the approach of quantifying risks of extremes using ensemble climate simulations has been popularized under the acronym UNSEEN, standing for UNprecedented Simulated Extremes using ENsembles. The UNSEEN approach has been used to assess the risk of droughts and heat waves43,44 and to quantify likelihoods of extreme meteorological events, such as instances of unprecedented rainfall45,46 and temperature47, and sudden stratospheric warming in the Southern Hemisphere48.

In this paper, we use a decadal ensemble climate forecast model to quantify the likelihood of concurrent extreme drought and fire weather in a region of southeast Australia where the 2019–2020 wildfires burned significant area (Fig. 1). We focus on two indices averaged over this region (below, overlines denote regional averaging). Our drought index, \(\overline{{{{{{\rm{DI}}}}}}}\), is defined as the total accumulated rainfall from January to December and quantifies how preconditioned for fires the landscape may be leading into the wildfire season of a given year. We use the December-average FFDI, \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\), to quantify how severe fire-weather conditions are near the peak of the fire season of a given year. These indices were selected to capture the unprecedented nature of the drought and fire weather in southeast Australia in 2019. Simultaneous low values of \(\overline{{{{{{\rm{DI}}}}}}}\) and high values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) indicate elevated susceptibility to wildfires in southeast Australia. Therefore, hereafter, we will refer to their vector as simply “fire susceptibility”.

Total burnt forest area between and including October 2019 and February 2020. The black boxes show the forecast model grid cells where the total burnt area is greater than 10% of the cell area (greater than approximately 500,000 hectares). The region covered by these cells is the focus of this paper.

Our climate forecast dataset comprises 10-year long, daily forecasts, each with 96 ensemble members, initialized at the beginning of every May and November over the period 2005–2020. By pooling forecast ensemble members and lead times, these forecasts provide up to 1920 times more samples of \(\overline{{{{{{\rm{DI}}}}}}}\) and \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) in the current climate than are available from observed records (“Methods”). After first checking that these many samples provide accurate and independent representations of the real world (“Model fidelity”), we use them to estimate the likelihoods of exceeding extreme values of \(\overline{{{{{{\rm{DI}}}}}}}\) and \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\), including the unprecedented values experienced during the 2019–2020 wildfires (“Likelihoods of exceedance”). The very large number of forecast samples yields many years with \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) that are simultaneously more severe than the observed 2019 values. This enables us to test the correspondence between unprecedented drought and fire weather in southeast Australia and the states of ENSO, IOD and SAM (“Extreme susceptibility to fire and climate drivers”).

Results

Historical record of fire susceptibility

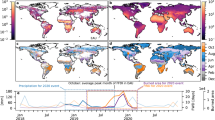

The historical record of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) is shown in Fig. 2. These data are calculated from high quality atmospheric reanalysis and gridded rainfall data (“Methods”) and are referred to hereafter as “observations”. The data points in Fig. 2 are shaded according to the year for which they are calculated, with colored shading for years in which severe wildfires occurred in summer in southeast Australia.

a Values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) for the period 1958-2020. The dashed lines show contour levels of a two-dimensional kernel-density estimate of the data points shown (levels at 1.5e–4, 3.5e–4 and 5.5e–4, enclosing approximately 87%, 62%, and 27% of the data points) and the value in the top left shows the Pearson correlation coefficient between the two indices. b Year of maximum December-averaged FFDI. c Year of minimim DI. d Year of maximum normalized distance from the mean DI and December-averaged FFDI over 1958-2020. In all panels, data points are shaded according to their year, with colored shading showing years where unplanned summer fires burned at least 250,000 hectares of the region covered by the four model cells shown in Fig. 1 (“Methods”).

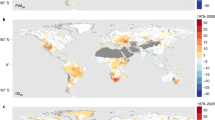

Severe fires have generally been associated with extreme values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) (Fig. 2). The values of both indices recorded in 2019 were the most extreme in the 63 years (1958–2020) of observational data available for both indices (Fig. 2a). Indeed, there was no precedent for the 2019 \(\overline{{{{{{\rm{DI}}}}}}}\) values in the full 121 years (1900–2020) of data available for this index (see Supplementary Fig. 1). A large proportion of Australia experienced unprecedented values of December-averaged FFDI in 2019 (Fig. 2b). Similarly, much of eastern and central Australia accumulated the lowest rainfall annually in 2019 relative to all other years in the joint historical record (Fig. 2c). Over much of our region of interest, the minimum \(\overline{{{{{{\rm{DI}}}}}}}\) occurred in 1980 and 1982, which were also years in which unplanned summer fires burned extensive areas of southeast Australia. We can quantify the joint extremity of DI and December-average FFDI in a given year as the normalized distance from the mean index values over 1958–2020: \(\sqrt{{{{{{\rm{FFD{I}}}}}_{{{\rm{Dec}}}}^{^{\prime} 2}}}+{{{{{{\rm{DI}}}}}}}^{^{\prime} 2}}\), where primes indicate the difference from the mean index over 1958–2020, normalized by the standard deviation of the index over the same period. Even at a local scale, this joint quantity was unprecedented in 2019 over much of southeast Australia (Fig. 2d).

The small number of samples in the observed record makes quantification of the probabilities of extreme \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) events very difficult. It is not possible, for example, to directly determine the likelihood of an event more severe than that observed in 2019 simply because no such event has ever been observed. Statistical techniques enable extrapolation of fitted distributions, but require assumptions about the shape of the distribution and still suffer from large uncertainties when the sample size is small49,50,51. Issues with sampling become increasingly restrictive as dimensionality—that is, the number of variables—increases52. With its very large sample size, our forecast model (hereafter “model”) provides many in-sample estimates of rare extreme events, allowing for the probabilities of these events to be determined directly from the empirical probability density function53.

Model fidelity

In order to provide reliable estimates of likelihoods of fire susceptibility, the model samples must be stable, independent and realistic estimates of the real world46. Stability here refers to an absence of systematic changes in the estimates of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) with model lead time. Model stability is necessary for pooling samples at different lead times. Dependence between model samples inflates the sample size without adding new information and arises at short lead times because the ensemble forecasts are initialized from similar initial conditions. We remove dependent samples by considering only model lead times for which ensemble members are uncorrelated (≥37 months: Fig. 3, see also “Methods”).

To assess the model fidelity, we compare the modeled joint and marginal distributions of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) to the observed distributions over a common time period (Fig. 4). Initial assessment of the marginal distributions revealed a systematic dry bias in the modeled \(\overline{{{{{{\rm{DI}}}}}}}\) that was corrected for by applying a simple additive adjustment to the mean \(\overline{{{{{{\rm{DI}}}}}}}\) at each lead time (“Methods”). With this correction applied, we compare the distributions using the 96-member forecasts—denoted f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\)—in Fig. 4a–c. These model distributions show little dependence on lead time (colored lines) and agree generally with the observed data. However, because these forecasts are initialized over a relatively short period of time (2005–2020), there are very limited observations to which they can be compared. Only data within the 7-year period 2014–2020 (comprising 9408 model samples) are shown in Fig. 4a–c so that the distributions at each lead time are constructed from the same number of samples, thus enabling comparison of the distributions across lead times (“Methods”).

The joint (a) and marginal (b, c) distributions of observed (white circles and bars) and modeled (gray circles and lines) \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) from the bias-corrected f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) model dataset over the period 2014-2020. Lines show probability densities from the model for each lead time (colors) and for all lead times together (black dashed). For the joint distribution, probability densities are from a two-dimensional kernel density estimate and are presented with contour levels at 0.5e–4, 2e–4, and 4e–4 (enclosing approximately 92%, 63%, and 27% of the model data across all lead times). d–f As in a–c, but using f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) data over the period 1989-2020. g Null distributions of the Kolmogorov–Smirnov statistic, K, resulting from bootstrapping the f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) (purple shading) and f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) (pink shading) datasets using all independent lead times. h As in g, but for each lead time separately. In g and h, the distributions are presented as a difference between K and the KS-statistic calculated between the observed and model data, Kobs, such that the vertical black line (at K − Kobs = 0) indicates the location of Kobs in the distributions of K. Colored numbers show the right-tail p-value for the f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) (pink) and f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) (purple) distributions.

We therefore also assess the distributions computed from another set of forecast model data, denoted f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\). These data are produced using the same decadal forecast system and initialization dataset as f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\). However, they have only 10 ensemble members and they are initialized over the longer period 1980-2020. The latter enables comparison to a much larger set of observed values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\). In Fig. 4d–f we compare the f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) distributions over the period 1989–2020 (comprising 4480 samples) with observations over the same period (“Methods”). As for the f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) data, the f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) data distributions are stable and show good agreement with observations over the matched time period.

We use a two-dimensional, two-sample Kolmogorov–Smirnov (KS) test54,55 to test the null hypothesis that the modeled and observed joint distributions (gray and white points in Fig. 4) are the same (“Methods”). Proxy time series are generated by randomly subsampling the model data for sets of equal length to the observed record. These sets are compared with the full model distribution to produce a null distribution for the KS-statistic, K. The KS-statistic calculated between the observed and modeled distributions, Kobs, is compared with the null distribution. For both f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) and f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\), the observed KS-statistic falls below the 95th percentile of the null distribution (p-value > 0.05) and hence the model is considered to provide values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) that are consistent with the observed record (Fig. 4g). The KS test also confirms consistent modeled and observed distributions when applied using model data at each lead time independently (Fig. 4h), indicating that the independent model samples are both realistic and stable.

Likelihoods of exceedance

In 2019 unprecedented values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) were observed that were respectively 19% higher and 8% lower than previous record values (since 1958). These record values coincided with one of the worst wildfire seasons in recorded history1,2,3. Our model simulations show that the likelihoods of experiencing \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) or \(\overline{{{{{{\rm{DI}}}}}}}\) values equal to or more extreme than those experienced in 2019 are 7.8% and 1.5%, respectively, in the current climate (2014-2023 comprising 13,440 samples, Fig. 5a, b). The likelihood of exceeding both simultaneously is roughly 0.5%, indicating a return period for the 2019 event of approximately 200 years (Fig. 5c). Note that for \(\overline{{{{{{\rm{DI}}}}}}}\) to be “more extreme” or to “exceed” is to have a lower value, since lower values of \(\overline{{{{{{\rm{DI}}}}}}}\) indicate drier conditions that are more conducive to wildfires.

a Likelihoods of exceeding values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\). The observed 2019 value of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and the associated likelihood of exceeding this value are shown with dashed lines. b As in a, but for \(\overline{{{{{{\rm{DI}}}}}}}\). c Likelihoods of simultaneously exceeding \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\). The observed 2019 values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) are shown with dashed black lines and the likelihood of exceeding both these values together is shown by the text in the upper right. Dashed white lines show contours of a two dimensional kernel density estimate using the forecast data (levels at 2e–05, 1.8e–04, 3.4e–04, and 5e–04, enclosing approximately 97%, 68%, 39%, and 13% of the data, respectively). d As in c but showing only values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) that are more extreme than the observed 2019 values. In all panels, likelihoods are calculated using model data over the period 2014-2023. Note that here “exceeding” a value of \(\overline{{{{{{\rm{DI}}}}}}}\) is defined as having a smaller magnitude since lower values of \(\overline{{{{{{\rm{DI}}}}}}}\) are indicative of increased susceptibility to fire.

Values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) substantially more extreme than observed records occur within the model sample (Fig. 5d). This is especially true for \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\), for which nearly 8% of all model samples are more extreme than the observed 2019 value, with some model realizations up to twice as high. Indeed, there are realizations from the model where \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) are simultaneously 60% higher and 20% lower, respectively, than in 2019, though such events are very unlikely (<0.05% chance, or >2000 year return period). What such extreme events would mean for the severity of wildfires in southeast Australia is an important question that requires further investigation.

Extreme susceptibility to fire and climate drivers

Climate and weather extremes prior to and during the fire season in southeast Australia are influenced by multiple drivers of climate variability, including the El Niño Southern Oscillation (ENSO), Indian Ocean Dipole (IOD), and Southern Annular Mode (SAM)15,27. The positive phase of ENSO (El Niño) is associated with warm and dry conditions across eastern Australia, generally leading up to and during the fire season (spring and summer)56. Similarly, positive IOD events reduce atmospheric moisture availability to the continent and are generally concomitant with drier conditions in southeast Australia56,57. Negative phases of the SAM in spring and summer are characterized by an equatorward shift of both the westerly storm track and the descending branch of the southern hemisphere Hadley Cell, resulting in dry westerly winds and warm conditions over eastern Australia58. These three climate drivers are not independent and the compounding effects of their co-occurrence can impact wildfire susceptibility15. Positive phases of ENSO and IOD tend to co-occur59, for example, as do the fire-promoting states of ENSO and SAM60,61.

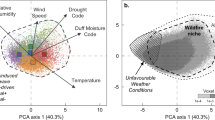

Every sample from our model provides a simulated realization of the earth system, including the ocean and atmosphere. We can quantify for every modeled sample of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) the corresponding states of ENSO, IOD and SAM over the period leading into the wildfire season using the Nino 3.4, DMI and SAMI indices (defined in “Methods” and assessed in Supplementary Figs. 2 and 3). The model provides 66 simulated Earths over the period 2014–2023 with values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) worse than the most extreme event in the observed record (2019). Of these samples, approximately 80% are associated with simultaneous positive ENSO, positive IOD and negative SAM states (Fig. 6a). Composites of sea surface temperature and 500 hPa geopotential height anomalies generated from years of unprecedented \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) exhibit the typical patterns associated with these states (Fig. 6b, c). While these results indicate a clear correspondence between extreme susceptibility to fire and the driver states, there is little evidence that the strength of the driver states is related to the joint magnitude of unprecedented \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) (as quantified by the normalized distance from the mean—see the shading of points in Fig. 6a). That is, the severity of extreme wildfire susceptibility is apparently associated mostly with the concurrency of fire-conducive Nino 3.4, DMI, and SAMI states and not with their individual magnitudes, a characteristic of compound events previously described in frameworks for understanding extreme impacts62,63,64.

a Values of average Nino 3.4 over September–December (SOND), average DMI over September–November (SON) and average SAMI over SOND from the model data (gray dots, 2014–2023) and from observations (white dots, 2014–2020). Indices are normalized by their standard deviation, σ (calculated over 2014–2023 for the model data and over 1980-2020 for the observed data). Colored dots show the subset of model data points for which both \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) are unprecedented—i.e., values more extreme than the respective observed 2019 values—where the color indicates the normalized distance from the mean modeled \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) over 2014–2023. The text in each quadrant gives the percentage of colored points that fall within each quadrant. Where no text is given, the percentage is 0%. Dashed black lines show contours of two dimensional kernel-density estimates using the model forecast data with levels at 2e–2, 5e–2, 1e–1 and 2e–1. b Composite of average sea surface temperature anomalies over September–November from forecast years in the period 2014-2023 with unprecedented values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\). Dashed boxes show the regions used to calculate the Nino 3.4 and DMI indices. c As in b but showing the composite of average 500-hPa geopotential height anomalies over September–December. Dashed lines at 40oS and 65oS show the locations of the longitudinal averages used in the calculation of SAMI.

Conditioning on the states of ENSO, IOD, and SAM has a large impact on the likelihood of simultaneously experiencing unprecedented values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) (Fig. 7). The SAM appears to be the strongest driver; when SAMI is strongly negative (< −1 standard deviation) over spring the likelihood of an unprecedented event is over 5 times higher (nearly 3%) than if the state of the SAM is not considered. Likelihoods are higher still when conditioned on more than one of the drivers being strongly in their fire-conducive phases (up to approximately 4% for strongly positive Nino 3.4 and strongly negative SAMI). In 2019, both the IOD and SAM were strongly in their fire-conducive phases (Fig. 6a). According to the model, the likelihood under such conditions of the unprecedented values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) in 2019 was approximately 3%.

The likelihoods of simultaneously exceeding 2019 \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) conditions given that one or more of Nino 3.4, DMI and − SAMI are positive (purple shading) or strongly positive (>1 standard deviation, pink shading) over the period leading into the wildfire season (September, October, November, and December for Nino 3.4 and SAMI; September, October, and November for DMI). Likelihoods and standard deviations are calculated using model data over the period 2014–2023 and error bars show 2.5–97.5% confidence bounds (“Methods”). Numbers show the number of years in the 63-year historical record (1958–2020) that satisfy each condition, where underlines indicate that 2019 is one such year.

Discussion

We have used a very large ensemble of climate model simulations to quantify the likelihood of high susceptibility to wildfire from concurrent extreme fire-weather and drought in southeast Australia. Our analysis shows that the likelihood of experiencing the unprecedented conditions that occurred leading into and during the catastrophic 2019-2020 wildfire season was approximately 0.5% in the current climate. Substantially more extreme conditions are also realized by the model, and the impact of such conditions on the severity of wildfires in southeast Australia is a potential area for future research. A very high proportion (~80%) of the model realizations with more extreme fire susceptibility than that observed during 2019 occur when ENSO, IOD and SAM are all in their fire-promoting phases—positive, positive and negative, respectively—during the austral spring and early summer. Accounting for the observed phases and strengths of these climate modes, the likelihood of the fire-weather and drought conditions experienced in 2019 was approximately 3%.

ENSO and IOD are predictable on seasonal timescales, particularly during austral winter and spring when any event has already started to establish itself and persistence plays a first order role in predictability65,66. The neutral ENSO and positive IOD conditions experienced during spring (SON) of 2019, for example, were predicted by the Australian Bureau of Meteorology in June67 and their implications on the fire season were foreshadowed in August68. SAM events are generally shorter lived and less predictable, although there is evidence that the significant stratospheric polar vortex weakening in spring 2019 and subsequent development of negative SAM was forecast as early as late July58. Thus the corresponding quantitative increases in likelihoods of extreme fire susceptibility demonstrated in this paper were potentially predictable months in advance of the peak in the 2019–2020 fire season.

The work in this paper builds on a growing area of research using climate simulations to assess and explore extreme events. There is enormous value to policy planners and decision makers in the ability to quantify the probability of impactful climate and weather events, particularly those that are unprecedented in the observed record. However, the use of climate models to quantify real-world risk is not without its difficulties. Foremost, the accuracy of estimates of probabilities is dependent entirely on the climate model’s ability to realistically represent the full range of plausible states that could be experienced in the real world. This is inherently very difficult to test because limited observed records provide very few samples of real world states and one is left trying to verify model states that have never been observed. We designed a statistical test to check for consistency between our modeled and observed indices and applied this in a way to maximize the number of observations in the test period. However, our test still suffers from small numbers of observations, particularly of extreme events. Thus, there is still some inherent reliance on the model’s ability to simulate the indices used.

Ideally, a climate model should be able to represent the real world without any correction, and indeed this can be the case for specific models simulating specific variables in specific regions45,69. But more generally, climate models have systematic biases that must be accounted for prior to their use. In this study we used relatively low resolution climate simulations because of the uniquely large number of realizations they provide of the current climate, enabling sufficient samples to empirically deduce likelihoods of rare concurrent extremes. Our model required minimal adjustment—only bias correction of the mean \(\overline{{{{{{\rm{DI}}}}}}}\)—to produce joint distributions of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) that are statistically consistent with the real world over our region of focus. However, this is not necessarily the case for other indices and/or regions. Where more sophisticated calibrations are necessary, one must be careful not to overly constrain the model to observations, since this could negate the purpose of using the model in the first place (to provide plausible, yet unobserved, realizations of the earth system). Ongoing improvements to climate models and their resolutions will likely reduce model biases, allowing analyses like ours to be applied more broadly and with more confidence.

Our focus here has been on susceptibility to wildfire in the current climate (2014—2023) because of our focus on the 2019-2020 wildfire season. It is important to note, however, that occurrences of extreme drought and fire weather are expected to increase over the coming century due to anthropogenic influences. Projections indicate that winter and spring rainfall over Australia’s eastern seaboard will decrease70 and that drought duration and frequency across southern Australia are likely to increase71. Increases in mean and extreme temperatures this century are virtually certain70 and likely to contribute to increases in the number and severity of dangerous fire weather events15,31,32,33,34 and drier, more volatile, fuel loads72. Indeed, some studies suggest that temperature may play an increasing role over precipitation in global fire occurrence over the next century18,73. Exactly how these changes will impact wildfire risk is a potential area for future research.

Methods

Burnt area data

To produce Fig. 1, burnt forest areas are taken from FireCCI v5.1 provided by the European Space Agency Climate Change Initiative74 (2001–2019) and from C3S v1.0 provided by the Copernicus Climate Change Service (2020). The data are gridded monthly burnt areas for different vegetation classes with a resolution of 0.25o. Here we consider only burnt areas associated with land cover categories 50–90, corresponding to forested areas.

The burnt area data used in Fig. 2 are calculated from New South Wales National Parks and Wildlife Service Fire History data75. These data are provided as polygons of burnt areas of wildfires and prescribed burns, sometimes including associated start and/or end dates, over the period 01/01/1920–18/02/2021. The polygon data are converted to a gridded product with 0.05o resolution in latitude and longitude. We consider only wildfire data and exclude data that have start or end dates that do not fall within 28 days of December or do not span a period encompassing December. The regional burnt areas used in Fig. 2 are calculated by summing the 0.05o resolution data over the region shown in Fig. 1.

Calculation of forest fire and drought indices

The daily McArthur Forest Fire Danger Index76,77, FFDI, is defined here as:

where T (oC) is the maximum daily temperature; H (%) is the daily average relative humidity at 1000 hPa; W (km/h) is the daily average 10m wind speed; and D is the rolling 20-day total precipitation scaled to range between 0 and 10, with larger D for lower precipitation totals.

Note that this formulation differs from standard formulations of the FFDI76,77 in a number of ways, principally in its use of daily average humidity and wind speed. These changes were necessitated by the data that were available to us across the various datasets used in this paper. FFDI estimates herein are likely to be attenuated relative to the standard formulations as a result of these differences. We calculate the FFDI from equation (1) from both the forecast model data (see “Use of forecast model data”) and the Japanese 55-year reanalysis (JRA-55)78,79 which spans 1958–2020. For the latter, the 1.25o resolution fields of the individual components in equation (1) are first interpolated linearly to the forecast model grid (the only exception to this is in Fig. 2b, d, where the FFDI is presented at the JRA-55 grid resolution). For both the forecast and JRA-55 data, the regional December-averaged FFDI, \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\), is calculated for a given year by averaging all daily December values of FFDI over the four model grid cells in Fig. 1.

The Drought Index, DI, of a given year is defined as the accumulated total precipitation (mm) between January and December (both inclusive) of that year. Thus, lower values of DI indicate drier conditions. We calculate DI using the daily forecast data and using data from the Australian Gridded Climate Dataset (AGCD), which provides interpolated in situ observations on a 0.05o × 0.05o grid over the period 1900-202080,81. AGCD v1 data are used for the period 1900–2018 and AGCD v2 data are used for the period 2019–2020. In Fig. 2c, we show DI calculated from AGCD at the native grid resolution. We also spatially average DI calculated from AGCD to the JRA-55 grid resolution in order to generate the combined index presented in Fig. 2d. Everywhere else, we focus in this paper on the regional Drought Index, \(\overline{{{{{{\rm{DI}}}}}}}\), which is defined for each dataset as the average DI over the region in Fig. 1.

The JRA-55 and AGCD data provide joint historical records of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) spanning 1958–2020 and are referred to herein as “observations”. By pooling forecast ensemble members and lead times, the forecast model provides many estimates of plausible values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) for every available forecast year.

Use of forecast model data

We use the Commonwealth Science and Industrial Research Organization (CSIRO) Climate Analysis Forecast Ensemble (CAFE) near-term climate prediction system to produce many simulations of contemporary climate. The system uses the Geophysical Fluid Dynamics Laboratory Coupled Model version 2.182, with an upgraded oceanic component (MOM5.1) and an atmospheric model resolution of 2o in latitude and 2. 5o in longitude83. Retrospective forecasts were run from initial conditions taken from the CAFE60v1 reanalysis84,85, which provides an ensemble of estimates of the states of the atmosphere, ocean, land and sea-ice over the period 1960–2020 using the same underlying model as the forecasts.

The retrospective climate forecasts provide a very large sample of possible values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) under contemporaneous anthropogenic and natural forcings that enable the likelihoods of exceeding rare events (like those in 2019) to be estimated empirically. Our methodology is similar to those introduced in previous papers using the UNSEEN approach46:

-

1.

Remove model samples at short lead times where there is dependence between forecast ensemble members due to their similar initial conditions (see “Testing of ensemble member independence”). Dependence between samples artificially inflates the sample size without adding new information.

-

2.

Test that the model provides stable (with lead time) and realistic estimates of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\). We apply a simple bias correction to the modeled \(\overline{{{{{{\rm{DI}}}}}}}\) (see “Bias correction”) and check that the joint distributions of modeled \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) are consistent with the observed record (see “Testing of model fidelity”).

-

3.

Calculate likelihoods of exceedance (see “Calculation of likelihoods of exceedance”).

Two sets of forecasts are used in this paper, denoted f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) and f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\). The f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) dataset comprises 10-year long forecasts, each with 10 ensemble members, initialised at the beginning of every May and November over the period 1980–2020. The f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) dataset is identical to f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\), except that the initialization period is shorter—2005–2020—and the number of ensemble members per forecast is much larger—96 members. In this paper, f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) is used only to bias-correct f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) and to demonstrate the efficacy of the forecast model. The large-ensemble f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) data are used for all other analyses.

When using forecast data for the purpose of providing multiple realizations of a given time period, it is important that equal numbers of samples are included for each year in the period, since this avoids over/under-sampling the conditions of a particular year. Figure 8 shows the number of samples per calendar year for the f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) (pink labels) and f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) (purple labels) datasets after removing lead times with dependent ensemble members. Fewer samples are available for calendar forecast years toward the start and end of each forecast period because these years have fewer lead times available. For both \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\), we define the lead time of a given forecast as the number of elapsed months between initialization and December of the forecast year (e.g., the 2020 forecast initialised in Nov 2020 is at 1-month lead). Note that no \(\overline{{{{{{\rm{DI}}}}}}}\) forecast is available at 1-month lead, since this index requires the accumulation of rainfall from January to December. Thus, the shortest available lead time for \(\overline{{{{{{\rm{DI}}}}}}}\) is 13 months.

a The initialization and forecast periods for the f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) forecasts and b the resulting number of samples per year after removing lead times with dependent ensemble members (see “Testing of ensemble member independence”). In a, each purple line represents a single ensemble member and the forecast in the top right shows all 96 ensemble members in gray. The dashed lines show, for specific years, why different forecast years have different numbers of samples. In b, purple (pink) labels apply to the f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) (f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\)) dataset and the numbers in or above each bar show the lead times that are available for each year (defined as the number of elapsed months between initialization and December of the forecast year). Where a range is specified, lead times are available in increments of 6 months.

The f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) dataset provides 140 simulations (14 × 10: lead times × ensemble members) for every year over the period 1989–2023. Likewise, the f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) dataset provides 1344 simulations (14 × 96) for every year in 2014–2023. Thus, when testing the fidelity of the f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) and f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) forecasts in Fig. 4g we use the periods 1989–2020 and 2014–2020, respectively, since these periods provide maximum and equal numbers of realizations per year and overlap with the observed record. To calculate the likelihoods of the 2019 \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) conditions using the f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) data, we consider all lead times together and use the 10-year period centered on 2019 (2014–2023) as representative of current climate conditions.

Testing of ensemble member independence

Each multi-member forecast is initialised from a set of initial conditions that seek to estimate the state of the climate at the time of initialization and the uncertainty about that state. As such, ensemble members of a given forecast at short lead times are strongly dependent on each other. Inclusion of dependent ensemble members in our analysis results in artificial inflation of the sample size, without adding new information39,40,41,46. To determine the lead time at which the ensemble members can be considered independent, we apply a simple statistical test that the correlation between ensemble members at a given lead time is zero.

At each lead time, the f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) model dataset provides 96 (members), 16-year timeseries of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) (spanning, e.g., 2005–2020 at 1 month lead, or 2014–2029 at 115 months lead, see Fig. 8). We define our test statistic, ρt, for each lead time and variable as the mean Spearman correlation86 in time between all combinations of the 96 ensemble members (of which there are 4560: member 1 with 2, member 1 with 3 etc). Significance of ρt is estimated using a permutation test, whereby 10,000 sets of 96 × 16 points are randomly drawn from the complete \({\mathrm f}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) model dataset to produce 10,000 estimates of the mean Spearman correlation for each variable in the same manner as above. Because these estimates are constructed from randomly drawn data, they represent the distribution of mean correlation values for uncorrelated data (i.e., the null distribution). Ensemble members of each variable are considered to be dependent (i.e., the null hypothesis of independence is rejected) at a given lead time if ρt falls outside of confidence intervals calculated from the randomly sampled distribution using a 5% significance level (Fig. 3). The test is very similar to that described in previous studies46, however here we test only the mean correlation over combinations of ensemble members rather than all box-and-whisker statistics. Our approach reduces the number of simultaneous tests and the associated issues with multiple testing87.

Note that nonzero correlation between ensemble members at a given lead time can also come about because they share the same time-varying forcing. However, correlation from shared forcing is not a valid reason to reject ensemble members. Applying the same test as described above, but removing the ensemble mean temporal trend from each ensemble member prior to calculating ρt produces negligible changes to Fig. 3.

Bias correction

All climate models have systematic biases relative to the real world. There is a very large range of existing methods, of varying levels of complexity, for correcting for climate model biases88,89,90. Generally, these methods involve building a transfer function between the distributions of observed and modeled variables over a particular period of time. All such methods include potentially ad hoc assumptions regarding, for example, the shape and stationarity of the observed/modeled distributions. In the present analysis, we seek to use our forecast model to learn about events that are unprecedented in the historical record and therefore have no observations to constrain their correction. Our approach to model correction is to find the simplest justifiable method that produces model distributions that are statistically consistent with the limited historical record. In doing so, we minimize the extent to which the forecast model data are manipulated, and thus rely as much as possible on the ability of the model to simulate the range of contemporaneous climate conditions.

It is necessary to bias-correct the forecast \(\overline{{{{{{\rm{DI}}}}}}}\) to ensure that the simulated joint distribution of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) is consistent with the real world. For each forecast lead time, we estimate the mean \(\overline{{{{{{\rm{DI}}}}}}}\) bias as the difference between the mean f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) and observed \(\overline{{{{{{\rm{DI}}}}}}}\) over the period 1990–2020. These biases (which range between −141 mm and −68 mm, depending on the lead time) are subtracted from both the f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) and f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) forecasts to produce unbiased estimates of \(\overline{{{{{{\rm{DI}}}}}}}\). No bias correction is necessary for \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\). Note that the f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) model dataset is used here so that the biases can be estimated using a relatively long time period (31 years). The f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) model dataset is initialised over a shorter period (2005–2020), so provides, for example, only seven years of data at 115 months lead (2014–2020) that could be compared with observations to estimate biases (see Fig. 8).

Testing of model fidelity

We test the ability of our forecast model to simulate the real world by comparing the forecast and observed distributions of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) over a common period of time. Previous studies assessing likelihoods of extremes using forecast ensembles have tested that the observed mean, standard deviation, skewness and kurtosis of the variable in question falls within 95% confidence intervals from bootstrapped distributions of each statistic computed from the forecast model44,45,46,47,48,69. We apply a different test for two reasons. First, our focus in this paper is on compound events and thus we seek to assess the fidelity of our model in simulating the joint distributions of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\). Second, because the approach of previous studies simultaneously tests multiple statistics, each with their own statistical significance, it suffers from issues with multiple testing87. Indeed, Monte–Carlo simulations applying the above test to samples and bootstrapped distributions drawn from the same Gaussian population show that the rejection rate is approximately 18% (not 5%), with little dependence on sample size. For two variables, the rejection rate is higher still.

For these reasons, we instead apply a two-dimensional Kolmogorov–Smirnov (KS) test54,55 to compare the joint distributions of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\). The f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) model dataset provides 96 (member) forecasts for the 7-year period 2014–2020 at all independent lead times (see Fig. 8). We calculate the two-dimensional KS statistic between the observed and forecast distributions, Kobs, using all data in this period. To derive a p-value for this statistic, we bootstrap 10,000 7-year pseudo-timeseries of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) from all forecasts that fall within the same period. For each bootstrapped sample, we calculate the two-dimensional KS statistic, K, relative to the full set of forecasts within the period, thus providing the null distribution for our KS test. If Kobs falls below the 95th percentile of the null distribution—i.e., the right-tail p-value is greater than 0.05—we cannot reject the null hypothesis that the joint distributions are the same. In this case, we consider that our forecast model provides a good representation of plausible values of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\).

The results of the two-dimensional KS test are shown for the bias-corrected f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) model data in Fig. 4 (pink shading). We run the test for all lead times together (Fig. 4g) and for each lead month separately (Fig. 4h). In the latter case, the period of time over which the test is applied is adjusted to maximize the number of observed points in the comparison. For example, f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) forecasts at 37-months lead span 2008–2020 (see Fig. 8) and thus the test at 37 months lead is applied over this period. We also apply the same KS test to the bias-corrected f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) data, which span a longer period of time and hence allow for comparison to a larger sample of observations (Fig. 4g and h, purple shading). For the f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) data, all KS tests are applied over the period 1989–2020.

Calculation of likelihoods of exceedance

Likelihoods of exceeding a given event are calculated from the empirical probability distribution as the proportion of total f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) forecast samples that are more extreme than the event in question. For example, Fig. 5a, b, respectively, show probabilities P(\({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) > \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\),i) and P(\(\overline{{{{{{\rm{DI}}}}}}}\) < \(\overline{{{{{{\rm{DI}}}}}}}\)i) for every sample i of the 13,440 samples in the f\({}_{96\ {{{{{\rm{mem}}}}}}}^{2005\to }\) dataset over 2014-2023, and Fig. 5c similarly show P(\({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) > \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\),i ∧ \(\overline{{{{{{\rm{DI}}}}}}}\) < \(\overline{{{{{{\rm{DI}}}}}}}\)i). In the calculation of likelihoods of exceedance, we limit ourselves to the 10 year period, 2014-2023, since all independent lead times are available from the model for these years (Fig. 8).

Likelihood confidence bounds in Figs. 5 and 7 are constructed by repeatedly bootstrapping the set of \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) values used to calculate the likelihood in question and recomputing the likelihood for each boostrapped sample to produce 10,000 resampled estimates of the likelihoods of exceedance. These resampled likelihoods are used to calculate the 2.5–97.5% percentile ranges shown in the figures. In Fig. 5c, d, the likelihoods of exceedance are interpolated onto a regular grid using the ‘griddata’ routine in the Python Scipy library.

Calculation of climate driver indices

We employ three simple indices for climate modes that impact Australia. To assess the strength and phase of the El Niño Southern Oscillation (ENSO), we use the Nino 3.4 index91, which is the average sea-surface temperature (SST) anomaly over the region 5oN–5oS, 120o–170oW. The Indian Ocean Dipole is quantified using the Dipole Mode Index, DMI92, which is the difference between the average SST anomalies over western (10oN–10oS, 50o–70oE) and south-eastern (0o–10oS, 90o–110oE) tropical Indian Ocean regions. We represent the strength of the Southern Annual Mode (SAM, also called the Antarctic Oscillation) using a Southern Annular Mode Index, SAMI, defined as the difference between the normalized monthly zonal mean sea level pressure at 40oS and 65oS93.

The climate mode indices are computed from the forecast model data and from reanalysis data: Hadley Centre Global Sea Ice and Sea Surface Temperature (HadISST1)94 for Nino 3.4 and DMI; and JRA-55 for SAMI. Anomalies are computed relative to the climatological average over the period 1990–2020. In cases where forecast model data are used, a separate climatological average is constructed using the f\({}_{10\ {{{{{\rm{mem}}}}}}}^{1980\to }\) dataset and removed for each forecast lead time. This removes from the forecasts the mean model biases over the reference period at each lead time. We focus on the average Nino 3.4 and SAMI over September, October, November and December (SOND), and on the average DMI over September, October and November (SON), corresponding to when each index has its strongest influence on precipitation and FFDI in southeast Australia15. The fidelity of the model climate driver indices relative to observations and their relationships to \({\overline{{{{{{\rm{FFDI}}}}}}}}_{{{{{{\rm{Dec}}}}}}}\) and \(\overline{{{{{{\rm{DI}}}}}}}\) are assessed in Supplementary Figs. 2 and 3.

Data availability

FireCCI v5.1 and C3S v1.0 fire burned area data are openly available from the Copernicus Climate Data Store at https://doi.org/10.24381/cds.f333cf85. New South Wales National Parks and Wildlife Service Fire History data are available for download at https://data.nsw.gov.au/data/dataset/fire-history-wildfires-and-prescribed-burns-1e8b6. JRA-55 data are available from the University Corporation for Atmospheric Research Research Data Archive at https://doi.org/10.5065/D6HH6H41. HadISST1 data are available from the Met Office Hadley Centre at https://hadleyserver.metoffice.gov.uk/hadisst/data/download.html. AGCD v1 data are accessible from Australia’s National Computational Infrastructure Data Catalogue at https://doi.org/10.25914/6009600b58196. AGCD v2 data are not publicly available, with access details provided by the Bureau of Meteorology at http://www.bom.gov.au/climate/austmaps/metadata-monthly-rainfall.shtml. The Natural Earth data used to generate the shading in the inset of Fig. 1 are in the public domain and are available at https://www.naturalearthdata.com/. The forecast datasets are not yet available publicly but are available from the corresponding author upon request, bearing in mind that these datasets comprise hundreds of terabytes of data. Postprocessed versions of all the variables and indices presented in this paper are available from the corresponding author upon request.

Code availability

All code used to perform the analysis and generate the figures in this paper is openly available at https://doi.org/10.5281/zenodo.5566244.

References

The Royal Commission into National Natural Disaster Arrangements: Report. Technical Report (The Royal Commission into National Natural Disaster Arrangements, 2020). https://naturaldisaster.royalcommission.gov.au/publications/royal-commission-national-natural-disaster-arrangements-report.

Boer, M. M., Resco de Dios, V. & Bradstock, R. A. Unprecedented burn area of Australian mega forest fires. Nat. Clim. Change 10, 171–172 (2020).

Hughes, L. et al. Summer of Crisis. Technical Report (The Climate Council of Australia, 2020). https://www.climatecouncil.org.au/resources/summer-of-crisis/.

Davey, S. M. & Sarre, A. Editorial: the 2019/20 Black Summer bushfires. Aust. For. 83, 47–51 (2020).

Borchers Arriagada, N. et al. Unprecedented smoke-related health burden associated with the 2019–20 bushfires in eastern Australia. Med. J. Aust. 213, 282–283 (2020).

Vardoulakis, S., Jalaludin, B. B., Morgan, G. G., Hanigan, I. C. & Johnston, F. H. Bushfire smoke: urgent need for a national health protection strategy. Med. J. Aust. 212, 349–353.e1 (2020).

Pickrell, J. As fires rage across Australia, fears grow for rare species. Science. https://www.sciencemag.org/news/2019/12/fires-rage-across-australia-fears-grow-rare-species. (2019).

Ward, M. et al. Impact of 2019–2020 mega-fires on Australian fauna habitat. Nat. Ecol. Evol. 4, 1321–1326 (2020).

Read, P. & Denniss, R. With costs approaching $100 billion, the fires are Australia’s costliest natural disaster (2020). http://theconversation.com/with-costs-approaching-100-billion-the-fires-are-australias-costliest-natural-disaster-129433.

Quiggin, J. Australia is promising $2 billion for the fires. I estimate recovery will cost $100 billion https://www.cnn.com/2020/01/10/perspectives/australia-fires-cost/index.html (2020).

Nolan, R. H. et al. Causes and consequences of eastern Australia’s 2019–20 season of mega-fires. Glob. Chang. Biol. 26, 1039–1041 (2020).

Adams, M. A., Shadmanroodposhti, M. & Neumann, M. Causes and consequences of Eastern Australia’s 2019–20 season of mega-fires: a broader perspective. Glob. Chang. Biol. 26, 3756–3758 (2020).

Bradstock, R. A. et al. A broader perspective on the causes and consequences of eastern Australia’s 2019–20 season of mega-fires: a response to Adams et al. Glob. Chang. Biol. 26, e8–e9 (2020).

King, A. D., Pitman, A. J., Henley, B. J., Ukkola, A. M. & Brown, J. R. The role of climate variability in Australian drought. Nat. Clim. Change 10, 177–179 (2020).

Abram, N. J. et al. Connections of climate change and variability to large and extreme forest fires in southeast Australia. Commun. Earth Environ. 2, 1–17 (2021).

Wang, G. & Cai, W. Two-year consecutive concurrences of positive Indian Ocean Dipole and Central Pacific El Niño preconditioned the 2019/2020 Australian “black summer” bushfires. Geosci. Lett. 7, 19 (2020).

Doi, T., Behera, S. K. & Yamagata, T. Predictability of the Super IOD Event in 2019 and Its Link With El Niño Modoki. Geophys. Res. Lett. 47, e2019GL086713 (2020).

Sanderson, B. M. & Fisher, R. A. A fiery wake-up call for climate science. Nat. Clim. Change 10, 175–177 (2020).

Special Climate Statement 72—dangerous bushfire weather in spring 2019. Technical Report (Australian Bureau of Meteorology, 2020). http://www.bom.gov.au/climate/current/statements/scs72.pdf.

Deb, P. et al. Causes of the widespread 2019–2020 Australian bushfire season. Earths Future 8, e2020EF001671 (2020).

No authors listed. Biodiversity in flames. Nat. Ecol. Evol. 4, 171–171 (2020).

Lim, E.-P. et al. Australian hot and dry extremes induced by weakenings of the stratospheric polar vortex. Nat. Geosci. 12, 896–901 (2019).

Grothe, P. R. et al. Enhanced El Niño–southern oscillation variability in recent decades. Geophys. Res. Lett. 47, e2019GL083906 (2020).

Abram, N. J. et al. Palaeoclimate perspectives on the Indian Ocean dipole. Quat. Sci. Rev. 237, 106302 (2020).

Cai, W. et al. Increasing frequency of extreme El Niño events due to greenhouse warming. Nat. Clim. Change 4, 111–116 (2014).

Cai, W. et al. Increased frequency of extreme Indian Ocean dipole events due to greenhouse warming. Nature 510, 254–258 (2014).

Harris, S. & Lucas, C. Understanding the variability of Australian fire weather between 1973 and 2017. PLoS ONE 14, e0222328 (2019).

Dowdy, A. J. Climatological variability of fire weather in Australia. J. Appl. Meteorol. Climatol. 57, 221–234 (2018).

Clarke, H., Lucas, C. & Smith, P. Changes in Australian fire weather between 1973 and 2010. Int. J. Climatol. 33, 931–944 (2013).

Sharples, J. J. et al. Natural hazards in Australia: extreme bushfire. Clim. Change 139, 85–99 (2016).

Pitman, A. J., Narisma, G. T. & McAneney, J. The impact of climate change on the risk of forest and grassland fires in Australia. Clim. Change 84, 383–401 (2007).

Clarke, H. et al. An investigation of future fuel load and fire weather in Australia. Clim. Change 139, 591–605 (2016).

Clarke, H. & Evans, J. P. Exploring the future change space for fire weather in southeast Australia. Theor. Appl. Climatol. 136, 513–527 (2019).

Touma, D., Stevenson, S., Lehner, F. & Coats, S. Human-driven greenhouse gas and aerosol emissions cause distinct regional impacts on extreme fire weather. Nat. Commun. 12, 212 (2021).

Bradstock, R. A. et al. Prediction of the probability of large fires in the Sydney region of south-eastern Australia using fire weather. Int. J. Wildland Fire 18, 932–943 (2010).

Louis, S. A. Gridded return values of McArthur Forest Fire Danger Index across New South Wales. Aust. Meteorol. Oceanogr. J. 64, 243–260 (2014).

Brink, H. W. V. D., Können, G. P., Opsteegh, J. D., Oldenborgh, G. J. V. & Burgers, G. Improving 104-year surge level estimates using data of the ECMWF seasonal prediction system. Geophys. Res. Lett. 31, L17210 (2004).

Brink, H. W. V. D., Können, G. P., Opsteegh, J. D., Oldenborgh, G. J. V. & Burgers, G. Estimating return periods of extreme events from ECMWF seasonal forecast ensembles. Int. J. Climatol. 25, 1345–1354 (2005).

Breivik, Ø., Aarnes, O. J., Bidlot, J.-R., Carrasco, A. & Saetra, Ø. Wave extremes in the Northeast Atlantic from ensemble forecasts. J. Clim. 26, 7525–7540 (2013).

Breivik, Ø., Aarnes, O. J., Abdalla, S., Bidlot, J.-R. & Janssen, P. A. E. M. Wind and wave extremes over the world oceans from very large ensembles. Geophys. Res. Lett. 41, 5122–5131 (2014).

Meucci, A., Young, I. R. & Breivik, Ø. Wind and wave extremes from atmosphere and wave model ensembles. J. Clim. 31, 8819–8842 (2018).

Walz, M. A. & Leckebusch, G. C. Loss potentials based on an ensemble forecast: How likely are winter windstorm losses similar to 1990? Atmos. Sci. Lett. 20, e891 (2019).

Kent, C. et al. Using climate model simulations to assess the current climate risk to maize production. Environ. Res. Lett. 12, 054012 (2017).

Kent, C. et al. Maize drought hazard in the Northeast Farming Region of China: unprecedented events in the current climate. J. Appl. Meteorol. Climatol. 58, 2247–2258 (2019).

Thompson, V. et al. High risk of unprecedented UK rainfall in the current climate. Nat. Commun. 8, 107 (2017).

Kelder, T. et al. Using UNSEEN trends to detect decadal changes in 100-year precipitation extremes. npj Clim. Atmos. Sci. 3, 1–13 (2020).

Kay, G. et al. Current likelihood and dynamics of hot summers in the UK. Environ. Res. Lett. 15, 094099 (2020).

Wang, L. et al. What chance of a sudden stratospheric warming in the southern hemisphere? Environ. Res. Lett. 15, 104038 (2020).

Tancredi, A., Anderson, C. & O’Hagan, A. Accounting for threshold uncertainty in extreme value estimation. Extremes 9, 87 (2006).

Scarrott, C. & MacDonald, A. A review of extreme value threshold estimation and uncertainty quantification. REVSTAT Stat. J. 10, 33–60 (2012).

Chowdhary, H. & Singh, V. P. Reducing uncertainty in estimates of frequency distribution parameters using composite likelihood approach and copula-based bivariate distributions. Water Resour. Res. 46, W11516 (2010).

Altman, N. & Krzywinski, M. The curse(s) of dimensionality. Nat. Meth. 15, 399–400 (2018).

Folland, C. & Anderson, C. Estimating changing extremes using empirical ranking methods. J. Clim. 15, 2954–2960 (2002).

Fasano, G. & Franceschini, A. A multidimensional version of the Kolmogorov–Smirnov test. Mon. Not. R. Astron. Soc. 225, 155–170 (1987).

Press, W. H. & Teukolsky, S. A. Kolmogorov-Smirnov test for two-dimensional data. Comput. Phys. 2, 74–77 (1988).

Risbey, J. S., Pook, M. J., McIntosh, P. C., Wheeler, M. C. & Hendon, H. H. On the remote drivers of rainfall variability in Australia. Mon. Wea. Rev. 137, 3233–3253 (2009).

Ummenhofer, C. C. et al. What causes southeast Australia’s worst droughts? Geophys. Res. Lett. 36, L04706 (2009).

Lim, E.-P. et al. The 2019 Southern Hemisphere stratospheric polar vortex weakening and its impacts. Bull. Am. Meteorol. Soc. 1, 1–50 (2021).

Cai, W. et al. Pantropical climate interactions. Science 363, eaav4236 (2019).

Abram, N. J. et al. Evolution of the Southern Annular Mode during the past millennium. Nat. Clim. Change 4, 564–569 (2014).

Dätwyler, C., Grosjean, M., Steiger, N. J. & Neukom, R. Teleconnections and relationship between the El Niño–Southern Oscillation (ENSO) and the Southern Annular Mode (SAM) in reconstructions and models over the past millennium. Clim. Past 16, 743–756 (2020).

Leonard, M. et al. A compound event framework for understanding extreme impacts. WIREs Clim. Change 5, 113–128 (2014).

Sadegh, M. et al. Multihazard scenarios for analysis of compound extreme events. Geophys. Res. Lett. 45, 5470–5480 (2018).

Zscheischler, J. et al. A typology of compound weather and climate events. Nat. Rev. Earth Environ. 1, 333–347 (2020).

Barnston, A. G., Tippett, M. K., L’Heureux, M. L., Li, S. & DeWitt, D. G. Skill of real-time seasonal ENSO model predictions during 2002–11: is our capability increasing? Bull. Am. Meteorol. Soc. 93, 631–651 (2012).

Liu, H., Tang, Y., Chen, D. & Lian, T. Predictability of the Indian Ocean Dipole in the coupled models. Clim. Dyn. 48, 2005–2024 (2017).

Climate Driver Update Archive. http://www.bom.gov.au/climate/enso/wrap-up/archive/20190625.archive.shtml (2019).

Australian seasonal bushfire outlook: August 2019. Technical Report (Bushfire & natural hazards CRC, 2019). https://www.bnhcrc.com.au/hazardnotes/63.

Thompson, V. et al. Risk and dynamics of unprecedented hot months in South East China. Clim. Dyn. 52, 2585–2596 (2019).

Grose, M. R. et al. Insights from CMIP6 for Australia’s future climate. Earths Future 8, e2019EF001469 (2020).

Ukkola, A. M., Kauwe, M. G. D., Roderick, M. L., Abramowitz, G. & Pitman, A. J. Robust future changes in meteorological drought in CMIP6 projections despite uncertainty in precipitation. Geophys. Res. Lett. 47, e2020GL087820 (2020).

Williams, A. P. et al. Observed impacts of anthropogenic climate change on wildfire in California. Earths Future 7, 892–910 (2019).

Pechony, O. & Shindell, D. T. Driving forces of global wildfires over the past millennium and the forthcoming century. Proc. Natl Acad. Sci. USA 107, 19167–19170 (2010).

Lizundia-Loiola, J., Otón, G., Ramo, R. & Chuvieco, E. A spatio-temporal active-fire clustering approach for global burned area mapping at 250 m from MODIS data. Remote Sens. Environ. 236, 111493 (2020).

Data.NSW. NPWS Fire History - Wildfires and Prescribed Burns. https://data.nsw.gov.au/data/dataset/fire-history-wildfires-and-prescribed-burns-1e8b6 (2021).

Luke, R. H. & McArthur, A. G. Bush fires in Australia. (Australian Government Publishing Service, Canberra, Australia, 1978).

Noble, I. R., Gill, A. M. & Bary, G. A. V. McArthur’s fire-danger meters expressed as equations. Aust. J. Ecol. 5, 201–203 (1980).

Harada, Y. et al. The JRA-55 reanalysis: representation of atmospheric circulation and climate variability. J. Meteorol. Soc. Japan Ser. II 94, 269–302 (2016).

Kobayashi, S. et al. The JRA-55 reanalysis: general specifications and basic characteristics. J. Meteorol. Soc. Japan Ser. II 93, 5–48 (2015).

Jones, D. A., Wang, W. & Fawcett, R. High-quality spatial climate data-sets for Australia. Aust. Meteorol. Oceanogr. J. 58, 233 (2009).

Raupach, M. R. et al. Australian water availability project (AWAP): CSIRO marine and atmospheric research component: final report for phase 3. Technical Report, Centre for Australian weather and climate research (Bureau of Meteorology and CSIRO, 2009) https://www.droughtmanagement.info/literature/CAWCR_AWAP_Final_report_phase_3_2009.pdf.

Delworth, T. L. et al. GFDL’s CM2 global coupled climate models. Part I: formulation and simulation characteristics. J. Clim. 19, 643–674 (2006).

O’Kane, T. J. et al. Coupled data assimilation and ensemble initialization with application to multiyear ENSO prediction. J. Clim. 32, 997–1024 (2018).

O’Kane, T. J. et al. CAFE60v1: a 60-year large ensemble climate reanalysis. Part I: system design, model configuration and data assimilation. J. Clim. 1, 1–48 (2021).

O’Kane, T. J. et al. CAFE60v1: a 60-year large ensemble climate reanalysis. Part II: evaluation. J. Clim. 1, 1–62 (2021).

Spearman, C. The Proof and Measurement of Association Between Two Things. Studies in individual differences: The search for intelligence (Appleton-Century-Crofts, East Norwalk, 1961).

Wilks, D. S. Statistical methods in the atmospheric sciences, vol. 100 (Academic press, 2011).

Teutschbein, C. & Seibert, J. Bias correction of regional climate model simulations for hydrological climate-change impact studies: Review and evaluation of different methods. J. Hydrol. 456-457, 12–29 (2012).

Maraun, D. Bias correcting climate change simulations - a critical review. Curr. Clim. Change Rep. 2, 211–220 (2016).

Risbey, J. S. et al. Standard assessments of climate forecast skill can be misleading. Nat. Commun. 12, 4346 (2021).

Trenberth, K. E. The definition of El Niño. Bull. Am. Meteorol. Soc. 78, 2771–2778 (1997).

Saji, N. H., Goswami, B. N., Vinayachandran, P. N. & Yamagata, T. A dipole mode in the tropical Indian Ocean. Nature 401, 360–363 (1999).

Gong, D. & Wang, S. Definition of Antarctic Oscillation index. Geophys. Res. Lett. 26, 459–462 (1999).

Rayner, N. A. et al. Global analyses of sea surface temperature, sea ice, and night marine air temperature since the late nineteenth century. J. Geophys. Res. Atmos 108, 4407 (2003).

Acknowledgements

This work was supported by the CSIRO Decadal Climate Forecasting Project and the Australasian Leadership Computing Grants scheme, with computational resources provided by NCI Australia and the Pawsey Supercomputing Centre, both funded by the Australian Government. The authors would like to acknowledge the Pangeo community for developing many of the open-source tools and workflows used in this paper.

Author information

Authors and Affiliations

Contributions

D.T.S. and V.K. ran the ensemble forecasts. D.T.S. devised and performed the analysis and wrote the draft paper. All authors contributed to the development of the method, the interpretation of results and reviewed the paper.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Squire, D.T., Richardson, D., Risbey, J.S. et al. Likelihood of unprecedented drought and fire weather during Australia’s 2019 megafires. npj Clim Atmos Sci 4, 64 (2021). https://doi.org/10.1038/s41612-021-00220-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41612-021-00220-8

This article is cited by

-

Climate change unevenly affects the dependence of multiple climate-related hazards in China

npj Climate and Atmospheric Science (2024)

-

Identification and characterization of global compound heat wave: comparison from four datasets of ERA5, Berkeley Earth, CHIRTS and CPC

Climate Dynamics (2024)

-

Research progresses and prospects of multi-sphere compound extremes from the Earth System perspective

Science China Earth Sciences (2024)

-

Variation in fire danger in the Beijing-Tianjin-Hebei region over the past 30 years and its linkage with atmospheric circulation

Climatic Change (2024)

-

Connecting dryland fine-fuel assessments to wildfire exposure and natural resource values at risk

Fire Ecology (2023)