Abstract

Rate distortion theory is concerned with optimally encoding signals from a given signal class \(\mathcal {S}\) using a budget of R bits, as \(R \rightarrow \infty \). We say that \(\mathcal {S}\) can be compressed at rate s if we can achieve an error of at most \(\mathcal {O}(R^{-s})\) for encoding the given signal class; the supremal compression rate is denoted by \(s^*(\mathcal {S})\). Given a fixed coding scheme, there usually are some elements of \(\mathcal {S}\) that are compressed at a higher rate than \(s^*(\mathcal {S})\) by the given coding scheme; in this paper, we study the size of this set of signals. We show that for certain “nice” signal classes \(\mathcal {S}\), a phase transition occurs: We construct a probability measure \(\mathbb {P}\) on \(\mathcal {S}\) such that for every coding scheme \(\mathcal {C}\) and any \(s > s^*(\mathcal {S})\), the set of signals encoded with error \(\mathcal {O}(R^{-s})\) by \(\mathcal {C}\) forms a \(\mathbb {P}\)-null-set. In particular, our results apply to all unit balls in Besov and Sobolev spaces that embed compactly into \(L^2 (\varOmega )\) for a bounded Lipschitz domain \(\varOmega \). As an application, we show that several existing sharpness results concerning function approximation using deep neural networks are in fact generically sharp. In addition, we provide quantitative and non-asymptotic bounds on the probability that a random \(f\in \mathcal {S}\) can be encoded to within accuracy \(\varepsilon \) using R bits. This result is subsequently applied to the problem of approximately representing \(f\in \mathcal {S}\) to within accuracy \(\varepsilon \) by a (quantized) neural network with at most W nonzero weights. We show that for any \(s > s^*(\mathcal {S})\) there are constants c, C such that, no matter what kind of “learning” procedure is used to produce such a network, the probability of success is bounded from above by \(\min \big \{1, 2^{C\cdot W \lceil \log _2 (1+W) \rceil ^2 - c\cdot \varepsilon ^{-1/s}} \big \}\).

Similar content being viewed by others

1 Introduction

Let \(\mathcal {S}\) be a signal class, that is, a relatively compact subset of a Banach space \((\mathbf {X}, \Vert \cdot \Vert _{\mathbf {X}})\). Rate distortion theory is concerned with the question of how well the elements of \(\mathcal {S}\) can be encoded using a prescribed number R of bits. In many cases of interest, the best achievable coding error scales like \(R^{-s^*}\), where \(s^*\) is the optimal compression rate of the signal class \(\mathcal {S}\). We show that a phase transition occurs: the set of elements \(\mathbf {x}\in \mathcal {S}\) that can be encoded using a strictly larger exponent than \(s^*\) is thin; precisely, it is a null-set with respect to a suitable probability measure \(\mathbb {P}\). Crucially, the measure \(\mathbb {P}\) is independent of the chosen coding scheme.

In order to rigorously formulate these results, we first review the needed notions of rate-distortion theory, see also [3, 4, 13, 15]. For later use, we state the definitions here in the setting of general Banach spaces, although our main results only focus on the Hilbert space \(L^2(\varOmega )\).

1.1 A Crash Course in Rate Distortion Theory

To formalize the notion of encoding a signal class \(\mathcal {S}\subset \mathbf {X}\), we define the set  of encoding/decoding pairs (E, D) of code-length \(R \in \mathbb {N}\) as

of encoding/decoding pairs (E, D) of code-length \(R \in \mathbb {N}\) as

We are interested in choosing  such as to minimize the (maximal) distortion .

such as to minimize the (maximal) distortion .

The intuition behind these definitions is that the encoder E converts any signal \(\mathbf {x}\in \mathcal {S}\) into a bitstream of code-length R (i.e., consisting of R bits), while the decoder D produces from a given bitstream \({b \in \{ 0, 1 \}^R}\) a signal \(D(b) \in \mathbf {X}\). The goal of rate distortion theory is to determine the minimal distortion that can be achieved by any encoder/decoder pair of code-length \(R \in \mathbb {N}\). Typical results concerning the relation between code-length and distortion are formulated in an asymptotic sense: One assumes that for every code-length \(R \in \mathbb {N}\), one is given an encoding/decoding pair  and then, studies the asymptotic behavior of the corresponding distortion

and then, studies the asymptotic behavior of the corresponding distortion  as \(R \rightarrow \infty \).

as \(R \rightarrow \infty \).

We refer to a sequence \(\big ( (E_R, D_R) \big )_{R \in \mathbb {N}}\) of encoding/decoding pairs as a codec, so that the set of all codecs is

For a given signal class \(\mathcal {S}\) in a Banach space \(\mathbf {X}\), it is of great interest to find an asymptotically optimal codec; that is, a sequence  such that the asymptotic decay of

such that the asymptotic decay of  is, in a sense, maximal. To formalize this, for each \(s \in [0,\infty )\) define the class of subsets of \(\mathbf {X}\) that admit compression rate s as

is, in a sense, maximal. To formalize this, for each \(s \in [0,\infty )\) define the class of subsets of \(\mathbf {X}\) that admit compression rate s as

For a given (bounded) signal class \(\mathcal {S}\subset \mathbf {X}\), we aim to determine the optimal compression rate for \(\mathcal {S}\) in \(\mathbf {X}\), that is

Although the calculation of the quantity  may appear daunting for a given signal class \(\mathcal {S}\), there exists in fact a large body of literature addressing this topic. A landmark result in this area states that the JPEG2000 compression standard represents an optimal codec for the compression of piecewise smooth signals [26]. This optimality is typically stated more generally for the signal class \(\mathcal {S}= {{\,\mathrm{Ball}\,}}\big ( 0, 1; B_{p,q}^\alpha (\varOmega ) \big )\), the unit ball in the Besov space \(B_{p,q}^\alpha (\varOmega )\), considered as a subset of \(\mathbf {X}= \mathcal {H}= L^2(\varOmega )\), for “sufficiently nice” bounded domains \(\varOmega \subset \mathbb {R}^d\); see [10].

may appear daunting for a given signal class \(\mathcal {S}\), there exists in fact a large body of literature addressing this topic. A landmark result in this area states that the JPEG2000 compression standard represents an optimal codec for the compression of piecewise smooth signals [26]. This optimality is typically stated more generally for the signal class \(\mathcal {S}= {{\,\mathrm{Ball}\,}}\big ( 0, 1; B_{p,q}^\alpha (\varOmega ) \big )\), the unit ball in the Besov space \(B_{p,q}^\alpha (\varOmega )\), considered as a subset of \(\mathbf {X}= \mathcal {H}= L^2(\varOmega )\), for “sufficiently nice” bounded domains \(\varOmega \subset \mathbb {R}^d\); see [10].

For a codec  , instead of considering the maximal distortion of \(\mathcal {C}\) over the entire signal class \(\mathcal {S}\), one can also measure the approximation rate that the codec \(\mathcal {C}\) achieves for each individual \(\mathbf {x}\in \mathcal {S}\). Precisely, the class of elements with compression rate s under \(\mathcal {C}\) is

, instead of considering the maximal distortion of \(\mathcal {C}\) over the entire signal class \(\mathcal {S}\), one can also measure the approximation rate that the codec \(\mathcal {C}\) achieves for each individual \(\mathbf {x}\in \mathcal {S}\). Precisely, the class of elements with compression rate s under \(\mathcal {C}\) is

If the signal class \(\mathcal {S}\) is “sufficiently regular”—for instance if \(\mathcal {S}\) is compact and convex—then one can prove (see Proposition 3) that the following dichotomy is valid:

Thus, all signals in \(\mathcal {S}\) can be approximated at any compression rate lower than the optimal rate for \(\mathcal {S}\) using a common codec. Furthermore, for any approximation rate s larger than the optimal rate for \(\mathcal {S}\), and for any codec \(\mathcal {C}\), there exists some \({\mathbf {x}^*= \mathbf {x}^*(s, \mathcal {C}) \in \mathcal {S}}\) that is not compressed at rate s by \(\mathcal {C}\).

Remark 1

(Encoding/decoding schemes vs. discretization maps) As the above considerations suggest, the crucial objects for our investigations are not the encoding/decoding pairs  , but the distortion they cause for each \(\mathbf {x}\in \mathcal {S}\). Therefore, we could equally well restrict our attention to the discretization map \(D \circ E : \mathcal {S}\rightarrow \mathbf {X}\), which has the crucial property \({|\mathrm {range} (D \circ E)| \le 2^R}\). Conversely, given any (discretization) map \(\varDelta : \mathcal {S}\rightarrow \mathbf {X}\) with \(|\mathrm {range}(\varDelta )| \le 2^R\), one can construct an encoding/decoding pair

, but the distortion they cause for each \(\mathbf {x}\in \mathcal {S}\). Therefore, we could equally well restrict our attention to the discretization map \(D \circ E : \mathcal {S}\rightarrow \mathbf {X}\), which has the crucial property \({|\mathrm {range} (D \circ E)| \le 2^R}\). Conversely, given any (discretization) map \(\varDelta : \mathcal {S}\rightarrow \mathbf {X}\) with \(|\mathrm {range}(\varDelta )| \le 2^R\), one can construct an encoding/decoding pair  , by choosing a surjection \({D : \{ 0,1 \}^R \rightarrow \mathrm {range}(\varDelta )}\), and then setting

, by choosing a surjection \({D : \{ 0,1 \}^R \rightarrow \mathrm {range}(\varDelta )}\), and then setting

which ensures that \(\Vert \mathbf {x}- D(E(\mathbf {x})) \Vert _{\mathbf {X}} \le \Vert \mathbf {x}- \varDelta (\mathbf {x}) \Vert _{\mathbf {X}}\) for all \(\mathbf {x}\in \mathcal {S}\). Thus, all our results could be rephrased in terms of such discretization maps rather than in terms of encoding/decoding pairs. For more details on this connection, see also Lemma 10. \(\blacktriangleleft \)

1.2 Our Contributions

1.2.1 Phase Transition

We improve on the dichotomy (1.3) by measuring the size of the class  of elements with compression rate s under the codec \(\mathcal {C}\). Then, a phase transition occurs: the class of elements that cannot be encoded at a “larger than optimal” rate is generic. We prove this when the signal class is a ball in a Besov- or Sobolev space, as long as this ball forms a compact subset of \(L^2(\varOmega )\) for a bounded Lipschitz domain \(\varOmega \subset \mathbb {R}^d\).

of elements with compression rate s under the codec \(\mathcal {C}\). Then, a phase transition occurs: the class of elements that cannot be encoded at a “larger than optimal” rate is generic. We prove this when the signal class is a ball in a Besov- or Sobolev space, as long as this ball forms a compact subset of \(L^2(\varOmega )\) for a bounded Lipschitz domain \(\varOmega \subset \mathbb {R}^d\).

More precisely, for each such signal class \(\mathcal {S}\), we construct a probability measure \(\mathbb {P}\) on \(\mathcal {S}\) such that the compressibility exhibits a phase transition as in the following definition.

Definition 1

A Borel probability measure \(\mathbb {P}\) on a subset \(\mathcal {S}\) of a Banach space \(\mathbf {X}\) exhibits a compressibility phase transition if it satisfies the following:

In this definition, since the set  is not necessarily measurable for

is not necessarily measurable for  , instead of the measure \(\mathbb {P}\), we use the associated outer measure \(\mathbb {P}^*\). Generally, given a measure space \((\mathcal {S}, \mathscr {A},\mu )\) the outer measure \(\mu ^*: 2^{\mathcal {S}} \rightarrow [0,\infty ]\) induced by \(\mu \) is defined as

, instead of the measure \(\mathbb {P}\), we use the associated outer measure \(\mathbb {P}^*\). Generally, given a measure space \((\mathcal {S}, \mathscr {A},\mu )\) the outer measure \(\mu ^*: 2^{\mathcal {S}} \rightarrow [0,\infty ]\) induced by \(\mu \) is defined as

In general, \(\mu ^*\) is not a measure, but it is always \(\sigma \)-subadditive, meaning that it satisfies \({\mu ^*(\bigcup _{n=1}^\infty M_n) \le \sum _{n=1}^\infty \mu ^*(M_n)}\) for arbitrary \(M_n \subset \mathcal {S}\); see [14, Section 1.4]. Furthermore, it is easy to see for \(M \in \mathscr {A}\) that \(\mu ^*(M) = \mu (M)\); that is, \(\mu ^*\) is an extension of \(\mu \).

As seen in (1.4), we are mostly interested in \(\mu ^*\)-null-sets; that is, subsets \(N \subset \mathcal {S}\) satisfying \(\mu ^*(N) = 0\). This holds if and only if there is \(N' \in \mathscr {A}\) satisfying \(N \subset N'\) and \(\mu (N') = 0\). Directly from the \(\sigma \)-subadditivity of \(\mu ^*\), it follows that a countable union of \(\mu ^*\)-null-sets is again a \(\mu ^*\)-null-set.

We note that the first implication in (1.4) is always satisfied, as a consequence of (1.3). The second part of (1.4) states that for any  and any codec \(\mathcal {C}\), almost every \(\mathbf {x}\in \mathcal {S}\) cannot be compressed by \(\mathcal {C}\) at rate s. In other words, whenever \(\mathbb {P}\) exhibits a compressibility phase transition on \(\mathcal {S}\), the property of not being compressible at a “larger than optimal” rate is a generic property.

and any codec \(\mathcal {C}\), almost every \(\mathbf {x}\in \mathcal {S}\) cannot be compressed by \(\mathcal {C}\) at rate s. In other words, whenever \(\mathbb {P}\) exhibits a compressibility phase transition on \(\mathcal {S}\), the property of not being compressible at a “larger than optimal” rate is a generic property.

Remark 2

-

(i)

We emphasize that the measure \(\mathbb {P}\) in Definition 1 is required to satisfy the second property in (1.4) universally for any choice of codec \(\mathcal {C}\).

In fact, if \(\mathbb {P}\) would be allowed to depend on \(\mathcal {C}\), one could simply choose \(\mathbb {P}= \delta _{\mathbf {x}}\), where \(\mathbf {x}= \mathbf {x}(\mathcal {C}, s) \in \mathcal {S}\) is a single element that is not approximated at rate s by \(\mathcal {C}\); for

such an element exists under mild assumptions on \(\mathcal {S}\); see Proposition 3.

such an element exists under mild assumptions on \(\mathcal {S}\); see Proposition 3. -

(ii)

Any measure \(\mathbb {P}\) satisfying (1.4) also satisfies \(\mathbb {P}^*(\{ \mathbf {x}\}) = 0\) (and hence \(\mathbb {P}(\{ \mathbf {x}\}) = 0\)) for each \(\mathbf {x}\in \mathcal {S}\), as can be seen by taking \({\mathcal {C}= \bigl ((E_R,D_R)\bigr )_{R \in \mathbb {N}}}\) with \(D_R : \{ 0,1 \}^R \rightarrow \mathcal {S}, c \mapsto \mathbf {x}\), so that

for all \({s > 0}\). Thus, any probability measure \(\mathbb {P}\) exhibiting a compressibility phase transition is atom free.

for all \({s > 0}\). Thus, any probability measure \(\mathbb {P}\) exhibiting a compressibility phase transition is atom free. -

(iii)

Measures satisfying (1.4) are quite special—in fact, Proposition 4 shows under mild assumptions on \(\mathcal {S}\) that the set of measures not satisfying (1.4) is generic in the set of atom-free probability measures. \(\blacktriangleleft \)

Our first main result establishes the existence of critical measures for all Sobolev- and Besov balls (denoted by \({{\,\mathrm{Ball}\,}}( 0 , 1; W^{k,p}(\varOmega ; \mathbb {R}))\), respectively \({{\,\mathrm{Ball}\,}}( 0 , 1; B_{p,q}^{\tau }(\varOmega ;\mathbb {R}))\); see Appendix C) that are compact subsets of \(L^2 (\varOmega )\):

Theorem 1

Let \(\emptyset \ne \varOmega \subset \mathbb {R}^d\) be a bounded Lipschitz domain. Consider either of the following two settings:

-

\(\mathcal {S}:= {{\,\mathrm{Ball}\,}}\big ( 0, 1; B_{p,q}^{\tau } (\varOmega ; \mathbb {R}) \big )\) and \(s^*:= \frac{\tau }{d}\), where \(p,q \in (0,\infty ]\) and \(\tau \in \mathbb {R}\) with \({\tau > d \cdot (\frac{1}{p} - \frac{1}{2})_{+}}\), or

-

\(\mathcal {S}:= {{\,\mathrm{Ball}\,}}\bigl (0, 1; W^{k,p}(\varOmega )\bigr )\) and \(s^*:= \frac{k}{d}\), where \(p \in [1,\infty ]\) and \(k \in \mathbb {N}\) with \({k > d \cdot (\frac{1}{p} - \frac{1}{2})_+}\).

In either case,  , and there exists a Borel probability measure \(\mathbb {P}\) on \(\mathcal {S}\) that exhibits a compressibility phase transition as in Definition 1.

, and there exists a Borel probability measure \(\mathbb {P}\) on \(\mathcal {S}\) that exhibits a compressibility phase transition as in Definition 1.

Proof

This follows from Theorems 7, 8, and 4. \(\square \)

Since Remark 2 shows that the measure \(\mathbb {P}\) from the preceding theorem satisfies \(\mathbb {P}(M) = 0\) for each countable set \(M \subset \mathcal {S}\), we get the following strengthening of the dichotomy (1.3).

Corollary 1

Suppose the assumptions of Theorem 1 are satisfied. Then, given any codec  the set

the set  , which consists of all signals that cannot be encoded by \(\mathcal {C}\) at compression rate s for some \(s > s^*\), is uncountable.

, which consists of all signals that cannot be encoded by \(\mathcal {C}\) at compression rate s for some \(s > s^*\), is uncountable.

In words, Corollary 1 states that for every codec the set of signals in \(\mathcal {S}\) that cannot be approximated at any compression rate larger than the optimal rate for \(\mathcal {S}\) is uncountable. In contrast, previous results (such as Proposition 3) only state the existence of a single such “badly approximable” signal.

1.2.2 Quantitative Lower Bounds

The statement of Theorem 1 is purely qualitative; it shows that the set of elements that are approximable at a “better than optimal rate” forms a null-set with respect to the measure \(\mathbb {P}\). In fact, the measure \(\mathbb {P}\) constructed in (the proof of) Theorem 1 satisfies a stronger, quantitative condition: If one randomly draws a function \(f \sim \mathbb {P}\) using the probability measure \(\mathbb {P}\), one can precisely bound the probability that a given encoding/decoding pair \((E_R,D_R)\) of code-length R achieves a given error \(\varepsilon \) for f. To underline this probabilistic interpretation, we define, for any property Q of elements \(f \in \mathcal {S}\),

where \(\mathbb {P}^*\) denotes the outer measure induced by \(\mathbb {P}\).

Theorem 2

Let \(\mathcal {S}\) and \(s^*\) as in Theorem 1. Let the probability measure \(\mathbb {P}\) on \(\mathcal {S}\) as constructed in (the proof of) Theorem 1, and let us use the notation from Eq. (1.6).

Then, for any \(s > s^*\) there exist \(c, \varepsilon _0 > 0\) (depending on \(\mathcal {S},s\)) such that for arbitrary \(R \in \mathbb {N}\) and  it holds that

it holds that

Proof

This follows from Theorem 4 and (the proof of) Theorem 1. \(\square \)

Theorem 2 is interesting due to its nonasymptotic nature. Indeed, given a fixed budget of R bits and a desired accuracy \(\varepsilon \), it provides a partial answer to the question:

How likely is one to succeed in describing a random \(f \in \mathcal {S}\) to within accuracy \(\varepsilon \) using R bits?

Figure 1 provides an illustration of the phase transition behavior in dependence of \(\varepsilon \) and R; it graphically shows that the transition is quite sharp.

For \(\mathcal {S}\) a Sobolev or Besov ball, Theorem 2 provides bounds on the probability of being able to describe a random function \(f\in \mathcal {S}\) to within accuracy \(\varepsilon \) using R bits. This probability is, for every \(s > s^*\) and \(\varepsilon \in (0,\varepsilon _0)\) (\(s^*\) denoting the optimal compression rate of \(\mathcal {S}\)), upper bounded by \(E_s(R,\varepsilon ) := \min \bigl \{1,2^{R-c\cdot \varepsilon ^{-1/s}}\bigr \}\). In this figure, we show two plots of the function \(E_s\) over the \((R,1/\varepsilon )\)-plane. Both grayscale plots show \(E_s\) for \(s = 2.002 > s^*= 2\) and \(c = 1\), while the red curve indicates the critical region where \(R = (1/\varepsilon )^{1/s^*}\). We see that a sharp phase transition occurs in the sense that above and slightly below the critical curve \(R = \varepsilon ^{-1/2}\) (white area) the upper bound \(E_s\) does not rule out the possibility that it is always possible to describe \(f\in \mathcal {S}\) to within accuracy \(\varepsilon \) using R bits; but even slightly below the critical curve (dark area) the bound \(E_s\) shows that such a compression is almost impossible. The sharpness of the phase transition is more clearly shown in the zoomed part of the figure. The bottom plot further illustrates the quantitative behavior by using a logarithmic colormap. Note that in the bottom plot two different colormaps are used for the range \([-100,0]\) and the remaining range \([-1000, -100)\)

1.2.3 Lower Bounds for Neural Network Approximation

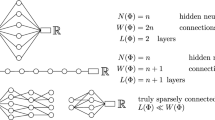

As an application, we draw a connection between the previously described results and the approximation properties of neural networks. In a nutshell, a neural network alternatingly applies affine-linear maps and a so-called activation function \(\varrho : \mathbb {R}\rightarrow \mathbb {R}\) that acts componentwise on vectors, meaning \(\varrho \bigl ( (x_1,\dots ,x_m) \bigr ) = \bigl (\varrho (x_1),\dots ,\varrho (x_m)\bigr )\). More precisely, we will use the following mathematical formalization of (fully connected, feed forward) neural networks [29].

Definition 2

Let \(d,L \in \mathbb {N}\) and \(\mathbf {N} = (N_0,\dots ,N_L) \subset \mathbb {N}\) with \(N_0 = d\). We say that \(\mathbf {N}\) is a network architecture, where L describes the number of layers of the network and \(N_\ell \) is the number of neurons in the \(\ell \)-th layer.

A neural network (NN) with architecture \(\mathbf {N}\) is a tuple

of matrices \(A_\ell \in \mathbb {R}^{N_\ell \times N_{\ell - 1}}\) and bias vectors \(b_\ell \in \mathbb {R}^{N_\ell }\). Given a function \(\varrho : \mathbb {R}\rightarrow \mathbb {R}\), called the activation function, the mapping computed by the network \(\varPhi \) is defined as

Here, we use the convention \(\varrho ( (x_1,\dots ,x_m) ) = (\varrho (x_1),\dots ,\varrho (x_m))\), i.e., \(\varrho \) acts componentwise on vectors.

The complexity of \(\varPhi \) is mainly described by the number \(L(\varPhi ) := L\) of layers, the number \(W(\varPhi ) := \sum _{\ell =1}^L \big ( \Vert A_\ell \Vert _{\ell ^0} + \Vert b_\ell \Vert _{\ell ^0} \big )\) of weights (or connections), and the number \(N(\varPhi ) := \sum _{\ell =0}^L N_\ell \) of neurons of \(\varPhi \). Here, for a matrix or vector A, we denote by \(\Vert A \Vert _{\ell ^0}\) the number of nonzero entries of A. Furthermore, we set \(d_{\mathrm {in}}(\varPhi ) := N_0\) and \(d_{\mathrm {out}}(\varPhi ) := N_L\).

In addition to the number of layers, neurons, and weights of a network, we will also be interested in the complexity of the individual weights and biases of the network, i.e., of the entries of the (weight) matrices \(A_\ell \) and the bias vectors \(b_\ell \). Thus, define

We say that \(\varPhi \) is \((\sigma ,W)\)-quantized if all entries of the matrices \(A_\ell \) and the vectors \(b_\ell \) belong to \(G_{\sigma ,W}\).

The set \(G_{\sigma ,W}\) in (1.7) contains all real numbers that belong to the grid \(2^{-\sigma \lceil \log _2 W \rceil ^2} \mathbb {Z}\) and simultaneously to the interval \(\bigl [ - W^{\sigma \lceil \log _2 W \rceil }, W^{\sigma \lceil \log _2 W \rceil } \bigr ]\); thus, the grid gets arbitrarily fine and the interval gets arbitrarily large as \(W \rightarrow \infty \), where the parameter \(\sigma \) determines how fast this happens. We note that in applications one necessarily deals with quantized NNs due to the necessity to store and process the weights on a digital computer. Regarding function approximation by such quantized neural networks, we have the following result:

Theorem 3

Let \(\varrho : \mathbb {R}\rightarrow \mathbb {R}\) be measurable and let \(d, \sigma \in \mathbb {N}\). For \(W \in \mathbb {N}\), define

Let \(\mathcal {S}\), \(s^*\), and \(\mathbb {P}\) as in Theorem 1. Then, using the notation (1.6), the following hold:

-

1.

There exists \(C = C(d,\sigma ) \in \mathbb {N}\) such that for each \(s > s^*\) there are \(c, \varepsilon _0 > 0\) satisfying

$$\begin{aligned} \mathrm {Pr} \Bigl (\, \min _{g \in \mathcal {NN}_{d,W}^{\sigma ,\varrho }} \Vert f - g \Vert _{L^2(\varOmega )} \le \varepsilon \Bigr ) \le 2^{C \cdot W \, \lceil \log _2 (1+W) \rceil ^2 - c \cdot \varepsilon ^{-1/s}} \,\, \forall \, \varepsilon \in (0,\varepsilon _0) . \end{aligned}$$(1.8)In fact, one can choose \(C = 4 + 4 \lceil \log _2(4 e d) \rceil + 8 \sigma \).

-

2.

If we define \(W_{\sigma ,\varrho } (f;\varepsilon ) \in \mathbb {N}\cup \{ \infty \}\) by

$$\begin{aligned} W_{\sigma ,\varrho } (f;\varepsilon ) := \inf \Bigl \{ W \in \mathbb {N}\,\,\, :\,\,\, \exists \, g \in \mathcal {NN}_{d,W}^{\sigma ,\varrho } \text { such that } \Vert f - g \Vert _{L^2(\varOmega )} \le \varepsilon \Bigr \} \end{aligned}$$and furthermore set

$$\begin{aligned} \mathcal {A}_{\mathcal {NN},\varrho }^*:= \bigg \{ f \in \mathcal {S}\,\,\,:\,\, \begin{array}{l} \exists \, \tau \in (0, \tfrac{1}{s^*}), \sigma \in \mathbb {N}, C > 0 \\ \forall \, \varepsilon \in (0, 1): W_{\sigma ,\varrho }(f;\varepsilon ) \le C \cdot \varepsilon ^{-\tau } \end{array} \bigg \} , \end{aligned}$$then \(\mathbb {P}^*( \mathcal {A}_{\mathcal {NN},\varrho }^*) = 0\).

Proof

The proof of this theorem is deferred to Appendix F. \(\square \)

In a nutshell, Eq. (1.8) states the following: If one draws a random function f from \(\mathbb {P}\), then with probability at least \(1 - 2^{C \cdot W \, \lceil \log _2 (1+W) \rceil ^2 - c \cdot \varepsilon ^{-1/s}}\), the function f will have \(L^2\) distance at least \(\varepsilon \) to every network from the class \(\mathcal {NN}_{d,W}^{\sigma ,\varrho }\). Consequently, Eq. (1.8) implies that the network size W has to scale at least like \(W \gtrsim \varepsilon ^{-1/s^*}\) (up to log factors) to succeed with high probability if \(\mathcal {S}\) is a Sobolev- or Besov ball with optimal exponent  . In particular, if one uses any learning procedure \(\mathrm {Learn} : \mathcal {S}\rightarrow \mathcal {NN}_{d,W}^{\sigma ,\varrho }\)— not necessarily a typical algorithm like (stochastic) gradient descent— and hopes to achieve \(\Vert f - \mathrm {Learn}(f) \Vert _{L^2(\varOmega )} \le \varepsilon \), then Eq. (1.8) provides an upper bound on the probability of success.

. In particular, if one uses any learning procedure \(\mathrm {Learn} : \mathcal {S}\rightarrow \mathcal {NN}_{d,W}^{\sigma ,\varrho }\)— not necessarily a typical algorithm like (stochastic) gradient descent— and hopes to achieve \(\Vert f - \mathrm {Learn}(f) \Vert _{L^2(\varOmega )} \le \varepsilon \), then Eq. (1.8) provides an upper bound on the probability of success.

Remark 3

(Further discussion of Theorem 3) It might seem peculiar at first sight that the depth of the approximating networks does not seem to play a role in Theorem 3, even though deeper networks should intuitively have better approximation properties. To understand how this can be, note that the theorem only provides a hardness result: it gives a lower bound on how well arbitrarily deep (quantized) networks can approximate functions from the class \(\mathcal {S}\).

Whether this lower bound is sharp (i.e., whether the critical rate \(s^*\) can be attained using suitable networks) then depends on the chosen activation function and the network depth. For instance, for approximating \(C^k\) functions, a result by Mhaskar [27] shows that shallow networks with smooth, non-polynomial activation functions already attain the optimal rates. For networks with the (non-smooth) ReLU activation function \(\varrho (x) = \max \{ 0,x \}\), on the other hand, it is known that (somewhat) deep networks are necessary to attain the optimal rates; more precisely, one needs ReLU networks of depth \(\mathcal {O}(1 + \frac{k}{d})\) to achieve the optimal approximation rates for \(C^k\) functions on \([0,1]^d\); see [29, Theorem C.6] and [31, 32].

In fact, the optimal rates predicted by Theorem 3 are attained (up to log factors) by sufficiently deep ReLU networks and domain \(\varOmega = [0,1]^d\). Precisely, there exist \(C = C(\mathcal {S}) > 0\) and \(\sigma = \sigma (\mathcal {S}) \in \mathbb {N}\) such that

where \(\tau \in (0, \frac{1}{s^*})\) is arbitrary. This follows from results in [13, 34]. Since the details are mainly technical, the proof is deferred to Appendix F. We remark that by similar arguments as in [13, 34], one can also prove the sharpness for other activation functions than the ReLU and other domains than \([0,1]^d\). \(\blacktriangleleft \)

1.3 Related Literature and Concepts

1.3.1 Minimax Optimality in Approximation Theory

Many (optimality) results in approximation theory are formulated in a minimax sense, meaning that one precisely characterizes the asymptotic decay of

where \(\mathcal {S}\subset \mathbf {X}\) is the class of signals to be approximated, and \(M_n \subset \mathbf {X}\) contains all functions “of complexity n,” for example polynomials of degree n or shallow neural networks with n neurons, etc. As recent examples of such results related to neural networks, we mention [4, 29, 41].

A minimax lower bound of the form \(d_{\mathbf {X}} (\mathcal {S}, M_n) \gtrsim n^{-s^*}\), however, only makes a claim about the possible worst case of approximating elements \(f \in \mathcal {S}\). In other words, such an estimate in general only guarantees that there is at least one “hard to approximate” function \(f^*\in \mathcal {S}\) satisfying \(\inf _{g \in M_n} \Vert f^*- g \Vert _{\mathbf {X}} \gtrsim n^{-s}\) for each \(s > s^*\), but nothing is known about how “massive” this set of “hard to approximate” functions is, or about the “average case.”

1.3.2 Results Quantifying the “mass” of Hard-To-Approximate Functions

The first paper to address this question—and one of the main sources of inspiration for the present paper—is [24]. In that paper, Maiorov, Meir, and Ratsaby consider essentially the “\(L^2\)-Besov-space type” signal class \(\mathcal {S}= \mathcal {S}_r\) of functions \(f \in L^2(\mathbb {B}_d)\) (with \({\mathbb {B}_d = \{ x \in \mathbb {R}^d :\Vert x \Vert _2 \le 1 \}}\)) that satisfy

where \( \mathscr {P}_K = \mathrm {span} \bigl \{ x^\alpha :\alpha \in \mathbb {N}_0^d \text { with } |\alpha | \le K \bigr \} \) denotes the space of d-variate polynomials of degree at most K. On this signal class, they construct a probability measure \(\mathbb {P}\) such that given the subset of functions

one obtains the minimax asymptotic \( d_{L^2}(\mathcal {S}_r, M_n) \asymp n^{-r/(d-1)}, \) but furthermore there exists \(c > 0\) such that

In other words, the measure of the set of functions for which the minimax asymptotic is sharp tends to 1 for \(n \rightarrow \infty \). In this context, we would also like to mention the recent article [23], in which the results of [24] are extended to cover more general signal classes and approximation in stronger norms than the \(L^2\) norm.

While we draw heavily on the ideas from [24] for the construction of the measure \(\mathbb {P}\) in Theorem 1, it should be noted that we are interested in phase transitions for general encoding/decoding schemes, while [23, 24] exclusively focus on approximation using the ridge function classes \(M_n\).

1.3.3 Baire Category

Extending the scale of \(C^k\) spaces (\(k \in \mathbb {N}_0\)) to the scale of Hölder-spaces \(C^\beta \), \(\beta \ge 0\), it is well-known that \(C^\beta \) is of first category (or meager) in \(C^\eta \) if \(\beta > \eta \). Similarly, under mild regularity conditions on the signal class \(\mathcal {S}\subset \mathbf {X}\), one can show for every codec  that the set

that the set  of signals \(\mathbf {x}\in \mathcal {S}\) that are encodable by \(\mathcal {C}\) at a “better than optimal” rate

of signals \(\mathbf {x}\in \mathcal {S}\) that are encodable by \(\mathcal {C}\) at a “better than optimal” rate  is meager in \(\mathcal {S}\); for instance, this holds if \(\mathcal {S}\) is compact and convex; see Proposition 3.

is meager in \(\mathcal {S}\); for instance, this holds if \(\mathcal {S}\) is compact and convex; see Proposition 3.

Thus, if one could construct a Borel probability measure \(\mathbb {P}\) on \(\mathcal {S}\) satisfying \(\mathbb {P}^*(M) = 0\) for every set \(M \subset \mathcal {S}\) that is meager in \(\mathcal {S}\), then \(\mathbb {P}\) would automatically satisfy the phase transition (1.4). In most cases, however, it turns out that no such measure exists.

Indeed, assuming that such a measure exists and that \(\mathcal {S}\) has no isolated points, every singleton \(\{ \mathbf {x}\} \subset \mathcal {S}\) is meager in \(\mathcal {S}\), so that \(\mathbb {P}(\{ \mathbf {x}\}) = 0\) for every \(\mathbf {x}\in \mathcal {S}\). Therefore, if \(\mathcal {S}\), equipped with the topology induced by \(\Vert \cdot \Vert _{\mathbf {X}}\), has a base whose cardinal has measure zero (see below for details), then [28, Theorem 16.5] shows that one can write \(\mathcal {S}= N \cup M\), where \(M \subset \mathcal {S}\) is meager and \(N \subset \mathcal {S}\) satisfies \(\mathbb {P}(N) = 0\), leading to \(1 = \mathbb {P}(\mathcal {S}) \le \mathbb {P}^*(N) + \mathbb {P}^*(M) = 0\), a contradiction. Regarding the assumption on the existence of a base whose cardinal has measure zero, note that if \(\mathcal {S}\) is relatively compact (which always holds if  ), then \(\mathcal {S}\) is separable and hence has a countable base, whose cardinal thus has measure zero; see [28, Page 63]. In summary, if \(\mathcal {S}\subset \mathbf {X}\) is relatively compact and has no isolated points, then there does not exist a Borel probability measure \(\mathbb {P}\) on \(\mathcal {S}\) satisfying \(\mathbb {P}^*(M) = 0\) for every set \(M \subset \mathcal {S}\) of first category.

), then \(\mathcal {S}\) is separable and hence has a countable base, whose cardinal thus has measure zero; see [28, Page 63]. In summary, if \(\mathcal {S}\subset \mathbf {X}\) is relatively compact and has no isolated points, then there does not exist a Borel probability measure \(\mathbb {P}\) on \(\mathcal {S}\) satisfying \(\mathbb {P}^*(M) = 0\) for every set \(M \subset \mathcal {S}\) of first category.

One could further ask how “special” measures satisfying the phase transition (1.4) are; more precisely: Is the set of probability measures \(\mathbb {P}\) satisfying (1.4) generic in the set of (atom-free) probability measures? This is not the case; in fact, Proposition 4 shows under very mild assumptions that if one equips the set of atom-free probability measures on \(\mathcal {S}\) with the total variation metric, then the set of measures satisfying (1.4) is meager. In other words, the set of measures not satisfying (1.4) is generic as a subset of all atom-free Borel probability measures on \(\mathcal {S}\).

1.3.4 Small ball probabilities and Gaussian measures

An important notion that we introduce and study in this article are measures of logarithmic growth order \(s_0\), which are measures satisfying a certain small ball condition; see Eq. (1.9) below. Such small ball conditions have been extensively studied in the theory of Gaussian measures; see for instance [21, 22]. An important result in that area of research shows that the small ball probability of a Gaussian measure \(\mu \) is closely related to the behavior of the entropy numbers of the unit ball \(K_\mu \) of a certain reproducing kernel Hilbert space \(H_{\mu }\) associated with \(\mu \).

In seeming similarity, we are concerned with constructing a probability measure \(\mathbb {P}\) supported on a given set \(\mathcal {S}\) such that \(\mathbb {P}\) satisfies a certain small ball property, depending on the optimal exponent  of \(\mathcal {S}\), which is intimately related to the behavior of the entropy numbers of \(\mathcal {S}\). As far as we can tell, however, the similarity is only superficial, meaning that the main similarity is simply that both results are concerned with measures satisfying small ball properties and the relation to entropy numbers.

of \(\mathcal {S}\), which is intimately related to the behavior of the entropy numbers of \(\mathcal {S}\). As far as we can tell, however, the similarity is only superficial, meaning that the main similarity is simply that both results are concerned with measures satisfying small ball properties and the relation to entropy numbers.

To see that the questions considered in [21, 22] are different from the ones studied here, note that the Gaussian measures considered in [21, 22] are not supported on \(K_\mu \) and furthermore that the entropy numbers of \(K_\mu \) always satisfy \(H(K_\mu , \varepsilon ) \in o(\varepsilon ^{-2})\) as \(\varepsilon \rightarrow 0\), a property that is in general not shared by the signal classes \(\mathcal {S}= {{\,\mathrm{Ball}\,}}\big ( 0, 1; B_{p,q}^{\tau } (\varOmega ; \mathbb {R}) \big )\) and \(\mathcal {S}:= {{\,\mathrm{Ball}\,}}\bigl (0, 1; W^{k,p}(\varOmega )\bigr )\) that we consider.

Finally, we mention that a (non-trivial) modification of our proof shows that the measure \(\mathbb {P}\) constructed in Theorem 1 can be chosen to be (the restriction of) a suitable centered Gaussian measure.

1.3.5 Optimality Results for Neural Network Approximation

We emphasize that our lower bounds for neural network approximation consider networks with quantized weights, as in [4, 29]. The main reason is that without such an assumption, even networks with two hidden layers and a fixed number of neurons can approximate any function arbitrarily well if the activation function is chosen suitably; see [25, Theorem 4] and [40]. Moreover, even if one considers the popular ReLU activation function, it was recently observed that the optimal approximation rates for networks with quantized weights can in fact be doubled by using arbitrarily deep ReLU networks with highly complex (non-quantized) weights [41].

1.4 Structure of the Paper and Proof Ideas

In Sect. 2, we introduce and study a class of probability measures with a certain growth behavior. More precisely, we say that \(\mathbb {P}\) is of logarithmic growth order \(s_0\) on \(\mathcal {S}\subset \mathbf {X}\) if for each \(s > s_0\) there exist \(\varepsilon _0 = \varepsilon _0 (s) > 0\) and \(c = c(s) > 0\) satisfying

Here, as in the rest of the paper, \({{\,\mathrm{Ball}\,}}(\mathbf {x},\varepsilon ;\mathbf {X})\) is the closed ball around \(\mathbf {x}\) of radius \(\varepsilon \) with respect to \(\Vert \cdot \Vert _{\mathbf {X}}\). A probability measure has critical growth if its logarithmic growth order equals the optimal compression rate  . We show in particular that every critical probability measure exhibits a compressibility phase transition as in Definition 1, and we show how critical probability measures can be transported from one set to another.

. We show in particular that every critical probability measure exhibits a compressibility phase transition as in Definition 1, and we show how critical probability measures can be transported from one set to another.

Intuitively, the natural way to construct a probability measure \(\mathbb {P}\) satisfying (1.9) is to make the measure “as uniform as possible,” so that each ball \({{\,\mathrm{Ball}\,}}(\mathbf {x},\varepsilon ;\mathbf {X})\) contains roughly the same (small) volume. At first sight, it is thus natural to choose \(\mathbb {P}\) to be translation invariant. It is well-known, however, (see, e.g., [17, Page 218]) that there does not exist any non-trivial locally finite, translation invariant measure on an infinite-dimensional, separable Banach space.

Therefore, we construct a measure \(\mathbb {P}\) satisfying (1.9) in the setting of certain sequence spaces, where we can exploit the product structure of the signal class \(\mathcal {S}\) to make the measure as uniform as possible—this technique was pioneered in [24]. More precisely, in Sect. 3, we study the sequence spaces  , which are essentially the coefficient spaces associated with Besov spaces. By modifying the construction given in [24], we construct probability measures of critical growth on the unit balls

, which are essentially the coefficient spaces associated with Besov spaces. By modifying the construction given in [24], we construct probability measures of critical growth on the unit balls  of the spaces

of the spaces  , for the range of parameters for which the embedding

, for the range of parameters for which the embedding  is compact. For the case \(q = \infty \), we directly use the product structure of the spaces to construct the measure; the construction for the case \(q < \infty \) uses the measure from the case \(q = \infty \), combined with a technical trick (namely, introducing an additional weight).

is compact. For the case \(q = \infty \), we directly use the product structure of the spaces to construct the measure; the construction for the case \(q < \infty \) uses the measure from the case \(q = \infty \), combined with a technical trick (namely, introducing an additional weight).

The construction of critical measures on the unit balls of Besov and Sobolev spaces is then accomplished in Sect. 4, by using wavelet systems to transfer the critical measure from the sequence spaces to the function spaces. This makes heavy use of the transfer results established in Sect. 2.

A host of more technical proofs are deferred to the appendices.

1.5 Notation

We write \(\mathbb {N}:= \{1,2,3,\dots \}\) for the set of natural numbers, and \(\mathbb {N}_0 := \{0\} \cup \mathbb {N}\) for the natural numbers including zero. The number of elements of a set M is denoted by \(|M| \in \mathbb {N}_0 \cup \{ \infty \}\). For \(n \in \mathbb {N}_0\), we define \([n] := \{ k \in \mathbb {N}:k \le n \}\); in particular, \([0] = \emptyset \).

For \(x \in \mathbb {R}\), we write \(x_+ := \max \{ 0, x \}\) and \(x_{-} := (-x)_+ = \max \{ 0, -x \}\).

We assume all vector spaces to be over \(\mathbb {R}\), unless explicitly stated otherwise.

For a given (quasi)-normed vector space \((\mathbf {X}, \Vert \cdot \Vert )\), we denote the closed ball of radius \(r \ge 0\) around \(\mathbf {x}\in \mathbf {X}\) by

If we want to emphasize the quasi-norm (for example, if multiple quasi-norms are considered on the same space \(\mathbf {X}\)), we write \({{\,\mathrm{Ball}\,}}( \mathbf {x}, r; \Vert \cdot \Vert )\) instead.

We say that a subset \(\mathcal {S}\) of a topological space \(\mathbf {X}\) is relatively compact, if the closure \({\overline{\mathcal {S}}}\) of \(\mathcal {S}\) in \(\mathbf {X}\) is compact. If \(\mathbf {X}\) is a complete metric space with metric d, then this holds if and only if \(\mathcal {S}\) is totally bounded, meaning that for every \(\varepsilon > 0\) there exist finitely many \(\mathbf {x}_1,\dots ,\mathbf {x}_N \in \mathbf {X}\) satisfying \(\mathcal {S}\subset \bigcup _{i=1}^N \{ \mathbf {x}\in \mathbf {X}:d(\mathbf {x},\mathbf {x}_i) \le \varepsilon \}\); see [14, Theorem 0.25].

For an index set \(\mathcal {I}\) and an integrability exponent \(p \in (0,\infty ]\), the sequence space \(\ell ^p (\mathcal {I}) \subset \mathbb {R}^{\mathcal {I}}\) is

where \(\Vert \mathbf {x}\Vert _{\ell ^p} := \bigl (\sum _{i \in \mathcal {I}} |x_i|^p\bigr )^{1/p}\) if \(p < \infty \), while \(\Vert \mathbf {x}\Vert _{\ell ^\infty } := \sup _{i \in \mathcal {I}} |x_i|\).

A Comment on Measurability: Given a (not necessarily measurable) subset \(M \subset \mathbf {X}\) of a Banach space \(\mathbf {X}\), we will always equip M with the trace \(\sigma \)-algebra

of the Borel \(\sigma \)-algebra \(\mathscr {B}_{\mathbf {X}}\). A Borel measure on M is then a measure defined on \(M \Cap \mathscr {B}_{\mathbf {X}}\).

Note that if \((\varOmega , \mathscr {A})\) is any measurable space, then \({\varPhi : \varOmega \rightarrow M}\) is measurable if and only if it is measurable considered as a map \({\varPhi : \varOmega \rightarrow (\mathbf {X}, \mathscr {B}_{\mathbf {X}})}\).

2 General Results on Phase Transitions in Banach Spaces

In this section, we establish an abstract version of the phase transition considered in (1.4) for signal classes in general Banach spaces and a class of measures that satisfy a uniform growth property that we term “critical” (see Definition 3). We will show in Sect. 2.1 that such critical measures automatically induce a phase transition behavior. We furthermore show in Sect. 2.2 that criticality is preserved under pushforward by “nice” mappings. The existence of critical measures is by no means trivial; quite the opposite, their construction for a class of sequence spaces in Sect. 3—and for Besov and Sobolev spaces on domains in Sect. 4—constitutes an essential part of the present article.

2.1 Measures of Logarithmic Growth

Definition 3

Let \(\mathcal {S}\ne \emptyset \) be a subset of a Banach space \(\mathbf {X}\), and let \(s_0 \in [0,\infty )\).

A Borel probability measure \(\mathbb {P}\) on \(\mathcal {S}\) has (logarithmic) growth order \(s_0\) (with respect to \(\mathbf {X})\) if for every \(s > s_0\), there are constants \(\varepsilon _0, c> 0\) (depending on \(s,s_0,\mathbb {P},\mathcal {S},\mathbf {X}\)) such that

We say that \(\mathbb {P}\) is critical for \(\mathcal {S}\) (with respect to \(\mathbf {X}\)) if \(\mathbb {P}\) has logarithmic growth order  , with the optimal compression rate

, with the optimal compression rate  as defined in Eq. (1.1).

as defined in Eq. (1.1).

Remark

-

(1)

If \(\mathbb {P}\) has growth order \(s_0\), then \(\mathbb {P}\) also has growth order \(\sigma \), for arbitrary \(\sigma > s_0\).

-

(2)

Instead of Property (2.1), one could equivalently only require that the measure of balls centered at points of \(\mathcal {S}\) decays rapidly. More precisely, (2.1) is valid for certain \(\varepsilon _0,c > 0\) (depending on \(s,s_0,\mathbb {P},\mathcal {S},\mathbf {X}\)) if and only if there exist \(\varepsilon _1,\omega > 0\) (depending on \(s,s_0,\mathbb {P},\mathcal {S},\mathbf {X}\)) satisfying

$$\begin{aligned} \mathbb {P}\bigl (\mathcal {S}\cap {{\,\mathrm{Ball}\,}}(\mathbf {x}, \varepsilon ; \mathbf {X})\bigr ) \le 2^{- \omega \cdot \varepsilon ^{-1/s}} \qquad \forall \, \mathbf {x}\in \mathcal {S}\text { and } \varepsilon \in (0, \varepsilon _1) . \end{aligned}$$(2.2)Indeed, if (2.1) holds, then so does (2.2) (with \(\omega = c\) and \(\varepsilon _1 = \varepsilon _0\)). Conversely, suppose (2.2) holds for certain \(\varepsilon _1, \omega > 0\). Set \(c := \omega / 2^{1/s} > 0\) and \(\varepsilon _0 := \varepsilon _1 / 2\) and let \(\varepsilon \in (0,\varepsilon _0)\) and \(\mathbf {x}\in \mathbf {X}\) be arbitrary. First, if \(\mathcal {S}\cap {{\,\mathrm{Ball}\,}}(\mathbf {x},\varepsilon ;\mathbf {X}) = \emptyset \), then trivially \(\mathbb {P}\bigl (\mathcal {S}\cap {{\,\mathrm{Ball}\,}}(\mathbf {x},\varepsilon ;\mathbf {X})\bigr ) = 0 \le 2^{-c \cdot \varepsilon ^{-1/s}}\). Otherwise, there exists \(\mathbf {y}\in \mathcal {S}\cap {{\,\mathrm{Ball}\,}}(\mathbf {x},\varepsilon ;\mathbf {X})\) and then \({{\,\mathrm{Ball}\,}}(\mathbf {x},\varepsilon ;\mathbf {X}) \subset {{\,\mathrm{Ball}\,}}(\mathbf {y}, 2\varepsilon ; \mathbf {X})\) and \(2 \varepsilon < \varepsilon _1\). Hence, (2.2) shows

$$\begin{aligned} \mathbb {P}\big ( \mathcal {S}\cap {{\,\mathrm{Ball}\,}}(\mathbf {x},\varepsilon ;\mathbf {X}) \big ) \le \mathbb {P}\big ( \mathcal {S}\cap {{\,\mathrm{Ball}\,}}(\mathbf {y},2\varepsilon ;\mathbf {X}) \big ) \le 2^{-\omega \cdot (2\varepsilon )^{-1/s}} = 2^{-c \cdot \varepsilon ^{-1/s}} \end{aligned}$$by our choice of c. \(\blacktriangleleft \)

The motivation for considering the growth order of a measure is that it leads to bounds regarding the measure of elements \(\mathbf {x}\in \mathcal {S}\) that are well-approximated by a given codec; see Eq. (2.3) below. Furthermore, as we will see in Corollary 2, if \(\mathbb {P}\) is a probability measure of growth order \(s_0\), then necessarily  , so critical measures have the minimal possible growth order.

, so critical measures have the minimal possible growth order.

The following theorem summarizes our main structural results, showing that critical measures always exhibit a compressibility phase transition.

Theorem 4

Let the signal class \(\mathcal {S}\) be a subset of the Banach space \(\mathbf {X}\), let \(\mathbb {P}\) be a Borel probability measure on \(\mathcal {S}\) that is critical for \(\mathcal {S}\) with respect to \(\mathbf {X}\), and set  . Then, the following hold:

. Then, the following hold:

-

(i)

Let \(s > s^*\) and let \(c= c(s) > 0\) and \(\varepsilon _0 = \varepsilon _0(s)\) as in Eq. (2.1). Then, for any

, we have $$\begin{aligned} \mathrm {Pr}\big ( \Vert \mathbf {x}- D_R(E_R(\mathbf {x})) \Vert _{\mathbf {X}} \le \varepsilon \big ) \le 2^{R - c \cdot \varepsilon ^{-1/s}} \qquad \forall \, \varepsilon \in (0,\varepsilon _0) , \end{aligned}$$(2.3)

, we have $$\begin{aligned} \mathrm {Pr}\big ( \Vert \mathbf {x}- D_R(E_R(\mathbf {x})) \Vert _{\mathbf {X}} \le \varepsilon \big ) \le 2^{R - c \cdot \varepsilon ^{-1/s}} \qquad \forall \, \varepsilon \in (0,\varepsilon _0) , \end{aligned}$$(2.3)where we use the notation from Eq. (1.6).

-

(ii)

For every \(s > s^*\) and every codec

, the set

, the set  is a \(\mathbb {P}^*\)-null-set:

is a \(\mathbb {P}^*\)-null-set:

-

(iii)

For every \(0 \le s < {s^*}\), there exists a codec

with distortion

with distortion

for a constant \(C = C(s, \mathcal {C}) > 0\). In particular, the set of s-compressible signals

defined in Eq. (1.2) satisfies

defined in Eq. (1.2) satisfies  and hence

and hence  .

.

Remark

-

(1)

Note that the theorem does not make any statement about the case \(s = s^*\). In this case, the behavior depends on the specific choices of \(\mathcal {S}\) and \(\mathbb {P}\).

-

(2)

As noted above, the question of the existence of a critical probability measure \(\mathbb {P}\) is nontrivial. \(\blacktriangleleft \)

The proof of Theorem 4 is divided into several auxiliary results. Part (i) is contained in the following lemma.

Lemma 1

Let \(\mathcal {S}\ne \emptyset \) be a subset of a Banach space \(\mathbf {X}\), and let \(\mathbb {P}\) be a Borel probability measure on \(\mathcal {S}\) that is of logarithmic growth order \(s_0 \ge 0\) with respect to \(\mathbf {X}\).

Let \(s > s_0\) and let \(c= c(s) > 0\) and \(\varepsilon _0 = \varepsilon _0(s)\) as in Eq. (2.1). Then, for any \(R \in \mathbb {N}\) and  , we have

, we have

Further, for any given \(s > s_0\) and \(K > 0\) there exists a minimal code-length \(R_0 = R_0(s,s_0,K,\mathbb {P},\mathcal {S},\mathbf {X}) \in \mathbb {N}\) such that every  satisfies

satisfies

Remark

The lemma states that the measure of the subset of points \(\mathbf {x}\in \mathcal {S}\) with approximation error \(\mathcal{E}_R(\mathbf {x}) := \Vert \mathbf {x}- D_R(E_R(\mathbf {x}))\Vert _\mathbf {X}\) satisfying \(\mathcal{E}_R(\mathbf {x}) \le K \cdot R^{-s}\) for some \(s > s_0\) decreases exponentially with R. In fact, the proof shows that the approximation error is decreasing asymptotically superexponentially. \(\blacktriangleleft \)

Proof

Let \(s > s_0\) and let \(c, \varepsilon _0\) as in Eq. (2.1). For \(R \in \mathbb {N}\) and \(\varepsilon \in (0, \varepsilon _0)\), define \( A (R, \varepsilon ) := \lbrace \mathbf {x}\in \mathcal {S}:\Vert \mathbf {x}- D_R (E_R(\mathbf {x})) \Vert _{\mathbf {X}} \le \varepsilon \rbrace . \) By definition,

Since \(\mathbb {P}\) is of growth order \(s_0\) and because of \(|\mathrm {range}(D_R)| \le 2^R\), we can apply (2.1) and the definition of the outer measure \(\mathbb {P}^*\) (see Eq. (1.5)) to deduce

This proves the first part of the lemma.

To prove the second part, let \(s > s_0\), and choose \(\sigma = \frac{s + s_0}{2}\), noting that \(\sigma \in (s_0, s)\). Therefore, the first part of the lemma, applied with \(\sigma \) instead of s, yields \(c, \varepsilon _0 > 0\) such that \( \mathbb {P}^*(\{ \mathbf {x}\in \mathcal {S}:\Vert \mathbf {x}- D_R(E_R(\mathbf {x})) \Vert _{\mathbf {X}} \le \varepsilon \}) \le 2^{R - c \cdot \varepsilon ^{-1/\sigma }} \) for all \(R \in \mathbb {N}\) and \(\varepsilon \in (0, \varepsilon _0)\).

Note that \(\varepsilon := K \cdot R^{-s} \le \frac{\varepsilon _0}{2} < \varepsilon _0\) holds as soon as \(R \ge \big \lceil (2 K / \varepsilon _0)^{1/s} \, \big \rceil =: R_1\). Finally, since \(s / \sigma > 1\) we can find a code-length \(R_2 = R_2 (s,s_0,\varepsilon _0,K) \in \mathbb {N}\) such that

Overall, we thus see that (2.4) holds, with \(R_0 := \max \{ R_1, R_2 \}\). \(\square \)

Proposition 1

Let \(\mathcal {S}\ne \emptyset \) be a subset of the Banach space \(\mathbf {X}\). If \(\mathbb {P}\) is a Borel probability measure on \(\mathcal {S}\) that is of growth order \(s_0 \in [0,\infty )\), then, for every \(s > s_0\) and every codec  , we have

, we have

Proof

First, note that

where \( A^{(s)}_{K,R} := \lbrace \mathbf {x}\in \mathcal {S}:\Vert \mathbf {x}- D_R (E_R(\mathbf {x})) \Vert _{\mathbf {X}} \le K \cdot R^{-s} \rbrace . \)

By \(\sigma \)-subadditivity of \(\mathbb {P}^*\), it is thus enough to show \(\mathbb {P}^*\bigl (\bigcap _{R \in \mathbb {N}} A_{K,R}^{(s)}\bigr ) = 0\) for each \(K \in \mathbb {N}\). To see that this holds, note that Lemma 1 shows

This easily implies \(\mathbb {P}^* \bigl ( \bigcap _{R \in \mathbb {N}} A_{K,R}^{(s)}\bigr ) = 0\). \(\square \)

The proof of Theorem 4 merely consists of combining the preceding lemmas.

Proof of Theorem 4

Proof of (i): This is contained in the statement of Lemma 1.

Proof of (ii): This follows from Proposition 1.

Proof of (iii): This follows from the definition of the optimal compression rate: for \(0 \le s < s^*\) there exists a codec  such that

such that

for a constant \(C > 0\) and all \(\mathbf {x}\in \mathcal {S}\). In particular, this implies  , and therefore

, and therefore  . \(\square \)

. \(\square \)

We close this subsection by showing that if \(\mathbb {P}\) is a probability measure with logarithmic growth order \(s_0\), then this growth order is at least as large as the optimal compression rate of the set on which \(\mathbb {P}\) is defined. This justifies the nomenclature of “critical measures” as introduced in Definition 3.

Corollary 2

Let \(\mathcal {S}\ne \emptyset \) be a subset of \(\mathbf {X}\), and \(\mathbb {P}\) be a Borel probability measure on \(\mathcal {S}\) of growth order \(s_0 \ge 0\). Then,  , with

, with  as defined in Eq. (1.1).

as defined in Eq. (1.1).

Proof

Suppose for a contradiction that  , and let

, and let  . By definition of

. By definition of  , there exists a codec

, there exists a codec  such that

such that  . By Proposition 1, we thus obtain the desired contradiction

. By Proposition 1, we thus obtain the desired contradiction  . \(\square \)

. \(\square \)

2.2 Transferring Critical Measures

Our main goal in this paper is to prove a phase transition as in (1.4) for \(\mathcal {S}\) being the unit ball of suitable Besov- or Sobolev spaces. To do so, we will first prove (in Sect. 3) that such a phase-transition occurs for a certain class of sequence spaces and then, transfer this result to the Besov- and Sobolev spaces, essentially by discretizing these function spaces using suitable wavelet systems. In the present subsection, we formulate a general result that allows such a transfer from a phase transition as in (1.4) from one space to another.

The precise (very general, but slightly technical) transference result reads as follows:

Theorem 5

Let \(\mathbf {X}, \mathbf {Y}, \mathbf {Z}\) be Banach spaces, and let \(\mathcal {S}_\mathbf {X}\subset \mathbf {X}\), \(\mathcal {S}_\mathbf {Y}\subset \mathbf {Y}\), and \(\mathcal {S}\subset \mathbf {Z}\). Assume that

-

1.

;

; -

2.

there exists a Lipschitz continuous map \(\varPhi : \mathcal {S}_\mathbf {X}\subset \mathbf {X}\rightarrow \mathbf {Z}\) satisfying \(\varPhi (\mathcal {S}_{\mathbf {X}}) \supset \mathcal {S}\);

-

3.

there exists a Borel probability measure \(\mathbb {P}\) on \(\mathcal {S}_\mathbf {Y}\) that is critical for \(\mathcal {S}_{\mathbf {Y}}\) with respect to \(\mathbf {Y}\);

-

4.

there exists a (not necessarily surjective) measurable map \(\varPsi : \mathcal {S}_{\mathbf {Y}} \rightarrow \mathcal {S}\) that is expansive, meaning that there exists \(\kappa > 0\) satisfying

$$\begin{aligned} \Vert \varPsi (\mathbf {x}) - \varPsi (\mathbf {x}') \Vert _{\mathbf {Z}} \ge \kappa \cdot \Vert \mathbf {x}- \mathbf {x}' \Vert _{\mathbf {Y}} \qquad \forall \, \mathbf {x}, \mathbf {x}' \in \mathcal {S}_{\mathbf {Y}}. \end{aligned}$$

Then,  , and the push-forward measure \(\mathbb {P}\circ \varPsi ^{-1}\) is a Borel probability measure on \(\mathcal {S}\) that is critical for \(\mathcal {S}\) with respect to \(\mathbf {Z}\).

, and the push-forward measure \(\mathbb {P}\circ \varPsi ^{-1}\) is a Borel probability measure on \(\mathcal {S}\) that is critical for \(\mathcal {S}\) with respect to \(\mathbf {Z}\).

Geometric intuition behind Theorem 5. Top: An encoder/decoder pair \((E_R,D_R)\) with distortion at most \(\delta / (2L)\) corresponds to a covering of \(\mathcal {S}_{\mathbf {X}}\) by \(2^R\) balls of radius \(\delta / (2L)\), not necessarily centered inside \(\mathcal {S}_{\mathbf {X}}\) (red centers; also see Lemma 10). By doubling the radius, one can “move the centers inside \(\mathcal {S}_{\mathbf {X}}\).” Using the Lipschitz map \(\varPhi \) satisfying \(\varPhi (\mathcal {S}_{\mathbf {X}}) \supset \mathcal {S}\), this yields a covering of \(\mathcal {S}\) by \(2^R\) balls of radius \(\delta \), and hence, an encoder/decoder pair with distortion at most \(\delta \). This entails  . Bottom: Since \(\varPsi \) is expansive, the inverse image under \(\varPsi \) of a ball of radius \(\varepsilon \) is contained in a ball of radius \(2\varepsilon /\kappa \), ensuring that the push-forward measure \(\mathbb {P}\circ \varPsi ^{-1}\) is of logarithmic growth order

. Bottom: Since \(\varPsi \) is expansive, the inverse image under \(\varPsi \) of a ball of radius \(\varepsilon \) is contained in a ball of radius \(2\varepsilon /\kappa \), ensuring that the push-forward measure \(\mathbb {P}\circ \varPsi ^{-1}\) is of logarithmic growth order  on \(\mathcal {S}\), which implies

on \(\mathcal {S}\), which implies  ; see Corollary 2

; see Corollary 2

Remark

-

(1)

As mentioned in Sect. 1.5, regarding the measurability of \(\varPsi \), \(\mathcal {S}_{\mathbf {Y}}\) is equipped with the trace \(\sigma \)-algebra of the Borel \(\sigma \)-algebra on \(\mathbf {Y}\), and analogously for \(\mathcal {S}\).

-

(2)

In most practical applications of this theorem, one is given a Lipschitz continuous map \(\varPhi : \mathbf {X}\rightarrow \mathbf {Z}\) and a (not necessarily surjective) measurable, expansive map \(\varPsi : \mathbf {Y}\rightarrow \mathbf {Z}\) satisfying \(\varPhi (\mathcal {S}_{\mathbf {X}}) \supset \mathcal {S}\) and \(\varPsi (\mathcal {S}_{\mathbf {Y}}) \subset \mathcal {S}\). For greater generality, in the above theorem we only assume that \(\varPhi ,\varPsi \) are defined on \(\mathcal {S}_{\mathbf {X}}\) and \(\mathcal {S}_{\mathbf {Y}}\), respectively. \(\blacktriangleleft \)

Proof

The proof is given in Appendix A. \(\square \)

3 Proof of the Phase Transition in \(\ell ^2(\mathcal {I})\)

In this section, we provide the proof of the phase transition for a class of sequence spaces associated with Sobolev- and Besov spaces; these sequence spaces are defined in Sect. 3.1, where we also formulate the main result (Theorem 6) concerning the compressibility phase transition for these spaces. Section 3.2 establishes elementary embedding results for these spaces and provides a lower bound for their optimal compression rate; the latter essentially follows by adapting results by Leopold [20] to our setting. The construction of the critical probability measure for the sequence spaces is presented in Sect. 3.3, while the proof of Theorem 6 is given in Sect. 3.4.

3.1 Main Result

Definition 4

(d-regular partitions) Let \(\mathcal {I}\) be a countably infinite index set, and \(\mathscr {P}= (\mathcal {I}_m)_{m \in \mathbb {N}}\) be a partition of \(\mathcal {I}\); that is, \(\mathcal {I}= \biguplus _{m=1}^\infty \mathcal {I}_m\), where the union is disjoint. For \(d \in \mathbb {N}\), we call \(\mathscr {P}\) a d-regular partition, if there are \(0< a< A < \infty \) satisfying

Convention: We will always assume that \(\mathcal {I}\), \(\mathscr {P}\) and d have this meaning.

Associated with a d-regular partition, we now define the following family of weighted sequence spaces.

Definition 5

(Sequence spaces) Let \(p,q \in (0,\infty ]\) and \(\alpha , \theta \in \mathbb {R}\). For any sequence \({\mathbf {x}= (x_i)_{i \in \mathcal {I}} \in \mathbb {R}^{\mathcal {I}}}\), we define

The mixed-norm sequence space  is

is

For brevity, we also define  and

and

In the remainder of this section, we will prove the existence of a critical measure on each of the sets  , provided that \(\alpha > d \cdot (\frac{1}{2} - \frac{1}{p})_+\). In the proof, the (otherwise not really important) spaces

, provided that \(\alpha > d \cdot (\frac{1}{2} - \frac{1}{p})_+\). In the proof, the (otherwise not really important) spaces  will play an essential role. The main result of this section is thus the following theorem, the proof of which is given in Sect. 3.4 below.

will play an essential role. The main result of this section is thus the following theorem, the proof of which is given in Sect. 3.4 below.

Theorem 6

Let \(p,q \in (0,\infty ]\) and \(\alpha \in \mathbb {R}\), and assume that \(\alpha > d \cdot \big ( \frac{1}{2} - \frac{1}{p} \big )_+\). Then,  is compact and hence, Borel measurable, its optimal compression rate is given by

is compact and hence, Borel measurable, its optimal compression rate is given by  , and there exists a Borel probability measure \(\mathbb {P}_{\mathscr {P},\alpha }^{p,q}\) on

, and there exists a Borel probability measure \(\mathbb {P}_{\mathscr {P},\alpha }^{p,q}\) on  that is critical for

that is critical for  with respect to \(\ell ^2(\mathcal {I})\). In particular, the phase transition described in Theorem 4 holds.

with respect to \(\ell ^2(\mathcal {I})\). In particular, the phase transition described in Theorem 4 holds.

Remark

Explicitly, the proof shows for any \(s > s^*= \frac{\alpha }{d} - (\frac{1}{2} - \frac{1}{p})\) that

where \(\varepsilon _0 = \varepsilon _0^{(0)} \cdot (s-s^*)^{2/q} \cdot e^{-s \cdot (d+1)}\) and \(c = c^{(0)} \cdot 2^{-d} \cdot (s-s^*)^{2/(s q)}\) for constants \(\varepsilon _0^{(0)} = \varepsilon _0^{(0)} (p,q,a,A) > 0\) and \(c^{(0)} = c^{(0)}(s^*,p,q,a,A) > 0\) with a, A as in (3.1). This provides control on how fast \(c,\varepsilon _0\) deteriorate as \(s \downarrow s^*\) or \(d \rightarrow \infty \). These bounds are probably not optimal. \(\blacktriangleleft \)

3.2 Embedding Results and a Lower Bound for the Compression Rate

Having introduced the signal classes  , we now collect two technical ingredients needed to construct the measures

, we now collect two technical ingredients needed to construct the measures  on these sets: A lower bound for the optimal compression rate of

on these sets: A lower bound for the optimal compression rate of  (Proposition 2) and certain elementary embeddings between the spaces

(Proposition 2) and certain elementary embeddings between the spaces  for different choices of the parameters (Lemma 2).

for different choices of the parameters (Lemma 2).

Lemma 2

Let \(p,q,r \in (0,\infty ]\) and \(\alpha ,\theta ,\vartheta \in \mathbb {R}\). If \(q > r\) and \(\vartheta > \frac{1}{r} - \frac{1}{q}\), then  . More precisely, there exists a constant \(\kappa = \kappa (r,q,\vartheta ) \ge 1\) such that

. More precisely, there exists a constant \(\kappa = \kappa (r,q,\vartheta ) \ge 1\) such that  for all \(\mathbf {x}\in \mathbb {R}^{\mathcal {I}}\).

for all \(\mathbf {x}\in \mathbb {R}^{\mathcal {I}}\).

Proof

The claim follows by an elementary application of Hölder’s inequality; the details can be found in Appendix H. \(\square \)

The following result shows that the supremal compression rate of the class  identified in Theorem 6 is realized by a suitable codec—thus, the supremum in Eq. (1.1) is attained in this setting.

identified in Theorem 6 is realized by a suitable codec—thus, the supremum in Eq. (1.1) is attained in this setting.

Proposition 2

Let \(p,q \in (0,\infty ]\) and \(\alpha \in (0,\infty )\), and assume \({\alpha > d \cdot (\tfrac{1}{2} - \tfrac{1}{p})_+}\). Then, we have  , and the set

, and the set  is compact with

is compact with  .

.

Furthermore, there exists a codec  satisfying

satisfying

Proof

In essence, this an entropy estimate for sequence spaces; see [12]. Since the precise proof mainly consists in translating the results in [20] to our setting, it is deferred to Appendix B. \(\square \)

3.3 Construction of the Measure

We now come to the technical heart of this section—the construction of the measures  . We will provide different constructions for \(q = \infty \) and for \(q < \infty \): Since for \(q = \infty \) the class

. We will provide different constructions for \(q = \infty \) and for \(q < \infty \): Since for \(q = \infty \) the class  has a natural product structure (Lemma 3), we define the measure as a product measure (Definition 6). We then use the embedding result of Lemma 2 to transfer the measure on

has a natural product structure (Lemma 3), we define the measure as a product measure (Definition 6). We then use the embedding result of Lemma 2 to transfer the measure on  to the general signal classes

to the general signal classes  ; see Definition 7.

; see Definition 7.

We start with the elementary observation that the balls  can be written as infinite products of finite-dimensional balls.

can be written as infinite products of finite-dimensional balls.

Lemma 3

The balls of the mixed-norm sequence spaces  satisfy (up to canonical identifications) the factorization

satisfy (up to canonical identifications) the factorization

Proof

We identify \(\mathbf {x}\in \mathbb {R}^\mathcal {I}\) with  , as defined in Eq. (3.2). Set \(w_m := m^\theta \cdot 2^{\alpha m}\) for \(m \in \mathbb {N}\). The statement of the lemma then follows by recalling that

, as defined in Eq. (3.2). Set \(w_m := m^\theta \cdot 2^{\alpha m}\) for \(m \in \mathbb {N}\). The statement of the lemma then follows by recalling that

\(\square \)

With Lemma 3 in hand, we can readily define  as a product measure.

as a product measure.

Definition 6

(Measures for \(q=\infty \)) Let \(\mathscr {P}= (\mathcal {I}_m)_{m \in \mathbb {N}}\) be a d-regular partition of \(\mathcal {I}\). Let \(\mathscr {B}_{m}\) be the Borel \(\sigma \)-algebra on \(\mathbb {R}^{\mathcal {I}_m}\) and denote the Lebesgue measure on \((\mathbb {R}^{\mathcal {I}_m}, \mathscr {B}_{m})\) by \(\mu _{m}\).

For \(p \in (0,\infty ]\) and \(w_m > 0\) define the probability measure \(\mathbb {P}_{m}^{p,w_m}\) on \((\mathbb {R}^{\mathcal {I}_m}, \mathscr {B}_{m})\) by

Given \(p \in (0,\infty ]\) and \(\alpha ,\theta \in \mathbb {R}\) define \(w_m := m^\theta \cdot 2^{\alpha m}\), let \(\mathscr {B}_{\mathcal {I}}\) denote the product \(\sigma \)-algebra on \(\mathbb {R}^{\mathcal {I}}\) and define  as the product measure of the family \(\bigl (\mathbb {P}_{m}^{p, w_m}\bigr )_{m \in \mathbb {N}}\) (see, e.g., [11, Section 8.2]):

as the product measure of the family \(\bigl (\mathbb {P}_{m}^{p, w_m}\bigr )_{m \in \mathbb {N}}\) (see, e.g., [11, Section 8.2]):

With the help of the preceding results, we can now describe the construction of the measure  on

on  , also for \(q < \infty \). A crucial tool will be the embedding result from Lemma 2.

, also for \(q < \infty \). A crucial tool will be the embedding result from Lemma 2.

Definition 7

(Measures for \(q<\infty \)) Let the notation be as in Definition 6.

For given \(q \in (0,\infty ]\), choose (according to Lemma 2) a constant \(\kappa = \kappa (q) \ge 1\) (with \(\kappa = 1\) if \(q = \infty \)) such that  for all \(\mathbf {x}\in \mathbb {R}^{\mathcal {I}}\), and define

for all \(\mathbf {x}\in \mathbb {R}^{\mathcal {I}}\), and define

In the following, we verify that the measures defined according to Definitions 6 and 7 are indeed (Borel) probability measures on the signal classes  and

and  , respectively. To do so, we first show that the signal classes are measurable with respect to the product \(\sigma \)-algebra \(\mathscr {B}_{\mathcal {I}}\), and we compare this \(\sigma \)-algebra to the Borel \(\sigma \)-algebra on \(\ell ^2(\mathcal {I})\).

, respectively. To do so, we first show that the signal classes are measurable with respect to the product \(\sigma \)-algebra \(\mathscr {B}_{\mathcal {I}}\), and we compare this \(\sigma \)-algebra to the Borel \(\sigma \)-algebra on \(\ell ^2(\mathcal {I})\).

Lemma 4

Let \(\mathscr {B}_{\mathcal {I}}\) denote the product \(\sigma \)-algebra on \(\mathbb {R}^{\mathcal {I}}\) and let \(p,q \in (0,\infty ]\) and \({\alpha , \theta \in \mathbb {R}}\). Then, the (quasi)-norm  is measurable with respect to \(\mathscr {B}_{\mathcal {I}}\). In particular,

is measurable with respect to \(\mathscr {B}_{\mathcal {I}}\). In particular,  .

.

Further, the Borel \(\sigma \)-algebra \(\mathscr {B}_{\ell ^2}\) on \(\ell ^2(\mathcal {I})\) coincides with the trace \(\sigma \)-algebra \({\ell ^2(\mathcal {I}) \Cap \mathscr {B}_{\mathcal {I}}}\).

Proof

The (mainly technical) proof is deferred to Appendix H. \(\square \)

Lemma 5

-

(a)

The measure

is a probability measure on the measurable space

is a probability measure on the measurable space  .

. -

(b)

If \(\alpha > d \cdot (\frac{1}{2} - \frac{1}{p})_+\), then

, and the measure

, and the measure  is a probability measure on

is a probability measure on  , where \(\mathscr {B}_{\ell ^2}\) denotes the Borel \(\sigma \)-algebra on \(\ell ^2(\mathcal {I})\).

, where \(\mathscr {B}_{\ell ^2}\) denotes the Borel \(\sigma \)-algebra on \(\ell ^2(\mathcal {I})\).

Proof

For the first part, Lemma 4 implies that  , so that

, so that  is a measure on

is a measure on  . Furthermore, Lemma 3 and Definition 6 show

. Furthermore, Lemma 3 and Definition 6 show  .

.

For the second part, recall from Proposition 2 that  , so that Lemma 4 implies

, so that Lemma 4 implies  , which easily implies that

, which easily implies that  is a measure on

is a measure on  . Finally, observe that

. Finally, observe that  by choice of \(\kappa \) in Definition 7, and hence

by choice of \(\kappa \) in Definition 7, and hence

\(\square \)

3.4 Proof of Theorem 6

In this subsection, we prove that the measures  constructed in Definition 7 are critical, provided that \(\alpha > d \cdot (\frac{1}{2} - \frac{1}{p})_+\). An essential ingredient for the proof is the following estimate for the volumes of balls in \(\ell ^p ([m])\).

constructed in Definition 7 are critical, provided that \(\alpha > d \cdot (\frac{1}{2} - \frac{1}{p})_+\). An essential ingredient for the proof is the following estimate for the volumes of balls in \(\ell ^p ([m])\).

Lemma 6

Let \(m \in \mathbb {N}\) and \(p \in (0,\infty ]\). The m-dimensional Lebesgue measure of \({{\,\mathrm{Ball}\,}}(0,1;\ell ^p ([m]))\) is

For every \(p \in (0,\infty ]\), there exist constants \(c_p \in (0,1]\) and \(C_p \in [1,\infty )\), such that

Proof

A proof of (3.6) can be found, e.g., in [18, Theorem 5].

For proving (3.7), we use that in [18, Lemma 4] it is shown that for each \(p \in (0,\infty )\) there are constants \(\eta _p, \omega _p > 0\) satisfying

It is clear that this remains true also for \(p = \infty \); in fact, since \(\varGamma (1) = 1\), one can simply choose \(\eta _\infty = \omega _\infty = 1\) in this case.

By (3.6), we see that

and the estimate (3.8) implies

Hence, we can choose  and

and  . \(\square \)

. \(\square \)

We are finally equipped to prove Theorem 6.

Proof of Theorem 6

Step 1: We show for \(s^*:= \frac{\alpha }{d} - (\frac{1}{2} - \frac{1}{p})\) and arbitrary \(\theta \in [0,\infty )\) that the measure  has growth order \(s^*\) with respect to \(\ell ^2(\mathcal {I})\).

has growth order \(s^*\) with respect to \(\ell ^2(\mathcal {I})\).

To this end, let \(s > s^*\) be arbitrary, and let \(\varepsilon \in (0, \varepsilon _0)\) (for a suitable \(\varepsilon _0 > 0\) to be chosen below), and \(\mathbf {x}\in \ell ^2(\mathcal {I})\). We estimate the measure  by estimating the measure of certain finite-dimensional projections of the ball, exploiting the product structure of the measure: Recall the identification \(\mathbf {x}= (\mathbf {x}_m)_{m \in \mathbb {N}}\), where \(\mathbf {x}_m = \mathbf {x}|_{\mathcal {I}_m}\). Set \(w_m := m^\theta \cdot 2^{\alpha m}\) for \(m \in \mathbb {N}\), as in Definition 6. For arbitrary \(m \in \mathbb {N}\), we have

by estimating the measure of certain finite-dimensional projections of the ball, exploiting the product structure of the measure: Recall the identification \(\mathbf {x}= (\mathbf {x}_m)_{m \in \mathbb {N}}\), where \(\mathbf {x}_m = \mathbf {x}|_{\mathcal {I}_m}\). Set \(w_m := m^\theta \cdot 2^{\alpha m}\) for \(m \in \mathbb {N}\), as in Definition 6. For arbitrary \(m \in \mathbb {N}\), we have

Using the product structure of  (cf. Eq. (3.5)) and the constant \(C_p \ge 1\) from Lemma 6, we thus see for each \(m \in \mathbb {N}\) that

(cf. Eq. (3.5)) and the constant \(C_p \ge 1\) from Lemma 6, we thus see for each \(m \in \mathbb {N}\) that

From (3.1), we see that \(n_m = 2^{d m} \, \eta _m\) for a certain \(\eta _m \in [a,A]\) and hence  . Furthermore, a straightforward curve discussion of the function

. Furthermore, a straightforward curve discussion of the function  for \(\delta = (s - s^*) \cdot \ln 2\) shows that \(x^\theta \le \bigl (\frac{\theta }{e \cdot \ln 2 \cdot (s - s^*)}\bigr )^\theta \cdot 2^{x (s-s^*)}\) for all \(x > 0\), with the convention \(0^0 = 1\). Combining these observations, we see for \(K_1^{(1)} := (\theta / (e \ln 2))^\theta \) that

for \(\delta = (s - s^*) \cdot \ln 2\) shows that \(x^\theta \le \bigl (\frac{\theta }{e \cdot \ln 2 \cdot (s - s^*)}\bigr )^\theta \cdot 2^{x (s-s^*)}\) for all \(x > 0\), with the convention \(0^0 = 1\). Combining these observations, we see for \(K_1^{(1)} := (\theta / (e \ln 2))^\theta \) that

where \(K_1 = K_1(\theta ,p,a,A) \ge 1\).

For \(K_2^{(0)} := C_p K_1 \ge 1\) and \(K_2 := K_2^{(0)} / (s - s^*)^{\theta }\), we thus see that \(K_2^{(0)} = K_2^{(0)}(\theta ,p,a,A)\) and

A good candidate for an upper bound for  is associated with a positive integer close to

is associated with a positive integer close to

Choose a positive \(\varepsilon _0 > 0\) so small that \({\widetilde{m}}(\varepsilon ) > 1\) for all \(\varepsilon \in (0, \varepsilon _0)\). An easy calculation shows that one can choose \(\varepsilon _0 = 1/(K_2 \cdot e^{s(d+1)}) = \varepsilon _0^{(0)} \cdot (s-s^*)^\theta \cdot e^{-s(d+1)}\), where \(\varepsilon _0^{(0)} = \varepsilon _0^{(0)}(\theta ,p,a,A) > 0\).

Set \(m_0 := \lfloor \widetilde{m}(\varepsilon ) \rfloor \in \mathbb {N}\). Note that \(2^{d s \cdot \widetilde{m}(\varepsilon )} = e^{- s} / (K_2 \cdot \varepsilon )\), and hence \(K_2 \, \varepsilon \, 2^{d s \cdot m_0} \le e^{- s } < 1\). For the exponent in (3.9), observe that

where \(K_3 = K_3^{(0)} \cdot 2^{-d} \cdot (s-s^*)^{\theta /s}\) and \(K_3^{(0)} = K_3^{(0)}(s^*,\theta ,p,a,A) > 0\) is given by \(K_3^{(0)} = a \big / \bigl (e \cdot (K_2^{(0)})^{1/s^*}\bigr )\). Now, (3.9) can be estimated further, yielding

for \(K_4 := s^*\cdot K_3\), so that \(K_4 = K_4^{(0)} \cdot 2^{-d} \cdot (s-s^*)^{\theta /s}\) for a suitable constant \(K_4^{(0)} = K_4^{(0)}(s^*,\theta ,p,a,A) > 0\). Since \(s > s^*\) was arbitrary, this shows that  is of logarithmic growth order \(s^*\); see Definition 3.

is of logarithmic growth order \(s^*\); see Definition 3.

Step 2: We show that  is of growth order \(s^*\) with respect to \(\ell ^2(\mathcal {I})\) on

is of growth order \(s^*\) with respect to \(\ell ^2(\mathcal {I})\) on  .

.

To see this, let \(s > s^*\) be arbitrary and choose (by virtue of Step 1) \(\varepsilon _0, c > 0\) such that  for all \(\mathbf {x}\in \ell ^2(\mathcal {I})\) and \(\varepsilon \in (0, \varepsilon _0)\). From the explicit formulas given in Step 2, we see that \(\varepsilon _0 = \varepsilon _0^{(0)} \cdot (s-s^*)^{2/q} \cdot e^{-s(d+1)}\) for \(\varepsilon _0^{(0)} = \varepsilon _0^{(0)}(p,q,a,A) > 0\), and furthermore that \(c = c^{(0)} \cdot 2^{-d} \cdot (s-s^*)^{2/(s q)}\) for \(c^{(0)} = c^{(0)}(s^*,p,q,a,A) > 0\). Recall from Definition 7 that

for all \(\mathbf {x}\in \ell ^2(\mathcal {I})\) and \(\varepsilon \in (0, \varepsilon _0)\). From the explicit formulas given in Step 2, we see that \(\varepsilon _0 = \varepsilon _0^{(0)} \cdot (s-s^*)^{2/q} \cdot e^{-s(d+1)}\) for \(\varepsilon _0^{(0)} = \varepsilon _0^{(0)}(p,q,a,A) > 0\), and furthermore that \(c = c^{(0)} \cdot 2^{-d} \cdot (s-s^*)^{2/(s q)}\) for \(c^{(0)} = c^{(0)}(s^*,p,q,a,A) > 0\). Recall from Definition 7 that  for a suitable \(\kappa = \kappa (q) \ge 1\). Define \(\varepsilon _0' := \varepsilon _0 / \kappa \) and \(c' := c \cdot \kappa ^{-1/s^*}\).

for a suitable \(\kappa = \kappa (q) \ge 1\). Define \(\varepsilon _0' := \varepsilon _0 / \kappa \) and \(c' := c \cdot \kappa ^{-1/s^*}\).

Now, if \(\varepsilon \in (0,\varepsilon _0')\), then \(\kappa \varepsilon \in (0,\varepsilon _0)\) and hence

where the last step used that \(\kappa \ge 1\) and \(s > s^*\). Overall, we have shown that  is of growth order \(s^*\) with respect to \(\ell ^2(\mathcal {I})\).

is of growth order \(s^*\) with respect to \(\ell ^2(\mathcal {I})\).