Abstract

Semantic information in the human brain is organized into multiple networks, but the fine-grain relationships between them are poorly understood. In this study, we compared semantic maps obtained from two functional magnetic resonance imaging experiments in the same participants: one that used silent movies as stimuli and another that used narrative stories. Movies evoked activity from a network of modality-specific, semantically selective areas in visual cortex. Stories evoked activity from another network of semantically selective areas immediately anterior to visual cortex. Remarkably, the pattern of semantic selectivity in these two distinct networks corresponded along the boundary of visual cortex: for visual categories represented posterior to the boundary, the same categories were represented linguistically on the anterior side. These results suggest that these two networks are smoothly joined to form one contiguous map.

Data availability

Data are available on Box (https://berkeley.box.com/s/l95gie5xtv56zocsgugmb7fs12nujpog) and at https://gallantlab.org/. All data have been shared except anatomical brain images, which were withheld out of concern that they could violate participant privacy. In their place, we have provided matrices that map from volumetric data to cortical flat maps for visualization purposes.

Code availability

Custom code used for cortical surface-based analyses is available at https://github.com/gallantlab/vl_interface.

Acknowledgements

We thank J. Nguyen for assistance transcribing and aligning story stimuli and B. Griffin and M.-L. Kieseler for segmenting and flattening cortical surfaces. Funding: This work was supported by grants from the National Science Foundation (NSF) (IIS1208203), the National Eye Institute (EY019684 and EY022454) and the Center for Science of Information, an NSF Science and Technology Center, under grant agreement CCF-0939370. S.F.P. was also supported by the William Orr Dingwall Neurolinguistics Fellowship. A.G.H. was also supported by the William Orr Dingwall Neurolinguistics Fellowship and the Burroughs-Wellcome Fund Career Award at the Scientific Interface.

Author information

Contributions

S.F.P., A.G.H. and J.L.G. conceptualized the experiment. A.G.H., N.Y.B. and F.D. collected the data. S.F.P., A.G.H., N.Y.B., J.S.G. and A.O.N.-E. contributed to analysis. S.F.P., A.G.H. and J.L.G. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Additional information

Peer review information: Nature Neuroscience thanks Christopher Baldassano and Johan Carlin for their contribution to the peer review of this work.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

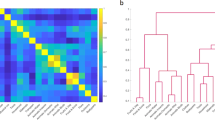

Extended Data Fig. 1 Evaluation of the visual and linguistic semantic models.

Model weights are estimated on the training dataset and then used to predict brain activity in a held-out dataset. Prediction performance is the correlation between actual and predicted brain activity for each voxel. These performance values are presented simultaneously using a two-dimensional colormap on the flattened cortex around the occipital pole for each subject. Red voxels are locations where the visual semantic model performs well, blue voxels are locations where the linguistic semantic model performs well, and white voxels are locations where both models perform equally well. These maps show where the visual and linguistic networks of the brain abut each other.
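The evaluation described in this caption can be illustrated with a minimal sketch. It assumes plain ridge regression as the encoding model, which is a stand-in and not necessarily the regularization the authors used, and all function names (`fit_ridge`, `voxelwise_correlation`, `two_model_color`) are hypothetical. The color mapping is one simple way to build a red/blue/white two-dimensional colormap and may differ from the one used in the figure.

```python
import numpy as np

def fit_ridge(X_train, Y_train, alpha=1.0):
    """Closed-form ridge regression (assumed model, not the authors' exact one).

    X_train: (n_timepoints, n_features) stimulus features.
    Y_train: (n_timepoints, n_voxels) measured responses.
    Returns weights of shape (n_features, n_voxels).
    """
    n_features = X_train.shape[1]
    gram = X_train.T @ X_train + alpha * np.eye(n_features)
    return np.linalg.solve(gram, X_train.T @ Y_train)

def voxelwise_correlation(Y_true, Y_pred):
    """Pearson correlation between actual and predicted responses, per voxel."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    denom = np.linalg.norm(yt, axis=0) * np.linalg.norm(yp, axis=0)
    # Voxels with zero variance get a correlation of 0 instead of NaN.
    return (yt * yp).sum(axis=0) / np.where(denom == 0, np.inf, denom)

def two_model_color(r_visual, r_linguistic):
    """Map two performance values to RGB (hypothetical colormap).

    Red = visual model only, blue = linguistic model only,
    white = both models at full strength, black = neither.
    """
    v = np.clip(r_visual, 0.0, 1.0)
    l = np.clip(r_linguistic, 0.0, 1.0)
    return np.stack([v, np.minimum(v, l), l], axis=-1)

# Synthetic demonstration: 5 features, 3 voxels, low noise.
rng = np.random.default_rng(0)
true_weights = rng.standard_normal((5, 3))
X_train = rng.standard_normal((200, 5))
X_test = rng.standard_normal((50, 5))
Y_train = X_train @ true_weights + 0.1 * rng.standard_normal((200, 3))
Y_test = X_test @ true_weights + 0.1 * rng.standard_normal((50, 3))

weights = fit_ridge(X_train, Y_train, alpha=1.0)
performance = voxelwise_correlation(Y_test, X_test @ weights)
print(performance)  # one held-out correlation per voxel
```

Fitting on the training set and scoring only on held-out data keeps the performance estimate from being inflated by overfitting, which is the point of the split the caption describes.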

Extended Data Fig. 2 Visual and linguistic representations of place concepts.

Identical analysis to Fig. 2a, but for the other 10 subjects. The color of each voxel indicates the representation of place-related information according to the legend at the right. The model weights for vision and language are shown in red and blue, respectively. White borders indicate ROIs found in separate localizer experiments. Three relevant place ROIs are labeled: PPA, OPA, and RSC. A modality shift gradient is centered on each ROI, running from visual semantic categories (red) on the posterior side to linguistic semantic categories (blue) on the anterior side.

Extended Data Fig. 3 Visual and linguistic representations of body part concepts.

Identical analysis to Fig. 2b, but for the other 10 subjects. The color of each voxel indicates the representation of body-related information according to the legend at the right. The model weights for vision and language are shown in red and blue, respectively. White borders indicate ROIs found in separate localizer experiments. The relevant body ROI is labeled: EBA. A modality shift gradient is centered on each ROI, running from visual semantic categories (red) on the posterior side to linguistic semantic categories (blue) on the anterior side.

Extended Data Fig. 4 Visual and linguistic representations of face concepts.

Identical analysis to Fig. 2c, but for the other 10 subjects. The color of each voxel indicates the representation of face-related information according to the legend at the right. The model weights for vision and language are shown in red and blue, respectively. White borders indicate ROIs found in separate localizer experiments. The relevant face ROI is labeled: FFA. A modality shift gradient is centered on each ROI, running from visual semantic categories (red) on the posterior side to linguistic semantic categories (blue) on the anterior side.

Extended Data Fig. 5 Analysis region around the boundary of the occipital lobe.

The thin yellow line indicates the estimated border of the occipital lobe in each individual subject. The border was manually drawn to follow the parieto-occipital sulcus and connect to the preoccipital notch on both ends. The analyzed area of the brain was limited to vertices within 50 mm of this border and is shown in black on each individual’s brain.
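As a rough illustration of restricting the analysis to vertices near a hand-drawn border, the sketch below selects every vertex whose nearest border point lies within 50 mm. The caption does not state how distance was measured, so straight-line (Euclidean) distance is used here purely as an assumption; distance along the cortical surface would give a different region. The function name and the array layout are hypothetical.

```python
import numpy as np

def vertices_near_border(vertex_xyz, border_xyz, max_dist_mm=50.0):
    """Boolean mask of vertices within max_dist_mm of any border point.

    vertex_xyz: (n_vertices, 3) vertex coordinates in mm.
    border_xyz: (n_border_points, 3) points sampled along the border, in mm.
    Uses Euclidean distance (an assumption; not confirmed by the source).
    """
    # Pairwise differences: (n_vertices, n_border_points, 3).
    diffs = vertex_xyz[:, None, :] - border_xyz[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)
    # Keep a vertex if its closest border point is near enough.
    return dists.min(axis=1) <= max_dist_mm

# Toy example: a short border segment and three candidate vertices.
border = np.array([[0.0, 0.0, 0.0], [0.0, 10.0, 0.0]])
vertices = np.array([
    [30.0, 5.0, 0.0],   # close to the border
    [80.0, 5.0, 0.0],   # too far away
    [0.0, 40.0, 0.0],   # 30 mm past the end of the segment
])
mask = vertices_near_border(vertices, border)
print(mask)
```

The pairwise array grows with the product of vertex and border point counts, so for a full cortical mesh it may be preferable to process vertices in chunks or use a spatial index.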

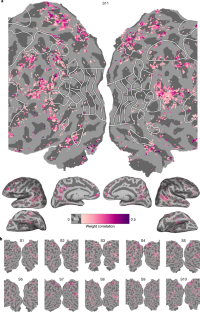

Extended Data Fig. 6 Locations of category-specific modality shifts across cortex for alternate parameter set 1.

Identical analysis to Fig. 4, but with an ROI size of 10 × 25 mm. Shown here is the flattened cortex around the occipital pole for one typical subject, along with inflated hemispheres. The modality shift metric calculated at each location near the boundary of the occipital lobe is plotted as an arrow. The arrow color represents the magnitude of the shift. The arrow is directed to show the shift from vision to language. Only locations where the modality shift is statistically significant are shown. Areas of fMRI signal dropout are indicated with hash marks. There are strong modality shifts in a clear ring around visual cortex in the same locations seen in Fig. 4.

Extended Data Fig. 7 Locations of category-specific modality shifts across cortex for alternate parameter set 2.

Identical analysis to Fig. 4, but with an ROI size of 10 × 10 mm. Shown here is the flattened cortex around the occipital pole for one typical subject, along with inflated hemispheres. The modality shift metric calculated at each location near the boundary of the occipital lobe is plotted as an arrow. The arrow color represents the magnitude of the shift. The arrow is directed to show the shift from vision to language. Only locations where the modality shift is statistically significant are shown. Areas of fMRI signal dropout are indicated with hash marks. There are strong modality shifts in a ring around visual cortex in the same locations seen in Fig. 4, though the pattern is noisier because of the shortened analysis windows.

Supplementary information

Supplementary Information

Supplementary Table 1

About this article

Cite this article

Popham, S.F., Huth, A.G., Bilenko, N.Y. et al. Visual and linguistic semantic representations are aligned at the border of human visual cortex. Nat Neurosci 24, 1628–1636 (2021). https://doi.org/10.1038/s41593-021-00921-6

DOI: https://doi.org/10.1038/s41593-021-00921-6
