Abstract

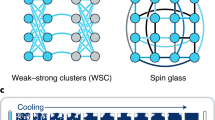

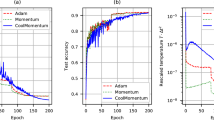

Many important challenges in science and technology can be cast as optimization problems. When viewed in a statistical physics framework, these can be tackled by simulated annealing, where a gradual cooling procedure helps search for ground-state solutions of a target Hamiltonian. Although powerful, simulated annealing is known to have prohibitively slow sampling dynamics when the optimization landscape is rough or glassy. Here we show that, by generalizing the target distribution with a parameterized model, an analogous annealing framework based on the variational principle can be used to search for ground-state solutions. Modern autoregressive models such as recurrent neural networks provide ideal parameterizations because they can be sampled exactly without slow dynamics, even when the model encodes a rough landscape. We implement this procedure in the classical and quantum settings on several prototypical spin glass Hamiltonians and find that, on average, it substantially outperforms traditional simulated annealing in the asymptotic limit, illustrating the potential power of this yet unexplored route to optimization.
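To make the variational idea in the abstract concrete, the following is a minimal sketch and not the authors' RNN implementation. It assumes a toy first-order autoregressive model on an open random ±J Ising chain, a score-function gradient estimate of the variational free energy F = ⟨E⟩ + T⟨log p⟩, plain gradient descent, and a linear temperature schedule. All function names and hyperparameters are hypothetical and chosen only for illustration.

```python
import itertools
import math
import random


def energy(spins, couplings):
    """Energy of an open Ising chain: E = -sum_i J_i * s_i * s_{i+1}."""
    return -sum(J * a * b for J, a, b in zip(couplings, spins, spins[1:]))


def brute_force_ground_energy(couplings):
    """Exact ground-state energy by enumeration (only feasible for tiny chains)."""
    n = len(couplings) + 1
    return min(energy(c, couplings) for c in itertools.product([-1, 1], repeat=n))


def conditional_up(params, i, prev):
    """P(s_i = +1 | s_{i-1} = prev); prev is 0 for the first spin."""
    a, b = params
    p = 0.5 * (1.0 + math.tanh(0.5 * (a[i] + b[i] * prev)))  # overflow-safe sigmoid
    return min(max(p, 1e-9), 1.0 - 1e-9)  # clamp so that log(p) stays finite


def log_prob(spins, params):
    """Exact log-probability of a configuration under the autoregressive model."""
    logp, prev = 0.0, 0
    for i, s in enumerate(spins):
        p = conditional_up(params, i, prev)
        logp += math.log(p if s == 1 else 1.0 - p)
        prev = s
    return logp


def sample(params, rng):
    """Ancestral sampling: each spin is drawn exactly, with no Markov chain."""
    spins, prev = [], 0
    for i in range(len(params[0])):
        s = 1 if rng.random() < conditional_up(params, i, prev) else -1
        spins.append(s)
        prev = s
    return spins


def grad_log_prob(spins, params):
    """Gradient of log p(spins) with respect to the biases a and the weights b."""
    ga, gb, prev = [], [], 0
    for i, s in enumerate(spins):
        d = (1.0 if s == 1 else 0.0) - conditional_up(params, i, prev)
        ga.append(d)
        gb.append(d * prev)
        prev = s
    return ga, gb


def variational_anneal(couplings, t0=2.0, steps=300, batch=64, lr=0.1, seed=0):
    """Minimize F = <E> + T <log p> while T is lowered linearly toward zero."""
    rng = random.Random(seed)
    n = len(couplings) + 1
    params = ([0.0] * n, [0.0] * n)
    best = float("inf")
    for step in range(steps):
        temp = t0 * (1.0 - step / steps)
        samples = [sample(params, rng) for _ in range(batch)]
        costs = [energy(s, couplings) + temp * log_prob(s, params) for s in samples]
        baseline = sum(costs) / batch  # variance-reduction baseline
        grad_a, grad_b = [0.0] * n, [0.0] * n
        for s, c in zip(samples, costs):
            ga, gb = grad_log_prob(s, params)
            for i in range(n):
                grad_a[i] += (c - baseline) * ga[i] / batch
                grad_b[i] += (c - baseline) * gb[i] / batch
        for i in range(n):
            params[0][i] -= lr * grad_a[i]
            params[1][i] -= lr * grad_b[i]
        best = min(best, min(energy(s, couplings) for s in samples))
    return params, best
```

Calling `variational_anneal(couplings)` on a short list of ±1 couplings returns the trained parameters and the lowest sampled energy, which can be compared against `brute_force_ground_energy(couplings)`. The entropy term weighted by the temperature plays the role of thermal fluctuations in simulated annealing, while sampling stays exact at every step.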

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

The SA code and the SQA code are publicly available at https://github.com/therooler/piqmc (ref. 52). Our variational neural annealing implementation with RNNs is publicly available at https://github.com/VectorInstitute/VariationalNeuralAnnealing (ref. 53). The hyperparameters we use are provided in Supplementary Appendix D.

References

Lucas, A. Ising formulations of many NP problems. Front. Phys. 2, 5 (2014).

Barahona, F. On the computational complexity of Ising spin glass models. J. Phys. A Math. Gen. 15, 3241–3253 (1982).

Kirkpatrick, S., Gelatt, C. D. & Vecchi, M. P. Optimization by simulated annealing. Science 220, 671–680 (1983).

Koulamas, C., Antony, S. & Jaen, R. A survey of simulated annealing applications to operations research problems. Omega 22, 41–56 (1994).

Hajek, B. A tutorial survey of theory and applications of simulated annealing. In 1985 24th IEEE Conference on Decision and Control 755–760 (IEEE, 1985).

Svergun, D. Restoring low resolution structure of biological macromolecules from solution scattering using simulated annealing. Biophys. J. 76, 2879–2886 (1999).

Johnson, D. S., Aragon, C. R., McGeoch, L. A. & Schevon, C. Optimization by simulated annealing: an experimental evaluation; Part II, graph coloring and number partitioning. Oper. Res. 39, 378–406 (1991).

Abido, M. A. Robust design of multimachine power system stabilizers using simulated annealing. IEEE Trans. Energy Convers. 15, 297–304 (2000).

Karzig, T., Rahmani, A., von Oppen, F. & Refael, G. Optimal control of Majorana zero modes. Phys. Rev. B 91, 201404 (2015).

Gielen, G., Walscharts, H. & Sansen, W. Analog circuit design optimization based on symbolic simulation and simulated annealing. In Proc. 15th European Solid-State Circuits Conference (ESSCIRC ’89) 252–255 (1989).

Santoro, G. E., Martoňák, R., Tosatti, E. & Car, R. Theory of quantum annealing of an Ising spin glass. Science 295, 2427–2430 (2002).

Brooke, J., Bitko, D., Rosenbaum, T. F. & Aeppli, G. Quantum annealing of a disordered magnet. Science 284, 779–781 (1999).

Mitra, D., Romeo, F. & Sangiovanni-Vincentelli, A. Convergence and finite-time behavior of simulated annealing. Adv. Appl. Probab. 18, 747–771 (1986).

Delahaye, D., Chaimatanan, S. & Mongeau, M. in Handbook of Heuristics (eds Gendreau, M. & Potvin, J. Y.) 1–35 (Springer, 2019); https://doi.org/10.1007/978-3-319-91086-4_1

Sutskever, I., Martens, J. & Hinton, G. Generating text with recurrent neural networks. In Proc. 28th International Conference on Machine Learning (ICML ’11) 1017–1024 (Omnipress, 2011).

Larochelle, H. & Murray, I. The neural autoregressive distribution estimator. In Proc. Fourteenth International Conference on Artificial Intelligence and Statistics, Proceedings of Machine Learning Research Vol. 15 (eds Gordon, G. et al.) 29–37 (JMLR, 2011); http://proceedings.mlr.press/v15/larochelle11a.html

Vaswani, A. et al. Attention is all you need. Preprint at https://arxiv.org/pdf/1706.03762.pdf (2017).

Wu, D., Wang, L. & Zhang, P. Solving statistical mechanics using variational autoregressive networks. Phys. Rev. Lett. 122, 080602 (2019).

Sharir, O., Levine, Y., Wies, N., Carleo, G. & Shashua, A. Deep autoregressive models for the efficient variational simulation of many-body quantum systems. Phys. Rev. Lett. 124, 020503 (2020).

Hibat-Allah, M., Ganahl, M., Hayward, L. E., Melko, R. G. & Carrasquilla, J. Recurrent neural network wave functions. Phys. Rev. Res. 2, 023358 (2020).

Roth, C. Iterative retraining of quantum spin models using recurrent neural networks. Preprint at https://arxiv.org/pdf/2003.06228.pdf (2020).

Feynman, R. Statistical Mechanics: a Set of Lectures (Avalon, 1998).

Long, P. M. & Servedio, R. A. Restricted Boltzmann machines are hard to approximately evaluate or simulate. In Proc. 27th International Conference on Machine Learning (ICML ’10) 703–710 (Omnipress, 2010).

Boixo, S. et al. Evidence for quantum annealing with more than one hundred qubits. Nat. Phys. 10, 218–224 (2014).

Kadowaki, T. & Nishimori, H. Quantum annealing in the transverse Ising model. Phys. Rev. E 58, 5355–5363 (1998).

Born, M. & Fock, V. Beweis des Adiabatensatzes. Z. Phys. 51, 165–180 (1928).

Mbeng, G. B., Privitera, L., Arceci, L. & Santoro, G. E. Dynamics of simulated quantum annealing in random Ising chains. Phys. Rev. B 99, 064201 (2019).

Zanca, T. & Santoro, G. E. Quantum annealing speedup over simulated annealing on random Ising chains. Phys. Rev. B 93, 224431 (2016).

Spin Glass Server (Univ. Cologne); https://software.cs.uni-koeln.de/spinglass/

Dickson, N. G. et al. Thermally assisted quantum annealing of a 16-qubit problem. Nat. Commun. 4, 1903 (2013).

Gomes, J., McKiernan, K. A., Eastman, P. & Pande, V. S. Classical quantum optimization with neural network quantum states. Preprint at https://arxiv.org/pdf/1910.10675.pdf (2019).

Sinchenko, S. & Bazhanov, D. The deep learning and statistical physics applications to the problems of combinatorial optimization. Preprint at https://arxiv.org/pdf/1911.10680.pdf (2019).

Zhao, T., Carleo, G., Stokes, J. & Veerapaneni, S. Natural evolution strategies and quantum approximate optimization. Preprint at https://arxiv.org/pdf/2005.04447.pdf (2020).

Martoňák, R., Santoro, G. E. & Tosatti, E. Quantum annealing by the path-integral Monte Carlo method: the two-dimensional random Ising model. Phys. Rev. B 66, 094203 (2002).

Mézard, M., Parisi, G. & Virasoro, M. Spin Glass Theory and Beyond (World Scientific, 1986); https://www.worldscientific.com/doi/pdf/10.1142/0271

Sherrington, D. & Kirkpatrick, S. Solvable model of a spin-glass. Phys. Rev. Lett. 35, 1792–1796 (1975).

Hamze, F., Raymond, J., Pattison, C. A., Biswas, K. & Katzgraber, H. G. Wishart planted ensemble: a tunably rugged pairwise Ising model with a first-order phase transition. Phys. Rev. E 101, 052102 (2020).

Mills, K., Ronagh, P. & Tamblyn, I. Controlled online optimization learning (COOL): finding the ground state of spin Hamiltonians with reinforcement learning. Preprint at https://arxiv.org/pdf/2003.00011.pdf (2020).

Bengio, Y., Lodi, A. & Prouvost, A. Machine learning for combinatorial optimization: a methodological tour d'horizon. Eur. J. Oper. Res. 290, 405–421 (2020).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press, 2016); http://www.deeplearningbook.org

Kelley, R. Sequence modeling with recurrent tensor networks (2016); https://openreview.net/forum?id=ROVmGqlgmhvnM0J1IpNq

Chang, S. et al. Dilated recurrent neural networks. Preprint at https://arxiv.org/pdf/1710.02224.pdf (2017).

Bengio, Y., Simard, P. & Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 5, 157–166 (1994).

Hihi, S. E. & Bengio, Y. Hierarchical recurrent neural networks for long-term dependencies. In Advances in Neural Information Processing Systems 8 (eds Touretzky, D. S. et al.) 493–499 (MIT Press, 1996); http://papers.nips.cc/paper/1102-hierarchical-recurrent-neural-networks-for-long-term-dependencies.pdf

Vidal, G. Class of quantum many-body states that can be efficiently simulated. Phys. Rev. Lett. 101, 110501 (2008).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. Preprint at https://arxiv.org/pdf/1412.6980.pdf (2014).

Bravyi, S., DiVincenzo, D. P., Oliveira, R. & Terhal, B. M. The complexity of stoquastic local Hamiltonian problems. Quantum Inf. Comput. 8, 361–385 (2008).

Ozfidan, I. et al. Demonstration of a nonstoquastic Hamiltonian in coupled superconducting flux qubits. Phys. Rev. Appl. 13, 034037 (2020).

Mohamed, S., Rosca, M., Figurnov, M. & Mnih, A. Monte Carlo gradient estimation in machine learning. Preprint at https://arxiv.org/pdf/1906.10652.pdf (2019).

Zhang, S.-X., Wan, Z.-Q. & Yao, H. Automatic differentiable Monte Carlo: theory and application. Preprint at https://arxiv.org/pdf/1911.09117.pdf (2019).

Norris, N. The standard errors of the geometric and harmonic means and their application to index numbers. Ann. Math. Stat. 11, 445–448 (1940).

Simulated Classical and Quantum Annealing (GitHub, 2021); https://github.com/therooler/piqmc

Variational Neural Annealing (GitHub, 2021); https://github.com/VectorInstitute/VariationalNeuralAnnealing

Acknowledgements

We thank J. Raymond for suggesting the Wishart planted ensemble as a benchmark for our variational annealing set-up and for a careful reading of the manuscript. We also thank C. Roth, C. Zhou, M. Ganahl, S. Pilati and G. Santoro for fruitful discussions. We are also grateful to L. Hayward for providing the plotting code used to produce our figures with the Matplotlib library. Our RNN implementation is based on TensorFlow and NumPy. We acknowledge support from the Natural Sciences and Engineering Research Council (NSERC), a Canada Research Chair, the Shared Hierarchical Academic Research Computing Network (SHARCNET), Compute Canada, a Google Quantum Research Award and the Canadian Institute for Advanced Research (CIFAR) AI chair programme. Resources used in preparing this research were provided, in part, by the Province of Ontario, the Government of Canada through CIFAR, and companies sponsoring the Vector Institute (www.vectorinstitute.ai/#partners). Research at Perimeter Institute is supported in part by the Government of Canada through the Department of Innovation, Science and Economic Development Canada and by the Province of Ontario through the Ministry of Economic Development, Job Creation and Trade.

Author information

Contributions

M.H., E.M.I. and J.C. conceived and designed the research. M.H., E.M.I. and R.W. performed the numerical experiments. All authors contributed to the analysis of the results and writing of the manuscript.

Ethics declarations

Competing interests

Given the broad applicability of our strategies, we disclose that we have filed a United States provisional patent application protecting our discoveries (patent application no. 63/123,917).

Additional information

Peer review information: Nature Machine Intelligence thanks Titus Neupert and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary discussion. Figs. 1–4 and Tables 1 and 2.

About this article

Cite this article

Hibat-Allah, M., Inack, E.M., Wiersema, R. et al. Variational neural annealing. Nat Mach Intell 3, 952–961 (2021). https://doi.org/10.1038/s42256-021-00401-3

DOI: https://doi.org/10.1038/s42256-021-00401-3

This article is cited by

- Quantum approximate optimization via learning-based adaptive optimization. Communications Physics (2024)

- Variational Monte Carlo with large patched transformers. Communications Physics (2024)

- Enhancing combinatorial optimization with classical and quantum generative models. Nature Communications (2024)

- Learning nonequilibrium statistical mechanics and dynamical phase transitions. Nature Communications (2024)

- Language models for quantum simulation. Nature Computational Science (2024)