Abstract

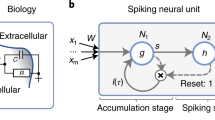

Inspired by detailed modelling of biological neurons, spiking neural networks (SNNs) are investigated as biologically plausible and high-performance models of neural computation. The sparse and binary communication between spiking neurons potentially enables powerful and energy-efficient neural networks. The performance of SNNs, however, has lagged behind that of artificial neural networks. Here we demonstrate how an activity-regularizing surrogate gradient combined with recurrent networks of tunable and adaptive spiking neurons yields the state of the art for SNNs on challenging benchmarks in the time domain, such as speech and gesture recognition. This performance also exceeds that of standard classical recurrent neural networks and approaches that of the best modern artificial neural networks. As these SNNs exhibit sparse spiking, we show that they are theoretically one to three orders of magnitude more computationally efficient than recurrent neural networks with similar performance. Together, this positions SNNs as an attractive solution for AI hardware implementations.


Data availability

The data analysed during this study are open source and publicly available. The streaming ECG dataset is derived from the original QTDB dataset (https://physionet.org/content/qtdb/1.0.0/). The spiking datasets (SHD and SSC) belong to the Spiking Heidelberg Datasets, which are available at https://zenkelab.org/resources/spiking-heidelberg-datasets-shd/. The MNIST dataset can be downloaded from http://yann.lecun.com/exdb/mnist/. The Soli dataset can be downloaded from https://polybox.ethz.ch/index.php/s/wG93iTUdvRU8EaT. The TIMIT Acoustic-Phonetic Continuous Speech Corpus is available on request via https://doi.org/10.35111/17gk-bn40. Further information can be found in our repository (see the Code availability section). Source data are provided with this paper.

Code availability

The code used in the study is publicly available from the GitHub repository (https://github.com/byin-cwi/Efficient-spiking-networks).

References

Hassabis, D., Kumaran, D., Summerfield, C. & Botvinick, M. Neuroscience-inspired artificial intelligence. Neuron 95, 245–258 (2017).

Maass, W. Networks of spiking neurons: the third generation of neural network models. Neural Netw. 10, 1659–1671 (1997).

Gerstner, W., Kempter, R., Van Hemmen, J. L. & Wagner, H. A neuronal learning rule for sub-millisecond temporal coding. Nature 383, 76–78 (1996).

Davies, M. et al. Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018).

Bohte, S. M., Kok, J. N. & La Poutré, J. A. SpikeProp: backpropagation for networks of spiking neurons. In European Symposium on Artificial Neural Networks (ESANN) Vol. 48, 17–37 (ESANN, 2000).

Shrestha, S. B. & Orchard, G. SLAYER: spike layer error reassignment in time. In Advances in Neural Information Processing Systems Vol. 31, 1412–1421 (NeurIPS, 2018).

Zenke, F. & Ganguli, S. SuperSpike: supervised learning in multilayer spiking neural networks. Neural Comput. 30, 1514–1541 (2018).

Kheradpisheh, S. R., Ganjtabesh, M., Thorpe, S. J. & Masquelier, T. STDP-based spiking deep convolutional neural networks for object recognition. Neural Netw. 99, 56–67 (2018).

Falez, P., Tirilly, P., Bilasco, I. M., Devienne, P. & Boulet, P. Multi-layered spiking neural network with target timestamp threshold adaptation and STDP. In International Joint Conference on Neural Networks (IJCNN) 1–8 (IEEE, 2019).

Neftci, E. O., Mostafa, H. & Zenke, F. Surrogate gradient learning in spiking neural networks. IEEE Signal Process. Mag. 36, 51–63 (2019).

Wunderlich, T. C. & Pehle, C. Event-based backpropagation can compute exact gradients for spiking neural networks. Sci. Rep. 11, 12829 (2021).

Bellec, G. et al. A solution to the learning dilemma for recurrent networks of spiking neurons. Nat. Commun. 11, 1–15 (2020).

Sengupta, A., Ye, Y., Wang, R., Liu, C. & Roy, K. Going deeper in spiking neural networks: VGG and residual architectures. Front. Neurosci. 13, 95 (2019).

Roy, K., Jaiswal, A. & Panda, P. Towards spike-based machine intelligence with neuromorphic computing. Nature 575, 607–617 (2019).

Yin, B., Corradi, F. & Bohté, S. M. Effective and efficient computation with multiple-timescale spiking recurrent neural networks. In International Conference on Neuromorphic Systems 2020 1–8 (ACM, 2020).

Werbos, P. J. Backpropagation through time: what it does and how to do it. Proc. IEEE 78, 1550–1560 (1990).

Elfwing, S., Uchibe, E. & Doya, K. Sigmoid-weighted linear units for neural network function approximation in reinforcement learning. Neural Netw. 107, 3–11 (2018).

Elfwing, S., Uchibe, E. & Doya, K. Expected energy-based restricted Boltzmann machine for classification. Neural Netw. 64, 29–38 (2015).

Gerstner, W. & Kistler, W. M. Spiking Neuron Models: Single Neurons, Populations, Plasticity (Cambridge Univ. Press, 2002).

Izhikevich, E. M. Simple model of spiking neurons. IEEE Trans. Neural Netw. 14, 1569–1572 (2003).

Bellec, G., Salaj, D., Subramoney, A., Legenstein, R. & Maass, W. Long short-term memory and learning-to-learn in networks of spiking neurons. In Advances in Neural Information Processing Systems 787–797 (NeurIPS, 2018).

Bohte, S. M. Error-backpropagation in networks of fractionally predictive spiking neurons. In International Conference on Artificial Neural Networks (ICANN) 60–68 (Springer, 2011).

Wong, A., Famouri, M., Pavlova, M. & Surana, S. TinySpeech: attention condensers for deep speech recognition neural networks on edge devices. Preprint at https://arxiv.org/abs/2008.04245 (2020).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9, 1735–1780 (1997).

Horowitz, M. 1.1 Computing’s energy problem (and what we can do about it). In 2014 IEEE International Solid-State Circuits Conference Digest of Technical Papers (ISSCC) 10–14 (IEEE, 2014).

Ludgate, P. E. On a proposed analytical machine. In The Origins of Digital Computers 73–87 (Springer, 1982).

Shewalkar, A., Nyavanandi, D. & Ludwig, S. A. Performance evaluation of deep neural networks applied to speech recognition: RNN, LSTM and GRU. J. Artif. Intell. Soft Comput. Res. 9, 235–245 (2019).

Laguna, P., Mark, R. G., Goldberg, A. & Moody, G. B. A database for evaluation of algorithms for measurement of QT and other waveform intervals in the ECG. In Computers in Cardiology 1997 673–676 (IEEE, 1997).

Cramer, B., Stradmann, Y., Schemmel, J. & Zenke, F. The Heidelberg spiking data sets for the systematic evaluation of spiking neural networks. IEEE Trans. Neural Netw. Learn. Syst. 1–14 (2020); https://doi.org/10.1109/TNNLS.2020.3044364

Wang, S., Song, J., Lien, J., Poupyrev, I. & Hilliges, O. Interacting with Soli: exploring fine-grained dynamic gesture recognition in the radio-frequency spectrum. In Proc. 29th Annual Symposium on User Interface Software and Technology 851–860 (ACM, 2016).

Warden, P. Speech commands: a dataset for limited-vocabulary speech recognition. Preprint at https://arxiv.org/abs/1804.03209 (2018).

Garofolo, J. S. TIMIT Acoustic Phonetic Continuous Speech Corpus (Linguistic Data Consortium, 1993).

Pellegrini, T., Zimmer, R. & Masquelier, T. Low-activity supervised convolutional spiking neural networks applied to speech commands recognition. In 2021 IEEE Spoken Language Technology Workshop (SLT) 97–103 (IEEE, 2021).

Kundu, S., Datta, G., Pedram, M. & Beerel, P. A. Spike-thrift: Towards energy-efficient deep spiking neural networks by limiting spiking activity via attention-guided compression. In Proc. IEEE/CVF Winter Conference on Applications of Computer Vision 3953–3962 (IEEE, 2021).

Fang, W. et al. Incorporating learnable membrane time constant to enhance learning of spiking neural networks. Preprint at https://arxiv.org/abs/2007.05785 (2020).

Amir, A. et al. A low power, fully event-based gesture recognition system. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 7243–7252 (IEEE, 2017).

Paszke, A. et al. PyTorch: an imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems Vol. 32, 8024–8035 (NeurIPS, 2019).

Zenke, F. et al. Visualizing a joint future of neuroscience and neuromorphic engineering. Neuron 109, 571–575 (2021).

Zenke, F. & Neftci, E. O. Brain-inspired learning on neuromorphic substrates. Proc. IEEE 109, 1–16 (2021).

Keijser, J. & Sprekeler, H. Interneuron diversity is required for compartment-specific feedback inhibition. Preprint at https://doi.org/10.1101/2020.11.17.386920 (2020).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. In 3rd International Conference on Learning Representations (DBLP, 2015).

Lichtsteiner, P., Posch, C. & Delbruck, T. A 128 × 128 120 dB 15 μs latency asynchronous temporal contrast vision sensor. IEEE J. Solid-State Circuits 43, 566–576 (2008).

McFee, B. et al. librosa: audio and music signal analysis in Python. In Proc. 14th Python in Science Conference Vol. 8, 18–25 (SciPy, 2015).

Li, S., Li, W., Cook, C., Zhu, C. & Gao, Y. Independently recurrent neural network (IndRNN): building a longer and deeper RNN. In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 5457–5466 (IEEE, 2018).

Arjovsky, M., Shah, A. & Bengio, Y. Unitary evolution recurrent neural networks. In International Conference on Machine Learning 1120–1128 (ACM, 2016).

Zenke, F. & Vogels, T. P. The remarkable robustness of surrogate gradient learning for instilling complex function in spiking neural networks. Neural Comput. 0, 1–27 (2021).

Perez-Nieves, N., Leung, V. C., Dragotti, P. L. & Goodman, D. F. Neural heterogeneity promotes robust learning. Preprint at https://www.biorxiv.org/content/10.1101/2020.12.18.423468v2.full (2021).

de Andrade, D. C., Leo, S., Viana, M. L. D. S. & Bernkopf, C. A neural attention model for speech command recognition. Preprint at https://arxiv.org/abs/1808.08929 (2018).

Graves, A. & Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 18, 602–610 (2005).

Hunger, R. Floating Point Operations in Matrix-Vector Calculus (Munich Univ. Technology, 2005).

Acknowledgements

B.Y. is funded by the NWO-TTW Programme ‘Efficient Deep Learning’ (EDL) P16-25. We gratefully acknowledge the support from the organizers of the Capo Caccia Neuromorphic Cognition 2019 workshop and Neurotech CSA, as well as J. Wu and S. S. Magraner for helpful discussions.

Author information


Contributions

B.Y., F.C. and S.B. conceived the experiments; B.Y. conducted the experiments; B.Y., F.C. and S.B. analysed the results. All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Machine Intelligence thanks Thomas Nowotny and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Effects of different time-constant initialization schemes on network training and performance on the Soli dataset.

a, Training accuracy. b, Training loss. c, Mean firing rate of the network. MGconstant denotes the network in which τ is initialized to a single value; in MGuniform, the time constants are initialized from a uniform distribution around the single value used in MGconstant; in MGstd5, they are drawn from a normal distribution with a standard deviation of 5.0 centred on the same value.
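The three initialization schemes can be sketched as follows. This is a minimal illustration, not the authors' code: the centre value `tau0`, the uniform half-width `halfwidth` and the function names are hypothetical assumptions; only the standard deviation of 5.0 for MGstd5 comes from the caption. The decay factor exp(−dt/τ) is the usual discretization of a leaky integrator and is likewise assumed here.

```python
import math
import random


def init_time_constants(n, scheme, tau0=20.0, halfwidth=5.0, std=5.0, seed=0):
    """Draw n initial time constants under one of three schemes.

    "constant" ~ MGconstant: every neuron starts at tau0.
    "uniform"  ~ MGuniform: uniform on [tau0 - halfwidth, tau0 + halfwidth].
    "normal"   ~ MGstd5: normal with mean tau0 and standard deviation std (5.0).
    tau0 and halfwidth are illustrative assumptions, not values from the paper.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    if scheme == "constant":
        taus = [tau0] * n
    elif scheme == "uniform":
        taus = [rng.uniform(tau0 - halfwidth, tau0 + halfwidth) for _ in range(n)]
    elif scheme == "normal":
        taus = [rng.gauss(tau0, std) for _ in range(n)]
    else:
        raise ValueError(f"unknown scheme: {scheme!r}")
    # A normal draw can in principle be non-positive, which is not a valid
    # time constant, so clamp every value to a small positive floor.
    return [max(tau, 1e-3) for tau in taus]


def decay_factors(taus, dt=1.0):
    """Per-neuron decay exp(-dt / tau) for a discrete-time leaky update (assumed)."""
    return [math.exp(-dt / tau) for tau in taus]


for scheme in ("constant", "uniform", "normal"):
    alphas = decay_factors(init_time_constants(128, scheme))
    print(scheme, round(min(alphas), 3), round(max(alphas), 3))
```

Heterogeneous schemes give each neuron its own decay factor from the start, whereas MGconstant starts every neuron with the same one.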

Extended Data Fig. 2 SI-panel.

a, Bidirectional SRNN architecture. b, Computational cost of different layers for regular RNNs and GRU units. The computational complexity calculation follows ref. 50.
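A simplified per-time-step operation count illustrates why sparse binary spikes reduce cost. This sketch is an assumption-laden approximation, not the exact accounting of panel b: it uses the rule that an m×n matrix-vector product costs mn multiplications and m(n−1) additions, treats a GRU as three RNN-sized gate pre-activations (ignoring elementwise gating and nonlinearities), and assumes a hypothetical constant `state_mults_per_neuron` for the spiking neuron's state update. The layer sizes (700 inputs, the SHD channel count, and 128 hidden units) and the firing rates are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class OpCount:
    mults: float  # multiplications per time step
    adds: float   # additions per time step (fractional when scaled by firing rates)


def dense_rnn_ops(n_in, n_h):
    """Vanilla RNN step h_t = f(W x_t + U h_{t-1} + b).

    An m-by-n matrix-vector product costs m*n multiplications and
    m*(n - 1) additions; the nonlinearity f is not counted.
    """
    mults = n_h * n_in + n_h * n_h
    adds = n_h * (n_in - 1) + n_h * (n_h - 1) + 2 * n_h  # two products, their sum, the bias
    return OpCount(mults, adds)


def gru_ops(n_in, n_h):
    """GRU step approximated as three RNN-sized gate pre-activations
    (update, reset, candidate); elementwise gating products are ignored."""
    one = dense_rnn_ops(n_in, n_h)
    return OpCount(3 * one.mults, 3 * one.adds)


def spiking_rnn_ops(n_in, n_h, rate_in, rate_h, state_mults_per_neuron=2):
    """Spiking recurrent step with binary spikes.

    A weight column is accumulated only when its presynaptic neuron spikes,
    so the matrix products reduce to additions scaled by the firing rates.
    state_mults_per_neuron (e.g. membrane and threshold decay) is assumed.
    """
    adds = n_h * (rate_in * n_in + rate_h * n_h)
    mults = state_mults_per_neuron * n_h
    return OpCount(mults, adds)


rnn = dense_rnn_ops(700, 128)
snn = spiking_rnn_ops(700, 128, rate_in=0.05, rate_h=0.05)
print(rnn, gru_ops(700, 128), snn)
```

Under these assumptions, the dense layer needs about 1.06 × 10^5 multiplications per step, while the spiking layer needs a few hundred multiplications and about 5.3 × 10^3 additions, which is the kind of gap that sparse firing opens up.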

Extended Data Fig. 3 Variants of Multi-Gaussian gradient.

As illustrated, we remove either the left (MG-R) or the right (MG-L) negative part of the Multi-Gaussian gradient for comparison, leaving only the positive central Gaussian on the ablated side.
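A sketch of the Multi-Gaussian surrogate and its two ablations may make the figure easier to read. Equation (1) of the main text is not reproduced in this section, so the functional form below is an assumption: a central positive Gaussian of width σ, scaled by (1 + h), flanked by two wide negative Gaussians of width sσ and height h centred at ±σ. The default values of σ, h and s are illustrative. In surrogate-gradient training, such a function replaces the ill-defined derivative of the spike (step) function in the backward pass.

```python
import math


def gaussian(u, mu, sigma):
    """Normal density N(u; mu, sigma^2)."""
    return math.exp(-((u - mu) ** 2) / (2.0 * sigma ** 2)) / (math.sqrt(2.0 * math.pi) * sigma)


def multi_gaussian(u, sigma=0.5, h=0.15, s=6.0, variant="MG"):
    """Surrogate for the derivative of the spike function at u,
    where u is the membrane potential minus the firing threshold.

    Assumed form: (1 + h) N(u; 0, sigma^2)
                  - h N(u; -sigma, (s*sigma)^2)   # left negative lobe
                  - h N(u; +sigma, (s*sigma)^2)   # right negative lobe
    "MG-R" removes the left lobe and "MG-L" removes the right lobe; the
    central positive Gaussian is kept unchanged in both ablations.
    """
    if variant not in ("MG", "MG-L", "MG-R"):
        raise ValueError(f"unknown variant: {variant!r}")
    grad = (1.0 + h) * gaussian(u, 0.0, sigma)
    if variant in ("MG", "MG-L"):  # keep the left negative lobe
        grad -= h * gaussian(u, -sigma, s * sigma)
    if variant in ("MG", "MG-R"):  # keep the right negative lobe
        grad -= h * gaussian(u, sigma, s * sigma)
    return grad


for u in (-2.0, 0.0, 2.0):
    print(u, [round(multi_gaussian(u, variant=v), 4) for v in ("MG", "MG-L", "MG-R")])
```

Far from threshold, the full MG surrogate is slightly negative on both sides; each ablation keeps that negative tail on one side only.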

Extended Data Fig. 4 Study of different forms of gradients on ECG-LIF.

a,b, Results of the Multi-Gaussian negative-gradient ablations on the ECG-LIF task as the σ of the central (positive) Gaussian, defined in equation (1), is varied: test accuracy (a) and sparsity (b). Across these values of σ, the standard Multi-Gaussian again outperforms the ablated variants in both accuracy and sparsity.

Extended Data Fig. 5 Grid search over the h and s parameters of the multi-Gaussian surrogate gradient on the Soli dataset and SHD.

In the grid search, we computed the performance of each parameter pair by averaging the test accuracy and firing rate over three-fold cross-validation. The white dashed line delineates the upper-left region containing models with high accuracy (>0.91) in (a) and high firing rate (>0.09) in (b). The red lines in (c) approximately separate regions with accuracy above and below 0.87, and the white curve in (d) approximately separates models with an average firing rate above and below 0.1.
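The averaging procedure described above can be sketched as a generic grid search. The callback `evaluate(h, s, fold)` is hypothetical: it stands in for training on the other folds and testing on `fold`, returning test accuracy and mean firing rate. The selection rule `accurate_and_sparse` (keep pairs above an accuracy threshold, then prefer lower firing rates) is an illustrative assumption, not the authors' criterion, and `toy_evaluate` returns placeholder numbers, not results.

```python
from itertools import product
from statistics import mean


def grid_search(evaluate, h_values, s_values, n_folds=3):
    """Average test accuracy and firing rate over n_folds for every (h, s) pair."""
    results = {}
    for h, s in product(h_values, s_values):
        scores = [evaluate(h, s, fold) for fold in range(n_folds)]
        accuracies = [acc for acc, _ in scores]
        rates = [rate for _, rate in scores]
        results[(h, s)] = (mean(accuracies), mean(rates))
    return results


def accurate_and_sparse(results, min_accuracy=0.91):
    """Keep pairs whose mean accuracy exceeds min_accuracy, sorted so the
    sparsest (lowest mean firing rate) pair comes first."""
    keep = [(pair, acc, rate) for pair, (acc, rate) in results.items() if acc > min_accuracy]
    return sorted(keep, key=lambda item: item[2])


def toy_evaluate(h, s, fold):
    # Placeholder standing in for a real train/test run on one fold.
    return 0.85 + h, 0.02 * s


print(accurate_and_sparse(grid_search(toy_evaluate, [0.05, 0.15], [2.0, 6.0])))
```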

Supplementary information

Supplementary Information (Table 1)

Source data

Source Data Fig. 1

Fig. 3 Source Data.


About this article

Cite this article

Yin, B., Corradi, F. & Bohté, S.M. Accurate and efficient time-domain classification with adaptive spiking recurrent neural networks. Nat Mach Intell 3, 905–913 (2021). https://doi.org/10.1038/s42256-021-00397-w


DOI: https://doi.org/10.1038/s42256-021-00397-w

This article is cited by

- Spike frequency adaptation: bridging neural models and neuromorphic applications. Communications Engineering (2024)

- Temporal dendritic heterogeneity incorporated with spiking neural networks for learning multi-timescale dynamics. Nature Communications (2024)

- In AI, is bigger always better? Nature (2023)

- Sparse-firing regularization methods for spiking neural networks with time-to-first-spike coding. Scientific Reports (2023)

- Accurate online training of dynamical spiking neural networks through Forward Propagation Through Time. Nature Machine Intelligence (2023)