Abstract

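We consider the simplices

\[K_n^A:=\{x\in {\mathbb {R}}^{n+1}:x_1\ge x_2\ge \cdots \ge x_{n+1},\;x_1-x_{n+1}\le 1,\;x_1+\cdots +x_{n+1}=0\}\]

and

\[K_n^B:=\{x\in {\mathbb {R}}^n:1\ge x_1\ge x_2\ge \cdots \ge x_n\ge 0\},\]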

which are called the Schläfli orthoschemes of types A and B, respectively. We describe the tangent cones at their j-faces and compute explicitly the sums of the conic intrinsic volumes of these tangent cones at all j-faces of \(K_n^A\) and \(K_n^B\). This setting contains sums of external and internal angles of \(K_n^A\) and \(K_n^B\) as special cases. The sums are evaluated in terms of Stirling numbers of both kinds. We generalize these results to finite products of Schläfli orthoschemes of type A and B and, as a probabilistic consequence, derive formulas for the expected number of j-faces of the Minkowski sums of the convex hulls of a finite number of Gaussian random walks and random bridges. Furthermore, we evaluate the analogous angle sums for the tangent cones of Weyl chambers of types A and B and finite products thereof.

1 Introduction

The Schläfli orthoscheme of type B in \({\mathbb {R}}^n\), denoted by \(K_n^B\), is the simplex spanned by the \(n+1\) vertices

\[(0,0,\ldots ,0),\;(1,0,\ldots ,0),\;(1,1,0,\ldots ,0),\;\ldots ,\;(1,1,\ldots ,1)\]

or, equivalently,

\[K_n^B=\{x\in {\mathbb {R}}^n:1\ge x_1\ge x_2\ge \cdots \ge x_n\ge 0\}.\]

The classical intrinsic volumes of \(K_n^B\) were computed by Gao and Vitale [13] in order to evaluate the intrinsic volumes of the so-called Brownian motion body. The Schläfli orthoscheme of type A in \({\mathbb {R}}^{n+1}\), denoted by \(K_n^A\), was studied by Gao [12] in the context of Brownian bridges and is defined as the simplex spanned by the vertices \(P_0,\ldots ,P_{n+1}\), where \(P_0:=(0,\ldots ,0)\) and

for \(i=1,\ldots ,n+1\). Equivalently, the Schläfli orthoscheme \(K_n^A\) can be expressed as

\[K_n^A=\{x\in {\mathbb {R}}^{n+1}:x_1\ge x_2\ge \cdots \ge x_{n+1},\;x_1-x_{n+1}\le 1,\;x_1+\cdots +x_{n+1}=0\}.\]

We will present further details on the probabilistic meaning of Schläfli orthoschemes in Sect. 3.1.

In the present paper, we evaluate certain angle sums or, more generally, sums of conic intrinsic volumes, of the Schläfli orthoschemes. For a polytope P, let \({\mathcal {F}}_j(P)\) denote the set of all j-dimensional faces of P. The tangent cone of P at its j-dimensional face F is the convex cone \(T_F(P)\) defined by

\[T_F(P):=\{x\in {\mathbb {R}}^d:v+\varepsilon x\in P\text { for some }\varepsilon >0\},\]

where v is any point in F not belonging to a face of smaller dimension. We explicitly compute the conic intrinsic volumes of the tangent cones of the Schläfli orthoschemes at their j-dimensional faces and, in particular, the sum of the conic intrinsic volumes over all such faces. The k-th conic intrinsic volume of a convex cone C, denoted by \(\upsilon _k(C)\), is a spherical or conic analogue of the usual intrinsic volume of a convex set and will be formally introduced in Sect. 2.2. Among other results, we will show that

\[\sum _{F\in {\mathcal {F}}_j(K_n^B)}\upsilon _k(T_F(K_n^B))=\sum _{F\in {\mathcal {F}}_j(K_n^A)}\upsilon _k(T_F(K_n^A))=\frac{j!}{n!}\genfrac[]{0.0pt}{}{n+1}{k+1}\genfrac\{\}{0.0pt}{}{k+1}{j+1},\tag{1.1}\]

where the numbers \(\genfrac[]{0.0pt}{}{n}{k}\) and \(\genfrac\{\}{0.0pt}{}{n}{k}\) are the Stirling numbers of the first and second kind, respectively.

Furthermore, we will compute the analogous angle (and conic intrinsic volume) sums for the tangent cones of Weyl chambers of type A and B which are convex cones in \({\mathbb {R}}^n\) defined by

\[A^{(n)}:=\{x\in {\mathbb {R}}^n:x_1\ge x_2\ge \cdots \ge x_n\},\qquad B^{(n)}:=\{x\in {\mathbb {R}}^n:x_1\ge x_2\ge \cdots \ge x_n\ge 0\}.\]

The corresponding formulas are given by

\[\sum _{F\in {\mathcal {F}}_j(B^{(n)})}\upsilon _k(T_F(B^{(n)}))=\frac{2^jj!}{2^nn!}B[n,k]B\{k,j\},\qquad \sum _{F\in {\mathcal {F}}_j(A^{(n)})}\upsilon _k(T_F(A^{(n)}))=\frac{j!}{n!}\genfrac[]{0.0pt}{}{n}{k}\genfrac\{\}{0.0pt}{}{k}{j},\tag{1.2}\]

where the numbers B[n, k] and \(B\{n,k\}\) denote the B-analogues of the Stirling numbers of the first and second kind, respectively, which we will formally introduce in Sect. 2.3. An application of (1.1) and (1.2) to a problem of compressed sensing will be given in Sect. 3.6. Observe that in the special cases \(k=n\) and \(k=j\), (1.1) and (1.2) yield formulas for the sums of the internal and external angles of Schläfli orthoschemes and Weyl chambers.

We will generalize the above results to finite products of Schläfli orthoschemes and finite products of Weyl chambers leading to rather complicated formulas in terms of coefficients in the Taylor expansion of a certain function. The main results on angle and conic intrinsic volume sums will be stated in Sect. 3.2. As a probabilistic interpretation of these results, we consider convex hulls of Gaussian random walks and random bridges in Sect. 3.4. The expected numbers of j-faces of the convex hull of a single Gaussian random walk or a Gaussian bridge in \({\mathbb {R}}^d\) (even in a more general non-Gaussian setting) were already evaluated in [19]. Our general result on the angle sums of products of Schläfli orthoschemes yields a formula for the expected number of j-faces of the Minkowski sum of several convex hulls of Gaussian random walks or Gaussian random bridges.

It turns out that the tangent cones of the Schläfli orthoschemes (and of the Weyl chambers) are essentially products of Weyl chambers of type A and B. We will derive (1.1) and (1.2) as a special case of a more general Proposition 3.8 stated in Sect. 3.3. This proposition gives a formula for the sum of the conic intrinsic volumes of a product of Weyl chambers in terms of the generalized Stirling numbers of the first and second kind. The main ingredients in the proof of this proposition are the known formulas for the conic intrinsic volumes of Weyl chambers; see e.g. [20, Thm. 4.2] or [14, Thm. 1.1].

2 Preliminaries

In this section we collect notation and facts from convex geometry and combinatorics. The reader may skip this section at first reading and return to it when necessary.

2.1 Facts from Convex Geometry

For a set \(M\subset {\mathbb {R}}^n\) denote by \({{\,\mathrm{lin}\,}}M\) (respectively, \({{\,\mathrm{aff}\,}}M\)) its linear (respectively, affine) hull, that is, the minimal linear (respectively, affine) subspace containing M. Equivalently, \({{\,\mathrm{lin}\,}}M\) (respectively, \({{\,\mathrm{aff}\,}}M\)) is the set of all linear (respectively, affine) combinations of elements of M. The relative interior of M, denoted by \({{\,\mathrm{relint}\,}}M\), is the set of interior points of M relative to its affine hull \({{\,\mathrm{aff}\,}}M\). Let also \({{\,\mathrm{conv}\,}}M\) denote the convex hull of M which is defined as the minimal convex set containing M, or equivalently

\[{{\,\mathrm{conv}\,}}M=\{\lambda _1x_1+\cdots +\lambda _mx_m:m\in {\mathbb {N}},\;x_1,\ldots ,x_m\in M,\;\lambda _1,\ldots ,\lambda _m\ge 0,\;\lambda _1+\cdots +\lambda _m=1\}.\]

Similarly, let \({{\,\mathrm{pos}\,}}M\) denote the positive (or conic) hull of M:

\[{{\,\mathrm{pos}\,}}M:=\{\lambda _1x_1+\cdots +\lambda _mx_m:m\in {\mathbb {N}},\;x_1,\ldots ,x_m\in M,\;\lambda _1,\ldots ,\lambda _m\ge 0\}.\]

A set \(C\subset {\mathbb {R}}^n\) is called a (convex) cone if \(\lambda _1x_1+\lambda _2x_2\in C\) for all \(x_1,x_2\in C\) and \(\lambda _1,\lambda _2\ge 0\). Thus, \({{\,\mathrm{pos}\,}}M\) is the minimal cone containing M. The dual cone of a cone \(C\subset {\mathbb {R}}^n\) is defined as

\[C^\circ :=\{v\in {\mathbb {R}}^n:\langle v,x\rangle \le 0\text { for all }x\in C\},\]

where \(\langle \,{\cdot }\,,\,{\cdot }\,\rangle \) denotes the Euclidean scalar product. We will make use of the following simple duality relation that holds for arbitrary \(x_1,\ldots ,x_m\in {\mathbb {R}}^n\):

\[({{\,\mathrm{pos}\,}}\{x_1,\ldots ,x_m\})^\circ =\bigcap _{i=1}^m\{v\in {\mathbb {R}}^n:\langle v,x_i\rangle \le 0\}.\]

A polyhedral set \(P\subset {\mathbb {R}}^d\) is an intersection of finitely many closed half-spaces (whose boundaries need not pass through the origin). A bounded polyhedral set is called a polytope. A polyhedral cone is an intersection of finitely many closed half-spaces whose boundaries contain the origin and is therefore a special case of a polyhedral set. The faces of P (of arbitrary dimension) are obtained by replacing some of the half-spaces, whose intersection defines the polyhedral set, by their boundaries and taking the intersection. For \(k\in \{0,1,\ldots ,d\}\), we denote the set of k-dimensional faces of a polyhedral set P by \({\mathcal {F}}_k(P)\). Furthermore, we denote the number of k-faces of P by \(f_k(P):=|{\mathcal {F}}_k(P)|\). The tangent cone of P at a face \(F\in {\mathcal {F}}_k(P)\) is defined by

\[T_F(P):=\{x\in {\mathbb {R}}^d:v+\varepsilon x\in P\text { for some }\varepsilon >0\},\]

where v is any point in the relative interior of F. It is known that this definition does not depend on the choice of v. The normal cone of P at the face F is defined as the dual of the tangent cone, that is

\[N_F(P):=(T_F(P))^\circ .\]

It is easy to check that, given a face F of a cone C, the corresponding normal cone \(N_F(C)\) satisfies \(N_F(C)=({{{\,\mathrm{lin}\,}}F})^\perp \cap C^\circ \), where \(L^\perp \) denotes the orthogonal complement of a linear subspace L.

2.2 Conic Intrinsic Volumes and Angles of Polyhedral Sets

Now let us introduce some geometric functionals of cones that we are going to consider. The following facts are mostly taken from [2, Sect. 2]; see also [23, Sect. 6.5]. At first, we define the conical intrinsic volumes which are the analogues of the usual intrinsic volumes in the setting of conic or spherical geometry.

Let \(C\subset {\mathbb {R}}^n\) be a polyhedral cone and g be an n-dimensional standard Gaussian random vector. Then, for \(k\in \{0,\ldots ,n\}\), the k-th conic intrinsic volume of C is defined by

\[\upsilon _k(C):=\sum _{F\in {\mathcal {F}}_k(C)}{\mathbb {P}}[\Pi _C(g)\in {{\,\mathrm{relint}\,}}F].\]

Here, \(\Pi _C\) denotes the metric projection on C, that is \(\Pi _C(x)\) is the vector in C minimizing the Euclidean distance to \(x\in {\mathbb {R}}^n\). The conic intrinsic volumes of a cone C form a probability distribution on \(\{0,1,\ldots ,\dim C\}\), that is

\[\sum _{k=0}^{\dim C}\upsilon _k(C)=1.\]

The Gauss–Bonnet formula [2, Cor. 4.4] states that

\[\sum _{k=0}^{\dim C}(-1)^k\upsilon _k(C)=0\]

for every cone C that is not a linear subspace, which implies that

\[\upsilon _0(C)+\upsilon _2(C)+\cdots =\upsilon _1(C)+\upsilon _3(C)+\cdots =\frac{1}{2}.\]

Furthermore, the conic intrinsic volumes satisfy the product rule

\[\upsilon _k(C_1\times \cdots \times C_m)=\sum _{\begin{array}{c} i_1,\ldots ,i_m\in {\mathbb {N}}_0\\ i_1+\cdots +i_m=k \end{array}}\upsilon _{i_1}(C_1)\cdot \ldots \cdot \upsilon _{i_m}(C_m),\]

where \(C_1\times \cdots \times C_m\) is the Cartesian product of \(C_1,\ldots ,C_m\). The product rule implies that the generating polynomial of the intrinsic volumes of C, defined by \(P_C(t):=\sum _{k=0}^{\dim C}\upsilon _k(C)t^k\), satisfies

\[P_{C_1\times \cdots \times C_m}(t)=P_{C_1}(t)\cdot \ldots \cdot P_{C_m}(t).\]

For example, for an i-dimensional linear subspace L, we have \(\upsilon _k(C\times L)=\upsilon _{k-i}(C)\) for \(k\ge i\).
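
The product rule says that the generating polynomial of a product cone is the product of the generating polynomials of the factors, so it can be sketched as a polynomial multiplication. The following minimal Python illustration is not part of the original text; the names `multiply`, `product_cone_volumes`, `RAY` and `LINE` are hypothetical. It assumes the elementary facts that a half-line has \(\upsilon _0=\upsilon _1=1/2\) and that a line has \(\upsilon _1=1\).

```python
from fractions import Fraction
from math import comb


def multiply(p, q):
    """Coefficient list (lowest degree first) of the product of two polynomials."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def product_cone_volumes(*factors):
    """Intrinsic volumes of C_1 x ... x C_m from the lists of the factors (product rule)."""
    result = [Fraction(1)]
    for volumes in factors:
        result = multiply(result, volumes)
    return result


# Assumed elementary inputs (not taken from the text):
RAY = [Fraction(1, 2), Fraction(1, 2)]  # half-line [0, infinity): v_0 = v_1 = 1/2
LINE = [Fraction(0), Fraction(1)]       # one-dimensional linear subspace: v_1 = 1

# The non-negative orthant in R^3 is a product of three half-lines.
orthant = product_cone_volumes(RAY, RAY, RAY)

# Multiplying by a line shifts all indices by one, as in the example above.
shifted = product_cone_volumes(RAY, LINE)
```

For the orthant this gives the binomial weights \(\left( {\begin{array}{c}3\\ k\end{array}}\right) /2^3\), which sum to 1 and have vanishing alternating sum, in agreement with the two identities stated earlier in this section.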

The solid angle (or just angle) of a cone \(C\subset {\mathbb {R}}^n\) is defined as

\[\alpha (C):={\mathbb {P}}[Z\in C],\]

where Z is uniformly distributed on the unit sphere in the linear hull \({{\,\mathrm{lin}\,}}C\). Equivalently, we can take a random vector Z having a standard Gaussian distribution on the ambient linear subspace \({{\,\mathrm{lin}\,}}C\). For a d-dimensional cone \(C\subset {\mathbb {R}}^n\), where \(d\in \{1,\ldots ,n\}\), the d-th conical intrinsic volume coincides with the solid angle of C, that is

\[\upsilon _d(C)=\alpha (C).\]

The internal angle of a polyhedral set P at a face F is defined as the solid angle of its tangent cone:

The external angle of P at a face F is defined as the solid angle of the normal cone of F with respect to P, that is

The conic intrinsic volumes of a cone \(C\subset {\mathbb {R}}^n\) can be computed in terms of the internal and external angles of its faces as follows:

\[\upsilon _k(C)=\sum _{F\in {\mathcal {F}}_k(C)}\alpha (F)\,\alpha (N_F(C)).\]

Let \(W_{n-k}\subset {\mathbb {R}}^n\) be a random linear subspace having the uniform distribution on the Grassmann manifold of all \((n-k)\)-dimensional linear subspaces. Then, following Grünbaum [16], the Grassmann angle \(\gamma _k(C)\) of a cone \(C\subset {\mathbb {R}}^n\) is defined as

\[\gamma _k(C):={\mathbb {P}}[W_{n-k}\cap C\ne \{0\}]\]

for \(k\in \{0,\ldots ,n\}\). The Grassmann angles do not depend on the dimension of the ambient space, that is, if we embed C in \({\mathbb {R}}^N\) where \(N\ge n\), the Grassmann angle will be the same. If C is not a linear subspace, then \(\gamma _k(C)/2\) is also known as the k-th conic quermassintegral \(U_k(C)\) of C, see [17, (1)–(4)], or as the half-tail functional \(h_{k+1}(C)\), see [3]. The conic intrinsic volumes and the Grassmann angles are known to satisfy the linear relation

\[\gamma _k(C)=2\sum _{i=1,3,5,\ldots }\upsilon _{k+i}(C),\]

see [2, (2.10)], provided C is not a linear subspace.

2.3 Stirling Numbers and Their Generating Functions

In this section, we are going to recall the definitions of various kinds of Stirling numbers and their generating functions. As mentioned in the introduction, these numbers appear in various results presented in this paper.

The (signless) Stirling number of the first kind \(\genfrac[]{0.0pt}{}{n}{k}\) is defined as the number of permutations of the set \(\{1,\ldots ,n\}\) having exactly k cycles. Equivalently, these numbers can be defined as the coefficients of the polynomial

\[t(t+1)\cdot \ldots \cdot (t+n-1)=\sum _{k=0}^n\genfrac[]{0.0pt}{}{n}{k}t^k\tag{2.9}\]

for \(n\in {\mathbb {N}}_0\), with the convention that \(\genfrac[]{0.0pt}{}{n}{k}=0\) for \(n\in {\mathbb {N}}_0\), \(k\notin \{0,\ldots ,n\}\), and \(\genfrac[]{0.0pt}{}{0}{0}=1\). By [22, (1.9),(1.15)], the Stirling numbers of the first kind can also be represented as the following sum:

The B-analogues of the Stirling numbers of the first kind, denoted by B[n, k], are defined as the coefficients of the polynomial

\[(t+1)(t+3)\cdot \ldots \cdot (t+2n-1)=\sum _{k=0}^nB[n,k]t^k\tag{2.11}\]

for \(n\in {\mathbb {N}}_0\) and, by convention, \(B[n,k]=0\) for \(k\notin \{0,\ldots ,n\}\). These numbers appear as entry A028338 in [24]. The exponential generating functions of the arrays \(\bigl (\genfrac[]{0.0pt}{}{n}{k}\bigr )_{n,k\ge 0}\) and \((B[n,k])_{n,k\ge 0}\) are given by

and

see [14, Prop. 2.3] for the proof of (2.13).
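
Both kinds of first-kind numbers are coefficients of a product of linear factors, so a row of each array can be computed by multiplying out that product. The sketch below is a hedged illustration, not the authors' code; the function names are hypothetical, and the factorisations \(t(t+1)\cdots (t+n-1)\) and \((t+1)(t+3)\cdots (t+2n-1)\) are the standard ones (the latter as listed for OEIS entry A028338).

```python
def product_coeffs(shifts):
    """Coefficients (lowest degree first) of the product of (t + s) over s in shifts."""
    coeffs = [1]
    for s in shifts:
        new = [0] * (len(coeffs) + 1)
        for i, c in enumerate(coeffs):
            new[i] += s * c      # contribution of the constant term s
            new[i + 1] += c      # contribution of the linear term t
        coeffs = new
    return coeffs


def stirling_first_row(n):
    """Signless Stirling numbers of the first kind for fixed n, as a list indexed by k."""
    return product_coeffs(range(n))            # t (t+1) ... (t+n-1)


def stirling_first_b_row(n):
    """B-analogues B[n, k] for fixed n, as a list indexed by k."""
    return product_coeffs(range(1, 2 * n, 2))  # (t+1)(t+3) ... (t+2n-1)
```

Evaluating the two products at \(t=1\) shows that the rows sum to \(n!\) and \(2^nn!\), respectively, which are the orders of the reflection groups of types \(A_{n-1}\) and \(B_n\).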

The Stirling number of the second kind \(\genfrac\{\}{0.0pt}{}{n}{k}\) is defined as the number of partitions of the set \(\{1,\ldots ,n\}\) into k non-empty subsets. Similarly to (2.10), the Stirling numbers of the second kind can be represented as the following sum:

see [22, (1.9),(1.13)]. The B-analogues of the Stirling numbers of the second kind, denoted by \(B\{n,k\}\), are defined as

They appear as entry A039755 in [24] and were studied by Suter [25]. The exponential generating functions of the arrays \(\bigl (\genfrac\{\}{0.0pt}{}{n}{k}\bigr )_{n,k\ge 0}\) and \((B\{n,k\})_{n,k\ge 0}\) are given by

and

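see [25, Thm. 4] for (2.16). The numbers \(B\{n,k\}\) and \(\genfrac\{\}{0.0pt}{}{n}{k}\) appear as coefficients in the formulas

\[t^n=\sum _{k=0}^nB\{n,k\}(t-1)(t-3)\cdot \ldots \cdot (t-2k+1),\qquad t^n=\sum _{k=0}^n\genfrac\{\}{0.0pt}{}{n}{k}t(t-1)\cdot \ldots \cdot (t-k+1);\]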

see entry A039755 in [24] and also [4, 5] for combinatorial proofs of both identities, which should be compared to the formulas (2.9) and (2.11) for \(\genfrac[]{0.0pt}{}{n}{k}\) and their B-analogues B[n, k].
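
The expansions of \(t^n\) in terms of the two families of second-kind numbers can be checked numerically. The sketch below is illustrative only: the function names are hypothetical, and it computes the rows through the triangular recurrences \(T(n,k)=T(n-1,k-1)+w(k)\,T(n-1,k)\) with \(w(k)=k\) for the classical numbers and \(w(k)=2k+1\) for the B-analogues. The latter recurrence is the one listed for OEIS entry A039755 and is an assumption here, not the definition used in the text.

```python
def triangle_row(n, weight):
    """Row n of the triangle T(n,k) = T(n-1,k-1) + weight(k) * T(n-1,k), T(0,0) = 1."""
    row = [1]
    for _ in range(n):
        new = [0] * (len(row) + 1)
        for k, c in enumerate(row):
            new[k] += weight(k) * c
            new[k + 1] += c
        row = new
    return row


def stirling_second_row(n):
    """Classical Stirling numbers of the second kind for fixed n, indexed by k."""
    return triangle_row(n, lambda k: k)


def stirling_second_b_row(n):
    """B-analogues B{n, k} for fixed n, indexed by k (recurrence assumed from OEIS A039755)."""
    return triangle_row(n, lambda k: 2 * k + 1)


def expand(t, row, node):
    """Evaluate sum_k row[k] * prod_{i<k} (t - node(i)) at the number t."""
    total, prod = 0, 1
    for k, c in enumerate(row):
        total += c * prod
        prod *= t - node(k)
    return total
```

With `node(i) = i` the function `expand` evaluates the classical falling-factorial expansion, and with `node(i) = 2 * i + 1` it evaluates the B-analogue; in both cases the value at an integer `t` should equal `t ** n`.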

More generally, it is possible to define the r-Stirling numbers of the first and second kinds. For \(r\in {\mathbb {N}}_0\), the (signless) r-Stirling number of the first kind, denoted by \(\genfrac[]{0.0pt}{}{n}{k}_r\), is defined as the number of permutations of the set \(\{1,\ldots ,n\}\) having k cycles such that the numbers \(1,2,\ldots ,r\) are in distinct cycles; see [8, (1)]. The r-Stirling number of the second kind, denoted by \(\genfrac\{\}{0.0pt}{}{n}{k}_r\), is defined as the number of partitions of the set \(\{1,\ldots ,n\}\) into k non-empty disjoint subsets such that the numbers \(1,2,\ldots ,r\) are in distinct subsets; see [8, (2)]. Obviously, for \(r\in \{0,1\}\), the r-Stirling numbers of the first and second kinds coincide with the classical Stirling numbers of the respective kind. The r-Stirling numbers were introduced by Carlitz [9, 10] under the name weighted Stirling numbers.
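
The combinatorial definition of the r-Stirling numbers of the first kind can be made concrete by brute-force enumeration for small n. The following sketch is only an illustration of the definition (the function names are hypothetical, and the enumeration is exponential in n, so it is not a practical algorithm); the elements \(0,\ldots ,r-1\) of a 0-based ground set play the role of \(1,\ldots ,r\).

```python
from itertools import permutations


def cycles_of(perm):
    """Cycles of a permutation of {0, ..., n-1}, where perm[i] is the image of i."""
    seen, cycles = set(), []
    for start in range(len(perm)):
        if start in seen:
            continue
        cycle, x = [], start
        while x not in seen:
            seen.add(x)
            cycle.append(x)
            x = perm[x]
        cycles.append(cycle)
    return cycles


def r_stirling_first(n, k, r):
    """Count permutations of n elements with k cycles and 0, ..., r-1 in distinct cycles."""
    count = 0
    for perm in permutations(range(n)):
        cycles = cycles_of(perm)
        if len(cycles) != k:
            continue
        # Each cycle may contain at most one of the distinguished elements.
        if all(sum(1 for x in cycle if x < r) <= 1 for cycle in cycles):
            count += 1
    return count
```

For \(r\in \{0,1\}\) the constraint is vacuous, so the count reduces to the classical Stirling number of the first kind, as stated above.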

The exponential generating functions in one and two variables of the r-Stirling numbers of the first kind are given by

see [8, (36),(37)]. For the r-Stirling numbers of the second kind they are given by

see [8, (38),(39)]. The r-Stirling numbers can equivalently be defined in terms of the regular Stirling numbers by

where \(r^{{\overline{m}}}:=r(r+1)\cdot \ldots \cdot (r+m-1)\) denotes the rising factorial, \(r^{{\overline{0}}}:=1\), and

see [8, (27),(32)]. This even yields an analytic continuation of the r-Stirling numbers to non-integer (arbitrary complex) r, given by

and

Note the following special values:

For \(r=1/2\), we observe the following relations between the r-Stirling numbers and the numbers B[n, k] and \(B\{n,k\}\):

Both can easily be verified by comparing the generating functions. Let us also mention that besides the r-Stirling numbers there is another construction, the generalized two-parameter Stirling numbers [21] (see also [6]) containing Stirling numbers of both types and their B-analogues as special cases corresponding to the parameters \((d,a)=(1,0)\) and \((d,a)=(2,1)\), respectively. We will not use this general construction here.

3 Main Results

3.1 Schläfli Orthoschemes

The polytopes of interest in this paper are called Schläfli orthoschemes. As mentioned in the introduction, the Schläfli orthoscheme of type B in \({\mathbb {R}}^n\) is defined as

\[K_n^B:=\{x\in {\mathbb {R}}^n:1\ge x_1\ge x_2\ge \cdots \ge x_n\ge 0\}.\]

Note that, for convenience, we set \(K_0^B:=\{0\}\). Similarly, the Schläfli orthoscheme of type A in \({\mathbb {R}}^{n+1}\) is defined as the convex hull of the \((n+1)\)-dimensional vectors \(P_0,P_1,\ldots ,P_{n+1}\), where \(P_0=(0,0,\ldots ,0)\) and

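It is not difficult to check that

\[K_n^A=\{x\in {\mathbb {R}}^{n+1}:x_1\ge x_2\ge \cdots \ge x_{n+1},\;x_1-x_{n+1}\le 1,\;x_1+\cdots +x_{n+1}=0\}.\]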

Again, we put \(K_0^A=\{0\}\). The index shift from type B to type A will turn out to be convenient since \(K_n^A\subset {\mathbb {R}}^{n+1}\) is an n-dimensional polytope. In fact, the Schläfli orthoschemes of type A and type B are simplices since they are convex hulls of \(n+1\) affinely independent vectors. The Schläfli orthoscheme of type B was already considered by Gao and Vitale [13] who among other things evaluated the classical intrinsic volumes of \(K_n^B\). Similar calculations for the Schläfli orthoscheme of type A were made by Gao [12].

The definition of the Schläfli orthoscheme can be motivated by a connection to random walks and random bridges. In fact, consider Gaussian random matrices \(G_B\in {\mathbb {R}}^{d\times n}\) and \(G_A\in {\mathbb {R}}^{d\times (n+1)}\), that is, random matrices having independent and standard Gaussian distributed entries. Then \(G_BK_n^B\) has the same distribution as the convex hull of a d-dimensional random walk \(S_0:=0,S_1,\ldots ,S_n\) with Gaussian increments. Similarly, \(G_AK_n^A\) has the same distribution as the convex hull of a d-dimensional Gaussian random bridge \(\widetilde{S}_0:=0,\widetilde{S}_1,\ldots ,\widetilde{S}_n,\widetilde{S}_{n+1}=0\) which is essentially a Gaussian random walk conditioned on the event that it returns to 0 in the \((n+1)\)-st step. We will explain these facts in Sect. 3.4 in more detail.
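
The link between \(K_n^B\) and random walks rests on a simple observation: a matrix maps the vertex of \(K_n^B\) with i leading ones to the sum of its first i columns. The sketch below checks this for one sample of a Gaussian matrix. It is an illustration under stated assumptions: the vertex list \((1,\ldots ,1,0,\ldots ,0)\) is the standard description of \(K_n^B\), and all function names are hypothetical.

```python
import random


def orthoscheme_b_vertices(n):
    """Vertices of K_n^B: i leading ones followed by zeros, for i = 0, ..., n."""
    return [[1.0] * i + [0.0] * (n - i) for i in range(n + 1)]


def mat_vec(matrix, x):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(entry * xi for entry, xi in zip(row, x)) for row in matrix]


def random_walk_from_matrix(matrix):
    """Random walk S_0 = 0, S_1, ..., S_n whose increments are the columns of the matrix."""
    d, n = len(matrix), len(matrix[0])
    walk = [[0.0] * d]
    for step in range(n):
        walk.append([walk[-1][r] + matrix[r][step] for r in range(d)])
    return walk


rng = random.Random(0)
d, n = 2, 5
G_B = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(d)]

images = [mat_vec(G_B, v) for v in orthoscheme_b_vertices(n)]  # images of the vertices
walk = random_walk_from_matrix(G_B)                            # partial sums of the columns
```

Since a linear map sends the convex hull of the vertices to the convex hull of their images, the polytope \(G_BK_n^B\) is the convex hull of the walk \(S_0,\ldots ,S_n\) built from the columns of \(G_B\).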

3.2 Sums of Conic Intrinsic Volumes in Weyl Chambers and Schläfli Orthoschemes

In this section, we state the main results of this paper concerning the sums of the conic intrinsic volumes of the tangent cones of Schläfli orthoschemes of type A and B and their products. The same is done for Weyl chambers of type A and B and their products. Our first result concerning the Schläfli orthoschemes of types A and B is the following theorem.

Theorem 3.1

Let \(j\in \{0,\ldots ,n\}\) and \(k\in \{0,\ldots ,n\}\) be given. Then, it holds that

\[\sum _{F\in {\mathcal {F}}_j(K_n^B)}\upsilon _k(T_F(K_n^B))=\sum _{F\in {\mathcal {F}}_j(K_n^A)}\upsilon _k(T_F(K_n^A))=\frac{j!}{n!}\genfrac[]{0.0pt}{}{n+1}{k+1}\genfrac\{\}{0.0pt}{}{k+1}{j+1}.\]

As a consequence, we can derive formulas for the sums of the internal and external angles of \(K_n^B\) and \(K_n^A\) at their j-faces F.

Corollary 3.2

For \(j\in \{0,\ldots ,n\}\), the sum of the internal angles is given by

\[\sum _{F\in {\mathcal {F}}_j(K_n^B)}\alpha (T_F(K_n^B))=\sum _{F\in {\mathcal {F}}_j(K_n^A)}\alpha (T_F(K_n^A))=\frac{j!}{n!}\genfrac\{\}{0.0pt}{}{n+1}{j+1},\]

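while the sum of the external angles is given by

\[\sum _{F\in {\mathcal {F}}_j(K_n^B)}\alpha (N_F(K_n^B))=\sum _{F\in {\mathcal {F}}_j(K_n^A)}\alpha (N_F(K_n^A))=\frac{j!}{n!}\genfrac[]{0.0pt}{}{n+1}{j+1}.\]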

Proof

The sums of the internal angles follow from Theorem 3.1 with \(k=n\), since \(K_n^B\) and \(K_n^A\) are both n-dimensional polytopes. In the case of external angles, we use the fact that the maximal linear subspaces contained in both \(T_F(K_n^B)\) and \(T_F(K_n^A)\) are j-dimensional, which implies that \(\upsilon _j(T_F(K_n^B))=\upsilon _{n-j}((T_F(K_n^B))^\circ )=\upsilon _{n-j}(N_F(K_n^B))=\alpha (N_F(K_n^B))\), and similarly for \(K_n^A\). Using Theorem 3.1 with \(k=j\) completes the proof. \(\square \)

We obtain similar results for the tangent cones of Weyl chambers of types A and B, which are the fundamental domains of the reflection groups of the respective type; see, e.g., [18]. More concretely, a Weyl chamber of type B (or \(B_{n}\)) is the polyhedral cone

\[B^{(n)}:=\{x\in {\mathbb {R}}^n:x_1\ge x_2\ge \cdots \ge x_n\ge 0\}.\]

The Weyl chamber of type A (or \(A_{n-1}\)) is the polyhedral cone

\[A^{(n)}:=\{x\in {\mathbb {R}}^n:x_1\ge x_2\ge \cdots \ge x_n\}.\]

We set \(B^{(0)}=A^{(0)}=\{0\}\) for convenience.
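
The definition of the conic intrinsic volumes through the metric projection lends itself to a Monte Carlo experiment for the Weyl chamber \(A^{(n)}\). The sketch below is an illustration under assumptions that are not part of this section: the projection onto the cone of non-increasing vectors is computed by the pool-adjacent-violators algorithm, the dimension of the face containing the projection equals its number of constant blocks, and the estimates are compared with the known values \(\genfrac[]{0.0pt}{}{n}{k}/n!\) from the literature cited in the introduction. All function names are hypothetical.

```python
import random


def projection_block_count(x):
    """Number of constant blocks of the projection of x onto {y_1 >= ... >= y_n}."""
    blocks = []  # each block is [sum of entries, number of entries]
    for value in x:
        blocks.append([value, 1])
        # Merge while the mean of the last block is not below the mean of the previous one.
        while (len(blocks) > 1
               and blocks[-1][0] * blocks[-2][1] >= blocks[-2][0] * blocks[-1][1]):
            total, size = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += size
    return len(blocks)


def estimate_weyl_a_volumes(n, samples, seed=0):
    """Monte Carlo estimate of the conic intrinsic volumes of A^(n), indexed by k."""
    rng = random.Random(seed)
    counts = [0] * (n + 1)
    for _ in range(samples):
        g = [rng.gauss(0.0, 1.0) for _ in range(n)]
        counts[projection_block_count(g)] += 1
    return [c / samples for c in counts]


estimates = estimate_weyl_a_volumes(3, 20000)
```

For \(n=3\) the estimates should be close to \((0,2/6,3/6,1/6)\), up to a sampling error of a few thousandths.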

Theorem 3.3

Let \(j\in \{0,\ldots ,n\}\) and \(k\in \{0,\ldots ,n\}\) be given. Then, it holds that

\[\sum _{F\in {\mathcal {F}}_j(B^{(n)})}\upsilon _k(T_F(B^{(n)}))=\frac{2^jj!}{2^nn!}B[n,k]B\{k,j\},\qquad \sum _{F\in {\mathcal {F}}_j(A^{(n)})}\upsilon _k(T_F(A^{(n)}))=\frac{j!}{n!}\genfrac[]{0.0pt}{}{n}{k}\genfrac\{\}{0.0pt}{}{k}{j}.\]

Theorems 3.1 and 3.3 are special cases of Proposition 3.8 which we shall state in Sect. 3.3 and which gives a formula for sums of the conic intrinsic volumes of a mixed product of Weyl chambers of both types A and B. We will give an application of Theorems 3.1 and 3.3 to a problem of compressed sensing in Sect. 3.6.

It has been pointed out to us by an anonymous referee that there is a similarity between Theorem 3.3 and the formulas, derived by Amelunxen and Lotz [2, Sect. 6.1.3], for the sums of k-th conic intrinsic volumes of j-dimensional faces in the fans of reflection arrangements. Despite this apparent similarity, no direct connection between the quantities of interest seems to exist: the proofs are different and, in fact, the right-hand sides of the formulas involve Stirling numbers in a different order and cannot be reduced to each other in a simple way.

For \(k=n\) and \(k=j\), Theorem 3.3 yields the following corollary on the sums of internal and external angles of \(B^{(n)}\) and \(A^{(n)}\).

Corollary 3.4

For \(j\in \{0,\ldots ,n\}\), the sums of internal angles of \(B^{(n)}\) and \(A^{(n)}\) are given by

\[\sum _{F\in {\mathcal {F}}_j(B^{(n)})}\alpha (T_F(B^{(n)}))=\frac{2^jj!}{2^nn!}B\{n,j\},\qquad \sum _{F\in {\mathcal {F}}_j(A^{(n)})}\alpha (T_F(A^{(n)}))=\frac{j!}{n!}\genfrac\{\}{0.0pt}{}{n}{j},\]

while the sums of external angles are given by

\[\sum _{F\in {\mathcal {F}}_j(B^{(n)})}\alpha (N_F(B^{(n)}))=\frac{2^jj!}{2^nn!}B[n,j],\qquad \sum _{F\in {\mathcal {F}}_j(A^{(n)})}\alpha (N_F(A^{(n)}))=\frac{j!}{n!}\genfrac[]{0.0pt}{}{n}{j}.\]

3.2.1 Finite Products of Schläfli Orthoschemes and Weyl Chambers

The above theorems can be extended to finite products of Schläfli orthoschemes and Weyl chambers. Let \(b\in {\mathbb {N}}\) and define \(K^B:=K_{n_1}^B\times \cdots \times K_{n_b}^B\), \(K^A:=K_{n_1}^A\times \cdots \times K_{n_b}^A\) for \(n_1,\ldots ,n_b\in {\mathbb {N}}_0\) with \(n:=n_1+\cdots +n_b\). Furthermore, for \(d\in \{0,1/2,1\}\), let

Here, \([t^N]f(t):=f^{(N)}(0)/N!\) denotes the coefficient of \(t^N\) in the Taylor expansion of a function \(f:{\mathbb {R}}\rightarrow {\mathbb {R}}\) around 0 and

is the coefficient of \(x_1^{N_1}\cdot \ldots \cdot x_b^{N_b}\) in the multidimensional Taylor expansion of a function \(g:{\mathbb {R}}^b\rightarrow {\mathbb {R}}\). Note that \(R_d(k,j,b,(n_1,\ldots ,n_b))=0\) for \(k<j\).

Theorem 3.5

Let \(j\in \{0,\ldots ,n\}\) and \(k\in \{0,\ldots ,n\}\) be given. Then, it holds that

The proof of Theorem 3.5 is postponed to Sect. 4.2. For finite products of Weyl chambers \(W^B:=B^{(n_1)}\times \cdots \times B^{(n_b)}\) and \(W^A:=A^{(n_1)}\times \cdots \times A^{(n_b)}\), we obtain the following theorems.

Theorem 3.6

For \(j\in \{0,\ldots ,n\}\) and \(k\in \{0,\ldots ,n\}\), it holds that

The proof of Theorem 3.6 is similar to that of Theorem 3.5 and will be omitted. In the proof of Theorem 3.5 we will observe that if we additionally sum over all possible \(n_1,\ldots ,n_b\) with fixed sum n, the formulas in terms of Taylor coefficients simplify as follows.

Proposition 3.7

For all \(j\in \{0,\ldots ,n\}\) and \(k\in \{0,\ldots ,n\}\) we have

The proof is postponed to Sect. 4.3.

3.3 Method of Proof of Theorems 3.1 and 3.3

The main ingredient in proving Theorems 3.1 and 3.3 is the following proposition.

Proposition 3.8

Let \((j,b)\in {\mathbb {N}}_0^2\setminus \{(0,0)\}\) and \(n\in {\mathbb {N}}\). For \(l=(l_1,\ldots ,l_{j+b})\) such that \(l_1,\ldots ,l_j\in {\mathbb {N}}\), \(l_{j+1},\ldots ,l_{j+b}\in {\mathbb {N}}_0\), and \(l_1+\cdots +l_{j+b}=n\) we define

Then, for all \(k\in \{0,\ldots ,n\}\), we have

We will prove this proposition in Sect. 4.1 by computing the generating function of the intrinsic volumes. An alternative proof of Proposition 3.8 can be found in the arXiv version of this paper, see [15, Sect. 4.2]. In order to see that Theorems 3.1 and 3.3 follow from Proposition 3.8, we describe the collections of tangent cones of the Schläfli orthoschemes and Weyl chambers of types A and B at their corresponding faces.

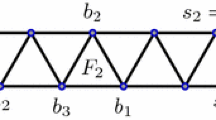

3.3.1 Schläfli Orthoschemes of Type B

The faces of \(K_n^B\) (and of any polytope in general) are obtained by replacing some of the linear inequalities in its defining conditions by equalities. Thus, each j-face of \(K_n^B\) is determined by a collection \(J:=\{i_0,\dots ,i_j\}\) of indices \(0\le i_0<i_1<\cdots <i_j\le n\) and given by

Note that for \(i_0=0\), no \(x_i\) is required to be 1. Similarly, for \(i_j=n\), no \(x_i\) is required to be 0. Take a point \(x=(x_1,\ldots ,x_n)\in {{\,\mathrm{relint}\,}}F_J\). For this point, all inequalities in the defining condition of \(F_J\) are strict. By definition, the tangent cone of \(K_n^B\) at \(F_J\) is given by

It follows that

which is isometric to the product

where the polyhedral cones

\[B^{(i)}:=\{x\in {\mathbb {R}}^i:x_1\ge x_2\ge \cdots \ge x_i\ge 0\},\qquad A^{(i)}:=\{x\in {\mathbb {R}}^i:x_1\ge x_2\ge \cdots \ge x_i\},\]

\(i\in \mathbb N_0\), are the Weyl chambers of type B and A, respectively. We arrive at the following lemma.

Lemma 3.9

The collection of tangent cones \(T_F(K_n^B)\), where F runs through the set of all j-faces \({\mathcal {F}}_j(K_n^B)\), coincides (up to isometry) with the collection

Equivalently, it coincides (up to isometry) with the collection

If the isometry type of some cone appears with some multiplicity in one collection, then it appears with the same multiplicity in the other collections.

3.3.2 Schläfli Orthoschemes of Type A

Now, we consider the tangent cones of Schläfli orthoschemes of type A. Recall that

\[K_n^A=\{x\in {\mathbb {R}}^{n+1}:x_1\ge x_2\ge \cdots \ge x_{n+1},\;x_1-x_{n+1}\le 1,\;x_1+\cdots +x_{n+1}=0\}.\]

Note that, unlike in the B-case, the simplex \(K_n^A\) (which has dimension n) is contained in \({\mathbb {R}}^{n+1}\). For us it will be easier to consider the following unbounded set:

\[\widetilde{K}_n^A:=\{x\in {\mathbb {R}}^{n+1}:x_1\ge x_2\ge \cdots \ge x_{n+1},\;x_1-x_{n+1}\le 1\}.\]

Denote by \(L_{n+1}\) the 1-dimensional linear subspace \(L_{n+1}=\{x\in {\mathbb {R}}^{n+1}:x_1=\cdots =x_{n+1}\}\). Then \(L_{n+1}^\perp =\{x\in {\mathbb {R}}^{n+1}:x_1+\cdots +x_{n+1}=0\}\) and we have

\[\widetilde{K}_n^A=L_{n+1}\oplus K_n^A,\]

where \(\oplus \) denotes the orthogonal sum. Thus, there is a one-to-one correspondence \({\mathcal {F}}_j(K_n^A)\rightarrow {\mathcal {F}}_{j+1}(\widetilde{K}_n^A)\) between the j-faces of \(K_n^A\) and the \((j+1)\)-faces of \(\widetilde{K}_n^A\) given by \(F\mapsto L_{n+1}\oplus F\). Furthermore, for every j-face F of \(K_n^A\) we have a relation between the tangent cones of \(K_n^A\) and \(\widetilde{K}_n^A\) given by

\[T_{L_{n+1}\oplus F}(\widetilde{K}_n^A)=L_{n+1}\oplus T_F(K_n^A).\]

Now, consider the collection of tangent cones \(T_F(\widetilde{K}_n^A)\), where \(F\in {\mathcal {F}}_j(\widetilde{K}_n^A)\) for some \(j\in \{1,\ldots ,{n+1}\}\) and \(n\in {\mathbb {N}}_0\), more closely. The faces of \(\widetilde{K}_n^A\) are obtained by replacing some inequalities in the defining conditions of \(\widetilde{K}_n^A\) by equalities. Thus, there are two types of j-faces of \(\widetilde{K}_n^A\) for \(j\in \{1,\ldots ,{n+1}\}\).

The j-faces of the first type are of the form

for \(1\le i_1<\cdots <i_{j-1}\le n\). Note that for \(j=1\), this reduces to the 1-face \(\{x\in {\mathbb {R}}^{n+1}:x_1=\cdots =x_{n+1}\}\). To determine the tangent cone at \(F_1\), take some point in the relative interior of this face. For this point, all inequalities in the defining condition of \(F_1\) are strict. Call this point \(x=(x_1,\ldots ,x_{n+1})\in {{\,\mathrm{relint}\,}}F_1\). By definition, we have

It follows that

Thus, \(T_{F_1}(\widetilde{K}_n^A)\) is equal to \(A^{(l_1)}\times \ldots \times A^{(l_j)}\), where \(l_1,\ldots ,l_j\in {\mathbb {N}}\) satisfy \(l_1+\cdots +l_j=n+1\) and are given by \(l_1=i_1\), \(l_2=i_2-i_1\), \(\ldots \), \(l_j=n+1-i_{j-1}\).

The j-faces of \(\widetilde{K}_n^A\) of the second type are of the form

for \(1\le i_1<\cdots <i_j\le n\). The defining condition consists of \(j+1\) groups of equalities and the additional condition \(x_1-x_{n+1}=1\). Again, take a point \(x\in {{\,\mathrm{relint}\,}}F_2\). For this point all inequalities in the defining condition of \(F_2\) are strict. Hence, the tangent cone is given by

where in the last step we merged two groups of inequalities. Hence, \(T_{F_2}(\widetilde{K}_n^A)\) is isometric to \(A^{(l_1+l_{j+1})}\times A^{(l_2)}\times \cdots \times A^{(l_j)}\), where \(l_1,\ldots ,l_{j+1}\in {\mathbb {N}}\) are such that \(l_1+\cdots +l_{j+1}=n+1\), that is they form a composition of \(n+1\) into \(j+1\) parts.

We can combine both types of tangent cones into one type as follows. For a \((j+1)\)-composition \(l_1+\cdots +l_{j+1}=n+1\), the numbers \(k_1:=l_1+l_{j+1}\), \(k_2:=l_2\), \(\ldots \), \(k_j:=l_j\) form a j-composition of \(n+1\). This association is not injective since each j-composition \(k_1+\cdots +k_j=n+1\) of \(n+1\) arises from exactly \(k_1-1\) compositions of \(n+1\) into \(j+1\) parts. Indeed, we can represent \(k_1\) as \(1+(k_1-1),2+(k_1-2),\ldots ,(k_1-1)+1\). Thus, combining both types of tangent cones yields the following lemma.

Lemma 3.10

The collection of tangent cones \(T_F(\widetilde{K}_n^A)\), where F runs through the set of all j-faces \({\mathcal {F}}_j(\widetilde{K}_n^A)\), coincides (up to isometry) with the collection of cones

\[A^{(l_1)}\times \cdots \times A^{(l_j)},\qquad l_1,\ldots ,l_j\in {\mathbb {N}},\;l_1+\cdots +l_j=n+1,\]

where each cone of the above collection is repeated \(l_1\) times (or taken with multiplicity \(l_1\)).
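
The counting argument preceding Lemma 3.10 can be checked by brute force for small parameters. The sketch below (hypothetical function names, purely illustrative) enumerates all compositions of a number into \(j+1\) parts, merges the first and the last part, and records how often each j-composition is hit; by the argument above, a j-composition with first part \(k_1\) should be hit \(k_1-1\) times.

```python
from collections import Counter
from itertools import combinations


def compositions(total, parts):
    """All tuples of `parts` positive integers summing to `total`."""
    for cuts in combinations(range(1, total), parts - 1):
        bounds = (0,) + cuts + (total,)
        yield tuple(b - a for a, b in zip(bounds, bounds[1:]))


def merge_first_and_last(l):
    """Map (l_1, ..., l_{j+1}) to (l_1 + l_{j+1}, l_2, ..., l_j)."""
    return (l[0] + l[-1],) + l[1:-1]


def merged_multiplicities(total, j):
    """How often each j-composition of `total` arises from a (j+1)-composition."""
    return Counter(merge_first_and_last(l) for l in compositions(total, j + 1))
```

Adding the faces of the first type, which contribute every j-composition exactly once, gives the total multiplicity \((k_1-1)+1=k_1\) that appears in the lemma.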

Then, Theorem 3.1 can be deduced from Proposition 3.8 and Lemma 3.9 (in the B-case), respectively Lemma 3.10 (in the A-case), as follows.

Proof of Theorem 3.1 assuming Proposition 3.8

We start with the B-case. For \(j\in \{0,\ldots ,n\}\) and \(k\in \{0,\ldots ,n\}\), we have

where we used Lemma 3.9 in the first step and Proposition 3.8 with \(b=2\) in the second step.

The A-case requires slightly more work. Using the identity \(\upsilon _{k+1}(K_n^A\oplus L_{n+1})=\upsilon _k(K_n^A)\) and (3.1), we obtain

Applying Lemma 3.10 \(j+1\) times with multiplicity \(l_1\) replaced by \(l_1,\ldots ,l_{j+1}\), we observe that the collection of tangent cones \(T_F(\widetilde{K}_n^A)\), where F runs through all \((j+1)\)-faces in \({\mathcal {F}}_{j+1}({\widetilde{K}}_n^A)\) and each cone of this collection is repeated \(j+1\) times, coincides (up to isometry) with the collection of cones

where each cone is taken with multiplicity \(l_1+\cdots +l_{j+1}= n+1\). Therefore, we arrive at

where we used Proposition 3.8. Dividing both sides by \(j+1\) yields the claim. \(\square \)

3.3.3 Weyl Chambers of Type B

For \(n\in {\mathbb {N}}\) recall that

\[B^{(n)}=\{x\in {\mathbb {R}}^n:x_1\ge x_2\ge \cdots \ge x_n\ge 0\}\]

and \(B^{(0)}:=\{0\}\) by convention. Now, let \(j\in \{0,\ldots ,n\}\). Each j-face of \(B^{(n)}\) is determined by a collection \(J:=\{i_1,\ldots ,i_j\}\) of indices \(1\le i_1<\cdots <i_j\le n\), and given by

Note that for \(i_j=n\), no \(x_i\)’s are required to be 0, and for \(j=0\), we obtain the 0-dimensional face \(\{0\}\). In order to determine the tangent cone \(T_{F_J}(B^{(n)})\) take a point \(x=(x_1,\ldots ,x_n)\in {{\,\mathrm{relint}\,}}F_J\). Again, this point satisfies the defining conditions of \(F_J\) with inequalities replaced by strict inequalities. Thus, the tangent cone is given by

The above reasoning yields the following lemma.

Lemma 3.11

The collection of tangent cones \(T_F(B^{(n)})\), where F runs through the set of all j-faces \({\mathcal {F}}_j(B^{(n)})\), coincides with the collection of polyhedral cones

\[A^{(l_1)}\times \cdots \times A^{(l_j)}\times B^{(l_{j+1})},\qquad l_1,\ldots ,l_j\in {\mathbb {N}},\;l_{j+1}\in {\mathbb {N}}_0,\;l_1+\cdots +l_{j+1}=n.\]

3.3.4 Weyl Chambers of Type A

For \(n\in {\mathbb {N}}\) recall that

\[A^{(n)}=\{x\in {\mathbb {R}}^n:x_1\ge x_2\ge \cdots \ge x_n\}.\]

For \(j\in \{1,\ldots ,n\}\) every j-face of \(A^{(n)}\) is determined by a collection \(J:=\{i_1,\ldots ,i_{j-1}\}\) of indices \(1\le i_1<\cdots <i_{j-1}\le n-1\), and given by

Note that for \(j=1\), we obtain the 1-face \(\{x\in {\mathbb {R}}^n:x_1=\cdots =x_n\}\). In order to determine the tangent cone \(T_{F_J}(A^{(n)})\) consider a point \(x=(x_1,\ldots ,x_n)\in {{\,\mathrm{relint}\,}}F_J\). In a fashion similar to the case of a B-type Weyl chamber, we can characterize the tangent cone of \(A^{(n)}\) at \(F_J\) as follows:

This yields the following analogue of Lemma 3.11.

Lemma 3.12

The collection of tangent cones \(T_F(A^{(n)})\), where F runs through the set of all j-faces \({\mathcal {F}}_j(A^{(n)})\), coincides with the collection of polyhedral cones

\[A^{(l_1)}\times \cdots \times A^{(l_j)},\qquad l_1,\ldots ,l_j\in {\mathbb {N}},\;l_1+\cdots +l_j=n.\]

Proof of Theorem 3.3 assuming Proposition 3.8

We start with the B-case. For \(j\in \{0,\ldots ,n\}\) and \(k\in \{0,\ldots ,n\}\), we have

where we used Lemma 3.11 in the first step, Proposition 3.8 with \(b=1\) in the second step, and the formulas in (2.23) in the last step.

In the A-case, for \(j\in \{1,\ldots ,n\}\) and \(k\in \{1,\ldots ,n\}\), we have

where we used Lemma 3.12 in the first step and Proposition 3.8 with \(b=0\) in the second step. For \(j=0\) or \(k=0\) the formula is evidently true as well.\(\square \)

3.3.5 Identities for the Generalized Stirling Numbers

Let us also mention that Proposition 3.8, combined with (2.2) and (2.3), yields the following identities for the generalized Stirling numbers. The full proof can be found in the arXiv version of this paper, see [15].

Corollary 3.13

For \(n\in {\mathbb {N}}\), \(j\in \{0,\ldots ,n\}\), and \(2b\in {\mathbb {N}}_0\), the following identities hold:

Note that (3.5) is a special case of the orthogonality relation between the r-Stirling numbers proven by Broder [8, Thm. 25]. Relation (3.4) is also known for \(b=0,1\), the numbers on the right-hand side being the Lah numbers.
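The classical instances of such identities can be checked numerically. The following Python sketch assumes (this is not spelled out above) that for \(b=0\) relation (3.4) reduces to the familiar identity expressing the Lah numbers \(\binom{n-1}{j-1}\frac{n!}{j!}\) as sums of products of unsigned Stirling numbers of the first kind and Stirling numbers of the second kind, and that (3.5) reduces to the usual orthogonality relation. All function names are illustrative.

```python
from functools import lru_cache
from math import comb, factorial


@lru_cache(maxsize=None)
def stirling1(n, k):
    """Unsigned Stirling number of the first kind (n elements, k cycles)."""
    if n == 0 and k == 0:
        return 1
    if n == 0 or k == 0:
        return 0
    return stirling1(n - 1, k - 1) + (n - 1) * stirling1(n - 1, k)


@lru_cache(maxsize=None)
def stirling2(n, k):
    """Stirling number of the second kind (n elements, k blocks)."""
    if n == 0 and k == 0:
        return 1
    if n == 0 or k == 0:
        return 0
    return stirling2(n - 1, k - 1) + k * stirling2(n - 1, k)


def lah(n, j):
    """Unsigned Lah number binom(n-1, j-1) * n! / j!."""
    if n == 0 and j == 0:
        return 1
    if n == 0 or j == 0:
        return 0
    return comb(n - 1, j - 1) * factorial(n) // factorial(j)


def first_times_second(n, j):
    """Sum over k of stirling1(n, k) * stirling2(k, j)."""
    return sum(stirling1(n, k) * stirling2(k, j) for k in range(j, n + 1))


def signed_first_times_second(n, j):
    """Same sum with the signed numbers (-1)^(n-k) * stirling1(n, k)."""
    return sum((-1) ** (n - k) * stirling1(n, k) * stirling2(k, j)
               for k in range(j, n + 1))


if __name__ == "__main__":
    for n in range(1, 6):
        print([first_times_second(n, j) for j in range(1, n + 1)],
              [lah(n, j) for j in range(1, n + 1)])
```

The first sum reproduces the Lah numbers and the signed sum reproduces the Kronecker delta; the generalized identities of Corollary 3.13 are not tested by this sketch.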

3.4 Expected Face Numbers: Convex Hulls of Gaussian Random Walks and Bridges

The above theorems on the angle sums of the tangent cones of Schläfli orthoschemes yield results on the expected number of faces of the convex hulls of Gaussian random walks and random bridges, and of their Minkowski sums. Consider independent d-dimensional standard Gaussian random vectors

and let \(n_1+\cdots +n_b=n\ge d\). For every \(i\in \{1,\ldots ,b\}\) we define a random walk \((S_0^{(i)},S_1^{(i)},\ldots ,S_{n_i}^{(i)})\) by

Consider the convex hulls of these random walks:

The following theorem gives a formula for the expected number of j-faces of the Minkowski sum of b such convex hulls defined by

Theorem 3.14

Let \(0\le j<d\le n\) be given and define \(C^{(1)}_{n_1},\ldots ,C^{(b)}_{n_b}\) as above. Then we have

where we recall that

The same formula holds for the Minkowski sum of the convex hulls of Gaussian random bridges, which are essentially Gaussian random walks conditioned to return to 0 in the last step. To state it, consider independent d-dimensional standard Gaussian random vectors

and define the random walks \(\bigl ({S}^{(i)}_0,{S}^{(i)}_1,\ldots ,{S}^{(i)}_{n_i+1}\bigr )\) as above. We define the Gaussian bridge \(\bigl (\widetilde{S}^{(i)}_0,\widetilde{S}^{(i)}_1,\ldots ,\widetilde{S}^{(i)}_{n_i+1}\bigr )\) as a process having the same distribution as the Gaussian random walk \(\bigl (S_0^{(i)},S_1^{(i)},\ldots ,S_{n_i+1}^{(i)}\bigr )\) conditioned on the event that \(S^{(i)}_{n_i+1}=0\). Equivalently, the Gaussian bridge \(\bigl (\widetilde{S}^{(i)}_0,\widetilde{S}^{(i)}_1,\ldots ,\widetilde{S}^{(i)}_{n_i+1}\bigr )\) can be constructed as

for \(i=1,\ldots ,b\). Note that \(\widetilde{S}^{(i)}_{n_i+1}=0\). Define the convex hulls of the Gaussian bridges by

Theorem 3.15

Let \(0\le j<d\le n\) be given and let \(\widetilde{C}^{(1)}_{n_1},\ldots ,\widetilde{C}^{(b)}_{n_b}\) be as above. Then, we have

For a single convex hull (\(b=1\)), the expected number of j-faces of \(C^{(1)}_n\) and \(\widetilde{C}^{(1)}_{n}\) is already known (in a more general case), see [19, Thms. 1.2 and 5.1], and given by

This formula is a special case of Theorems 3.14 and 3.15 with \(b=1\) and \(n_1\) replaced by n.
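In the planar case \(d=2\), \(j=0\), \(b=1\), the expected number of vertices of the convex hull of \(S_0,\ldots ,S_n\) is classically \(2(1+1/2+\cdots +1/n)\); we take it as an assumption here that the formula above reduces to this value. The following Python sketch compares it with a Monte Carlo estimate. The hull routine is a standard monotone chain algorithm, and all names, sample sizes, and the seed are illustrative choices.

```python
import random


def convex_hull_vertex_count(points):
    """Number of vertices of the convex hull of planar points (monotone chain)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return len(pts)

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    def half_hull(seq):
        hull = []
        for p in seq:
            # Drop points that do not make a strict left turn.
            while len(hull) >= 2 and cross(hull[-2], hull[-1], p) <= 0:
                hull.pop()
            hull.append(p)
        return hull

    lower = half_hull(pts)
    upper = half_hull(reversed(pts))
    # Both chains contain the two extreme points, so subtract them once.
    return len(lower) + len(upper) - 2


def gaussian_walk(n, rng):
    """Positions S_0 = 0, S_1, ..., S_n of a planar walk with standard Gaussian steps."""
    x = y = 0.0
    path = [(0.0, 0.0)]
    for _ in range(n):
        x += rng.gauss(0.0, 1.0)
        y += rng.gauss(0.0, 1.0)
        path.append((x, y))
    return path


def mean_vertex_count(n, samples, seed=0):
    """Monte Carlo estimate of the expected number of hull vertices."""
    rng = random.Random(seed)
    total = sum(convex_hull_vertex_count(gaussian_walk(n, rng))
                for _ in range(samples))
    return total / samples


def harmonic_prediction(n):
    """Classical planar value 2 * (1 + 1/2 + ... + 1/n)."""
    return 2 * sum(1.0 / k for k in range(1, n + 1))


if __name__ == "__main__":
    for n in (2, 5, 10):
        print(n, mean_vertex_count(n, 4000), harmonic_prediction(n))
```

For \(n=2\) the hull is almost surely a triangle, which matches \(2(1+1/2)=3\).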

3.5 Method of Proof of Theorems 3.14 and 3.15

The main ingredient in the proofs of Theorems 3.14 and 3.15 is the following lemma, which is due to Affentranger and Schneider [1, (5)].

Lemma 3.16

Let \(P\subset {\mathbb {R}}^n\) be a convex polytope with non-empty interior and \(G\in {\mathbb {R}}^{d\times n}\) be a Gaussian random matrix, that is, its entries are independent and standard normal random variables. Then, for all \(0\le j<d\le n\) we have

where the Grassmann angles \(\gamma _d\) were defined in (2.7).

In fact, Affentranger and Schneider [1] proved this formula for a random orthogonal projection of P, while the fact that Gaussian matrices yield the same result follows from a result of Baryshnikov and Vitale [7]. Due to the relation between Grassmann angles and conic intrinsic volumes stated in (2.8), the lemma can be written as

Using (2.4), it also follows that under the conditions of Lemma 3.16 we have

Now take a Gaussian matrix \(G_B=(\xi _{i,j})\in {\mathbb {R}}^{d\times n}\), where \(\xi _{i,j}\), \(i\in \{1,\ldots ,d\}\), \(j\in \{1,\ldots ,n\}\), are independent standard Gaussian random variables. Then, we claim that \(G_BK^B\) has the same distribution as the Minkowski sum \(C^{(1)}_{n_1}+\cdots +C^{(b)}_{n_b}\). Similarly, for a Gaussian matrix \(G_A\in {\mathbb {R}}^{d\times (n+b)}\), we claim that \(G_AK^A\) has the same distribution as \(\widetilde{C}^{(1)}_{n_1}+\cdots +\widetilde{C}^{(b)}_{n_b}\).

In order to see this, consider the case of a single Schläfli orthoscheme \(K_{n_1}^B\) first. Let \(G_B^{(1)}=(\xi _{i,j})\in {\mathbb {R}}^{d\times n_1}\) be a Gaussian matrix. We know that the Schläfli orthoscheme \(K_{n_1}^B\) is the simplex given as the convex hull of the \(n_1\)-dimensional vectors

Thus, we obtain

where \({\mathop {=}\limits ^{d}}\) denotes the distributional equality of two random elements.
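The mechanism behind this identity is purely linear and can be illustrated for a fixed matrix. The following Python sketch assumes that the vertices of \(K_{n_1}^B\) are the vectors \((1,\ldots ,1,0,\ldots ,0)\) with i ones, \(i=0,\ldots ,n_1\) (the convention suggested by the description of \(K_n^B\) as a simplex with \(n+1\) vertices). Under this assumption, the image of the i-th vertex is the sum of the first i columns of the matrix, that is, the i-th position of the walk whose steps are the columns. The function names are illustrative.

```python
def orthoscheme_b_vertices(n):
    """Assumed vertices of K_n^B: i ones followed by n - i zeros, i = 0..n."""
    return [[1] * i + [0] * (n - i) for i in range(n + 1)]


def mat_vec(matrix, vector):
    """Product of a matrix (list of rows) with a vector."""
    return [sum(a * v for a, v in zip(row, vector)) for row in matrix]


def project_vertices(matrix):
    """Images of the vertices of K_n^B under the matrix, n = number of columns."""
    n = len(matrix[0])
    return [mat_vec(matrix, v) for v in orthoscheme_b_vertices(n)]


def partial_sums_of_columns(matrix):
    """Walk S_0 = 0, S_1, ..., S_n whose i-th step is the i-th column."""
    d, n = len(matrix), len(matrix[0])
    walk = [[0] * d]
    for col in range(n):
        previous = walk[-1]
        walk.append([previous[r] + matrix[r][col] for r in range(d)])
    return walk


if __name__ == "__main__":
    g = [[1, -2, 3],
         [0, 5, -1]]
    print(project_vertices(g))
    print(partial_sums_of_columns(g))
```

Since the convex hull commutes with linear maps, the image of the simplex is the convex hull of these partial sums.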

Similarly, we consider a Gaussian matrix \(G_A^{(1)}\in {\mathbb {R}}^{d\times (n_1+1)}\) for the Schläfli orthoscheme \(K_{n_1}^A\). We know that \(K_{n_1}^A\) is the convex hull of the \((n_1+1)\)-dimensional vectors \(P_0=(0,0,\ldots ,0)\) and

Thus, in the same way we get

The product case follows from the following observation. Let \(G_B\in {\mathbb {R}}^{d\times n}\) be a Gaussian matrix and \(n_1+\cdots +n_b=n\). Then, we can represent \(G_B\) as the row of b independent matrices \(G_B=\bigl (G_B^{(1)},\ldots ,G_B^{(b)}\bigr )\), where \(G_B^{(i)}\in {\mathbb {R}}^{d\times n_i}\) is itself a Gaussian matrix for \(i=1,\ldots ,b\). We can represent each vector \(x\in {\mathbb {R}}^n\) as the column of b vectors, i.e., \(x=(x^{(1)},\ldots ,x^{(b)})^\top \), where \(x^{(i)}\in {\mathbb {R}}^{n_i}\) for \(i=1,\ldots ,b\). Then, we easily observe that

It follows that

In the same way we obtain for a Gaussian matrix \(G_A\in {\mathbb {R}}^{d\times (n+b)}\), \(n_1+\cdots +n_b=n\), that

Now, we can finally prove Theorems 3.14 and 3.15.
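The block decomposition used in the product case can be sketched as follows. The Python code splits a matrix into column blocks of widths \(n_1,\ldots ,n_b\), splits a vector accordingly, and checks that the image of the vector is the sum of the images of its parts under the corresponding blocks. The names and the integer test data are illustrative.

```python
def mat_vec(matrix, vector):
    """Product of a matrix (list of rows) with a vector."""
    return [sum(a * v for a, v in zip(row, vector)) for row in matrix]


def split_columns(matrix, sizes):
    """Split a matrix into consecutive column blocks of the given widths."""
    blocks, start = [], 0
    for size in sizes:
        blocks.append([row[start:start + size] for row in matrix])
        start += size
    return blocks


def split_vector(vector, sizes):
    """Split a vector into consecutive parts of the given lengths."""
    parts, start = [], 0
    for size in sizes:
        parts.append(vector[start:start + size])
        start += size
    return parts


def blockwise_image(matrix, vector, sizes):
    """Sum over the blocks of (block matrix) times (matching part of the vector)."""
    total = [0] * len(matrix)
    for block, part in zip(split_columns(matrix, sizes),
                           split_vector(vector, sizes)):
        image = mat_vec(block, part)
        total = [t + y for t, y in zip(total, image)]
    return total


if __name__ == "__main__":
    g = [[1, 2, 3, 4],
         [5, 6, 7, 8]]
    x = [1, 0, 1, 1]
    print(mat_vec(g, x), blockwise_image(g, x, (2, 2)))
```

Applied to all points of a product of polytopes, this gives the Minkowski sum of the images of the factors.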

Proof of Theorem 3.14

Let \(0\le j<d\le n\) and let \(G_B\in {\mathbb {R}}^{d\times n}\) be a Gaussian matrix. As we already observed, \(G_BK^B{\mathop {=}\limits ^{d}}C^{(1)}_{n_1}+\cdots +C^{(b)}_{n_b}\). Thus, (3.6) yields

where we used Theorem 3.5 in the last step.\(\square \)

Proof of Theorem 3.15

Let \(0\le j<d\le n\) and let \(G_A\in {\mathbb {R}}^{d\times (n+b)}\) be a Gaussian matrix. We have already seen that \(G_AK^A{\mathop {=}\limits ^{d}}\widetilde{C}^{(1)}_{n_1}+\cdots +\widetilde{C}^{(b)}_{n_b}\). Although the polytope \(K^A\subset {\mathbb {R}}^{n+b}\) is only n-dimensional, we can still apply Lemma 3.16, and therefore also (3.6), to the ambient linear subspace \({{\,\mathrm{lin}\,}}K^A\) since the Grassmann angles do not depend on the dimension of the ambient linear subspace. Combining this with Theorem 3.5, we obtain

which completes the proof. \(\square \)

3.6 Application to Compressed Sensing

Let us briefly mention an application of Theorems 3.1 and 3.3 to compressed sensing. Donoho and Tanner [11] have considered the following problem. Let \(x=(x_1,\ldots ,x_n)\) be an unknown signal belonging to some polyhedral set \(P\subset {\mathbb {R}}^n\) and let \(G:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^k\) be a Gaussian matrix, where \(k\le n\). We would like to recover the signal x from its image \(y=Gx\). Following Donoho and Tanner [11], we denote the event that such a recovery is uniquely possible by

The recovery is uniquely possible if and only if \((x+\ker G)\cap P=\{x\}\). Assume that \(x\in {{\,\mathrm{relint}\,}}F\) for some j-face \(F\in {\mathcal {F}}_j(P)\) of P. Then, the following equivalence holds:

Using this observation, Donoho and Tanner [11] computed the probability of unique recovery explicitly in the cases when \(P={\mathbb {R}}_+^n\) is the non-negative orthant or \(P=[0,1]^n\) is the unit cube. The equivalence (3.7) was stated by Donoho and Tanner [11, Lems. 2.1 and 5.1] in these special cases, but it easily generalizes to any polyhedral set. We are now going to use Theorems 3.1 and 3.3 to compute the probabilities of unique signal recovery in the case when P is a Weyl chamber or a Schläfli orthoscheme, which corresponds to natural isotonic constraints frequently imposed in statistics.

3.6.1 Weyl Chambers

We consider the following model for a random signal x that belongs to the Weyl chamber \(B^{(n)}\) of type B. Let \(0 < j \le n\) be given together with j positive numbers \(a_1,\ldots , a_j\). Select a random and uniform subset \(\{i_1,\ldots ,i_j\}\subseteq \{1,\ldots ,n\}\) with \(1\le i_1<\cdots <i_j\le n\). Now, define the corresponding j-face \(F^B(i_1,\ldots ,i_j)\) of \(B^{(n)}\) by

and consider the signal \(x=(x_1,\ldots ,x_n)\) given by

Then, by construction, \(F^B(i_1,\ldots ,i_j)\) is random and uniformly distributed on \({\mathcal {F}}_j(B^{(n)})\). Moreover, x belongs to \({{\,\mathrm{relint}\,}}F^B(i_1,\ldots ,i_j)\).

Proposition 3.17

Let \(0 \le j \le k \le n\) and let \(x\in {\mathbb {R}}^n\) be a random signal constructed as above (where we put \(x=0\) if \(j=0\)). If \(G:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^k\) is a Gaussian random matrix, then it holds that

Proof

Using the above equivalence (3.7) and the construction of x, we obtain that

Since \(\ker G\) is rotationally invariant, and thus a uniformly distributed \((n-k)\)-dimensional linear subspace, we conclude that the probability on the right-hand side coincides with \(1-\gamma _k(T_F(B^{(n)}))\), where \(\gamma _k(T_F(B^{(n)}))\) denotes the k-th Grassmann angle of \(T_F(B^{(n)})\). Thus, relation (2.8) yields

where the last equality follows from Theorem 3.3. \(\square \)
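The smallest non-trivial case can be checked by simulation. Take \(n=2\), \(k=1\), \(j=0\), so that \(x=0\) and the tangent cone is the chamber itself, assumed here to be the wedge \(\{x_1\ge x_2\ge 0\}\) of opening angle \(\pi /4\). For \(G=(g_1,g_2)\), the kernel is the line spanned by \((g_2,-g_1)\), whose direction is uniform. By elementary geometry this line meets the wedge outside the origin with probability \((\pi /4)/\pi =1/4\), so recovery should be unique with probability \(3/4\). The following Python sketch estimates this probability; the names, sample size, and seed are illustrative.

```python
import random


def in_wedge(v):
    """True if v lies in the wedge {x_1 >= x_2 >= 0} (assumed form of B^(2))."""
    return v[0] >= v[1] >= 0


def recovery_is_unique(g):
    """Check that ker G meets the wedge only at the origin, for G = (g_1, g_2)."""
    direction = (g[1], -g[0])   # spans ker G
    opposite = (-g[1], g[0])    # the other ray of the same line
    return not (in_wedge(direction) or in_wedge(opposite))


def estimate_recovery_probability(samples, seed=0):
    """Monte Carlo estimate of the probability of unique recovery of x = 0."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        g = (rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))
        hits += recovery_is_unique(g)
    return hits / samples


if __name__ == "__main__":
    print(estimate_recovery_probability(40000))
```

The value \(3/4\) also agrees with \(1-2\upsilon _2(B^{(2)})\) for \(\upsilon _2(B^{(2)})=1/8\), the solid angle of the wedge.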

In the A-case the construction is analogous. Let \(0 < j \le n\) and let \(a_1,\ldots ,a_{j}\) be positive numbers. Select a random and uniform subset \(\{i_1,\ldots ,i_{j-1}\}\subseteq \{1,\ldots ,n-1\}\) with \(1\le i_1<\cdots <i_{j-1}\le n-1\), put \(i_j:=n\), and define the corresponding j-face \(F^A(i_1,\ldots ,i_{j-1})\) of \(A^{(n)}\) by

which is uniformly distributed in \({\mathcal {F}}_j(A^{(n)})\). We define the random signal \(x=(x_1,\ldots ,x_n)\) by \(x_m=\sum _{l:i_l\ge m}a_l\) for \(m=1,\ldots ,n\). Then, x belongs to the relative interior of \(F^A(i_1,\ldots ,i_{j-1})\). This yields the following proposition, which is analogous to the B-case and can be proven in a similar way.

Proposition 3.18

Let \(0<j\le k\le n\) and \(x\in {\mathbb {R}}^n\) be a random signal constructed as above. If \(G:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^k\) is a Gaussian random matrix, then it holds that
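The construction of the random signal in the A-case can be sketched in Python as follows. The code draws the indices \(i_1<\cdots <i_{j-1}\) uniformly from \(\{1,\ldots ,n-1\}\), appends \(i_j:=n\), and evaluates \(x_m=\sum _{l:i_l\ge m}a_l\). The resulting vector is non-increasing, with strict decreases exactly after the positions \(i_1,\ldots ,i_{j-1}\). Function names and the example weights are illustrative.

```python
import random


def random_face_indices(n, j, rng):
    """Uniform random subset {i_1 < ... < i_{j-1}} of {1, ..., n-1}."""
    return sorted(rng.sample(range(1, n), j - 1))


def signal_in_face(n, inner_indices, weights):
    """x_m = sum of a_l over all l with i_l >= m, where i_j := n is appended."""
    indices = list(inner_indices) + [n]
    if len(indices) != len(weights):
        raise ValueError("need exactly one positive weight per index")
    return [sum(a for i, a in zip(indices, weights) if i >= m)
            for m in range(1, n + 1)]


def strict_descents(x):
    """Positions m (1-based) with x_m > x_{m+1}."""
    return [m for m in range(1, len(x)) if x[m - 1] > x[m]]


if __name__ == "__main__":
    rng = random.Random(0)
    n, j = 6, 3
    indices = random_face_indices(n, j, rng)
    x = signal_in_face(n, indices, [1.0, 2.0, 0.5])
    print(indices, x, strict_descents(x))
```

For example, \(n=5\), \((i_1,i_2)=(2,3)\), and \((a_1,a_2,a_3)=(1,2,0.5)\) give \(x=(3.5,3.5,2.5,0.5,0.5)\).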

3.6.2 Schläfli Orthoschemes

Again, we start with the B-case. Let \(0\le j \le n\) be given. Take \(j+1\) positive numbers \(a_0,a_1,\ldots , a_j\) satisfying \(a_0+\cdots +a_j=1\) and select a random and uniform subset \(\{i_0,\ldots ,i_j\}\subseteq \{0,\ldots ,n\}\) with \(0\le i_0<\cdots <i_j\le n\). Now, define the corresponding j-face \(S^B(i_0,\ldots ,i_j)\) of \(K_n^B\) by

Consider the signal \(x=(x_1,\ldots ,x_n)\) given by

By construction, we have \(x\in {{\,\mathrm{relint}\,}}S^B(i_0,\ldots ,i_j)\) and \(S^B(i_0,\ldots ,i_j)\) is random and uniformly distributed on \({\mathcal {F}}_j(K^B_n)\). Then, the B-case of Theorem 3.1 yields the following proposition.

Proposition 3.19

Let \(0\le j\le k\le n\) and let \(x\in {\mathbb {R}}^n\) be a random signal constructed as above. If \(G:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^k\) is a Gaussian random matrix, then it holds that

In the A-case, recall the definition of the Schläfli orthoscheme of type A:

Take \(0\le j\le n\) and positive numbers \(a_1,\ldots ,a_{j+1}\) satisfying \(a_1+\cdots +a_{j+1}=1\). Also, select a random and uniform subset \(\{i_1,\ldots ,i_{j+1}\}\subseteq \{1,\ldots ,n+1\}\) such that \(1\le i_1<\cdots <i_{j+1}\le n+1\). If \(i_{j+1}=n+1\), we define the corresponding j-face of \(K_n^A\) to be

(which corresponds to the type of face described in (3.2)), while for \(i_{j+1}\le n\) we define

(which corresponds to the type of face described in (3.3)). Due to the one-to-one correspondence between the collections of indices \(1\le i_1<\cdots < i_{j+1}\le n+1\) and the j-faces of \(K_n^A\), the face \(S^A(i_1,\ldots ,i_{j+1})\) is uniformly distributed on the set \({\mathcal {F}}_j(K_n^A)\) of all j-faces. We consider the random signal \(x=(x_1,\ldots ,x_{n+1})\) given by

where c is chosen to ensure that \(x_1+\cdots +x_{n+1} = 0\). It is easily checked that the signal x belongs to the relative interior of \(S^A(i_1,\ldots ,i_{j+1})\). Then, the A-case of Theorem 3.1 yields the following proposition.

Proposition 3.20

Let \(0\le j\le k\le n\) and let \(x\in {\mathbb {R}}^{n+1}\) be a random signal constructed as above. If \(G:{\mathbb {R}}^{n+1}\rightarrow {\mathbb {R}}^k\) is a Gaussian random matrix, then it holds that

4 Proofs: Angle Sums of Weyl Chambers and Schläfli Orthoschemes

In this section, we present the proofs of Propositions 3.8, 3.7, and Theorem 3.5. Most of the proofs rely on explicit expressions for the conic intrinsic volumes of the Weyl chambers. Recall that the Weyl chambers \(A^{(n)}\) and \(B^{(n)}\) are defined by

for \(n\in {\mathbb {N}}\), and we put \(B^{(0)}:=\{0\}\). The intrinsic volumes of the Weyl chambers are known explicitly and given by

for \(k=0,\ldots ,n\); see, e.g., [20, Thm. 4.2] or [14, Thm. 1.1]. The B[n, k]’s denote the B-analogues of the Stirling numbers of the first kind as defined in (2.11).
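For small n these values are easy to tabulate. The following Python sketch assumes that (4.2) reads \(\upsilon _k(A^{(n)})=\frac{1}{n!}\,[t^k]\,t(t+1)\cdots (t+n-1)\) and \(\upsilon _k(B^{(n)})=\frac{1}{2^nn!}\,[t^k]\,(t+1)(t+3)\cdots (t+2n-1)\), which is the form suggested by the cited results and by the products appearing in Sect. 4.1. The function names are illustrative.

```python
from fractions import Fraction
from math import factorial


def expand_product(shifts):
    """Coefficients (lowest degree first) of the product of (t + c), c in shifts."""
    coeffs = [1]
    for c in shifts:
        new = [0] * (len(coeffs) + 1)
        for k, a in enumerate(coeffs):
            new[k] += c * a      # contribution of the constant c
            new[k + 1] += a      # contribution of t
        coeffs = new
    return coeffs


def intrinsic_volumes_a(n):
    """Assumed conic intrinsic volumes of A^(n), k = 0..n."""
    coeffs = expand_product(range(n))            # t (t+1) ... (t+n-1)
    return [Fraction(c, factorial(n)) for c in coeffs]


def intrinsic_volumes_b(n):
    """Assumed conic intrinsic volumes of B^(n), k = 0..n."""
    coeffs = expand_product(range(1, 2 * n, 2))  # (t+1)(t+3) ... (t+2n-1)
    return [Fraction(c, 2 ** n * factorial(n)) for c in coeffs]


if __name__ == "__main__":
    print(intrinsic_volumes_a(2))
    print(intrinsic_volumes_b(2))
```

For \(n=2\) this gives \((0,1/2,1/2)\) for the half-plane \(\{x_1\ge x_2\}\) and \((3/8,1/2,1/8)\) for the wedge \(\{x_1\ge x_2\ge 0\}\) of opening angle \(\pi /4\), in agreement with a direct computation of the angles.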

4.1 Proof of Proposition 3.8

Let \((j,b)\in {\mathbb {N}}_0^2\setminus \{(0,0)\}\). Recall that for \(l=(l_1,\ldots ,l_{j+b})\) such that \(l_1,\ldots ,l_j\in {\mathbb {N}}\), \(l_{j+1},\ldots ,l_{j+b}\in {\mathbb {N}}_0\), and \(l_1+\cdots +l_{j+b}=n\), we define

Our goal is to show that for all \(k\in \{0,\ldots ,n\}\),

Proof of Proposition 3.8

Let \((j,b)\in {\mathbb {N}}_0^2\setminus \{(0,0)\}\) and \(k\in \{0,\ldots ,n\}\) be given. By the product formula for conic intrinsic volumes (2.5), the generating polynomial of the intrinsic volumes of \(T_l\) can be written as

We can consider each sum on the right-hand side separately. Using the representations of Stirling numbers of the first kind and their B-analogues from (2.9) and (2.11), as well as the intrinsic volumes of the Weyl chambers stated in (4.2), we obtain

and

Note that for \(i=0\) we put \((t+1)(t+3)\cdot \ldots \cdot (t+2i-1):=1\) by convention, which is consistent with \(\upsilon _0(\{0\})=1\). This yields the following formula:

where \(t^{{\overline{r}}}:=t(t+1)\cdot \ldots \cdot (t+r-1)\), \(r\in {\mathbb {N}}\), denotes the rising factorial. Thus, the k-th conic intrinsic volume of \(T_l\) is the coefficient of \(t^k\) in the above polynomial \(P_{T_l}(t)\). Note that this already implies \(\upsilon _k(T_l)=0\) for \(k<j\). Thus, the left-hand side of (4.3) is 0, which coincides with the right-hand side, since for \(k<j\) we have

Therefore, we only need to consider the case \(k\ge j\). Let \(P^{(n)}_{l_1,\ldots ,l_{j+b}}(m)\), where \(m=0,\ldots ,n\), be the coefficients of the polynomial

Using the notation just introduced, we obtain

Now, let \([t^N]f(t):=f^{(N)}(0)/N!\) be the coefficient of \(t^N\) in the Taylor expansion of a function f around 0. Define

Then, we can observe that

Thus, to prove the proposition, it suffices to show that for all \((j,b)\in {\mathbb {N}}_0^2\setminus \{(0,0)\}\) and \(k\in \{j,\ldots ,n\}\) we have

To this end, we can introduce a new variable x and write, by expanding the product,

Using (2.9) and the exponential generating function in two variables for the Stirling numbers of the first kind stated in (2.12), we obtain

From (2.11) and (2.13), we similarly get

Thus, we have

where we set \(c=c(x)=\log (1-x)\). The exponential generating function of the b/2-Stirling numbers stated in (2.18) yields

It follows that

Furthermore, using (2.17) we obtain

Taking all this into consideration, we obtain

which coincides with (4.5) and therefore completes the proof. \(\square \)
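The first step of the proof, the product formula for the generating polynomial, can be illustrated numerically. The following Python sketch assumes that \(T_l\) is the product \(A^{(l_1)}\times \cdots \times A^{(l_j)}\times B^{(l_{j+1})}\times \cdots \times B^{(l_{j+b})}\) and that the generating polynomials of the factors are \(t^{\overline{l_i}}/l_i!\) and \((t+1)(t+3)\cdots (t+2l_i-1)/(2^{l_i}l_i!)\), as the rising factorials and odd products above suggest. It multiplies these polynomials and reads off the coefficients. All names are illustrative.

```python
from fractions import Fraction
from math import factorial


def poly_mul(p, q):
    """Product of two polynomials given by coefficient lists (lowest degree first)."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for k, c in enumerate(q):
            out[i + k] += a * c
    return out


def chamber_polynomial(size, kind):
    """Assumed generating polynomial of A^(size) (kind 'A') or B^(size) (kind 'B')."""
    if kind == "A":
        shifts, scale = range(size), factorial(size)
    else:
        shifts, scale = range(1, 2 * size, 2), 2 ** size * factorial(size)
    poly = [Fraction(1, scale)]
    for c in shifts:
        poly = poly_mul(poly, [Fraction(c), Fraction(1)])  # multiply by (t + c)
    return poly


def product_cone_volumes(a_sizes, b_sizes):
    """Coefficients of the generating polynomial of the product cone."""
    poly = [Fraction(1)]
    for size in a_sizes:
        poly = poly_mul(poly, chamber_polynomial(size, "A"))
    for size in b_sizes:
        poly = poly_mul(poly, chamber_polynomial(size, "B"))
    return poly


if __name__ == "__main__":
    # Two A-type factors of size 1 and one B-type factor of size 1:
    # a half-space in R^3, so the volumes are (0, 0, 1/2, 1/2).
    print(product_cone_volumes((1, 1), (1,)))
```

In the example \(j=2\), and the coefficients of \(t^0\) and \(t^1\) vanish, in line with the observation that the k-th intrinsic volume of \(T_l\) is zero for \(k<j\).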

4.2 Proof of Theorem 3.5

Let \(b\in {\mathbb {N}}\). Recall that \(K^B=K_{n_1}^B\times \cdots \times K_{n_b}^B\) and \(K^A=K_{n_1}^A\times \cdots \times K_{n_b}^A\) for \(n_1,\ldots ,n_b\in {\mathbb {N}}_0\) such that \(n:=n_1+\cdots +n_b\), where

for \(d\in {\mathbb {N}}\), denote the Schläfli orthoschemes of types B and A in \({\mathbb {R}}^d\), respectively \({\mathbb {R}}^{d+1}\). For convenience, we set \(K_0^B=K_0^A=\{0\}\). We want to show that

holds for all \(j\in \{0,\ldots ,n\}\) and \(k\in \{0,\ldots ,n\}\), where for \(d\in \{0,{1}/{2},1\}\) we define

Proof of Theorem 3.5

We divide the proof into three steps. In the first step we describe the tangent cones \(T_F(K^B)\) in terms of products of Weyl chambers. In Step 2, we derive a formula for the generalized angle sums of the \(T_F(K^B)\)’s and show that the derived formula simplifies to the desired constant \(R_1(k,j,b,(n_1,\ldots ,n_b))\). In the third step, following the arguments of the B-case, we write the conic intrinsic volumes of the tangent cones \(T_F(K^A)\) in terms of products of Weyl chambers, counted with certain multiplicity, and show that the formula for the generalized angle sums in the B-case also holds in the A-case.

Step 1. Let \(j\in \{0,\ldots ,n\}\) and \(k\in \{0,\ldots ,n\}\) be given. It is easy to check that the constant \(R_d(k,j,b,(n_1,\ldots ,n_b))\) vanishes for \(k<j\), so that we need to consider the case \(k\ge j\) only. For each j-face F of \(K^B\), there are numbers \(j_1,\ldots ,j_b\in {\mathbb {N}}_0\) satisfying \(j_1+\cdots +j_b=j\), such that

for some \(F_1\in {\mathcal {F}}_{j_1}(K_{n_1}^B),\ldots , F_b\in {\mathcal {F}}_{j_b}(K_{n_b}^B)\). Thus, the tangent cone of \(K^B\) at F is given by the following product formula:

In order to see this, observe that \({{\,\mathrm{relint}\,}}F={{\,\mathrm{relint}\,}}F_1 \times \cdots \times {{\,\mathrm{relint}\,}}F_b\). Take \(x=(x^{(1)},\ldots ,x^{(b)})\in {{\,\mathrm{relint}\,}}F\subset {\mathbb {R}}^n\), where \(x^{(i)}\in {{\,\mathrm{relint}\,}}F_i\subset {\mathbb {R}}^{n_i}\) for \(i=1,\ldots ,b\). Then, we have

Applying Lemma 3.9 to the individual terms in the product, we observe that the collection

where \(F_1\in {\mathcal {F}}_{j_1}(K_{n_1}^B),\ldots , F_b\in {\mathcal {F}}_{j_b}(K_{n_b}^B)\), coincides (up to isometries) with the collection of cones

where \(i_1^{(1)},\ldots ,i_{j_1}^{(1)},\ldots ,i_1^{(b)},\ldots ,i_{j_b}^{(b)}\in {\mathbb {N}}\) and \(i_0^{(1)},i_{j_1+1}^{(1)},\ldots ,i_0^{(b)},i_{j_b+1}^{(b)}\in {\mathbb {N}}_0\) are such that

This yields

Step 2. Similarly to the proof of Proposition 3.8, we observe that \(\upsilon _k(G_i)\) is the coefficient of \(t^k\) in the polynomial

Following the same arguments as in the proof of Proposition 3.8, we obtain that

We introduce new variables \(x_1,\ldots ,x_b\) and expand the product to write the right-hand side as follows:

Using the formulas (4.6) and (4.7) we arrive at

By introducing a new variable u and expanding the product again, we arrive at

which completes the proof of the B-case.

Step 3. In the A-case, instead of considering \(K^A\), we look at \({\widetilde{K}}^A=\widetilde{K}^A_{n_1}\times \cdots \times {\widetilde{K}}^A_{n_b}\) for \(n_1,\ldots ,n_b\in {\mathbb {N}}_0\) such that \(n:=n_1+\cdots +n_b\), where

denotes the unbounded polyhedral set related to \(K_{d}^A\). Note that \({\widetilde{K}}_0^A={\mathbb {R}}\).

Following the arguments of Step 1, we can write the tangent cones \(T_F(K^A)\) (and also \(T_F({\widetilde{K}}^A)\)) as products of tangent cones at their respective faces. Using \(\upsilon _k(K_n^A)=\upsilon _{k+1}(K_n^A\oplus L_{n+1})\) and (3.1), we obtain

Applying Lemma 3.10 to each individual tangent cone in the product, we see that the collection of tangent cones \(T_{F_1}(\widetilde{K}_{n_1}^A)\times \cdots \times T_{F_b}(\widetilde{K}_{n_b}^A)\), where \(F_1\in {\mathcal {F}}_{j_1+1}(\widetilde{K}_{n_1}^A),\ldots ,F_b\in {\mathcal {F}}_{j_b+1}(\widetilde{K}_{n_b}^A)\), coincides (up to isometry) with the collection

where \(i_1^{(1)},\ldots ,i_{j_1+1}^{(1)},\ldots ,i_1^{(b)},\ldots ,i_{j_b+1}^{(b)}\in {\mathbb {N}}\) are such that

and each cone of the above collection is taken with multiplicity \(i_1^{(1)}\cdot \ldots \cdot i_1^{(b)}\). Thus, the formula in (4.11) can be rewritten as

for \(k\in \{b,\ldots ,n+b\}\) and \(j\in \{b,\ldots ,n+b\}\). The conic intrinsic volume on the right-hand side of (4.12) is given as the coefficient of \(t^{k+b}\) in the following polynomial:

Thus, the term in (4.12) simplifies to

which coincides with (4.10) and therefore completes the proof. Note that we used (4.6) and

which follows from the binomial series. \(\square \)

4.3 Proof of Proposition 3.7

Our goal is to show that

holds for \(j\in \{0,\ldots ,n\}\) and \(k\in \{0,\ldots ,n\}\).

Proof of Proposition 3.7

First, we show the formula for \(K^B\). In (4.8) we saw that

holds true for all \(j,k\in \{0,\ldots ,n\}\), where

Note that in the second sum on the right-hand side the indices satisfy \(i_0^{(l)},i_{j_l+1}^{(l)}\in {\mathbb {N}}_0\) and \(i_1^{(l)},\ldots ,i_{j_l}^{(l)}\in {\mathbb {N}}\) for all \(l\in \{1,\ldots ,b\}\). Thus, we obtain

The last equation follows from simple renumbering. Applying Proposition 3.8 with b replaced by 2b yields

where we used the well-known fact that the number of compositions of j into b non-negative integers (which may be 0) is given by \(\left( {\begin{array}{c}j+b-1\\ b-1\end{array}}\right) \). The formula for \(K^A\) follows from

which is due to Theorem 3.5. This completes the proof. \(\square \)
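The counting fact used in the proof, namely that j can be written as an ordered sum of b non-negative integers in \(\binom{j+b-1}{b-1}\) ways, is easy to confirm by enumeration. The following Python sketch does this for small values; the generator name is illustrative.

```python
from math import comb


def weak_compositions(total, parts):
    """Yield all tuples of `parts` non-negative integers summing to `total`."""
    if parts == 1:
        yield (total,)
        return
    for first in range(total + 1):
        for rest in weak_compositions(total - first, parts - 1):
            yield (first,) + rest


if __name__ == "__main__":
    for j in range(5):
        for b in range(1, 4):
            count = sum(1 for _ in weak_compositions(j, b))
            print(j, b, count, comb(j + b - 1, b - 1))
```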

References

Affentranger, F., Schneider, R.: Random projections of regular simplices. Discret. Comput. Geom. 7(3), 219–226 (1992)

Amelunxen, D., Lotz, M.: Intrinsic volumes of polyhedral cones: a combinatorial perspective. Discret. Comput. Geom. 58(2), 371–409 (2017)

Amelunxen, D., Lotz, M., McCoy, M.B., Tropp, J.A.: Living on the edge: phase transitions in convex programs with random data. Inf. Inference 3(3), 224–294 (2014)

Bagno, E., Biagioli, R., Garber, D.: Some identities involving second kind Stirling numbers of types \(B\) and \(D\). Electron. J. Combin. 26(3), # 3.9 (2019)

Bagno, E., Garber, D.: Signed partitions—a balls into urns approach (2019). arXiv:1903.02877

Bala, P.: A \(3\) parameter family of generalized Stirling numbers (2015). https://oeis.org/A143395/a143395.pdf

Baryshnikov, Y.M., Vitale, R.A.: Regular simplices and Gaussian samples. Discret. Comput. Geom. 11(2), 141–147 (1994)

Broder, A.Z.: The \(r\)-Stirling numbers. Discret. Math. 49(3), 241–259 (1984)

Carlitz, L.: Weighted Stirling numbers of the first and second kind—I. Fibonacci Q. 18(2), 147–162 (1980)

Carlitz, L.: Weighted Stirling numbers of the first and second kind—II. Fibonacci Q. 18(3), 242–257 (1980)

Donoho, D.L., Tanner, J.: Counting the faces of randomly-projected hypercubes and orthants, with applications. Discret. Comput. Geom. 43(3), 522–541 (2010)

Gao, F.: The mean of a maximum likelihood estimator associated with the Brownian bridge. Electron. Commun. Probab. 8, 1–5 (2003)

Gao, F., Vitale, R.A.: Intrinsic volumes of the Brownian motion body. Discret. Comput. Geom. 26(1), 41–50 (2001)

Godland, T., Kabluchko, Z.: Conic intrinsic volumes of Weyl chambers (2020). arXiv:2005.06205

Godland, T., Kabluchko, Z.: Angle sums of Schläfli orthoschemes (2020). arXiv:2007.02293 (extended version of the present paper)

Grünbaum, B.: Grassmann angles of convex polytopes. Acta Math. 121, 293–302 (1968)

Hug, D., Schneider, R.: Random conical tessellations. Discret. Comput. Geom. 56(2), 395–426 (2016)

Humphreys, J.E.: Reflection Groups and Coxeter Groups. Cambridge Studies in Advanced Mathematics, vol. 29. Cambridge University Press, Cambridge (1990)

Kabluchko, Z., Vysotsky, V., Zaporozhets, D.: Convex hulls of random walks: expected number of faces and face probabilities. Adv. Math. 320, 595–629 (2017)

Kabluchko, Z., Vysotsky, V., Zaporozhets, D.: Convex hulls of random walks, hyperplane arrangements, and Weyl chambers. Geom. Funct. Anal. 27(4), 880–918 (2017)

Lang, W.: On sums of powers of arithmetic progressions, and generalized Stirling, Eulerian and Bernoulli numbers (2017). arXiv:1707.04451

Pitman, J.: Combinatorial Stochastic Processes. Lecture Notes in Mathematics, vol. 1875. Springer, Berlin (2006)

Schneider, R., Weil, W.: Stochastic and Integral Geometry. Probability and its Applications. Springer, Berlin (2008)

Sloane, N.J.A. (ed.): The On-Line Encyclopedia of Integer Sequences. https://oeis.org

Suter, R.: Two analogues of a classical sequence. J. Integer Seq. 3(1), # 00.1.8 (2000)

Acknowledgements

Supported by the German Research Foundation under Germany’s Excellence Strategy EXC 2044-390685587, Mathematics Münster: Dynamics–Geometry–Structure, and by the DFG priority program SPP 2265 Random Geometric Systems.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Editor in Charge: Kenneth Clarkson

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Godland, T., Kabluchko, Z. Angle Sums of Schläfli Orthoschemes. Discrete Comput Geom 68, 125–164 (2022). https://doi.org/10.1007/s00454-021-00326-z

DOI: https://doi.org/10.1007/s00454-021-00326-z

Keywords

- Schläfli orthoschemes

- Weyl chambers

- Polytopes

- Polyhedral cones

- Solid angles

- Conic intrinsic volumes

- Stirling numbers

- Random walks