Abstract

This paper concerns test matrices for numerical linear algebra using an error-free transformation of floating-point arithmetic. For specified eigenvalues given by a user, we propose methods of generating a matrix whose eigenvalues are exactly known based on, for example, Schur or Jordan normal form and a block diagonal form. It is also possible to produce a real matrix with specified complex eigenvalues. Such test matrices with exactly known eigenvalues are useful for numerical algorithms in checking the accuracy of computed results. In particular, exact errors of eigenvalues can be monitored. To generate test matrices, we first propose an error-free transformation for the product of three matrices Y SX. We approximate S by \({S^{\prime }}\) to compute \({YS^{\prime }X}\) without a rounding error. Next, the error-free transformation is applied to the generation of test matrices with exactly known eigenvalues. Note that the exactly known eigenvalues of the constructed matrix may differ from the anticipated given eigenvalues. Finally, numerical examples are introduced in checking the accuracy of numerical computations for symmetric and unsymmetric eigenvalue problems.

Similar content being viewed by others

1 Introduction

This paper concerns test matrices for numerical algorithms. If exact eigenvalues are known in advance, then it is useful for checking accuracy and stability of numerical methods for problems in numerical linear algebra. There are several matrices whose exact eigenvalues are known, for example, Circular, Clement, Frank, and tridiagonal Toeplitz matrices. Test matrices are well summarized in [4, Chapter 28]. This paper aims to generate a matrix with exactly known eigenvalues based on some standard forms, for example, the Schur or the Jordan normal forms, or a block diagonal form. Let \(X \in \mathbb {R}^{n \times n}\) be a non-singular matrix. For a matrix \(S \in \mathbb {R}^{n \times n}\), we consider a form:

If (1) is the Schur normal form, X is an orthogonal matrix, and S is an upper triangular matrix. For the Jordan normal form, \(S \in \mathbb {R}^{n \times n}\) is a matrix whose diagonal blocks are Jordan matrices. It indicates that S is an upper bidiagonal matrix and the sub-diagonal elements si,i+ 1 are either 1 or 0. For both normal forms, the diagonal elements sii (1 ≤ i ≤ n) are eigenvalues of A.

Let \(\mathbb {F}\) be a set of floating-point numbers as defined in IEEE 754 [5]. If we use floating-point numbers and floating-point arithmetic, then there are following problems to get a matrix with exactly known eigenvalues using (1).

- Problem 1:

-

Even if all elements in a non-singular matrix X can be represented by floating-point numbers (\(X \in \mathbb {F}^{n \times n}\)), elements in X− 1 may not be represented by floating-point numbers (\(X^{-1} \not \in \mathbb {F}^{n \times n}\)).

- Problem 2:

-

Even if \(X, S, X^{-1} \in \mathbb {F}^{n \times n}\), \(X^{-1} S X \in \mathbb {F}^{n \times n}\) is not always satisfied.

We first prepare a diagonal matrix S and a non-singular matrix X, next obtain the inverse matrix X− 1 using exact computations such as symbolic or rational arithmetic. Finally, we compute X− 1SX using exact computations, and the result X− 1SX is rounded to a floating-point matrix A. One may think sii (1 ≤ i ≤ n) are nearly eigenvalues of A, and these are useful as numerical tests. However, the Bauer-Fike theorem [2] states

where λ and μ are eigenvalues of A and A + E = X− 1SX, respectively. Therefore, sii can be far from the exact eigenvalues of A even if A is a floating-point matrix nearest to X− 1SX. In addition, the cost of symbolic or rational arithmetic is much expensive compared to that of numerical computations.

We propose methods that produce a matrix with specified eigenvalues on the basis of an error-free transformation of matrix multiplication. For a matrix \(S \in \mathbb {F}^{n \times n}\) given by a user, we approximate S by \(S^{\prime } \in \mathbb {F}^{n \times n}\), and obtain \(A:=X^{-1} S^{\prime } X \in \mathbb {F}^{n \times n}\) using the proposed method. We prove that no rounding error occurs in evaluating a product of three matrices \(X^{-1} S^{\prime } X\) using floating-point arithmetic. Hence, the exact eigenvalue of A can be known from the matrix \(S^{\prime }\).

This paper is organized as follows: In Section 2, basic theorems for floating-point numbers are introduced. In Section 3, we propose an error-free transformation for a product of three floating-point matrices. In Section 4, several methods for generating test matrices with exactly known eigenvalues are proposed based on the theory in Section 3, and we introduce numerical examples in checking the accuracy of numerical computations.

2 Basics of floating-point arithmetic

Notation and basic lemmas on floating-point arithmetic are first introduced. Notations (⋅), ▽(⋅), and △(⋅) indicate a computed result by floating-point arithmetic with rounding to nearest mode (roundTiesToEven), rounding downward mode (roundTowardNegative), and rounding upward mode (roundTowardPositive), respectively. Such rounding modes are defined in IEEE 754 [5]. We omit to write (⋅) for each operation for simplicity of description. For example, if we write ((a + b) + c) for \(a,b,c \in \mathbb {F}\), then it means ((a + b) + c). Assume that neither overflow nor underflow occurs in (⋅) and △(⋅). Let and S be a roundoff unit and the smallest positive number in \(\mathbb {F}\), respectively. A constant is the largest floating-point number in \(\mathbb {F}\). For example, \(\mathtt {u}=2^{-53}, \mathtt {u}_{S}=2^{-1074}\), and = 21024(1 −) for binary64 in IEEE 754. A function (a) for \(a \in \mathbb {R}\), the unit in the first place of the binary representation of a, is defined as

From the definition of the function, we have

We introduce several basic facts of floating-point numbers and floating-point arithmetic for \(a,b \in \mathbb {F}\) and \(x \in \mathbb {R}\):

The definition of the floating-point numbers by IEEE 754 immediately derives (4) and (5). The relation (6) and the proposition (7) are written in [8] and [3], respectively. In addition, the following lemmas are used in our proof.Footnote 1

Lemma 1

For \(\sigma , a \in \mathbb {F}\), if (σ + a) ≥(σ), then \(\mathtt {fl}(\sigma +a) \in 2 \mathtt {u} \cdot \mathtt {ufp}(\sigma ) \mathbb {Z}\). In addition, \(\mathtt {fl}((\sigma +a)-\sigma ) \in 2 \mathtt {u} \cdot \mathtt {ufp}(\sigma ) \mathbb {Z}\) holds true.

Proof

Using (4), (σ + a) ≤((σ + a)) from (8), and the assumption (σ + a) ≥(σ) in turn, we have

Because (9) and \(\sigma \in 2 \mathtt {u} \cdot \mathtt {ufp}(\sigma ) \mathbb {Z}\) from (4), \(\mathtt {fl}((\sigma +a)-\sigma ) \in 2 \mathtt {u} \cdot \mathtt {ufp}(\sigma ) \mathbb {Z}\) is satisfied.

□

Applying Theorem 3.5 in [8] and an induction of binary tree described in [6], we can immediately obtain the following lemma.

Lemma 2

For \(p \in \mathbb {F}^{n}\), assume that \(p_{i} \in \mathtt {u} \sigma \mathbb {Z}\) for all i, where \(\sigma \in \mathbb {F}\) is a power of two. If \(\displaystyle \sum \limits _{i=1}^{n} |p_{i}| \le \sigma \), then no rounding error occurs in the sum by any orders.

We assume that divide and conquer methods by, for example, Strassen [9] and Winograd [11] are not applied for dot product, matrix-vector, and matrix-matrix multiplication.

3 General theory for error-free computations

For \(Y \in \mathbb {F}^{m \times n}, S \in \mathbb {F}^{n \times p}, X \in \mathbb {F}^{p \times q}\), we obtain a matrix \(S^{\prime } \in \mathbb {F}^{n \times p} (S^{\prime } \approx S)\) in order to compute \(YS^{\prime }X\) without a rounding error. This technique is applied to generation of test matrices with exactly known eigenvalues.

We set several constants as follows: We define ϕij for xij≠ 0 and ψij for yij≠ 0 such that

where all ϕij and ψij are powers of two. The constants ϕij and ψij imply the unit in the last non-zero bit of xij and yij, respectively. Exceptionally, we set ϕij = 0 for xij = 0 and ψij = 0 for yij = 0. Next, we set constants β,γ,𝜃,ω as follows:

These imply

for all (i,j) pairs. Note that β,γ,𝜃,ω ≥ 1 and \(\displaystyle \underset {k,j}{\max \limits } \phi _{kj}>0, \underset {i,k}{\max \limits } \psi _{ik}>0\), because both X and Y are non-singular matrices.

If sij = 0 for all (i,j) pairs, then it is trivial that the matrix A is the zero matrix. Hence, we assume \(\displaystyle \max \limits _{i,j} |s_{ij}| > 0\). Let

-

nY: the maximum of the number of non-zero elements in row of Y,

-

nS: the maximum of the number of non-zero elements in row of S,

-

nX: the maximum of the number of non-zero elements in column of X.

We set \(n^{\prime } = {\min \limits } (n_{S}, n_{X})\). We define a constant σ as

where

Then,

is satisfied because all of β,γ,𝜃, and ω are powers of two. It is possible to set α as \(\displaystyle \mathtt {fl}_{\bigtriangleup } (n \cdot n^{\prime } \cdot \max \limits _{i{,j}} |{s}_{ii}|)\) using floating-point arithmetic. A matrix \(S^{\prime }\) is computed by

We introduce several lemmas.

Lemma 3

For \(S \in \mathbb {F}^{n \times n}\), σ in (14), β and γ in (11), and ψ and ω in (12),

and

are satisfied for all (i,j) pairs.

Proof

From (16), the inequality (3), and α in (15), we have

Because \(n_{Y}, n^{\prime }, \beta , \gamma , \theta , \omega \ge 1\), the inequality (18) can be obtained.

For xij = 0 and yij = 0, the inequalities (19) are trivially satisfied. Therefore, we assume xij≠ 0 (ϕij≠ 0) and yij≠ 0 (ψij≠ 0) for the rest of the proof. From (11), (12), and (3), we have

for all (i,j) pairs. Then, using (13),

are satisfied for all (i,j) pairs. Therefore, (19) is proved.

□

Lemma 4

For \(S \in \mathbb {F}^{n \times n}\) and σ in (14),

is satisfied for all (i,j) pairs.

Proof

From β,γ,𝜃,ω ≥ 1, (3), and α≠ 0 from \(\displaystyle \max \limits _{i,j} |s_{ij}| > 0\), we have

Therefore, application of (20) and (21) yields

and using (22), (14), (23), and (16) gives

Therefore,

can be derived.

□

Lemma 5

Assume that \(s^{\prime }_{ij}\) is obtained by (17). Then,

is satisfied.

Proof

Using the definition of σ in (14) gives σ ≥|sij| for all (i,j) pairs. From this and (7), we derive

where |δ|≤⋅(σ + sij) = ⋅(σ) from (6) and Lemma 4. Therefore, from Lemma 3

□

We show that rounding error never occurs in the evaluation of \(Y{S}^{\prime }X\).

Theorem 1

Let \(\sigma \in \mathbb {F}\) be defined as in (14) from the matrices Y,X and S. Assume that \(4 n_{Y} \cdot n^{\prime } \cdot \mathtt {u} \beta \gamma \theta \omega \le 1\). If a matrix \(S^{\prime }\) is obtained by (17), then

Proof

First, we show \(\mathtt {fl}(S^{\prime }X)=S^{\prime }X\). If xij = 0, 1 ≤ i ≤ p for some j (the j-th column vector of X is the zero vector), then

Hence, we assume there exists xij≠ 0 for any j for the rest of the proof. Since \(n_{Y}, n^{\prime }, \beta , \theta , \omega \ge 1\) and \(4 n_{Y} \cdot n^{\prime } \cdot \mathtt {u} \beta \gamma \theta \omega \le 1\) from the assumption, we have

Using Lemma 1 and (10) gives

and they yield

Next, we take an upper bound of \(\displaystyle \left | \sum \limits _{k=1}^{{p}} s_{ik}^{\prime } x_{kj} \right |\) using Lemma 5, Lemma 3, and (24):

Therefore, from (26) and (28), Lemma 2 derives \(\mathtt {fl}({S}^{\prime }X)={S}^{\prime }X\).

Next, we focus on \(YS^{\prime }X\). The (i,j) element of \(YS^{\prime }X\) is

Therefore, we derive

Application of (27), Lemma 3, and \(\displaystyle 1 + 4 n_{Y} \cdot n^{\prime } \cdot \mathtt {u} \beta \gamma \theta \omega \le 2\) obtained from the assumption yields

Hence, from (29) and (30), Lemma 2 verifies \(\mathtt {fl}({Y} (S^{\prime } X)) = {Y} S^{\prime } X\).

□

If we change the definition of \(n^{\prime }\), it is possible to verify \(\mathtt {fl}(({Y} S^{\prime }) X) = {Y} S^{\prime } X\) similarly. Remark that the proposed method has reproducibility,Footnote 2 because there is no rounding error in any order of computations in \(\mathtt {fl}({Y} (S^{\prime } X))\).

4 Test matrices based on specified matrices

In this section, we introduce how to generate a matrix with exactly known eigenvalues. We prepare \(S, X, Y \in \mathbb {F}^{n \times n} \ (Y = X^{-1})\). Assume that exact eigenvalues can be known quickly from the matrix S. For example, S is a diagonal matrix, triangular matrices, and tridiagonal Toeplitz matrix. We use MATLAB R2020a on Windows 10 with Intel(R) Core(TM) i7-8665U in numerical examples in this section.

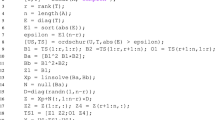

We introduce a basic algorithm for the generation of test matrices using MATLAB-like code on the basis of the discussion in Section 3. Greek alphabets cannot be used for variables on MATLAB. However, we exceptionally accept to use Greek alphabets in the description of algorithms for consistency with the theories in this paper.

In the algorithm, (⋅) at line 11 is not a built-in function in MATLAB. The calculation of (a) for \(a \in \mathbb {F}\) involves only three floating-point operations (see Algorithm 3.6 in [7]). Therefore, the cost for the ufp function is negligible. The function _ at line 6 in Algorithm 1 produces β and γ according to (11). The function _ similarly gives 𝜃 and ω based on (12).

To set multiple eigenvalues can be possible, because if sij = skℓ, then zij = zkℓ. We can also generate a rank deficient matrix with exactly known eigenvalues. However, even if sij≠skℓ, zij = zkℓ may be satisfied. It happens for relatively small |sij| compared to \(\displaystyle \max \limits _{i,j} |s_{ij}|\). If σ ≥|sij|, then zij = 0. In the worst case, the matrix Z becomes the zero matrix, and an obtained matrix A is useless. In addition, a test matrix with the specified singular values can be generated using Y = XT.

Note that we can generate a real matrix with complex eigenvalues. Let i be the imaginary unit. The basic of linear algebra derives that a ± bi are eigenvalues of the following skew-symmetric matrix:

where

For a discussion of complex eigenvalues, we set 2-by-2 matrices to the diagonal block of D. For example, if \(a \pm b i, \ c \pm d i, e \ (a,b,c,d,e \in \mathbb {F})\) are expected eigenvalues, then we set a skew-symmetric matrix as

If one wants to obtain a matrix with exactly known eigenvectors, then we obtain the matrix as follows.

Note that

in some cases due to a problem of rounding error. Then, \(S^{\prime }\) is not a skew-symmetric matrix. To solve the problem, we compute only

and set \(s_{ji}^{\prime }=-s_{ij}^{\prime }\) by copy of the computed result. For

Z is not a skew-symmetric matrix, while S is a skew-symmetric matrix.

The condition \(X, Y \in \mathbb {F}^{n \times n} \ (Y = X^{-1})\) is very special. Therefore, we use specified matrices for X in (1) from the following sections.

4.1 Using weighing matrix

Assume that \(D \in \mathbb {F}^{n \times n}\) is a diagonal matrix (\(n^{\prime }=1\)). First, we use a weighing matrix. Let I be the identity matrix. If \(W \in \mathbb {R}^{n \times n}\) with weight \(c \in \mathbb {N}\) is a weighing matrix, WTW = cI and wij ∈{− 1,0,1} for all (i,j) pairs. The weighing matrix is useful for the candidate of the matrix X, because β = γ = 𝜃 = ω = 1 in (11) and (12), and \(\displaystyle Y=X^{-1}=\frac {1}{c}W^{T}\).

Let \(W_{n,c} \in \mathbb {F}^{n \times n}\) be a weighing matrix such that \(W^{T}_{n,c} W_{n,c} = c I\). The notation ⊗ means the Kronecker product. For \(\displaystyle n=\prod \limits _{i=1}^{w} n_{i}\) and \(\displaystyle c = \prod \limits _{i=1}^{w} c_{i}\), \(n_{i}, \ c_{i} \in \mathbb {N}\), a weighing matrix Wn,c can be obtained by \(W_{n_{1},c_{1}} \otimes W_{n_{2},c_{2}} \otimes {\dots } \otimes W_{n_{w},c_{w}}\).

If we set \(Y = \displaystyle \frac {1}{c} W_{n,c}^{T} = \frac {1}{c_{1}} W_{n_{1},c_{1}}^{T} \otimes {\dots } \otimes \frac {1}{c_{w}} W_{n_{w},c_{w}}^{T}\), then the matrix Y may not be representable in floating-point numbers. It happens when c is not a power of two. For a given diagonal matrix D, we obtain σ from \(\displaystyle \mathtt {fl} \left (\frac {1}{c}D \right )\) instead of D and compute

Then, we can compute \(\displaystyle W_{n,c}^{T} (D^{\prime } W_{n,c})=:A\) without rounding errors. Hence, \(c d_{ii}^{\prime }\) is the exact eigenvalue of the matrix A. Note that \(c d_{ii}^{\prime }\) may not be representable in the floating-point numbers. An error-free transformation [3] produces floating-point vectors p and q such that

It is well known that we quickly obtain p and q using fused multiply-add (FMA instruction defined in IEEE 754). The function (a,b,c) for \(a,b,c \in \mathbb {F}\) provides a nearest floating-point number of ab + c. Then,

Hadamard matrix, the special case of weighing matrix (c = n), is very useful for the candidate of X.

The following codes generate a test matrix on MATLAB. Let \(d \in \mathbb {F}^{n}\) be a vector with expected eigenvalues. The exact eigenvalue of \(A \in \mathbb {F}^{n \times n}\) is pi + qi ≈ di. Note that the function hadamard on MATLAB R2020a in the following algorithm works when n, n/12 or n/20 is a power of two.Footnote 3

Note that Algorithm 2 produces a real symmetric matrix. For \(n \in \mathbb {F}\) and \(y \in \mathbb {F}^{n}\), the function at line 12 transforms ny to \(p+q, \ p,q \in \mathbb {F}^{n}\). If n is a power of two, q at line 12 is the zero vector.

At line 11, a matrix multiplication is computed. In the special cases, we can reduce the cost of the matrix multiplication. Let Hn be a Hadamard matrix with dimension n. We can generate one of 2n-by-2n Hadamard matrices by the following manner [10]

For a diagonal matrix \(D \in \mathbb {F}^{2n \times 2n}\), there exist matrices \(M, N \in \mathbb {R}^{n \times n}\) such that

where D1 and D2 are n-by-n diagonal matrices. Then, the matrices M and N can quickly be obtained, because D1 and D2 are diagonal matrices. Finally, we compute \(H_{2n}^{T} D H_{2n}\) by

Therefore, we only compute \({H_{n}^{T}} M = {H_{n}^{T}} D_{1} {H_{n}^{T}}\) and \({H_{n}^{T}} N = {H_{n}^{T}} D_{2} {H_{n}^{T}}\) as matrix multiplications to obtain \(H^{T}_{2n} D H_{2n}\). The cost of matrix multiplications can be reduced using this technique recursively.

Let r ⋅ n be a matrix size for the output, where \(r, n \in \mathbb {N}\) and r is a power of two.

where \(D_{i} \in \mathbb {F}^{n \times n}\), 1 ≤ i ≤ r are diagonal matrices, and O is the zero matrix.

Then, we only compute the following r matrix multiplications:

and Cij is obtained by

The total cost becomes \(2 k n^{3} + \mathcal {O}(n^{2}+k)\) floating-point operations for r-by-r block division. Note that the original cost without block computations is \(2 (k n)^{3} + \mathcal {O}((kn)^{2})\), that is much expensive compared to block computations for large k.

We introduce an algorithm producing a matrix with eigenvalues based on the block computations.

We show numerical examples for the performance using this technique. Figures 1 and 2 show computing times with r-by-r block computations. r = 1 means a computing time for Algorithm 2. Computing times for solving linear systems () and eigenvalue problems () using MATLAB are shown for comparison. Note that in this example produces only approximate eigenvalues such that d = (A). The figures indicate that computing time for the generation of test matrices is much shorter than that for solving linear systems and that for eigenvalue problems.

Remark 1

We mentioned that MATLAB’s hadamard function works in the case that n, n/12, or n/20 is the power of two. Even if n does not satisfy that restriction, the test matrices can be generated. Assume that Hk exists and k < n. Let \(P \in \mathbb {R}^{(n-k) \times (n-k)}\) be a permutation matrix. We generate

instead of the Hadamard matrix. Then, \(D^{\prime }\) is obtained by

The exact eigenvalue is \(k d_{ii}^{\prime }\) for 1 ≤ i ≤ k and \(d^{\prime }_{ii}\) for k + 1 ≤ i ≤ n.

We checked the accuracy of approximate eigenvalues obtained by . The vector \(d \in \mathbb {F}^{n}, \ n=4096\) in Algorithm 2 is set as follows.

- Ex.1::

-

d1 = 1, dn = 1010, intermediate values di, 2 ≤ i ≤ n − 1, are geometrically distributed.

- Ex.2::

-

di = 1, dn = 1010, 1 ≤ i ≤ n − 1.

We compare accuracy of eigenvalues by d = (A) and [V,D] = (A) in MATLAB. Let \(\hat \lambda _{1} \le \hat \lambda _{2} \le {\dots } \le \hat \lambda _{n}\) be computed eigenvalues. Figures 3 and 4 show relative errors

for all i, where p and q are obtained by Algorithm 2. From Fig. 3 for Ex.1, the accuracy of relatively small eigenvalue tends to be worse. Besides, approximate eigenvalues from [V,D] = (A) are better than those from d = (A) in terms of accuracy. In the case of Ex.2, we can see from Fig. 4 that some of the eigenvalues are not so accurate, and several computed eigenvalues are exact.

The quantity (31) for Ex.1

The quantity (31) for Ex.2

Using the Hadamard matrix, we introduce an algorithm generating a test matrix based on the Jordan normal form (\(n^{\prime }=2\)).

We show numerical examples using Algorithm 4. The vectors \(v \in \mathbb {F}^{n}\) and \(w \in \mathbb {F}^{n-1}\) for n = 4096 as arguments of Algorithm 4 are set as follows:

- Ex.3::

-

\(v_{1}=1, \ v_{i} = 10^{5}, \ 2 \le i \le n, \ w=(1,1,\dots ,1)^{T}\).

- Ex.4::

-

\(v_{i}=1, \ 1 \le i \le n-1, \ v_{n} = 10^{5}, \ w=(1,1,\dots ,1)^{T}\).

Then, we execute [A,p,q] = _(v,w). Figures 5 and 6 show the quantity (31) for all i, respectively. Approximate eigenvalues for these numerical examples have small imaginary parts so that we checked the accuracy of the real part of the computed eigenvalues. In both cases, the matrices have n − 1 multiple eigenvalues, but there are differences in the numerical examples. From Fig. 6, computed eigenvalues for n − 1 small eigenvalues are very inaccurate.

The quantity (31) for Ex.3

The quantity (31) for Ex.4

4.2 Eigenvector matrix X using random integer matrices

We use the LU decomposition and generate integer matricesFootnote 4 for X. Let L and U be a unit lower triangular matrix and a unit upper triangular matrix, respectively. We generate X = LU, where we set L and U as 0-1 matrices in order to obtain small constants β, γ, 𝜃, and ω. Then, Y = X− 1 is also an integer matrix. Because L and U are 0-1 matrices, there is no rounding error in (LU) up to n = − 1. On the other hand, the problem is that the exact Y = X− 1 can be obtained or not. Let \(\tilde Y\) be a numerically obtained result for X− 1. If \(\textbf {O} = \mathtt {fl}_{\bigtriangledown } (\tilde Y X - I) = \mathtt {fl}_{\bigtriangleup } (\tilde Y X - I)\), where I is the identity matrix, then \(\tilde Y = X^{-1}\). Using MATLAB notation, L and U are given as

where 0 ≤ k ≤ 1 is density of a matrix. The sparsity of L and U is controlled by \(k \in \mathbb {N}\).

The following algorithm generates A using random integer matrices.

We introduce numerical examples for Algorithm 5. We set n = 1,000,5,000, and \(d \in \mathbb {F}^{n}\) is generated by (n,1) using MATLAB R2020a. Table 1 shows

-

The product of four constants βγ𝜃ω

-

The 2-norm condition number of X, i.e., ∥X− 1∥2∥X∥2 = ∥Y ∥2∥X∥2

-

The relative change between di and ri, i.e., \(\displaystyle \max \limits _{i} \frac {|d_{i}-r_{i}|}{|d_{i}|}\)

-

The density of the matrix A, i.e., the number of non-zero elements of A divided by n2

for each k. The numbers in Tables 1 and 2 are averages of 10 examples. If k is small, we can obtain small βγ𝜃ω. It makes the relative change for v small. If k becomes large, then

-

The relative change for a small absolute value,

-

The condition number of X, and

-

Density of A

also become large.

5 Conclusion

We proposed methods generating matrices with specified eigenvalues based on the error-free transformation of floating-point numbers. They are useful for checking the accuracy and stability of numerical algorithms. Finally, we provided several possibilities for an eigenvector matrix and showed how to generate a real matrix with complex eigenvalues. The discussion can be extended to the generation of test matrices with complex eigenvalues and eigenvectors.

Notes

Thanks to Dr. Atsushi Minamihata for the discussion.

Reproducibility means ability to obtain always bit-wise identical results on the same input data on the same or different architectures [1].

This is not only for MATLAB R2020a, but also for more early versions.

We appreciate Prof. Siegfried M. Rump’s advice for this discussion.

References

Ahrens, P., Nguyen, H.D., Demmel, J.: Efficient Reproducible Floating Point Summation and BLAS, EECS Department, UC Berkeley, Technical Report No. UCB/EECS2016-121 Berkeley (2016)

Bauer, F.L., Fike, C.T.: Norms and exclusion theorems. Numer. Math. 2, 137–141 (1960)

Dekker, T.J.: A floating-point technique for extending the available precision. Numer. Math. 18, 224–242 (1971)

Higham, N.J.: Accuracy and Stability of Numerical Algorithms, 2nd edn. SIAM, Philadelphia (2002)

IEEE Standard for Floating-Point Arithmetic: Std 754-2019 (2019)

Jeannerod, C. -P., Rump, S.M.: Improved error bounds for inner products in floating-point arithmetic. SIAM J. Matrix Anal. & Appl. (SIMAX) 34, 338–344 (2013)

Rump, S.M.: Error estimation of floating-point summation and dot product. BIT Numer. Math. 52, 201–220 (2012)

Rump, S.M., Ogita, T., Oishi, S.: Accurate floating-point summation part I: faithful rounding. SIAM J. Sci. Comput. 31, 189–224 (2008)

Strassen, V.: Gaussian elimination is not optimal. Numer. Math. 13, 354–356 (1969)

Sylvester, J.J.: Thoughts on inverse orthogonal matrices, simultaneous sign successions, and tessellated pavements in two or more colours, with applications to Newton’s rule, ornamental tile-work, and the theory of numbers. Philos. Mag. 34, 461–475 (1867)

Winograd, S.: On multiplication of 2x2 matrices. Linear Algebra Its Appl. 4, 381–388 (1971)

Acknowledgements

This research is partially supported by JST / CREST and JSPS Kakenhi (20H04195). We sincerely express our thanks to the reviewer for the fruitful comments and Dr. Takeshi Terao for his kind advice of simplified codes of MATLAB.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ozaki, K., Ogita, T. Generation of test matrices with specified eigenvalues using floating-point arithmetic. Numer Algor 90, 241–262 (2022). https://doi.org/10.1007/s11075-021-01186-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-021-01186-7