Abstract

The diversity of tree species and the complexity of land use in cities make tree species classification challenging. The combination of deep learning methods and RGB optical images obtained by unmanned aerial vehicles (UAVs) provides a new research direction for urban tree species classification. We propose an RGB optical image dataset of 10 urban tree species, termed TCC10, as a benchmark for tree canopy classification (TCC). The TCC10 dataset contains two types of data: tree canopy images with simple backgrounds and those with complex backgrounds. The objective was to examine the feasibility of using deep learning methods (AlexNet, VGG-16, and ResNet-50) for individual tree species classification. The results of the convolutional neural networks (CNNs) were compared with those of K-nearest neighbor (KNN) and a back-propagation (BP) neural network. Our results demonstrated that: (1) ResNet-50 achieved an overall accuracy (OA) of 92.6% and a kappa coefficient of 0.91 for tree species classification on TCC10 and outperformed AlexNet and VGG-16; (2) the classification accuracy of KNN and the BP neural network was less than 70%, while the accuracy of the CNNs was higher; and (3) the classification accuracy for tree canopy images with complex backgrounds was lower than that for images with simple backgrounds. For the deciduous tree species in TCC10, the classification accuracy of ResNet-50 was higher in summer than in autumn. Therefore, deep learning is effective for urban tree species classification using RGB optical images.

Introduction

In urban ecosystems, trees provide several ecological services such as climate regulation, air quality improvement and water conservation (Endreny 2018; Fassnacht et al. 2016), as well as some social benefits such as increasing residential property values and accelerating urbanization (Ren et al. 2018). The ability to regulate temperature and relative humidity is closely related to tree species, so the accurate classification of tree species is essential for sustainable urban development and management (Mozgeris et al. 2018; Ren et al. 2017). As an important technology, remote sensing has been widely used in urban forest surveys (Moskal et al. 2011; Dalponte et al. 2013; Liu and Wu 2018; Pu et al. 2018). Satellite imagery can cover a large area of urban forests, but data collection is easily affected by clouds and fog. As a platform for acquiring high-resolution images, unmanned aerial vehicle (UAV)-based remote sensing has been applied to many fields including urban tree species classification due to its low cost, fast data acquisition, and flexible operation (Swain et al. 2010; Puliti et al. 2015; Lin et al. 2015; Huang et al. 2018; Liu et al. 2018).

Different tree species have different spectral and textural characteristics. At present, some scholars use hyperspectral and LiDAR data to classify tree species (Koch et al. 2006; Alonzo et al. 2014; Dalponte et al. 2014; Dian et al. 2016). Maschler et al. (2018) used hyperspectral data to achieve the automatic classification of 13 tree species, and their results indicated that highly accurate tree species classifications can be obtained from hyperspectral data covering the visible and near-infrared (NIR) spectral regions. Liu et al. (2017) highlighted that the variables derived from LiDAR data contributed more to the accurate prediction of species than hyperspectral features. However, the process of collecting hyperspectral data is complicated, and the spectral ranges of different tree species may overlap, which reduces the accuracy of tree species classification. Weather conditions such as rain, snow and fog attenuate the laser beams during the acquisition of LiDAR data. Compared with hyperspectral and LiDAR data, RGB optical images are more convenient to collect. Therefore, whether RGB optical images obtained by a UAV can serve as a data source for tree species classification is a valuable research direction.

The development of appropriate algorithms plays a key role in tree species classification. Compared with traditional classification algorithms, machine learning has proved to be more effective in tree species classification (Jones et al. 2010; Dalponte et al. 2012). Nevalainen et al. (2017) used random forest (RF) and multilayer perceptron (MLP) to classify individual trees based on 3D hyperspectral remote sensing. Dalponte et al. (2015) proposed that a support vector machine (SVM) classifier is suitable for individual tree crown (ITC) level classification. In recent years, deep learning, especially convolutional neural networks (CNNs), has gained popularity in image classification due to its ability to characterize complex patterns within imagery datasets (Krizhevsky et al. 2012; Simonyan and Zisserman 2015; Szegedy et al. 2015; He et al. 2016). A few scholars have applied CNNs to urban tree species classification. Hartling et al. (2019) compared DenseNet with SVM and RF for different combinations of VNIR, SWIR and LiDAR data, and the results demonstrated that DenseNet has the highest classification accuracy for urban tree species. Xie et al. (2018) discriminated urban trees using the canopy height model (CHM) based on a CNN. Although deep learning has achieved great success in image classification, that success depends on having large datasets to train CNNs. To our knowledge, there is no large publicly available dataset for urban tree species classification except for PlantCLEF (Atito et al. 2017), which includes more than 10,000 plant categories but focuses on leaves rather than tree canopies.

Deep learning is well known for its excellent generalization ability for unseen data. However, there have been few studies on tree species classification using deep learning and RGB optical images so far due to the lack of relevant datasets. Deep learning cases based on RGB images have achieved great success in the past few years, and these studies have greatly motivated us to further study the potential of deep learning methods for solving tree species classification problems.

The objectives of this study are threefold: (1) to propose a dataset with images of the canopies of 10 urban tree species, termed Tree Canopy Classification 10 (TCC10), and to show that RGB optical images obtained by a UAV are an effective data source for the classification of urban tree species; (2) to analyze the classification accuracy of AlexNet, VGG-16 and ResNet-50 on the TCC10 dataset and compare the CNNs with K-nearest neighbor (KNN) and a BP neural network; and (3) to study the impacts of background complexity and deciduous tree species on the classification accuracy of CNNs.

Materials and methods

Study area

The study area is located in Lin’an, Zhejiang Province, in eastern China (29°56′ N to 30°23′ N, 118°51′ E to 119°52′ E) (Fig. 1). The area has a subtropical monsoon climate with an average annual rainfall of 1613 mm and an average annual temperature of 16 °C, and the terrain is dominated by hills. Lin’an is rich in natural resources and is known as a “National Garden City”. In this study, Jinbei Street, Linglong Street, Taihuyuan Street and Zhejiang Agriculture and Forestry University in Lin’an were selected as the data collection areas.

Dataset

Most of the tree species in the study area are deciduous broad-leaved trees, such as Ginkgo biloba, Sapindus mukorossi, Celtis sinensis, Prunus cerasifera and Platanus acerifolia, and evergreen broad-leaved trees, such as Cinnamomum camphora, Magnolia grandiflora, Osmanthus fragrans and Michelia chapensis. In addition, a representative deciduous coniferous tree species in this area, Metasequoia glyptostroboides, was selected as the research object. We obtained the canopies from a total of 10 tree species to construct the proposed TCC10 dataset.

This study utilized an Inspire 2 rotor-wing UAV system (T650A, DJI, China) with a Zenmuse X5S camera onboard. The images were obtained in the summer and autumn of 2018 in an environment with mild weather and low wind speed. Because of the height of buildings around the data collection area, the flying height of the UAV was 40–60 m.

The complexity of the image background is an important factor in image classification. The TCC10 dataset contains two types of data: tree canopy images with simple backgrounds and tree canopy images with complex backgrounds. In addition, each tree species in the TCC10 dataset was collected from different study areas, so the backgrounds and lighting vary widely. For the deciduous tree species, tree canopy images from different seasons were collected. Figures 2 and 3 show two examples of 10 tree species in TCC10. TCC10 has a total of 19,302 tree canopy images, and the number of images in each category is shown in Table 1.

Methods

Classification methods

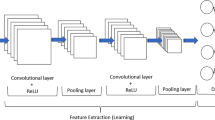

In the early years of deep learning, CNNs were difficult to apply to practical tasks due to hardware limitations. The emergence of high-performance GPUs has allowed CNNs to perform well in image classification. Three CNN methods were applied to the TCC10 dataset, namely, (1) AlexNet, (2) VGG-16, and (3) ResNet-50.

AlexNet is an 8-layer neural network with 5 convolutional layers and 3 fully connected layers, comprising about 630 million connections, 60 million parameters and 650,000 neurons. VGGNet replaces the large convolution kernels used in AlexNet (11 × 11 and 5 × 5) with stacks of small 3 × 3 convolution kernels interleaved with 2 × 2 max-pooling layers. With fewer parameters for the same receptive field, VGGNet can extract finer features, and the additional nonlinearities between the stacked layers improve its ability to learn nonlinear feature combinations. In addition, VGGNet increases the depth of the network. ResNet introduces deep residual learning, which mitigates the vanishing gradient problem and makes very deep neural networks easier to train.
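The parameter saving from stacking 3 × 3 kernels can be checked with simple arithmetic. The following is a minimal sketch, not taken from the study: the helper names and the channel width of 64 are illustrative assumptions, biases are ignored, and every layer is assumed to keep the number of channels unchanged with stride 1.

```python
def conv_weights(kernel_size: int, channels: int, layers: int = 1) -> int:
    """Weights (biases ignored) in `layers` stacked k x k convolutions,
    each mapping `channels` input channels to `channels` output channels."""
    return layers * kernel_size * kernel_size * channels * channels


def receptive_field(kernel_size: int, layers: int) -> int:
    """Receptive field of `layers` stacked stride-1 k x k convolutions."""
    return 1 + layers * (kernel_size - 1)


C = 64  # hypothetical channel width, chosen only for illustration

# Two 3 x 3 layers cover the same 5 x 5 window as one 5 x 5 layer.
assert receptive_field(3, 2) == receptive_field(5, 1) == 5
# Three 3 x 3 layers cover the same 7 x 7 window as one 7 x 7 layer.
assert receptive_field(3, 3) == receptive_field(7, 1) == 7

# Weight counts: 18*C^2 vs 25*C^2, and 27*C^2 vs 49*C^2.
print(conv_weights(3, C, layers=2), "vs", conv_weights(5, C))
print(conv_weights(3, C, layers=3), "vs", conv_weights(7, C))
```

Under these assumptions, the stacked 3 × 3 layers cover the same window with roughly 28% and 45% fewer weights, respectively, while adding extra nonlinearities between the layers.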

Data augmentation

Data augmentation techniques such as rotation and brightness adjustment are used in this study (Fig. 4), and two experiments using the TCC10 dataset are designed. The first experiment is tree species classification using tree canopy images with simple backgrounds. The number of images is 29,508 after data augmentation, and the images are randomly divided into a training dataset (80%) and a validation dataset (20%). The second experiment is tree species classification using tree canopy images with complex backgrounds. After the same data augmentation techniques are applied, the number of images is 28,398. To ensure the comparability of the two experiments, these images are also randomly divided into a training dataset (80%) and a validation dataset (20%).
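The augmentation and splitting steps can be sketched as follows. This is an illustrative sketch only: the rotation angle (90°), the brightness factors (1.2 and 0.8), the random seed and all function names are assumptions, since the exact settings are not stated here, and a tiny single-channel image stands in for an RGB canopy image.

```python
import random


def rotate90(image):
    """Rotate a 2D image (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def adjust_brightness(image, factor):
    """Scale pixel values by `factor` and clip to the 8-bit range [0, 255]."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def augment(image):
    """Return the original image plus rotated and brightness-adjusted copies."""
    return [
        image,
        rotate90(image),
        adjust_brightness(image, 1.2),  # assumed brightening factor
        adjust_brightness(image, 0.8),  # assumed darkening factor
    ]


def train_val_split(items, train_fraction=0.8, seed=0):
    """Shuffle `items` reproducibly and split them into training and validation lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


tiny = [[10, 20], [30, 250]]  # 2 x 2 stand-in for a canopy image
samples = augment(tiny)

# Image indices for the first experiment (29,508 images after augmentation).
train, val = train_val_split(range(29508))
print(len(samples), len(train), len(val))
```

Fixing the seed keeps the 80/20 split reproducible, so that different networks can be compared on the same partition.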

Evaluation indicators

To verify the validity of the TCC10 dataset, we produced a separate test dataset for the two types of data, which contained a total of 2676 images. Each tree canopy image in the test dataset was different. To avoid the similarity of trees in the same area, some tree species were collected from different study areas. In image classification, multiple indicators are usually used to evaluate the accuracy of an algorithm. We calculated the overall accuracy (OA) and kappa coefficient for the images in the test dataset. OA is calculated using Eq. (1):

$$\mathrm{OA} = \frac{\sum_{i=1}^{n} N_i}{N} \qquad (1)$$

where $N_i$ represents the number of correctly classified images in category $i$, $n$ represents the number of categories, and $N$ represents the total number of images in the test dataset.

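The basic functional form of the kappa coefficient is given by Eq. (2):

$$\mathrm{Kappa} = \frac{\mathrm{OA} - p_e}{1 - p_e}, \qquad p_e = \frac{\sum_{i=1}^{n} A_i B_i}{N^2} \qquad (2)$$

where $A_i$ represents the number of true samples of class $i$, $B_i$ represents the number of samples predicted as class $i$, $n$ represents the number of classes, and $N$ represents the total number of images in the test dataset.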
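Both indicators can be computed from a confusion matrix. The sketch below assumes the standard Cohen's kappa formulation, in which the expected agreement is obtained from the per-class true counts (A) and predicted counts (B); the function names and the 3-class matrix are made up for illustration and are not results from TCC10.

```python
def overall_accuracy(cm):
    """OA = correctly classified samples (diagonal) / all samples."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def kappa_coefficient(cm):
    """Cohen's kappa from a square confusion matrix (rows: true, columns: predicted)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    true_counts = [sum(cm[i]) for i in range(n)]                       # A per class
    pred_counts = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # B per class
    p_o = overall_accuracy(cm)                                         # observed agreement
    p_e = sum(a * b for a, b in zip(true_counts, pred_counts)) / total ** 2
    return (p_o - p_e) / (1 - p_e)


# Hypothetical 3-class confusion matrix (illustrative counts only).
cm = [
    [45, 5, 0],
    [4, 40, 6],
    [1, 3, 46],
]
print(f"OA = {overall_accuracy(cm):.3f}, kappa = {kappa_coefficient(cm):.3f}")
```

Kappa is lower than OA because it discounts the agreement that would be expected by chance given the class totals.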

Results and discussion

Classification results of TCC10 dataset

To compare the AlexNet, VGG-16, and ResNet-50 image classification methods on the TCC10 dataset, the classification experiments for tree canopy images with simple and complex backgrounds were conducted separately. For tree canopy images with simple backgrounds, the Adam optimizer was used to train the CNNs. The training processes of AlexNet, VGG-16, and ResNet-50 are shown in Fig. 5. The blue curves represent the accuracy and loss value changes of the training dataset, and the orange curves represent the accuracy and loss value changes of the test dataset. The accuracy of AlexNet tends to be stable after 20,000 steps in Fig. 5a, and the loss value shows the same tendency in Fig. 5b. The training accuracy and loss value of VGG-16 also remain stable after 20,000 steps in Fig. 5c and d, and ResNet-50 has the slowest convergence rate in Fig. 5e and f.

Similar to the first experiment, CNNs were trained using tree canopy images with complex backgrounds (Fig. 6). Convergence in this experiment is slower than in the first experiment. The training accuracy of AlexNet and VGG-16 tends to be stable after 30,000 steps in Fig. 6a and c, and the loss values also stabilize after 30,000 steps in Fig. 6b and d. The training accuracy of ResNet-50 tends to be stable after 65,000 steps in Fig. 6e and f.
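For readers unfamiliar with the Adam optimizer used in training, a single update can be sketched as follows. This is a generic illustration on a toy one-parameter loss, not the training code of this study; the hyperparameter defaults shown (learning rate 0.001, β1 = 0.9, β2 = 0.999, ε = 1e-8) are the values commonly used with Adam and are assumptions here.

```python
import math


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; returns (param, m, v)."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction for early steps
    v_hat = v / (1 - beta2 ** t)
    param -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Minimize the toy loss f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 5001):
    w, m, v = adam_step(w, 2 * (w - 3), m, v, t, lr=0.01)
print(round(w, 3))  # should end up close to the minimum at w = 3
```

Because each step is scaled by the running gradient statistics, the step size is roughly bounded by the learning rate, which is one reason the number of steps needed for convergence can differ markedly between networks.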

This study used OA and the kappa coefficient to evaluate the performance of AlexNet, VGG-16 and ResNet-50 on TCC10. Table 2 shows the experimental parameters, OAs and kappa coefficients. ResNet-50 performed best, with an OA of 92.6% and a kappa coefficient of 0.91. The OAs and kappa coefficients of the CNNs on images with simple backgrounds were higher than those on images with complex backgrounds, which indicates that background complexity can reduce the classification accuracy.

Classification accuracy of each tree species in TCC10 dataset

Figure 7 shows the confusion matrices for TCC10. Overall, ResNet-50 obtained the highest classification accuracy for most of the tree species. For tree canopy images with simple backgrounds, the OAs of ResNet-50 for Celtis sinensis, Cinnamomum camphora, Ginkgo biloba, Magnolia grandiflora, Michelia chapensis, Osmanthus fragrans and Platanus acerifolia were higher than the OAs of the other models, and the OAs of 6 tree species were above 90%. For Metasequoia glyptostroboides and Prunus cerasifera, VGG-16 showed the best results. For tree canopy images with complex backgrounds, the OAs of ResNet-50 for C. camphora, Ginkgo biloba, M. chapensis, O. fragrans and Sapindus mukorossi were higher than the OAs of the other models, and the other tree species performed better with VGG-16. The results demonstrated that the deep network and the residual structure in ResNet-50 had advantages for tree species classification on TCC10.

Fig. 7 Confusion matrices for TCC10: (a) AlexNet, simple backgrounds; (b) AlexNet, complex backgrounds; (c) VGG-16, simple backgrounds; (d) VGG-16, complex backgrounds; (e) ResNet-50, simple backgrounds; and (f) ResNet-50, complex backgrounds
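Per-species accuracies of the kind discussed for the confusion matrices can be read directly from each matrix. The sketch below assumes rows hold the true species and columns the predicted species; the function names, labels and counts are hypothetical and are not values from the TCC10 experiments.

```python
def per_class_accuracy(cm, labels):
    """Fraction of each true class (row) that was predicted correctly."""
    return {label: cm[i][i] / sum(cm[i]) for i, label in enumerate(labels)}


def most_confused(cm, labels):
    """Return (true label, predicted label, count) for the largest off-diagonal entry."""
    best = None
    for i, row in enumerate(cm):
        for j, count in enumerate(row):
            if i != j and (best is None or count > best[2]):
                best = (labels[i], labels[j], count)
    return best


labels = ["species_a", "species_b", "species_c"]  # placeholder class names
cm = [
    [18, 2, 0],
    [1, 17, 2],
    [0, 4, 16],
]
print(per_class_accuracy(cm, labels))
print(most_confused(cm, labels))
```

Inspecting the largest off-diagonal entries is a quick way to see which pairs of species a model confuses most often.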

Comparison of multiple image classification methods

The classification accuracy of CNNs was compared with that of two image classification algorithms: KNN and BP neural network. Both KNN and BP neural network used histogram of oriented gradient (HOG) to describe the textural features of an image. For the comparison with CNN methods, 80% of the images were selected as the training dataset, and 20% were used as the test dataset. Figure 8 demonstrates that the accuracy of KNN and BP neural networks is less than 70%, and CNNs perform better in tree canopy classification. There are two reasons that could account for the tree species classification results: (1) The convolutional structure in a CNN enhances the signal characteristics of the image, and the CNN's inter-layer connections allow it to achieve better image classification performance. (2) Weight sharing makes the network more adaptable.
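To make the KNN baseline concrete, the sketch below classifies a feature vector by majority vote among its nearest neighbours. It is a simplified illustration: the short vectors stand in for HOG descriptors, and the value k = 3, the Euclidean distance, the function name and the labels are all assumptions rather than the settings used in the study.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, query, k=3):
    """Label `query` by majority vote among its k nearest training vectors."""
    distances = sorted(
        (math.dist(features, query), label)
        for features, label in zip(train_features, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Toy 3-dimensional vectors standing in for HOG descriptors of canopy images.
train_features = [
    [0.9, 0.1, 0.0],
    [0.8, 0.2, 0.1],
    [0.1, 0.9, 0.2],
    [0.2, 0.8, 0.1],
    [0.0, 0.7, 0.3],
]
train_labels = ["species_a", "species_a", "species_b", "species_b", "species_b"]

print(knn_predict(train_features, train_labels, [0.85, 0.15, 0.05]))
print(knn_predict(train_features, train_labels, [0.10, 0.80, 0.20]))
```

Unlike a CNN, this classifier learns no features of its own, so its accuracy depends entirely on how well the hand-crafted descriptor separates the species.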

Deciduous tree species classification in the summer and autumn

The most obvious feature of deciduous trees is that their leaves change color in the fall. To explore the applicability of deep learning to the classification of deciduous tree species, ResNet-50 was applied to the tree canopy images with simple backgrounds (Table 3). The test dataset contains images of deciduous trees in summer and autumn. In summer, the classification accuracy of five tree species was above 90%. Prunus cerasifera obtained the highest classification accuracy (100%), and Platanus acerifolia obtained the lowest (89.5%). Overall, the classification accuracy of the six deciduous tree species was higher in summer than in autumn. A possible reason is that fewer leaves remain in autumn, so the characteristics of the tree canopies are less distinct. The weakening of features such as shape, color, and texture reduces the classification accuracy of CNNs. In autumn, the accuracy was highest for Ginkgo biloba, because it has golden leaves and its canopy shape remains complete. However, C. sinensis and S. mukorossi have similar leaf shapes, and their characteristics are weakened in autumn, which leads to lower classification accuracy.

Conclusion

In this study, a UAV was used to obtain RGB optical images of urban tree canopies, and a tree canopy image dataset with 10 species was proposed. We evaluated the OAs and kappa coefficients of three representative CNNs (AlexNet, VGG-16, and ResNet-50) on the two types of TCC10 data. Our results demonstrated that the OAs and kappa coefficients of ResNet-50 were higher than those of AlexNet and VGG-16. For the tree canopy images with simple backgrounds, ResNet-50 had the highest OA of 92.6% and a kappa coefficient of 0.91. The CNNs classified significantly better than KNN and the BP neural network. Therefore, deep learning has the potential to be used for tree species classification using RGB optical images. In addition, the OAs and kappa coefficients of the CNNs for tree canopy images with simple backgrounds were higher than those for images with complex backgrounds. For the deciduous tree species in TCC10, the classification accuracy of ResNet-50 was higher in summer than in autumn, and the accuracy for P. cerasifera reached 100%. However, to further explore its utility, deep learning should be tested on more study areas and more tree species. We will continue to expand the scale of the dataset and research new deep learning methods.

References

Alonzo M, Bookhagen B, Roberts DA (2014) Urban tree species mapping using hyperspectral and lidar data fusion. Remote Sens Environ 148:70–83

Atito S, Yanikoglu B, Aptoula E (2017) Plant identification with large number of classes: Sabanciu-gebzetu system in plantclef 2017. In: Conference and labs of the evaluation forum, Dublin, Ireland, 11–14 September

Dalponte M, Bruzzone L, Gianelle D (2012) Tree species classification in the southern alps based on the fusion of very high geometrical resolution multispectral/hyperspectral images and lidar data. Remote Sens Environ 123:258–270

Dalponte M, Orka HO, Gobakken T, Gianelle D, Næsset E (2013) Tree species classification in boreal forests with hyperspectral data. IEEE Trans Geosci Remote Sens 51:2632–2645

Dalponte M, Ørka HO, Ene LT, Gobakken T, Næsset E (2014) Tree crown delineation and tree species classification in boreal forests using hyperspectral and ALS data. Remote Sens Environ 140:306–317

Dalponte M, Ene LT, Marconcin M, Gobakken T, Næsset E (2015) Semi-supervised SVM for individual tree crown species classification. ISPRS J Photogramm Remote Sens 110:77–87

Dian Y, Pang Y, Dong Y, Li Z (2016) Urban tree species mapping using airborne LiDAR and hyperspectral data. J Indian Soc Remote Sens 44:595–603

Endreny TA (2018) Strategically growing the urban forest will improve our world. Nat Commun 9:1160

Fassnacht FE, Latifi H, Stereńczak K, Modzelewska A, Lefsky M, Waser LT, Straub C, Ghosh A (2016) Review of studies on tree species classification from remotely sensed data. Remote Sens Environ 186:64–87

Hartling S, Sagan V, Sidike P, Maimaitijiang M, Carron J (2019) Urban tree species classification using a WorldView-2/3 and LiDAR data fusion approach and deep learning. Sensors 19:1284

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: IEEE conference on computer vision and pattern recognition, Las Vegas, USA, 26 June–1 July

Huang H, Li X, Chen C (2018) Individual tree crown detection and delineation from very-high-resolution UAV images based on bias field and marker-controlled watershed segmentation algorithms. IEEE J Sel Top Appl Earth Obs Remote Sens 11:1–10

Jones TG, Coops NC, Sharma T (2010) Assessing the utility of airborne hyperspectral and LiDAR data for species distribution mapping in the coastal Pacific Northwest, Canada. Remote Sens Environ 114:2841–2852

Koch B, Heyder U, Weinacker H (2006) Detection of individual tree crowns in airborne lidar data. Photogramm Eng Remote Sens 72:357–363

Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. In: Advances in neural information processing systems, Lake Tahoe, USA, 3–6 December

Lin Y, Jiang M, Yao Y, Zhang L, Lin J (2015) Use of UAV oblique imaging for the detection of individual trees in residential environments. Urban For Urban Greening 14:404–412

Liu H, Wu C (2018) Crown-level tree species classification from AISA hyperspectral imagery using an innovative pixel-weighting approach. Int J Appl Earth Obs Geoinf 68:298–307

Liu L, Coops NC, Aven NW, Pang Y (2017) Mapping urban tree species using integrated airborne hyperspectral and LiDAR remote sensing data. Remote Sens Environ 200:170–182

Liu T, Li R, Zhong X, Jiang M, Jin X, Zhou P, Liu S, Sun C, Guo W (2018) Estimates of rice lodging using indices derived from UAV visible and thermal infrared images. Agric For Meteorol 252:144–154

Maschler J, Atzberger C, Immitzer M (2018) Individual tree crown segmentation and classification of 13 tree species using airborne hyperspectral data. Remote Sens 10:1218

Moskal LM, Styers DM, Halabisky M (2011) Monitoring urban tree cover using object-based image analysis and public domain remotely sensed data. Remote Sens 3:2243–2262

Mozgeris G, Juodkien V, Jonikavicius D, Straigyte L, Gadal S, Ouerghemmi W (2018) Ultra-light aircraft-based hyperspectral and colour-infrared imaging to identify deciduous tree species in an urban environment. Remote Sens 10:1668

Nevalainen O, Honkavaara E, Tuominen S, Viljanen N, Hakala T, Yu X, Hyyppä J, Saari H, Pölönen I, Imai N, Tommaselli AM (2017) Individual tree detection and classification with UAV-based photogrammetric point clouds and hyperspectral imaging. Remote Sens 9:185

Pu R, Landry S, Yu Q (2018) Assessing the potential of multi-seasonal high resolution Pléiades satellite imagery for mapping urban tree species. Int J Appl Earth Obs Geoinf 71:144–158

Puliti S, Ørka H, Gobakken T, Næsset E (2015) Inventory of small forest areas using an unmanned aerial system. Remote Sens 7:9632–9654

Ren Z, Pu R, Zheng H, Zhang D, He X (2017) Spatiotemporal analyses of urban vegetation structural attributes using multitemporal Landsat TM data and field measurements. Ann For Sci 74:54

Ren Z, Du Y, He X, Pu R, Zheng H, Hu H (2018) Spatiotemporal pattern of urban forest leaf area index in response to rapid urbanization and urban greening. J For Res 29(3):785–796

Simonyan K, Zisserman A (2015) Very deep convolutional networks for large-scale image recognition. In: International conference on learning representations, San Diego, USA, 7–9 May

Swain KC, Thomson SJ, Jayasuriya HPW (2010) Adoption of an unmanned helicopter for low-altitude remote sensing to estimate yield and total biomass of a rice crop. Trans ASABE 53:21–27

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. In: IEEE conference on computer vision and pattern recognition, Boston, USA, 7–12 June

Xie Y, Bao H, Shekhar S, Knight J (2018) A TIMBER framework for mining urban tree inventories using remote sensing datasets. In: IEEE international conference on data mining, Singapore, 17–20 November

Additional information

Corresponding editor: Yu Lei.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Project funding: This study was supported by Joint Fund of Natural Science Foundation of Zhejiang-Qingshanhu Science and Technology City (Grant No. LQY18C160002), National Natural Science Foundation of China (Grant No. U1809208), Zhejiang Science and Technology Key R&D Program Funded Project (Grant No. 2018C02013), Natural Science Foundation of Zhejiang Province (Grant No. LQ20F020005).

The online version is available at http://www.springerlink.com.

About this article

Cite this article

Zhang, C., Xia, K., Feng, H. et al. Tree species classification using deep learning and RGB optical images obtained by an unmanned aerial vehicle. J. For. Res. 32, 1879–1888 (2021). https://doi.org/10.1007/s11676-020-01245-0

DOI: https://doi.org/10.1007/s11676-020-01245-0