Deep Learning Methods for 3D Human Pose Estimation under Different Supervision Paradigms: A Survey

Abstract

1. Introduction

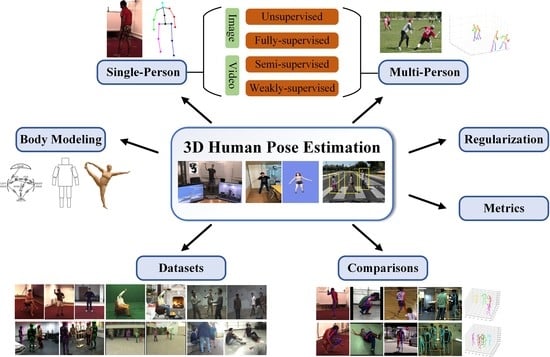

- This paper presents a comprehensive survey of 3D human pose estimation based on deep learning, covering the literature from 2014 to 2021. The estimation methods are reviewed hierarchically according to application target, data source, and supervision mode.

- Supervision mode is a crucial criterion for a taxonomy of deep learning methods. Different supervision modes rest on entirely different philosophies and suit different scenarios. As far as we know, this survey is the first to classify 3D human pose estimation methods by supervision mode in order to show their characteristics, similarities, and differences.

- Eight datasets and three evaluation metrics that are widely used for 3D human pose estimation are introduced. Both quantitative results and qualitative analysis of the state-of-the-art methods covered in this review are presented on these datasets and metrics.

- Research on human body modeling and regularization, which serve as the preprocessing and subsequent refinement phases, respectively, is discussed along with the pipeline of 3D human pose estimation.

2. Human Body Models

3. Preliminary Issues

3.1. The Standard Methodologies of 3D Pose Estimation

- Single-person 3D pose estimation falls into two categories: two-stage and one-stage methods, as shown in Figure 3a. Two-stage methods involve two steps: first, a 2D keypoint detection model produces 2D joint locations; then, a deep learning model lifts the 2D keypoints to 3D keypoints. This kind of approach mainly suffers from inherent depth ambiguity in the second step, which is the key problem that many works aim to resolve. One-stage methods regress 3D joint locations directly from a still image. These methods require a large amount of training data with 3D annotations, but manual annotation is costly and demanding.

- Multi-person 3D pose estimation is divided into two categories: top-down and bottom-up methods, as demonstrated in Figure 3b. Top-down methods first detect the human candidates and then apply single-person pose estimation to each of them. Bottom-up methods first detect all keypoints and then group them into different people. Each approach has its advantages: top-down methods tend to be more accurate, while bottom-up methods tend to be faster [38].
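The depth ambiguity noted for the lifting step of two-stage methods can be made concrete with a small numerical check. The sketch below is only an illustration under stated assumptions: a pinhole camera with a hypothetical focal length, and a toy three-joint "skeleton" whose coordinates are invented for the example. It shows two clearly different 3D poses that project to identical 2D keypoints.

```python
import numpy as np

def project(points_3d, focal=1000.0):
    """Pinhole projection of (J, 3) camera-space points to (J, 2) image points.

    The focal length is a hypothetical value chosen for illustration.
    """
    return focal * points_3d[:, :2] / points_3d[:, 2:3]

# Toy 3-joint skeleton in camera coordinates (metres); values are made up.
pose_a = np.array([[0.0,  0.0, 4.0],
                   [0.2, -0.5, 4.0],
                   [0.4, -0.9, 4.2]])

# Slide each joint along its own camera ray by a different factor.
# Scaling (x, y, z) by s leaves x/z and y/z, and hence the projection, unchanged.
scales = np.array([[1.0], [1.25], [0.9]])
pose_b = pose_a * scales

same_2d = np.allclose(project(pose_a), project(pose_b))
max_3d_gap = np.linalg.norm(pose_a - pose_b, axis=1).max()

print("identical 2D keypoints:", same_2d)
print("largest 3D joint displacement (m):", round(float(max_3d_gap), 3))
```

Because every joint can move along its viewing ray without changing the 2D observation, a lifting network has to rely on learned priors (bone lengths, plausible poses, temporal context) to choose among the consistent 3D poses.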

3.2. Input Paradigm

- Image-based methods take static images as input and consider only spatial context, which distinguishes them from video-based methods.

- Video-based methods face more challenges than image-based methods, such as processing temporal information, relating spatial information to temporal information, and handling motion changes across frames.
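One common way to expose temporal context to a model is to feed it a window of neighbouring frames around each target frame. The following sketch is a minimal illustration, not any surveyed method's pipeline: the function name `temporal_windows`, the array layout `(T, J, 2)`, and the edge-padding choice are all assumptions made for the example. A window length of 1 corresponds to the image-based setting.

```python
import numpy as np

def temporal_windows(keypoints_2d, receptive_field):
    """Build one temporal window per frame from a 2D keypoint sequence.

    keypoints_2d: array of shape (T, J, 2), T frames and J joints.
    receptive_field: odd number of frames in each window.
    Returns an array of shape (T, receptive_field, J, 2). Windows are
    centred on each frame; the sequence is padded by repeating the
    first and last frames so that border frames also get full windows.
    """
    if receptive_field % 2 == 0:
        raise ValueError("receptive_field must be odd")
    half = receptive_field // 2
    padded = np.pad(keypoints_2d, ((half, half), (0, 0), (0, 0)), mode="edge")
    num_frames = keypoints_2d.shape[0]
    return np.stack([padded[t:t + receptive_field] for t in range(num_frames)])

# Usage: 5 frames, 2 joints, windows of 3 frames.
sequence = np.arange(5 * 2 * 2, dtype=float).reshape(5, 2, 2)
windows = temporal_windows(sequence, receptive_field=3)
print(windows.shape)
```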

3.3. Supervision Form

- Unsupervised methods do not require multi-view image data, 3D skeletons, or correspondences between 2D and 3D points, nor do they use previously learned 3D priors during training.

- Fully-supervised methods rely on large training sets annotated with ground-truth 3D positions coming from multi-view motion capture systems.

- Weakly-supervised methods obtain supervision from other existing or easily obtained cues rather than ground-truth 3D positions. These cues take various forms, such as paired 2D ground truth or camera parameters.

- Semi-supervised methods use only a portion of annotated data (e.g., 10% of 3D labels), which corresponds to the setting where labeled training data is scarce.
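The difference between these supervision forms largely comes down to which loss terms can be computed for a given training sample. The sketch below is a simplified illustration under several assumptions: a pinhole camera with known intrinsics (`focal`, `center`), a hypothetical weighting factor `weight_2d`, and function names invented for this example. It does not reproduce the loss of any particular surveyed method.

```python
import numpy as np

def loss_3d(pred_3d, gt_3d):
    """Fully-supervised term: mean per-joint Euclidean error against 3D labels."""
    return np.linalg.norm(pred_3d - gt_3d, axis=-1).mean()

def loss_reprojection(pred_3d, gt_2d, focal, center):
    """Weakly-supervised term: project predicted 3D joints with known camera
    intrinsics and compare the result with 2D labels."""
    projected = focal * pred_3d[..., :2] / pred_3d[..., 2:3] + center
    return np.linalg.norm(projected - gt_2d, axis=-1).mean()

def semi_supervised_loss(pred_3d, gt_3d, gt_2d, has_3d, focal, center,
                         weight_2d=0.01):
    """Batch loss: reprojection term on every sample, plus the 3D term on the
    subset of samples flagged by the boolean mask has_3d.

    weight_2d is a hypothetical balancing factor (pixels vs. metres).
    """
    total = weight_2d * loss_reprojection(pred_3d, gt_2d, focal, center)
    if has_3d.any():
        total = total + loss_3d(pred_3d[has_3d], gt_3d[has_3d])
    return total
```

With `has_3d` all true and `weight_2d=0`, this reduces to the fully-supervised case; with `has_3d` all false, only the 2D cue drives training, which matches the weakly-supervised description above.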

4. Taxonomy

5. Single-Person 3D Pose Estimation

5.1. Single-Person 3D Pose Estimation from Images

5.1.1. Unsupervised

5.1.2. Semi-Supervised

5.1.3. Fully-Supervised

5.1.4. Weakly-Supervised

5.2. Single-Person 3D Pose Estimation from Videos

5.2.1. Unsupervised

5.2.2. Semi-Supervised

5.2.3. Fully-Supervised

5.2.4. Weakly-Supervised

6. Multi-Person 3D Pose Estimation

6.1. Multi-Person 3D Pose Estimation from Still Images

6.1.1. Fully-Supervised

6.1.2. Weakly-Supervised

6.2. Multi-Person 3D Pose Estimation from Video

6.2.1. Fully-Supervised

7. Regularization

8. Existing Datasets and Evaluation Metrics

8.1. Evaluation Datasets

- The Human3.6M Dataset [36]. This dataset contains 3.6 million video frames with the corresponding annotated 3D and 2D human joint positions, from 11 actors. Each actor performs 15 different activities captured from 4 unique camera views. The data were captured with 15 sensors (4 digital video cameras, 1 time-of-flight sensor, and 10 motion cameras), using hardware and software synchronization. The capture area was about 6 m × 5 m, of which roughly 4 m × 3 m was effective capture space, where subjects were fully visible in all video cameras.

- HumanEva-I & II Datasets [84]. The ground-truth annotations of both datasets were captured using ViconPeak’s commercial MoCap system. The HumanEva-I dataset contains 7-view video sequences (4 grayscale and 3 color) synchronized with 3D body poses. Four subjects with markers on their bodies perform six common actions (walking, jogging, gesturing, throwing and catching a ball, boxing, and combo) in a 3 m × 2 m capture area. HumanEva-II is an extension of the HumanEva-I dataset for testing, which contains two subjects performing the combo action.

- The MPI-INF-3DHP Dataset [49]. It is a recently proposed 3D dataset that covers both constrained indoor and complex outdoor scenes. It records eight actors (four females and four males) performing eight activities (e.g., walking/standing, exercise, sitting, crouch/reach, on the floor, sports, miscellaneous) from 4 camera views. It is collected with a markerless multi-camera MoCap system.

- The 3DPW Dataset [85]. It is a very recent dataset, captured mostly in outdoor conditions, using IMU sensors to compute pose and shape ground truth. It contains nearly 60,000 images of one or more people performing various actions in the wild.

- The CMU Panoptic Dataset [75]. This dataset consists of 31 full HD and 480 VGA video streams from synchronized cameras, with a frame rate of 29.97 FPS and a total duration of 5.5 h. It provides high-quality 3D pose annotations, which are computed using all the camera views. It is the most complete, open, and free-to-use dataset that can be used for 3D human pose estimation tasks. However, because its annotations were released only recently, most works in the literature use it only for qualitative evaluation [86] or for single-person pose detection [52], discarding multi-person scenes.

- The Campus Dataset [87]. This dataset uses three cameras to capture the interaction of three people in an outdoor environment. Because the training set is small, models trained on it tend to overfit. This dataset adopts the Average 3D Point Error and the Percentage of Correct Parts as evaluation metrics.

- The Shelf Dataset [87]. This dataset includes 3D annotated data and adopts the same metrics as the Campus dataset, but it is more complex. Four people standing close together disassemble a shelf while five calibrated cameras observe the scene, and each view is heavily occluded.

- The MuPoTS-3D Dataset [72]. This dataset is a recently released multi-person 3D pose test set containing 20 test sequences captured by a markerless motion capture system in five indoor and fifteen outdoor environments. Each sequence contains various activities for 2–3 people. The evaluation metric is the 3D PCK (the percentage of correct keypoints within a radius of 15 cm) over all annotated persons. When a person is missed, all joints of that person are counted as incorrect. An alternative evaluation mode considers only the detected joints.
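The two 3D PCK evaluation modes described for MuPoTS-3D can be sketched as follows. This is an illustrative implementation only, not the official evaluation script: the function name `pck_3d`, the array layout, the `detected` mask (which assumes predictions have already been matched to annotated persons), and the use of `<=` at the threshold are all assumptions.

```python
import numpy as np

def pck_3d(pred, gt, detected, threshold_mm=150.0, count_missed=True):
    """Percentage of correct 3D keypoints.

    pred, gt: arrays of shape (P, J, 3) in millimetres, one row per
              annotated person (rows of missed persons in pred are ignored).
    detected: boolean array of shape (P,), True where the annotated person
              was matched to a prediction.
    count_missed: if True, every joint of a missed person counts as
              incorrect; if False, only detected persons are evaluated.
    """
    distances = np.linalg.norm(pred - gt, axis=-1)            # (P, J)
    correct = (distances <= threshold_mm) & detected[:, None]
    if count_missed:
        return 100.0 * correct.mean()
    if not detected.any():
        return 0.0
    return 100.0 * correct[detected].mean()

# Usage: two annotated persons with four joints each; the second is missed.
gt = np.zeros((2, 4, 3))
pred = np.zeros((2, 4, 3))
pred[0, :, 0] = [0.0, 100.0, 200.0, 140.0]   # per-joint error in mm along x
detected = np.array([True, False])

print(pck_3d(pred, gt, detected, count_missed=True))    # all annotated persons
print(pck_3d(pred, gt, detected, count_missed=False))   # detected persons only
```

In this toy case three of the four joints of the detected person fall within 150 mm, so the two modes differ by exactly the penalty for the missed person.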

8.2. Evaluation Metrics

8.3. Summary on Datasets

9. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Bridgeman, L.; Volino, M.; Guillemaut, J.Y.; Hilton, A. Multi-Person 3D Pose Estimation and Tracking in Sports. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 15–20 June 2019; pp. 2487–2496.

- Arbués-Sangüesa, A.; Martín, A.; Fernández, J.; Rodríguez, C.; Haro, G.; Ballester, C. Always Look on the Bright Side of the Field: Merging Pose and Contextual Data to Estimate Orientation of Soccer Players. arXiv 2020, arXiv:2003.00943.

- Hwang, D.H.; Kim, S.; Monet, N.; Koike, H.; Bae, S. Lightweight 3D Human Pose Estimation Network Training Using Teacher-Student Learning. arXiv 2020, arXiv:2001.05097.

- Kappler, D.; Meier, F.; Issac, J.; Mainprice, J.; Cifuentes, C.G.; Wüthrich, M.; Berenz, V.; Schaal, S.; Ratliff, N.; Bohg, J. Real-time Perception meets Reactive Motion Generation. arXiv 2017, arXiv:1703.03512.

- Hayakawa, J.; Dariush, B. Recognition and 3D Localization of Pedestrian Actions from Monocular Video. arXiv 2020, arXiv:2008.01162.

- Andrejevic, M. Automating surveillance. Surveill. Soc. 2019, 17, 7–13.

- Mehta, D.; Sridhar, S.; Sotnychenko, O.; Rhodin, H.; Shafiei, M.; Seidel, H.P.; Xu, W.; Casas, D.; Theobalt, C. VNect: Real-time 3D Human Pose Estimation with a Single RGB Camera. arXiv 2017, arXiv:1705.01583.

- Li, G.; Tang, H.; Sun, Y.; Kong, J.; Jiang, G.; Jiang, D.; Tao, B.; Xu, S.; Liu, H. Hand gesture recognition based on convolution neural network. Clust. Comput. 2019, 22, 2719–2729.

- Skaria, S.; Al-Hourani, A.; Lech, M.; Evans, R.J. Hand-gesture recognition using two-antenna doppler radar with deep convolutional neural networks. IEEE Sens. J. 2019, 19, 3041–3048.

- Sun, Y.; Xue, B.; Zhang, M.; Yen, G.G.; Lv, J. Automatically Designing CNN Architectures Using the Genetic Algorithm for Image Classification. IEEE Trans. Cybern. 2020, 50, 3840–3854.

- Sun, X.; Xv, H.; Dong, J.; Zhou, H.; Chen, C.; Li, Q. Few-shot Learning for Domain-specific Fine-grained Image Classification. IEEE Trans. Ind. Electron. 2020, 68, 3588–3598.

- Han, F.; Zhang, D.; Wu, Y.; Qiu, Z.; Wu, L.; Huang, W. Human Action Recognition Based on Dual Correlation Network. In Asian Conference on Pattern Recognition; Springer: Berlin/Heidelberg, Germany, 2019; pp. 211–222.

- Zhang, D.; He, L.; Tu, Z.; Zhang, S.; Han, F.; Yang, B. Learning motion representation for real-time spatio-temporal action localization. Pattern Recognit. 2020, 103, 107312.

- Fu, J.; Liu, J.; Wang, Y.; Zhou, J.; Wang, C.; Lu, H. Stacked deconvolutional network for semantic segmentation. IEEE Trans. Image Process. 2019.

- Zhang, D.; He, F.; Tu, Z.; Zou, L.; Chen, Y. Pointwise geometric and semantic learning network on 3D point clouds. Integr. Comput. Aided Eng. 2020, 27, 57–75.

- Duvenaud, D.K.; Maclaurin, D.; Iparraguirre, J.; Bombarell, R.; Hirzel, T.; Aspuru-Guzik, A.; Adams, R.P. Convolutional Networks on Graphs for Learning Molecular Fingerprints. In Proceedings of the Advances in Neural Information Processing Systems 28 (NIPS 2015), Montreal, QC, Canada, 7–12 December 2015; pp. 2215–2223.

- Yang, W.; Ouyang, W.; Li, H.; Wang, X. End-to-End Learning of Deformable Mixture of Parts and Deep Convolutional Neural Networks for Human Pose Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3073–3082.

- Pavlakos, G.; Zhou, X.; Daniilidis, K. Ordinal Depth Supervision for 3D Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7307–7316.

- Cheng, Y.; Yang, B.; Wang, B.; Yan, W.; Tan, R.T. Occlusion-Aware Networks for 3D Human Pose Estimation in Video. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 723–732.

- Cheng, Y.; Yang, B.; Wang, B.; Tan, R.T. 3D Human Pose Estimation Using Spatio-Temporal Networks with Explicit Occlusion Training. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 10631–10638.

- Chen, Y.; Tian, Y.; He, M. Monocular human pose estimation: A survey of deep learning-based methods. Comput. Vis. Image Underst. 2020, 192, 102897.

- Sarafianos, N.; Boteanu, B.; Ionescu, B.; Kakadiaris, I.A. 3D human pose estimation: A review of the literature and analysis of covariates. Comput. Vis. Image Underst. 2016, 152, 1–20.

- Gong, W.; Zhang, X.; Gonzàlez, J.; Sobral, A.; Bouwmans, T.; Tu, C.; Zahzah, E.h. Human pose estimation from monocular images: A comprehensive survey. Sensors 2016, 16, 1966.

- Belagiannis, V.; Amin, S.; Andriluka, M.; Schiele, B.; Navab, N.; Ilic, S. 3D pictorial structures revisited: Multiple human pose estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1929–1942.

- Belagiannis, V.; Wang, X.; Schiele, B.; Fua, P.; Ilic, S.; Navab, N. Multiple human pose estimation with temporally consistent 3D pictorial structures. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 742–754.

- Fischler, M.A.; Elschlager, R.A. The representation and matching of pictorial structures. IEEE Trans. Comput. 1973, 100, 67–92.

- Ju, S.X.; Black, M.J.; Yacoob, Y. Cardboard people: A parameterized model of articulated image motion. In Proceedings of the Second International Conference on Automatic Face and Gesture Recognition, Killington, VT, USA, 14–16 October 1996; pp. 38–44.

- Bashirov, R.; Ianina, A.; Iskakov, K.; Kononenko, Y.; Strizhkova, V.; et al. Real-time RGBD-based Extended Body Pose Estimation. arXiv 2021, arXiv:2103.03663.

- Cootes, T.; Taylor, C.; Cooper, D.; Graham, J. Active Shape Models-Their Training and Application. Comput. Vis. Image Underst. 1995, 61, 38–59.

- Sidenbladh, H.; De la Torre, F.; Black, M.J. A framework for modeling the appearance of 3D articulated figures. In Proceedings of the Fourth IEEE International Conference on Automatic Face and Gesture Recognition (Cat. No. PR00580), Grenoble, France, 28–30 March 2000; pp. 368–375.

- Loper, M.; Mahmood, N.; Romero, J.; Pons-Moll, G.; Black, M.J. SMPL: A skinned multi-person linear model. ACM Trans. Graph. (TOG) 2015, 34, 1–16.

- Anguelov, D.; Srinivasan, P.; Koller, D.; Thrun, S.; Rodgers, J.; Davis, J. SCAPE: Shape completion and animation of people. ACM Trans. Graph. (TOG) 2005, 24, 408–416.

- Joo, H.; Simon, T.; Sheikh, Y. Total Capture: A 3D Deformation Model for Tracking Faces, Hands, and Bodies. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8320–8329.

- Bogo, F.; Kanazawa, A.; Lassner, C.; Gehler, P.; Romero, J.; Black, M.J. Keep it SMPL: Automatic estimation of 3D human pose and shape from a single image. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 561–578.

- Kanazawa, A.; Black, M.J.; Jacobs, D.W.; Malik, J. End-to-End Recovery of Human Shape and Pose. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7122–7131.

- Ionescu, C.; Papava, D.; Olaru, V.; Sminchisescu, C. Human3.6M: Large scale datasets and predictive methods for 3D human sensing in natural environments. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 1325–1339.

- Moon, G.; Chang, J.Y.; Lee, K.M. Camera Distance-Aware Top-Down Approach for 3D Multi-Person Pose Estimation From a Single RGB Image. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 10132–10141.

- Dabral, R.; Gundavarapu, N.B.; Mitra, R.; Sharma, A.; Ramakrishnan, G.; Jain, A. Multi-person 3D human pose estimation from monocular images. In Proceedings of the International Conference on 3D Vision (3DV), Quebec City, QC, Canada, 16–19 September 2019; pp. 405–414.

- Kundu, J.N.; Seth, S.; Rahul, M.; Rakesh, M.; Radhakrishnan, V.B.; Chakraborty, A. Kinematic-Structure-Preserved Representation for Unsupervised 3D Human Pose Estimation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 11312–11319.

- Kocabas, M.; Karagoz, S.; Akbas, E. Self-Supervised Learning of 3D Human Pose Using Multi-View Geometry. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1077–1086.

- Kundu, J.N.; Seth, S.; Jampani, V.; Rakesh, M.; Babu, R.V.; Chakraborty, A. Self-Supervised 3D Human Pose Estimation via Part Guided Novel Image Synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6152–6162.

- Rhodin, H.; Meyer, F.; Spörri, J.; Müller, E.; Constantin, V.; Fua, P.; Katircioglu, I.; Salzmann, M. Learning Monocular 3D Human Pose Estimation from Multi-view Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8437–8446.

- Rhodin, H.; Salzmann, M.; Fua, P. Unsupervised Geometry-Aware Representation for 3D Human Pose Estimation. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2018; pp. 765–782.

- Yang, W.; Ouyang, W.; Wang, X.; Ren, J.; Li, H.; Wang, X. 3D Human Pose Estimation in the Wild by Adversarial Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5255–5264.

- Qiu, H.; Wang, C.; Wang, J.; Wang, N.; Zeng, W. Cross View Fusion for 3D Human Pose Estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 4341–4350.

- Li, S.; Chan, A.B. 3D human pose estimation from monocular images with deep convolutional neural network. In Asian Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 332–347.

- Tekin, B.; Katircioglu, I.; Salzmann, M.; Lepetit, V.; Fua, P. Structured Prediction of 3D Human Pose with Deep Neural Networks. In Proceedings of the British Machine Vision Conference (BMVC), York, UK, 19–22 September 2016; pp. 130.1–130.11.

- Pavlakos, G.; Zhou, X.; Derpanis, K.G.; Daniilidis, K. Coarse-to-Fine Volumetric Prediction for Single-Image 3D Human Pose. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1263–1272.

- Mehta, D.; Rhodin, H.; Casas, D.; Fua, P.; Sotnychenko, O.; Xu, W.; Theobalt, C. Monocular 3D human pose estimation in the wild using improved CNN supervision. In Proceedings of the International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 506–516.

- Andriluka, M.; Pishchulin, L.; Gehler, P.; Schiele, B. 2D Human Pose Estimation: New Benchmark and State of the Art Analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3686–3693.

- Ganin, Y.; Lempitsky, V. Unsupervised domain adaptation by backpropagation. In Proceedings of the International Conference on Machine Learning, Lille, France, 7–9 July 2015; pp. 1180–1189.

- Iskakov, K.; Burkov, E.; Lempitsky, V.; Malkov, Y. Learnable Triangulation of Human Pose. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 7717–7726.

- Remelli, E.; Han, S.; Honari, S.; Fua, P.; Wang, R. Lightweight Multi-View 3D Pose Estimation through Camera-Disentangled Representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6040–6049.

- Sun, J.; Wang, M.; Zhao, X.; Zhang, D. Multi-View Pose Generator Based on Deep Learning for Monocular 3D Human Pose Estimation. Symmetry 2020, 12, 1116.

- Luvizon, D.; Picard, D.; Tabia, H. Multi-task Deep Learning for Real-Time 3D Human Pose Estimation and Action Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2752–2764.

- Tome, D.; Russell, C.; Agapito, L. Lifting from the Deep: Convolutional 3D Pose Estimation from a Single Image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5689–5698.

- Zhou, X.; Huang, Q.; Sun, X.; Xue, X.; Wei, Y. Towards 3D Human Pose Estimation in the Wild: A Weakly-supervised Approach. arXiv 2017, arXiv:1704.02447.

- Wandt, B.; Rosenhahn, B. RepNet: Weakly Supervised Training of an Adversarial Reprojection Network for 3D Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7774–7783.

- Chen, X.; Lin, K.Y.; Liu, W.; Qian, C.; Lin, L. Weakly-Supervised Discovery of Geometry-Aware Representation for 3D Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 10887–10896.

- Güler, R.A.; Kokkinos, I. HoloPose: Holistic 3D Human Reconstruction In-The-Wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 10876–10886.

- Güler, R.A.; Neverova, N.; Kokkinos, I. DensePose: Dense Human Pose Estimation in the Wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7297–7306.

- Iqbal, U.; Molchanov, P.; Kautz, J. Weakly-Supervised 3D Human Pose Learning via Multi-view Images in the Wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5243–5252.

- He, Y.; Yan, R.; Fragkiadaki, K.; Yu, S.I. Epipolar Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7779–7788.

- Tung, H.Y.F.; Tung, H.W.; Yumer, E.; Fragkiadaki, K. Self-supervised learning of motion capture. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5242–5252.

- Pavllo, D.; Feichtenhofer, C.; Grangier, D.; Auli, M. 3D Human Pose Estimation in Video With Temporal Convolutions and Semi-Supervised Training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7745–7754.

- Li, Z.; Wang, X.; Wang, F.; Jiang, P. On Boosting Single-Frame 3D Human Pose Estimation via Monocular Videos. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 2192–2201.

- Lin, M.; Lin, L.; Liang, X.; Wang, K.; Cheng, H. Recurrent 3D Pose Sequence Machines. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5543–5552.

- Cai, Y.; Ge, L.; Liu, J.; Cai, J.; Cham, T.J.; Yuan, J.; Thalmann, N.M. Exploiting Spatial-Temporal Relationships for 3D Pose Estimation via Graph Convolutional Networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 2272–2281.

- Wang, J.; Yan, S.; Xiong, Y.; Lin, D. Motion Guided 3D Pose Estimation from Videos. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2020; pp. 764–780.

- Zhou, X.; Zhu, M.; Leonardos, S.; Derpanis, K.G.; Daniilidis, K. Sparseness Meets Deepness: 3D Human Pose Estimation from Monocular Video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4966–4975.

- Dabral, R.; Mundhada, A.; Kusupati, U.; Afaque, S.; Sharma, A.; Jain, A. Learning 3D Human Pose from Structure and Motion. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2018; pp. 679–696.

- Mehta, D.; Sotnychenko, O.; Mueller, F.; Xu, W.; Sridhar, S.; Pons-Moll, G.; Theobalt, C. Single-shot multi-person 3D pose estimation from monocular RGB. In Proceedings of the International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 120–130.

- Zanfir, A.; Marinoiu, E.; Zanfir, M.; Popa, A.I.; Sminchisescu, C. Deep network for the integrated 3D sensing of multiple people in natural images. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 8420–8429.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Joo, H.; Liu, H.; Tan, L.; Gui, L.; Nabbe, B.; Matthews, I.; Kanade, T.; Nobuhara, S.; Sheikh, Y. Panoptic Studio: A Massively Multiview System for Social Motion Capture. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3334–3342.

- Tu, H.; Wang, C.; Zeng, W. VoxelPose: Towards Multi-camera 3D Human Pose Estimation in Wild Environment. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2020; pp. 197–212.

- Rogez, G.; Weinzaepfel, P.; Schmid, C. LCR-Net: Localization-Classification-Regression for Human Pose. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1216–1224.

- Rogez, G.; Weinzaepfel, P.; Schmid, C. LCR-Net++: Multi-person 2D and 3D pose detection in natural images. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 1146–1161.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Elmi, A.; Mazzini, D.; Tortella, P. Light3DPose: Real-time Multi-Person 3D Pose Estimation from Multiple Views. In Proceedings of the 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 2755–2762.

- Mehta, D.; Sotnychenko, O.; Mueller, F.; Xu, W.; Elgharib, M.; Fua, P.; Seidel, H.P.; Rhodin, H.; Pons-Moll, G.; Theobalt, C. XNect: Real-Time Multi-Person 3D Motion Capture with a Single RGB Camera. ACM Trans. Graph. 2020, 39.

- Wandt, B.; Ackermann, H.; Rosenhahn, B. A Kinematic Chain Space for Monocular Motion Capture. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2018; pp. 31–47.

- Chen, T.; Fang, C.; Shen, X.; Zhu, Y.; Chen, Z.; Luo, J. Anatomy-aware 3D Human Pose Estimation with Bone-based Pose Decomposition. IEEE Trans. Circuits Syst. Video Technol. 2021.

- Sigal, L.; Balan, A.O.; Black, M.J. HumanEva: Synchronized video and motion capture dataset and baseline algorithm for evaluation of articulated human motion. Int. J. Comput. Vis. 2010, 87, 4.

- Von Marcard, T.; Henschel, R.; Black, M.J.; Rosenhahn, B.; Pons-Moll, G. Recovering Accurate 3D Human Pose in the Wild Using IMUs and a Moving Camera. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2018; pp. 614–631.

- Dong, J.; Jiang, W.; Huang, Q.; Bao, H.; Zhou, X. Fast and Robust Multi-Person 3D Pose Estimation from Multiple Views. arXiv 2019, arXiv:1901.04111.

- Belagiannis, V.; Amin, S.; Andriluka, M.; Schiele, B.; Navab, N.; Ilic, S. 3D Pictorial Structures for Multiple Human Pose Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1669–1676.

- Martinez, J.; Hossain, R.; Romero, J.; Little, J.J. A Simple Yet Effective Baseline for 3D Human Pose Estimation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2659–2668.

- Nibali, A.; He, Z.; Morgan, S.; Prendergast, L. 3D human pose estimation with 2D marginal heatmaps. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; pp. 1477–1485.

- Kolotouros, N.; Pavlakos, G.; Black, M.; Daniilidis, K. Learning to Reconstruct 3D Human Pose and Shape via Model-Fitting in the Loop. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 2252–2261.

- Chen, C.H.; Tyagi, A.; Agrawal, A.; Drover, D.; Rohith, M.; Stojanov, S.; Rehg, J.M. Unsupervised 3D Pose Estimation With Geometric Self-Supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5707–5717.

- Wang, L.; Chen, Y.; Guo, Z.; Qian, K.; Lin, M.; Li, H.; Ren, J.S. Generalizing Monocular 3D Human Pose Estimation in the Wild. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Korea, 27 October–2 November 2019; pp. 4024–4033.

- Ershadi-Nasab, S.; Noury, E.; Kasaei, S.; Sanaei, E. Multiple human 3D pose estimation from multiview images. Multimed. Tools Appl. 2018, 77, 15573–15601.

- Chen, L.; Ai, H.; Chen, R.; Zhuang, Z.; Liu, S. Cross-View Tracking for Multi-Human 3D Pose Estimation at over 100 FPS. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3279–3288.

| Scenario | Input Paradigm | Degree of Supervision | 3D Annotation ¹ | Weak Annotation ² | Scale ³ | Wild ⁴ |
|---|---|---|---|---|---|---|
| Single-Person (Section 5) | Image-based (Section 5.1) | Unsupervised (Section 5.1.1) | − | − | − |  |
|  |  | Semi-supervised (Section 5.1.2) | ◯ | − |  |  |
|  |  | Fully-supervised (Section 5.1.3) | ◯ | − |  |  |
|  |  | Weakly-supervised (Section 5.1.4) | − | ◯ |  |  |
|  | Video-based (Section 5.2) | Unsupervised (Section 5.2.1) | − | − | − |  |
|  |  | Semi-supervised (Section 5.2.2) | ◯ | − |  |  |
|  |  | Fully-supervised (Section 5.2.3) | ◯ | − |  |  |
|  |  | Weakly-supervised (Section 5.2.4) | − | ◯ |  |  |
| Multi-Person (Section 6) | Image-based (Section 6.1) | Fully-supervised (Section 6.1.1) | ◯ | − |  |  |
|  |  | Weakly-supervised (Section 6.1.2) | − | ◯ |  |  |
|  | Video-based (Section 6.2) | Fully-supervised (Section 6.2.1) | ◯ | − |  |  |

| METHOD | MPJPE (mm) | SUPERVISION | TYPE |
|---|---|---|---|
| Li et al. [46] (2014) | 132.2 | fully-supervised | monocular |
| Zhou et al. [70] (2016) | 113.0 | weakly-supervised | monocular |
| Tekin et al. [47] (2016) | 116.8 | fully-supervised | monocular |
| Tome et al. [56] (2017) | 88.4 | weakly-supervised | monocular |
| Zhou et al. [57] (2017) | 64.9 | weakly-supervised | monocular |
| Kanazawa et al. [35] (2017) | 56.8 | weakly-supervised | monocular |
| Pavllo et al. [65] (2018) | 46.8 | semi-supervised | monocular |
| Pavlakos et al. [18] (2018) | 44.7 | weakly-supervised | monocular |
| Cheng et al. [19] (2019) | 42.9 | semi-supervised | monocular |
| RepNet [58] (2019) | 50.9 | weakly-supervised | monocular |
| HoloPose [60] (2019) | 50.4 | weakly-supervised | monocular |
| Luvizon et al. [55] (2020) | 48.6 | fully-supervised | monocular |
| Cheng et al. [20] (2020) | 40.1 | semi-supervised | monocular |
| Sun et al. [54] (2020) | 35.8 | fully-supervised | monocular |
| Martinez et al. [88] (2017) | 87.3 | fully-supervised | multi-view |
| Kocabas et al. [40] (2019) | 76.6 | semi-supervised | multi-view |
| Iskakov et al. [52] (2019) | 17.7 | fully-supervised | multi-view |
| Remelli et al. [53] (2020) | 30.2 | fully-supervised | multi-view |
| He et al. [63] (2020) | 19.0 | weakly-supervised | multi-view |
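
The MPJPE column above reports the mean per-joint position error in millimeters. As a rough illustration of how such a figure can be obtained for a single pose, the sketch below averages the Euclidean distance between predicted and ground-truth joints after aligning both skeletons at a root joint. The function name `mpjpe`, the `root_index` parameter, and the use of root alignment are assumptions made for this example; the methods listed in the table may follow different alignment protocols.

```python
import math


def mpjpe(pred, gt, root_index=0):
    """Mean per-joint position error, in the units of the inputs (e.g., mm).

    pred, gt: sequences of (x, y, z) joint coordinates in the same joint order.
    root_index: joint used to align both skeletons (an assumption of this sketch).
    """
    if len(pred) != len(gt) or len(pred) == 0:
        raise ValueError("pred and gt must be non-empty and of equal length")

    pred_root, gt_root = pred[root_index], gt[root_index]
    total = 0.0
    for p, g in zip(pred, gt):
        # Express each joint relative to its own skeleton's root joint.
        p_rel = [a - b for a, b in zip(p, pred_root)]
        g_rel = [a - b for a, b in zip(g, gt_root)]
        # Accumulate the Euclidean distance for this joint.
        total += math.dist(p_rel, g_rel)
    return total / len(pred)


# Toy two-joint example: the prediction is globally shifted by 10 mm on
# every axis, and the second joint is additionally off by (3, 4, 0) mm.
gt_pose = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
pred_pose = [(10.0, 10.0, 10.0), (13.0, 14.0, 10.0)]
print(mpjpe(pred_pose, gt_pose))  # 2.5
```

Because of the root alignment, the global 10 mm shift does not contribute to the error; only the 5 mm offset of the second joint does, averaged over two joints.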

| METHOD | 3DPCK | AUC | MPJPE (mm) | SUPERVISION | TYPE |
|---|---|---|---|---|---|
| Mehta et al. [49] (2017) | 76.5 | 40.8 | - | fully-supervised | monocular |
| Zhou et al. [57] (2017) | 69.2 | 32.5 | - | weakly-supervised | monocular |
| Yang et al. [44] (2018) | 69.0 | 32.0 | - | semi-supervised | monocular |
| MargiPose [89] (2019) | 95.1 | 62.2 | 60.1 | weakly-supervised | monocular |
| SPIN [90] (2019) | 92.5 | 55.6 | 67.5 | weakly-supervised | monocular |
| RepNet [58] (2019) | 82.5 | 58.5 | 97.8 | weakly-supervised | monocular |
| Chen et al. [91] (2019) | 71.1 | 36.3 | - | unsupervised | monocular |
| Chen et al. [83] (2021) | 87.9 | 54.0 | 78.8 | fully-supervised | monocular |
| Wang et al. [92] (2019) | 71.2 | 33.8 | - | weakly-supervised | multi-view |
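
The 3DPCK and AUC columns above are both derived from per-joint errors. As a hedged sketch of the usual idea, 3DPCK is the percentage of joints whose error falls within a distance threshold, and AUC averages that percentage over a range of thresholds. The helper names `pck3d` and `auc`, the 150 mm threshold, and the 31 evenly spaced thresholds are assumptions chosen for illustration; the exact settings used by each method in the table are not stated here.

```python
import math


def joint_errors(pred, gt):
    """Euclidean distance between corresponding predicted and true joints."""
    return [math.dist(p, g) for p, g in zip(pred, gt)]


def pck3d(errors, threshold=150.0):
    """Percentage of joints whose error is within `threshold` (assumed mm)."""
    return 100.0 * sum(e <= threshold for e in errors) / len(errors)


def auc(errors, max_threshold=150.0, steps=31):
    """Mean 3DPCK over `steps` evenly spaced thresholds in [0, max_threshold]."""
    thresholds = [max_threshold * i / (steps - 1) for i in range(steps)]
    return sum(pck3d(errors, t) for t in thresholds) / steps


# Toy example with three joints whose errors are 0, 100, and 200 mm.
gt_pose = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
pred_pose = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (0.0, 200.0, 0.0)]
errors = joint_errors(pred_pose, gt_pose)
print(pck3d(errors))  # two of the three joints are within 150 mm
print(auc(errors))
```

Unlike a single-threshold 3DPCK, the AUC rewards predictions that are correct at tight thresholds as well, which is why the two columns can rank methods differently.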

| METHOD | Total 3DPCK (a) | Total 3DPCK (b) | SUPERVISION | TYPE |
|---|---|---|---|---|
| LCR-Net [77] (2017) | 53.8 | 62.4 | weakly-supervised | monocular |
| ORPM [72] (2018) | 65.0 | 69.8 | fully-supervised | monocular |
| Moon et al. [37] (2019) | 81.8 | 82.5 | fully-supervised | monocular |
| XNect [81] (2020) | 70.4 | 75.8 | semi-supervised | monocular |
| LCR-Net++ [78] (2019) | 70.6 | 74.0 | weakly-supervised | monocular |
| HG-RCNN [38] (2019) | 71.3 | 74.2 | weakly-supervised | monocular |

| METHOD | Average PCP | AP | SUPERVISION | TYPE |
|---|---|---|---|---|
| 3DPS [87] (2014) | 75.8 | - | fully-supervised | multi-view |
| Belagiannis et al. [25] (2014) | 78.0 | - | weakly-supervised | multi-view |
| Belagiannis et al. [24] (2015) | 84.5 | - | fully-supervised | multi-view |
| Ershadi et al. [93] (2018) | 90.6 | - | weakly-supervised | multi-view |
| Dong et al. [86] (2019) | 96.3 | 61.6 | weakly-supervised | multi-view |
| Bridgeman et al. [1] (2019) | 92.6 | - | unsupervised | multi-view |
| Tu et al. [76] (2020) | 96.7 | 91.4 | fully-supervised | multi-view |
| Chen et al. [94] (2020) | 96.6 | - | unsupervised | multi-view |

| METHOD | Average PCP | AP | SUPERVISION | TYPE |
|---|---|---|---|---|
| 3DPS [87] (2014) | 71.4 | - | fully-supervised | multi-view |
| Belagiannis et al. [25] (2014) | 76.0 | - | weakly-supervised | multi-view |
| Belagiannis et al. [24] (2015) | 77.5 | - | fully-supervised | multi-view |
| Ershadi et al. [93] (2018) | 88 | - | weakly-supervised | multi-view |
| Dong et al. [86] (2019) | 96.9 | 75.4 | weakly-supervised | multi-view |
| Bridgeman et al. [1] (2019) | 96.7 | - | unsupervised | multi-view |
| Tu et al. [76] (2020) | 97.0 | 80.5 | fully-supervised | multi-view |
| Light3DPose [80] (2021) | 89.8 | - | weakly-supervised | multi-view |
| Chen et al. [94] (2020) | 96.8 | - | unsupervised | multi-view |
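
The Average PCP columns in the two tables above report the percentage of correct parts. A minimal sketch of one common formulation follows: a body part (limb) counts as correct when the mean error of its two endpoints is at most a fraction `alpha` of the ground-truth limb length. The function names, the `parts` index pairs, and the default `alpha = 0.5` are assumptions for illustration, and individual papers may use slightly different criteria.

```python
import math


def part_is_correct(pred_start, pred_end, gt_start, gt_end, alpha=0.5):
    """True if the mean endpoint error is within alpha times the true limb length."""
    mean_endpoint_error = (
        math.dist(pred_start, gt_start) + math.dist(pred_end, gt_end)
    ) / 2.0
    return mean_endpoint_error <= alpha * math.dist(gt_start, gt_end)


def pcp(pred_joints, gt_joints, parts, alpha=0.5):
    """Percentage of parts judged correct.

    parts: list of (start_index, end_index) pairs into the joint lists
    (a hypothetical skeleton definition for this sketch).
    """
    correct = sum(
        part_is_correct(pred_joints[i], pred_joints[j], gt_joints[i], gt_joints[j], alpha)
        for i, j in parts
    )
    return 100.0 * correct / len(parts)


# Toy three-joint chain with two 100 mm limbs; the last joint is 120 mm off.
gt_joints = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (200.0, 0.0, 0.0)]
pred_joints = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (200.0, 120.0, 0.0)]
parts = [(0, 1), (1, 2)]
print(pcp(pred_joints, gt_joints, parts))  # 50.0
```

In the toy example the first limb is reproduced exactly, while the second has a mean endpoint error of 60 mm against a 50 mm tolerance, so one of the two parts is counted as correct.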

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, D.; Wu, Y.; Guo, M.; Chen, Y. Deep Learning Methods for 3D Human Pose Estimation under Different Supervision Paradigms: A Survey. Electronics 2021, 10, 2267. https://doi.org/10.3390/electronics10182267
