Abstract

A decision-making system is one of the most important tools in data mining. The data mining field has become a forum where it is necessary to utilize users' interactions, decision-making processes and overall experience. E-learning is a progressive method of providing online education over the long term, in contrast to the customary face-to-face process of teaching. Through e-learning, an ever-increasing number of learners have benefited from different programs. However, the high diversity of online students presents new difficulties for conventional one-size-fits-all learning systems, in which a single set of learning resources is provided to all learners. The main limitations of well-known recommender systems are large variations in the expected absolute error, long query processing time, and low accuracy in the final recommendation. The main objectives of this research are the design and analysis of a new transductive support vector machine-based hybrid personalized recommender for public machine learning datasets. The learning experience is captured through the habits of the learners. This research designs and evaluates several new strategies to improve the performance of a hybrid recommender. The modified one-source denoising approach is designed to preprocess the learner dataset. The modified anarchic society optimization strategy is designed to improve the performance measurements. The enhanced generalized sequential pattern strategy is proposed to mine the sequential patterns of learners. The enhanced transductive support vector machine is developed to evaluate the extracted habits and interests. These new strategies analyze the confidential rate of learners and provide the best recommendation to the learners.
The proposed generalized model is simulated on public machine learning datasets covering movies, music, books, food, merchandise, healthcare, dating, scholarly papers, and open university learning recommendation. The experimental analysis concludes that the enhanced clustering strategy discovers clusters of random size. The proposed recommendation strategies achieve significantly better performance than existing methods in terms of expected absolute error, accuracy, ranking score, recall, and precision. The accuracy of the proposed model on these datasets lies between 82 and 98%. The MAE lies between 5 and 19.2% for the simulated public datasets. The simulation results indicate that the proposed generalized recommender can substantially improve recommendation quality and performance.

Introduction

E-learning has recently come to be regarded as a progressive, long-term method of online education, in contrast to the customary face-to-face process of teaching. Through e-learning, an ever-increasing number of learners have benefited from different programs. However, the high diversity of online students presents new difficulties for conventional one-size-fits-all learning systems, in which a single set of learning resources is provided to every student. The main limitations of well-known recommenders are large variations in the expected absolute error, long query processing time, and low accuracy in the final recommendation.

Today, e-learning has grown as an alternative to conventional learning for achieving learning objectives for everyone. E-learning has various definitions, some of which are confusing [1,2,3,4]. Here, e-learning is defined as the use of web technologies to deliver learning to students effectively at any time and place. E-learning is, in the main, more flexible than conventional learning. Conventional education depends on a ‘one size fits all’ scheme that tends to support just a single instructional form since, in typical classroom settings, an instructor manages several students at the same time. In such a situation, the students are forced to use uniform course material regardless of their own needs, attributes or inclinations [5]. Once the teacher starts to give detailed, organized guidance to the students, class productivity increases. However, it is very difficult for an instructor to choose the ideal learning strategy for each student in a class. Even when the teacher can work out each of these strategies, it is significantly harder to apply numerous strategies in a classroom [6, 7]. A personalized e-learning environment allows the content or the associated courseware to be adapted automatically to meet the students’ requirements.

A significant research problem in e-learning is the design of a better recommender for learners. To resolve the problems in the existing methods, the proposed hybrid recommendation model consists of the following essential functions: domain function, learner function, application function, adaptation function, and session monitor. New strategies are designed and evaluated on public benchmark datasets. The experimental analysis concludes that the enhanced clustering strategy discovers clusters of random sizes. The proposed method minimizes the expected absolute error even when the learner size L exceeds 250, while reducing the computational complexity and increasing the accuracy. Section “Literature survey” reviews recent contributions to recommender systems. The proposed methodology is presented in section “Proposed methodology”. The simulation of the proposed model and the experimental outcomes are analyzed in section “Experimental results and analysis”. The final inferences are given in section “Conclusions and future work”.

Literature survey

Several personalized e-learning frameworks have been reported in the literature that use learner characteristics such as knowledge level, learning style, learner motivation, and media preferences. Many authors have long studied the behavior of students in teaching and learning. The learning style, as both a learner characteristic and an instructional strategy, is explained in [8]. As a learner characteristic, it indicates how a learner studies and what he/she likes to study [9,10,11]. As an instructional strategy, it informs the cognition, context, and content of learning. It may likewise be characterized as how an individual gathers, processes, and organizes information. In this way, the learning style gives teachers an overview of the tendencies and preferences of individual students. A recommender framework was designed that combines content-based analysis, collaborative filtering, and data mining techniques. It analyzes students' reading data and produces scores to help students select relevant lessons [12]. A new structure has been developed for personalized learning recommender frameworks based on a recommendation method that identifies the student's learning requirements and then uses matching rules to generate recommendations of learning materials. That study uses multi-criteria decision models and fuzzy set techniques to handle student preferences and requirement analysis. Student ability is a factor that is often disregarded when implementing a personalization scheme, which leads to disorientation of the student [13]. A framework for personalized e-learning is proposed that combines collaborative filtering with item response theory. The framework provides a single learning path to students, and hence ensures effective learning.
The technique of organizing lessons and materials and estimating the ability of students using Maximum Likelihood Estimation (MLE) is discussed in [14].

Recommendations are critically needed in online communities because of their fragile nature. A generic personalization framework was designed and evaluated on the Comtella-D discussion forum. Feedback and interactions from users serve as personalization rules that identify the appropriate recommendation technique given user input data. Results demonstrate that collaborative filtering methods can be used effectively on small datasets, such as a discussion forum [15]. Teaching strategies are matched to students' personalities by using an indicator. Following this approach, a structure for building an adaptive learning management framework that takes students' preferences into account has been produced. The student profile is built from the results obtained by the learners on the index of learning styles questionnaire. The interactions are then modeled using a Bayesian model [16]. Practical context-aware recommendations in e-learning frameworks rely on domain perspective modeling in numerous situations to produce personalized recommendations for the instructors and researchers who create the learning resources used by the learners. The recommendation procedure is divided into three phases. First, social context positions of interest are created according to the user's stage. Second, the current status of the social, location, and user context is inspected. Finally, the feasibility of any particular learning objects that ought to be recommended is examined [17].

An ontology-based approach for semantic content recommendation towards context-aware e-learning is developed to enhance the efficiency and effectiveness of learning [18]. The recommender takes knowledge about the learner (user context), knowledge about the content, and knowledge about the domain being learned into consideration. An effective e-learning recommendation system based on self-organizing maps and association mining is developed in [19]. That work constructs a hybrid system with an artificial neural network (ANN) and data-mining (DM) techniques to classify e-learner groups. A personalized learning full-path recommendation model based on LSTM neural networks is developed in [20]. This model relies on clustering and machine learning techniques. The designed system first clusters a collection of learners based on a feature similarity metric and trains a Long Short-Term Memory (LSTM) model to predict their learning paths and performance. Personalized learning full paths are then selected from the results of path prediction. Finally, a suitable learning full path is explicitly recommended to a test learner. In that work, a series of experiments were carried out on learning resource datasets. A novel recommender algorithm that recommends personalized learning objects based on student learning styles is developed in [21]. Various similarity metrics are considered in an experimental study to identify the best ones to use in a recommender system for learning objects. The approach is based on the Felder and Silverman learning style model, which is used to represent both the student learning styles and the learning object profiles.

An approach for building the architecture of a recommender system for the e-learning environment, considering learning style and the learner's knowledge level, is discussed in [22]. The designed approach is based on four modules. Some of the problems in present recommenders are large variations in the expected absolute error and high computational time, together with low accuracy in the recommendations to learners [23,24,25,26,27,28,29]. Thus the proposed model develops a new transductive support vector machine (TSVM) based hybrid and personalized recommender for learners in real-time applications. The recent recommendation methods, their datasets, advantages, and limitations are summarized in Table 1 [23,24,25,26,27,28,29].

Proposed methodology

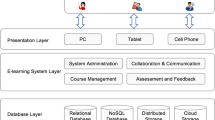

This research develops a hybrid recommendation method that allows learners to use teaching material organized in any suitable course. The designed system is intended for learners who have no programming knowledge. It also contains a portion of the information used for testing the obtained data. Its main aim is to suggest useful and motivating materials to e-learners depending on their diverse conditions, preferences, knowledge, and other significant attributes. The architecture of the proposed recommender is shown in Fig. 1.

Several new strategies are designed and evaluated on standard datasets to improve the performance of a hybrid recommender. This research designs the recommender system with the following new strategies: the Modified One Source Denoising (MOSD) approach, which is applied to preprocess the learner dataset; the Modified Anarchic Society Optimization (MASO) strategy, which is applied to improve the performance measurements; the Enhanced Generalized Sequential Pattern (EGSP) strategy, which is applied to mine the sequential patterns of learners; and the Enhanced Transductive Support Vector Machine (ETSVM), which is applied to evaluate the extracted habits and interests.

Learners are grouped according to their learning styles and behaviors using an enhanced version of the widely used Density-Based Spatial Clustering of Applications with Noise (DBSCAN) algorithm. DBSCAN groups similar learners. It treats learner groups as dense regions of objects in the data space, separated by regions of low object density. The enhanced DBSCAN strategy applies the concepts of density reachability and density connectivity. SVM is a supervised Machine Learning (ML) model used for classification.

TSVM is generally a continuous classifier that finds the best hyperplane in the learner's feature space with a transductive procedure that includes unlabeled samples in the training step. TSVM is also applied in learning strategies. The major issue of the clustering step is that its parameters need to be tuned. To solve this issue, the parameters are tuned with Anarchic Society Optimization (ASO), a recent swarm-intelligence-based optimization method. A number of society members are initially chosen inside the search space for tuning the two parameters. The advantages of the proposed generalized model over some existing methods are reduced computational time and good recommendation accuracy. The proposed model also minimizes the expected absolute error even when the learner size L exceeds 250. The purposes of the functions of the new model are listed in Table 2.

The proposed generalized hybrid recommendation model consists of the following important functions: domain function, learner function, application function, adaptation function, and session monitor. The domain function stores the essential learning materials, tutorials, and tests for everyone. The learner function collects the complete information about each learner.

The framework uses this information to estimate the learner's behavior and adapts to the learner's needs accordingly. The application function carries out the adaptation. The adaptation function tries to shorten the instructional rules through the application function. These two functions are kept separate so that adding new content groups and functionality is easier.

In the session monitor, the system progressively rebuilds the student model throughout the session in order to keep track of the learner's actions and progress, to detect and identify errors, and possibly to redirect the session accordingly. At the end of the session, every student's preferences are recorded. If learners do not agree with the system's assumptions about their preferences, they can indicate and make adjustments during the learning session. The student model is then stored together with the required information, which is used to prepare the subsequent session. The adaptation function provides two general personalization steps.

The structure of the new recommender is shown in Algorithm 1 and its flowchart is depicted in Fig. 2.

MOSD Approach for data preprocessing

The system identifies the various learning styles and learner behaviors by checking the server logs of the learners. Typically, a log file may include many unnecessary responses within a short period, and such behaviors may recur numerous times in a similar pattern. Identifying the noise and taking steps to eliminate it are therefore very important for improving the results of subsequent data analysis. The MOSD is presented in Algorithm 2.

When t-source denoising is implemented with t re-ratings, it is not practical to have the items re-rated several times by the same user. If all the ratings differ by more than a specified threshold, there is a strong disagreement, and the rating is removed from the present dataset. If at least two ratings are different, there is a mild disagreement.
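The disagreement rule described above can be sketched as follows. This is a minimal illustration, not the paper's Algorithm 2: the function names, the `"strong"`/`"mild"`/`"agree"` labels, and the reading of the rule as "every pair of ratings differs by more than the threshold" are assumptions made for the example.

```python
from itertools import combinations


def classify_reratings(ratings, threshold):
    """Classify the agreement among repeated ratings of one item by one user.

    Assumed reading of the rule: "strong" when every pair of ratings differs
    by more than `threshold`, "mild" when at least two ratings differ,
    otherwise "agree".
    """
    pairs = list(combinations(ratings, 2))
    if not pairs:
        return "agree"  # a single rating cannot disagree with itself
    if all(abs(a - b) > threshold for a, b in pairs):
        return "strong"
    if any(a != b for a, b in pairs):
        return "mild"
    return "agree"


def denoise(rerated, threshold):
    """Drop the (user, item) entries whose re-ratings strongly disagree."""
    return {
        key: ratings
        for key, ratings in rerated.items()
        if classify_reratings(ratings, threshold) != "strong"
    }
```

Entries with only mild disagreement are kept here; how the original algorithm resolves them is not stated in this section.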

Enhanced clustering for the learners

Recommendations should not be designed uniformly for the entire group of learners, since learners' skills with the related learning material and their ability to handle a task vary with their knowledge level. In this research, clustering is carried out as an initial step to group learners according to their learning styles. These groups are used to identify meaningful choices in frequent sequences of learning behaviors.

Learners are grouped according to their learning styles and behaviors using an enhanced version of the widely used DBSCAN clustering algorithm. DBSCAN is a clustering strategy that groups similar learners. It treats learner groups as dense regions of objects in the data space, separated by regions of low object density.

The enhanced DBSCAN strategy applies the concepts of density reachability and density connectivity. Let {x1, x2, x3, …, xn} be the set of learner data points. The strategy takes two parameters: the distance ε and minimum-points, i.e., the least number of points needed to form a cluster. It finds every maximal density-connected region in order to group the learners in the learning space. These regions are known as clusters. Any learner data point that is not contained in a cluster is treated as noise and can be ignored. The enhanced clustering is shown in Algorithm 3.

Density reachability

A learner data point p is said to be density reachable from a learner data point q if p is within distance ε of q and q has a sufficient number of learner data points (at least minimum-points) in its neighborhood within distance ε.

Density connectivity

Learner data points p and q are said to be density connected if there is a learner data point r that has a sufficient number of points in its neighborhood and both p and q are within distance ε of it. This is a chaining process. Therefore, if q is a neighbor of r, r is a neighbor of s, s is a neighbor of t, and t in turn is a neighbor of p, then q is regarded as a neighbor of p.
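To make density reachability and density connectivity concrete, the following is a minimal sketch of plain DBSCAN. It is not the enhanced variant of Algorithm 3, which is not reproduced in this text; Euclidean distance and the names `region_query` and `dbscan` are assumptions made for the illustration.

```python
import math


def region_query(points, idx, eps):
    """Indices of all points within eps of points[idx] (including itself)."""
    return [j for j, q in enumerate(points) if math.dist(points[idx], q) <= eps]


def dbscan(points, eps, min_pts):
    """Return one label per point: cluster id 0, 1, ... or -1 for noise."""
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        cluster += 1  # i is a core point: start a new cluster
        labels[i] = cluster
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: reachable but not core
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is core: keep chaining
    return labels
```

The inner loop is the chaining process: every core point reached from the seed pulls its own ε-neighborhood into the same cluster, which is how density-connected points end up grouped together.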

Calculating the parameters ε and minimum-points

The dynamic procedure takes two learner data points as input; the automatically generated parameters vary with the learner data points. The distance between two learner data points is given by distance (a, b) and defined as follows:

where a = (xa1, xa2,...,xap) and b = (xb1,xb2,...,xbp) are two p-dimensional learners data positions, and q > 0.
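The distance equation itself is not reproduced in this text. Given two p-dimensional points and an exponent q > 0, a natural candidate is the Minkowski distance, and the sketch below assumes that form; the function name and the default q = 2 (Euclidean) are choices made for the example.

```python
def minkowski_distance(a, b, q=2.0):
    """Minkowski distance (sum_i |a_i - b_i|^q)^(1/q), assumed here for distance(a, b)."""
    if len(a) != len(b):
        raise ValueError("points must have the same dimension")
    if q <= 0:
        raise ValueError("q must be positive")
    return sum(abs(x - y) ** q for x, y in zip(a, b)) ** (1.0 / q)
```

With q = 1 this is the Manhattan distance and with q = 2 the Euclidean distance; for q < 1 the triangle inequality no longer holds, so the result is not a true metric.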

Automatic parameter generation

The enhanced clustering (EC) algorithm discovers clusters of varied density by automatically generating multiple pairs of ε and minimum-points.

The major issue of this clustering is that its two parameters, ε and minimum-points, need to be tuned. To solve this issue, the two parameters are tuned using ASO. In ASO, a recent Swarm Intelligence (SI) based optimization method, a number of society members are initially chosen inside the search space for tuning ε and minimum-points. Then, the clustering accuracy of every member is calculated from these parameters. Let \(X_{i}(k)\) denote the position of the ith member in the kth iteration. Let \(X^{*}(k)\) denote the best position experienced by all the members in the kth iteration. The best position visited by the ith member during the first k iterations is \(P_{i}(k)\). The best position visited by all members during the first k iterations is \(G(k)\). From the computed fitness value and its comparison with \(X^{*}(k)\), \(P_{i}(k)\) and \(G(k)\), the movement policy, and hence the new position of the member, is determined. After a sufficient number of iterations, at least one of the members reaches the optimal position of the cluster parameters. The elements of the algorithm, according to the movement policies, are defined as follows.

Movement policy based on the current position

The first movement policy for the ith member in the kth iteration, \({\text{MP}}_{i}^{\text{current}}(k)\), is chosen based on the member's current position in the space of the parameters ε and minimum-points. The Fickleness Index \({\text{FI}}_{i}(k)\) of the ith member in the kth iteration is used in selecting the movement policy. This index measures the satisfaction of the ith member with its current position compared with the positions of the other members. If the fitness is positive, the Fickleness Index (\(0 \le {\text{FI}}_{i}(k) \le 1\)) can be defined as follows:

The range of \(\alpha_{i}\) is \(0 \le \alpha_{i} \le 1\). Based on the value of \({\text{FI}}_{i}(k)\), the ith member chooses its next position. If \({\text{FI}}_{i}(k)\) is low, the ith member has among the best cluster parameters of all the members, so it is better to choose the movement policy based on \(X^{*}(k)\). Otherwise, the ith member is not satisfied with its position. Hence the movement policy for the ith member, based on the value of \({\text{FI}}_{i}(k)\), is defined as follows:

Movement policy based on the positions of other members

The second movement policy for the ith member in the kth iteration is \({\mathrm{MP}}_{i}^{\mathrm{society}}(k)\). It is chosen based on the positions of the other members. Although each member should logically move toward \(G(k)\), a member's movement does not always follow this rational behavior.

The External Irregularity index \({\text{EI}}_{i}(k)\) measures the distance of the ith member of the population from \(G(k)\). It indicates whether the member is expected to behave more rationally, with less dispersion, and is also used to define the movement policy based on the positions of the other members.

Therefore, \({\text{EI}}_{i}(k)\) for the ith member in the kth iteration is evaluated.

Here \(\theta_{i}\) and \(\delta_{i}\) are positive numbers and \(D(k)\) is a suitable coefficient of variation. If a member is close to \(G(k)\), it behaves more rationally. Otherwise, it shows anarchic behavior. Consequently, by choosing a threshold for \({\text{EI}}_{i}(k)\), the movement policy based on the positions of the other members can be defined.

As the threshold approaches unity, the members behave more rationally and show more regular behavior.

Movement policy based on past positions

The third movement policy for the ith member in the kth iteration, \({\text{MP}}_{i}^{\text{past}}(k)\), is chosen based on the member's previous positions. Under this policy, the position of the ith member in the kth iteration is compared with \(P_{i}(k)\). If the position of the ith member is close to \(P_{i}(k)\), the member behaves more rationally. Otherwise, the member behaves irregularly. To apply the movement policy based on previous positions, the Internal Irregularity index \({\text{II}}_{i}(k)\) for the ith member in the kth iteration is defined.

Here \(\beta_{i} > 0\). As with the previous policy, by choosing a threshold for \({\text{II}}_{i}(k)\), the movement policy based on previous positions is defined.
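The equations for the three indices are not reproduced in this text. The sketch below uses forms commonly cited for ASO that are consistent with the stated ranges (\(0 \le \alpha_{i} \le 1\), positive \(\theta_{i}\) and \(\beta_{i}\), positive fitness, minimization). These forms, the function names, and the threshold rule are assumptions for illustration and may differ from the paper's Algorithm 4.

```python
import math


def fickleness_index(f_current, f_best_iter, f_personal_best, alpha):
    """Assumed form: FI = 1 - alpha*f(X*)/f(X_i) - (1 - alpha)*f(P_i)/f(X_i)."""
    return (1.0
            - alpha * f_best_iter / f_current
            - (1.0 - alpha) * f_personal_best / f_current)


def external_irregularity(f_current, f_global_best, theta):
    """Assumed form: EI = 1 - exp(-theta * (f(X_i) - f(G)))."""
    return 1.0 - math.exp(-theta * (f_current - f_global_best))


def internal_irregularity(f_current, f_personal_best, beta):
    """Assumed form: II = 1 - exp(-beta * (f(X_i) - f(P_i)))."""
    return 1.0 - math.exp(-beta * (f_current - f_personal_best))


def choose_policy(index, threshold):
    """Hypothetical rule: a low index means guided movement, else anarchic."""
    return "guided" if index <= threshold else "anarchic"
```

Under these forms each index is 0 when the member already sits at the reference position and approaches 1 as its fitness falls further behind, which matches the qualitative description of rational versus irregular behavior.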

Combination of movement policies

To select the final movement policy, the three policies are combined. Each member combines the movement policies described above by some rule or method and moves to a new position. One of the simplest ways is to pick the policy with the best answer. The alternative is to combine the movement policies sequentially, which is called the sequential combination rule. The combination step can also be applied to continuous problems coded as chromosomes.

The modified ASO algorithm is presented in Algorithm 4. It takes the N members as input and produces the optimized values of ε and minimum-points. Let S represent the solution space and let the function \(f: S \to \mathbb{R}\) be minimized over S. Assume that a society with N members is searching for the best place to live, that is, the global minimum of f over S. Initially, some of the society members are randomly chosen from S. Then the fitness function, which scores each candidate location, is evaluated. The fitness value and its comparison with \(X^{*}(k)\), \(P_{i}(k)\) and \(G(k)\) determine the new position of each member. After sufficient iterations, at least one of the members reaches the near optimum.

EGSP strategy

Learners with different learning styles have different repeated sequences of behavior. Consequently, students are grouped according to their behaviors, and behavior patterns are then found for each student using the EGSP. The EGSP is proposed for solving frequent pattern mining problems. One way to use the level-wise model is to first find all the frequent items in a level-wise manner. This simply means counting the occurrences of every single item in the database. Non-frequent items are then removed to filter the transactions, so that every transaction contains only the frequent items initially found. This modified database is then given as input to the EGSP algorithm. This step requires one pass over the entire database. The EGSP strategy then makes multiple passes over the database, as explained in the following sections.

Candidate creation

Given the set of frequent (i−1)-sequences Fn (i−1), the candidates for the next pass are created by joining Fn (i−1) with itself. Candidates with at least one sub-sequence that is not frequent are removed in the pruning step.

Pruning phase

The pruning step removes any sequence that has at least one sub-sequence that is not frequent.

Support counting

Typically, a hash tree-based search is used for efficient support counting. Subsequently, non-maximal frequent sequences are removed. The EGSP strategy makes multiple passes over the database. In the first pass, every single item (1-sequence) is counted. From the frequent items obtained, the set of candidate 2-sequences is formed, and their frequency is determined in another pass. These frequent 2-sequences are used to generate the candidate 3-sequences, and the process is repeated until no more frequent sequences are found. The hash tree-based search is explained in Algorithm 5.
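The level-wise loop of candidate creation, pruning, and support counting can be sketched as follows. This is a simplified GSP-style illustration, not the EGSP of Algorithm 5: each sequence element is a single item, support is counted by a linear scan rather than a hash tree, non-maximal sequences are not removed, and all function names are hypothetical.

```python
def is_subsequence(candidate, sequence):
    """True if the candidate's items appear in the sequence in the same order."""
    it = iter(sequence)
    return all(item in it for item in candidate)


def support(candidate, database):
    """Number of learner sequences that contain the candidate."""
    return sum(is_subsequence(candidate, seq) for seq in database)


def generate_candidates(frequent, k):
    """Join frequent (k-1)-sequences, then prune by infrequent sub-sequences."""
    frequent = set(frequent)
    joined = {a + (b[-1],) for a in frequent for b in frequent
              if a[1:] == b[:-1]}
    return {c for c in joined
            if all(c[:i] + c[i + 1:] in frequent for i in range(k))}


def gsp(database, min_support):
    """Return {sequence: support} for every frequent sequence."""
    items = {item for seq in database for item in seq}
    frequent = {(i,) for i in items if support((i,), database) >= min_support}
    result = {}
    k = 1
    while frequent:
        for seq in frequent:
            result[seq] = support(seq, database)
        k += 1
        candidates = generate_candidates(frequent, k)
        frequent = {c for c in candidates
                    if support(c, database) >= min_support}
    return result
```

For instance, with the sequences `("a","b","c")`, `("a","c")`, `("a","b","c","d")`, `("b","c")` and a minimum support of 3, the 2-sequence `("a","b")` is dropped (support 2) while `("a","c")` and `("b","c")` survive, and no 3-sequence candidate can be joined from them.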

ETSVM evaluation

TSVM is generally a continuous classifier that finds the best hyperplane in the learner's feature space with a transductive procedure that includes unlabeled samples in the training step. The modified TSVM used to learn from the learner's data is presented in this section. In the modified TSVM, the learner's rating is determined from the percentage of ideal answers, and it is used to partition the clustered users according to their ratings of frequent sequences. The learning step of the ETSVM is designed as an optimization problem and defined as follows:

Subject to the constraints:

The constants C and \(C^{*}\) are user-specified penalty values for training. \(\xi_{i}\) and \(\xi_{j}^{*}\) are the slack variables of the training and transductive samples, respectively, and d denotes the number of transductive samples. Modified TSVM training is designed to handle the issues mentioned above. Finally, the decision function of the modified TSVM with Lagrange multipliers \(\alpha_{i}\) and \(\alpha_{j}^{*}\) is defined as follows:
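The optimization problem, its constraints, and the decision function are not reproduced in this text. For orientation, the standard soft-margin TSVM formulation is given below; it is consistent with the symbols C, \(C^{*}\), \(\xi_{i}\), \(\xi_{j}^{*}\), d, \(\alpha_{i}\) and \(\alpha_{j}^{*}\) used here, but whether the enhanced variant modifies it is not stated. The symbols n (number of labeled samples), w, b, \(x_{i}\), \(y_{i}\), \(x_{j}^{*}\), \(y_{j}^{*}\) and the kernel K are assumptions introduced for the illustration.

```latex
\min_{w,\, b,\, \xi,\, \xi^{*},\, y^{*}} \quad
  \frac{1}{2}\lVert w \rVert^{2}
  + C \sum_{i=1}^{n} \xi_{i}
  + C^{*} \sum_{j=1}^{d} \xi_{j}^{*}

\text{subject to} \quad
\begin{aligned}
  y_{i}\,(w \cdot x_{i} + b) &\ge 1 - \xi_{i}, & \xi_{i} &\ge 0, & i &= 1, \dots, n, \\
  y_{j}^{*}\,(w \cdot x_{j}^{*} + b) &\ge 1 - \xi_{j}^{*}, & \xi_{j}^{*} &\ge 0, & j &= 1, \dots, d.
\end{aligned}

f(x) = \operatorname{sign}\!\left(
  \sum_{i=1}^{n} \alpha_{i}\, y_{i}\, K(x_{i}, x)
  + \sum_{j=1}^{d} \alpha_{j}^{*}\, y_{j}^{*}\, K(x_{j}^{*}, x)
  + b \right)
```

In this form the labels \(y_{j}^{*}\) of the transductive samples are themselves optimization variables, which is what distinguishes the transductive problem from an ordinary SVM.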

Trust-based weighted mean item

A simple recommendation method for estimating how much an end-user likes a target item i, in a recommender system that does not include a trust network, is to compute the average rating for i from the ratings ru,i of all the system's users u who are already familiar with i. The trust-based weighted mean refines this by using the trust values ta,u, which reflect the degree to which the raters u are trusted by user a. This allows the sources to be differentiated, so that more weight is assigned to the ratings of more trusted users. Let RT be the set of users with trust value ta,u. Then the rating of item i for user a is evaluated as follows:
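The equation is not reproduced in this text. The usual trust-based weighted mean divides the trust-weighted sum of ratings by the sum of trust values over the raters in RT, and the sketch below assumes that form; the function name, the dictionary layout, and the choice to return `None` when no trusted rater exists are assumptions for the example.

```python
def trust_weighted_rating(ratings, trust):
    """Predict user a's rating of item i as sum(t_au * r_ui) / sum(t_au).

    ratings: {user: r_ui} for users who rated item i.
    trust:   {user: t_au} trust that user a places in each rater.
    Raters without a positive trust value are ignored.
    """
    raters = [u for u in ratings if trust.get(u, 0.0) > 0.0]
    total_trust = sum(trust[u] for u in raters)
    if total_trust == 0:
        return None  # no trusted rater: the prediction is undefined
    return sum(trust[u] * ratings[u] for u in raters) / total_trust
```

With ratings of 4 and 2 from raters trusted at 0.9 and 0.1, the prediction is pulled toward the more trusted rater's score rather than the plain average of 3.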

Recommendation process

The learner behavior and preferences are categorized as follows:

1. Clicks: defines a small listing of materials.
2. Selection: describes the material chosen and added to the basket.
3. Learning: describes reading the material.

All of these steps are used to determine the learner's relative preference LPij for every material referenced from the corresponding information resources. The rule used to find LPij is defined as follows:

\({\text{LP}}_{ij}^{c}\), \({\text{LP}}_{ij}^{s}\) and \({\text{LP}}_{ij}^{l}\) denote the number of references in the click, selection, and learning steps, respectively, performed by learner i on material j. \({\text{Max}}_{1 \le j \le |M|}({\text{LP}}_{ij}^{c})\), \({\text{Max}}_{1 \le j \le |M|}({\text{LP}}_{ij}^{s})\) and \({\text{Max}}_{1 \le j \le |M|}({\text{LP}}_{ij}^{l})\) denote the maximum number of each step performed by learner i over the materials in M. \({\text{Min}}_{1 \le j \le |M|}({\text{LP}}_{ij}^{c})\), \({\text{Min}}_{1 \le j \le |M|}({\text{LP}}_{ij}^{s})\) and \({\text{Min}}_{1 \le j \le |M|}({\text{LP}}_{ij}^{l})\) denote the corresponding minimum numbers.
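The rule for LPij is not reproduced in this text. Because it is described in terms of per-step counts together with their maxima and minima over the materials, one plausible reading is a min-max normalization of each count followed by an average. The sketch below assumes that reading; the equal weighting of the three steps and the function names are assumptions for illustration.

```python
def min_max(value, lo, hi):
    """Scale value into [0, 1]; a constant column maps to 0."""
    return 0.0 if hi == lo else (value - lo) / (hi - lo)


def learner_preferences(counts):
    """Compute an assumed LP_ij for one learner i.

    counts: {material: (clicks, selections, learnings)}.
    Returns {material: preference in [0, 1]}, the equally weighted mean
    of the three min-max normalized counts.
    """
    columns = list(zip(*counts.values()))
    bounds = [(min(col), max(col)) for col in columns]
    return {
        material: sum(min_max(v, lo, hi)
                      for v, (lo, hi) in zip(values, bounds)) / len(bounds)
        for material, values in counts.items()
    }
```

Normalizing per learner keeps heavy and light users comparable: the material a learner interacts with most always scores near 1 regardless of that learner's absolute activity.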

Experimental results and analysis

The proposed personalized recommendation system is intended to improve students' learning. The proposed model is simulated on different public datasets: movies, music, books, food, merchandise, healthcare, dating, scholarly paper, and open university learning recommendations. The simulations are run on an Intel Xeon processor with 256 GB DDR3 under Windows 10 Professional in a JDK 1.8 environment, and the outcomes are analyzed. The measurements are evaluated using the computational time, Mean Absolute Error (MAE), accuracy, ranking score, recall, and precision. The experimental parameters are defined in Table 3.

The datasets are collected from https://gist.github.com/entaroadun/1653794. For example, the book recommendation dataset provides the information shown in Figs. 3, 4, and 5. BX-Users contains the users. Note that user IDs have been anonymized and mapped to integers. Demographic information (`Location`, `Age`) is given if available; otherwise, these fields contain NULL values. BX-Books contains the books, which are identified by their respective ISBNs. Invalid ISBNs have already been removed from the dataset. In addition, some content-based information is provided, obtained from Amazon Web Services. BX-Book-Ratings contains the book rating information. Ratings (`Book-Rating`) are either explicit, expressed on a scale from 1 to 10 (higher values denoting higher appreciation), or implicit, expressed by 0.

The proposed model is compared with collaborative filtering (CF), mass diffusion heat spreading (MDHS), user profile oriented diffusion (UPOD), trust-based hybrid recommendation (TBHR), hybrid recommendation (HR), modified cluster-based intelligent collaborative filtering (MCIF), specific features based personalized recommender (SPFR), cluster-based intelligent hybrid recommendation (CIHR), and DBSCAN & SVM based hybrid recommender (DSHR) [23,24,25,26,27,28,29,30,31,32].
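The difference between explicit ratings (1 to 10) and implicit ratings (0) in BX-Book-Ratings matters for error metrics, because an implicit 0 is not a low score. The following sketch separates the two kinds of rating; the tuple layout, the function name, and the sample rows are hypothetical and do not reproduce the authors' preprocessing.

```python
from typing import Iterable, List, Tuple

# Hypothetical row layout: (user id, ISBN, Book-Rating)
Rating = Tuple[int, str, int]


def split_ratings(rows: Iterable[Rating]) -> Tuple[List[Rating], List[Rating]]:
    """Separate explicit ratings (1..10) from implicit ones (0)."""
    explicit: List[Rating] = []
    implicit: List[Rating] = []
    for row in rows:
        value = row[2]
        if value == 0:
            implicit.append(row)
        elif 1 <= value <= 10:
            explicit.append(row)
        else:
            raise ValueError(f"Book-Rating out of range: {value}")
    return explicit, implicit


# Made-up sample rows, for illustration only.
sample_rows = [
    (1, "isbn-a", 0),
    (1, "isbn-b", 7),
    (2, "isbn-a", 10),
    (3, "isbn-c", 0),
]
explicit_rows, implicit_rows = split_ratings(sample_rows)
```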

MAE analysis

The MAE is computed by summing the absolute errors over the N rating-prediction pairs and then taking the average. The MAE for the pairs of predicted ratings \(p_{i}\) and actual ratings \(q_{i}\) is computed as follows:
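The equation is not reproduced in this text. The standard definition of the mean absolute error, which matches the description above and uses the same symbols N, \(p_{i}\) and \(q_{i}\), is:

\[ \text{MAE} = \frac{1}{N} \sum_{i=1}^{N} \left| p_{i} - q_{i} \right| \]

For example, for N = 3 pairs with absolute errors 1, 0 and 2, the MAE is (1 + 0 + 2) / 3 = 1. The results in this section report the MAE as a percentage, which suggests a normalization step that is not described here.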

A lower MAE corresponds to better accuracy. Figure 6 compares the proposed recommender with some of the existing methods. The designed scheme achieves better results than the existing methods, and the mean absolute error decreases as the number of learners L increases. The MAE lies between 5 and 19.2% for the simulated datasets. The comparison of MAE with some recent and well-known methods is shown in Fig. 7.

Accuracy analysis

The scheme selects appropriate representation techniques. Figure 8 shows the accuracy comparison with the existing methods. The number of learners is plotted on the X-axis and the query processing time on the Y-axis. The modified TSVM is used to evaluate the information of the learners. Depending on the gain from a positive response, the rating of the learners can be determined; then, depending on the recurring rating cycle, the clustered users can be separated. The accuracy of the proposed model increases as the number of learners L increases. The accuracy of the proposed model ranges from 88 to 98% when 50 ≤ L ≤ 250.

Across all the public datasets, the accuracy of the proposed model lies between 82 and 98%. Figure 9 shows the accuracy of the proposed methodology over the public datasets.

Query processing time analysis

The computational time of the proposed model is compared with that of the other methods in Figs. 10 and 11.

The number of learners is plotted on the X-axis and the query processing time on the Y-axis. Enhanced clustering is carried out in the proposed method to discover clusters of arbitrary sizes and shapes. The proposed strategy achieves significantly better performance. The computational time increases with L, and the increase is found to be linear.

Ranking score analysis

A lower ranking score indicates a better recommendation. The comparison of the ranking score with the other methods is shown in Fig. 12. The proposed method achieves a better ranking score than the other methods.

Recall analysis

Figure 13 compares the recall of the proposed method with that of the other methods; a higher recall is desirable. The proposed method achieves a better recall than the other methods.

Precision analysis

Figure 14 compares the precision of the proposed method with that of the other methods; a higher precision is desirable.
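The text does not state how recall and precision are computed. A common choice for recommenders, assumed in the sketch below, is to evaluate the top-N recommended items against the set of items the learner actually found relevant: precision is the fraction of recommended items that are relevant, and recall is the fraction of relevant items that are recommended. The function name and the sample data are hypothetical.

```python
from typing import Sequence, Set, Tuple


def precision_recall_at_n(
    recommended: Sequence[str],
    relevant: Set[str],
    n: int,
) -> Tuple[float, float]:
    """Return (precision, recall) for the first n recommended items."""
    top_n = list(recommended[:n])
    hits = sum(1 for item in top_n if item in relevant)
    precision = hits / len(top_n) if top_n else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical ranked recommendations and relevant items for one learner.
ranked_items = ["a", "b", "c", "d"]
relevant_items = {"a", "c", "e"}
precision, recall = precision_recall_at_n(ranked_items, relevant_items, n=4)
```

In this example two of the four recommended items are relevant, and two of the three relevant items are recovered.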

The comparison of the average metrics with the other techniques on the public datasets is shown in Fig. 15. The experiments show that the proposed model performs well in terms of MAE, accuracy, ranking score, recall, and precision.

The average computational time over the 16 datasets is calculated for each method and compared with that of the proposed method in Fig. 16. On average over the 16 public datasets, the proposed recommender requires less computational time than the other methods. The experimental analysis shows that the EC strategy discovers clusters of arbitrary sizes and shapes. The analysis also shows that, depending on the gain from a positive response, the rating of the learners can be determined; then, depending on the recurring rating cycle, the clustered users can be separated. The proposed method minimizes the expected absolute error while reducing the query processing time and increasing the accuracy.

Conclusions and future work

A recommender system framework is presented for personalized e-learning; in particular, it offers suggestions that help learners identify and select useful material. This research focuses on the design and generalized modeling of an enhanced density-based spatial clustering of applications with noise (DBSCAN) combined with a transductive support vector machine (TSVM) to achieve a hybrid, personalized recommendation for learners. The clusters are created by the enhanced clustering (EC) algorithm. The modified TSVM algorithm then evaluates each learner based on their habits and interests. The new recommender performs better than the other methods in terms of expected absolute error, accuracy, ranking score, recall, and precision. The accuracy of the proposed model on the datasets lies between 82 and 98%, and the MAE lies between 5 and 19.2% for the simulated datasets. The experimental analysis shows that the EC strategy discovers clusters of arbitrary sizes and shapes. On average over the 16 public machine learning datasets considered, the proposed model performs well on all the metrics. The analysis also shows that, depending on the gain from a positive response, the rating of the learners can be determined; then, depending on the recurring rating cycle, the clustered users can be separated. The proposed method minimizes the expected absolute error while reducing the query processing time and increasing the accuracy. The empirical results suggest that the new recommender can keep its recommendations up to date.

Future directions for this research are as follows:

- The dataset can be initially processed for the learner requirements using an evolutionary model.

- The complexity can be further reduced using soft computing strategies to obtain a better recommendation based on the evaluation of different metrics.

- The EGSP strategy can be applied in parallel to different clusters to mine the sequential patterns of learners, so that query processing time can be reduced with the design of new evolutionary models [33, 34].

References

Masoumi D, Lindström B (2012) Quality in e-learning: a framework for promoting and assuring quality in virtual institutions. J Comput Assist Learn 28(1):27–41

Ossiannilsson E, Landgren L (2012) Quality in e-learning—a conceptual framework based on experiences from three international benchmarking projects. J Comput Assist Learn 28(1):42–51

Alptekin SE, Karsak EE (2011) An integrated decision framework for evaluating and selecting e-learning products. Appl Soft Comput 11(3):2990–2998

Sudhana KM, Raj VC, Suresh RM (2013) An ontology-based framework for context-aware adaptive e-learning system. IEEE Int Conf Comput Commun Inf 2013:1–6

Kolekar SV, Sanjeevi SG, Bormane DS (2010) Learning style recognition using artificial neural network for adaptive user interface in e-learning. IEEE Int Conf Comput Commun Inf 2010:1–5

Fernández-Gallego B, Lama M, Vidal JC, Mucientes M (2013) Learning analytics framework for educational virtual worlds. Procedia Comput Sci 25:443–447

Anitha A, Krishnan N (2011) A dynamic web mining framework for E-learning recommendations using rough sets and association rule mining. Int J Comput Appl 12(11):36–41

Keefe JW (1987) Learning style theory and practice. National Association of Secondary School Principals, Reston

Tam V, Lam EY, Fung ST (2012) Toward a complete e-learning system framework for semantic analysis, concept clustering and learning path optimization. In: IEEE 12th international conference on advanced learning technologies, pp 592–596

Ghauth KI, Abdullah NA (2010) Learning materials recommendation using good learners’ ratings and content-based filtering. Educ Tech Res Dev 58(6):711–727

Verbert K, Manouselis N, Ochoa X, Wolpers M, Drachsler H, Bosnic I, Duval E (2012) Context-aware recommender systems for learning: a survey and future challenges. IEEE Trans Learn Technol 5(4):318–335

Hsu MH (2008) A personalized English learning recommender system for ESL students. Expert Syst Appl 34(1):683–688

Lu J (2004) A personalized e-learning material recommender system. In: International conference on information technology and applications, pp 1–7

Chen CM, Lee HM, Chen YH (2005) Personalized e-learning system using item response theory. Comput Educ 44(3):237–255

Abel F, Gao Q, Houben GJ, Tao K (2011) Analyzing user modeling on Twitter for personalized news recommendations. In: International conference on user modeling, adaptation, and personalization, pp 1–12

El Bachari E, El Hassan Abelwahed MEA (2011) E-Learning personalization based on dynamic learners’ preference. Int J Comput Sci Inf Technol 3(3):20–217

Gallego D, Barra E, Aguirre S, Huecas G (2012) A model for generating proactive context-aware recommendations in e-learning systems. In: IEEE Frontiers in Education Conference Proceedings, pp 1–6

Yu Z, Nakamura Y, Jang S, Kajita S, Mase K (2007) Ontology-based semantic recommendation for context-aware e-learning. In: International conference on ubiquitous intelligence and computing, pp 898–907

Tai DWS, Wu HJ, Li PH (2008) Effective e-learning recommendation system based on self-organizing maps and association mining. Electron Libr 26(3):239–344

Zhou Y, Huang C, Hu Q, Zhu J, Tang Y (2018) Personalized learning full-path recommendation model based on LSTM neural networks. Inf Sci 444:135–152

Nafea SM, Siewe F, He Y (2019) A novel algorithm for course learning object recommendation based on student learning styles. In: IEEE international conference on innovative trends in computer engineering (ITCE), pp 192–201

Doja MN (2020) An improved recommender system for E-Learning environments to enhance learning capabilities of learners. Proc ICETIT 2019:604–612

Shu J, Shen X, Liu H, Yi B, Zhang Z (2018) A content-based recommendation algorithm for learning resources. Multimedia Syst 24(2):163–173

Fu M, Qu H, Moges D, Lu L (2018) Attention based collaborative filtering. Neurocomputing 311:88–98

Moreno MN, Segrera S, López VF, Muñoz MD, Sánchez ÁL (2016) Web mining based framework for solving usual problems in recommender systems. A case study for movies' recommendation. Neurocomputing 176:72–80

Polatidis N, Georgiadis CK (2016) A multi-level collaborative filtering method that improves recommendations. Expert Syst Appl 48:100–110

Monsalve-Pulido J, Aguilar J, Montoya E, Salazar C (2020) Autonomous recommender system architecture for virtual learning environments. Appl Comput Inf 2020:1–20

Tewari AS (2020) Generating items recommendations by fusing content and user-item based collaborative filtering. Procedia Comput Sci 167:1934–1940

Roy PK, Chowdhary SS, Bhatia R (2020) A machine learning approach for automation of resume recommendation system. Procedia Comput Sci 167:2318–2327

Bhaskaran S, Santhi B (2019) An efficient personalized trust based hybrid recommendation (tbhr) strategy for e-learning system in cloud computing. Clust Comput 22(1):1137–1149

Bhaskaran S, Marappan R, Santhi B (2020) Design and comparative analysis of new personalized recommender algorithms with specific features for large scale datasets. Mathematics 8(7):1–27

Bhaskaran S, Marappan R, Santhi B (2021) Design and analysis of a cluster-based intelligent hybrid recommendation system for e-learning applications. Mathematics 9(2):1–21

Marappan R, Sethumadhavan G (2018) Solution to graph coloring using genetic and tabu search procedures. Arab J Sci Eng 43(2):525–542

Marappan R, Sethumadhavan G (2020) Complexity analysis and stochastic convergence of some well-known evolutionary operators for solving graph coloring problem. Mathematics 8(3):1–20

Acknowledgements

The funding support by SASTRA Deemed University is acknowledged by the authors.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bhaskaran, S., Marappan, R. Design and analysis of an efficient machine learning based hybrid recommendation system with enhanced density-based spatial clustering for digital e-learning applications. Complex Intell. Syst. 9, 3517–3533 (2023). https://doi.org/10.1007/s40747-021-00509-4

DOI: https://doi.org/10.1007/s40747-021-00509-4