Abstract

Non-convex discrete-time optimal control problems in, e.g., water or power systems, typically involve a large number of variables related through nonlinear equality constraints. The ideal goal is to find a globally optimal solution, and numerical experience indicates that algorithms aiming for Karush–Kuhn–Tucker points often find solutions that are indistinguishable from global optima. In our paper, we provide a theoretical underpinning for this phenomenon, showing that on a broad class of problems the objective can be shown to be an invariant convex function (invex function) of the control decision variables when state variables are eliminated using implicit function theory. In this way, optimality guarantees can be obtained, the exact nature of which depends on the position of the solution within the feasible set. In a numerical example, we show how high-quality solutions are obtained with local search for a river control problem where invexity holds.

Similar content being viewed by others

1 Introduction

Discrete-time optimal control typically involves the solution of an optimization problem, which need not be convex. Such non-convex optimization problems arise, for example, in systems driven by nonlinear partial differential equations (PDEs), such as water, gas, and power systems [1, 12, 24]. A typical objective in such problems is to steer the system into tracking target values for certain state variables, e.g., stabilizing a water level around the desired level [19].

One of the desiderata for a solution to an optimization problem is global optimality. Although locally optimal solutions are often used, globally optimal ones typically yield substantially better objective values. While approaches aiming for global optimality in general nonlinear optimization have been proposed [9, 21, 31, 36], these approaches are often incompatible with tight computation time limits or large problem sizes. Therefore, common work-arounds are to use linearizations [3, 17, 18], convex restrictions, or convex relaxations [25, 27, 28], which provide tractability yet at the cost of model accuracy, or to resort to genetic algorithms [33, 37, 38].

It is most desirable, however, to obtain a globally optimal solution to the “most exact” nonlinear model without resorting to computationally expensive techniques. Ample numerical evidence exists that locally optimal solutions to non-convex discrete-time optimal control problems are often of high quality, hardly distinguishable from true global optima and/or better than other applied methods [7, 12, 21, 26].

In this paper, we provide a theoretical underpinning for this phenomenon by showing that, in the problems we study, the objective function composed with the dynamics is invex in the original sense of Hanson [23] and Craven [16]. Invexity is a generalization of convexity that certifies, roughly speaking, that on an open set a stationary point of an invex function is its global minimum [10, 32]. Despite the large body of theoretical work, we are not aware of research leveraging invexity in a large-scale applied (engineering) context.

We use invexity to prove optimality guarantees for KKT points of problems belonging to a class of discrete-time optimal control problems, including full global optimality for KKT points in the interior of the feasible set. Because the invexity of the involved functions is not readily seen and arises from a function implicitly defined by the problem’s constraints, we refer to it as hidden invexity. This is analogous to the term hidden convexity [11] that is used when the convexity of a problem is not immediately apparent. The hidden convexity result of [11] showed that by reformulating an original, non-convex problem, one can solve a convex problem whose optimal solution is a global optimum of the non-convex problem.

Our result, in turn, relies on a direct analysis of the non-convex problem using the notion of invexity and the language of tangent cones. It allows us to prove global optimality properties for KKT points directly. This is also how our result differs from convex restriction and convex relaxation methods.

The research contribution of our work, seen from the perspective of different fields, is as follows:

-

(a)

From the mathematical optimization angle, we show that for a large class of non-convex problems, certifying the hidden invexity and using standard local solvers is a viable alternative to the use of solvers for general non-convex optimization problems.

-

(b)

From the optimal control angle, we show that for a large class of discrete-time optimal control problems, invex formulations exist, preserving the exact nonlinear dynamics. This allows to tractably determine high-quality solutions to large-scale problems.

-

(c)

From the nonlinear analysis angle, we show that the hidden invexity of a discrete-time optimal control problem yields so-called conic-intersection optimality guarantees for KKT points with active inequality constraints, and global optimality for KKT points in the interior of the domain of the control variables. The notion of conic-intersection optimality will be formally defined in Sect. 3.

The remainder of this paper is structured as follows. Section 2 introduces the notion of regular problems for which we establish our result. In Section 3 we prove the main result of the paper. Section 4 presents a numerical study for a single river segment modelled using a nonlinear PDE.

2 Regular discrete-time optimal control problems

In this section we describe the class of discrete-time optimal control problems for which we demonstrate invexity. Consider the problem

where we refer to the variables \(x \in {\mathbb {R}}^m\) as states and the variables \(u \in {\mathbb {R}}^n\) as controls. These names are motivated by the fact that the controls implicitly determine the values of the states through the equality constraints \(c(x,u)=0\). The function \(f: {\mathbb {R}}^n \rightarrow {\mathbb {R}}\) is the objective and the function \(g: {\mathbb {R}}^m \rightarrow {\mathbb {R}}^n\) is the output function mapping states x to outputs \(y:=g(x)\). These concepts originate in control theory and are essential to our analysis. The relationship between the controls u, the implicitly defined states x, and the output variables y, is illustrated in Fig. 1.

We denote the set of admissible controls as \(U := \{u \in {\mathbb {R}}^n: \ d(u) \le 0 \}\), where d is a vector-valued function whose components are the control inequality constraint functions, with the inequality holding component-wise, and denote the set of indices of the coordinates of d by \({\mathcal {I}}\).

Our goal is to show that the objective of problem  (\({\mathcal {P}}\)) is invex as a function of the controls u, under certain conditions. However, the equality constraints in

(\({\mathcal {P}}\)) is invex as a function of the controls u, under certain conditions. However, the equality constraints in  (\({\mathcal {P}}\)) can involve nonlinear functions, making the analysis cumbersome. We shall alleviate this difficulty by eliminating the constraints using implicit function theory and analyzing the problem using total gradients with respect to u, wherein the derivatives of the state variables \(x_i\) with respect to u are expressed explicitly. This step is used for the analysis, but is not required in numerical implementation.

(\({\mathcal {P}}\)) can involve nonlinear functions, making the analysis cumbersome. We shall alleviate this difficulty by eliminating the constraints using implicit function theory and analyzing the problem using total gradients with respect to u, wherein the derivatives of the state variables \(x_i\) with respect to u are expressed explicitly. This step is used for the analysis, but is not required in numerical implementation.

If the Jacobian \(\nabla _x c\) is invertible, then the total Jacobian of the states x with respect to the controls u may be expressed using the implicit function theorem as

in which the prefix \(\nabla _x\) denotes the matrix of partial derivatives with respect to the components of x, and \(D_x\) the matrix of total derivatives with respect to x, of a given function. In what follows, by \(\nabla ^{-1}\) and \(D^{-1}\) we denote the inverses of the respective matrices, if they exist.

In order to establish our result, we need some conditions. In the following, we define the regular problems for which invexity can be demonstrated. After the definition, we discuss each of the conditions.

Definition 2.1

Consider problem  (\({\mathcal {P}}\)) with states x and controls u. Let the functions f, g, c and d be continuously differentiable. We say that

(\({\mathcal {P}}\)) with states x and controls u. Let the functions f, g, c and d be continuously differentiable. We say that  (\({\mathcal {P}}\)) is regular if the following conditions are satisfied:

(\({\mathcal {P}}\)) is regular if the following conditions are satisfied:

the linear independence constraint qualifications (LICQ):

-

1.

the Jacobian matrix of the equality constraints c with respect to the state vector x, i.e., \(\nabla _x c(x,u)\), is square and full-rank for all (x, u) such that \(c(x,u)=0\), \(u \in U\),

-

2.

the gradient vectors of the active inequality constraints \(d_i\) at the point u, i.e., \(\nabla _u d_i(u)\) for all \(i \in {\mathcal {I}}\) such that \(d_i(u)=0\), are linearly independent for all \(u \in U\),

the uniqueness condition:

-

3.

for all \(u \in U\), the constraints \(c(x,u)=0\) have a unique solution x,

the output-controllability condition:

-

4.

the output function \(g: {\mathbb {R}}^m \rightarrow {\mathbb {R}}^n\) is such that the square matrix

$$\begin{aligned} -\nabla _x g (x) \, \nabla ^{-1}_x c(x,u) \, \nabla _{u} c(x,u), \end{aligned}$$(2)is invertible for all (x, u) such that \(c(x,u)=0\), \(u \in U\),

and the convexity condition:

-

5.

the objective function \(f: {\mathbb {R}}^n \rightarrow {\mathbb {R}}\) is convex.

Note that in problem  (\({\mathcal {P}}\)), no explicit bounds or inequality constraints are imposed on the state vector x. State bounds \(e(x)\le 0\) do not fit the formalism \(d(u)\le 0\) of problem

(\({\mathcal {P}}\)), no explicit bounds or inequality constraints are imposed on the state vector x. State bounds \(e(x)\le 0\) do not fit the formalism \(d(u)\le 0\) of problem  (\({\mathcal {P}}\)) whence, in general, a problem with explicit state bounds is not regular. In practice, imposing explicit state bounds can lead to a situation where the projection of the feasible set in terms of (u, x) onto the space of u becomes a disconnected set. However, terms penalizing deviation from output variable “bounds” may be included in the objective function f.

(\({\mathcal {P}}\)) whence, in general, a problem with explicit state bounds is not regular. In practice, imposing explicit state bounds can lead to a situation where the projection of the feasible set in terms of (u, x) onto the space of u becomes a disconnected set. However, terms penalizing deviation from output variable “bounds” may be included in the objective function f.

We now discuss each of the conditions of Definition 2.1.

Condition 1 is a linear independence constraint qualification (LICQ, [34]). It is required for the state vector x to be well-defined as an implicit function of u, and for us to be able to apply the implicit function theorem. Condition 1 is often straightforward to demonstrate if the dynamics of the underlying model are integrable in time. For the dynamics to be integrable in time, it is required that the number of states be equal to the number of equations, and furthermore that the Jacobian of the equations with respect to the states is non-singular.

Condition 2 is the second LICQ and is satisfied for standard domains such as balls and boxes. It prevents the constraints \(d(u)\le 0\) from defining a lower-dimensional subspace of \({\mathbb {R}}^n\).

Condition 3 states that the implicit function \(u \mapsto x\) is uniquely defined on U. It is a standard assumption in discrete-time optimal control, as this required uniqueness property typically is satisfied if the dynamics are (uniquely) integrable in time. Note that by Condition 1 alone, implicit function theory would only provide for an implicit function that is locally unique. It would not guarantee an implicit function that is uniquely defined on all of U.

Condition 4 states that different states should map to different outputs. Conditions 1 and 2 imply that the implicit function \(u \mapsto x\) is injective. Therefore x[U], i.e., the image of the set of admissible controls under the implicit function x, is an n-dimensional manifold in \({\mathbb {R}}^m\). Any mapping g that is invertible on this manifold x[U] is therefore a valid choice.

Another interpretation of Condition 4 is the following. If we would require the LICQ and uniqueness conditions to hold on \({\mathbb {R}}^n\) (rather than only on U), Condition 4 would imply that for every \(y \in {\mathbb {R}}^n\), there would exist a control input \(u \in {\mathbb {R}}^n\) such that \(y=(g \circ x)(u)\). This hypothetical condition is exactly the classical output-controllability [35] condition.

Condition 5 is standard and includes objectives such as p-norms raised to the p-th power with \(p \ge 2\).

Before looking at examples of regular problems, it is instructive to consider a few irregular problems to show that the regularity conditions indeed eliminate some of the well-known NP-hard problems.

Example 2.1

Let \([0,1] \subset U \subset {\mathbb {R}}\). If problem  (\({\mathcal {P}}\)) contains a binary restriction constraint \(u(1-u)=0\), then Condition 4 is not satisfied at \(u=0.5\).

(\({\mathcal {P}}\)) contains a binary restriction constraint \(u(1-u)=0\), then Condition 4 is not satisfied at \(u=0.5\).

Example 2.2

Let \(U \subset {\mathbb {R}}\). If problem  (\({\mathcal {P}}\)) contains binary restriction constraints of the form

(\({\mathcal {P}}\)) contains binary restriction constraints of the form

then Condition 2 is not satisfied at \(u=0\) and at \(u=1\).

Example 2.3

Let \([-1,1] \subset U \subset {\mathbb {R}}\). If problem  (\({\mathcal {P}}\)) contains a sinusoidal constraint \(u=\sin x\), then Condition 3 is, in general, not satisfied.

(\({\mathcal {P}}\)) contains a sinusoidal constraint \(u=\sin x\), then Condition 3 is, in general, not satisfied.

Example 2.4

Let \(0 \in U \subset {\mathbb {R}}\). If problem  (\({\mathcal {P}}\)) contains a bilinear constraint of the form \(u=x_1 x_2\), then the LICQ Condition 1 is, in general, not satisfied.

(\({\mathcal {P}}\)) contains a bilinear constraint of the form \(u=x_1 x_2\), then the LICQ Condition 1 is, in general, not satisfied.

Example 2.5

Let \((0,0) \in U \subset {\mathbb {R}}^2\). If problem  (\({\mathcal {P}}\)) contains a bilinear constraint of the form \(x=u_1 u_2\), then Condition 4 is, in general, not satisfied.

(\({\mathcal {P}}\)) contains a bilinear constraint of the form \(x=u_1 u_2\), then Condition 4 is, in general, not satisfied.

Example 2.6

Let \(0 \in U \subset {\mathbb {R}}\). If problem  (\({\mathcal {P}}\)) contains a piecewise constraint of the form

(\({\mathcal {P}}\)) contains a piecewise constraint of the form

then Condition 4 is, in general, not satisfied whenever \(u_1 < 0\).

We now proceed to introduce examples of systems meeting the regularity conditions. A first example is provided by affine constraint functions satisfying the appropriate rank conditions.

Example 2.7

Consider problem  (\({\mathcal {P}}\)) with control vector \(u \in {\mathbb {R}}^n\), state vector \(x \in {\mathbb {R}}^m\), output vector \(y \in {\mathbb {R}}^n\), and trajectory tracking objective

(\({\mathcal {P}}\)) with control vector \(u \in {\mathbb {R}}^n\), state vector \(x \in {\mathbb {R}}^m\), output vector \(y \in {\mathbb {R}}^n\), and trajectory tracking objective

with \(p \in [2, \infty )\) and affine output function

subject to the bounds

and affine constraints

with matrix A square and invertible, matrices B and C full rank and matrix C such that the square matrix \(CA^{-1}B\) is invertible. This problem is regular.

Our next example considers trigonometric constraints, which commonly arise in control of systems with axes of rotation such as vehicles, ships, and aircraft.

Example 2.8

Problem  (\({\mathcal {P}}\)) with control vector \(u \in {\mathbb {R}}^2\), state vector \(x \in {\mathbb {R}}^2\), output vector \(y \in {\mathbb {R}}^2\), and trajectory tracking objective

(\({\mathcal {P}}\)) with control vector \(u \in {\mathbb {R}}^2\), state vector \(x \in {\mathbb {R}}^2\), output vector \(y \in {\mathbb {R}}^2\), and trajectory tracking objective

with \(p \in [2, \infty )\) and output function

subject to the bounds

and constraints

is regular. Note that for Condition 4 to hold, \(u_1\) must be bounded away from zero.

Some problems with piecewise-defined constraints that are also continuously differentiable, are regular:

Example 2.9

Problem  (\({\mathcal {P}}\)) with control vector \(u \in {\mathbb {R}}^2\), state vector \(x \in {\mathbb {R}}^3\), output vector \(y \in {\mathbb {R}}^2\), and trajectory tracking objective

(\({\mathcal {P}}\)) with control vector \(u \in {\mathbb {R}}^2\), state vector \(x \in {\mathbb {R}}^3\), output vector \(y \in {\mathbb {R}}^2\), and trajectory tracking objective

with \(p \in [2, \infty )\) and output function

subject to the bounds

and constraints

is regular. Unlike in Example 2.6, the state vector x of this example is always sensitive to the control variables \(u_1\) and \(u_2\).

Problems with structure similar to that of Example 2.9 commonly occur when modelling water flow over a weir (a small dam), the crest of which may or may not lie below the upstream water surface.

Since bilinear constraints are very common, we also show how certain bilinear problems satisfy the regularity conditions.

Example 2.10

Problem  (\({\mathcal {P}}\)) with control vector \(u \in {\mathbb {R}}^2\), state vectors \(x \in {\mathbb {R}}^2\) and \(z \in {\mathbb {R}}^2\), output vector \(y \in {\mathbb {R}}^2\), and objective

(\({\mathcal {P}}\)) with control vector \(u \in {\mathbb {R}}^2\), state vectors \(x \in {\mathbb {R}}^2\) and \(z \in {\mathbb {R}}^2\), output vector \(y \in {\mathbb {R}}^2\), and objective

with \(p \in [2, \infty )\) and output function

subject to the bounds

and constraints

with the fixed initial condition \(z_0 \in {\mathbb {R}}\), is regular as long as \(z_0\) is chosen such that \(z_i \ne 0\), \(i \in \{1,2\}\), for all feasible u. Conditions 1–2 and 4–5 are readily verified. To verify Condition 3, i.e., that for any \(u \in U\) the constraints admit a unique solution, note that the constraints (3)–(6) may be solved in the given order, starting from the fixed initial value \(z_0\), inserting the computed value for \(x_1\) into the subsequent equation, etc.

Problems with structure similar to that of Example 2.10 commonly occur when modelling the power generation of a hydroelectric turbine in a power station. Instantaneous generation (u) is non-negative and bounded, and it is bilinear in flow (x) and the water level difference (z) across a dam, which is never zero. At the same time, an increase in flow (x) results in a decrease of the water level difference (z). Similar reasoning applies to the power consumption of pumps.

The conditions of Definition 2.1 are satisfied by certain optimization problems constrained by discretized hyperbolic PDEs, if a suitable discretization is chosen. An example of an optimization problem constrained by appropriately discretized Saint-Venant equations [39] is presented and analyzed in Sect. 4.

In the next Section, we present our main theoretical result.

3 Hidden invexity

3.1 Introduction

In this section we present our main result that regular problems, in the sense of Definition 2.1, have hidden invexity when reduced to optimization over control variables. We first give the definition of invexity.

Definition 3.1

Consider an open set \(X \subset {\mathbb {R}}^n\). A function \({h}: X \rightarrow {\mathbb {R}}\) is called invex on X if there exists a vector function \(\eta _h(x_2,x_1): X \times X \rightarrow {\mathbb {R}}^n\) such that

for all \(x_1, x_2 \in X\).

Note that the above becomes a definition of convexity in case \(\eta _h(x_2, x_1) = x_2 - x_1\).

The name invex follows from invariant convex [16]. A function is invex if and only if every stationary point is a global minimum. To see the first implication, set \(\nabla {h}=0\) in (7). A concise proof of the reverse implication can be found in [10, Theorem 1].

The definition of invexity is usually stated for functions defined on open sets, wheareas our goal is to optimize over a closed set U defined by inequality constraints. There exists an entire family of extensions of the notion of invexity to constrained optimization problems (KT-invexity [29], HC-invexity [16, 23, 29], Type I/Type II invexity [22]). However, each of them is difficult to apply to real-world problems like ours, due to the need to find a common function \(\eta \) for the objective and the constraints. Therefore, we shall stay with the standard notion of invexity and eliminate the equality constraints from the problem in order to show invexity of the objective function on the interior of U. In this process, we extend the analysis to the boundary of the feasible set U using the geometry of the tangent cones.

3.2 Main result

In our analysis, instead of constructing the function \(\eta _h\) of Definition 3.1 explicitly, we will use the fact that invexity of functions arises naturally in the composition of convex functions with transformations that are full-rank, i.e., that have an invertible Jacobian [15]. We will now show how regular problems fit this scheme.

In the following we assume that all conditions of Definition 2.1 hold. Per Condition 5 of Definition 2.1, the objective function f of a regular problem  (\({\mathcal {P}}\)) is convex. In order to obtain an invex function on the set of admissible controls U, we compose the objective f with a full-rank transformation. We construct this full-rank transformation by using the implicit function theorem to express the state vector x as a function \(u \mapsto x\). Condition 1 ensures that the conditions of the implicit function theorem are met, and Condition 3 ensures that the function \(u \mapsto x\) is globally unique. Problem

(\({\mathcal {P}}\)) is convex. In order to obtain an invex function on the set of admissible controls U, we compose the objective f with a full-rank transformation. We construct this full-rank transformation by using the implicit function theorem to express the state vector x as a function \(u \mapsto x\). Condition 1 ensures that the conditions of the implicit function theorem are met, and Condition 3 ensures that the function \(u \mapsto x\) is globally unique. Problem  (\({\mathcal {P}}\)) can therefore be rewritten as:

(\({\mathcal {P}}\)) can therefore be rewritten as:

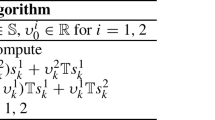

In  (\({\mathcal {P}}^U\)), the composition \(T: U \rightarrow Y:=T[U]\), \(T(u):=(g \circ x)(u)\), will be playing the role of the invertible transformation, and the composition \(f \circ T\) will be shown to be invex. This setup is illustrated in Figs. 1 and 2.

(\({\mathcal {P}}^U\)), the composition \(T: U \rightarrow Y:=T[U]\), \(T(u):=(g \circ x)(u)\), will be playing the role of the invertible transformation, and the composition \(f \circ T\) will be shown to be invex. This setup is illustrated in Figs. 1 and 2.

The strength of our result for a particular KKT point with \((u^*,\lambda ^*)\) will depend on the place where point \(y^*=T(u^*)\) is in the set Y: in the interior, or on the boundary. In general, the set Y is non-convex and optimality guarantees are stated in terms of “the set of points in Y that can be seen” from \(y^*\). To make this rigorous, we first recall the definition of the tangent cone [20, 34].

Definition 3.2

Let \(Y \subset {\mathbb {R}}^n\) be a non-empty set. A vector \(d \in {\mathbb {R}}^n\) is called tangent to Y at \(y \in Y\), if there exist sequences \(\{y^k\} \subset Y\), \(\{t_k\} \subset {\mathbb {R}}^+\) such that

The set of all tangent vectors at \(y \in Y\) is the tangent cone of Y at y, denoted as \({\mathcal {T}}_Y(y)\).

Tangent cones provide the vocabulary to define our notion of conic-intersection minimum.

Definition 3.3

Consider a regular problem  (\({\mathcal {P}}^U\)). We say that a point \(u^*\) is a conic-intersection minimum if it is a global minimum on the set

(\({\mathcal {P}}^U\)). We say that a point \(u^*\) is a conic-intersection minimum if it is a global minimum on the set

where \({\mathcal {T}}_Y(T(u^*))\) denotes the tangent cone of Y at \(T(u^*)\).

The definition states, in rough terms, that a conic-intersection minimum is a global minimum with respect to the interior of the domain and all inactive boundary segments (invexity), minus any points “hidden from view” due to local non-convexity of the active boundary segments in Y. The geometric meaning is illustrated in Fig. 3.

The highlighted areas illustrate sets \(T[V(u^*)]\) within which a solution \(y^*=T(u^*)\) is globally optimal, for an interior (left) and a boundary solution (right). The sets are shown in the output space \(Y=T[U]\) to highlight the role of the tangent cones. Note that in the left panel the point \(y^*\) is in the interior of Y, hence a global minimum in Y, despite the fact that there are some points in Y that are not “visible” from the tangent cone at \(y^*\). This is thanks to invexity

We now state our main result whose proof is presented in Sect. 3.3.

Theorem 3.1

Consider a regular problem  (\({\mathcal {P}}^U\)). Let \((u^*,\lambda ^*)\) be a KKT point of this problem. Then \(u^*\) is a conic-intersection minimum.

(\({\mathcal {P}}^U\)). Let \((u^*,\lambda ^*)\) be a KKT point of this problem. Then \(u^*\) is a conic-intersection minimum.

Before proceeding to the corollary, we note that the reverse statement of Theorem 3.1, i.e., that every minimum is a KKT point, follows from the LICQ Conditions 1 and 2 in Definition 2.1. These LICQ conditions form the regularity condition required for every minimum to be a KKT point [34, Theorem 12.1].

The corollary is a direct consequence of the fact that for an interior point, \({\mathcal {T}}_Y(T(u^*))={\mathbb {R}}^n\).

Corollary 1

Consider a regular problem  (\({\mathcal {P}}^U\)). Consider a KKT point \((u^*, \lambda ^*)\) such that \(u^* \in {{\,\mathrm{int}\,}}U\). Then \(u^*\) is a global minimum of \(f \circ T\) on U.

(\({\mathcal {P}}^U\)). Consider a KKT point \((u^*, \lambda ^*)\) such that \(u^* \in {{\,\mathrm{int}\,}}U\). Then \(u^*\) is a global minimum of \(f \circ T\) on U.

Corollary 1 is exactly the characterization of an invex function on \({{\,\mathrm{int}\,}}U\). In other words, then, the function \(h:=f \circ T\) is invex on \({{\,\mathrm{int}\,}}U\), with

The expression for \(\eta _h\) follows from the convexity, hence invexity, of f on \(Y=T[U]\) with \(\eta _f(y_2,y_1)=y_2-y_1=T(u_2)-T(u_1)\) (cf. Definition 3.1). By continuity of h we also know that none of the points on the boundary of U map to lower objective values, whence a KKT point in the interior of U is a global minimum on all of U.

We will now explain the meaning of the general result. For this, it is instructive to first recall the reference situation: general nonlinear optimization. A KKT point of a nonlinear optimization problem need not be a local minimum; it may also be a local maximum, or a saddle point. Furthermore, in case that a KKT point is a local minimum, it is only guaranteed to be minimal within an arbitrarily small neighbourhood of itself. From a numerical point of view, generic nonlinear optimization problems are hard: local solvers may converge to KKT points that are local maxima or saddle points.

For a regular problem, Theorem 3.1 provides a stronger characterization of KKT points. Although there is no guarantee that a KKT point \((u^*, \lambda ^*)\) on the boundary of U is not a saddle point or a local maximum with respect to U, Theorem 3.1 states that \(u^*\) is a local minimum, and therefore not a maximum or a saddle point, with respect to \(V(u^*)\). This is important from a numerical point of view, since it implies that a local solver will converge to a local minimum (and certainly not a maximum) with respect to \(V(u^*)\). Secondly, it states that the point \(u^*\) is in fact a global minimum over \(V(u^*)\).

The meaning of the global optimality of the KKT point \(u^*\) over \(V(u^*)\) is best understood by analyzing two distinct cases. The first is when \(u^* \in {{\,\mathrm{int}\,}}U\), i.e., \(u^*\) lies in the interior of U, in which case \(V(u^*)=U\) so that \(u^*\) is a global minimum over U. The second is when \(u^*\) lies on the boundary of U. In that case, its objective value is no greater than the objective values for all points u corresponding to points y that lie in the intersection of the translated tangent cone with the set Y itself, i.e., that lie in the set \(\left( T(u^*)+{\mathcal {T}}_Y(T(u^*))\right) \cap Y\). Both cases are illustrated in Fig. 3.

A conic-intersection minimum on the boundary need not be globally optimal, as is demonstrated by the following example:

Example 3.1

Problem  (\({\mathcal {P}}\)) with control vector \(u \in {\mathbb {R}}^2\), state vector \(x \in {\mathbb {R}}^2\), output vector \(y \in {\mathbb {R}}^2\), and objective

(\({\mathcal {P}}\)) with control vector \(u \in {\mathbb {R}}^2\), state vector \(x \in {\mathbb {R}}^2\), output vector \(y \in {\mathbb {R}}^2\), and objective

and output function

subject to the bounds

and constraints

is regular. The point \(u^*=(-0.5, 1)\) is a KKT point on the boundary, and therefore a conic-intersection minimum by Theorem 3.1. It is thus a global minimum on the set

The point \(u^*\) is not, however, globally optimal on U, since a lower objective function value is obtained at the point (1, 1).

A real-world application of Theorem 3.1 is discussed in Sect. 4.

3.3 Proof of Theorem 3.1

Recall that the function \(u \mapsto x\) exists by virtue of the implicit function theorem, the conditions of which are satisfied due to Condition 1 of Definition 2.1. By Condition 3, this function is uniquely defined on the feasible set U.

Consider the transformation \(T = g \circ x\). By Condition 4 of Definition 2.1, the matrix \(D_u T\) is invertible whence, by the inverse function theorem, the transformation T itself is invertible. The transformation and its use within the optimization problem is illustrated in Fig. 2.

As per the definition of problem  (\({\mathcal {P}}\)), the feasible set is described using the constraints \(d(u) \le 0\). We will first show that a point \((u^*,\lambda ^*)\) is a KKT point of the optimization problem

(\({\mathcal {P}}\)), the feasible set is described using the constraints \(d(u) \le 0\). We will first show that a point \((u^*,\lambda ^*)\) is a KKT point of the optimization problem

if and only if \((T(u^*),\lambda ^*)\) is a KKT point of the optimization problem

Afterwards, we will analyze the global optimality structure of the KKT points. Let

denote the Lagrangian of problem  (\({\mathcal {P}}^U\)), and let

(\({\mathcal {P}}^U\)), and let

denote the Lagrangian of problem  (\({\mathcal {P}}^Y\)). We will use the standard definition of KKT points following [34]. KKT points \((u, \lambda )\) of

(\({\mathcal {P}}^Y\)). We will use the standard definition of KKT points following [34]. KKT points \((u, \lambda )\) of  (\({\mathcal {P}}^U\)) correspond to stationary points \((u, \lambda )\) of the Lagrangian \({\mathcal {L}}^U\) by which we have, using the fact that the transformation T is invertible, that:

(\({\mathcal {P}}^U\)) correspond to stationary points \((u, \lambda )\) of the Lagrangian \({\mathcal {L}}^U\) by which we have, using the fact that the transformation T is invertible, that:

Since \(D_u T\) is invertible, a point \((u^*,\lambda ^*)\) is a stationary point of \({\mathcal {L}}^U\) if and only if \((T(u^*),\lambda ^*)\) is a stationary point of \({\mathcal {L}}^Y\). Similar reasoning applies to the primal and dual feasibility conditions (\(d(u^*) \le 0\) and \(\lambda ^* \ge 0\)) as well as to the complementarity condition (\(\lambda ^*_i d_i(u^*)=0\)). This completes the first part of the proof.

We now analyze the KKT points. For this, rather than using the definition of invexity directly, we will use properties of the tangent cones. In this way, we will also be able to reason about points on the boundary of the feasible set; recall that invexity is defined on open sets, i.e., sets without their boundary (cf. Definition 3.1).

We first recall a few definitions. Relevant references are [5, 20, 34].

Definition 3.4

The set

is called the active set for the problem  (\({\mathcal {P}}^Y\)) at the point \(y^* \in Y\).

(\({\mathcal {P}}^Y\)) at the point \(y^* \in Y\).

Definition 3.5

The set

is called the set of linearized feasible directions for the problem  (\({\mathcal {P}}^Y\)) at the point \(y^* \in Y\).

(\({\mathcal {P}}^Y\)) at the point \(y^* \in Y\).

Definition 3.6

The cone

is called the polar cone of the cone K.

Let \((u^*,\lambda ^*)\) be a KKT point of  (\({\mathcal {P}}^U\)). Our aim is to show that \(u^*\) is global minimum of f on the set \(V(u^*)\) as defined in (8). For this, it is convenient to reason about \(y^*=T(u^*)\) and problem

(\({\mathcal {P}}^U\)). Our aim is to show that \(u^*\) is global minimum of f on the set \(V(u^*)\) as defined in (8). For this, it is convenient to reason about \(y^*=T(u^*)\) and problem  (\({\mathcal {P}}^Y\)). By Condition 2 of Definition 2.1, the LICQ holds for the constraint function \(d \circ T^{-1}\). Therefore, \({\mathcal {F}}(y^*)={\mathcal {T}}_Y(y^*)\). See [34, Theorem 12.1] for proof of this fact.

(\({\mathcal {P}}^Y\)). By Condition 2 of Definition 2.1, the LICQ holds for the constraint function \(d \circ T^{-1}\). Therefore, \({\mathcal {F}}(y^*)={\mathcal {T}}_Y(y^*)\). See [34, Theorem 12.1] for proof of this fact.

Since, by the first part of the proof, \((y^*,\lambda ^*)\) is also KKT point, we have

Following the definition of the set of linearized feasible directions \({\mathcal {F}}(y^*)\), for all \(t \in {\mathcal {F}}(y^*)={\mathcal {T}}_Y(y^*)\) we have that \(t^T D_y (d_i \circ T^{-1})(y^*) \le 0\) for all \(i \in {\mathcal {A}}(y^*)\). Because of this and the facts that \(\lambda ^*_i \ge 0\) for all \(i \in {\mathcal {A}}(y^*)\) and \(\lambda ^*_i=0\) for all \(i \in {\mathcal {I}} \setminus {\mathcal {A}}(y^*)\), it follows from (9) that \(-t^T \nabla _y f(y^*) \le 0\) for all \(t \in {\mathcal {F}}(y^*)={\mathcal {T}}_Y(y^*)\). Therefore \(-\nabla _y f(y^*) \in ({\mathcal {T}}_Y(y^*))^{\circ }\), the polar cone of the tangent cone.

Since \(T[V(u^*)] \subset Y\), it follows directly from Definition 3.2 that \({\mathcal {T}}_{T[V(u^*)]}(y^*) \subset {\mathcal {T}}_Y(y^*)\). The inclusion reverses when taking polar cones, so that

This means that for every tangent vector \(t \in {\mathcal {T}}_{[V(u^*)]}(y^*)\), we have \(t^T \nabla _y f(y^*) \ge 0\). By convexity of f (Condition 5 of Definition 2.1), for every \(y \in T[V(u^*)]\),

The second inequality follows from the fact that \(y-y^* \in {\mathcal {T}}_{Y}(y^*)\) by construction of the set \(V(u^*)\). We conclude that \(y^*\) is a global minimum of f on \(T[V(u^*)]\), whence \(u^*\) is a global minimum of \(f \circ T\) on \(V(u^*)\). \(\square \)

4 Numerical experiment: river water level control

4.1 Introduction

In this section, we describe a numerical experiment involving a discrete-time optimization problem for a system driven by hyperbolic partial differential equations. The experiment illustrates how a search for a KKT point using a local solver always leads to the same conic-intersection optimum. This suggests that this solution is in fact a conic-intersection or global optimum, as predicted by the theory. In turn, this highlights the practical relevance of our result: without further analysis, one would only be able to claim any global properties of a solution using computationally expensive general-purpose solvers for non-convex problems such as, e.g., Couenne [9], or, alternatively, seeding a local search with a large number of different starting points in order to obtain increased confidence in the quality of the solution.

We consider a single river segment under the decision maker’s control, illustrated in Fig. 4. The river segment has two endpoints: (i) the upstream endpoint – flow through this point over time is treated as an external parameter for which a time series is available, (ii) the downstream endpoint – a gate where we control the outflow with decision variables. The goal is to schedule the water release at the downstream endpoint over time so that the level over the river segment deviates as little as possible from the target level. This models the control problem faced by operators of impounded rivers, i.e., rivers where segments are separated by weirs or dams, such as the Meuse and the Moselle in Europe.

Given the upstream inflow, downstream outflow, and initial conditions, the time evolution of the level and flow of water at each point of the river is governed by hyperbolic partial differential equations [39]. These are known as the Saint-Venant equations, and are given by the momentum equation

with the longitudinal coordinate x increasing in the flow direction of the river, time t, flow (discharge) Q, water level H, cross section A, hydraulic radius \(R:=A/P\), wetted perimeter P, Chézy friction coefficient C, gravitational constant g, and by the mass balance (or continuity) equation

In a setting with bidirectional flow, the |Q| factor in the momentum equation (10) may be approximated by a smooth function [12]. In this section, however, we will only consider unidirectional flow with \(Q > 0\), so that \(|Q|=Q\). We will also not consider wetting and drying of the channel, whence we assume that \(A > 0\) and \(P > 0\).

4.2 Discretization and analysis

In order to build up a finite-dimensional optimization problem, we conduct a spatial discretization with \(N + 1\) equidistant points at which the flows \(Q_i\) are computed. Between each pair of adjacent flow computation points we consider the level \(H_i\) points, such that \(H_i\) is always between \(Q_{i-1}\) and \(Q_i\). For each of these points, we consider a time discretization with T equidistant steps. We denote the corresponding flow and level over time by \(Q_i(t_{j})\) and \(H_i(t_{j})\). This staggered grid is illustrated in Fig. 5.

The approximation scheme is semi-implicit in time, following [13]. Semi-implicit means that for the equations at time step \(t_j\), some terms are evaluated explicitly at time step \(t_{j-1}\), and other terms are evaluated implicitly at time step \(t_{j}\), requiring the solution of a system of equations to determine their values. The semi-implicit schemes of [13, 14] strike a balance between numerical stability on the one hand, and non-singular tridiagonal Jacobian structure on the other hand.

The discretized mass balance equation is

and the discretized momentum equation is

with

spatial index i, temporal index j, spatial step size \(\Delta x\), temporal step size \(\Delta t\), and convective acceleration \(e_{i,j}\). The parameter \(C_i\) indicates the local friction coefficient, and \(H^b_{i}\) indicates the local bottom level. The convective acceleration term \(e_{i,j}\) is discretized explicitly in time as

Similar to Example 2.10, the equations are such that they can be solved forward in time, starting from initial and boundary conditions. Some boundary conditions may be fixed a priori, whereas others may be given by control variables. In the example grid of Fig. 5, \(Q_0\) is a fixed boundary condition, whereas \(Q_{N}\) is controlled. The values at time step \(t_0\) are taken fixed; these are the initial conditions.

We define the optimization problem as

where the objective is to keep the level at the H node upstream of the gate, i.e., \(H_N\), as close as possible to the target level \({\overline{H}}_N\). The function of the remaining states \(H_i(t_j)\), \(i < N\), is to ensure physically accurate wave propagation in the up- and downstream directions.

First, we relate problem  (\({\mathcal {RP}}\)) to the general problem

(\({\mathcal {RP}}\)) to the general problem  (\({\mathcal {P}}\)). The decision variables \(Q_N(t_j)\) play the role of the control vector u, the remaining variables \(Q_{i}(t_j)\) where \(i < N\) and \(H_i(t_j)\) play the role of the state vector x, and the output vector y consists of the subset of state variables \(H_N(t_j)\).

(\({\mathcal {P}}\)). The decision variables \(Q_N(t_j)\) play the role of the control vector u, the remaining variables \(Q_{i}(t_j)\) where \(i < N\) and \(H_i(t_j)\) play the role of the state vector x, and the output vector y consists of the subset of state variables \(H_N(t_j)\).

We now proceed to analyze the problem  (\({\mathcal {RP}}\)) in light of the conditions of Definition 2.1.

(\({\mathcal {RP}}\)) in light of the conditions of Definition 2.1.

Condition 1 follows from the fact that the Jacobian of the equations (11) – (12) with respect to the states at time step \(t_j\) is tridiagonal. In [13, p. 128] this Jacobian is shown to be non-singular, thanks to the semi-implicit approximation scheme. The equations (11) – (12) do not depend on future states at time step \(t_k\), \(k > j\). Therefore, the full rank of the Jacobian \(\nabla _x c\), i.e., the Jacobian of all equality constraints c with respect to the complete state vector x, follows by induction on the final time index T.

Condition 2 follows from the linearly independent inequality constraints on the outflow variables \(Q_N(t_j)\).

Condition 3 follows from the fact that, given states at time step \(t_{j-1}\), the states at time step \(t_{j}\) are uniquely defined. If the cross section A is a linear function of the water level H, this follows readily: in this case the equations (11) – (12) are linear in the states at time step \(t_{j}\), and the Jacobian to these states is full-rank as per Condition 1. In [14] it is shown that the uniqueness result also extends to a class of nonlinear, monotonic cross section functions \(A=A(H)\).

Condition 4 follows from two considerations. First of all, only equation (11) depends on the control variables. Since the dependency is linear, and every control variable occurs in exactly one constraint (11), the Jacobian \(\nabla _u c\) is full-rank. Secondly, for a given control variable \(Q_N(t_j)\), only the states at \(t_k\), \(k \ge j\) are sensitive to it. Consequently, the mapping from the control variables \(Q_N(t_j)\) to the output variables \(H_N(t_j)\) is injective.

Condition 5 follows from the fact that the objective function f in problem  (\({\mathcal {RP}}\)) is convex in the output variables \(H_N(t_j)\).

(\({\mathcal {RP}}\)) is convex in the output variables \(H_N(t_j)\).

4.3 Application

We now present a concrete problem, based upon the experimental setting from the draft [6]. In this problem, we consider a single river segment with \(N = 10\) uniformly spaced level nodes and rectangular cross section, an upstream inflow boundary condition provided with a fixed time series, as well as a controllable downstream release boundary condition. The hydraulic parameters and initial conditions are summarized in Table 1. The model starts from steady state: the initial flow rate is uniform and the water level decreases linearly along the length of the channel. Our objective is to keep the water level at the H node upstream of the gate, i.e., \(H_N\), at the target value \({\overline{H}}_N=0\) m.

A solution to the optimization problem was obtained using the interior point solver IPOPT [40] and is plotted in Fig. 6. By releasing water in anticipation of the inflow using the decision variable \(Q_N\), the optimization is able to reduce water level fluctuations and keep the water levels close to the target.Footnote 1

The IPOPT solve, starting from an all-zero starting point, takes approximately 0.1 s to complete on a 2.6 GHz Intel Core i7 CPU.

Since some of the bounds on the control variables are active, the conic intersection \(V(u^*)\) of Theorem 3.1 need not cover the entire set U. The solution quality was analyzed numerically by seeding the optimization with a large number of different starting points. Latin hypercube sampling [30] was used to compute \(1\,000\) different starting points, for each of which IPOPT computed a solution. The standard deviation of the solution vectors was found to be in the order of \(10^{-11} - 10^{-13} \approx 0\) per solution vector coordinate, illustrating how every starting point resulted in an – for all practical purposes – identical solution. This provides evidence that the found solution is in fact globally optimal, or at least nearly so.

There is also ample other numerical evidence that solutions of this type are globally optimal or very close to it. In [7], the solution quality and run time of an interior point-type method (IPM) is benchmarked against a so-called reduced genetic algorithm (RGA, [38]) for a class of water optimization problems. The IPM search finds qualitatively consistent solutions that always obtain better objective function values than the RGA. This benchmark includes problems with multiple river segments, multiple spatial control points, and both coarser and finer discretizations of the Saint-Venant equations in time and space.

Similar results are reported in [21] for drinking water distribution networks, where local search using IPOPT finds solutions with objective values within a relative distance of \(10^{-3}\) of those found using the global solver Couenne – in a fraction of the computation time. The general-purpose global solvers require multiple minutes or hours to run, but the local search completes in a few seconds at most.

5 Conclusions and outlook

In this work, we used the invariant convexity (invexity) to provide a theoretical framework to understand the good (often optimal) performance of local solvers on a class of non-convex problems that arise in discrete-time optimal control. We showed that KKT points for such problems are conic-intersection optima. Conic-intersection optimality implies global optimality for solutions in the interior of the feasible set, and is somewhat weaker – yet significantly stronger than local optimality – on the boundary.

For a concrete PDE-constrained problem that is representative of a real-world water management problem, we demonstrated hidden invexity and verified the high quality of solutions obtained by local search numerically. To the best of our knowledge, this is the first time that the theory of invex functions has been leveraged in a large-scale applied engineering context.

To make our results applicable beyond the domain of discrete-time optimal control (where an expert can typically check whether the conditions of Definition 2.1 hold for a given discretization), there are two natural next steps that, however, fall outside the scope of this work. First, it would be of great help to provide automated tools for detecting hidden invexity. Second, one could also investigate the use of hidden invexity to accelerate branch-and-bound algorithms for general non-convex global optimization.

On an analytical level, another interesting direction would be to extend our result to continuous-time optimal control.

6 Story

This research was driven by the observation that local search algorithms applied to certain well-structured non-convex discrete-time optimal control problems find solutions that are very close to being globally optimal. Non-convex problems with this characteristic are common in water resources management. We challenged ourselves to find an analytical explanation, as well as exact conditions, for this phenomenon. After pursuing various avenues of investigation, our research led to the concise result described in the present paper. This result is, to the best of our knowledge, the first application of the theory of invex functions to real-world non-convex optimal control.

Availability of data and material

Not applicable.

Notes

An example implementation in Python using the algorithmic differentiation package CasADi [4] and homotopy continuation [2, 8] to solve the nonlinear equality constraints numerically using IPOPT, is available online at https://github.com/jbaayen/homotopy-example.

References

Ackermann, T., et al.: Real-time modeling for navigation and hydropower in the river Mosel. J. Water Resour. Plann. Manage. 126(5), 298–303 (2000)

Allgower, E.L., Georg, K.: Numerical Continuation Methods: An Introduction, vol. 13. Springer, Berlin (2012)

Amann, K.-U., Arnold, E., Sawodny, O.: Online real-time scheduled model predictive feedforward control for impounded river reaches applied to the Moselle river. In: 2016 IEEE International Conference on Automation Science and Engineering (CASE). IEEE. (2016), pp. 1276–1281

Andersson, J.A., et al.: CasADi: a software framework for nonlinear optimization and optimal control. Math. Program. Comput. 11(1), 1–36 (2019)

Aubin, J.-P., Ekeland, I.: Applied Nonlinear Analysis. Wiley, New York (1984)

Baayen, J. H., Piovesan, T., VanderWees, J.: Continuation method for PDE-constrained global optimization: Analysis and application to the shallow water equations. In: arXiv:1801.06507 (2020)

Baayen, J. H., Vreeken, D. J., Archambeau, P.: Optimization methods for hydraulic systems. https://publicwiki.deltares.nl/download/attachments/138543226/Baayen_2019-09-13%20Comparison%20Optimization%20Methods.pdf. 2019

Baayen, J.H., et al.: An overview of continuation methods for non-linear model predictive control of water systems. IFAC-PapersOnLine 52(23), 73–80 (2019)

Belotti, P., et al.: Branching and bounds tightening techniques for non-convex MINLP. Optim. Methods Softw. 24(4–5), 597–634 (2009)

Ben-Israel, A., Mond, B.: What is invexity? ANZIAM J. 28(1), 1–9 (1986)

Ben-Tal, A., Teboulle, M.: Hidden convexity in some nonconvex quadratically constrained quadratic programming. Math. Program. 72(1), 51–63 (1996)

Burgschweiger, J., Gnädig, B., Steinbach, M.C.: Optimization models for operative planning in drinking water networks. Optim. Eng. 10(1), 43–73 (2009)

Casulli, V., Cheng, R.T.: Stability analysis of Eulerian-Lagrangian methods for the onedimensional shallow-water equations. Appl. Math. Modell. 14(3), 122–131 (1990)

Casulli, V., Zanolli, P.: A conservative semi-implicit scheme for open channel flows. J. Appl. Sci. Comput. 5, 1–10 (1998)

Craven, B. D.: Duality for generalized convex fractional programs. In: Generalized concavity in optimization and economics (1981), pp. 473–489

Craven, B.D.: Invex functions and constrained local minima. Bull. Australian Math. Soc. 24(3), 357–366 (1981)

Eschenbach, E.A., et al.: Goal programming decision support system for multiobjective operation of reservoir systems. J. Water Resour. Plann. Manage. 127(2), 108–120 (2001)

Falk, A.K.V., et al.: Model predictive control of a large-scale river network. Procedia Eng. 154, 80–87 (2016)

García, C.E., Prett, D.M., Morari, M.: Model predictive control: theory and practice—a survey. Automatica 25(3), 335–348 (1989)

Geiger, C., Kanzow, C.: Theorie und Numerik restringierter Optimierungsaufgaben. Springer, Berlin (2013)

Ghaddar, B., et al.: Polynomial optimization for water networks: global solutions for the valve setting problem. Eur. J. Oper. Res. 261(2), 450–459 (2017)

Hanson, M.A.: Invexity and the Kuhn–Tucker theorem. J. Math. Anal. Appl. 236(2), 594–604 (1999)

Hanson, M.A.: On suffciency of the Kuhn–Tucker conditions. J. Math. Anal. Appl. 80(2), 545–550 (1981)

Hante, F. M. et al.: Challenges in optimal control problems for gas and fluid flow in networks of pipes and canals: From modeling to industrial applications. In: Industrial mathematics and complex systems. Springer, (2017), pp. 77–122

Horvffath, K., et al.: Convex modeling of pumps in order to optimize their energy use. Water Resour. Res. 55(3), 2432–2445 (2019)

Joseph-Duran, B., et al.: Minimization of sewage network overflow. Water Resour. Manage. 28(1), 41–63 (2014)

Lee, D., et al.: Convex restriction of power flow feasibility sets. IEEE Trans. Control Netw. Syst. 6(3), 1235–1245 (2019)

Madani, R., Sojoudi, S., Lavaei, J.: Convex relaxation for optimal power flow problem: Mesh networks. IEEE Trans. Power Syst. 30(1), 199–211 (2014)

Martin, D.: The essence of invexity. J. Optim. Theory Appl. 47(1), 65–76 (1985)

McKay, M.D., Beckman, R.J., Conover, W.J.: A comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 42(1), 55–61 (2000)

Misener, R., Floudas, C.A.: ANTIGONE: algorithms for continuous/integer global optimization of nonlinear equations. J. Global Optim. 59(2–3), 503–526 (2014)

Mishra, S.K., Giorgi, G.: Invexity and Optimization, vol. 88. Springer, Berlin (2008)

Nicklow, J., et al.: State of the art for genetic algorithms and beyond in water resources planning and management. J. Water Resour. Plann. Manage. 136(4), 412–432 (2010)

Nocedal, J., Wright, S.: Numerical Optimization. Springer, Berlin (2006)

Ogata, K., Yang, Y.: Modern Control Engineering. Prentice-Hall, Hoboken (1997)

Sahinidis, N.V.: BARON: a general purpose global optimization software package. J. Global Optim. 8(2), 201–205 (1996)

Van Zyl, J.E., Savic, D.A., Walters, G.A.: Operational optimization of water distribution systems using a hybrid genetic algorithm. J. Water Resour. Plann. Manage. 130(2), 160–170 (2004)

Vermuyten, E., et al.: Combining model predictive control with a reduced genetic algorithm for real-time ood control. In: Journal of Water Resources Planning and Management 144.2 (2018), p. 04017083

Vreugdenhil, C.B.: Numerical Methods for Shallow-Water Flow, vol. 13. Springer, Berlin (2013)

Wächter, A., Biegler, L.T.: On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Math. Program. 106(1), 25–57 (2006)

Acknowledgements

The authors thank Dick den Hertog and Vyacheslav Kungurtsev for the critical reading of the manuscript and their comments.

Funding

The first author’s work was financed by the KISTERS Group. The second author’s work was financed by The Dutch Research Council (NWO) Grant VI.Veni.191E.035.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

Not applicable.

Code availability

The code for the case study of Sect. 4 is available online at https://github.com/jbaayen/homotopy-example.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Baayen, J.H., Postek, K. Hidden invariant convexity for global and conic-intersection optimality guarantees in discrete-time optimal control. J Glob Optim 82, 263–281 (2022). https://doi.org/10.1007/s10898-021-01072-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-021-01072-5