Abstract

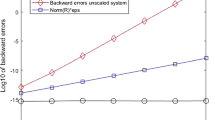

One of the most successful methods for solving a polynomial eigenvalue problem (PEP) or a rational eigenvalue problem (REP) is to recast it, by linearization, as an equivalent but larger generalized eigenvalue problem that can be solved by standard eigensolvers. In this work, we investigate the backward errors of the computed eigenpairs incurred by the application of the well-received compact rational Krylov (CORK) linearization. Our treatment is unified across PEPs and REPs expressed in various commonly used bases, including Taylor, Newton, Lagrange, orthogonal, and rational basis functions. We construct one-sided factorizations that relate the eigenpairs of the CORK linearization to those of the PEP or REP. With these factorizations, we establish upper bounds for the backward error of an approximate eigenpair of the PEP or REP relative to the backward error of the corresponding eigenpair of the CORK linearization. These bounds suggest a scaling strategy to improve the accuracy of the computed eigenpairs. We show, by numerical experiments, that the actual backward errors can be successfully reduced by scaling and that the errors, both before and after scaling, are well predicted by the bounds.
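To make the two backward errors being compared concrete, the following is a minimal sketch, not the CORK linearization itself. It assumes a small random quadratic PEP in the monomial basis, the classical first companion pencil, and the standard normwise backward errors in the 2-norm. The function names (`companion_pencil`, `pencil_backward_error`, `pep_backward_error`), the test matrices, and the choice to solve the pencil by inverting a well-conditioned leading block are all illustrative assumptions.

```python
import numpy as np

def companion_pencil(coeffs):
    """First companion pencil L(lam) = lam*X + Y of P(lam) = sum_i lam^i A_i.

    coeffs = [A_0, ..., A_m]. An eigenvector of the pencil has the block
    form z = [lam^(m-1) x; ...; lam x; x], where P(lam) x = 0.
    """
    m = len(coeffs) - 1
    n = coeffs[0].shape[0]
    X = np.eye(m * n, dtype=complex)
    X[:n, :n] = coeffs[m]                      # leading coefficient A_m
    Y = np.zeros((m * n, m * n), dtype=complex)
    for j in range(m):                         # first block row: A_{m-1}, ..., A_0
        Y[:n, j * n:(j + 1) * n] = coeffs[m - 1 - j]
    Y[n:, :-n] = -np.eye((m - 1) * n)          # -I on the block subdiagonal
    return X, Y

def pencil_backward_error(X, Y, lam, z):
    """Normwise backward error of (lam, z) for the pencil lam*X + Y."""
    r = (lam * X + Y) @ z
    scale = abs(lam) * np.linalg.norm(X, 2) + np.linalg.norm(Y, 2)
    return np.linalg.norm(r) / (scale * np.linalg.norm(z))

def pep_backward_error(coeffs, lam, x):
    """Normwise backward error of (lam, x) for P(lam) = sum_i lam^i A_i."""
    r = sum(lam**i * (A @ x) for i, A in enumerate(coeffs))
    scale = sum(abs(lam)**i * np.linalg.norm(A, 2) for i, A in enumerate(coeffs))
    return np.linalg.norm(r) / (scale * np.linalg.norm(x))

# Illustrative data (an assumption): a 4x4 quadratic with a well-conditioned A_2.
rng = np.random.default_rng(0)
n = 4
A0 = rng.standard_normal((n, n))
A1 = rng.standard_normal((n, n))
A2 = np.eye(n) + 0.1 * rng.standard_normal((n, n))
coeffs = [A0, A1, A2]

X, Y = companion_pencil(coeffs)
# (lam*X + Y) z = 0  <=>  (-X^{-1} Y) z = lam z; this shortcut assumes X is nonsingular.
lams, Z = np.linalg.eig(np.linalg.solve(X, -Y))

results = []
m = len(coeffs) - 1
for lam, z in zip(lams, Z.T):
    eta_L = pencil_backward_error(X, Y, lam, z)
    # Each n-block of z is a candidate eigenvector of P; keep the best one.
    eta_P = min(pep_backward_error(coeffs, lam, z[i * n:(i + 1) * n]) for i in range(m))
    results.append((lam, eta_L, eta_P))
    print(f"lambda = {complex(lam):.4f}  eta_L = {eta_L:.2e}  eta_P = {eta_P:.2e}")
```

The ratio `eta_P / eta_L` printed for each eigenpair is the kind of quantity that the bounds described above are meant to control. In this sketch it is only observed numerically for the companion pencil, not bounded.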

Notes

Throughout this paper, we assume that the coefficient matrix \(D_m\) of the highest order term is nonzero.

In this case, \(\beta _0\) is identified with 1.

Acknowledgements

We are grateful to Françoise Tisseur for bringing this project to our attention and to Marco Fasondini for his constructive comments, which led us to improve our work. We thank Ren-Cang Li, Behnam Hashemi, and Yangfeng Su for very helpful discussions in various phases of this project. We also thank the anonymous reviewers for very helpful comments and suggestions.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

HC is supported by the National Natural Science Foundation of China under grants No. 12001262, No. 61963028, and No. 11961048, by the Natural Science Foundation of Jiangxi Province under grant No. 20181ACB20001, and by the Double-Thousand Plan of Jiangxi Province under grant No. jxsq2019101008. KX is supported by the Anhui Initiative in Quantum Information Technologies under grant AHY150200.

A Proof of (14)

We now show that \(G(\lambda )\) given by (14) satisfies (12) with \(\mathbf{L} _m(\lambda )\) corresponding to the orthogonal basis and \(g(\lambda ) = 1\). To simplify the notation, we drop the argument \(\lambda \) of the basis function \(b_j^{(k)}(\lambda )\) in the rest of this proof.

We shall concentrate on showing that the product of \(G(\lambda )\) and the kth column of \(\mathbf{L} _m(\lambda )\) is the kth column of \(e_1^T\otimes R_m(\lambda )\), that is

which is true if

both hold.

Equation (29a) is given by the definition of the orthogonal basis. We now show (29b) by induction.

For \(j = k\), (29b) reads

which reduces to

since \(b_{-1}^{(k+1)} = 0\) and \(b_0^{(k)} = b_0^{(k-1)} = 1\). Equation (30) is the recurrence relation (13) with 0 and \(k-1\) in place of j and k, respectively.

For \(j = k+1\), (29b) becomes

To show (31), we substitute \(b_1^{(k)} = \frac{\lambda + \alpha _k}{\beta _{k+1}}b_0^{(k)}\) into it to obtain

where \(b_0^{(k-1)} = b_0^{(k)} = b_0^{(k+1)} = 1\) is used. Since the terms in the parentheses are, by the recurrence relation, just \(b_1^{(k-1)}\), the task reduces to verifying

This is, again, a variant of the recurrence relation (13).

Now suppose that (29b) and

both hold for any \(k-1 \leqslant j \leqslant m-2\). By the recurrence relation (13), we have

where we now substitute (29b) and (32) for \(b_{j-(k-1)}^{(k-1)}\) and \(b_{j+1-(k-1)}^{(k-1)}\), respectively, to obtain

where we have used two variants of the recurrence relation

Cancelling the factors \(\beta _{j+2}\) in (33) yields

This, by induction, shows (29b), which, along with (29a), verifies (28).

The proofs for the first and the last two columns of \(G(\lambda )\mathbf{L} _m(\lambda )\) are essentially the same, though the structures of \(\mathbf{L} _m(\lambda )\) for these columns are slightly different from that of a general kth column.

Cite this article

Chen, H., Xu, K. On the Backward Error Incurred by the Compact Rational Krylov Linearization. J Sci Comput 89, 15 (2021). https://doi.org/10.1007/s10915-021-01625-6

DOI: https://doi.org/10.1007/s10915-021-01625-6

Keywords

- Backward error

- Polynomial eigenvalue problem

- Linearization

- Taylor basis

- Newton basis

- Lagrange interpolation

- Orthogonal polynomials

- Chebyshev polynomials