Abstract

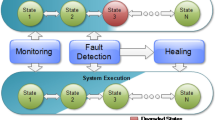

This paper investigates the use of group goals and plans as programming abstractions that provide explicit constructs for goals and plans involving coordinated action by groups of agents, with a focus on the BDI agent model. We define a group goal construct, which specifies subgoals that the group members must satisfy for the group goal to succeed, subject to timeouts on the members beginning work on the goal, and then completing their subgoals. A group plan containing one or more group goals can be dynamically distributed amongst a set of agents and jointly executed without a need for explicit coordinating communication between agents. We define formal semantics that model the coordination needed to determine a group goal’s success or failure as updates to a shared state machine for the group goal. We implement the semantics directly as rewrite rules in Maude, and verify using LTL model checking that the intended coordination behaviour is achieved. An implementation of group plans and goals for the Jason agent platform is also described, based on an integration of Jason with the ZooKeeper coordination middleware via a set of generic Jason plans supporting group goals, and the Apache Camel integration framework. An evaluation of the performance of this implementation is presented, showing that the approach is scalable.

Notes

For simplicity, the set of joined agents is not maintained once the group goal has failed (in the case that some agents’ subgoals have failed before all agents have started their own subgoals). The state machine also does not record the set of all agents whose subgoals failed. In practice this information is useful to an agent when a group goal fails, as it will help it to plan how to recover from the failure. Our implementation described in Sect. 7 stores this information, but does not currently return it to agents when they are notified of a group goal’s failure.

There may be cases when the programmer would like an agent with a subgoal that is achieved quickly to be able to begin working on the following group goal without delay. Given our current state machine for group goals, this early start to a group goal would start the join timeout timer for the goal before the previous goal is fully achieved. While it may be possible to predict an appropriate timeout period, adding this capability calls for a more sophisticated treatment of timeouts, which we leave for future work.

In Jason, agent programs consist of initial beliefs, rules (Horn clauses) and plans. Plans are expressed using the syntax Trigger : ContextCond <- PlanBody, where ContextCond and PlanBody are optional. Triggers are events such as the creation of a new goal (+!GoalTerm) or a new belief (+BeliefTerm). ContextCond is a logical formula stating when the plan is applicable, and is evaluated using beliefs and rules. A plan body contains a sequence of actions (terms with no prefix), built-in internal actions (terms whose functor contains a “.”), belief additions and deletions (with prefix ‘+’ and ‘-’, respectively), queries over beliefs and rules (with prefix ‘?’), and subgoals (with prefix ‘!’). Some control structures such as if-then-else are also supported within a plan body.

To provide a declarative definition of our transition system, we assume that these operations are applied to copies of the state machines. For practical applications, copying is not needed.

This is solely to prevent repeated applications of the group failure and group success rules, and an implementation is likely to use an alternative technique to achieve that.

The state machine is specified as a Maude functional theory, including equations defining the operations on state machines used in our semantic rules, and our semantic rules are defined as conditional rewrite rules.

As LTL semantics applies to infinite paths, “deadlock states”—those that have no transitions from them—are modelled as having a single transition to themselves.
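The self-loop construction mentioned in this note can be illustrated with a minimal Python sketch. It is not taken from our Maude code: the function name `add_deadlock_self_loops` and the example state names are hypothetical, and the transition system is assumed to be given as a plain dictionary from states to successor sets.

```python
from typing import Dict, Hashable, Set


def add_deadlock_self_loops(
    transitions: Dict[Hashable, Set[Hashable]]
) -> Dict[Hashable, Set[Hashable]]:
    """Return a total transition relation: every state without successors
    gets a single transition to itself, so that all paths are infinite."""
    # Collect every state that appears as a source or as a target.
    states = set(transitions)
    for successors in transitions.values():
        states |= set(successors)

    total = {}
    for state in states:
        successors = set(transitions.get(state, ()))
        # A deadlock state (no outgoing transitions) loops to itself.
        total[state] = successors if successors else {state}
    return total


# Hypothetical three-state system in which "done" is a deadlock state.
example = {"init": {"running"}, "running": {"done"}}
totalised = add_deadlock_self_loops(example)
```

After this transformation every state has at least one successor, which is the property that LTL semantics over infinite paths requires.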

This is not intended to be an accurate model of time: different local agent operations on intention stacks will take very different lengths of time in practice. However, our approach is sufficient to verify our semantics using model checking.

More precisely, our semantics were refined iteratively, with a number of subtle bugs corrected, until all six properties were verified. This illustrates the limitations of defining operational semantics based on mathematical intuition alone, and the benefits of implementing and verifying them using a tool such as Maude.

This uses the Jason BDI interpreter in a standalone mode, and provides its own implementation of agent perception, action and messaging, via user-provided Camel routes.

This placement excludes camel-agent’s handling of the preceding notify_my_part_succeeded action (by delivering the notification into the Notify local success Camel route) from the timing calculation. Our intention is to evaluate the performance of the enterprise-level Camel and ZooKeeper middleware for coordinating the execution of group goals, not the camel-agent research software.

The ZooKeeper servers were not restarted between runs, but the znodes used in these experiments were deleted.

This was confirmed by generating a “notched” version of the box plots, showing confidence intervals.

The plan in line 31 is triggered by Jason’s “-!goal” failure-handling event if the plan above it fails.

Appendices

A Dynamic distribution of a group plan

This appendix illustrates how group plans can be dynamically distributed to agents that have been recruited to jointly execute the plan. Figure 15 shows the Jason source code for an agent that is coordinating the selection of agents to participate in a group plan for the early stages of building a house, and then supervising the work. The group plan (shown in lines 61 to 66) involves the serial execution of two group goals: to build the foundations and to build the walls. The first group goal involves an agent playing the role of foundation_digger and digging the foundations while an agent with the supervisor role monitors the work. The second group goal is similar, with an agent in the wall_builder role building the walls, monitored by a supervisor. The foundation digger is not involved in the second goal, and so has the default “no-op” subgoal, and likewise, the wall builder has the default no-op subgoal for the first group goal. To illustrate the inclusion of other types of goal in a group plan, the agents all begin the plan by printing (locally) a message that they are starting to execute the plan.

The agent running this code begins with an “oversee house building” goal (line 12). The plan triggered by this goal (lines 15–19) looks up all group plans for the house_built goal (it will find only one, in lines 61–66), and triggers the two plans shown in lines 21–31 to try each discovered group plan in turn until one succeeds (see footnote 15). Some query goals called by these plans (lines 23 and 25) invoke Jason rules (Horn clauses) imported in line 1, and other goals trigger the plans in lines 33 to 59; the details are not important here. The key aspects of the code are that the coordinating agent sends invitations to other agents to take on roles (line 41), then after all roles have been filled, the group plan is sent to all group members (line 53), and they are requested to achieve the triggering goal for the group plan (line 54). The coordinator, having predetermined that it will take on the overseer role (line 9), then prepends the agent-instantiated group plan to its plan base (line 57) and begins executing it itself (line 58). Line 68 shows the coordinator’s local plan for the overseer role’s monitor subgoal, which for simplicity of presentation takes the negligent approach of doing nothing.

B Group goal semantics in Maude

Fig. 16 The group goal semantics from Fig. 5 encoded in Maude

Figure 16 shows an excerpt of the Maude code that implements the group goal semantics presented in Fig. 5. This shows the direct correspondence between those semantics and our implementation used for model checking. A separate set of rules (not shown) encodes the local agent transitions shown in Fig. 9.

Each semantic rule is implemented by a conditional rewrite rule of the following form:

crl [RuleName] : Term1 => Term2 if Condition .
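As a rough analogy for readers unfamiliar with Maude, the behaviour of one conditional rewrite rule can be sketched in Python as "match the left-hand side, check the condition, build the right-hand side". This is only an illustration under simplifying assumptions: the class `ConditionalRule`, its fields and the `tick` rule are hypothetical and do not appear in our Maude code, and Maude's matching modulo associativity and commutativity is not modelled.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass(frozen=True)
class ConditionalRule:
    """A hypothetical stand-in for `crl [name] : Term1 => Term2 if Condition .`"""
    name: str
    match: Callable[[Any], Optional[dict]]  # variable bindings, or None if Term1 does not match
    condition: Callable[[dict], bool]       # the rule's condition, evaluated on the bindings
    build: Callable[[dict], Any]            # constructs Term2 from the bindings

    def apply(self, term: Any) -> Optional[Any]:
        """Return the rewritten term, or None if the rule is not applicable."""
        bindings = self.match(term)
        if bindings is None or not self.condition(bindings):
            return None
        return self.build(bindings)


# Illustrative rule: ("clock", T) => ("clock", T + 1) if T < 3
tick = ConditionalRule(
    name="tick",
    match=lambda t: {"T": t[1]}
    if isinstance(t, tuple) and len(t) == 2 and t[0] == "clock"
    else None,
    condition=lambda b: b["T"] < 3,
    build=lambda b: ("clock", b["T"] + 1),
)
```

When several rules are applicable to the same term, a model checker explores all of the resulting alternatives, which is what makes the rewriting non-deterministic.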

The rules in Fig. 16 non-deterministically rewrite terms representing configuration triples. For brevity, declarations of the (typed) variables and the data structures for their types are not shown, but we present a summary below. The full code can be found at https://bitbucket.org/scranefield/bdigroupplans/.

- The first element in a configuration triple is a set of goal trees (SGT), where each goal tree is an association (using the operator ‘::’) between an agent name (a string) and an intention stack. Note that the operator ‘,’ represents set union (which Maude knows to be associative, commutative and idempotent), and Maude’s ‘order-sorted’ type system enables a singleton set to be denoted by a set element alone (with no additional syntax). Thus, the first element of the triple in line 2 matches any set of goal trees in which agent A has the intention stack LPB APB.

- LPB denotes a list of plan bodies. Maude uses juxtaposition to denote (associative) list concatenation, and, as for sets, single list elements can also denote singleton lists. Thus, the intention stack for agent A in line 2 matches any list of plan bodies that ends with the atomic (explained below) plan body APB.

- group-size, jto and cto are constants with the values used in our model checking: the group size is 2 and \((\texttt{jto},\texttt{cto})\) ranged over \(\{10,20,30\}^2\) in different runs.

- PB represents a plan body (a list of goals). It may also include a list of back-up plan bodies as discussed in Sect. 5. A postfix succeeded or failed operator represents a success or failure flag attached to the plan body. Within a plan body, a local goal is denoted by a string (the goal name), and a group goal has the form gg(GName). The function current-goal retrieves the current goal in a plan body. This is the first element in the list of goals, as goals are removed after succeeding.

- APB denotes an atomic plan body: one that has no success or failure flag associated with it directly (as opposed to any flag that may be associated with its current goal). The functions current-goal-succeeded and current-goal-failed test whether the current goal in an atomic plan body has a success or failure flag (respectively).

- The second element in a configuration triple is a set of group goal states (GGSs). This is represented by a Maude Map, which is a set of mappings GName -> GGS between a goal name (a string) and a group goal state. The latter is a tuple containing the current state in the state machine shown in Fig. 1, the number of agents involved in the group goal, countdown values for join and completion timeouts, two sets of agents recording those that have joined the goal and those that have succeeded with their subgoals, and the last time an update to the state was made.

The initialise function creates a new group goal state tuple with the goal in the Initial state of the state machine, and the functions started, succeeded and failed return an updated group goal state resulting from the state machine receiving the respective event. The (overloaded) ‘::’ operator tests whether a group goal state is in a specified state of the state machine (e.g. see line 62), and the ag-has-joined and ag-has-succeeded functions test whether the specified agent is included in the state tuple’s set of joined or succeeded agents (respectively).

Maude’s function $hasMapping is used in lines 6 and 57 to test whether the set of group goal states includes a state tuple for a given group goal.

- The third element in a configuration is a time point T, modelled as a non-negative integer. The function advance-time(T) returns T + 1.
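To make the shape of the group goal state tuple more concrete, the following Python sketch mirrors its components and the functions named above as an immutable record with update functions that return new records. It is a simplified illustration, not a translation of the Maude code. Only the state name "Initial" comes from the text; the names "Joining", "Running", "Succeeded" and "Failed" are assumptions, as are the transition conditions, and timeout handling is omitted entirely.

```python
from dataclasses import dataclass, replace
from typing import FrozenSet


@dataclass(frozen=True)
class GroupGoalState:
    state: str                  # current state of the state machine
    group_size: int             # number of agents involved in the group goal
    join_countdown: int         # countdown value for the join timeout
    completion_countdown: int   # countdown value for the completion timeout
    joined: FrozenSet[str]      # agents that have joined the goal
    succeeded: FrozenSet[str]   # agents whose subgoals have succeeded
    last_update: int            # time of the last update to this state


def initialise(group_size: int, jto: int, cto: int, now: int) -> GroupGoalState:
    return GroupGoalState("Initial", group_size, jto, cto, frozenset(), frozenset(), now)


def started(ggs: GroupGoalState, agent: str, now: int) -> GroupGoalState:
    joined = ggs.joined | {agent}
    # Assumption: the goal is "Running" once every member has joined.
    state = "Running" if len(joined) == ggs.group_size else "Joining"
    return replace(ggs, state=state, joined=joined, last_update=now)


def succeeded(ggs: GroupGoalState, agent: str, now: int) -> GroupGoalState:
    done = ggs.succeeded | {agent}
    # Assumption: the group goal succeeds when all members' subgoals succeed.
    state = "Succeeded" if len(done) == ggs.group_size else ggs.state
    return replace(ggs, state=state, succeeded=done, last_update=now)


def failed(ggs: GroupGoalState, agent: str, now: int) -> GroupGoalState:
    # Assumption: any member's failure fails the whole group goal.
    return replace(ggs, state="Failed", last_update=now)


def ag_has_joined(ggs: GroupGoalState, agent: str) -> bool:
    return agent in ggs.joined


def ag_has_succeeded(ggs: GroupGoalState, agent: str) -> bool:
    return agent in ggs.succeeded


# Two agents join and both succeed with their subgoals.
g = initialise(group_size=2, jto=10, cto=20, now=0)
g = started(g, "a1", 1)
g = started(g, "a2", 2)
g = succeeded(g, "a1", 3)
g = succeeded(g, "a2", 4)
```

Returning a fresh record from each update function corresponds to the declarative style of the semantics, in which operations are applied to copies of the state machines.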

Note that the condition for the no-op rule includes the bound T < 1000 to ensure the rules define a finite transition system, and the fairness constraint T rem 5 =/= 0 that ensures that agent progress cannot be delayed forever, as discussed in Sect. 6.
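The time-related part of the no-op condition can be read as the following small Python sketch. The function and constant names are hypothetical, and the sketch covers only the two arithmetic constraints stated above, not the remaining parts of the rule's condition.

```python
TIME_BOUND = 1000      # bound that keeps the transition system finite
FAIRNESS_PERIOD = 5    # the no-op rule is blocked at every multiple of this value


def advance_time(t: int) -> int:
    """Counterpart of advance-time(T): returns T + 1."""
    return t + 1


def no_op_time_condition(t: int) -> bool:
    """True when T < 1000 and T rem 5 =/= 0."""
    return t < TIME_BOUND and t % FAIRNESS_PERIOD != 0


# Time points in 0..11 at which the time constraints permit a no-op step.
allowed = [t for t in range(12) if no_op_time_condition(t)]
```

Here `allowed` evaluates to `[1, 2, 3, 4, 6, 7, 8, 9, 11]`: at time points 0, 5 and 10 the no-op rule is disabled, so it cannot be the rule applied at those points.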

The conditions for the local-computation and no-op rules are defined by the Boolean-valued functions presented in lines 54–97: coordination-rule-applies, pure-coordination-rule-applies and local-success-now-waiting-for-others. Each of these functions is defined by a series of conditional equations (ceq) for different cases that give a result of true, followed by an unconditional equation (eq), adorned with an owise (“otherwise”) attribute that declares that any inputs not matching the argument terms in the preceding cases should have a result of false. For semantic purity, Maude automatically computes the required condition for each “otherwise” case and converts the unconditional equation to a conditional one.

Cite this article

Cranefield, S. Enabling BDI group plans with coordination middleware: semantics and implementation. Auton Agent Multi-Agent Syst 35, 45 (2021). https://doi.org/10.1007/s10458-021-09525-7

DOI: https://doi.org/10.1007/s10458-021-09525-7