Abstract

Little is known about screening tools for adults in high-HIV-burden contexts. We use exit survey data collected at outpatient departments in Malawi (n = 1038) to estimate the sensitivity, specificity, and negative and positive predictive values of screening tools that include questions about sexual behavior and use of health services. We compare a full tool (seven relevant questions) to a reduced tool (five questions, excluding sexual behavior measures) and to standard of care (two questions: never tested for HIV or tested > 12 months ago, and seeking care for a suspected STI). Suspected STI and ≥ 3 sexual partners were associated with HIV positivity but had low sensitivity. The full tool (using the optimal cutoff score of ≥ 3) would achieve 55.6% sensitivity and 84.9% specificity for HIV positivity; the reduced tool (optimal cutoff score ≥ 2) would achieve 59.3% sensitivity and 68.5% specificity; and standard of care would achieve 77.8% sensitivity and 47.8% specificity. Screening tools for HIV testing in outpatient departments do not offer clear advantages over standard of care.


Introduction

In order to achieve control of the HIV epidemic, UNAIDS has set a series of ambitious targets striving for 95% of individuals living with HIV to know their status, 95% of those to initiate ART, and 95% of those on ART to reach viral suppression [1]. There are, however, persistent gaps in identifying people living with HIV. Approximately 11% of people living with HIV in Eastern and Southern Africa do not know their HIV status [2]. Although there has been progress in the past decade, it has been slow and variable by region [3]. Additionally, as HIV testing coverage increases and HIV incidence declines [4], more resources are needed to diagnose each new case [5, 6].

The global community is thus seeking innovative ways to more efficiently identify people who should be tested for HIV, including new technologies (self-testing) [7], new operational approaches to testing (e.g., index partner testing, community-based contact tracing, and social or risk network testing) [8,9,10], and screening tools that identify those most in need of testing [11]. Clinical HIV guidelines in many African settings currently recommend testing all pregnant women, individuals with newly diagnosed tuberculosis, and all sexually active adults annually and whenever they seek care for a sexually transmitted infection (STI) [4]; however, risk assessment through screening may be more sensitive and/or specific than this standard of care (SOC). Screening tools have previously been developed for pediatric HIV diagnosis in sub-Saharan Africa [12, 13] and may also be useful for prioritizing high-risk adults for testing. If a screening tool has sufficiently high sensitivity and specificity for HIV infection, it can guide the use of limited testing resources, including supplies, staff, and space.

Outpatient departments (OPDs) offer an opportunity for case finding [14], as many adults present regularly to OPDs for acute services [15, 16]. Facility-based testing also offers better linkage to ART services and cost savings compared with community-based testing [8, 17, 18]; however, traditional provider-initiated testing and counseling (PITC) has low coverage in OPD settings because departments are overcrowded and understaffed [19, 20], and it has historically yielded low HIV positivity among those tested [14]. The “yield” (positivity among those tested) of current diagnostic approaches in Africa is also declining more broadly [21]. An HIV screening tool specific to OPD settings may improve testing efficiency. However, understanding the sensitivity and specificity of such a tool is critical, as introducing a new workflow in OPDs will require additional time from patients and staff. Assessing tool performance relative to SOC is therefore an important first step in deciding whether to implement an HIV screening tool in OPD settings.

The nature of screening tool questions for crowded OPD settings also requires careful consideration. Questions regarding sexual risk behavior, for example, may unintentionally stigmatize certain individuals and populations. Although questions about recent sexual behavior may be predictive of HIV status [22, 23], they may not be feasible in busy OPD settings, which often lack private spaces in which to ask sensitive questions and obtain accurate responses [19, 22]. In busy emergency departments in the United States, for instance, health care providers screening for STI or HIV testing were routinely less likely to ask about sexual health than about other, non-sensitive topics [22]. To develop more efficient strategies for HIV testing in OPD settings, more evidence is needed about how screening tools perform with and without sensitive questions, and compared to SOC.

The goal of our study was to assess whether a screening tool could increase the efficiency of HIV testing among adults seeking care in OPD settings in Malawi, as compared to SOC. We compare the estimated performance of two versions of a screening tool—one with sexual behavior questions (i.e., full tool) and one without (i.e., reduced tool)—to assess the sensitivity and specificity of potential screening tools in OPD settings.

Methods

Parent Study

Data were collected during exit surveys in a large cluster-randomized trial that aimed to assess the impact of facility HIV self-testing among adult outpatients compared with PITC. Health facilities were randomized to one of three arms (five facilities per arm) using constrained randomization based on region and facility type. The intervention is described in more detail elsewhere [24, 25]. For this secondary analysis, we used data from the HIV self-testing arm (five facilities) because HIV testing coverage was high in that arm, which provided enough tested outpatients to give this sub-analysis sufficient power. In the HIV self-testing arm, outpatients were approached in outpatient waiting spaces and encouraged to test for HIV if they were > 15 years of age, had never received an HIV-positive diagnosis, and had not tested HIV-negative within the last 3 months (or had never tested). Facilities were spread across central and southern Malawi and varied in facility type: one district hospital, one mission hospital, and three large health centers.

Data Collection

A subset of outpatients at participating facilities was recruited using a systematic sampling strategy: research staff invited every tenth outpatient exiting the outpatient department to complete an exit survey. Eligibility criteria were being ≥ 15 years of age and having accessed and completed all outpatient services to be received that day. Oral consent was obtained, and exit surveys were completed in private, quiet locations at the health facility. The survey was administered by trained research staff, who asked survey questions aloud and recorded respondents’ answers on a tablet using data collection software. From the five HIV self-testing arm facilities, 2183 adult outpatients were approached to complete exit surveys about their testing experience, and 2097 were eligible and completed a survey (September 2017–February 2018). This analysis includes survey data from individuals who reported testing for HIV on the day of enrollment and reported never testing HIV-positive before that day (in order to examine screening tool performance in the context of new HIV diagnoses).

Survey Tool

The exit survey tool was created based on conceptually-driven hypotheses about associations with HIV status, including: (1) sociodemographic variables; (2) previous use of HIV services and test results; (3) risky sexual behavior in the past 12 months; (4) health services received that day; and (5) use of HIV testing and result of any HIV test received that day. All surveys were completed in the local language (Chichewa) and lasted approximately 20 min on average.

Data Analysis

We used questions from the exit survey to approximate a screening tool, including questions that captured factors hypothesized to be associated with HIV risk.

Operationalizing the Screening Tool

We included seven variables in the full screening tool. We included suspected sexually transmitted infection (STI), defined as attending the facility for an STI, because STIs are a known risk factor for seroconversion [26], and attending the facility for malaria-like symptoms, since malaise and fever have previously been associated with recent HIV seroconversion in similar settings [27]. We also included “overlooked” groups who do not have a standard entry point for HIV testing: women below the age of 25 (since women often test during pregnancy at antenatal visits) and men over the age of 24. Men almost exclusively seek care via OPDs for acute needs [15, 28]; therefore, if they are not engaged at the OPD, they may not be reached. These groups are also important for the evolving HIV epidemic in the region: both men and young women have higher HIV positivity, test less often, and may not be well served by current HIV testing modalities [3, 29,30,31]. Finally, never tested for HIV or tested > 12 months ago was included as the SOC item. The response to each tool question was scored as 0/1 (no/yes), and these responses were summed to create the total screening tool score.

We also dichotomized two questions with continuous response options (number of recent health visits, and number of recent sexual partners). Cut points were selected based on association with HIV status: we assessed the “inflection point” at which these items became strongly associated with HIV positivity (e.g., having two or more sexual partners was not significantly associated but having three or more sexual partners was).
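The scoring rule described above (continuous items dichotomized at a cut point, every item coded 0/1, then summed) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the study's analysis code (the analysis was run in Stata): the item names, their order, and the visit cut point `HYPOTHETICAL_VISIT_CUT` are hypothetical placeholders. Only the ≥ 3 sexual-partner cut point and the age/sex definition of the “overlooked” group come from the text.

```python
from typing import Mapping, Sequence

# Hypothetical item names; labels and order are illustrative, not the survey's.
REDUCED_ITEMS = (
    "tested_over_12m_or_never",
    "suspected_sti",
    "suspected_malaria",
    "overlooked_group",
    "frequent_recent_visits",
)
# The full tool adds the two sexual-behavior items.
FULL_ITEMS = REDUCED_ITEMS + ("three_plus_partners", "sex_outside_marriage")

PARTNER_CUT = 3  # from the text: >= 3 partners was associated with HIV positivity
HYPOTHETICAL_VISIT_CUT = 2  # placeholder; the text does not report this cut point


def dichotomize(count: int, cut_point: int) -> int:
    """Code a count as 1 if it meets or exceeds the cut point, else 0."""
    return int(count >= cut_point)


def overlooked_group(sex: str, age: int) -> int:
    """1 for women under 25 or men over 24, else 0."""
    return int((sex == "F" and age < 25) or (sex == "M" and age > 24))


def tool_score(responses: Mapping[str, int], items: Sequence[str] = FULL_ITEMS) -> int:
    """Unweighted score: the sum of 0/1 responses over the tool's items."""
    return sum(responses[item] for item in items)


if __name__ == "__main__":
    # One made-up respondent: a 30-year-old man, last tested > 12 months ago.
    answers = {
        "tested_over_12m_or_never": 1,
        "suspected_sti": 0,
        "suspected_malaria": 1,
        "overlooked_group": overlooked_group("M", 30),
        "frequent_recent_visits": dichotomize(1, HYPOTHETICAL_VISIT_CUT),
        "three_plus_partners": dichotomize(3, PARTNER_CUT),
        "sex_outside_marriage": 0,
    }
    print("full:", tool_score(answers), "reduced:", tool_score(answers, REDUCED_ITEMS))
```

Because every item carries equal weight and the reduced tool's items are a subset of the full tool's, a respondent's reduced score can never exceed their full score.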

Analyzing Screening Tool Performance

The analysis aimed to estimate how well screening tools, composed of the survey questions described above, predict HIV positivity among outpatients testing for HIV during routine outpatient services. We analyzed the data in two ways: (1) using all relevant questions from the survey (items 1–7 in Table 1) and (2) using a reduced tool that excluded questions about number of sexual partners and sex outside of marriage (items 1–5 in Table 1). The SOC approach to HIV testing was captured by items 1 and 2 in Table 1.

Of the 2097 outpatients who completed a survey, 1034 were excluded from this analysis due to not testing for HIV on the day of enrollment, 11 were excluded because they had previously tested HIV-positive and 14 were excluded because they either refused to disclose the test result or reported not knowing the test result. All included respondents (n = 1038) had complete data for the survey questions used to calculate the screening tool score. This sample size is sufficient for assessing a tool with 40% sensitivity (with specificity up to 99%) given estimated adult HIV prevalence of 9% [32].

We used logistic regression to assess the relationship between HIV status and respondent characteristics (age and sex), responses to each screening tool item, and total screening tool scores (full and reduced tools). We calculated the sensitivity, specificity, and negative and positive predictive values (NPV and PPV, respectively) for each screening tool item and for total screening tool scores (full and reduced) using Stata v14. The optimal screening tool cutoff was identified by plotting the receiver operating characteristic (ROC) curve and estimating the partial area under the curve (correcting for ties).
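For a given cutoff, the four performance measures follow from a 2 × 2 table of screen result against HIV test result. The Python sketch below shows the definitions; the class and function names are hypothetical, and the exact confidence intervals that Stata reports are omitted. The example counts are not reported in the text: they were back-calculated from the reported percentages for the full tool at ≥ 3 (27 HIV-positive and 1011 HIV-negative respondents), so treat them as an approximation.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class TwoByTwo:
    """Screen result (score >= cutoff) cross-tabulated against HIV test result."""

    tp: int  # screen-positive, HIV-positive
    fp: int  # screen-positive, HIV-negative
    fn: int  # screen-negative, HIV-positive
    tn: int  # screen-negative, HIV-negative

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    @property
    def ppv(self) -> float:
        return self.tp / (self.tp + self.fp)

    @property
    def npv(self) -> float:
        return self.tn / (self.tn + self.fn)


def classify(scores: Iterable[int], hiv_positive: Iterable[bool], cutoff: int) -> TwoByTwo:
    """Tally the 2x2 table for the rule 'screen positive if score >= cutoff'."""
    tp = fp = fn = tn = 0
    for score, positive in zip(scores, hiv_positive):
        flagged = score >= cutoff
        if flagged and positive:
            tp += 1
        elif flagged:
            fp += 1
        elif positive:
            fn += 1
        else:
            tn += 1
    return TwoByTwo(tp, fp, fn, tn)


if __name__ == "__main__":
    # Counts back-calculated (not reported) for the full tool at a cutoff of >= 3.
    full_ge3 = TwoByTwo(tp=15, fp=153, fn=12, tn=858)
    for name in ("sensitivity", "specificity", "ppv", "npv"):
        print(f"{name}: {100 * getattr(full_ge3, name):.1f}%")
```

These back-calculated counts reproduce the reported 55.6% sensitivity, 84.9% specificity, 8.9% PPV, and 98.6% NPV after rounding.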

Ethical Review

The parent trial including the exit survey methodology and instrument received ethical approval from the Malawi National Health Sciences Review Committee and the University of California Institutional Review Board.

Results

As described above, 1038 adults completed an exit survey, used an HIV self-test kit, and had a known HIV test result; all are included in this analysis. Most participants were female (n = 684, 65.9%), and the mean age was 32.2 years (SD 13.0) (Table 2). Overall, 2.6% of respondents (n = 27) tested HIV-positive. Approximately half of respondents had last tested > 12 months ago or had never tested (n = 540, 52.0%), 44.2% were members of an “overlooked” group (women < 25 and men > 24 years) (n = 459), and just under one-quarter reported having ≥ 3 sexual partners in the past year (n = 247, 23.8%). The average score was 1.5 (SD 1.1) for the full screening tool (maximum possible score 7) and 1.2 (SD 0.8) for the reduced screening tool (maximum possible score 5).

HIV positivity was significantly and strongly associated with the screening questions (Table 2). Among those with a suspected STI, 11.1% tested HIV-positive (versus only 2.5% of those who attended the OPD for other reasons), and 6.5% of people reporting > 3 sexual partners in the past 12 months were HIV-positive (versus 2.2% of people with fewer sexual partners). In models adjusted for age and sex, the odds of being HIV-positive were approximately 2–4 times higher for respondents who answered “yes” to each screening question than for those who answered “no” (Appendix Table A1). HIV positivity increased with higher screening tool scores (both full and reduced tools) (Appendix Table A2).

Table 3 shows the sensitivity and specificity of each item and of the full and reduced (without sexual behavior questions) screening tools. The most sensitive items were tested > 12 months ago or never tested (sensitivity 74.1%, 95% CI 53.7–88.9%) and being in an “overlooked” group (sensitivity 59.3%, 95% CI 38.8–77.6%). However, both had low specificity (48.6% and 56.2%, respectively). The items with highest specificity were suspected STI (specificity 98.4%, 95% CI 97.4–99.1%), suspected malaria (specificity 92.9%, 95% CI 91.1–94.4%), and > 3 recent sexual partners (specificity 91.5%, 95% CI 89.6–93.1%); however, these items had low sensitivity (7.4%, 14.8%, and 22.2%, respectively).

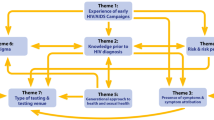

For the full screening tool, examining the score as a continuous measure shows that at a cutoff of ≥ 1, the tool specificity was 16.0% (95% CI 13.8–18.4%) and sensitivity was 92.6% (95% CI 75.7–99.1%) for HIV test positivity. Using a cutoff score of ≥ 2 the specificity was 55.8% (95% CI 52.7–58.9%) and sensitivity was 70.4% (95% CI 49.8–86.2%). At a cutoff score of ≥ 3 the specificity was 84.9% (95% CI 82.5–87.0%) and sensitivity was 55.6% (95% CI 35.3–74.5%)—and this is the optimal cutoff score based on the ROC (Fig. 1a) (partial area under ROC curve 0.70, 95% CI 0.61–0.80). The positive predictive value at the ≥ 3 item cutoff score is 8.9% and the negative predictive value is 98.6%.

Compared with the full tool at the same cutoff, the reduced screening tool had higher specificity at every cutoff but equal or lower sensitivity. At a score of ≥ 1, the specificity was 21.2% (95% CI 18.7–23.8%) and sensitivity was 92.6% (95% CI 75.7–99.1%) for HIV test positivity. At a score of ≥ 2, the specificity was 68.5% (95% CI 65.6–71.4%) and sensitivity 59.3% (95% CI 38.8–77.6%), with a positive predictive value of 4.8% and a negative predictive value of 98.4%; this is the optimal cutoff, with a partial area under the ROC curve of 0.64 (95% CI 0.54–0.74) (Fig. 1b).
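The sensitivity/specificity trade-off across cutoffs can be made concrete by tabulating the reported pairs. The cutoffs above were chosen from the ROC curve and its partial area; the Python sketch below instead swaps in Youden's J (sensitivity + specificity − 1), a common and simpler criterion, for illustration only. It uses only the cutoffs reported in the text (≥ 1 to ≥ 3 for the full tool, ≥ 1 to ≥ 2 for the reduced tool), so higher cutoffs are not considered.

```python
# Reported (sensitivity, specificity) pairs by cutoff, taken from the text above.
FULL_TOOL = {1: (0.926, 0.160), 2: (0.704, 0.558), 3: (0.556, 0.849)}
REDUCED_TOOL = {1: (0.926, 0.212), 2: (0.593, 0.685)}


def youden_j(sensitivity: float, specificity: float) -> float:
    """Youden's J statistic: sensitivity + specificity - 1."""
    return sensitivity + specificity - 1.0


def best_cutoff(table: dict[int, tuple[float, float]]) -> int:
    """Return the cutoff with the largest Youden's J among those supplied."""
    return max(table, key=lambda cut: youden_j(*table[cut]))


if __name__ == "__main__":
    for name, table in (("full", FULL_TOOL), ("reduced", REDUCED_TOOL)):
        for cut, (sens, spec) in sorted(table.items()):
            print(f"{name} >= {cut}: J = {youden_j(sens, spec):.3f}")
        print(f"{name}: best reported cutoff >= {best_cutoff(table)}")
```

Among the reported cutoffs, J happens to select the same cutoffs as the ROC analysis (≥ 3 for the full tool, ≥ 2 for the reduced tool). J weights sensitivity and specificity equally, however, whereas a program that prioritizes finding every case would weight sensitivity more heavily.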

The SOC screen—i.e., tested > 12 months ago or never, and/or presented with an STI—had a sensitivity of 77.8% (95% CI 57.7–91.4%) and a specificity of 47.8% (95% CI 44.7–50.9%). SOC screening had a positive predictive value of 3.8% (95% CI 2.4–5.8%) and a negative predictive value of 98.8% (95% CI 97.3–99.5%).

Discussion

We found that in OPD settings neither a tool with sexual behavior questions nor one excluding them performed better than the standard of care approach to HIV testing in Malawi, and we could not identify a set of questions that was both highly sensitive and highly specific. The full screening tool at an optimal score of ≥ 3 would achieve 55.6% sensitivity and 84.9% specificity for HIV positivity among adult outpatients in Malawi. The reduced tool at an optimal score of ≥ 2 would achieve 59.3% sensitivity and 68.5% specificity, while the standard of care (a “yes” to at least one of its two questions) would achieve 77.8% sensitivity and 47.8% specificity. Although standard of care screening had the highest sensitivity, it also had lower specificity than either the full or the reduced tool at their optimal cutoff scores. Similar to other literature, newly diagnosed HIV infection was highly correlated with having a suspected STI and ≥ 3 sexual partners in the past 12 months [33,34,35], although adding these questions did not improve screening tool performance.

Our findings highlight the challenging trade-offs in finding an approach that is efficient (i.e., high tool specificity, so that fewer people are tested) and still reaches the majority of unidentified individuals living with HIV (i.e., high tool sensitivity). Given the adult HIV prevalence in Malawi (8.9%) [32], the treatment-adjusted prevalence of 1.7% [36], and the fact that less than 23% of individuals living with HIV were undiagnosed at the time of our study [37], for each 1000 adult outpatients screened, the full screening tool at its optimal score (≥ 3) would result in 162 people being tested for HIV, 14 of whom would test positive, while 12 HIV-positive people among those not tested would be left undiagnosed. If 1000 people were screened using the reduced tool at its optimal score (≥ 2), 322 would undergo an HIV test, 15 would be diagnosed as HIV-positive, and 11 HIV-positive people would be left undiagnosed.
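The per-1000 projections above follow from applying each tool's sensitivity and specificity to a hypothetical cohort. In the Python sketch below, the prevalence among screened outpatients is an input that must be assumed. The paragraph's figures are reproduced (after rounding) with a prevalence of about 2.6%, the positivity observed in this sample (27/1038); that match is an inference, not a value stated in the text. The function and constant names are hypothetical.

```python
def expected_counts(sensitivity: float, specificity: float,
                    prevalence: float, n: int = 1000) -> dict[str, int]:
    """Expected screening outcomes for n people, rounded to whole people."""
    positives = n * prevalence
    negatives = n - positives
    true_pos = positives * sensitivity          # tested and HIV-positive
    false_pos = negatives * (1 - specificity)   # tested but HIV-negative
    missed = positives - true_pos               # HIV-positive but not tested
    return {
        "tested": round(true_pos + false_pos),
        "diagnosed": round(true_pos),
        "missed": round(missed),
    }


ASSUMED_PREVALENCE = 0.026  # assumption: sample positivity (27/1038)

TOOLS = {
    "full tool (>= 3)": (0.556, 0.849),
    "reduced tool (>= 2)": (0.593, 0.685),
    "standard of care": (0.778, 0.478),
}

if __name__ == "__main__":
    for name, (sens, spec) in TOOLS.items():
        print(name, expected_counts(sens, spec, ASSUMED_PREVALENCE))
```

Changing `ASSUMED_PREVALENCE` shows how strongly the number of people tested per case found depends on the prevalence among those screened.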

One aim of screening tools is to save resources by prioritizing who gets tested. To achieve this, tools must have high sensitivity and specificity because their implementation adds burden for facility staff who are already under significant constraints due to high patient volume and limited resources in OPD settings. Program data from other screening experiences among outpatients in Malawi indicate that multiple-question screening tools (with sexual behavior questions) require five to seven minutes to administer per person screened [38]. Screening tools that include questions about sexual behavior also require private space for implementation, as well as patient-provider trust in order to promote open, honest responses from outpatients [22, 23].

Screening tool performance is affected by underlying prevalence: in low-prevalence settings, a larger share of positive screens are false positives, which lowers the tool's positive predictive value. Therefore, in contexts with controlled or near-controlled epidemics, it may be particularly challenging to identify adult HIV screening tools with specificity high enough to offer efficiencies over standard of care and sensitivity high enough to capture most infected individuals. A recent paper analyzed the performance of a similar adult outpatient screening tool in Kenya and found that the optimal set of questions (which included both demographic characteristics and sexual risk behavior questions) would reduce the number of people requiring testing by 75% but would miss approximately half of HIV-positive individuals [33]. Taken together with our findings from Malawi, it is evident that screening tools are not an optimal solution for HIV testing in outpatient departments. As local epidemics evolve, multi-pronged approaches will be increasingly necessary to find the relatively small number of people with undiagnosed HIV.
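The dependence of predictive values on prevalence follows from Bayes' rule. The Python sketch below holds the full tool's reported sensitivity and specificity (cutoff ≥ 3) fixed and varies prevalence across figures mentioned in this paper (8.9% adult prevalence, 2.6% sample positivity, 1.7% treatment-adjusted prevalence). Applying those population figures to an outpatient population is an illustrative assumption, and the function names are hypothetical.

```python
def ppv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value via Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


def npv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Negative predictive value via Bayes' rule."""
    true_neg = specificity * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    return true_neg / (true_neg + false_neg)


SENS, SPEC = 0.556, 0.849  # full tool, cutoff >= 3 (reported in the Results)

if __name__ == "__main__":
    for prev in (0.089, 0.026, 0.017):
        print(f"prevalence {prev:.1%}: PPV {ppv(SENS, SPEC, prev):.1%}, "
              f"NPV {npv(SENS, SPEC, prev):.1%}")
```

With sensitivity and specificity held fixed, PPV falls as prevalence falls, so a growing share of the people flagged for testing are HIV-negative.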

While screening tools for adults in OPD settings have suboptimal performance, screening tools for pediatric HIV testing in sub-Saharan African OPDs show more promise, with sensitivity of approximately 71–92% and specificity of approximately 32–88% [39]. Pediatric tools may perform better than adult tools in OPD settings because questions related to vertical transmission risk are easier to include in a screening tool than questions about adults’ horizontal acquisition risk factors, which may encompass a range of sensitive behaviors and exposures. Additionally, the physical manifestations of HIV infection, such as frequent infections and failure to thrive, may be easier to detect in children than in adults [40]. Even so, the highest-performing pediatric HIV screening tool identified in a recent systematic review (with sensitivity and specificity ≥ 95%) was quite complex and may be difficult to take to scale. The tool incorporated 17 questions that included locally relevant risk factors for HIV status, such as the father’s occupation, location, and health status [39], suggesting that deploying optimal screening tools, even for pediatrics, requires substantial resources, including dedicated and trained personnel to administer lengthy questionnaires.

Without a well-performing screening tool, what strategies can increase HIV testing efficiency in OPD settings? Facility HIV self-testing can test many adults without additional personnel or infrastructure [25]. HIV self-testing is effective and can be cost-saving for identifying newly diagnosed adults in OPDs compared with standard blood-based testing [41], but it requires procurement and distribution of a high volume of test kits, which may be challenging in low-resource health systems. Other options might be facility-based testing “campaigns” or targeted periods of concentrated testing aimed at achieving high coverage with consolidated resources. Reaching the remaining unidentified individuals living with HIV will likely require combining sustained OPD testing with higher-yield testing strategies, such as index partner testing, leveraging the social networks of high-risk populations, and providing work-based testing for men in higher-risk occupations [8,9,10]. Programs and policymakers should also consider whether comprehensive HIV testing using SOC in OPDs, despite growing inefficiency and cost as the HIV epidemic evolves, is simply a necessary cost of sustaining control of a generalized epidemic.

This study has some limitations. We performed a post-hoc analysis of a “constructed” hypothetical screening tool based on questions from an exit survey collected as part of a large trial of HIV self-testing. Some key risk factors that may be predictive of HIV positivity among adults, such as region of residence, number of lifetime partners, household wealth, migratory labor, and suspected tuberculosis [42,43,44,45,46], were not included in the parent study survey tool and so could not be analyzed here. There may have been selection bias in participation in the parent study (those who opted to use the HIV self-test), and there may have been response bias in answers to the exit survey questions, especially those about sexual behavior. For example, people may have answered questions about their risk behaviors in light of their HIV status, as diagnosed just prior to data collection. Additionally, because the survey was administered at the conclusion of each person’s clinical encounter, their experience at the facility that day, including learning their HIV status for those newly diagnosed, may have affected their responses to survey questions. Lastly, the scoring algorithm used here is simplistic (summing dichotomous values) and does not account for the relative importance of different factors, for example through a weighted score. Given the small number of HIV cases in this population and our interest in identifying a screening tool that could be easily implemented in a busy OPD setting, we pursued this less complex approach when designing the screening tool. However, a more nuanced clinical prediction tool that scores each factor by the strength of its association with the outcome is worthy of further study, particularly in larger populations with higher underlying HIV prevalence.

Conclusions

While we found screening questions that were highly correlated with HIV status, the screening tools (full or reduced) did not offer compelling advantages in efficiency or feasibility over the SOC. These findings, together with the added burden and complexity of introducing an adult screening tool in limited-resource OPD settings and the large heterogeneity in health facility and epidemiological contexts within countries, call into question the use of screening tools for improving the efficiency of HIV testing in OPDs. While identification of an effective screening tool would represent a needed step forward in fighting the HIV epidemic, this study highlights the need for rigorous evidence before introducing such an approach at scale.

Change history

12 September 2021

A Correction to this paper has been published: https://doi.org/10.1007/s10461-021-03458-8

References

UNAIDS. Understanding Fast-Track: accelerating action to end the AIDS epidemic by 2030. Geneva: Joint United Nations Programme on HIV/AIDS; 2015.

UNAIDS. AIDSInfo 2020

UNAIDS. Data Report 2019. Geneva. 2019.

WHO. Consolidated guidelines on HIV Testing Services for a changing epidemic. Geneva: WHO; 2019.

Dwyer-Lindgren L, Cork MA, Sligar A, Steuben KM, Wilson KF, Provost NR, et al. Mapping HIV prevalence in sub-Saharan Africa between 2000 and 2017. Nature. 2019;570(7760):189–93.

Remme M, Siapka M, Sterck O, Ncube M, Watts C, Vassall A. Financing the HIV response in sub-Saharan Africa from domestic sources: moving beyond a normative approach. Soc Sci Med. 2016;169:66–76.

Granich R, Williams B, Montaner J, Zuniga JM. 90–90–90 and ending AIDS: necessary and feasible. Lancet. 2017;390(10092):341–3.

Sharma M, Ying R, Tarr G, Barnabas R. Systematic review and meta-analysis of community and facility-based HIV testing to address linkage to care gaps in sub-Saharan Africa. Nature. 2015;528(7580):S77–85.

Dave S, Peter T, Fogarty C, Karatzas N, Belinsky N, PantPai N. Which community-based HIV initiatives are effective in achieving UNAIDS 90–90–90 targets? A systematic review and meta-analysis of evidence (2007–2018). PLoS ONE. 2019;14(7):e0219826.

Lillie TA, Persaud NE, DiCarlo MC, Gashobotse D, Kamali DR, Cheron M, et al. Reaching the unreached: performance of an enhanced peer outreach approach to identify new HIV cases among female sex workers and men who have sex with men in HIV programs in West and Central Africa. PLoS ONE. 2019;14(4):e0213743.

Opito R, Nanfuka M, Mugenyi L, Etukoit MB, Mugisha K, Opendi L, et al. A case of TASO tororo surge strategy: using double layered screening to increase the rate of identification of new HIV positive clients in the community. Science. 2019;5(1):19–25.

Lowenthal E, Lawler K, Harari N, Moamogwe L, Masunge J, Masedi M, et al. Validation of the pediatric symptom checklist in HIV-infected Batswana. J Child Adolesc Ment Health. 2011;23(1):17–28.

Odafe S, Onotu D, Fagbamigbe JO, Ene U, Rivadeneira E, Carpenter D, et al. Increasing pediatric HIV testing positivity rates through focused testing in high-yield points of service in health facilities—Nigeria, 2016–2017. PLoS ONE. 2020;15(6):e0234717.

De Cock KM, Barker JL, Baggaley R, El Sadr WM. Where are the positives? HIV testing in sub-Saharan Africa in the era of test and treat. AIDS. 2019;33(2):349–52.

Dovel K, Balakasi K, Gupta S, Mphande M, Robson I, Kalande P, et al. Missing men or missed opportunity? Men’s frequent use of health services in Malawi. In: International AIDS Conference; San Francisco, CA. 2020.

Yeatman S, Chamberlin S, Dovel K. Women’s (health) work: a population-based, cross-sectional study of gender differences in time spent seeking health care in Malawi. PLoS ONE. 2018. https://doi.org/10.1371/journal.pone.0209586.

Sanga ES, Lerebo W, Mushi AK, Clowes P, Olomi W, Maboko L, et al. Linkage into care among newly diagnosed HIV-positive individuals tested through outreach and facility-based HIV testing models in Mbeya, Tanzania: a prospective mixed-method cohort study. BMJ Open. 2017;7(4):e013733.

Maughan-Brown B, Beckett S, Kharsany AB, Cawood C, Khanyile D, Lewis L, et al. Poor rates of linkage to HIV care and uptake of treatment after home-based HIV testing among newly diagnosed 15-to-49 year-old men and women in a high HIV prevalence setting in South Africa. AIDS Care. 2020. https://doi.org/10.1080/09540121.2020.1719025.

Mabuto T, Hansoti B, Charalambous S, Hoffmann C. Understanding the dynamics of HIV testing services in South African primary care facilities: project SOAR results brief. Washington, DC: Population Council; 2018.

Roura M, Watson-Jones D, Kahawita TM, Ferguson L, Ross DA. Provider-initiated testing and counselling programmes in sub-Saharan Africa: a systematic review of their operational implementation. AIDS. 2013;27(4):617–26.

Giguère K, Eaton JW, Marsh K, Johnson LF, Johnson CC, Ehui E, et al. Trends in knowledge of HIV status and efficiency of HIV testing services in sub-Saharan Africa, 2000–20: a modelling study using survey and HIV testing programme data. Lancet HIV. 2021;5:e688.

Niforatos JD, Nowacki AS, Cavendish J, Gripshover BM, Yax JA. Emergency provider documentation of sexual health risk factors and its association with HIV testing: a retrospective cohort study. Am J Emerg Med. 2019;37(7):1365–7.

Haukoos JS, Lyons MS, Lindsell CJ, Hopkins E, Bender B, Rothman RE, et al. Derivation and validation of the Denver Human Immunodeficiency Virus (HIV) risk score for targeted HIV screening. Am J Epidemiol. 2012;175(8):838–46.

Dovel K, Shaba F, Nyirenda M, Offorjebe OA, Balakasi K, Phiri K, et al. Evaluating the integration of HIV self-testing into low-resource health systems: study protocol for a cluster-randomized control trial from EQUIP Innovations. Trials. 2018;19(1):498.

Dovel K, Nyirenda M, Shaba F, Offorjebe A, Balakasi K, Nichols B, et al. Facility-based HIV self-testing for outpatients dramatically increases HIV testing in Malawi: a cluster randomized trial. Lancet Global Health. 2018;21:28.

Ward H, Rönn M. The contribution of STIs to the sexual transmission of HIV. Curr Opin HIV AIDS. 2010;5(4):305.

Rutstein SE, Ananworanich J, Fidler S, Johnson C, Sanders EJ, Sued O, et al. Clinical and public health implications of acute and early HIV detection and treatment: a scoping review. J Int AIDS Soc. 2017;20(1):21579.

Dovel K, Dworkin SL, Cornell M, Coates TJ, Yeatman S. Gendered health institutions: examining the organization of health services and men’s use of HIV testing in Malawi. J Int AIDS Soc. 2020;23:e25517.

Drammeh B, Medley A, Dale H, De AK, Diekman S, Yee R, et al. Sex differences in HIV testing—20 PEPFAR-supported sub-Saharan African Countries, 2019. Morb Mortal Wkly Rep. 2020;69(48):1801.

Merzouki A, Styles A, Estill J, Orel E, Baranczuk Z, Petrie K, et al. Identifying groups of people with similar sociobehavioural characteristics in Malawi to inform HIV interventions: a latent class analysis. J Int AIDS Soc. 2020;23(9):e25615.

Lightfoot M, Dunbar M, Weiser SD. Reducing undiagnosed HIV infection among adolescents in sub-Saharan Africa: provider-initiated and opt-out testing are not enough. PLoS Med. 2017;14(7):e1002361.

UNAIDS. Country Factsheet: Malawi HIV/AIDS estimates. 2019.

Muttai H, Guyah B, Musingila P, Achia T, Miruka F, Wanjohi S, et al. Development and validation of a sociodemographic and behavioral characteristics-based risk-score algorithm for targeting HIV testing among adults in Kenya. AIDS Behav. 2020. https://doi.org/10.1007/s10461-020-02962-7.

Chen L, Jha P, Stirling B, Sgaier SK, Daid T, Kaul R, et al. Sexual risk factors for HIV infection in early and advanced HIV epidemics in sub-Saharan Africa: systematic overview of 68 epidemiological studies. PLoS ONE. 2007;2(10):e1001.

Rosenberg MS, Gómez-Olivé FX, Rohr JK, Houle BC, Kabudula CW, Wagner RG, et al. Sexual behaviors and HIV status: a population-based study among older adults in rural South Africa. J Acquir Immune Defic Syndr (1999). 2017;74(1):e9.

HIV Testing Services Dashboard. In: World Health Organization, editor. 2021.

Ministry of Health Social Welfare Tanzania, Ministry of Health Zanzibar, National Bureau of Statistics Tanzania, Office of Chief Government Statistician Tanzania, ICF International. Tanzania Service Provision Assessment Survey 2014–2015. Dar es Salaam, Tanzania: MoHSW/Tanzania, MoH/Tanzania, NBS/Tanzania, OCGS/Tanzania, and ICF International. 2016.

PEPFAR. Implementing Partners Strategic Meeting. Malawi. 2019.

Clemens SL, Macneal KD, Alons CL, Cohn JE. Screening algorithms to reduce burden of pediatric HIV testing. Pediatr Infect Dis J. 2020. https://doi.org/10.1097/INF.0000000000002715.

Lowenthal ED, Bakeera-Kitaka S, Marukutira T, Chapman J, Goldrath K, Ferrand RA. Perinatally acquired HIV infection in adolescents from sub-Saharan Africa: a review of emerging challenges. Lancet Infect Dis. 2014;14(7):627–39.

Nichols B, Offorjebe OA, Cele R, Shaba F, Balakasi K, Chivwara M, et al. Economic evaluation of facility-based HIV self-testing among adult outpatients in Malawi. J Int AIDS Soc. 2020;23:e25612.

Nutor JJ, Duah HO, Agbadi P, Duodu PA, Gondwe KW. Spatial analysis of factors associated with HIV infection in Malawi: indicators for effective prevention. BMC Public Health. 2020;20(1):1–14.

Nliwasa M, MacPherson P, Gupta-Wright A, Mwapasa M, Horton K, Odland JØ, et al. High HIV and active tuberculosis prevalence and increased mortality risk in adults with symptoms of TB: a systematic review and meta-analyses. J Int AIDS Soc. 2018;21(7):e25162.

Nliwasa M, MacPherson P, Mukaka M, Mdolo A, Mwapasa M, Kaswaswa K, et al. High mortality and prevalence of HIV and tuberculosis in adults with chronic cough in Malawi: a cohort study. Int J Tuberc Lung Dis. 2016;20(2):202–10.

Anglewicz P, VanLandingham M, Manda-Taylor L, Kohler H-P. Migration and HIV infection in Malawi: a population-based longitudinal study. AIDS (Lond, Engl). 2016;30(13):2099.

Camlin CS, Akullian A, Neilands TB, Getahun M, Bershteyn A, Ssali S, et al. Gendered dimensions of population mobility associated with HIV across three epidemics in rural Eastern Africa. Health Place. 2019;57:339–51.

Acknowledgements

We are grateful to the Partners in Hope programs team and facility staff who gave their time and insights throughout the study. We thank Tanya Shewchuk and Naoko Doi for input on data interpretation, and Eric Lungu for continued support around data cleaning and data coding.

Funding

This study was supported by the United States Agency for International Development (Cooperative Agreement AID-OAA-A-15-00070). CM receives support from the Center for HIV Identification, Prevention, and Treatment (CHIPTS; NIMH Grant MH58107) and the National Center for Advancing Translational Sciences (NCATS Grant KL2TR001882). KD receives support from the Fogarty International Center (Grant K01TW011484) and the Bill and Melinda Gates Foundation (Grant 001423). KD and RH receive support from UCLA CFAR (Grant AI028697). The views in this publication do not necessarily reflect the views of the U.S. Agency for International Development (USAID), the U.S. President’s Emergency Plan for AIDS Relief (PEPFAR), or the United States Government.

Author information

Contributions

KD and RH led the parent trial. KD designed this sub-analysis. CM led this analysis, and all authors provided input on the interpretation of findings. CM wrote the first draft of the paper, with significant input from KD. All authors provided edits and approved the final version for submission.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interest to disclose.

Ethical Approval

The parent trial, including the exit survey methodology and instrument, received ethical approval from the Malawi National Health Sciences Review Committee and the University of California, Los Angeles Institutional Review Board.

Informed Consent

All participants gave oral informed consent before engaging in this study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised: an error in the abstract caused text to be omitted after “... tools that include questions about sexual behavior and use of health services”. The missing text was: “We compare a full tool (seven relevant questions) to a reduced tool (five questions, excluding sexual behavior measures) and to standard of care (two questions, never tested for HIV or tested > 12 months ago, or seeking care for suspected STI)”.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Moucheraud, C., Hoffman, R.M., Balakasi, K. et al. Screening Adults for HIV Testing in the Outpatient Department: An Assessment of Tool Performance in Malawi. AIDS Behav 26, 478–486 (2022). https://doi.org/10.1007/s10461-021-03404-8

DOI: https://doi.org/10.1007/s10461-021-03404-8