Abstract

We review the materials science applications of the nested sampling (NS) method, which was originally conceived for calculating the evidence in Bayesian inference. We describe how NS can be adapted to sample the potential energy surface (PES) of atomistic systems, providing a straightforward approximation for the partition function and allowing the evaluation of thermodynamic variables at arbitrary temperatures. After an overview of the basic method, we describe a number of extensions, including using variable cells for constant pressure sampling, the semi-grand-canonical approach for multicomponent systems, parallelizing the algorithm, and visualizing the results. We cover the range of materials applications of NS from the past decade, from exploring the PES of Lennard–Jones clusters to that of multicomponent condensed phase systems. We highlight examples how the information gained via NS promotes the understanding of materials properties through a novel way of visualizing the PES, identifying thermodynamically relevant basins, and calculating the entire pressure–temperature(–composition) phase diagram.

Graphic abstract

Similar content being viewed by others

1 Introduction

The potential energy surface (PES) describes the interaction energy of a system of particles as a function of the spatial arrangement of atoms and, within the Born–Oppenheimer approximation, contains all structural and mechanical information—both microscopic and macroscopic—about the system. [1] Its global minimum corresponds to the ground-state structure, while the usually numerous local minima are other stable or metastable configurations linked to each other by transition states, which determine the pathways between these different structures, along with the transformation mechanisms. An alternative, equilibrium statistical mechanics view of the PES is given by the free energy of various phases, which quantifies the interplay between energetic factors, which favor lower potential energy, and entropic factors, which favor a large configuration space volume accessible to each phase. Those specific regions of the PES where the number of available configurations dramatically decreases as temperature decreases correspond to phase transitions, such as condensation and freezing. The description and understanding of these properties of the PES underpin a wide range of research areas, from interpreting dynamic processes in reaction chemistry, protein folding, and studying supercooled liquids to understanding the microscopic details of phase transitions.

Computer simulations have become an essential tool in exploring the PES, providing both thermodynamic information and an atomic level insight into materials properties. A plethora of computational methods have been developed, but most such techniques target only a particular part or aspect of the landscape, or are optimised to map only certain properties of complex landscapes. Global optimization methods focus on building a database of minima to find the lowest energy structure. These include basin hopping [2], genetic algorithms [3, 4], minima hopping [5] and simulated annealing [6] with adaptive cooling rates [7], as well as dedicated crystal structure prediction tools, such as AIRSS [8, 9], USPEX [10, 11], and CALYPSO [12, 13]. These techniques enable the exploration of the hitherto unknown basins of the PES, and have already lead to the discovery of novel phases of a range of materials [8, 9, 14]. However, while the dimensionality of the PES scales linearly with the number of atoms, the available configuration space volume scales exponentially with this number. It is also commonly thought that the number of local minima scales exponentially as well [15], which can dramatically increases the computational cost, rendering it impossible to perform an exhaustive search of all potential minima basins of even moderately complex systems.

Temperature–enthalpy curve (purple, enthalpy decreases from left to right) of lithium modelled by the embedded atom model (EAM) of Nichol and Ackland [27] at 14 MPa showing both the vaporisation (2450 K, – 0.3 to – 0.9 eV) and melting (700 K, – 1.40 to – 1.45 eV) transitions. Dashed lines illustrate the difference between the series of sampling levels for parallel tempering (left panel), Wang–Landau sampling (middle panel), and nested sampling (right panel). In the case of NS, these are equidistant in \(\ln \Gamma \) (for clarity, only one out of every 20,000 iterations). The density of NS levels as a function of enthalpy (blue, right y-axis, right panel only) shows the general increase in sampling density as enthalpy decreases, superposed with small amplitude peaks localized in the two phase transition ranges. Red arrows illustrate the direction of the sampling’s progress; purple dots are guide to the eye. The enthalpy curve was calculated with NS of 64 atoms and \(K=648\)

The physical behaviour of materials is often dominated by entropic effects, and the calculation of free energies requires sampling over vast regions of the PES instead of concentrating only on the minima structures. Temperature-accelerated dynamics [16], samples rare events and umbrella sampling [17], and metadynamics [18] enable the evaluation of relative free energies. A range of methods have been specifically developed to study phase transitions: Gibbs-ensemble Monte Carlo method to study the boiling curve [19], two-phase coexistence methods and the multithermal-multibaric approach [20] to determine the melting line, or thermodynamic integration and lattice-switch Monte Carlo [21] to pinpoint solid–solid transitions. Apart from these often being highly specific, with most of them optimal only for a single type of transition, if a solid phase is involved, they also require an advance knowledge of the corresponding crystalline structures, limiting their predictive power to exploring known phases.

Although all of the techniques mentioned above provide important information about different segments of the landscape, we can see that gaining a broader overview of the entire PES with these would be a challenging and highly laborious task. There are very few techniques that allow the unbiased sampling of large regions of the PES without prior knowledge of stable structures or estimated location of phase transitions. The two most widely used are parallel tempering [22, 23] and Wang–Landau sampling [24, 25]. However, these methods also face general challenges, illustrated in Fig. 1, demonstrating the location of sampling levels around first-order phase transitions, where the enthalpy of the system changes rapidly with temperature. Parallel tempering samples the PES at fixed temperatures (Fig. 1, left panel). The overlap between the distributions of energies and their corresponding atomic configurations at temperatures just above and just below a phase transition is very small, vanishing in the thermodynamic limit, due to the entropy jump. It is well understood that this makes equilibration of samplers that are in two different phases especially difficult [26]. Wang–Landau sampling (middle panel) is done on energy levels constructed to be equispaced. Although this provides a much better sampling of first-order phase transitions, the appropriate sampling levels still have to be determined manually.

The nested sampling (NS) scheme, introduced by Skilling [28,29,30], can overcome this challenge of equilibration by automatically creating, using a single top–down pass, a series of energy levels equispaced in \(\ln \Gamma \), where \(\Gamma \) is the configuration space volume accessible below each energy. As shown in the right-hand panel of Fig. 1, this means that sampling levels are much denser at lower energies or enthalpies, where they precisely sample small energy differences relevant for low temperatures, and also slightly denser as the method goes through each phase transition. In this work, we review how the nested sampling technique can be adapted to sample the PES of atomic systems, describe its advantages, and illustrate how thermodynamic and structural information can be extracted from the results, with examples from several different applications.

2 The nested sampling method

Nested sampling was introduced by Skilling [28,29,30] in the field of applied probability and inference, to sample sharply peaked probability densities in high-dimensional spaces. The algorithm can provide both posterior samples and an estimate of the Bayesian evidence (marginal likelihood), Z, of a model, as

where L is the likelihood function.

The NS technique has been quickly taken up in the field of astrophysics [31,32,33,34,35] and gravitational wave data analysis [36, 37], and has gradually been adapted to explore the parameter space in a wide range of disciplines, such as data analysis [38], signal processing [39], phylogenetics [40], and systems biology [41, 42].

The above integral also naturally translates to materials science problems. Sampling the 3N-dimensional configuration space of a system of N particles is the high-dimensional space, and the likelihood is given by the probability of microstates, which is proportional to the Boltzmann factor. Thermodynamic quantities depend on the PES through the canonical partition function of the system

where \(\beta \) is the inverse thermodynamic temperature, E is an energy, enthalpy, or related quantity, \({\mathbf {x}}\) and \({\mathbf {p}}\) are the positions and momenta, respectively, and the integral is carried out over the microstates of the system. To relate this expression to Eq. 1, it can be written in terms of an integral over E weighted by the derivative of the cumulative density of microstates \(\Gamma (E)\), as

up to an overall constant factor. If the energy can be separated into a sum of a position-dependent potential and a momentum-dependent kinetic contribution, the momentum-dependent partition function \(Z_p(\beta )\) can be factored out, and the integrand remains unchanged if \(\Gamma (E)\) and E are understood to mean only the position-dependent parts. If samples from \(\Gamma (E)\) are available at a set of energies \({E_i}\) in decreasing order, this equation can be approximated as

where \(w_i = \Gamma (E_{i-1})-\Gamma (E_{i})\) is the configuration space weight associated with each sample. Many thermodynamic properties can be related to simple functions of the partition function, including the free energy (its logarithm), internal energy (its first derivative with respect to the inverse thermodynamic temperature), and specific heat (its second derivative). Analogous approximations for these quantities can be derived by analytically applying the operations to each term in the sum. If the configurations corresponding to each energy \({\mathbf {x}}_i\) are available, expectation values of arbitrary position-dependent properties can also be evaluated from

where \(A({\mathbf {x}})\) is the value of the property for the specified positions. The fundamental modelling assumption underlying this approximation is that samples that are well suited to estimating the cumulative density of states \(\Gamma (E)\) are also well suited for estimating other observables.

Nested sampling addresses the problem of finding a suitable set of sample points and associated weights for estimating the above integrals. There is a large degree of efficiency to be gained by coarsely sampling parts of phase space which contribute very little to the overall sum, i.e., those with relatively high energy, and conversely, by refining the sampling in those—exponentially small—parts of phase space where the energy is low.

2.1 The iterative algorithm

The basic NS algorithm applied to sampling the potential energy landscape of atomistic systems is illustrated in Fig. 2. The sampling is initialised by generating a pool of K uniformly distributed (in \({\mathbf {x}}\) space) random configurations, often referred to as the ‘live set’ or ‘walkers’. These represent the “top” of the PES, the high-energy gas-like configurations, since that phase inevitably dominates a truly uniform sampling due to its large configuration space volume. Then, the following iteration is performed, starting the loop at \(i=1\):

-

1.

Record the energy of the sample with the highest energy as \(U_i\), and use it as the new energy limit, \(U_\mathrm {limit} \leftarrow U_i\). The corresponding phase-space volume for \(U < U_\mathrm {limit}\) is \(\Gamma _i=\Gamma _0 [K/(K+1)]^i\).

-

2.

Remove the sample with energy \(U_i\) from the pool of the walkers and generate a new configuration uniformly random in the configuration space, subject to the constraint that its energy is less than \(U_\mathrm {limit}\).

-

3.

Let \(i\leftarrow i+1\) and iterate from step 1.

This iteration generates a sequence of samples \({\mathbf {x}}_i\), corresponding energies \(U_i = U({\mathbf {x}}_i)\), and weights \(w_i\) given by the differences between each sample’s volume and the volume of the next sample \(\Gamma _0 \big ( [K/(K+1)]^i - [K/(K+1)]^{i+1}\big )\), which together can be used to evaluated thermodynamic functions and other observables using Eqs. 4 and 5.

Illustration of the nested sampling algorithm, showing several steps of the iteration. Panels on the left-hand side represent the PES (the vertical axis being the potential energy, while the other two axes representing the phase-space volume). Black dots on the landscape demonstrate the members of the live set (in this illustration \(K=9\)) uniformly distributed in the allowed phase space—corresponding atomic configurations (assuming constant volume sampling) are shown in the right-hand side panels. In the middle two panels, the samples with the highest energy are shown by blue, with the corresponding energy contour illustrated by a dotted line

The NS process ensures that at each iteration, the pool of K samples is uniformly distributed in configuration space with energy \(U < U_\mathrm {limit}\). The finite sample size leads to a statistical error in \(\ln \Gamma _i\) , and also in the computed observables, that is asymptotically proportional to \(1/\sqrt{K}\), so any desired accuracy can be achieved by increasing K. Note that for any given K, the sequence of energies and phase volumes converges exponentially fast, and increasing K necessitates a new simulation from scratch.

It is important to note the absence of the temperature \(\beta \) from the actual sampling algorithm. Even without explicit dependence on the temperature, the sequence of configurations and weights generated by NS can be used to efficiently calculate expectation values with the Boltzmann weight. Within any one phase, the low-energy regions, which are exponentially emphasized by the Boltzmann factor, are well sampled by the NS algorithm’s configurations, which are exponentially concentrated (as a function of iteration) into the shrinking low-energy configuration space volume. Between thermodynamically stable phases, where the configuration space volume decreases a lot. The high overlap between successive samples eases equilibration, allowing the process to smoothly go from the typical high-temperature phase configuration distribution to the low-temperature phase distribution. Thus, the expectation value of any observable can be calculated at an arbitrary temperature during the postprocessing, simply by re-evaluating the partition function and expectation value with a different \(\beta \) over the same sample set, obviating the need to generate a new sample set specific to each desired temperature. The range of accessible temperatures is, however, limited by the range of energies sampled. As the temperature goes down, so do the relevant energies, and as a result, any given finite length run only includes useful information above some minimum temperature, which is proportional to the rate of change of \(U_\mathrm {limit}\) as a function of iteration at the end of the run.

2.2 Generating new sample configurations

The initial live set consists of randomly generated configurations, ensuring their uniform distribution in the phase space. But how can we practically maintain this requirement as the sampling progresses? As the configuration space shrinks exponentially with decreasing energy, naive rejection sampling—where uniformly distributed random configurations are proposed, and only accepted if their energy is below the current limit \(U_i < U_\mathrm {limit}\)—quickly becomes impractical, because essentially all proposals are rejected. Instead, we randomly select and clone an existing configuration from within the current live set, as a starting point for generating the new configuration. This cloned sample is then moved in configuration space long enough that we can treat it as an independent sample. A Monte Carlo procedure to reproduce the target distribution, namely uniform in configuration space below the limiting energy, simply consists of proposing moves that obey detailed balance (i.e., are reversible) and rejecting moves that exceed the energy maximum.

Illustration of different move types employed to decorrelate the cloned configuration. Changes of atomic position use one of single-atom MC steps (a1) or all-atom GMC moves (a2) or all-atom TE-HMC moves (a3). Changes of simulation cell use volume change (b), cell shear (c), and cell stretch (d) moves. Possible changes of atom types in multicomponent systems use swapping identities of different types (e), changing the type of a single atom in semi-grand-canonical moves (f), and changing the number of atoms by insertion or deletion in grand-canonical moves (g)

Various types of moves are needed to efficiently explore all of the relevant degrees of freedom, illustrated in Fig. 3. The most obvious is the motion of the atoms, which can be carried out with single-atom moves, but these are efficient only if the resulting energy change can be calculated in O(1) time. This is in principle true for all short-ranged interatomic potentials, although implementations to actually carry out this calculation efficiently are not necessarily available. Naive multiatom moves with sufficiently large displacements are hard to propose, since the associated energy change increases as the square root of the number of atoms. Instead, collective moves with reasonable displacements and acceptance rates can be generated by Galilean Monte Carlo (GMC) [43, 44], where the entire configuration is moved in straight line segments along a direction in the full 3N-dimensional space, reflecting specularly from the boundary of the allowed energy range, and accepted or rejected based on the final energy after a pre-determined number of steps \(n_\mathrm {steps}\). A qualitatively different option is to use total energy Hamiltonian Monte Carlo (TE-HMC), where a velocity and corresponding kinetic energy is assigned to each atom (not necessarily using the physical atomic masses), and the total energy including both potential and kinetic terms is subject to the NS limit. In this case short, fixed-length, constant energy, constant cell, molecular dynamics (MD) trajectories are used to propose collective moves, which are once again accepted or rejected based on their final total energy [45]. This approach can propose large-displacement collective moves with very high probability of acceptance. However, the probability distribution of the spatial degrees of freedom of the live set can become bimodal near phase transitions, with one peak dominating above (in potential energy and/or temperature) and another peak below, negating many of the advantages of NS which depend on a high overlap of the distribution between sequential iterations.

In single-component non-periodic systems, atomic position moves are sufficient to explore configuration space. In periodic systems under constant pressure, however, additional moves associated with the periodicity (represented by the periodic cell vectors) are also required, and the quantity of interest is no longer the potential energy but rather the enthalpy, \(U+PV\), where V is the simulated system volume and P is an applied pressure. Note that this pressure has to be greater than 0, since without the PV enthalpy contribution, the increased configuration space volume available to particles in an increasingly large cell would give infinite entropy, overcoming any finite interaction energy gained by condensation and the system would always remain in the gas phase. The volume (and more generally the periodic cell shape) of the system becomes an output of the NS simulation, and its expectation value as a function of temperature must be evaluated in postprocessing. Monte Carlo moves associated with the cell can be separated into two categories—those that change the volume, and those that do not. The former consists of uniform scaling moves, and must reproduce the correct probability distribution which is proportional to \(V^N\). This is enforced by proposing isotropic rescaling moves that take the cell volume from its current value to a new value that is uniformly distributed within some small range. These proposed moves are filtered by a rejection sampling procedure to produce a probability proportional to \(V^N\) before the final acceptance or rejection by the deformed cell energy. Additional volume-preserving simple shear (off-diagonal deformation gradient) and stretch (diagonal deformation gradient) moves are also proposed with a uniform distribution in strain. However, it can be shown that simulation cells that are anisotropic (long in some directions and short in the others) dominate the configuration space [46, 47]. At early iterations and high energies, where the system is disordered and interatomic interactions are relatively unimportant, this does not significantly affect the sampled energies. At later iterations and lower energies, where the system mainly samples a crystalline lattice, such anisotropic cells prevent positions fluctuations that vary along the short directions, equivalent to restricting the sampling of phonons along those directions to only very short wavelengths. Because in a crystal, the periodic cell directions must be compatible with integer numbers of atomic layers (Fig. 4), it is difficult for the system to vary the cell shape, and the samples can become trapped in very anisotropic cells that are favored in earlier iterations. To prevent this, a minimum cell height criterion can be added, with an optimal value that minimizes effects on the free energy differences between the disordered and ordered systems [46, 47].

Illustration of the effect of flexible simulation cell. a Demonstrates the continuous change of the cell ratios allowed in case of disordered system; b shows the discrete set of possible cells that accommodate integer numbers of layers, both on the 2D orthogonal example. c Shows the convergence of heat capacity with respect of the minimum height ratio of the simulation cell, demonstrated in case of the periodic system of 64 atoms modelled by the Lennard–Jones potential at \(p=0.064~\epsilon /\sigma ^3\) [47, 48]. Coloured rectangles serve as the legend, illustrating the allowed distortion in 2D. The smaller the minimum height ratio, \(h_{\mathrm {min}}\), the more flat the cell can become. The peaks at lower temperature correspond to the melting transition, and this can be seen converged for the minimum height ratio to be at least 0.65. The higher temperature peak corresponds to the vaporisation and this requires 0.35 for convergence

It is also possible to carry out the NS iteration with a uniform probability density in cell volume, which removes the need for the special volume move rejection sampling step. In this case, all expectation values computed by postprocessing of the NS trajectory must take into account the factor of \(V^N\) associated with the configuration that led to each energy in the sequence. We have found empirically that without the bias to large cell volume caused by the \(V^N\) probability density during sampling, even with \(P=0\), the NS trajectory samples a wide range of cell volumes. The resulting trajectories can be used to evaluate expectation values at a range of pressures by adding the PV term to the enthalpy in the postprocessing expressions. The expected distribution of volumes is Gaussian, and deviations from this distribution in the postprocessing weights indicate that the range of volumes is not compatible with the pressure.

For single-component systems, atomic position and cell steps are sufficient, but this is not necessarily the case for systems with multiple chemical species. Especially in solid phases and at low temperatures, it is nearly impossible for atoms to move between lattice sites, even if they can vibrate about their equilibrium positions. To speed up the exploration of chemical order (especially important for systems that undergo solid-state order-disorder transitions), one can add atom swap moves, switching the chemical species of a randomly selected pair of atoms, and accepting or rejecting based on the final energy. If inter-atom correlations are important, it may be useful to propose moves jointly swapping the species of compact clusters of atoms, but this has not appeared to be necessary so far. While atom swap moves are sufficient for constant composition simulations, it is also possible to vary the composition in a semi-grand-canonical (s-GC) ensemble, where the total number of particles is fixed, but not the number of any particular chemical species [49, 50]. In this case, the energy or enthalpy is augmented by a chemical potential term \(\sum _i n_i \mu _i\), where i indicates chemical species, \(n_i\) is the number of atoms of species i, and \(\mu _i\) is its applied chemical potential. Since only relative energies are meaningful, the absolute chemical potentials are irrelevant, and only a set of \(N_\mathrm {species}-1\) differences \(\Delta \mu _{1j}\) sufficient to fix all chemical potentials up to an overall shift is needed. Similarly to the case of volume for constant pressure NS, for s-GC simulations, the composition is a quantity that evolves during the NS iteration, and must be evaluated as a function of temperature by postprocessing. An example of such a phase diagram is given below in Sect. 3.2.

Note that conventional grand-canonical ensemble sampling has not been tried, at least for bulk systems, because it is unlikely to be efficient. If the particle numbers of all species can vary, the cell volume would have to be fixed to prevent a degeneracy with the total particle number. However, this would require density changes to be sampled entirely by changing the number of particles, which is difficult to do with appreciable acceptance probability in condensed systems. Since the number of particles is typically small, it would also discretize the possible densities in ways that may be incompatible with the material’s low-energy crystal periodicity. Varying the number of particles of only a subset of the atom types during sampling would not suffer from this issue, but has not yet been tested.

The MC trajectory that decorrelates a configuration initially generated by cloning a random remaining live point consists of a sequence of these types of moves. Single-atom moves or single short GMC or MD trajectories, individual cell volume, shear, and stretch moves, and individual atom swap and s-GC moves (as appropriate for the type of simulation) at fixed relative frequencies are randomly selected. Each step is accepted if the final configuration is below the current energy limit. These steps are performed until a desired MC walk length, typically defined in terms of the number of energy or force evaluations (\(n_\mathrm {steps}\) for GMC or MD, 1 for all other step types), believed to be sufficient to generate a new uniformly distributed configuration, is reached. To ensure efficient exploration, the sizes of the steps must be adjusted during the progress of the NS. For every fixed number of iterations, a series of pilot walks, each with only a single move type, is initiated to determine the optimal step size. Each pilot walk is repeated, adjusting the step size, until the acceptance rate is in a desired range, typically around 0.25–0.5. The quantity varied for single-atom steps is the step size, for GMC, it is the size of each step in the trajectory, and for MD, it is the integration time step (the number of steps for the latter two is fixed). For cell steps, the quantity varied is the volume or strain range. Atom swap and s-GC steps have no adjustable parameters. The resulting configurations are discarded, so these pilot walks do not contribute to creating new uniformly sampled configurations. This optimization is essential for efficient simulation, and optimal values vary substantially for different phases.

2.3 Parameters and performance

The computational cost of an NS run depends in a systematic way on the parameters of the simulation. For most chemically specific interatomic potentials (as opposed to toy models), the cost is dominated by the energy and force evaluations needed for the MC sampling that produces new uncorrelated samples from cloned samples that are initially identical. The total cost of the NS process is therefore proportional to the product of the cost for each evaluation, the number of evaluations per NS iteration, and the total number of iterations.

The choice of interatomic interaction model not only controls the cost for each evaluation, but also the physical or chemical meaning of the results. Typically, relatively fast potentials are used, but these often have two issues. One is that they make incorrect predictions for low-energy structures that have not been previously noticed, because they have not been used in the context of a method that thoroughly samples the PES to calculate converged equilibrium properties. The other is that parameter files made available for standard simulation codes often fail in parts of configuration space that NS samples, especially at early iterations and high energies, because such configurations were not considered relevant to any real applications during development. Nevertheless, these failures can produce artifacts in NS trajectories (e.g., atoms that come too close together and never dissociate), and must be corrected by, for example, smoothing or increasing core repulsion.

The number of evaluations for each new uncorrelated sample is proportional to an overall walk length parameter, L. This parameter needs to be large enough that each cloned configuration becomes uncorrelated with its source, and again samples the relevant configuration space uniformly. If it is not, the set of configurations may be stuck with only configurations relevant for high energies, and take too many iterations, or perhaps entirely fail, to find basins that are important at low energy. Such an error can result in underestimating the transition temperature, or failing to detect the transition at all.

The size of the live set K is another important factor in the accuracy of the results, determining the resolution with which the PES is mapped during the sampling. If it is too low, there will be systematic discretization errors, as well as noise, in the configuration space volume estimates and any quantities derived from them. A distinct problem caused by too small a live set is the likelihood of missing basins that are important late in the iteration process through a phenomenon called extinction. Any basins that are separated by barriers at some energy must be found, while the NS limiting energy is higher than the barrier, because later the cloning and MC walk process will not be able to reach them. However, even if there are samples in such an isolated basin when the limiting energy cuts it off from the other samples, there is the possibility that the number of samples will fluctuate to 0, in which case knowledge of that basin will be lost and cannot be rediscovered. If there are \(K_\mathrm{b}\) samples in a basin, its fluctuations will be of order \(\sqrt{K_\mathrm{b}}\), relative fluctuations will be of order \(1/\sqrt{K_\mathrm{b}}\), and the chance to fluctuate to zero will increase as K decreases. In the highly multimodal PES characteristic of materials systems, this is an important limit on the minimum necessary live set size. One approach to address this issue in bulk systems, diffusive NS, is discussed at the end of this section, and others that have been proposed for clusters, where the problem is even more frequent, are mentioned in the following section on applications to clusters.

The number of NS iterations is set by the minimum temperature that needs to be described, since the range of configurations relevant at each temperature is set by the balance between the decreasing configuration space volume and the increasing Boltzmann factor as iteration number increases and energy decreases. For fixed minimum temperature, the amount of compression in configuration space is fixed, so the number of iterations increases linearly with the number of walkers in the live set K. There is some evidence, as illustrated in Fig. 5, that with increasing K, the minimum sufficient L decreases, and vice versa, if K is large enough to allow sampling of all the relevant basins. This may be understood if the distance that the cloned configuration needs to diffuse in configuration space decreases as K increases, for example, if it only needs to be lost among the neighboring configurations, rather than fully explore the entire available space.

Constant pressure heat capacity around the melting transition, calculated for the periodic system of 64 LJ particles. Black lines show the converged result; purple lines illustrate the variation of independent NS runs of the same system, showing an estimate for the uncertainty. The number of walkers, K, is increased left to right, the length of the walk, L, is increased top to bottom. The total cost is the total number of energy evaluations performed, and is constant along diagonal panels—grey lines are guide to the eye

The scaling of the cost with the number of atoms N is more complicated. The first, trivial contribution is due to the cost of each energy or force evaluation, which scales at least linearly (for a localized interatomic potential) in the number of atoms. In addition, the configuration space volume ratio associated with a pair of phases scales with N, so that the number of iterations increases linearly. As a result, the overall scaling of computational cost with the number of atoms is at least \(O(N^2)\). Note also that only the increase with walk length L and the N-dependent cost for each evaluation are amenable to parallelization—costs associated with the number of iterations are inherently sequential, and cannot be offset by adding more parallel tasks if other limits apply (Sect. 2.4). In practice, we find that for good convergence, periodic solids require systems sizes of N = 32–256 atoms, walk lengths L of 100s to 1000s, and K = 500–5000 walkers. These result in \(10^5\) to \(10^7\) iterations being needed to reach the global minimum at temperatures below any structural phase transitions.

It also has to be noted that using system sizes mentioned above will inevitably cause a finite-size effect. First-order phase transitions will be smeared out in temperature, rather than being truly discontinuous. In addition, there is a systematic shift, usually underestimating the temperature of the vaporisation transition, and overestimating that of the melting transition. The magnitude of this effect varies depending on the studied system and pressure, but is generally between 3 and 8% if using 64 atoms [51, 52].

Since its introduction by Skilling, several modifications and improvements have been suggested to increase the efficiency of the sampling algorithm. A multilevel exploration of the original top–down algorithm, the diffusive nested sampling [53], has shown improved efficiency in exploring some high-dimensional functions. Introducing varying number of live points in dynamic nested sampling has shown improved efficiency in the distribution of samples [54].

The PES of atomistic systems is often very different in its features from the parameter spaces explored in other disciplines. Thus, these modifications to the technique are not always applicable, or offer only limited improvement. While in typical data analysis applications, a 30-dimensional function is considered to be high dimensional, the dimensionality of the atomistic PES is at least an order of magnitude larger. A potentially large number of local minima structures mean that the PES is often highly multimodal; moreover, the relative ratio of these modes is of the utmost significance as they correspond to phase transitions in the material. Finally, while calculating the partition function is the key advantage of NS, we are rarely interested in its value directly. Practically useful thermodynamic information is extracted from the sampling as its first or second derivative, which is found to converge much faster than the actual value of the partition function.

A conceptually very similar algorithm, the density of states partitioning method used by Do and Wheatley [55,56,57] can be regarded as an NS with using only a single walker which is allowed to revisit previously explored higher energy states, and reducing the phase-space volume by a constant factor of two at every sampling level. Their calculations also used known crystalline structures as starting configurations [58]. A further similar techniques has since been proposed, the Nonequilibrium Importance Sampling of Rotskoff and Vanden-Eijnden to calculate the density of states and Bayes factors, using nonequilibrium trajectories [59].

2.4 Parallelization

The fact that NS relies on a live set of many configurations might appear to suggest that parallelization would be straightforward, but, in fact, the naive algorithm is entirely sequential. One configuration at a time is eliminated and another is cloned, and all of the computational cost is in the MC trajectory to decorrelate the cloned configuration. Several modifications have been proposed to provide for some level of parallelism, including discarding and cloning multiple configurations [60,61,62], moving many live points by a small amount at each iteration [51], and combining multiple independent NS runs [39].

One approach is to eliminate multiple configurations with the highest energies at each NS iteration [61, 62]. In the same way that the highest energy configuration provides an estimate of the boundary of the \(K/(K+1)\) fraction of configuration space volume, the \(N_p\)’th highest configuration provides an estimate of the \((K-(N_p-1))/(K+1)\) fraction of configuration space volume. Since eliminating several configurations must be followed by creating the same number of new decorrelated configurations, the computational cost of the MC walks required can be parallelized over the \(N_p\) independent configurations. Overall, the fraction of configuration space removed at each NS iteration increases by a factor of \(N_p\), and the number of NS iterations to achieve a certain compression decreases by a factor of \(N_p\). This reduced number of iterations is compensated by the computational cost of each iteration increasing by a factor of \(N_p\), but that cost can be parallelized over \(N_p\) tasks. Unfortunately, the variance in the computed phase-space volume increases with the number of configurations eliminated at each NS iteration (Appendix B.2.a of Ref. [48]), increasing the amount of noise and limiting the extent of parallelization using this method.

Another approach is to apply the MC walk not only to the newly cloned configuration, but also to \(N_p-1\) other randomly selected configurations, where \(N_p\) is the number of parallel tasks [51]. This leads to fluctuations in the length of the MC walk each configuration experiences between when it is cloned (and identical to another configuration) and when it is eliminated (which is the only time its position or energy affect calculated properties). If at each NS iteration, each of \(N_p\) configuration experiences an MC trajectory that is \(L/N_p\) steps, the mean number of steps for eliminated configurations remains equal to L, although the variance of the walk length increases (Appendix B.2.b of Ref. [48]). Each of these shorter MC trajectories can be simulated by one of \(N_p\) parallel tasks. While there are no quantitative results for how much this variability affects computed properties, it appears empirically that the effect is small, and this parallelization method is most commonly used in NS for materials. The degree of parallelization is limited by the need to keep \(L/N_p > 1\), so that at least some MC steps are taken at each iteration. For GMC or TE-HMC, the ratio must be larger than the length of a single short trajectory, typically of order 10 GMC segments or MD timesteps. Each short trajectory must be performed in its entirety to maintain the coherence between steps that gives these methods the ability to propose large-amplitude moves with non-negligible acceptance probability. In practice, the ratio \(L/N_p\) must be kept significantly larger to preserve good load balance between the tasks and because in the highly parallel regime (\(N_p\) of order 100) parts of the process other than the MC trajectory generation become significant.

A final approach to parallelizing NS is to run multiple independent trajectories and combine them after they are complete [39]. The combination of \(N_p\) independent runs with \(K'\) live points each is approximately equivalent to a single \(N_p K'\) live point run. While this approach has been explored for other applications of NS, it has not been widely used in materials. A possible reason is that the multiplicity of minima in typical materials systems is extremely large, and as a result, a serious problem is presented by extinction, as described in the previous subsection. The scope for parallelism is therefore limited by the need to keep \(K'=K/N_p\) large enough to prevent extinction in any important minima. While it has not been carefully studied, it may that the smallest \(K'\) that sufficiently avoids extinction is already too computationally expensive to allow for multiple runs.

2.5 Analyzing the results

The energies, configuration space volumes, and cell volumes generated by NS are sufficient to carry out the weighted sum in Eq. 4 numerically to evaluate the partition function. More importantly, physically meaningful quantities such as the energy or enthalpy and its derivative, the specific heat, can be computed as a function of temperature from \(Z(\beta )\). Rapid changes in the energy as a function of temperature, corresponding to peaks in the specific heat, are associated with abrupt changes in structure. For bulk systems in the thermodynamic limit, these become first-order transitions with singular specific heat, approximated by sharp peaks in the finite-size simulated systems. These peaks provide distinctive signatures of such transitions, and can be used to identify transition temperatures for phase diagrams.

An example of the raw and processed output from a single constant pressure NS simulation of the Lennard–Jones system is shown in Fig. 6. As seen in the top panel, the enthalpy as a function of NS iteration decreases monotonically, by construction, but its slope becomes more negative when the system goes through phase transitions. At each temperature a narrow range of iterations (and enthalpies) contributes significantly to the weighted sum, shifting smoothly within each phase, and jumping discontinuously between phases. The derivative of the enthalpy with respect to iteration, which is inversely proportional to \(\frac{\partial H}{ \partial S}=T\) and gives an estimate of the temperature that the current NS iteration will contribute to [48], decreases monotonically except for a temporary rise as the NS iteration process begins to go through the transition energy range. The sharp peaks in \(c_p(T)\) shown in the bottom panel coincide with the discontinuities in the ensemble average of the enthalpy, as they must, since they are calculated as analytical derivatives of the same weighted sum.

Nested sampling of the periodic system of 64 LJ particles. Top panel shows the enthalpy and the (estimated instantaneous) temperature as a function of the NS iterations. The bottom panel shows the enthalpy and the constant pressure heat capacity as a function of temperature for the same run. Shaded grey and green areas show the iteration and enthalpy ranges, respectively, where the partition function contribution is 10 times smaller than at the peak for the same temperature

2.6 Implementations for materials problems

One software implementation of the nested sampling and analysis algorithms discussed above is provided by pymatnest [63]. It uses python and the atomic simulation environment (ASE) package [64] to implement the Monte Carlo procedure and parallelization strategies described in the previous sections in a way that abstracts the material-dependent energy and force calculations. The PES evaluations can use any ASE calculator, including potentials implemented in the widely used LAMMPS MD software [65], although the computational cost is significant. Another python-based implementation, designed for non-periodic systems, is available [66], and forms the basis for the implementation [67] of the superposition enhanced nested sampling variant [62], discussed in more detail below.

3 Applications

3.1 Configuration space of clusters

The use of the nested sampling framework for sampling the potential energy landscape of materials was first demonstrated on small Lennard–Jones clusters [68]. Lennard–Jones clusters are popular test systems for new phase-space exploration schemes, due to the low computational cost of the interaction function and the large amount of data on their properties available in the literature [1, 2, 69, 70]. Nielsen also used NS to study LJ\(_{17}\) [71]. Apart from calculating thermodynamic properties, this also demonstrated that NS can provide a broad-brush view of the landscape, giving a helpful overview of the system. Visualizing the 3N-dimensional PES is a challenging task and projecting the landscape using ad-hoc order parameters can be very misleading. An efficient way of overcoming this is to represent the hierarchy of known minima and transition states using a disconnectivity graph [72,73,74], capturing the topology of the entire landscape. However, disconnectivity graphs do not include any information on the entropic contribution of different basins, and thus cannot provide guidance in understanding the thermodynamic behaviour of the system. NS naturally provides this missing information which can be used for more informative visualization. If distinct structures are identified and thus sampled configurations are sorted according to the basin they belong to using an appropriate metric to calculate the distance of configurations, the relative phase-space volume of these basins can be easily calculated: since configurations in the live set are uniformly distributed at every sampling level, the ratio of number of samples in different basins tells us their relative volume. An alternative that does not require explicit reference to the live set is presented in the next section. We used this information to construct the energy landscape chart of several Lennard–Jones clusters [68], where basins of minima explored by NS are shown as funnels with appropriate widths representing their phase-space volume.

The example of LJ\(_8\) is shown in Fig. 7, showing the global minimum structure along the seven known local minima. While all of these were found, it is obvious from this representation that their contribution to the phase-space volume is negligible compared to the main funnel leading to the global minimum, and thus, we can safely assume them to be thermodynamically irrelevant in this case. Similar energy landscape charts were also used by Burkoff et al. [60] to provide a qualitative insights into the folding process of a couple of poly-peptides, sampled by NS.

As the size of the system and thus the number of local minima increases, we cannot expect NS to explore all of them: since the size of the live set, K, controls the resolution of the PES we can see, thus narrow basins will inevitably be missed. However, since narrower basins contribute the least to the partition function, this does not hinder our ability to calculate thermodynamic properties and give an overview of the significant structures. This has also been demonstrated studying the melting behaviour of a CuPt nano-alloy [75], and studying the PES of small water clusters [76], using NS to find the few thermodynamically relevant minima. This also shed light on the connection between the thermodynamic properties and the features of the energy landscape: if local minima gave an overall small contribution to the total partition function, a sharp “melting” peak was observed on the heat capacity curve, while the existence of competing structures at finite temperature were associated with a more gradual transition. Figure 8 illustrates the analysis of the NS results on the cluster of 13 particles modelled by the mW potential of water [77].

Nested sampling of 13 mW water particles. Upper panel shows the heat capacity curve. In the middle panel, each dot corresponds to a configuration generated during nested sampling, showing their average \(Q_4\) bond order parameter [78] as a function of temperature and is coloured according to the average ring size within the cluster to help distinguish different basins of the PES. The bottom panel shows the relative phase-space volume ratio of global minimum basin (purple) and the local minimum basin (red), while the grey area represents the contribution of any other structure to the total partition function. Temperatures corresponding to the peaks on the heat capacity curve, indicating phase transitions, are shown by vertical dotted lines. Middle panel reprinted with permission from Fig. 2 of Ref. [76]. Copyright 2019 by the Royal Society of Chemistry

While in the large majority of systems, the basins with small phase-space volume can be disregarded without affecting our overall understanding, there are exceptions, especially in case of clusters. Some systems display a highly frustrated PES, where high barriers divide the landscape and the relative phase-space volumes of basins are significantly different at different energy levels. If a basin which is narrow at higher energies becomes the most significant at a lower energy level, we see a corresponding low-temperature (often solid–solid) phase transition in the system. In such cases, the NS live set has to be large enough to ensure a high resolution capable of capturing and sampling sufficiently the narrow basin of interest. To evade the increased computational cost and ensure that known minima are not “lost” during the sampling, Martiniani et al. proposed a method combining nested sampling with global optimisation techniques, superposition enhanced nested sampling [62]. In this case, sample configurations are not only generated from existing members of the live set, but are also drawn from a pre-existing database, proportional to their statistical weights, calculated using the harmonic superposition approximation (HSA). Its speed-up and accuracy has been demonstrated for LJ\(_{31}\) and LJ\(_{38}\), but databases miss the astronomical number of high-energy local minima that would be necessary to counteract the inaccuracy of HSA at high energies. For larger systems with broken ergodicity, such as LJ\(_{75}\), even this method struggles. To overcome the difficulty of sampling in these particular systems, the funnel-hopping Monte Carlo [79] and nested basin sampling [80] approaches have been recently proposed.

3.2 Phase diagram of materials

For bulk, rather than cluster, systems, our knowledge of the PES is often distilled into the form of a phase diagram, showing the stability regions of thermodynamically distinct phases or structures under different conditions, most typically temperature, pressure, or composition. This is crucial in both academic and industrial materials science applications, to be able to predict the state and the properties of a material. However, as we have discussed, obtaining the entire phase diagram under a wide range of conditions is a highly laborious task, requiring the use of multiple, conceptually different methods, and hence, it is rarely performed in an exhaustive way.

The first application of the NS technique to a bulk system, which used a periodic supercell approximation, was done on the hard sphere solid [81], calculating the compressibility from the partition function to locate the fluid–solid-phase transition and discussing the phase-space volume of jammed structures. The following year, Nielsen et al. performed constant pressure NS simulations of two Lennard–Jones clusters in a hard sphere cavity, as well as a periodic LJ system in a fixed cubic box [82].

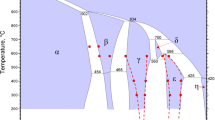

These works were followed by an extension of NS to constant pressure sampling in a fully flexible periodic simulation cell, which allowed, for the first time, the calculation of the entire pressure–temperature phase diagram of a material. The first such simulations were performed for the Lennard–Jones potential and four EAM models of aluminium, as well as for the composition–temperature–pressure phase diagram of an EAM model of the NiTi shape memory alloy, calculating the martensitic phase transition [51]. This work demonstrated how the peaks in the heat capacity curve enable us to locate not just the boiling and melting curves, but also the Widom line above the critical point, and the solid–solid-phase transitions, as demonstrated in Fig. 9. To locate the critical point in the nested sampling calculations, we drew on the results of Bruce and Wilding [83]: for a finite system at and below the critical point, the density distribution appears bimodal (at the temperature corresponding to the maxima of the heat capacity peak), while above the critical point, the density distribution transitions quickly becomes unimodal. We used this argument to estimate the critical pressure to be between the two adjacent sampling pressures where the modality of the distribution changes, and demonstrated that results provided by this approach are in very good agreement with those calculated by the Gibbs-ensemble Monte Carlo technique [51]. Similar simulations of phase transitions at a single pressure were also calculated for the mW model of water and a system of coarse-grained bead-spring polymers [48].

Identifying different structures using suitably chosen order parameters enables the separation of different basins of the PES. As Fig. 10 shows, NS not only identifies the most stable structure, but provides information on the thermodynamically relevant metastable phases as well. Similar extensive phase diagram comparison studies have been performed for a range of EAM type potentials of iron [85] and lithium [52].

Nested sampling of the Mishin EAM for aluminium [86]. The upper panel shows a section of the temperature–pressure phase diagram, featuring the melting line and the phase boundaries of the three crystalline phases identified by NS: fcc, hcp, and bcc. The bottom panels show the average \(Q_6\) bond order parameter [78] as a function of enthalpy for configuration generated during nested sampling at three different pressures. \(Q_6\) order parameter for the perfect crystalline structures are shown by horizontal dotted lines, and the phase transition temperature between hcp and bcc is marked by a vertical dashed line in the middle panel

It is also possible to characterize these basins without the need to refer to the live set, as was done for the Lennard–Jones clusters in Sect. 3.1. The entire trajectory is converted into a graph, where each configuration is a node, and the edges are connections to its k nearest neighbors in feature space (i.e., k most similar configurations defined from a structurally relevant similarity measure). The network is separated into connected components, and each component defines a basin, identified by its minimum energy structure. The configuration space volume associated with each basin is the sum of weights associated with each sample in the connected component. In order of decreasing energy, one node (sample) and all of its edges are eliminated, and the new network is analyzed for connected components. If removing the highest energy node causes a basin to decompose into two or more disconnected subgraphs, those become new basins with their own volumes, and the energy barrier between them is simply the energy of the last node that connected them. This process is repeated until all the samples have been eliminated. To visualize the results, the left and right edges of each basin at each iteration are plotted, so that the x-axis distance between them is proportional to the basin’s volume at the same iteration, at a y-axis position equal to the energy of that iteration. Because the range of volumes can be extremely large (e.g. \(10^{96}\) for a 64-atom Li cell), it must be scaled to be visible in a plot. Keeping the relative volumes of the basins at each energy in correct proportion, scaling the overall width to a function that decreases exponentially with energy leads to a visually pleasing result that conveys the rapid decrease in configuration space.

An example from an NS simulation of Li at an applied pressure of 15 GPa with an embedded atom model (EAM) [52] is shown in Fig. 11, using the similarity defined by the configuration-averaged SOAP overlap [87, 88] with \(n_\mathrm {max}=8\), \(l_\mathrm {max}=8\), and a cut-off of three times the nearest-neighbor distance of 2.55 Å(from the NS trajectory’s minimum energy configuration). The raw data are somewhat noisy, with many spurious but tiny clusters, so the basins are merged if the barrier between them is less than 2 eV or if they contain fewer than 10 samples when they split off. The visualization procedure identifies three remaining basins. The first to split off is the hexagonal close-packed structure (hcp, AB stacking of close-packed layers), which persists until low enthalpy but always occupies a very small fraction of the configuration space. At only slightly lower enthalpy, the face centered cubic structure (fcc, ABC stacking) basin splits off, occupying approximately half of the explored configuration space volume. The remaining basin constitutes the ground state, with mixed hcp and fcc-like ABAC stacking, but this structure coexists with fcc for about 4 eV, only dominating at enthalpies below about \(-15\) eV. The competition between these basins leads to variations in the observed structure as a function of temperature.

Visualization of the potential energy landscape of lithium at \(P=15\) GPa described by an EAM [89]. At high enthalpy, there is no separation into different basins, and all configurations are associated with a single basin. The three basins that become distinct at lower enthalpies (dashed lines indicate the enthalpy of each barrier) correspond to the hcp structure (orange, left), fcc structure (green, center), and mixed ABAC stacking (right, blue). At each enthalpy value, the relative widths of the basins correspond to their relative configuration space volumes, and the overall width is scaled to be exponential with enthalpy. The ground-state mixed stacking basin is not closed at the bottom to indicate that we have no data on its minimum enthalpy, although it is likely to be close to the lowest sampled value

An initial study of a multicomponent system described an order–disorder transition in a solid solution binary-LJ system [48]. The CuAu alloy has also been repeatedly used as a model binary system: after calculating the melting line [48], the entire composition–temperature phase diagram of the CuAu binary system was also calculated [90], and both NS and WL used to study the order–disorder of the occupancy of lattice sites, without the liquid phase being explored [91]. NS was also used to determine the mean number of hydrogen atoms trapped in the vacancies of the \(\alpha \)-Fe phase [92]. An initial fixed composition NS simulation was used to determine the chemical potential of a single H atom within a relatively small supercell of perfect crystal. Simulations at a range of different H concentrations were carried out with NS, and then combined into a single grand-canonical partition function (for variable H atom number) using the calculated chemical potential.

The semi-grand-canonical ensemble was used to simulate the temperature–composition phase diagram for a machine-learning potential of the AgPd alloy [50]. In this case, the composition is an output observable of the NS simulation, as plotted in Fig. 12 for a number of NS trajectories at different chemical potential difference values. When the trajectory goes through a phase transition with a two-phase region, in addition to the discontinuity in the equilibrium structure and average energy above and below the transition, the equilibrium composition changes as well. As a result, the peak in the specific heat as a function of temperature is correlated with an abrupt jump in the composition, from the value in the high-temperature phase to that in the low-temperature phase. Finite system size effects broaden the transition, as seen in the figure, where the change in composition as a function of T across the two-phase region is rapid but far from discontinuous. The change becomes more abrupt as the system size increases, approaching a discontinuity, jumping from the liquidus to the solidus, in the large system limit.

Temperature–composition phase diagram for Ag\(_x\)Pd\(_{1-x}\) alloy using an ML interatomic potential, calculated by s-GC NS (solid lines representing n(T)), fixed composition NS (blue circles representing transition T and vertical bars indicating peak width), and direct two-phase coexistence simulation for a larger system (red circle). Dotted lines indicate boundaries of experimentally observed two-phase region, and all simulation results are shifted up by 200 K to facilitate comparison with experimental shape and extent of two-phase region. The s-GC NS constant \(\Delta \mu \) n(T) curves show a gradual shift in composition as temperature changes, interrupted by an abrupt change in the (finite-size broadened) phase transition region. The left and right edges of the lower slope parts of each curve are the high- and low-T boundaries of the two-phase coexistence region, respectively. The composition-dependent phase transition between 1000 K and 1500 K is the solid–liquid transition, and the low-temperature phase transition, only shown at one composition, is a solid-phase order–disorder transition. Taken From Fig. 5 in Ref. [50]

An important conclusion of these calculations is that the macroscopic behaviour of potential models can be very different from what is expected by chemical intuition, and thus suggested by previous calculations. Using NS has lead to the identification of previously unknown crystalline phases, new ground-state structures, and phase transitions not anticipated before, for a significant proportion of tested potential models. Since using nested sampling, the calculation of the phase diagram is no longer a bottleneck, the reliability of the chosen model can be easily established by performing the sampling, and thus, we can determine the conditions where the model is valid, before it is used for a specific purpose or study. While in some cases, the newly identified structure has little influence on the practical use of the model (e.g., a bcc structure transforming to body-centered-tetragonal at very low temperatures [85]), in other cases, these shed light on significant new behaviour of the model, which affects computational findings in general. Most notably, NS calculations on the Lennard–Jones model led to the discovery of new global minima: depending on the pressure and the applied cut-off distance, the LJ ground-state structure can be different stacking variants of close packed layers [93]. Also, recent NS studies of the hard-core double-ramp Jagla model revealed a previously unknown thermodynamically stable crystalline phase, drawing surprising similarities to water [94].

3.3 Broader applications in materials science

While in the majority of materials applications, thermodynamic properties derived from the partition function are the target calculated quantities, its absolute value can also be the focus of interest. For example, the partition function is necessary to establish the correct temperature dependence of spectral lines and their intensity. NS, as a unique tool to give access directly to the partition function, has been used to calculate the rovibrational quantum partition function of small molecules of spectroscopic interest, using the path-integral formalism [95]. This has showed that NS can be efficiently used, especially at elevated temperatures and in case of larger molecules, where the direct-Boltzmann-summation technique of variationally computed rovibrational energies becomes computationally unfeasible.

The NS algorithm has also been applied to sample the same path through phase space as would be covered in traditional coupling-parameter-based methods such as thermodynamic integration and perturbation approaches, but without the need to define the coupling parameter a priori. The combined method, Coupling Parameter Path Nested Sampling [96], can be used to estimate the free energy difference between two systems with different potential energy functions by continuously sampling favorable states from the reference system potential energy function to the target potential energy function. The case studies of a Lennard–Jones system at various densities and a binary fluid mixture showed very good agreement with previous results.

Nested Sampling has been used to sample transition paths between different conformations of atomistic systems [97]. The space of paths is a much larger space than that of the configurations themselves. To make it finite-dimensional, the paths are discretised, and the well-established statistical mechanics of transition path sampling (TPS) is leveraged [98]. The top panel of Fig. 13 shows a double-well toy model with just two degrees of freedom. There are two pathways that connect the two local minima, and the barrier via the top path is slightly higher. The results of the Nested Transition Path Sampling (NTPS) shows the expected result: at high energies, the paths are random (black); at medium energies, the short path dominates (grey), but at low energies, the longer bottom path, which has the lowest barrier energy, dominates (white).

The bottom panel of Fig. 13 shows the results of NTPS applied to a Lennard–Jones cluster of 7 particles in two dimensions. There are four transition states that facilitate permutational rearrangements of the ground-state structure. Depending on the temperature (\(1/\beta \)), the fractions of the different mechanisms that lead to successful rearrangements change, as shown. The estimate of the same fractions just from the transition state barrier energies is shown by dashed lines. The difference becomes large at high temperatures, where anharmonic effects neglected by transition state theory but included in NS become significant.

Nested sampling has also been used for more traditional Bayesian inference problems in the context of materials modelling [99]. There, the task was to reconstruct the distribution of spatially varying material properties (Young’s modulus and Poisson ratio) from the observation of the displacement at a few selected points of a specimen under load. Under assumption of linear elasticity, the displacement is a linear function of the stress, given fixed material properties. Since the stresses cannot be observed, and the displacements as a function of the unknown material properties are highly nonlinear, determining the material parameters is a difficult inverse problem. The use of nested sampling allowed rigorous model comparison by calculating the Bayesian evidence. Furthermore, under realistic regimes including boundary conditions, number of observations, and level of noise, the likelihood surface turned out to be highly multimodal. The best reconstructions were obtained by considering the mean of the posterior, sampling over a very diverse parameter range, and significantly outperformed the naive estimate that takes the parameters corresponding to the maximum likelihood.

Nested transition path sampling for a model double-well potential (top) and two-dimensional LJ\(_7\) (bottom). The inset shows the heatmap of the double-well potential, with three example transition paths: a high-energy path (black), a medium-energy short path through the slightly higher top barrier (grey), and a low-energy longer path through the slightly lower bottom barrier. In the main plot, the greyscale of the paths correspond to the NS iteration (and thus also path energy). For LJ\(_7\), the graph shows the fraction of various rearrangement mechanisms as a function of temperature (top x axis, with inverse temperature shown on the bottom x axis). The dashed lines correspond to the expected fraction based on the barrier heights and assuming harmonic transition state theory. Reprinted figure with permission from Ref. [97]. Copyright 2018 by the American Physical Society

4 Conclusions and future directions

The nested sampling method is a powerful way of navigating exponentially peaked and multimodal probability distributions, when one is interested in integrals rather than just finding the highest peak. NS uses an iterative mapping and sorting algorithm to convert the multidimensional phase-space integral into a one-dimensional integral, enabling the direct calculation of the partition function for materials given a potential energy function. In its materials science application, NS is a top–down approach, sampling the potential energy surface of the atomic system in a continuous fashion from high to low energy, where the sampling levels are automatically determined in a way that naturally adapts to changes of the phase-space volume. Its output can be postprocessed to compute arbitrary properties at any temperature without repeating the computationally demanding step of generating the relevant configuration samples.

The practical benefit and implications of the NS results are inherently connected to the quality of the interaction model employed to calculate the energy of atomic configuration. Since NS thoroughly explores the configuration space and finds equilibrium configurations, it cannot be restricted to specific experimentally known structures if they are not also equilibrium phases of the computational potential energy model. While on one hand, this can be turned to our advantage, and used to determine the reliability and accuracy of existing and widely used models in reproducing desired macroscopic behaviour, for many materials, in particular with multiple chemical species, sufficiently accurate and fast potential energy models are not yet available. The increasing accuracy and efficiency of machine-learning interatomic potentials is a promising avenue to fulfill this need, complementing NS to make an emerging and powerful tool for predicting material properties.

The unique advantage of NS has been demonstrated for a range of atomistic systems, gaining a broad overall view of the entire PES and providing both thermodynamic and corresponding structural information, without any prior knowledge of potential phases or transitions. This capability inspires materials calculations from a new perspective, both in terms of allowing high-throughput and automated calculation of these thermodynamic properties, and also in enabling sampling those parts of the configuration space that are considered challenging. Its success in the systems studied so far provides motivation to explore the application of NS for a wider range of materials problems; studying glass transition and behaviour of disordered phases, phase transitions of systems exhibiting medium-range order; molecular systems, from simple particles to polymers and proteins; or sampling regions around the critical point where fluctuations are typically large.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: The current manuscript is a review article of previous works, hence no new data and results are presented.]

References

D. Wales, Energy Landscapes (Cambridge University Press, Cambridge, 2003)

D.J. Wales, J.P.K. Doye, Global optimization by basin-hopping and the lowest energy structures of Lennard-Jones clusters containing up to 110 atoms. J. Phys. Chem. A 101, 5111 (1997)

I. Rata, A.A. Shvartsburg, M. Horoi, T. Frauenheim, K.W. Siu, K.A. Jackson, Single-parent evolution algorithm and the optimization of Si clusters. Phys. Rev. Lett. 85, 546 (2000)

N.L. Abraham, M.I.J. Probert, A periodic genetic algorithm with real-space representation for crystal structure and polymorph prediction. Phys. Rev. B 73, 224104 (2006)

S. Goedecker, Minima hopping: an efficient search method for the global minimum of the potential energy surface of complex molecular systems. J. Chem. Phys. 120, 9911 (2004)

S. Kirkpatrick, C.D. Gelatt, M.P. Vecchi, Optimization by simulated annealing. Science 220, 671 (1983)

M. Karabina, S.J. Stuart, Simulated annealing with adaptive cooling rates. J. Chem. Phys. 153, 114103 (2020)

C.J. Pickard, R.J. Needs, High-pressure phases of silane. Phys. Rev. Lett. 97, 045504 (2006)

C.J. Pickard, R.J. Needs, Highly compressed ammonia forms an ionic crystal. Nat. Mat. 7, 775 (2008)

A. Oganov, C. Glass, Crystal structure prediction using evolutionary algorithms: principles and applications. J. Chem. Phys. 124, 244704 (2006)

C. Glass, A. Oganov, N. Hansen, Uspex—evolutionary crystal structure prediction. Comp. Phys. Comm. 175, 713 (2006)

Y. Wang, J. Lv, L. Zhu, Y. Ma, Crystal structure prediction via particle swarm optimization. Phys. Rev. B 82, 094116 (2010)

Y. Wang, J. Lv, L. Zhu, Y. Ma, Calypso: a method for crystal structure prediction. Comp. Phys. Comm. 183, 2063 (2012)

Y. Ma, M. Eremets, A.R. Oganov, Y. Xie, I. Trojan, S. Medvedev, A.O. Lyakhov, M. Valle, V. Prakapenka, Transparent dense sodium. Nature 458, 182 (2009)

M.R. Hoare, Structure and dynamics of simple microclusters. Adv. Chem. Phys. 40, 49 (1979)

F. Montalenti, A.F. Voter, Exploiting past visits or minimum-barrier knowledge to gain further boost in the temperature-accelerated dynamics method. J. Chem. Phys. 116, 4819 (2002)

B.A. Berg, T. Neuhaus, Multicanonical ensemble: a new approach to simulate first-order phase transitions. Phys. Rev. Lett. 68, 9 (1992)

C. Micheletti, A. Laio, M. Parrinello, Reconstructing the density of states by history-dependent metadynamics. Phys. Rev. Lett. 92, 170601 (2004)

A. Panagiotopoulos, Direct determination of phase coexistence properties of fluids by monte carlo simulation in a new ensemble. Mol. Phys. 61, 813 (1987)

P.M. Piaggi, M. Parrinello, Calculation of phase diagrams in the multithermal-multibaric ensemble. J. Chem. Phys. 150, 244119 (2019)

A.D. Bruce, N.B. Wilding, G.J. Ackland, Free energy of crystalline solids: a lattice-switch monte carlo method. Phys. Rev. Lett. 79, 3002 (1997)

D.D. Frantz, D.L. Freemann, J.D. Doll, Reducing quasi-ergodic behavior in monte carlo simulations by j-walking: applications to atomic clusters. J. Chem. Phys. 93, 2769 (1990)

R.H. Swendsen, J.S. Wang, Replica monte carlo simulation of spin-glasses. Phys. Rev. Lett. 57, 2607 (1986)

F. Wang, D.P. Landau, Efficient, multiple-range random walk algorithm to calculate the density of states. Phys. Rev. Lett. 86, 2050 (2001)

A.N. Morozov, S.H. Lin, Accuracy and convergence of the wang-landau sampling algorithm. Phys. Rev. E 76, 026701 (2007)

E. Marinari, Optimized monte carlo methods, in Advances in Computer Simulation: Lectures Held at the Eötvös Summer School, edited by J. Kertész and I. Kondor (Springer, Berlin, 1998) p. 50

A. Nichol, G.J. Ackland, Property trends in simple metals: an empirical potential approach. Phys. Rev. B 93, 184101 (2016)

J. Skilling, Bayesian inference and maximum entropy methods in science and engineering. AIP Conf. Proc. 735, 395 (2004)

J. Skilling, Bayesian Anal. 735, 833 (2006)

J. Skilling, Nested sampling’s convergence, AIP Conf. Proc., 1193, 277 (2009)

P. Mukherjee, D. Parkinson, A.R. Liddle, A nested sampling algorithm for cosmological model selection. Astrophys. J. 638, 51 (2006)

F. Feroz, M.P. Hobson, Multimodal nested sampling: an efficient and robust alternative to mcmc methods for astronomical data analysis. Mon. Not. R. Astron. Soc. 384, 449 (2008)

F. Feroz, M.P. Hobson, M. Bridges, Multinest: an efficient and robust Bayesian inference tool for cosmology and particle physics. Mon. Not. R. Astron. Soc. 398, 1601 (2009)

A. Fowlie, W. Handley, L. Su, Nested sampling cross-checks using order statistics. MNRAS 497, 5256–5263 (2020)

J. Buchner, Collaborative nested sampling: Big data versus complex physical models. Astron. Soc. Pac. 131, 108005 (2019)

F. Feroz, J. Gair, M. Hobson, E. Porter, Use of the multinest algorithm for gravitational wave data analysis. J. Phys. Conf. Ser. 26, 215003 (2009)

J.R. Gair, F. Feroz, S. Babak, P.G.M.P. Hobson, A. Petiteau, E.K. Porter, Nested sampling as a tool for lisa data analysis. J. Phys.: Conf. Ser. 228, 012010 (2010)

B.J. Brewer, Computing entropies with nested sampling. Entropy 19, 422 (2017)

R.W. Henderson, P.M. Goggans, L. Cao, Combined-chain nested sampling for efficient Bayesian model comparison. Digit. Signal Process. 70, 84 (2017)

P.M. Russel, B.J. Brewer, S. Klaere, R.R. Bouckaert, Model selection and parameter inference in phylogenetics using nested sampling. Syst. Biol. 68, 219 (2019)

N. Pullen, R.J. Morris, Bayesian model comparison and parameter inference in systems biology using nested sampling. PLoS One 9, e88419 (2014)

J. Mikelson, M. Khammash, Likelihood-free nested sampling for parameter inference of biochemical reaction networks. PLoS Comput. Biol. 16, e1008264 (2020)

J. Skilling, Bayesian computation in big spaces-nested sampling and Galilean monte carlo. AIP Conf. Proc. 1443, 145 (2012)

M. Betancourt, Nested sampling with constrained Hamiltonian monte carlo. AIP Conf. Proc. 1305, 165 (2011)

S. Duane, A. Kennedy, B.J. Pendleton, D. Roweth, Hybrid monte carlo. Phys. Lett. B 195, 216 (1987)

D. Frenkel, Simulations: the dark side. Eur. Phys. J. Plus 128, 10 (2013)

R.J.N. Baldock, Classical Statistical Mechanics with Nested Sampling (University of Cambridge, Springer Theses, Springer, 2017). (Ph.D. thesis)