Abstract

Background

Australian health and medical research funders support substantial research efforts, and incentives within grant funding schemes influence researcher behaviour. We aimed to determine to what extent Australian health and medical funders incentivise responsible research practices.

Methods

We conducted an audit of instructions from research grant and fellowship schemes. Eight national research grants and fellowships were purposively sampled to select schemes that awarded the largest amount of funds. The funding scheme instructions were assessed against 9 criteria to determine to what extent they incentivised these responsible research and reporting practices: (1) publicly register study protocols before starting data collection, (2) register analysis protocols before starting data analysis, (3) make study data openly available, (4) make analysis code openly available, (5) make research materials openly available, (6) discourage use of publication metrics, (7) conduct quality research (e.g. adhere to reporting guidelines), (8) collaborate with a statistician, and (9) adhere to other responsible research practices. Each criterion was answered using one of the following responses: “Instructed”, “Encouraged”, or “No mention”.

Results

Across the 8 schemes from 5 funders, applicants were instructed or encouraged to address a median of 4 (range 0 to 5) of the 9 criteria. Three criteria received no mention in any scheme (register analysis protocols, make analysis code open, collaborate with a statistician). Importantly, most incentives did not seem strong: applicants were instructed to address only 3 criteria (register study protocols, discourage use of publication metrics, and conduct quality research). Other criteria were encouraged but not required.

Conclusions

Funders could strengthen the incentives for responsible research practices by requiring grant and fellowship applicants to implement these practices in their proposals. Administering institutions could be required to implement these practices to be eligible for funding. Strongly rewarding researchers for implementing robust research practices could lead to sustained improvements in the quality of health and medical research.

Background

Australian health and medical research funders support a substantial research effort. Each year, $7.9 billion (4% of all spending on health) is spent on health and medical research in Australia, with funds channelled through the National Health and Medical Research Council (NHMRC, $845 million), the Australian Research Council’s contribution to health and medical research (ARC, $79 million), and the Medical Research Future Fund (MRFF, $392 million) [1]. Research grants and fellowships are usually awarded on merit through competitive processes. The ability to secure funding can make or break research careers: funding allows researchers to build research programs and teams, whereas a lack of funding impedes their development [2, 3]. Consequently, incentives in grant funding schemes substantially influence the behaviour of researchers [4].

Health and medical research funders, and the scientific community as a whole, recognise that the quality of published research and current reporting practices can be inadequate. Research findings can be poorly reported or at high risk of bias, as highlighted in the ongoing COVID-19 pandemic [5, 6], so ensuring research rigour is especially important. Funders such as the NHMRC and the ARC have called for stronger emphasis on responsible research practices, methodological rigour, and transparency [7, 8]. However, it is not known whether these calls translate into incentives for responsible research practices in funding applications. This matters because funders significantly influence research culture and could positively shape researchers’ behaviour. Thus, we conducted an audit of instructions from research grant and fellowship schemes to determine to what extent Australian health and medical funders incentivise responsible research practices.

Method

Category 1 to 3 [9] research grants and fellowships were purposively sampled from the 2018 Australian Competitive Grants Register [10]. Category 1 grants are Australian competitive grant research and development (R&D) income. Category 2 grants are other public sector R&D income, including grants from local, state or partial government bodies. Category 3 grants are industry and other R&D income, including grants from the private sector, philanthropic and international sources.

Funding schemes were sampled to select government and non-profit schemes that awarded the largest amount of funds, as identified by The University of Sydney (Research Grants and Contracts: https://www.sydney.edu.au/research/research-funding.html) at the time of the audit (Sep 2019). These schemes were selected because they are highly competitive and might influence the behaviour of many researchers. For funders with multiple schemes, recurring investigator-initiated schemes were sampled. Schemes were excluded if they were special calls, internal schemes, international funding, or partnership grants. The following 8 schemes were assessed: ARC Discovery Projects, ARC Discovery Early Career Researcher Award (DECRA), NHMRC Project Grants, NHMRC Career Development Fellowships (CDF), NHMRC Clinical Trials and Cohort Studies (CTCS) Grants, Diabetes Australia Research Trust (DART) General Grants and Millennium Awards, National Breast Cancer Foundation (NBCF) Investigator Initiated Research Scheme, Bupa Foundation (Australia) Limited Bupa Health Foundation grants.

Nine criteria were developed to assess instructions from the schemes. We focused on incentives for responsible research practices based on principles from large consensus discussions on assessing scientists [11] and the Transparency and Openness Promotion Guidelines [12]. These principles are important as they are in keeping with principles of research integrity, research rigour, and the Australian Code for the Responsible Conduct of Research [13]. Our criteria determined if instructions from the schemes incentivise applicants to: (1) publicly register study protocols before starting data collection, (2) register analysis protocols before starting data analysis, (3) make study data openly available, (4) make analysis code openly available, (5) make research materials openly available, (6) discourage use of publication metrics, (7) conduct quality research (e.g. adhere to reporting guidelines), (8) collaborate with a statistician, and (9) adhere to other responsible research practices. If a scheme required applicants to comply with supporting governance documents (e.g. Australian Code for the Responsible Conduct of Research 2007 [13]), data were extracted from the supporting documents.

Each criterion was answered using one of the following responses: “Instructed”, “Encouraged”, or “No mention”. Data were extracted by one investigator (KR) using a customised form in REDCap electronic data capture tools and independently checked by a second investigator (JD or CK). Differences were resolved by discussion. The questions used to assess the 9 criteria and their interpretation are as follows:

1. Do instructions incentivise publicly registering study protocols before starting data collection?

Instructions must state that study protocols must be publicly registered with a date and time-stamp before starting data collection.

2. Do instructions incentivise registering analysis protocols before starting data analysis?

Instructions must state that analysis protocols must be registered with a date and time-stamp before starting data analysis.

3. Do instructions incentivise making study data openly available to the research community?

Instructions must state that data must be made openly or publicly available.

4. Do instructions incentivise making analysis code openly available to the research community?

Instructions must state that computer code used to analyse the data must be made openly or publicly available.

5. Do instructions incentivise making research materials openly available to the research community?

Instructions must state that materials used to conduct the research must be made openly or publicly available. Examples include but are not limited to supplemental appendices, questionnaires, survey instruments, scoring rubrics, visual stimuli, and scripts used by research personnel.

6. Do instructions discourage the use of publication metrics?

Instructions must state that publication metrics (e.g., impact factor, H-index) should not be used.

7. Do instructions incentivise research quality (e.g., adherence to reporting guidelines)?

Instructions must state at least 1 mechanism to promote research quality that is not covered by the preceding criteria. Examples include, but are not limited to, adhering to reporting guidelines, adhering to ethical standards, avoiding or acknowledging biases, prioritising robust methodology, broadening research dissemination, and maximising the value and impact of all research output.

8. Do instructions incentivise collaboration with a statistician?

Instructions must state that applicants consult a statistician for complex quantitative analyses.

9. Do instructions incentivise any other responsible research practices?

Instructions must state at least 1 other responsible research practice that is not covered in Question 7. Examples include, but are not limited to, research training and declaring conflicts of interest.

Full definitions of the questions used to assess the 9 criteria, and of the scores, are provided in Additional files 1 and 2. The study protocol, analysis plan and all data are available on the Open Science Framework (https://osf.io/vnxu6/, https://osf.io/2jesc). A checklist for survey research was used to ensure that key information from this audit was reported [14].

Results

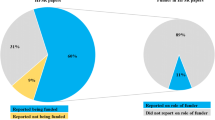

Across the 8 schemes from 5 funders, applicants were instructed or encouraged to address a median of 4 (range 0 to 5) of the 9 criteria (Tables 1 and 2). Three criteria received no mention in any scheme (register analysis protocols, make analysis code open, collaborate with a statistician). With respect to funders, the ARC and NHMRC instructed or encouraged applicants to address 4 or more criteria, whereas the smaller funders made no mention of most criteria. Applicants were only instructed to register study protocols (NHMRC CTCS), discourage use of publication metrics (NHMRC Project Grants, NHMRC CDF, NHMRC CTCS) and conduct quality research (ARC Discovery Projects, ARC DECRA); other criteria were encouraged but not required.
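The summary statistic above (for each scheme, the number of criteria answered “Instructed” or “Encouraged”, then the median and range across schemes) can be illustrated with a short sketch. This is a hypothetical Python example, not the authors' method: the audit itself was analysed manually without analysis code, and the scheme names and responses below are invented placeholders, not the data reported in Tables 1 and 2 or on OSF.

```python
from enum import Enum
from statistics import median


class Response(Enum):
    """The three possible answers recorded for each criterion."""
    INSTRUCTED = "Instructed"
    ENCOURAGED = "Encouraged"
    NO_MENTION = "No mention"


N_CRITERIA = 9  # criteria (1) to (9) described in the Method section


def count_addressed(responses: list[Response]) -> int:
    """Count the criteria a scheme instructs or encourages applicants to address."""
    if len(responses) != N_CRITERIA:
        raise ValueError(f"expected {N_CRITERIA} responses, got {len(responses)}")
    # "Instructed" and "Encouraged" both count; only "No mention" is excluded.
    return sum(r is not Response.NO_MENTION for r in responses)


def summarise(audit: dict[str, list[Response]]) -> tuple[float, int, int]:
    """Return (median, minimum, maximum) of the per-scheme counts."""
    counts = [count_addressed(responses) for responses in audit.values()]
    return median(counts), min(counts), max(counts)


# Hypothetical placeholder data, NOT the audit results.
I, E, N = Response.INSTRUCTED, Response.ENCOURAGED, Response.NO_MENTION
example_audit = {
    "Scheme A": [I, N, E, N, E, N, N, N, N],
    "Scheme B": [N, N, N, N, N, N, N, N, N],
    "Scheme C": [E, N, E, N, E, I, E, N, N],
}

print(summarise(example_audit))  # (3, 0, 5)
```

With an even number of schemes, such as the 8 audited, `statistics.median` returns the mean of the two middle counts, so the median need not be a whole number.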

Discussion

In our analysis of 8 funding schemes, the schemes satisfied only some of the criteria on incentives to improve responsible research practices. The ARC and NHMRC schemes were the most robust and stipulated compliance with the Australian Code for the Responsible Conduct of Research. Overall, however, the schemes’ incentives for applicants to implement such practices did not seem strong, as most practices were at most encouraged rather than required. Applicants were instructed to comply with only 3 of the 9 criteria, and only in 5 of the 8 schemes. The ability to secure funding and the pressure to publish are potentially strong drivers of research conduct. Consequently, the lack of strong incentives for responsible research practices in funded research may undermine efforts to improve research rigour.

We aimed to assess if Australian health and medical funders incentivise responsible research practices by sampling broadly from the 2018 Australian Competitive Grants Register. To obtain a sample of funding schemes that was feasible to assess yet sufficiently representative of Australian funding schemes, we sampled purposively from the Register, ensuring that schemes from the two major national funders (ARC, NHMRC) as well as smaller funders (e.g., Bupa Foundation) were included. Our findings may therefore generalise to the wider population of schemes funding health and medical research in Australia.

Our 9 criteria were developed from a selection of recommendations from large consensus discussions on assessing scientists, and incorporated principles of research integrity and open science [11, 12]. Some of our criteria assess reproducible research practices (criteria 1 to 5), whereas others assess broader aspects of research conduct (criteria 6 and 8) and research quality and integrity (criteria 7 and 9). Thus, our criteria assess broad aspects of research integrity and rigour that extend beyond fraud, research reproducibility and open science.

Funders incentivised some responsible research practices (e.g. minimise use of publication metrics, make study data openly available, make research materials openly available) but made no mention of others (e.g. register analysis protocols before starting data analysis, make analysis code openly available, collaborate with a statistician). What might explain these differences? Funders might regard some aspects of responsible research practices as standard practice and not see the need to state them explicitly in funding scheme instructions. Alternatively, funders might be unaware of some aspects of responsible research practices or of their value. Barriers to implementing incentives for responsible research practices in grant and fellowship schemes may be similar to barriers to adopting open science practices in research publishing. In publishing, limited adoption of open science practices could be due to the need for rapid access to data (e.g. early in the COVID-19 pandemic), pressure to publish rapidly and at high volume, potential lack of thoroughness in peer review, and strong institutional incentives for research productivity [15]. Unlike publications, grant and fellowship applications are reviewed by an expert panel but are never published (to preserve intellectual property and privacy). The limited implementation of incentives for responsible research practices by funders could be due to strong perceptions of what makes grant and fellowship applications “excellent”, such as innovation, significance, and impact. Strengthening the incentives for responsible research practices in funding scheme instructions may require further investigation and joint effort between funders and administering institutions.

Simply encouraging or recommending responsible research practices seems unlikely to substantially change researcher behaviour. For example, journal instructions to authors indicate acceptable standards for publication and are intended to improve reporting practices. However, audits of papers published before and after the introduction of journal guidelines to improve statistical reporting found no improvement in the proportion of papers reporting statistics correctly [16, 17], or defining statistics correctly in figures [17]. Indeed, a large survey of journals’ instructions to authors across the sciences showed that only low to moderate percentages of journals implement transparent reporting practices [18]. Not surprisingly, the quality of published research reports is largely inadequate [19,20,21]. If most researchers do not implement the reporting practices recommended in journal instructions, it seems unlikely that they will implement responsible research practices that are encouraged, but not required, in funding applications.

Different strategies could be used to encourage greater uptake of responsible research practices in grant and fellowship funding schemes. First, applicants could be scored and ranked against criteria that strongly incentivise responsible research practices. With competition as a strong driving force, this approach could incentivise new ways to implement these practices, wider implementation, or increased emphasis on aspects of research reproducibility. Second, aspects of responsible research practices could be implemented as criteria for eligibility, with applicants assessed at submission. Third, if applicants are successful, a proportion of funds awarded could be withheld until applicants provide evidence to show they are implementing responsible research practices. The latter two approaches would underscore that responsible research practices are standard procedures in the conduct of research, and may help normalise such research behaviours. Given the recent calls for research reproducibility and transparency [8, 17, 22], it is important that researchers are rewarded for implementing responsible research practices in funded research. This could lead to sustained improvements in the quality of health and medical research.

Some of our criteria would benefit from more nuanced interpretation. In criterion (4), we assessed if funding instructions incentivise applicants to make analysis code openly available. “Analysis code” is often taken to mean computer code; however, computer code may not be relevant in some study designs, such as qualitative research or systematic reviews. A broader interpretation of “analysis code” could cover any method or strategy used to analyse data, including spreadsheets, binary files, or typed or handwritten procedures describing how data were analysed. In criterion (6), we assessed if funding instructions discourage the use of publication metrics. Journal impact factors are often inappropriately used to assess the calibre of individual researchers [23]. The 3 funding schemes that satisfied this criterion specifically stated that impact factors should not be used. In future, this criterion could be refined to refer specifically to impact factors. In criterion (7), we assessed if funding instructions incentivised the conduct of quality research. We applied a broad interpretation of research quality and assessed if funding instructions stated at least 1 mechanism to promote research quality that was not covered by the preceding criteria. Examples of these are adhering to reporting guidelines, adhering to ethical standards, and avoiding or acknowledging biases. Thus, this criterion applies broadly to the conduct of research, not only to its reporting. In future, this criterion could be refined to include more specific mechanisms to promote research quality, as prompts to auditors. Lastly, in criterion (8), we assessed if funding instructions incentivised applicants to collaborate with a statistician.
We included this criterion because statisticians are often involved in research design [24] and as experts in the peer review process [25], yet many published studies in our fields still contain common errors arising from inappropriate statistical techniques. We wanted to assess if funders are aware of these limitations and seek to rectify them. Our audit found that no funding scheme satisfied this criterion. The appropriate methodologist for a study would depend on the study design, and methodological expertise varies widely across the sciences (e.g. qualitative research, computational modelling). In future, this criterion could be refined to apply a broader interpretation of methodological expertise.

Our criteria were developed to assess instructions from funding schemes, but they can be adapted and applied in the conduct of research. As an example, we applied the same 9 criteria, where relevant, in conducting this audit. The study protocol, with its methods and analysis plan, was publicly registered on the Open Science Framework (OSF) before data collection began. All data and research materials are openly available from the project repository or in Additional files 1 and 2 of this manuscript. There was no computer analysis code for this project; data were manually extracted and analysed as outlined in the Method. We avoided the use of publication metrics in justifying the rationale of this study or discussing our findings. We used a checklist for survey research to ensure that key information was reported. One of the study investigators is a qualified statistician (AB); had this investigator not been involved, we would have sought independent statistical advice if our study had required complex statistical analysis and the other investigators lacked the necessary methodological expertise. One aspect of other responsible research practices is the broad dissemination of research findings to maximise the benefits of research. To implement this aspect, we chose to submit this work to an open-access journal with a strong focus on research integrity and transparency. Thus, our criteria can be adapted and applied in research studies.

Conclusions

In summary, Australian grant and fellowship funding schemes incentivise some aspects of responsible research practices, but these incentives could be strengthened. A next step could involve examining how our criteria could be adapted to suit different funding contexts, the barriers to their practical application, and strategies to overcome or mitigate these barriers [26]. Funders could then require grant and fellowship applicants to implement responsible research practices. Administering institutions could be required to implement these practices in order to be eligible for funding. These strategies could provide stronger incentives for responsible research practices in funded research and enhance research rigour. As stated by the NHMRC CEO Professor Anne Kelso, “poor quality publications, data that can’t be understood or replicated won’t change the world … they won’t add to knowledge, they won’t lead to improvements in the length or quality of our lives, and they won’t solve our problems” [27].

Availability of data and materials

All data are available from the project repository at the OSF (https://osf.io/vnxu6/). The study protocol and analysis plan were registered on the OSF (https://osf.io/2jesc).

Abbreviations

- ARC: Australian Research Council
- CDF: Career Development Fellowship
- CTCS: Clinical Trials and Cohort Studies
- DART: Diabetes Australia Research Trust General Grants and Millennium Awards
- DECRA: Discovery Early Career Researcher Award
- NBCF: National Breast Cancer Foundation Investigator Initiated Research Scheme
- NHMRC: National Health and Medical Research Council
- R&D: Research and development

References

Research Australia. Funding health & medical research in Australia. 2016. Available from: https://researchaustralia.org/australian-research-facts/

Hardy MC, Carter A, Bowden N. What do postdocs need to succeed? A survey of current standing and future directions for Australian researchers. Palgrave Commun. 2016;2:1–9.

Woolston C. Uncertain prospects for postdoctoral researchers. Nature. 2020;588:181–4.

National Health and Medical Research Council. Survey of research culture in Australian NHMRC-funded institutions. Canberra: Survey findings report; 2019. p. 2020.

Wynants L, Van Calster B, Collins GS, Riley RD, Heinze G, Schuit E, et al. Prediction models for diagnosis and prognosis of covid-19: Systematic review and critical appraisal. BMJ. 2020;369:m1328.

Yu Y, Shi Q, Zheng P, Gao L, Li H, Tao P, et al. Assessment of the quality of systematic reviews on COVID-19: A comparative study of previous coronavirus outbreaks. J Med Virol. 2020;92:883–90.

Australian Research Council. Inquiry into Funding Australia’s Research (Submission 46). Canberra; 2018. Available from: https://www.aph.gov.au.

National Health and Medical Research Council. NHMRC’s Research Quality Strategy. Canberra; 2019. Available from: https://www.nhmrc.gov.au/about-us/publications/nhmrcs-research-quality-strategy.

Department of Education and Training, Australian Government. Higher Education Research Data Collection Specifications; 2019. p. 2018. [cited 2021 Feb 23]. Available from: https://www.dese.gov.au/uncategorised/resources/2019-higher-education-research-data-collection-specifications

Department of Education Skills and Employment. 2018 Australian Competitive Grants Register. 2018. Available from: https://docs.education.gov.au/node/50206

Moher D, Naudet F, Cristea IA, Miedema F, Ioannidis JPA, Goodman SN. Assessing scientists for hiring, promotion, and tenure. PLoS Biol. 2018;16(3):e2004089. https://doi.org/10.1371/journal.pbio.2004089.

Nosek BA, Alter G, Banks GC, Borsboom D, Bowman SD, Breckler SJ, et al. Promoting an open research culture. Science. 2015;348:1422–5.

NHMRC, ARC, Universities Australia. Australian code for the responsible conduct of research 2018. Canberra: Commonwealth of Australia; 2018.

Kelley K, Clark B, Brown V, Sitzia J. Good practice in the conduct and reporting of survey research. Int J Qual Health Care. 2003;15(3):261–6. https://doi.org/10.1093/intqhc/mzg031.

Besançon L, Peiffer-Smadja N, Segalas C, Jiang H, Masuzzo P, Smout C, et al. Open science saves lives: Lessons from the COVID-19 pandemic. bioRxiv. 2020. https://doi.org/10.1101/2020.08.13.249847.

Curran-Everett D, Benos DJ. Guidelines for reporting statistics in journals published by the American Physiological Society: the sequel. Adv Physiol Educ. 2007;31(4):295–8. https://doi.org/10.1152/advan.00022.2007.

Diong J, Butler AA, Gandevia SC, Héroux ME. Poor statistical reporting, inadequate data presentation and spin persist despite editorial advice. PLoS One. 2018;13:e0202121.

Malički M, Aalbersberg IJJ, Bouter L, ter Riet G. Journals’ instructions to authors: a cross-sectional study across scientific disciplines. PLoS One. 2019;14:e0222157.

Caulley L, Catalá-López F, Whelan J, Khoury M, Ferraro J, Cheng W, et al. Reporting guidelines of health research studies are frequently used inappropriately. J Clin Epidemiol. 2020;122:87–94. https://doi.org/10.1016/j.jclinepi.2020.03.006.

Glasziou P, Altman DG, Bossuyt P, Boutron I, Clarke M, Julious S, et al. Reducing waste from incomplete or unusable reports of biomedical research. Lancet. 2014;383(9913):267–76. https://doi.org/10.1016/S0140-6736(13)62228-X.

Boutron I, Ravaud P. Misrepresentation and distortion of research in biomedical literature. Proc Natl Acad Sci U S A. 2018;115(11):2613–9. https://doi.org/10.1073/pnas.1710755115.

Moynihan R, Bero L, Hill S, Johansson M, Lexchin J, MacDonald H, et al. Pathways to independence: towards producing and using trustworthy evidence. BMJ. 2019;367:l6576.

Tennant JP, Crane H, Crick T, Davila J, Enkhbayar A, Havemann J, et al. Ten hot topics around scholarly publishing. Publications. 2019;7(2):34. https://doi.org/10.3390/publications7020034.

Foley EF, Roos DE. Feedback survey on the Royal Australian and new Zealand College of Radiologists Faculty of radiation oncology trainee research requirement. J Med Imaging Radiat Oncol. 2020;64(2):279–86. https://doi.org/10.1111/1754-9485.12994.

Mansournia MA, Collins GS, Nielsen RO, Nazemipour M, Jewell NP, Altman DG, et al. A cHecklist for statistical assessment of medical papers (the CHAMP statement): explanation and elaboration. Br J Sports Med. 2021;0:bjsports-2020-103652.

Moher D, Bouter L, Kleinert S, Glasziou P, Sham MH, Barbour V, et al. The Hong Kong principles for assessing researchers: fostering research integrity. PLoS Biol. 2020;18(7):e3000737. https://doi.org/10.1371/journal.pbio.3000737.

Doherty Institute. NHMRC CEO professor Anne Kelso discusses the funding landscape. 2019. Available from: https://www.youtube.com/watch?v=0wRLws6iS3s&t=350s

Acknowledgements

Not applicable.

Funding

Cynthia Kroeger, Adrian Barnett and Lisa Bero are supported by the Australian National Health and Medical Research Council (NHMRC). The NHMRC had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Contributions

Joanna Diong, Lisa Bero, Cynthia Kroeger and Adrian Barnett conceived the idea for this project. Cynthia Kroeger and Katherine Reynolds collected the data. Cynthia Kroeger, Katherine Reynolds and Joanna Diong extracted the data. Joanna Diong wrote the paper. All authors discussed the results and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

We declare there are no competing interests.

Supplementary Information

Additional file 1.

Definitions of questions to assess the 9 criteria.

Additional file 2.

Definitions of scores.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Diong, J., Kroeger, C.M., Reynolds, K.J. et al. Strengthening the incentives for responsible research practices in Australian health and medical research funding. Res Integr Peer Rev 6, 11 (2021). https://doi.org/10.1186/s41073-021-00113-7

DOI: https://doi.org/10.1186/s41073-021-00113-7