Abstract

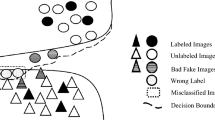

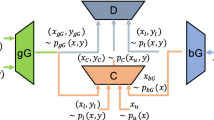

Generative adversarial networks (GANs) have recently been extended successfully to semi-supervised image classification. However, it remains a great challenge for a GAN to exploit unlabeled images to boost its classification ability when labeled images are very limited. In this paper, we propose a novel CCS-GAN model for semi-supervised image classification, which aims to improve classification ability by utilizing the cluster structure of unlabeled images and 'bad' generated images. Specifically, it employs a new cluster consistency loss that constrains the classifier to keep local discriminative consistency within each cluster of unlabeled images, thereby providing implicit supervisory information to boost the classifier. Meanwhile, it adopts an enhanced feature matching approach that encourages the generator to produce adversarial images from the low-density regions of the real distribution, which enhances the discriminative ability of the classifier during adversarial training and suppresses the mode collapse problem. Extensive experiments on four benchmark datasets show that the proposed CCS-GAN achieves very competitive performance in semi-supervised image classification compared with several state-of-the-art competitors.
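As a rough illustration of the two ingredients named in the abstract, the following sketch computes (a) the plain feature matching distance between the mean features of a real batch and a generated batch, and (b) a hypothetical cluster consistency penalty that measures how far each unlabeled sample's predicted class distribution lies from the average prediction of its cluster. The abstract does not state the exact CCS-GAN formulas, so the function names, the squared-distance form, and the toy arrays are all assumptions for illustration, not the authors' definitions. In particular, the "enhanced" feature matching variant is not reproduced here.

```python
import numpy as np

def feature_matching_loss(real_feats, fake_feats):
    """Squared L2 distance between the mean feature vectors of two batches.

    This is the plain feature matching form, NOT the enhanced variant
    proposed in the paper (whose details the abstract does not give).
    """
    diff = real_feats.mean(axis=0) - fake_feats.mean(axis=0)
    return float(np.sum(diff ** 2))

def cluster_consistency_loss(probs, cluster_ids):
    """Hypothetical penalty (an assumption, not the paper's loss):
    mean squared deviation of each sample's predicted class
    probabilities from the average prediction of its cluster."""
    total = 0.0
    for c in np.unique(cluster_ids):
        members = probs[cluster_ids == c]      # predictions in cluster c
        centroid = members.mean(axis=0)        # cluster-average prediction
        total += np.sum((members - centroid) ** 2)
    return float(total / len(probs))

# Toy data: four unlabeled samples, two clusters, two classes.
clusters = np.array([0, 0, 1, 1])
probs_consistent = np.array([[0.9, 0.1], [0.9, 0.1],
                             [0.2, 0.8], [0.2, 0.8]])
probs_inconsistent = np.array([[0.9, 0.1], [0.1, 0.9],
                               [0.2, 0.8], [0.8, 0.2]])

loss_consistent = cluster_consistency_loss(probs_consistent, clusters)
loss_inconsistent = cluster_consistency_loss(probs_inconsistent, clusters)
fm = feature_matching_loss(np.ones((4, 3)), np.zeros((4, 3)))
```

In this toy setting the penalty vanishes when all samples in a cluster receive the same prediction and grows when predictions within a cluster disagree, which is the qualitative behavior the abstract attributes to the cluster consistency loss.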

Notes

Available: https://tiny-imagenet.herokuapp.com.

Acknowledgements

This work is partially supported by the Research Planning Fund of Humanities and Social Sciences of the Ministry of Education, China (No. 16XJAZH002), the Fundamental Research Funds for the Central Universities, China (No. JBK2102049), the China Scholarship Council (CSC), and the Faculty Sabbatical Leave Fund from the University of Central Arkansas, USA.

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interests.

About this article

Cite this article

Wang, L., Sun, Y. & Wang, Z. CCS-GAN: a semi-supervised generative adversarial network for image classification. Vis Comput 38, 2009–2021 (2022). https://doi.org/10.1007/s00371-021-02262-8

DOI: https://doi.org/10.1007/s00371-021-02262-8