Abstract

Mathematics education for students with and at-risk for a disability is important, and high-quality, research-supported practices should be used for online teaching, whether online delivery is intentional or the result of a global pandemic. This single-case multiple probe design study, replicated across participants, explored the online delivery of an intervention package to three upper elementary students with a disability or at-risk who were learning to find equivalent fractions. The package consisted of the virtual-abstract instructional sequence, taught via modified explicit instruction, and the system of least prompts. Researchers determined a functional relation existed between the intervention package and student accuracy. Researchers also found students became independent and maintained accuracy on probes not preceded by instruction, either directly following the intervention or with the support of boost sessions. The findings have implications for providing mathematics interventions online to students with disabilities or at-risk. Further, the study lends support to virtual manipulative-based instructional sequences, as the setting, population, and implementation of explicit instruction in this study differed from those of previous research.


Introduction

For students with and without disabilities, teachers traditionally taught and students traditionally learned face-to-face, meaning in the same physical space. However, in March 2020 the global COVID-19 pandemic drastically altered the medium of education for students with and without disabilities. As a result of the pandemic, schools ended face-to-face instruction for the remainder of the 2019–2020 academic year and delivered emergency remote online instruction. In Fall 2020, schools delivered instruction via multiple options, including fully face-to-face, hybrid (part face-to-face and part online), and fully online (McElrath, 2020).

While the global COVID-19 pandemic shone a light on online teaching and learning for students with disabilities and those at-risk, both groups of students were taught online before the public health crisis (Smith et al., 2016a, 2016b; Vasquez III & Straub, 2012). Despite these pre-pandemic opportunities for online learning, research and guidance on teaching students with disabilities and students at-risk online were limited (Basham et al., 2016). This lack of research and attention to online education in general extends to mathematics education (Vasquez III & Straub, 2012).

Although limited research exists on mathematics instruction for students with disabilities online, some researchers have explored teaching mathematics online to students in general, including at the elementary and secondary levels. For example, Heppen et al. (2017) explored online and face-to-face algebra credit recovery courses for at-risk students. They found students in the online courses felt the content was more difficult, were less likely to receive credit, and were less likely to demonstrate achievement in algebra. For entering sixth-grade students, Osborne and Shaw (2020) found an online intervention supporting mathematics and science was cost-effective for schools. The intervention also helped participating students avoid summer learning loss, with students who engaged in more lessons achieving higher performance. Finally, to address the reduced discourse in online mathematics instruction relative to face-to-face instruction, Choi and Walters (2018) implemented synchronous discourse sessions within traditionally asynchronous elementary mathematics classes. The authors found students who experienced the synchronous discourse achieved at higher levels and were more likely to be deemed proficient on state standardized assessments, suggesting the importance of talking about mathematics.

Mathematics Education

Although the field lacks solid research regarding how to teach mathematics online to students with disabilities or those at-risk, researchers have suggested technology is beneficial in teaching and supporting students with disabilities in mathematics (Kiru et al., 2018; Spooner et al., 2019) and have identified technology-based practices as evidence-based or research-based practices in mathematics for students with disabilities (e.g., Bouck et al., 2018b; Peltier et al., 2020; Spooner et al., 2019). While technology to support online teaching was not explicitly examined, Kiru et al. (2018) suggested technology-mediated interventions were beneficial to students with learning disabilities and students at-risk. Studies involving effective technology-mediated interventions included a range of ages and mathematical skills and concepts. Kiru et al. further found that, despite the efficacy and research base for explicit instruction in mathematics for students with disabilities and those at-risk, few of the studies implementing technology-mediated interventions involved this instructional approach. For students with more extensive support needs, Spooner et al. (2019) determined both technology-aided instruction and explicit instruction were evidence-based practices for teaching mathematics.

Within technology-specific mathematics interventions for students with disabilities or those at-risk, researchers and practitioners have both attended to manipulatives and manipulative-based instructional sequences (see Bouck & Park, 2018; Bouck et al., 2018b). Historically, research on and use of manipulatives for all students have focused on concrete manipulatives, that is, physical objects one can manipulate to help gain conceptual understanding of mathematical ideas and skills (Bouck & Flanagan, 2010). Use of concrete manipulatives on their own, as well as within a graduated sequence of instruction (the concrete-representational-abstract [CRA] instructional sequence), is considered a best, research-based, or even evidence-based practice (Bouck & Park, 2018; Bouck et al., 2018b; Maccini & Gagnon, 2000; Peltier et al., 2020).

The CRA instructional sequence is considered an evidence-based instructional practice for students with learning disabilities (Agrawal & Morin, 2016; Bouck et al., 2018b), although its use with a variety of students with disabilities and at-risk for a disability dates back decades (Underhill, 1977). Researchers examined and established the efficacy of the CRA instructional sequence for students at-risk (e.g., Flores, 2010) as well as students with autism (e.g., Stroizer et al., 2015) and students with intellectual disability (Bouck et al., 2017b). Despite the solid research regarding the CRA, some have questioned whether concrete manipulatives are the most appropriate tool for all students and/or whether educators should take advantage of advances in technology to use virtual manipulatives in place of concrete manipulatives within the graduated sequence of instruction (Bouck et al., 2017c; Bouck & Sprick, 2019). As such, researchers have examined and cultivated the research base regarding the virtual-representational-abstract (VRA) instructional sequence and variations of this approach (e.g., virtual-abstract [VA] instructional sequence and virtual-representational [VR] instructional sequence; e.g., Bouck et al., 2017c, 2019).

Virtual Manipulative-Based Instructional Sequences

As noted, over the past few years researchers have increasingly examined virtual manipulative-based instructional sequences, such as the VRA, VA, and VR (e.g., Bouck et al., 2017c, 2019). Although no evidence-based synthesis exists regarding virtual manipulative-based instructional sequences, individual studies including students with disabilities suggest the efficacy and efficiency of these approaches across a variety of mathematical areas such as fractions (Bouck et al., 2020b), algebra (Bouck et al., 2019), and basic operations (Bouck et al., 2018a). In deciding which virtual manipulative-based instructional sequence to implement, Bouck and Long (2021) suggested educators consider the mathematical focus. Similarly, Bouck et al. (2017c) suggested some mathematical skills and concepts lend themselves better than others to the inclusion of a representational phase. For example, Bouck et al. (2017a) found fractions a challenging mathematical domain for drawing pictorial representations given the need for precision.

In the existing studies, which predominantly involved middle school students with intellectual disability, autism, or learning disabilities, researchers determined students acquired targeted math skills when taught via the VRA, VA, or VR instructional sequence (e.g., Bouck et al., 2017c, 2019). However, in some studies, students struggled to maintain these skills (Bouck et al., 2018a, 2020a). As such, researchers created intervention packages by adding components such as fading, overlearning, or the system of least prompts to the virtual manipulative-based instructional sequences (Bouck et al., 2020c; Park et al., 2020a, 2020b). In other studies, researchers implemented abstract boost sessions, in which they delivered an additional instructional session in the abstract (i.e., numerical strategies) phase before re-evaluating maintenance (e.g., Bouck et al., 2020b). Researchers found positive results with regard to students maintaining skills with intervention packages and boost sessions.

Regardless of the specific virtual manipulative-based instructional sequence (e.g., VRA, VA), one constant across the research is the use of explicit instruction (Bouck & Sprick, 2019). As previously noted, explicit instruction is an evidence-based practice for teaching mathematics to students with disabilities (Gersten et al., 2009; National Center on Intensive Interventions, 2016; Riccomini et al., 2017; Spooner et al., 2019). The CRA and virtual-manipulative-based instructional sequences generally use explicit instruction as the means of teaching students how to approach understanding and solving problems. As operationalized, explicit instruction in mathematics to deliver manipulative-based instructional sequences generally involves a modeling phase, in which a researcher or educator demonstrates how to solve with a verbal narration; a guided phase, in which a student solves but a researcher or educator provides feedback, prompts, and cues as needed; and an independent phase, in which a student solves problems themselves without support (Agrawal & Morin, 2016; Doabler & Fien, 2013).

Current Study

The research base regarding virtual manipulative-based instructional sequences is growing. However, to date, the research has involved face-to-face instruction, secondary students, and traditional explicit instruction. In this study, researchers expanded the literature by exploring the delivery of a virtual manipulative-based instructional sequence, the VA, within an online environment, with elementary students with disabilities or at-risk (i.e., struggling), and with explicit instruction modified to fit within the constraints of online teaching. Specifically, researchers explored teaching students to find equivalent fractions via the VA instructional sequence in an online environment. They sought to address the following research questions: (a) Does a functional relation exist between the VA instructional sequence delivered online in conjunction with the system of least prompts (SLP) and student accuracy in finding equivalent fractions? (b) Do students become independent in solving mathematical problems when taught online using the VA instructional sequence and the SLP? (c) Do students maintain their accuracy in finding equivalent fractions when instruction does not precede assessment? (d) What are the perceptions of parents and students towards learning math online and learning it via the VA sequence?

Method

Participants

This study involved three students who were identified with a disability and receiving special education services or deemed at-risk and receiving services as part of a response to intervention (RtI) model. Each student’s parents contacted the researchers about participating in response to social media recruitment; each parent identified their child as struggling in mathematics and as likely to benefit from a one-on-one mathematics intervention. Specifically, the recruitment ad indicated researchers were targeting students with disabilities or those who struggle in mathematics. When interested parents reached out, researchers asked if their child received special education services or RtI services, or demonstrated repeated struggles with grade-level mathematics. Further, the researchers screened students via the KeyMath-3 assessment and examined discrepancies between current grade level and grade-level equivalency in different mathematical areas. The KeyMath-3 (Connolly, 2007) is a diagnostic mathematics assessment that provides educators with information about students’ performance on mathematical concepts and skills. The KeyMath-3 involves 10 subtests; however, examiners can choose to administer only select subtests. For the purposes of the study, researchers administered the numeration subtest and then the subtests affiliated with operations: mental computation and estimation, addition and subtraction, and multiplication and division (Pearson, 2011).

The inclusion criteria for participants were: (a) parent-identified struggle in mathematics, (b) struggle in mathematics confirmed by the KeyMath-3 assessment administered by researchers (i.e., below grade level), (c) struggle to find equivalent fractions, as documented on the KeyMath-3 assessment and confirmed across at least three baseline sessions, (d) parent identification of the student as having a disability and/or being at-risk, and (e) no prior exposure to virtual manipulatives taught as part of a virtual manipulative graduated instructional sequence. Parental consent and student assent were obtained prior to starting the study.

Kristy

Kristy was a 10-year-old white female student in the fifth grade at the time of the study. At the start of the study, Kristy was receiving all of her education virtually; however, about three-fourths of the way through data collection, her school returned to face-to-face instruction for a few days per week. Her mother reported that Kristy struggled in math and received additional support from her teachers at school; however, she did not have a diagnosis of a disability. According to the researcher-administered KeyMath-3 assessment, Kristy’s raw score was 20 for numeration (grade equivalency of 4.1). Her raw score for mental computation and estimation was 14 (grade equivalency of 3.2), for addition and subtraction 15 (grade equivalency of 3.1), and for multiplication and division 7 (grade equivalency of 3.8). For total operations, Kristy’s raw score was 36, with a grade equivalency of 3.4. From further analysis of her incorrect KeyMath-3 answers as well as baseline probing, researchers determined Kristy struggled with fractions: she could identify fractions but could not find equivalent fractions or perform operations involving fractions that required equivalent fractions, either on the KeyMath-3 assessment or in the three baseline sessions.

Claudia

Claudia was a 10-year-old, white, fifth-grade girl identified with pervasive developmental disorder not otherwise specified (PDD-NOS). She was attending school virtually and received special education services and support. Claudia’s teacher had administered the KeyMath-3 within the last year, and her parents did not want it re-administered but provided the evaluation report. On the numeration subtest, Claudia’s raw score was 10, which had a grade equivalency of 1.4. On the mental computation and estimation and the addition and subtraction subtests, her scores placed her grade equivalency at 1.7 and 1.8, respectively. Her multiplication and division score had a grade equivalency of 4.2. Her total operations score was 22, with a grade equivalency of 2.4. Per her self-report, Claudia liked aspects of math, such as multiplication, but did not enjoy working with fractions. Based on researcher probing, Claudia could identify fractions but could not find equivalent fractions or successfully perform operations involving fractions that required equivalent fractions, either on the KeyMath-3 assessment or in her four baseline sessions.

Stacey

Stacey was an 11-year-old white female in the sixth grade. Stacey received all of her school instruction virtually during the study. Stacey was not formally identified as having a disability but routinely struggled in mathematics, and her mother was concerned about her mathematics performance as well as her confidence. By her own admission, Stacey did not enjoy mathematics. According to the KeyMath-3 assessment administered by researchers, Stacey’s raw numeration score was 24 (grade equivalency of 5.0). For mental computation and estimation, her raw score was 17 (grade equivalency of 4.2), for addition and subtraction 17 (grade equivalency of 3.5), and for multiplication and division 9 (grade equivalency of 4.3). For total operations, Stacey’s raw score was 43, with a grade equivalency of 4.0. Analysis of her results and further probing showed that Stacey struggled with fractions: she could identify fractions but could not find equivalent fractions or perform operations involving fractions that required equivalent fractions on the KeyMath-3 assessment. During baseline, she struggled to find equivalent fractions that did not involve \(\frac{1}{2}\).

Setting

All parts of the research study occurred online using Zoom. All three participants, as well as the two researchers, connected from their homes. At the start of the study, the researchers provided the parents of each participant an individualized, secure Zoom link to use throughout the study on the agreed-upon days and times. In each session, researchers worked one-on-one with students, except when interobserver agreement (IOA) data were collected. For the most part, parents were not visible on screen but were available as needed. Both participants and researchers kept their cameras on for the duration of each session and shared screens as appropriate. Each session lasted no more than 30 min.

Materials

Researchers used computers, web-based virtual manipulative and whiteboard apps, learning sheets or probes, and Zoom. As noted, each session was delivered via Zoom, so each student completed their sessions on a computer or similar device. Each Zoom link was unique and involved a passcode and waiting room for participant security. Both students and researchers could share screens during the Zoom session. Researchers provided any needed links, such as those for the virtual manipulative or virtual whiteboard, through the Zoom chat feature.

Researchers used free apps from the Math Learning Center. For all three girls, the researchers used the Math Learning Center fraction pieces virtual manipulative (see Fig. 1). The Math Learning Center fraction manipulative presents a white screen that can be written on with virtual markers. Students can select fraction strips or fraction circles; in this study, students always used a fraction strip. Students could set the denominator (i.e., the number of pieces the strip was divided into) and then color the sections to represent the numerator. For all three participants, researchers also used the Math Learning Center whiteboard app during the abstract sessions. The researchers opted to use the Math Learning Center apps because they were free, addressed the mathematical area targeted for all students, and did not require a login.

The researchers created learning sheets (used in intervention) and probes (used in baseline and maintenance), each of which had been used in prior research projects. Each learning sheet was unique, even if a few problems were repeated. For the equivalent fraction problems, the denominators were halves, thirds, fourths, fifths, sixths, eighths, tenths, and twelfths (e.g., \(\frac{1}{3} = \frac{}{12}\) or \(\frac{4}{10} = \frac{}{5}\)). Probes consisted of five problems. Students were not given the probes; instead, the problems were presented orally and, if needed, shown on the screen (i.e., typed in advance and shared via screen share). The learning sheets consisted of three sections: one problem for modeling, one problem for guided practice, and five problems for independent practice. Researchers modeled one problem, then provided feedback and prompts as students solved one problem, and finally had the student complete five problems.

Experimental Design

Researchers used a single-case multiple probe design, replicated across participants, to examine the relationship between the intervention package (the VA instructional sequence and the SLP delivered online) and student accuracy and independence in solving problems targeting each student’s area of mathematical struggle. With the multiple probe across participants design, all students began baseline simultaneously and completed a minimum of three baseline sessions. The first student, Kristy, entered intervention when her baseline was stable with a zero-celerating or decelerating trend after at least three baseline sessions. When Kristy had completed two intervention sessions in the virtual phase with 100% accuracy and at least 80% independence, the second student, Claudia, completed at least one additional baseline session and then entered intervention; Claudia’s baseline also had to be stable with a zero-celerating or decelerating trend for her to start intervention. Similarly, when Claudia achieved two intervention sessions in the virtual phase with 100% accuracy and at least 80% independence, the third student, Stacey, completed another baseline session. If Stacey’s baseline data were stable with a zero-celerating or decelerating trend, she began intervention. Students continued in intervention until completing a minimum of three sessions with virtual manipulatives and three sessions with numerical strategies (i.e., abstract phase), each with 100% accuracy and at least 80% independence.

Independent and Dependent Variables

The independent variable in the study was the intervention package consisting of the virtual-abstract (VA) instructional sequence and the SLP delivered via online instruction. Two dependent variables were evaluated: (a) student accuracy in solving the problems, represented as the percentage of the five problems solved correctly, and (b) student independence in completing the steps of the task analysis for finding equivalent fractions, represented as a percentage (see Fig. 2 for the task analyses for finding equivalent fractions). Specifically, to determine independence, researchers counted the number of task analysis steps in the respective phase for which no prompt was given, following the SLP. For virtual sessions, each problem had eight steps, for a total of 40 per session; independence was the number of steps with no prompt divided by 40. For abstract sessions, each problem had four task analysis steps, for a total of 20 per session; independence was calculated the same way, dividing the number of unprompted steps by 20. Researchers provided prompts following the SLP hierarchy when a student failed to initiate a step within a 10-s wait time or completed a step incorrectly. For both accuracy and independence, researchers used data sheets with the task analysis steps specified for each of the five problems during the independent phase as well as the one problem for guided practice (data collection sheet available upon request from the first author).
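The accuracy and independence percentages described above reduce to simple ratios over five problems and over 40 (virtual) or 20 (abstract) task analysis steps. The following Python sketch is illustrative only; the function names are hypothetical, and it assumes a step counts once as prompted no matter how many prompts it received.

```python
# Task analysis steps per problem in each phase, as reported in the text.
STEPS_PER_PROBLEM = {"virtual": 8, "abstract": 4}
PROBLEMS_PER_SESSION = 5


def independence_percent(phase: str, prompted_steps: int) -> float:
    """Percentage of task analysis steps completed with no prompt.

    Assumption: `prompted_steps` counts steps that received at least one
    prompt, so a step is never counted twice.
    """
    total = STEPS_PER_PROBLEM[phase] * PROBLEMS_PER_SESSION  # 40 or 20
    if not 0 <= prompted_steps <= total:
        raise ValueError("prompted_steps must be between 0 and the session total")
    return 100 * (total - prompted_steps) / total


def accuracy_percent(correct_problems: int) -> float:
    """Percentage of the five independent problems answered correctly."""
    return 100 * correct_problems / PROBLEMS_PER_SESSION
```

For example, a virtual session in which 6 of 40 steps needed a prompt gives 85% independence, which would meet the 80% criterion.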

Procedures

Researchers worked one-on-one with students, with each student completing at least three baseline sessions, six intervention sessions, and two maintenance sessions. If students did not maintain at least 80% accuracy, researchers provided boost sessions. Researchers met with students once or twice per week for 30-min online sessions. The researchers were a faculty member whose research focuses on mathematical interventions for students with disabilities and response-to-intervention in mathematics at the secondary level and a doctoral student trained by the first author to deliver mathematical interventions.

Baseline

Kristy completed three baseline sessions, Claudia four, and Stacey five, consistent with a multiple probe across participants design. During baseline sessions, each student answered a probe consisting of five equivalent fraction problems. The researcher presented the problems orally, and students wrote them down on a virtual or physical whiteboard. Researchers repeated problems as needed, and students repeated the problems back to confirm understanding. Students solved the problems on a physical or virtual whiteboard. To transition from baseline to intervention, each student needed to have completed at least three baseline sessions with a stable and zero-celerating or decelerating baseline trend, with all baseline accuracy below 40%. For the second and third students, the previous student also had to have completed two intervention sessions in the virtual phase with 100% accuracy and at least 80% independence.
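The baseline-to-intervention rule above combines several conditions. A minimal Python sketch, assuming stability and trend have already been judged (for example, with the procedures in the Data Analysis section) and that the previous student’s two qualifying virtual sessions need not be consecutive; the function names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Session = Tuple[float, float]  # (accuracy %, independence %)


def meets_criterion(session: Session) -> bool:
    """100% accuracy and at least 80% independence."""
    accuracy, independence = session
    return accuracy == 100 and independence >= 80


def ready_for_intervention(baseline_accuracy: Sequence[float],
                           stable: bool,
                           trend: str,
                           previous_virtual_sessions: Optional[Sequence[Session]] = None) -> bool:
    """Apply the transition rule described in the text.

    `previous_virtual_sessions` is None for the first student; for later
    students it lists the previous student's virtual-phase sessions.
    """
    if len(baseline_accuracy) < 3:
        return False  # at least three baseline sessions
    if any(score >= 40 for score in baseline_accuracy):
        return False  # all baseline accuracy below 40%
    if not stable or trend not in ("zero-celerating", "decelerating"):
        return False  # stable, non-improving baseline
    if previous_virtual_sessions is not None:
        if sum(meets_criterion(s) for s in previous_virtual_sessions) < 2:
            return False  # previous student has not yet met criterion twice
    return True
```

Usage: `ready_for_intervention([0, 20, 0], True, "zero-celerating")` returns `True` for a first student with a flat, low baseline.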

Intervention

The intervention consisted of the intervention package (the VA instructional sequence and the SLP) presented to students via online instruction. In the VA instructional sequence, researchers taught students to understand and solve equivalent fraction problems first with virtual manipulatives (e.g., fraction strips) and then with numerical strategies. The VA is a graduated sequence, meaning students completed at least three sessions using virtual manipulatives to solve mathematics problems and then at least three sessions using numerical strategies (i.e., abstract phase) to solve problems. To transition between phases, students needed to solve problems with 100% accuracy and at least 80% independence for three sessions; any session in which a student achieved less than 100% accuracy or less than 80% independence was repeated.
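The phase-transition rule can be expressed as counting qualifying sessions within the current phase, since a session below criterion is repeated rather than counted. A minimal Python sketch, assuming qualifying sessions need not be consecutive; the names are hypothetical.

```python
from typing import Sequence, Tuple

PHASES = ["virtual", "abstract"]  # the VA graduated sequence


def next_phase(current_phase: str,
               sessions: Sequence[Tuple[float, float]],
               required: int = 3) -> str:
    """Return the phase for the next session.

    `sessions` holds (accuracy %, independence %) for every session in the
    current phase. Sessions below criterion do not count toward the three
    needed, which mirrors repeating them.
    """
    qualifying = sum(acc == 100 and ind >= 80 for acc, ind in sessions)
    if qualifying < required:
        return current_phase  # stay (repeat) in the same phase
    idx = PHASES.index(current_phase)
    return PHASES[idx + 1] if idx + 1 < len(PHASES) else "maintenance"
```

A session with 80% accuracy does not count, so a student with sessions of (100, 85), (80, 90), and (100, 100) would remain in the virtual phase.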

In both the virtual and abstract phases, researchers modeled one problem and then provided feedback, prompts, and cues to students for one problem, before advancing to five problems in the independent phase. Modeling and guiding only one problem each represents a modification of standard explicit instruction in mathematics (Agrawal & Morin, 2016; Doabler & Fien, 2013), which the authors made to stay within a targeted 30-min online session. The modeling portion, while involving fewer problems, was similar to traditional explicit instruction: the researcher demonstrated on the screen how to solve the problem with a virtual manipulative or numerical strategies while providing a think-aloud (i.e., verbal narration of the steps and strategies being used; see Fig. 1). For the guided portion, the researcher’s prompts and feedback included remarks such as “what do you do first?,” “did you color in the correct number of pieces of the fraction?,” “good work; you solved it correctly by following the steps,” or “let me show you another problem, I think we have a misunderstanding.” If a student was not at least 80% independent and 100% accurate on the guided problem, the researcher ended the session. The next session began with the researcher modeling and guiding the student through a problem. Sessions in which students did not advance to the independent phase were noted in data collection.

The SLP used throughout the virtual and abstract intervention phases included three levels: indirect verbal prompt (e.g., “what do you do next?”), direct verbal prompt (e.g., “find the relationship between the two denominators; can you multiply or divide the first denominator by a factor to get the second denominator?”), and modeling (i.e., reshowing the problem). At each step of the task analysis (refer to Fig. 2), researchers provided a 10-s wait time before implementing the SLP if students did not initiate; researchers also implemented the SLP whenever students completed a step incorrectly, for every step up to the final step of giving the answer.
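The least-to-most logic of the SLP, and how it feeds the independence count, can be sketched as follows. This is a simplified, hypothetical Python model: it records whether each step was completed correctly with no prompt and after each successive prompt, and it does not model the 10-s timing or the final-answer exception.

```python
from typing import Sequence

# Least-to-most prompt hierarchy described in the text.
PROMPT_HIERARCHY = ["indirect verbal", "direct verbal", "model"]


def prompt_level_needed(responses: Sequence[bool]) -> str:
    """Return 'independent' or the least intrusive prompt that sufficed.

    responses[0]: step completed correctly with no prompt (initiated within
    the wait time); responses[i]: step completed correctly after the i-th
    prompt in the hierarchy.
    """
    if responses and responses[0]:
        return "independent"
    for i, prompt in enumerate(PROMPT_HIERARCHY, start=1):
        if i < len(responses) and responses[i]:
            return prompt
    # Modeling is the most intrusive level; treat the step as completed after it.
    return PROMPT_HIERARCHY[-1]


def unprompted_steps(session: Sequence[Sequence[bool]]) -> int:
    """Count steps needing no prompt (the numerator of the independence score)."""
    return sum(prompt_level_needed(step) == "independent" for step in session)
```

Escalating only after the less intrusive prompt fails is what distinguishes the system of least prompts from most-to-least prompting.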

Virtual

In the virtual phase, researchers taught students to solve equivalent fraction problems via the free fraction pieces virtual manipulative from the Math Learning Center. In solving equivalent fractions (e.g., \(\frac{2}{3}\) = \(\frac{}{12}\)), the researcher began by activating background knowledge of fraction vocabulary, such as numerator and denominator, as well as what equivalent means. The researcher then focused on representing the fractions with the virtual fraction pieces. This started by placing a fraction strip representing one whole and then, directly underneath and aligned with it, a strip divided into equal sections to represent the first fraction (e.g., thirds). The researcher then shaded the number of thirds represented (e.g., two). Next, the researcher created a fraction strip to represent the second fraction (e.g., twelfths). The researcher colored in twelfths until they aligned with the shaded \(\frac{2}{3}\), which took eight. The researcher reinforced the visual strategy by discussing the numerical strategy, such as identifying the relationship between 3 and 12 (i.e., multiplying 3 by 4 gives 12) and explaining that whatever one does to the denominator (e.g., multiplies by 4), one must also do to the numerator, because equivalent fractions have the same value. Two times four equals eight, so \(\frac{2}{3}\) = \(\frac{8}{12}\).
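The fraction-strip procedure above amounts to shading equal pieces one at a time until their total length matches the shaded part of the first strip. A hypothetical Python sketch using exact rational arithmetic; it models the idea of aligning strips, not the Math Learning Center app itself.

```python
from fractions import Fraction
from typing import Optional


def shade_to_align(numerator: int, denominator: int,
                   new_denominator: int) -> Optional[int]:
    """Shade pieces of a strip cut into `new_denominator` equal parts, one at
    a time, until the shaded length reaches numerator/denominator.

    Returns the number of shaded pieces, or None if the edges never line up
    exactly (no equivalent fraction with that denominator).
    """
    target = Fraction(numerator, denominator)  # shaded part of the first strip
    piece = Fraction(1, new_denominator)       # length of one piece on the second strip
    shaded = 0
    while shaded * piece < target:
        shaded += 1                            # color in one more piece
    return shaded if shaded * piece == target else None
```

For the example in the text, `shade_to_align(2, 3, 12)` returns 8, matching \(\frac{2}{3} = \frac{8}{12}\); halves cannot be shown in thirds, so `shade_to_align(1, 2, 3)` returns `None`.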

Abstract

In the abstract phase, researchers taught students to solve equivalent fraction problems via numerical strategies, and students wrote their answers on the free whiteboard app from the Math Learning Center or on a physical whiteboard, per student preference. In solving equivalent fractions (e.g., \(\frac{2}{3}\) = \(\frac{}{6}\)), the researcher similarly began by refreshing students’ background knowledge of fraction vocabulary, such as numerator and denominator, as well as the concept of equivalence. The researcher presented the numerical strategy, such as identifying the relationship between 3 and 6 (i.e., multiplying 3 by 2 gives 6, including writing the 3s multiplication facts [3 \(\times\) 1 = 3; 3 \(\times\) 2 = 6]), and then emphasized that, based on equivalence, whatever one does to the denominator (e.g., multiplies by 2), one must also do to the numerator. In this case, two times two equals four, so \(\frac{2}{3}\) = \(\frac{4}{6}\).
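The numerical strategy can be sketched in Python by walking the multiplication facts of the smaller denominator until the product reaches the larger one, then applying the same factor to the numerator. The function names are hypothetical, and handling the reducing case (e.g., \(\frac{4}{10} = \frac{}{5}\)) by division is an assumption based on the problem types listed in Materials.

```python
from typing import Optional


def find_factor_by_multiples(small: int, large: int) -> Optional[int]:
    """Walk small x 1, small x 2, ... until the product reaches `large`.

    Returns the factor if a product lands exactly on `large`, else None.
    Assumes positive denominators.
    """
    factor = 1
    while small * factor < large:
        factor += 1
    return factor if small * factor == large else None


def equivalent_numerator(numerator: int, denominator: int,
                         new_denominator: int) -> Optional[int]:
    """Whatever is done to the denominator is done to the numerator."""
    if new_denominator >= denominator:
        factor = find_factor_by_multiples(denominator, new_denominator)
        return None if factor is None else numerator * factor
    # Reducing case (e.g., 4/10 = ?/5): divide both parts by the same factor.
    factor = find_factor_by_multiples(new_denominator, denominator)
    if factor is None or numerator % factor != 0:
        return None
    return numerator // factor
```

For the example in the text, the 3s facts reach 6 at 3 \(\times\) 2, so `equivalent_numerator(2, 3, 6)` returns 4.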

Maintenance

To assess maintenance, researchers administered probes one week apart, starting one week after the last intervention session. The maintenance probes were similar to baseline probes: students completed five equivalent fraction problems without preceding instruction, and virtual manipulatives were not made available during the session. If students did not maintain at least 80% accuracy during maintenance, researchers provided an abstract boost session. In each abstract boost session, researchers provided another session of explicit instruction: modeling one problem, guiding students through solving one problem, and then having students solve five problems. After each abstract boost session, students completed two additional maintenance probes. If students were 80–100% accurate on both maintenance probes, the study was discontinued; if not, researchers provided another boost session.
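The maintenance and boost cycle can be sketched as a simple decision rule. This hypothetical Python function assumes the decision is made once two probes have been given since the last intervention or boost session; the text does not state whether a single low probe triggers a boost immediately.

```python
from typing import Sequence

MASTERY = 80  # percent accuracy required on each maintenance probe


def next_maintenance_step(probes_since_instruction: Sequence[float]) -> str:
    """Decide the next step from the accuracy (%) of maintenance probes given
    since the last intervention or boost session."""
    if len(probes_since_instruction) < 2:
        return "probe"        # give another probe one week later
    if all(score >= MASTERY for score in probes_since_instruction[:2]):
        return "discontinue"  # both probes at 80-100%: maintenance met
    return "boost"            # deliver another abstract boost session
```

Under this reading, a student scoring 100% and then 60% would receive a boost session followed by two new probes.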

Inter-Observer Agreement and Procedural Fidelity

To assess interobserver agreement (IOA), two researchers attended at least 25% of sessions in each intervention phase. The second researcher simultaneously collected data on accuracy and independence. Researchers calculated IOA separately for accuracy and for independence by dividing the number of agreements by the number of agreements plus disagreements and multiplying by 100. IOA was 100% for accuracy for each student during baseline and maintenance. During intervention, IOA for all three students was 100% for both accuracy and independence in all phases.
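The IOA formula above is a point-by-point agreement ratio. A minimal Python sketch, assuming each observer’s record is a list of item scores (problems for accuracy, task analysis steps for independence) in the same order; the function name is hypothetical.

```python
from typing import Hashable, Sequence


def ioa_percent(observer_a: Sequence[Hashable],
                observer_b: Sequence[Hashable]) -> float:
    """Agreements / (agreements + disagreements) x 100, item by item."""
    if len(observer_a) != len(observer_b) or not observer_a:
        raise ValueError("both observers must score the same, non-empty set of items")
    agreements = sum(a == b for a, b in zip(observer_a, observer_b))
    # Every scored item is either an agreement or a disagreement,
    # so the denominator is simply the number of items.
    return 100 * agreements / len(observer_a)
```

If two observers disagreed on one of four scored items, IOA would be 75%.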

To evaluate procedural fidelity, researchers used a checklist: (a) researchers gave the student the appropriate materials for the phase or condition, (b) researchers implemented modified explicit instruction (i.e., modeling one problem and guiding one problem), (c) students did not progress, and the modeling and guided practice were repeated, if students were not 100% accurate or at least 80% independent during the guided portion, and (d) researchers collected accuracy and independence data via a task analysis data collection sheet. Researchers assessed procedural fidelity during the IOA sessions; procedural fidelity was 100% for all phases and conditions for all students.
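The progression criterion in the checklist (100% accuracy and at least 80% independence) and a fidelity percentage can be sketched as follows. The function and checklist key names are hypothetical, and scoring fidelity as the percentage of checklist items marked as implemented is our assumption about how the 100% figure was derived.

```python
ACCURACY_CRITERION = 100     # percent correct required to progress
INDEPENDENCE_CRITERION = 80  # minimum percent independence required to progress


def may_progress(accuracy: float, independence: float) -> bool:
    """True if both criteria are met; otherwise modeling and guided practice repeat."""
    return accuracy >= ACCURACY_CRITERION and independence >= INDEPENDENCE_CRITERION


def fidelity_percent(checklist: dict[str, bool]) -> float:
    """Percentage of checklist items the observer marked as implemented."""
    if not checklist:
        raise ValueError("empty checklist")
    return 100 * sum(checklist.values()) / len(checklist)


# Hypothetical session record for the four checklist items (a) to (d):
session = {
    "materials_for_phase": True,
    "modeled_and_guided_one_problem": True,
    "repeated_when_below_criterion": True,
    "task_analysis_data_collected": True,
}
```

For example, `may_progress(100, 75)` is `False`, which matches the Results, where a session at 100% accuracy but 75% independence was repeated; `fidelity_percent(session)` returns 100.0.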

Social Validity

To evaluate social validity, researchers asked participants questions at the end of intervention. Participants were asked the questions orally while on Zoom. The questions focused on participants’ thoughts about the intervention as well as solving the problems with the virtual manipulatives or with numerical strategies. Researchers also inquired about the delivery of the mathematics instruction online.

Data Analysis

Researchers analyzed the data via visual analysis and supplementary calculations. Through visual analysis, researchers determined the immediacy of the effect for accuracy (i.e., compared data from the last baseline session to data from the first intervention session) as well as the overlap between baseline and intervention phases. To calculate stability, researchers found the median of the accuracy data for baseline and intervention and then determined whether the data in each phase fell within 25% of that median (Ledford & Gast, 2018). If they did, the data were considered stable; if not, variable. To calculate trend, researchers divided each phase at its mid-point, identified the mid-date and mid-rate of the accuracy data in each half, and connected the resulting points (White & Haring, 1980). This allowed the researchers to conclude whether the trend was accelerating, decelerating, or zero-celerating. The researchers also calculated trend for the independence data during intervention. To calculate effect size, researchers entered the accuracy data into an online calculator and computed Tau-U between participants’ baseline and intervention data as well as between baseline and maintenance data, excluding any boost sessions. Using standard benchmarks, a Tau-U between 0.20 and 0.60 was considered moderate, between 0.60 and 0.80 large, and greater than 0.80 very large (Vannest et al., 2016).
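The stability, trend, and effect-size calculations above can be sketched in Python. This is an illustration under stated assumptions, not the researchers’ procedure or the online calculator’s code: all function names are hypothetical; `min_proportion` is our addition (the default of 1.0 reads the text as requiring every point to fall inside the 25% envelope); the split-middle sketch drops the middle session when a phase has an odd number of sessions; and because the text does not say which Tau-U variant was computed, `tau_ab` gives only the basic baseline-versus-intervention nonoverlap, without correction for baseline trend.

```python
from statistics import median


def is_stable(data: list[float], envelope: float = 0.25, min_proportion: float = 1.0) -> bool:
    """Stability envelope: share of points within envelope x median of the phase median."""
    center = median(data)
    band = envelope * center
    inside = sum(1 for x in data if abs(x - center) <= band)
    return inside / len(data) >= min_proportion


def split_middle_slope(data: list[float]) -> float:
    """Split-middle trend: slope of the line through (mid-date, mid-rate) of each half."""
    n = len(data)
    if n < 2:
        raise ValueError("need at least two sessions")
    half = n // 2  # with an odd count, the middle session is left out
    x1 = median(range(1, half + 1))       # mid-date of first half (session numbers)
    y1 = median(data[:half])              # mid-rate of first half
    x2 = median(range(n - half + 1, n + 1))
    y2 = median(data[n - half:])
    return (y2 - y1) / (x2 - x1)


def describe_trend(slope: float) -> str:
    if slope > 0:
        return "accelerating"
    if slope < 0:
        return "decelerating"
    return "zero-celerating"


def tau_ab(baseline: list[float], treatment: list[float]) -> float:
    """A-vs-B nonoverlap: (improving pairs - deteriorating pairs) / all pairs."""
    improving = sum(1 for a in baseline for b in treatment if b > a)
    deteriorating = sum(1 for a in baseline for b in treatment if b < a)
    return (improving - deteriorating) / (len(baseline) * len(treatment))


def describe_tau(tau: float) -> str:
    """Labels from the benchmarks quoted in the text; below 0.20 is not named there."""
    if tau > 0.80:
        return "very large"
    if tau >= 0.60:
        return "large"
    if tau >= 0.20:
        return "moderate"
    return "below moderate"
```

When every baseline point is 0% and every intervention point is 100%, as the Results report for Kristy, every pair improves and `tau_ab` returns 1.0, consistent with the reported Tau-U.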

Results

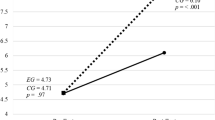

Overall, researchers found a functional relation between the intervention package (the VA instructional sequence and the system of least prompts) and student accuracy in finding equivalent fractions (see Fig. 3). All three students showed an immediate effect upon entering intervention. Two of the three students maintained accuracy without boost sessions; one required two boost sessions. All three students achieved 100% accuracy on at least one maintenance probe.

Kristy

Kristy answered zero questions correctly during her three baseline sessions. She experienced an immediate effect after her first virtual intervention session, in which she was 100% accurate. Kristy was 100% accurate in all three virtual sessions as well as her first two abstract sessions. In her third abstract session, Kristy answered one problem incorrectly and repeated the session, answering with 100% accuracy in the next session. The Tau-U between Kristy’s baseline and intervention data was 1.0, indicating a very large effect. In her first maintenance session, Kristy’s accuracy was 40%. She completed her first abstract boost session with 100% accuracy and was 100% accurate on her maintenance session the following week. In her third maintenance session, however, Kristy’s accuracy was 60%. Kristy received another boost session, in which her accuracy was 100%. She maintained 100% accuracy in the following two maintenance sessions. The Tau-U between Kristy’s baseline data and maintenance data (excluding boost sessions) was 1.0, indicative of a very large effect.

In terms of independence, Kristy was at least 80% independent across all sessions. She was generally more independent in the virtual phase (95–100%) than in the abstract phase (80–95%). Her independence data were stable and zero-celerating in both the virtual and abstract phases. In her two abstract boost sessions, she was 100% and 90% independent, respectively. When Kristy needed prompts, they were generally indirect verbal prompts, often provided on the task-analysis step involving applying the operation to the numerator. She also required more prompts on problems involving dividing the denominator and numerator (e.g., \(\frac{2}{{12}} = \frac{{}}{6}\)) than on those involving multiplication (e.g., \(\frac{3}{5} = \frac{{}}{{10}}\)).

Claudia

Claudia answered zero problems correctly during her four baseline sessions. She too experienced an immediate effect, with 100% accuracy in her first intervention session. In fact, whenever she progressed to the independent portion of a session, regardless of phase, Claudia was 100% accurate. Claudia repeated zero sessions during the virtual manipulative phase but repeated the first abstract session twice. In the first administration of that session, Claudia did not progress to the independent portion because she was not at least 80% independent in the guided portion of explicit instruction. In the second administration, Claudia was 100% accurate but only 75% independent on the probe and hence repeated the session a third time (100% accurate and 100% independent). The Tau-U between Claudia’s accuracy in baseline and intervention was 1.0; the Tau-U between her baseline and maintenance accuracy was also 1.0. Claudia’s accuracy was 100% on both of her maintenance sessions, so no abstract boost sessions were provided.

In terms of independence, Claudia needed one prompt in the first virtual session but was 100% independent in the next two. Claudia initially struggled more in the abstract phase. In the first session in which she progressed to the probe, Claudia’s independence was 75%. After that session, her independence ranged from 95 to 100%. Her independence data were stable and accelerating in both the virtual and abstract phases. Prompting never moved beyond indirect verbal prompts. In the session in which she was 75% independent (the first probe of the abstract phase), Claudia struggled with finding the relationship between the denominators and with completing the operation (i.e., multiplication or division) on the numerator.

Stacey

Stacey was 20% accurate in her first two baseline sessions and 0% accurate in the remaining baseline sessions. On further examination, the problems Stacey answered correctly in baseline involved finding fractions equivalent to \(\frac{1}{2}\). In all virtual and abstract intervention sessions, she was 100% accurate and repeated zero sessions. In maintenance, Stacey was 100% accurate in her first session and 80% accurate in her second. Stacey’s Tau-U between baseline and intervention accuracy was 1.0; it was likewise 1.0 between baseline and maintenance accuracy.

Stacey was also relatively independent. She needed one prompt in her first virtual session, one prompt in her first abstract session, and one prompt in her last abstract session; otherwise, she was 100% independent. In terms of stability and trend, Stacey’s independence data were stable and accelerating in the virtual phase and stable and zero-celerating in the abstract phase. The prompts provided were three indirect verbal prompts and one direct verbal prompt; three of the four prompts addressed finding the relationship between the denominators. The fourth and last prompt, delivered during the last abstract session, addressed a minor calculation error.

Social Validity

Overall, the students were positive about learning math online. Although they admitted they did not always enjoy math, they were willing to meet with the researchers one-on-one and liked doing so. When asked which she liked better, virtual manipulatives or numerical strategies, Claudia preferred the numerical strategies (or “just the math,” as she put it) but indicated she didn’t know why. Stacey indicated a slight preference for the virtual manipulatives when first learning, noting that the fraction pieces were easy to use and helped her understand. However, as she became proficient with the numerical strategies, she was able to find equivalent fractions quickly. Similarly, Kristy told researchers she preferred the virtual manipulatives because they were easier to use and she liked seeing the fractions next to each other; she also indicated she thought about the fraction tiles when solving with numerical strategies.

Discussion

Mathematics education for students with and at-risk for a disability is important, and while the global pandemic affected schooling as it is traditionally known for K-12 students, researchers need to examine effective practices to teach and support these students in an online environment. This study explored the online delivery of an intervention package consisting of the VA instructional sequence and the SLP to teach three upper elementary students with disabilities or at-risk to solve problems involving finding equivalent fractions. Researchers found a functional relation between the intervention package and student accuracy. The three students were independent and accurate in finding equivalent fractions across the intervention phases, and two of the three maintained accuracy without boost sessions.

This study represents one of the first to focus on teaching mathematics concepts to upper elementary students with or at-risk of disabilities in a completely online environment. Further, it extends the emerging research base on virtual manipulative-based instructional sequences by focusing on upper elementary students, as opposed to middle school students, and by using free online virtual manipulative apps on the computer rather than paid apps on tablets. The results suggest that teaching students mathematical concepts online via explicit instruction, virtual manipulatives, and numerical strategies is feasible and effective. Although limited previous research exists, researchers have suggested online mathematics teaching can support students who are at-risk or struggling, particularly when it includes synchronous interaction (Choi & Walters, 2018; Osborne & Shaw, 2020). Each student in this study acquired the skill of finding equivalent fractions, and few intervention sessions were repeated because a student fell short of the accuracy (100%) or independence (at least 80%) criterion.

Although the students successfully acquired, and largely maintained, the skill of finding equivalent fractions, the researchers modified the intervention package for online delivery. Typically, explicit instruction involves modeling two problems and guiding two problems (Agrawal & Morin, 2016; Doabler & Fien, 2013). However, the researchers intentionally limited sessions to 30 min to be sensitive to students’ other online work and overall screen time, and pilot work suggested explicit instruction took longer online due to potential technology issues. Given these time constraints, researchers modified the explicit instruction to involve one modeled and one guided problem. While research is limited regarding the efficacy of delivering interventions with modified explicit instruction, the results of this study suggest its potential. Researchers were able to effectively provide the modified explicit instruction, as evidenced by student acquisition and maintenance.

As noted, previous work on the VA instructional sequence has focused on secondary students with disabilities, primarily students with intellectual disability, autism, and learning disabilities (e.g., Bouck et al., 2017c, 2019). The VA instructional sequence is an emerging, research-supported practice for helping students acquire mathematical skills and concepts; this study extends that research to upper elementary students and to students who are struggling or at-risk for a disability. The results suggest the VA instructional sequence, delivered via online instruction, can be an effective intervention at the upper elementary grades for students struggling in mathematics, whether or not they have been identified as having a disability.

The results of this study also support the use of virtual manipulative-based instructional sequences as part of an intervention package rather than as stand-alone interventions. Previous research with the VA and other manipulative-based instructional sequences demonstrated that students maintained skills when intervention packages were used, such as incorporating the SLP or adding boost sessions during maintenance when students struggled (Bouck et al., 2020b, 2020c). As such, this study also supports further research in mathematics education that emphasizes intervention packages, rather than stand-alone interventions, for supporting struggling students and those at-risk (Kellems et al., 2016; Losinski et al., 2019; Park et al., 2020b; Root et al., 2017).

Implications for Practice

An implication of this research for practice is the efficacy of teaching virtual manipulative-based instructional sequences via online instruction to students with disabilities or those who are struggling. When teachers moved K-12 mathematics instruction online for all students, they were provided with limited resources and research-based strategies for doing so. This research presents educators with a research-supported example of teaching mathematics to students with or at-risk of disabilities in a virtual learning environment. Although prior research suggests lower or less positive mathematics results for students learning virtually (e.g., Ahn, 2016; Heppen et al., 2017; Woodworth et al., 2015), this research supports virtual instruction for students with disabilities or at-risk. The intervention was effective and efficient, considering the number of sessions and that researchers met with students only once per week. The results support the potential of online instruction or intervention for upper elementary students.

This research also suggests that educators can modify explicit instruction and still achieve student acquisition and maintenance of mathematical skills and concepts. Providing educators with flexibility, particularly in the era of a pandemic in which they have less time to work with students in mathematics and are doing so virtually, is an important contribution of this research. Another implication is the use of a free virtual manipulative. Much of the previous research on virtual manipulatives as part of an instructional sequence used for-purchase manipulatives; this study relied on a free web-based app from the Math Learning Center (note: the app can also be downloaded for free on mobile devices, but the web-based version was used in this study). Teachers do not need to spend money on virtual manipulatives; free, high-quality virtual manipulatives exist that can successfully support students.

Limitations and Future Directions

This study is not without limitations. First, researchers relied on parental nominations of students being at-risk or struggling and lacked access to school records. Second, the researchers delivered the intervention themselves and did so one-on-one. To address generalizability, researchers would want to examine the feasibility of teachers or interventionists delivering the intervention package virtually, as well as delivery in a small group setting rather than one-on-one. Because students were from different classrooms and states and had limited availability to meet with researchers, small group administration of the intervention was unfortunately not feasible. Another potential limitation is that the study investigated an intervention package, making it difficult to attribute the positive acquisition effects to the VA instructional sequence, the SLP, or the combination. However, the researchers viewed intervention packages as more reflective of actual educational practice than systematically evaluating each component. Additionally, researchers only probed for maintenance up to two weeks; future researchers may want to extend probing further to obtain a longer-term picture of maintenance. Finally, the researchers used the KeyMath-3 to confirm student struggles in mathematics, including with identifying fractions. The researchers administered the KeyMath-3 virtually; however, the assessment is not normed for online administration.

Researchers should continue to explore online delivery of mathematics interventions, including virtual manipulative-based instructional sequences, for students with and at-risk of disabilities. Beyond the 2019–2020 and 2020–2021 school years of increased virtual education for all students, online delivery is likely to continue to some degree. Educators need to be provided with research-based interventions for supporting all students in mathematics. This exploration can include different mathematical domains as well as the virtual-representational-abstract (VRA) instructional sequence in addition to the VA instructional sequence.

References

Agrawal, J., & Morin, L. L. (2016). Evidence-based practices: Applications of concrete representational abstract framework across math concepts for students with mathematics disabilities. Learning Disabilities Research and Practice, 31(1), 34–44.

Ahn, J. (2016). Enrollment and achievement in Ohio's virtual charter schools. Thomas B. Fordham Institute. Retrieved from https://files.eric.ed.gov/fulltext/ED570139.pdf

Basham, J. D., Carter, R. A., Rice, M. F., & Ortiz, K. (2016). Emerging state policy in online special education. Journal of Special Education Leadership, 29(2), 70–78.

Bouck, E. C., Bassette, L., Shurr, J., Park, J., Kerr, J., & Whorley, A. (2017a). Teaching equivalent fractions to secondary students with disabilities via the virtual-representational-abstract instructional sequence. Journal of Special Education Technology, 32(4), 220–231. https://doi.org/10.1177/0162643417727291

Bouck, E. C., & Flanagan, S. M. (2010). Virtual manipulatives: What are they and how can teachers use them. Intervention in School and Clinic, 45(3), 186–191. https://doi.org/10.1177/1053451209349530

Bouck, E. C., & Long, H. (2021). Manipulatives and manipulative-based instructional sequences. In E. C. Bouck, J. R. Root, & B. Jimenez (Eds.), Mathematics education and students with autism, intellectual disability, and other developmental disabilities (pp. 104–134). DADD.

Bouck, E. C., & Park, J. (2018). Mathematics manipulatives to support students with disabilities: A systematic review of the literature. Education and Treatment of Children, 41(1), 65–106. https://doi.org/10.1353/ETC.2018.0003

Bouck, E. C., Park, J., Cwiakala, K., & Whorley, A. (2020a). Learning fraction concepts through the virtual-abstract instructional sequence. Journal of Behavioral Education, 29, 519–542. https://doi.org/10.1007/s10864-019-09334-9

Bouck, E. C., Park, J., Maher, C., & Whorley, A. (2020b). Learning fractions with a virtual manipulative based graduated instructional sequence. Education and Training in Autism and Developmental Disabilities, 55(1), 45–59. https://doi.org/10.1177/1088357620943499

Bouck, E. C., Park, J., & Nickell, B. (2017b). Using the concrete-representational-abstract approach to support students with intellectual disability to solve change-making problems. Research in Developmental Disabilities, 60, 24–36. https://doi.org/10.1016/j.ridd.2016.11.006

Bouck, E. C., Park, J., Satsangi, R., Cwiakala, K., & Levy, K. (2019). Using the virtual-abstract instructional sequence to support acquisition of algebra. Journal of Special Education Technology, 34(4), 253–268. https://doi.org/10.1177/0162643419833022

Bouck, E. C., Park, J., Shurr, J., Bassette, L., & Whorley, A. (2018a). Using the virtual–representational–abstract approach to support students with intellectual disability in mathematics. Focus on Autism and Other Developmental Disabilities, 33(4), 237–248. https://doi.org/10.1177/1088357618755696

Bouck, E. C., Park, J., Sprick, J., Shurr, J., Bassette, L., & Whorley, A. (2017c). Using the virtual-abstract instructional sequence to teach addition of fractions. Research in Developmental Disabilities, 70, 163–174. https://doi.org/10.1016/j.ridd.2017.09.002

Bouck, E. C., Satsangi, R., & Park, J. (2018b). The concrete-representational-abstract approach for students with learning disabilities: An evidence-based practice synthesis. Remedial and Special Education, 39, 211–228. https://doi.org/10.1177/0741932517721712

Bouck, E. C., Shurr, J., & Park, J. (2020c). Virtual manipulative-based intervention package to teach multiplication and division to secondary students with developmental disabilities. Focus on Autism and Other Developmental Disabilities.

Bouck, E. C., & Sprick, J. (2019). The virtual-representational-abstract framework for supporting students with disabilities in mathematics. Intervention in School and Clinic, 54, 173–180.

Choi, J., & Walters, A. (2018). Exploring the impact of small-group synchronous discourse sessions in online math learning. Online Learning, 22(4), 47–64. https://doi.org/10.24059/olj.v22i4.1511

Connolly, A. J. (2007). KeyMath-3 diagnostic assessment. Pearson.

Doabler, C. T., & Fien, H. (2013). Explicit mathematics instruction: What teachers can do for teaching students with mathematics difficulties. Intervention in School and Clinic, 48(5), 276–285. https://doi.org/10.1177/1053451212473151

Flores, M. M. (2010). Teaching subtraction with regrouping to students experiencing difficulty in mathematics. Preventing School Failure: Alternative Education for Children and Youth, 53(3), 145–152. https://doi.org/10.3200/PSFL.53.3.145-152

Gersten, R., Chard, D. J., Jayanthi, M., Baker, S., Morphy, P., & Flojo, J. (2009). Mathematics instruction for students with learning disabilities: A meta-analysis of instructional components. Review of Educational Research, 79(3), 1202–1242. https://doi.org/10.3102/0034654309334431

Heppen, J. B., Sorensen, N., Allensworth, E., Walters, K., Rickles, J., Taylor, S. S., & Michelman, V. (2017). The struggle to pass algebra: Online vs. face-to-face credit recovery for at-risk urban students. Journal of Research on Educational Effectiveness, 10(2), 272–296. https://doi.org/10.1080/19345747.2016.1168500

Kellems, R. O., Frandsen, K., Hansen, B., Gabrielsen, T., Clarke, R., Simons, K., & Clements, K. (2016). Teaching multi-step math skills to adults with disabilities via video prompting. Research in Developmental Disabilities, 58, 31–44. https://doi.org/10.1016/j.ridd.2016.08.013

Kiru, E. W., Doabler, C. T., Sorrells, A. M., & Cooc, N. A. (2018). A synthesis of technology-mediated mathematics interventions for students with or at risk for mathematics learning disabilities. Journal of Special Education Technology, 33(2), 111–123. https://doi.org/10.1177/0162643417745835

Ledford, J. R., & Gast, D. L. (2018). Single case research methodology: Applications in special education and behavioral sciences. Routledge.

Losinski, M., Ennis, R. P., Sanders, S., & Wiseman, N. (2019). An investigation of SRSD to teach fractions to students with disabilities. Exceptional Children, 85(3), 291–308. https://doi.org/10.1177/0014402918813980

Maccini, P., & Gagnon, J. C. (2000). Best practices for teaching mathematics to secondary students with special needs. Focus on Exceptional Children, 32(5), 1–22.

Mcelrath, K. (2020). Schooling during the COVID-19 pandemic. The United States Census Bureau. https://www.census.gov/library/stories/2020/08/schooling-during-the-covid-19-pandemic.html

National Center on Intensive Intervention. (2016). Principles for designing intervention in mathematics. Office of Special Education, U.S. Department of Education. Retrieved from http://www.intensiveintervention.org/sites/default/files/Princip_Effect_Math_508.pdf

Osborne, S., & Shaw, R. (2020). Using online interventions to address summer learning loss in rising sixth-graders. Dissertations. 931. Retrieved from https://irl.umsl.edu/dissertation/931

Park, J., Bouck, E. C., & Fisher, M. (2020a). Using the virtual-representational-abstract instructional sequence with overlearning to students with disabilities in mathematics. Journal of Special Education. https://doi.org/10.1177/0022466920912527

Park, J., Bouck, E. C., & Smith, J. P. (2020b). Using the virtual-representational-abstract instructional sequence with fading support to teach subtraction to students with developmental disabilities. Journal of Autism and Developmental Disorders, 50, 63–75. https://doi.org/10.1007/s10803-019-04225-4

Pearson, Inc. (2011). Overview of KeyMath-3. Retrieved from http://images.pearsonclinical.com/images/pdf/keymath-3handout.pdf

Peltier, C., Morin, K. L., Bouck, E. C., Lingo, M. E., Pulos, J. M., Scheffler, F. A., Suk, A., Mathews, L. A., Sinclair, T. E., & Deardorff, M. E. (2020). A meta-analysis of single-case research using mathematics manipulatives with students at risk or identified with a disability. The Journal of Special Education, 54(1), 3–15. https://doi.org/10.1177/0022466919844516

Riccomini, P. J., Morano, S., & Hughes, C. A. (2017). Big ideas in special education: Specially designed instruction, high-leverage practices, explicit instruction, and intensive instruction. Teaching Exceptional Children, 50(1), 20–27. https://doi.org/10.1177/0040059917724412

Root, J., Saunders, A., Spooner, F., & Brosh, C. (2017). Teaching personal finance mathematical problem solving to individuals with moderate intellectual disability. Career Development and Transition for Exceptional Individuals, 40(1), 5–14. https://doi.org/10.1177/2165143416681288

Smith, S. J., Basham, J. D., Rice, M., & Carter, R. A. (2016a). Preparing special education teachers for online learning: Findings from a survey of teacher educators. Journal of Special Education Technology, 31(3), 170–178. https://doi.org/10.1177/0162643416660834

Smith, S. J., Burdette, P. J., Cheatham, G. A., & Harvey, S. P. (2016b). Parental role and support for online learning of students with disabilities: A paradigm shift. Journal of Special Education Leadership, 29(1), 101–112.

Spooner, F., Root, J. R., Saunders, A. F., & Browder, D. M. (2019). An updated evidence-based practice review on teaching mathematics to students with moderate and severe developmental disabilities. Remedial and Special Education, 40(3), 150–165. https://doi.org/10.1177/0741932517751055

Stroizer, S., Hinton, V., Flores, M., & Terry, L. (2015). An investigation of the effects of CRA instruction and students with autism spectrum disorder. Education and Training in Autism and Developmental Disabilities, 50(2), 223–236.

Underhill, R. (1977). Teaching elementary school mathematics. Merrill.

Vannest, K. J., Parker, R. I., Gonen, O., & Adiguzel, T. (2016). Single case research: Web-based calculator for SCR analysis (Version 2.0) [Web-based application]. Texas A&M University. Retrieved from www.singlecaseresearch.org

Vasquez, E., III, & Straub, C. (2012). Online instruction for K-12 special education: A review of the empirical literature. Journal of Special Education Technology, 27(3), 31–40. https://doi.org/10.1177/016264341202700303

White, O. R., & Haring, N. G. (1980). Exceptional teaching (2nd ed.). Merrill.

Woodworth, J. L., Raymond, M. E., Chirbas, K., Gonzalez, M., Negassi, Y., Snow, W., & Van Donge, C. (2015). Online charter school study. Center for Research on Education Outcomes. Retrieved from https://credo.stanford.edu/sites/g/files/sbiybj6481/f/online_charter_study_final.pdf

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards.

Cite this article

Bouck, E.C., Long, H. Online Delivery of a Manipulative-Based Intervention Package for Finding Equivalent Fractions. J Behav Educ 32, 313–333 (2023). https://doi.org/10.1007/s10864-021-09449-y

DOI: https://doi.org/10.1007/s10864-021-09449-y