Abstract

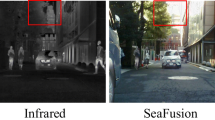

The main bottleneck of total variation methods for image fusion is that it is difficult to design novel optimization models that can still be solved by numerical methods. This paper proposes a general total variation framework, optimized by deep learning, for infrared and visible image fusion that exploits the advantages of deep convolutional neural networks. Under this framework, arbitrary convex or non-convex total variation models for image fusion can be designed, and their optimal solutions can be obtained through neural network learning. The core idea is to transform the designed variational model into the loss function of a deep convolutional neural network: the data term is expressed through the initial fused image of the source images and the output fused image, the regularization term is expressed through the output image and the source image, and a deep neural network learning method is then used to obtain the optimal fused image. Building on this framework, pre-fusion, the network model, and the regularization term can each be studied further. To verify the effectiveness of the framework, we designed a specific non-convex total variation model and performed experiments on infrared and visible image datasets. Experimental results show that the proposed method is highly robust and that, compared with current state-of-the-art algorithms, its fused images are more competitive in terms of both objective evaluation metrics and visual effect. Our code is publicly available at https://github.com/gzsds/globaloptimizationimagefusion.
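
To make the "variational model as a loss function" idea concrete, here is a minimal sketch under stated assumptions; it is not the paper's specific non-convex model, which the abstract does not spell out. It assumes a squared-error data term between the output fused image and an initial fused image (taken here, hypothetically, as the average of the two sources), and a smoothed ℓp penalty (0 < p < 1, hence non-convex) on the gradient of the difference between the output and the visible source image. The function names `forward_diff` and `tv_fusion_loss` and the parameters `lam`, `p`, and `eps` are illustrative choices only.

```python
import numpy as np


def forward_diff(img):
    """Forward differences along x (columns) and y (rows).

    The last column of dx and the last row of dy are set to zero
    (a Neumann-style boundary).
    """
    img = np.asarray(img, dtype=float)
    dx = np.zeros_like(img)
    dy = np.zeros_like(img)
    dx[:, :-1] = img[:, 1:] - img[:, :-1]
    dy[:-1, :] = img[1:, :] - img[:-1, :]
    return dx, dy


def tv_fusion_loss(fused, initial, visible, lam=0.1, p=0.5, eps=1e-3):
    """Hypothetical TV-style fusion loss (not the paper's exact model).

    data term:   mean squared error between the output fused image
                 and the initial (pre-)fused image
    regularizer: smoothed l_p penalty (0 < p < 1, hence non-convex) on the
                 gradient magnitude of (fused - visible), which encourages the
                 output to inherit the visible image's gradients
    """
    fused = np.asarray(fused, dtype=float)
    data = np.mean((fused - np.asarray(initial, dtype=float)) ** 2)
    dx, dy = forward_diff(fused - np.asarray(visible, dtype=float))
    # eps keeps the p-th power differentiable where the gradient is zero
    reg = np.mean((dx ** 2 + dy ** 2 + eps ** 2) ** (p / 2))
    return data + lam * reg


# Usage with random stand-ins for registered infrared/visible images
rng = np.random.default_rng(0)
ir = rng.random((64, 64))
vis = rng.random((64, 64))
initial = 0.5 * (ir + vis)  # assumed pre-fusion: simple average
print(tv_fusion_loss(initial, initial, vis))
```

In the framework described above, `fused` would be the output of a convolutional network, and this scalar would be minimized with respect to the network weights using an automatic-differentiation library; NumPy is used here only to make the data and regularization terms explicit.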

Acknowledgements

This work was partially supported by the Ministry of Education Chunhui Project (Grant No. Z2016149) and the Sichuan Science and Technology Program (Grant No. 2021YFG0022).

About this article

Cite this article

Gao, Z., Wang, Q. & Zuo, C. A total variation global optimization framework and its application on infrared and visible image fusion. SIViP 16, 219–227 (2022). https://doi.org/10.1007/s11760-021-01963-w

DOI: https://doi.org/10.1007/s11760-021-01963-w