Abstract

Partial differential equations (PDEs) are a fundamental tool in the modeling of many real-world phenomena. In a number of such real-world phenomena the PDEs under consideration contain gradient-dependent nonlinearities and are high-dimensional. Such high-dimensional nonlinear PDEs can in nearly all cases not be solved explicitly, and it is one of the most challenging tasks in applied mathematics to solve high-dimensional nonlinear PDEs approximately. It is especially challenging to design approximation algorithms for nonlinear PDEs for which one can rigorously prove that they do overcome the so-called curse of dimensionality in the sense that the number of computational operations of the approximation algorithm needed to achieve an approximation precision of size \({\varepsilon }> 0\) grows at most polynomially in both the PDE dimension \(d \in \mathbb {N}\) and the reciprocal of the prescribed approximation accuracy \({\varepsilon }\). In particular, to the best of our knowledge there exists no approximation algorithm in the scientific literature which has been proven to overcome the curse of dimensionality in the case of a class of nonlinear PDEs with general time horizons and gradient-dependent nonlinearities. It is the key contribution of this article to overcome this difficulty. More specifically, it is the key contribution of this article (i) to propose a new full-history recursive multilevel Picard approximation algorithm for high-dimensional nonlinear heat equations with general time horizons and gradient-dependent nonlinearities and (ii) to rigorously prove that this full-history recursive multilevel Picard approximation algorithm does indeed overcome the curse of dimensionality in the case of such nonlinear heat equations with gradient-dependent nonlinearities.


1 Introduction

Partial differential equations (PDEs) play a prominent role in the modeling of many real-world phenomena. For instance, PDEs appear in financial engineering in models for the pricing of financial derivatives, PDEs such as the Schrödinger equation appear in quantum physics to describe the wave function of a quantum-mechanical system, PDEs are used in operations research to characterize the value function of control problems, PDEs provide solutions for backward stochastic differential equations (BSDEs) which themselves appear in several models from applications, and stochastic PDEs such as the Zakai equation or the Kushner equation appear in nonlinear filtering problems to describe the density of the state of a physical system with only partial information available.

The PDEs in the above named models often contain nonlinearities and are typically high-dimensional, where, e.g., in the models from financial engineering the dimension of the PDE usually corresponds to the number of financial assets in the associated hedging or trading portfolio, where, e.g., in quantum physics the dimension of the PDE is, loosely speaking, three times the number of electrons in the considered physical system, where, e.g., in optimal control problems the dimension of the PDE is determined by the dimension of the state space of the control problem, and where, e.g., in nonlinear filtering problems the dimension of the PDE corresponds to the degrees of freedom in the considered physical system.

Such high-dimensional nonlinear PDEs can in nearly all cases not be solved explicitly and it is one of the most challenging tasks in applied mathematics to solve high-dimensional nonlinear PDEs approximately. In particular, it is very challenging to design approximation methods for nonlinear PDEs for which one can rigorously prove that they do overcome the so-called curse of dimensionality in the sense that the number of computational operations of the approximation method needed to achieve an approximation precision of size \({\varepsilon }> 0\) grows at most polynomially in both the PDE dimension \(d \in \mathbb {N}\) and the reciprocal of the prescribed approximation accuracy \({\varepsilon }\).
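To make the notion of polynomial growth concrete, the following minimal Python sketch contrasts a bound of the form \(c d^c {\varepsilon }^{-(2+\delta )}\) (the shape of the bound established in this article) with the number of nodes of a uniform tensor grid of mesh size \({\varepsilon }\) on \([0,1]^d\). The function names, the values \(c=1\) and \(\delta =0.1\), and the choice of a tensor grid as a point of comparison are illustrative assumptions and do not appear in the article.

```python
import math


def polynomial_cost_bound(d, eps, c=1.0, delta=0.1):
    """Bound of the form c * d**c * eps**(-(2 + delta)).

    The values of c and delta are hypothetical placeholders.
    """
    return c * d ** c * eps ** (-(2.0 + delta))


def tensor_grid_points(d, eps):
    """Number of nodes of a uniform grid with mesh size eps on [0, 1]^d.

    Used here only as an illustration of exponential growth in d.
    """
    points_per_axis = math.ceil(1.0 / eps) + 1
    return points_per_axis ** d


if __name__ == "__main__":
    eps = 0.1
    for d in (1, 10, 100):
        print(d, polynomial_cost_bound(d, eps), tensor_grid_points(d, eps))
```

With \(c=1\), doubling the dimension doubles the polynomial bound, whereas the grid count is raised to the second power.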

Recently, several new stochastic approximation methods for certain classes of high-dimensional nonlinear PDEs have been proposed and studied in the scientific literature. In particular, we refer, e.g., to [11, 12, 26, 29, 30, 53] for BSDE-based approximation methods for PDEs in which nested conditional expectations are discretized through suitable regression methods, we refer, e.g., to [10, 39, 41, 42] for branching diffusion approximation methods for PDEs, we refer, e.g., to [1,2,3, 6,7,8, 13, 14, 16, 17, 21, 24, 25, 31, 34,35,36, 40, 43, 48, 50, 52, 54,55,58, 60, 62, 63] for deep learning based approximation methods for PDEs, and we refer to [4, 5, 20, 28, 46, 47] for numerical simulations, approximation results, and extensions of the in [19, 45] recently introduced full-history recursive multilevel Picard approximation methods for PDEs. In the following we abbreviate full-history recursive multilevel Picard as MLP.

Branching diffusion approximation methods are also in the case of certain nonlinear PDEs as efficient as plain vanilla Monte Carlo approximations in the case of linear PDEs, but the error analysis only applies in the case where the time horizon \(T \in (0,\infty )\) and the initial condition, respectively, are sufficiently small and branching diffusion approximation methods fail to converge in the case where the time horizon \(T \in (0,\infty )\) exceeds a certain threshold (cf., e.g., [41, Theorem 3.12]). For MLP approximation methods it has been recently shown in [4, 45, 46] that such algorithms do indeed overcome the curse of dimensionality for certain classes of gradient-independent PDEs. Numerical simulations for deep learning based approximation methods for nonlinear PDEs in high dimensions are very encouraging (see, e.g., the above named references [1,2,3, 6,7,8, 13, 14, 16, 17, 21, 24, 25, 31, 34,35,36, 40, 43, 48, 50, 52, 54,55,58, 60, 62, 63]) but so far there is only partial error analysis available for such algorithms (which, in turn, is strongly based on the above-mentioned error analysis for the MLP approximation method; cf. [44] and, e.g., [9, 23, 32, 33, 36, 49, 51, 61, 62]). To sum up, to the best of our knowledge until today the MLP approximation method (see [45]) is the only approximation method in the scientific literature for which it has been shown that it does overcome the curse of dimensionality in the numerical approximation of semilinear PDEs with general time horizons.

The above-mentioned articles [4, 28, 45, 46] prove, however, only in the case of gradient-independent nonlinearities that MLP approximation methods overcome the curse of dimensionality and it remains an open problem to overcome the curse of dimensionality in the case of PDEs with general time horizons and gradient-dependent nonlinearities. This is precisely the subject of this article. More specifically, in this article we propose a new MLP approximation method for nonlinear heat equations with gradient-dependent nonlinearities and the main result of this article, Theorem 5.2 in Sect. 5 below, proves that the number of realizations of scalar random variables required by this MLP approximation method to achieve a precision of size \({\varepsilon }> 0\) grows at most polynomially in both the PDE dimension \(d \in \mathbb {N}\) and the reciprocal of the prescribed approximation accuracy \({\varepsilon }\). To illustrate the findings of the main result of this article in more detail, we now present in the following theorem a special case of Theorem 5.2.

Theorem 1.1

Let \(T,\delta ,\lambda \in (0,\infty )\), let \(u_d = ( u_d(t,x) )_{ (t,x) \in [0,T] \times \mathbb {R}^d }\in C^{1,2}([0,T]\times \mathbb {R}^d,\mathbb {R})\), \(d\in \mathbb {N}\), be at most polynomially growing functions, let \(f_d \in C( \mathbb {R}\times \mathbb {R}^d,\mathbb {R})\), \(d\in \mathbb {N}\), let \(g_d \in C( \mathbb {R}^d,\mathbb {R})\), \(d\in \mathbb {N}\), let \(L_{d,i}\in \mathbb {R}\), \(d,i \in \mathbb {N}\), assume for all \(d\in \mathbb {N}\), \(t\in [0,T)\), \(x=(x_1,x_2, \ldots ,x_d)\), \(\mathfrak x=(\mathfrak x_1,\mathfrak x_2, \ldots ,\mathfrak x_d)\), \(z=(z_1,z_2,\ldots ,z_d)\), \(\mathfrak {z}=(\mathfrak z_1, \mathfrak z_2, \ldots , \mathfrak z_d)\in \mathbb {R}^d\), \(y,\mathfrak {y} \in \mathbb {R}\) that

and \( d^{-\lambda }(|g_d(0)|+|f_d(0,0)|)+\sum _{i=1}^d L_{d,i}\le \lambda , \) let \( ( \Omega , \mathcal {F}, {\mathbb {P}}) \) be a probability space, let \( \Theta = \cup _{ n \in \mathbb {N}} \mathbb {Z}^n \), let \( Z^{d, \theta } :\Omega \rightarrow \mathbb {R}^d \), \(d\in \mathbb {N}\), \( \theta \in \Theta \), be i.i.d. standard normal random variables, let \(\mathfrak {r}^\theta :\Omega \rightarrow (0,1)\), \(\theta \in \Theta \), be i.i.d. random variables, assume for all \(b\in (0,1)\) that \({\mathbb {P}}(\mathfrak {r}^0\le b)=\sqrt{b}\), assume that \((Z^{d,\theta })_{(d, \theta ) \in \mathbb {N}\times \Theta }\) and \((\mathfrak {r}^\theta )_{ \theta \in \Theta }\) are independent, let \( \mathbf{U}_{ n,M}^{d,\theta } = ( \mathbf{U}_{ n,M}^{d,\theta , 0},\mathbf{U}_{ n,M}^{d,\theta , 1},\ldots ,\mathbf{U}_{ n,M}^{d,\theta , d} ) :(0,T]\times \mathbb {R}^d\times \Omega \rightarrow \mathbb {R}^{1+d} \), \(n\in \mathbb {Z}\), \(M,d\in \mathbb {N}\), \(\theta \in \Theta \), satisfy for all \( n,M,d \in \mathbb {N}\), \( \theta \in \Theta \), \( t\in (0,T]\), \(x \in \mathbb {R}^d\) that \( \mathbf{U}_{-1,M}^{d,\theta }(t,x)=\mathbf{U}_{0,M}^{d,\theta }(t,x)=0\) and

and for every \(d,M,n \in \mathbb {N}\) let \({\text {RV}}_{d,n,M}\in \mathbb {N}\) be the number of realizations of scalar random variables which are used to compute one realization of \( \mathbf{U}_{n,M}^{d,0}(T,0):\Omega \rightarrow \mathbb {R}\) (cf. (176) for a precise definition). Then there exist \(c\in \mathbb {R}\) and \(N=(N_{d,{\varepsilon }})_{(d, {\varepsilon }) \in \mathbb {N}\times (0,1]}:\mathbb {N}\times (0,1] \rightarrow \mathbb {N}\) such that for all \(d\in \mathbb {N}\), \({\varepsilon }\in (0,1]\) it holds that \( \sum _{n=1}^{N_{d,{\varepsilon }}}{\text {RV}}_{d,n,\lfloor n^{1/4} \rfloor } \le c d^c \varepsilon ^{-(2+\delta )}\) and

Theorem 1.1 is an immediate consequence of Corollary 5.4 in Sect. 5 below. Corollary 5.4, in turn, follows from Theorem 5.2 in Sect. 5, which is the main result of this article. In the following we add a few comments regarding some of the mathematical objects appearing in Theorem 1.1 above.

The real number \(T \in (0,\infty )\) in Theorem 1.1 above describes the time horizon of the PDE under consideration (see (2) in Theorem 1.1 above). Theorem 1.1 reveals under suitable Lipschitz assumptions that the MLP approximation method in (3) above overcomes the curse of dimensionality in the numerical approximation of the gradient-dependent semilinear PDEs in (2) above. Theorem 1.1 even proves that the computational effort of the MLP approximation method in (3) required to obtain a precision of size \({\varepsilon }\in (0,1]\) is bounded by \(c d^c {\varepsilon }^{ -( 2 + \delta ) }\) where \(c\in \mathbb {R}\) is a constant which is completely independent of the PDE dimension \(d\in \mathbb {N}\) and where \(\delta \in (0,\infty )\) is an arbitrarily small positive real number which describes the convergence order which we lose when compared to standard Monte Carlo approximations of linear heat equations. The real number \(\lambda \in (0,\infty )\) in Theorem 1.1 above is an arbitrarily large constant which we employ to formulate the Lipschitz and growth assumptions in Theorem 1.1 (see (1) and below (2) in Theorem 1.1 above).

The functions \(u_d :[0,T]\times \mathbb {R}^d \rightarrow \mathbb {R}\), \(d \in \mathbb {N}\), in Theorem 1.1 above are the solutions of the PDEs under consideration; see (2) in Theorem 1.1 above. Note that for every \(d \in \mathbb {N}\) we have that (2) is a PDE where the time variable \(t \in [0,T]\) takes values in the interval [0, T] and where the space variable \(x \in \mathbb {R}^d\) takes values in the d-dimensional Euclidean space \(\mathbb {R}^d\). The functions \(f_d :\mathbb {R}\times \mathbb {R}^d\rightarrow \mathbb {R}\), \(d \in \mathbb {N}\), describe the nonlinearities of the PDEs in (2) and the functions \(g_d:\mathbb {R}^d\rightarrow \mathbb {R}\), \(d\in \mathbb {N}\), describe the initial conditions of the PDEs in (2). The quantities \(\lfloor n^{ 1 / 4 } \rfloor \), \(n \in \mathbb {N},\) in (4) in Theorem 1.1 above describe evaluations of the standard floor function in the sense that for all \(n \in \mathbb {N}\) it holds that \(\lfloor n^{ 1 / 4 } \rfloor = \max ( [0,n^{ 1 / 4 }]\cap \mathbb {N})\). Note that in this work for every \(d\in \mathbb {N}\), \(a\in \mathbb {R}\), \(b=(b_1,b_2,\ldots , b_d) \in \mathbb {R}^d\) we sometimes write \((a,b)\in \mathbb {R}^{d+1}\) as an abbreviation for the vector \((a,b_1,b_2,\ldots ,b_d)\in \mathbb {R}^{d+1}\). In particular, observe that for all \(d\in \mathbb {N}\), \(x=(x_1,x_2,\ldots , x_d),z=(z_1,z_2,\ldots ,z_d)\in \mathbb {R}^d\), \(t\in (0,T]\) it holds that \((g_d(x+[2t]^{1/2}z)-g_d(x))(1,[2t]^{-1/2}z)=(g_d(x+z\sqrt{2t})-g_d(x))(1,z_1\sqrt{(2t)^{-1}},z_2\sqrt{(2t)^{-1}},\ldots , z_d\sqrt{(2t)^{-1}})\) (cf. the first line in (3) above).
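The vector-valued expression \((g_d(x+[2t]^{1/2}z)-g_d(x))(1,[2t]^{-1/2}z)\) can be illustrated in the simplest situation. The sketch below assumes the linear heat equation \(\frac{\partial u}{\partial t}=\Delta _x u\) with \(u(0,\cdot )=g\) (that is, a vanishing nonlinearity, which is a simplifying assumption made here and not the setting of Theorem 1.1). In that case the Monte Carlo average of this expression over standard normal samples, with \(g(x)\) added back to the first component, estimates the value and the spatial gradient of \(u\) simultaneously. The function name and the test function \(g=\sin \) are hypothetical choices.

```python
import math
import random


def heat_value_and_gradient_mc(g, x, t, num_samples, rng):
    """Monte Carlo estimate of (u(t, x), grad_x u(t, x)) in R^(1+d).

    Assumes du/dt = Laplace(u) with u(0, .) = g (linear case only).
    Each sample contributes (g(x + sqrt(2t) z) - g(x)) * (1, z / sqrt(2t)).
    """
    d = len(x)
    scale = math.sqrt(2.0 * t)
    gx = g(x)
    acc = [0.0] * (d + 1)
    for _ in range(num_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]
        diff = g([xi + scale * zi for xi, zi in zip(x, z)]) - gx
        acc[0] += diff
        for i in range(d):
            acc[i + 1] += diff * z[i] / scale
    estimate = [a / num_samples for a in acc]
    estimate[0] += gx  # add g(x) back to the value component
    return estimate


if __name__ == "__main__":
    rng = random.Random(0)
    # For g = sin in d = 1 the exact solution is exp(-t) * sin(x).
    print(heat_value_and_gradient_mc(lambda y: math.sin(y[0]), [0.3], 0.5, 200_000, rng))
```

Subtracting \(g(x)\) before multiplying by \([2t]^{-1/2}z\) does not change the expectation of the gradient components, since \(z\) has mean zero, but it keeps their variance bounded as \(t\) becomes small when \(g\) is Lipschitz continuous.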

Theorem 1.1 proves under suitable assumptions (cf. (1) in Theorem 1.1 above for details) that the MLP approximation method in (3) above overcomes the curse of dimensionality in the numerical approximation of the gradient-dependent semilinear heat equations in (2) above. In order to give the reader a better understanding why the approximation scheme in (3) above is capable of overcoming the curse of dimensionality in the numerical approximation of gradient-dependent semilinear PDEs, we now outline a brief derivation of the approximation scheme in (3) in the special case where \(f_d\in C(\mathbb {R}\times \mathbb {R}^d,\mathbb {R})\), \(d\in \mathbb {N}\), and \(g_d\in C(\mathbb {R}^d,\mathbb {R})\), \(d\in \mathbb {N}\), are globally bounded functions. For this observe that the Feynman–Kac formula and the assumptions of Theorem 1.1 ensure that the solutions \(u_d :[0,T]\times \mathbb {R}^d \rightarrow \mathbb {R}\), \(d \in \mathbb {N}\), of the PDEs in (2) satisfy that for all \(d\in \mathbb {N}\), \(t\in [0,T]\), \(x\in \mathbb {R}^d\) it holds that

Note that (5) are stochastic fixed-point equations with the solutions \(u_d :[0,T]\times \mathbb {R}^d \rightarrow \mathbb {R}\), \(d \in \mathbb {N}\), of the PDEs in (2) being the fixed points of the stochastic fixed-point equations. MLP approximation methods are powerful approximation techniques for stochastic fixed-point equations but the MLP approach cannot be directly applied to (5) as the gradients \((\nabla _x u_d)( t, x )\), \(t \in [0,T]\), \(x \in \mathbb {R}^d\), of the solutions \(u_d :[0,T]\times \mathbb {R}^d \rightarrow \mathbb {R}\), \(d \in \mathbb {N}\), of the PDEs in (2) appear on the right-hand side of (5) but not on the left-hand side of (5).

To get over this obstacle, we reformulate (5) by means of the Bismut–Elworthy–Li formula (cf., e.g., Da Prato and Zabczyk [15, Theorem 2.1]) to obtain other stochastic fixed-point equations with no derivatives appearing on the right-hand side of the stochastic fixed point equations so that the MLP machinery can be brought into play. More formally, note that the Bismut–Elworthy–Li formula ensures that the solutions \(u_d :[0,T]\times \mathbb {R}^d \rightarrow \mathbb {R}\), \(d \in \mathbb {N}\), of the PDEs in (2) satisfy that for all \(d\in \mathbb {N}\), \(t\in (0,T]\), \(x\in \mathbb {R}^d\) it holds that

Next let \(\mathbf{u}_d = ( \mathbf{u}_{ d, 1 }, \mathbf{u}_{ d, 2 }, \ldots , \mathbf{u}_{ d, d + 1 } ) :[0,T] \times \mathbb {R}^d \rightarrow \mathbb {R}^{ d + 1 },\) \(d \in \mathbb {N}\), satisfy for all \(d \in \mathbb {N}\), \(k \in \{ 2, 3, \ldots , d + 1 \}\), \(x = (x_1,x_2,\ldots ,x_d) \in \mathbb {R}^d\), \(t \in [0,T]\) that \(\mathbf{u}_{ d, 1 }( t, x ) = u_d(t,x)\) and \( \mathbf{u}_{ d, k }( t, x ) = (\frac{\partial }{\partial x_{k-1}})u_d(t,x)\) and observe that (5) and (6) reveal that for all \(d\in \mathbb {N}\), \(t\in (0,T]\), \(x\in \mathbb {R}^d\) it holds that

Observe that (7) are stochastic fixed-point equations with no derivatives appearing on the right-hand side of (7) so that we are now in the position to apply the MLP approach to (7). Next we briefly sketch how MLP approximations for (7) can be obtained and, thereby, we briefly outline the derivation of the MLP approximation schemes in (3) above (cf., e.g., E et al. [18, Section 1.2]). For this observe that (7) can also be written as the fixed point equation \(\mathbf{u}_d=\Phi (\mathbf{u}_d)\) where \(\Phi \) is the self-mapping on the set of all bounded functions in \(C((0, T ] \times \mathbb {R}^d , \mathbb {R}^{d+1})\) which is described through the right-hand side of (7). We now define Picard iterates \( \mathfrak {u}_n \), \( n \in \mathbb {N}_0 \), by means of the recursion that for all \(n \in \mathbb {N}\) it holds that \( \mathfrak {u}_0 = 0 \) and \( \mathfrak {u}_n = \Phi ( \mathfrak {u}_{ n - 1 } ) \). Next we observe that a telescoping sum argument and the fact that for all \(k \in \mathbb {N}\) it holds that \(\mathfrak {u}_k = \Phi ( \mathfrak {u}_{ k - 1 } )\) demonstrate that for all \( n \in \mathbb {N}\) it holds that

Roughly speaking, the MLP approximations in (3) can then be derived by approximating the expectations and temporal Lebesgue integrals in (8) within the fixed point function \(\Phi \) through appropriate Monte Carlo approximations with different numbers of Monte Carlo samples (cf., e.g., Heinrich [37], Heinrich and Sindambiwe [38], and Giles [27] for related multilevel Monte Carlo approximations).
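The Picard iteration and the telescoping argument can be checked numerically on a toy example. The sketch below replaces the fixed point function \(\Phi \) by a hypothetical scalar contraction on \(\mathbb {R}\) (an assumption made purely for illustration; the \(\Phi \) of this article acts on functions and involves expectations). It verifies the standard telescoping identity \(\mathfrak {u}_n=\Phi (\mathfrak {u}_0)+\sum _{k=1}^{n-1}[\Phi (\mathfrak {u}_k)-\Phi (\mathfrak {u}_{k-1})]\), which follows directly from \(\mathfrak {u}_k=\Phi (\mathfrak {u}_{k-1})\).

```python
import math


def phi(v):
    """Hypothetical contraction on R with Lipschitz constant at most 1/2."""
    return 1.0 + 0.5 * math.cos(v)


def picard_iterates(n):
    """Return [u_0, u_1, ..., u_n] with u_0 = 0 and u_k = phi(u_{k-1})."""
    iterates = [0.0]
    for _ in range(n):
        iterates.append(phi(iterates[-1]))
    return iterates


def telescoped(iterates, n):
    """Evaluate phi(u_0) + sum_{k=1}^{n-1} [phi(u_k) - phi(u_{k-1})]."""
    total = phi(iterates[0])
    for k in range(1, n):
        total += phi(iterates[k]) - phi(iterates[k - 1])
    return total


if __name__ == "__main__":
    us = picard_iterates(30)
    print(us[30], telescoped(us, 30))
```

In a multilevel scheme each difference \(\Phi (\mathfrak {u}_k)-\Phi (\mathfrak {u}_{k-1})\) is small for large \(k\), so it can be estimated with few Monte Carlo samples, while the cheap low-level terms receive many samples.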

Theorem 1.1 above in this introductory section is a special case of the more general approximation results in Sect. 5 in this article, and these more general approximation results treat more general PDEs than (2) as well as more general MLP approximation methods than (3). More specifically, in (2) above we have for every \(d \in \mathbb {N}\) that the nonlinearity \(f_d\) depends only on the PDE solution \(u_d\) and the spatial gradient \(\nabla _x u_d\) of the PDE solution but not on \(t \in [0,T]\) and \(x \in \mathbb {R}^d\), while in Corollary 5.1 and Theorem 5.2 in Sect. 5 the nonlinearities of the PDEs may also depend on \(t \in [0,T]\) and \(x \in \mathbb {R}^d\). Corollary 5.1 and Theorem 5.2 also provide error analyses for a more general class of MLP approximation methods. In particular, in Theorem 1.1 above the family \(\mathfrak {r}^\theta :\Omega \rightarrow (0,1)\), \(\theta \in \Theta \), of i.i.d. random variables satisfies for all \(b\in (0,1)\) that \({\mathbb {P}}(\mathfrak {r}^0\le b)=\sqrt{b}\) and Corollary 5.1 and Theorem 5.2 are proved under the more general hypothesis that there exists \(\alpha \in (0,1)\) such that for all \(b\in (0,1)\) it holds that \({\mathbb {P}}(\mathfrak {r}^0\le b)=b^\alpha \) (see, e.g., (160) in Theorem 5.2). It is a crucial observation of this article that, roughly speaking, in the case of PDEs with gradient-dependent nonlinearities time integrals in the semigroup formulations of the PDEs under consideration cannot be approximated with continuous uniformly distributed random variables (corresponding to the case \(\alpha =1\)) as it was done, e.g., in [4, 45, 46]; cf. Sect. 5 for a more detailed discussion of this observation. Furthermore, the more general approximation result in Corollary 5.1 in Sect. 5 also provides an explicit upper bound for the constant \(c \in \mathbb {R}\) in Theorem 1.1 above (see (144) in Corollary 5.1).
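Random variables with the distribution function \({\mathbb {P}}(\mathfrak {r}^0\le b)=b^\alpha \) can be generated by inverse transform sampling: if \(U\) is uniformly distributed on \((0,1)\), then \({\mathbb {P}}(U^{1/\alpha }\le b)={\mathbb {P}}(U\le b^\alpha )=b^\alpha \). The following minimal sketch illustrates this; the function name is hypothetical and the article does not prescribe a particular sampling procedure.

```python
import random


def sample_r(alpha, rng):
    """Draw r in (0, 1) with P(r <= b) = b**alpha via inverse transform.

    alpha = 1/2 gives the distribution of Theorem 1.1, i.e. r = U**2.
    """
    u = rng.random()
    while u == 0.0:  # rng.random() lies in [0, 1); exclude the endpoint 0
        u = rng.random()
    return u ** (1.0 / alpha)


if __name__ == "__main__":
    rng = random.Random(0)
    draws = [sample_r(0.5, rng) for _ in range(100_000)]
    # Empirical value of P(r <= 1/4), which should be close to sqrt(1/4) = 1/2.
    print(sum(1 for r in draws if r <= 0.25) / len(draws))
```

For \(\alpha <1\) the samples concentrate near zero, in contrast to the uniform case \(\alpha =1\).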

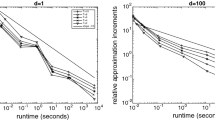

We also refer to the references [5] and [20] for numerical simulations for MLP approximation methods for semilinear high-dimensional PDEs. We would like to point out that there are two variants of MLP approximation methods in the scientific literature. First, MLP approximation methods with the temporal Lebesgue integrals being approximated by deterministic quadrature rules have been proposed in [19, 20] in 2016. In [20] this first variant of MLP approximation methods has also been tested numerically, also in the case of gradient-dependent nonlinearities (see [20, Section 3.2]), but the existing convergence results for this first variant of MLP approximation methods are only applicable under very strong regularity assumptions and even exclude many semilinear heat equations with globally Lipschitz continuous gradient-independent nonlinearities (cf. [19, Corollary 3.19] and [47, Corollary 4.8]). Thereafter, MLP approximation methods with the temporal Lebesgue integrals being approximated by Monte Carlo approximations have been proposed in [45] in 2018 and further studied and extended in [4, 28, 46] as well as in the present article. This second variant of MLP approximation methods has been shown to overcome the curse of dimensionality under only mild (local) Lipschitz continuity assumptions on the nonlinearity of the PDEs under consideration (see, e.g., [45, Theorem 1.1], [4, Theorem 1.1], [46, Theorem 1.1], and [28, Corollary 1.2]). This second variant has also been tested numerically in [5], but only in the case of gradient-independent nonlinearities. It remains a topic of future research to perform numerical simulations for the MLP approximation methods proposed in this article (which belong to the second variant of MLP approximation methods) in the case of PDEs with gradient-dependent nonlinearities.

The remainder of this article is organized as follows. In Sect. 2 we establish a few identities and upper bounds for certain iterated deterministic integrals. The results of Sect. 2 are then used in Sect. 3 in which we introduce and analyze the considered MLP approximation methods. In Sect. 4 we establish suitable a priori bounds for exact solutions of PDEs of the form (2). In Sect. 5 we combine the findings of Sects. 3 and 4 to establish in Theorem 5.2 below the main approximation result of this article.

2 Analysis of Certain Deterministic Iterated Integrals

In this section we establish in Corollary 2.5 below an upper bound for products of certain independent random variables. Corollary 2.5 below is a central ingredient in our error analysis for MLP approximations in Sect. 3 below. Our proof of Corollary 2.5 employs the essentially well-known factorization lemma for certain conditional expectations in Lemma 2.4 below as well as a few elementary identities and estimates for certain deterministic iterated integrals which are provided in Lemmas 2.1, 2.2, and Corollary 2.3 below.

2.1 Identities for Certain Deterministic Iterated Integrals

Lemma 2.1

Let \(T,\beta ,\gamma \in (0,\infty )\), let \(\rho :(0,1)\rightarrow (0,\infty )\) be \({\mathcal {B}}((0,1))/{\mathcal {B}}((0,\infty ))\)-measurable, and let \(\varrho :[0,T]^2\rightarrow (0,\infty )\) satisfy for all \(t\in [0,T)\), \(s\in (t,T]\) that \(\varrho (t,s)=\tfrac{1}{T-t}\rho (\tfrac{s-t}{T-t})\). Then it holds for all \(j\in \mathbb {N}\), \(s_0\in [0,T)\) that

Proof of Lemma 2.1

We prove (9) by induction on \(j\in \mathbb {N}\). For the base case \(j=1\) note that integration by substitution yields that for all \(s_0\in [0,T)\) it holds that

This proves (9) in the base case \(j=1\). For the induction step \(\mathbb {N}\ni j\rightsquigarrow j+1\in \mathbb {N}\) note that the induction hypothesis and integration by substitution imply for all \(s_0\in [0,T)\) that

Induction thus proves (9). This completes the proof of Lemma 2.1. \(\square \)

Lemma 2.2

Let \(\alpha \in (0,1)\), \(T,\gamma \in (0,\infty )\), \(\beta \in (0,\alpha \gamma +1)\) and let \(\rho :(0,1)\rightarrow (0,\infty )\) and \(\varrho :[0,T]^2\rightarrow (0,\infty )\) satisfy for all \(r\in (0,1)\), \(t\in [0,T)\), \(s\in (t,T]\) that \(\rho (r)=\frac{1-\alpha }{r^\alpha }\) and \(\varrho (t,s)=\tfrac{1}{T-t}\rho (\tfrac{s-t}{T-t})\). Then it holds for all \(j\in \mathbb {N}\), \(s_0\in [0,T)\) that

Proof of Lemma 2.2

Throughout this proof let \(B :(0,\infty )\times (0,\infty )\rightarrow \mathbb {R}\) satisfy for all \(x,y\in (0,\infty )\) that

Note that (13) and the fact that for all \(x, y \in (0,\infty )\) it holds that \(B(x,y) = \frac{\Gamma (x)\Gamma (y)}{ \Gamma (x+y) }\) ensure that for all \(i\in \mathbb {N}_0\) it holds that

Lemma 2.1 hence implies that for all \(j\in \mathbb {N}\), \(s_0\in [0,T)\) it holds that

This establishes (12). The proof of Lemma 2.2 is thus completed. \(\square \)
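The identity \(B(x,y) = \frac{\Gamma (x)\Gamma (y)}{ \Gamma (x+y) }\) used in the proof above can be checked numerically. The sketch below assumes that \(B\) denotes the standard Beta function \(B(x,y)=\int _0^1 t^{x-1}(1-t)^{y-1}\,dt\) (the defining display (13) is not reproduced here, so this is an assumption) and compares a midpoint-rule approximation of this integral with the Gamma function expression. The function names and the number of quadrature nodes are illustrative choices.

```python
import math


def beta_via_gamma(x, y):
    """Beta function through Gamma(x) * Gamma(y) / Gamma(x + y)."""
    return math.gamma(x) * math.gamma(y) / math.gamma(x + y)


def beta_via_midpoint(x, y, n=200_000):
    """Midpoint-rule approximation of int_0^1 t^(x-1) (1-t)^(y-1) dt.

    Intended for x, y >= 1, where the integrand is bounded.
    """
    h = 1.0 / n
    total = 0.0
    for k in range(n):
        t = (k + 0.5) * h
        total += t ** (x - 1.0) * (1.0 - t) ** (y - 1.0)
    return h * total


if __name__ == "__main__":
    print(beta_via_gamma(2.5, 1.5), beta_via_midpoint(2.5, 1.5))
```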

2.2 Estimates for Certain Deterministic Iterated Integrals

Corollary 2.3

Let \(\alpha \in (0,1)\), \(T,\gamma \in (0,\infty )\), \(\beta \in [\alpha \gamma ,\alpha \gamma +1]\) and let \(\rho :(0,1)\rightarrow (0,\infty )\) and \(\varrho :[0,T]^2\rightarrow (0,\infty )\) satisfy for all \(r\in (0,1)\), \(t\in [0,T)\), \(s\in (t,T]\) that \(\rho (r)=\frac{1-\alpha }{r^\alpha }\) and \(\varrho (t,s)=\tfrac{1}{T-t}\rho (\tfrac{s-t}{T-t})\). Then it holds for all \(j\in \mathbb {N}\), \(s_0\in [0,T)\) that

Proof of Corollary 2.3

First, observe that Wendel’s inequality for the gamma function (see, e.g., Wendel [64] and Qi [59, Section 2.1]) ensures that for all \(x\in (0,\infty )\), \(s\in [0,1]\) it holds that

Moreover, note that the fact that for all \(x\in (0,\infty )\) it holds that \(\ln ^\prime (x)=x^{-1}\) demonstrates that for all \(j\in \mathbb {N}\), \(\lambda \in (0,\infty )\) it holds that

Combining this, (17), and the fact that \(\alpha \gamma -\beta +1\in [0,1]\) with the fact that for all \(x\in [0,\infty )\) it holds that \(1+x\le e^{x}\) proves that for all \(j\in \mathbb {N}\) it holds that

Lemma 2.2 hence implies that for all \(j\in \mathbb {N}\), \(s_0\in [0,T)\) it holds that

This establishes (16). The proof of Corollary 2.3 is thus completed. \(\square \)
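Wendel's inequality, in its commonly stated form, asserts that for all \(x\in (0,\infty )\), \(s\in [0,1]\) it holds that \(\big (\tfrac{x}{x+s}\big )^{1-s}\le \tfrac{\Gamma (x+s)}{x^s\Gamma (x)}\le 1\). The following sketch checks this double inequality on a small grid; it is a numerical sanity check under the assumption that this is the form used in the proof above, and the function names and grid values are hypothetical.

```python
import math


def wendel_ratio(x, s):
    """Gamma(x + s) / (x**s * Gamma(x)), computed via log-gamma for stability."""
    return math.exp(math.lgamma(x + s) - math.lgamma(x) - s * math.log(x))


def wendel_lower(x, s):
    """Lower bound (x / (x + s))**(1 - s) in Wendel's inequality."""
    return (x / (x + s)) ** (1.0 - s)


def check_wendel(xs, ss, tol=1e-9):
    """Return True if the double inequality holds on the grid up to tol."""
    for x in xs:
        for s in ss:
            ratio = wendel_ratio(x, s)
            if not (wendel_lower(x, s) - tol <= ratio <= 1.0 + tol):
                return False
    return True


if __name__ == "__main__":
    print(check_wendel([0.1, 0.5, 1.0, 2.0, 10.0, 50.0], [0.0, 0.25, 0.5, 0.75, 1.0]))
```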

2.3 Estimates for Products of Certain Independent Random Variables

Lemma 2.4

Let \(T\in (0,\infty )\), \(d\in \mathbb {N}\), \(F\in C((0,1)\times [0,T) \times \mathbb {R}^d, [0,\infty ))\), let \((\Omega ,\mathcal F,{\mathbb {P}})\) be a probability space, let \(\rho :\Omega \rightarrow (0,1)\) and \(\tau :\Omega \rightarrow (0,T)\) be random variables, let \(W:[0,T]\times \Omega \rightarrow \mathbb {R}^d\) be a standard Brownian motion with continuous sample paths, let \(f:[0,T) \rightarrow [0,\infty ]\) satisfy for all \(t\in [0,T)\) that \(f(t)={\mathbb {E}}[F(\rho , t, W_{t+(T-t)\rho }-W_t)]\), let \({\mathcal {G}}\subseteq \mathcal F\) be a sigma-algebra, let \(\mathcal H=\sigma ({\mathcal {G}} \cup \sigma (\tau ,(W_{\min \{s,\tau \}})_{s\in [0,T]}))\), and assume that \(\rho \), \(\tau \), W, and \({\mathcal {G}}\) are independent. Then it holds \({\mathbb {P}}\)-a.s. that

Proof of Lemma 2.4

First, note that independence of \(\rho \), \(\tau \), W, and \({\mathcal {G}}\) ensures that it holds \({\mathbb {P}}\)-a.s. that

Next observe that independence of \(\rho \), \(\tau \), and W and the tower property of conditional expectations (cf., e.g., Hutzenthaler et al. [45, Lemma 2.2] (applied with \({\mathcal {G}}=\sigma (\rho , (W_{s})_{s\in [0,T]})\), \(S=(0,T)\), \({\mathcal {S}}={\mathcal {B}}((0,T))\), \(U(t,\omega )={\mathbf {1}}_{A}(t){\mathbf {1}}_{B}((W_{\min \{s,t\}}(\omega ))_{s\in [0,T]})) F(\rho (\omega ),t, W_{t+(T-t)\rho (\omega )}(\omega )-W_t(\omega ))\), \(Y(\omega )=\tau (\omega )\) for \(t\in (0,T)\), \(\omega \in \Omega \) in the notation of [45, Lemma 2.2])) prove that for all \(A\in {\mathcal {B}}((0,T))\), \(B\in {\mathcal {B}}(C([0,T],\mathbb {R}^d))\) it holds that

The fact that for all \(t\in [0,T]\), \(r\in [t,T]\) it holds that \((W_{\min \{s,t\}})_{s\in [0,T]}\) and \(W_r-W_t\) are independent hence proves that for all \(A\in {\mathcal {B}}((0,T))\), \(B\in {\mathcal {B}}(C([0,T],\mathbb {R}^d))\) it holds that

This and, e.g., Hutzenthaler et al. [45, Lemma 2.2] (applied with \({\mathcal {G}}=\sigma (\rho , (W_{s})_{s\in [0,T]})\), \(S=(0,T)\), \({\mathcal {S}}={\mathcal {B}}((0,T))\), \(U(t,\omega )={\mathbf {1}}_{A}(t){\mathbf {1}}_{B}((W_{\min \{s,t\}}(\omega ))_{s\in [0,T]})) f(t)\), \(Y(\omega )=\tau (\omega )\) for \(t\in (0,T)\), \(\omega \in \Omega \) in the notation of [45, Lemma 2.2]) proves that for all \(A\in {\mathcal {B}}((0,T))\), \(B\in {\mathcal {B}}(C([0,T],\mathbb {R}^d))\) it holds that

Combining this with (22) establishes (21). The proof of Lemma 2.4 is thus completed. \(\square \)

Corollary 2.5

Let \(T\in (0,\infty )\), \(d\in \mathbb {N}\), \(j\in \mathbb {N}_0\), \(\mathbf {e}_1=(1,0,0\ldots ,0)\), \(\mathbf {e}_2=(0,1,0,\ldots ,0)\), ..., \(\mathbf {e}_{d+1}=(0,0,\ldots , 0,1)\in \mathbb {R}^{d+1}\), \(\nu _0,\nu _1, \ldots , \nu _j\in \{1,2,\ldots , d+1\}\), \(\alpha \in (0,1)\), \(p\in (1,\infty )\) satisfy \(\alpha (p-1)\le \frac{p}{2} \le \alpha (p-1)+1\), let \(\langle \cdot , \cdot \rangle :\mathbb {R}^{d+1}\times \mathbb {R}^{d+1} \rightarrow \mathbb {R}\) be the standard scalar product on \(\mathbb {R}^{ d + 1 }\), let \( ( \Omega , \mathcal {F}, {\mathbb {P}}) \) be a probability space, let \( W=(W^1,W^2,\ldots ,W^d) :[0,T] \times \Omega \rightarrow \mathbb {R}^d \) be a standard Brownian motion with continuous sample paths, let \(\mathfrak {r}^{(n)}:\Omega \rightarrow (0,1)\), \(n\in \mathbb {N}_0\), be i.i.d. random variables, assume that W and \((\mathfrak {r}^{(n)})_{n\in \mathbb {N}_0}\) are independent, let \(\rho :(0,1)\rightarrow (0,\infty )\) and \(\varrho :[0,T]^2\rightarrow (0,\infty )\) satisfy for all \(b\in (0,1)\), \(t\in [0,T)\), \(s\in (t,T]\) that \(\rho (b)=\frac{1-\alpha }{b^\alpha }\), \({\mathbb {P}}(\mathfrak {r}^{(0)}\le b)=\int _0^b \rho (u)\,du\), and \(\varrho (t,s)=\tfrac{1}{T-t}\rho (\tfrac{s-t}{T-t})\), let \(S:\mathbb {N}_0 \times [0,T)\times \Omega \rightarrow [0,T)\) satisfy for all \(n \in \mathbb {N}_0\), \(t\in [0,T)\) that \(S(0,t)=t\) and \(S(n+1,t)=S(n,t)+(T-S(n,t))\mathfrak {r}^{(n)}\), and let \(t\in [0,T)\). Then

Proof of Corollary 2.5

Throughout this proof let \(\mathbb F_n\subseteq \mathcal F\), \(n\in \mathbb {N}_0\), satisfy for all \(n\in \mathbb {N}\) that \(\mathbb F_0=\{\emptyset , \Omega \}\) and that \(\mathbb F_n=\sigma (\mathbb F_{n-1} \cup \sigma (S(n,t), (W_{\min \{s,S(n,t)\}})_{s\in [0,T]}))\) and let \(v = ( v_1, v_2, \ldots , v_d) \in \mathbb {R}^d\) satisfy \(v_1 = v_2 = \cdots = v_{ d } = 1\). Note that for all \(r\in [0,T)\), \(s\in [r,T]\), \(i\in \{1,2,\ldots ,d\}\), \(n\in \mathbb {N}\) it holds that \(S(n,r)>S(n-1,r)\) and

Next we claim that for all \(k\in \{1,2,\ldots ,j+1\}\) it holds \({\mathbb {P}}\)-a.s. that

We prove (28) by backward induction on \(k\in \{1,2,\ldots ,j+1\}\). For the base case \(k=j+1\) note that the fact that \(S(j+1,t)=S(j,t)+(T-S(j,t))\mathfrak {r}^{(j)}\), Lemma 2.4 (applied with \(F(r,s,x)=\big |\frac{1}{\varrho (s,s+(T-s)r)}\big \langle \mathbf {e}_{\nu _j},\big ( 1, \tfrac{ x }{(T-s)r } \big )\big \rangle \big |^p\), \(\rho =\mathfrak {r}^{(j)}\), \(\tau =S(j,t)\), \({\mathcal {G}}=\mathbb {F}_{j-1}\) for \(r\in (0,1)\), \(s\in [0,T)\), \(x\in \mathbb {R}^d\) in the notation of Lemma 2.4), e.g., Hutzenthaler et al. [45, Lemma 2.3], the hypothesis that W and \(\mathfrak {r}^{(j)}\) are independent, (27), and the fact that for all \(r\in [0,T)\), \(s\in (r,T]\) it holds that \(\varrho (r,s)=\frac{1}{T-r}\rho (\frac{s-r}{T-r})\) ensure that it holds \({\mathbb {P}}\)-a.s. that

This establishes (28) in the base case \(k=j+1\). For the induction step \(\{2,3\ldots ,j+1\} \ni k+1\rightsquigarrow k \in \{1,2,\ldots ,j\}\) assume that there exists \(k\in \{1,2,\ldots ,j\}\) which satisfies that

Observe that the tower property, the fact that the random variable \(\frac{1}{\varrho (S(k-1,t),S(k,t))} \big ( 1, \frac{ W_{S(k,t)}- W_{S(k-1,t)} }{S(k,t)-S(k-1,t) } \big ) \) is \(\mathbb F_k/{\mathcal {B}}(\mathbb {R})\)-measurable, and (30) ensure that it holds \({\mathbb {P}}\)-a.s. that

The fact that \(S(k,t)=S(k-1,t)+(T-S(k-1,t))\mathfrak {r}^{(k-1)}\) and Lemma 2.4 (applied with \(\rho =\mathfrak {r}^{(k-1)}\), \(\tau =S(k-1,t)\), \({\mathcal {G}}=\mathbb {F}_{k-2}\) in the notation of Lemma 2.4) hence prove that it holds \({\mathbb {P}}\)-a.s. that

This, e.g., Hutzenthaler et al. [45, Lemma 2.3], the hypothesis that W and \(\mathfrak {r}^{(k-1)}\) are independent, (27), and the fact that for all \(r\in [0,T)\), \(s\in (r,T]\) it holds that \(\varrho (r,s)=\frac{1}{T-r}\rho (\frac{s-r}{T-r})\) assure that it holds \({\mathbb {P}}\)-a.s. that

Induction thus proves (28). Next observe that (28) implies that

Corollary 2.3 (applied with \(\beta =\frac{p}{2}\) and \(\gamma =p-1\) in the notation of Corollary 2.3), the fact that \(\frac{p}{2}\in [\alpha (p-1),\alpha (p-1)+1]\), and the fact that \((\frac{p}{2}-\alpha (p-1))(1-(\frac{p}{2}-\alpha (p-1)))\le \frac{1}{4}\) therefore show that

Combining this with the fact that \(\alpha (p-1)-\frac{p}{2}+1\le p-1-\frac{p}{2}+1=\frac{p}{2}\) establishes (26). The proof of Corollary 2.5 is thus completed. \(\square \)
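
The elementary bound \((\frac{p}{2}-\alpha (p-1))(1-(\frac{p}{2}-\alpha (p-1)))\le \frac{1}{4}\) used in the proof above can be checked directly. As a short worked step (the abbreviation \(x\) is introduced here only for illustration and is not part of the original notation), write \(x=\frac{p}{2}-\alpha (p-1)\). The fact that \(\frac{p}{2}\in [\alpha (p-1),\alpha (p-1)+1]\) gives \(x\in [0,1]\), and completing the square yields

$$\begin{aligned} x(1-x)=\tfrac{1}{4}-\big (x-\tfrac{1}{2}\big )^2\le \tfrac{1}{4}. \end{aligned}$$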

3 Full-History Recursive Multilevel Picard Approximation Methods

In this section we introduce and analyze a class of new MLP approximation methods for nonlinear heat equations with gradient-dependent nonlinearities. In the main result of this section, Proposition 3.5 in Sect. 3.3 below, we provide a detailed error analysis for these new MLP approximation methods. We will employ Proposition 3.5 in our proofs of the approximation results in Sect. 5 below (cf. Corollary 5.1, Theorem 5.2, Corollary 5.4, and Corollary 5.5 in Sect. 5 below). Our proof of Proposition 3.5 employs suitable recursive error bounds for the proposed MLP approximations, which we establish in Lemma 3.4 below. Our proof of Lemma 3.4, in turn, uses appropriate measurability and distribution properties of the proposed MLP approximations, which we establish in the elementary auxiliary result in Lemma 3.2 below, and appropriate explicit representations for expectations of the proposed MLP approximations, which we establish in the auxiliary result in Lemma 3.3 below. In Setting 3.1 we formulate the mathematical framework which we employ to analyze the proposed MLP approximations. In particular, in (39) in Setting 3.1 we introduce the proposed MLP approximations.

3.1 Description of MLP Approximations

Setting 3.1

Let \(\left\| \cdot \right\| _1:(\cup _{n\in \mathbb {N}}\mathbb {R}^n)\rightarrow \mathbb {R}\) satisfy for all \(n\in \mathbb {N}\), \(x=(x_1,x_2,\ldots ,x_n)\in \mathbb {R}^n\) that \(\Vert x\Vert _1=\sum _{i=1}^n |x_i|\), let \( T \in (0,\infty ) \), \( d \in \mathbb {N}\), \( \Theta = \cup _{ n \in \mathbb {N}} \mathbb {Z}^n \), \(L=(L_1,L_2,\ldots ,L_{d+1})\in [0,\infty )^{d+1}\), \(K=(K_1,K_2,\ldots , K_d)\in [0,\infty )^d\), \(\mathbf {e}_1=(1,0,\ldots ,0)\), \(\mathbf {e}_2=(0,1,0,\ldots ,0)\), ..., \(\mathbf {e}_{d+1}=(0,0,\ldots ,1)\in \mathbb {R}^{d+1}\), \(\rho \in C((0,1),(0,\infty ))\), \(\mathbf{u}=(\mathbf{u}_1, \mathbf{u}_2, \ldots , \mathbf{u}_{d+1}) \in C([0,T)\times \mathbb {R}^d,\mathbb {R}^{1+d})\), let \(\langle \cdot , \cdot \rangle :\mathbb {R}^{d+1}\times \mathbb {R}^{d+1} \rightarrow \mathbb {R}\) satisfy for all \(v=(v_1,v_2,\ldots , v_{d+1})\), \(w=(w_1,w_2,\ldots , w_{d+1})\in \mathbb {R}^{d+1}\) that \(\langle v, w\rangle =\sum _{i=1}^{d+1}v_iw_i\), let \(\varrho :[0,T]^2\rightarrow \mathbb {R}\) satisfy for all \(t\in [0,T)\), \(s\in (t,T)\) that \(\varrho (t,s)=\frac{1}{T-t}\rho (\frac{s-t}{T-t})\), let \( ( \Omega , \mathcal {F}, {\mathbb {P}}) \) be a probability space, let \( W^{ \theta }=(W^{\theta ,1}, W^{\theta ,2}, \ldots , W^{\theta ,d}) :[0,T] \times \Omega \rightarrow \mathbb {R}^d \), \( \theta \in \Theta \), be i.i.d. standard Brownian motions with continuous sample paths, let \(\mathfrak {r}^\theta :\Omega \rightarrow (0,1)\), \(\theta \in \Theta \), be i.i.d. 
random variables, assume for all \(b\in (0,1)\) that \({\mathbb {P}}(\mathfrak {r}^0\le b)=\int _0^b \rho (s)\,ds\), assume that \((W^\theta )_{\theta \in \Theta }\) and \((\mathfrak {r}^\theta )_{\theta \in \Theta }\) are independent, let \(\mathcal {R}^{\theta }:[0,T)\times \Omega \rightarrow [0,T)\), \(\theta \in \Theta \), satisfy for all \(\theta \in \Theta \), \(t\in [0,T)\) that \(\mathcal {R}^{\theta } _t = t+ (T-t)\mathfrak {r}^{\theta }\), let \( f\in C([0,T]\times \mathbb {R}^d\times \mathbb {R}^{1+d},\mathbb {R})\), \( g\in C(\mathbb {R}^d, \mathbb {R}) \), \( F:C([0,T)\times \mathbb {R}^d,\mathbb {R}^{1+d}) \rightarrow C([0,T)\times \mathbb {R}^d,\mathbb {R}) \) satisfy for all \(t\in [0,T)\), \(x=(x_1,x_2,\ldots ,x_d)\), \(\mathfrak {x}=(\mathfrak {x}_1, \mathfrak {x}_2,\ldots ,\mathfrak {x}_d)\in \mathbb {R}^d\), \(u=(u_1,u_2,\ldots ,u_{d+1})\), \(\mathfrak {u}=(\mathfrak {u}_1,\mathfrak {u}_2,\ldots ,\mathfrak {u}_{d+1})\in \mathbb {R}^{1+d}\), \(\mathbf{v}\in C([0,T)\times \mathbb {R}^d,\mathbb {R}^{1+d})\) that

and \((F(\mathbf{v}))(t,x)=f(t,x,\mathbf{v}(t,x))\), and let \( \mathbf{U}_{ n,M}^{\theta }=(\mathbf{U}_{ n,M}^{\theta ,1}, \mathbf{U}_{ n,M}^{\theta ,2}, \ldots , \mathbf{U}_{ n,M}^{\theta ,d+1}) :[0,T)\times \mathbb {R}^d\times \Omega \rightarrow \mathbb {R}^{1+d} \), \(n,M\in \mathbb {Z}\), \(\theta \in \Theta \), satisfy for all \( n,M \in \mathbb {N}\), \( \theta \in \Theta \), \( t\in [0,T)\), \(x \in \mathbb {R}^d\) that \( \mathbf{U}_{-1,M}^{\theta }(t,x)=\mathbf{U}_{0,M}^{\theta }(t,x)=0\) and

3.2 Properties of MLP Approximations

Lemma 3.2

(Measurability properties) Assume Setting 3.1 and let \(M\in \mathbb {N}\). Then

-

(i)

for all \(n \in \mathbb {N}_0\), \(\theta \in \Theta \) it holds that \( \mathbf{U}_{ n,M}^{\theta } :[0, T) \times \mathbb {R}^d \times \Omega \rightarrow \mathbb {R}^{d+1} \) is a continuous random field,

-

(ii)

for all \(n \in \mathbb {N}_0\), \(\theta \in \Theta \) it holds that \( \sigma ( \mathbf{U}^\theta _{n, M} ) \subseteq \sigma ( (\mathfrak {r}^{(\theta , \vartheta )})_{\vartheta \in \Theta }, (W^{(\theta , \vartheta )})_{\vartheta \in \Theta }) \),

-

(iii)

for all \(n, m \in \mathbb {N}_0\), \(i,j,k,l \in \mathbb {Z}\), \(\theta \in \Theta \) with \((i,j) \ne (k,l)\) it holds that \( \mathbf{U}^{(\theta ,i,j)}_{n,M} \) and \( \mathbf{U}^{(\theta ,k,l)}_{m,M} \) are independent,

-

(iv)

for all \(n \in \mathbb {N}_0\), \(\theta \in \Theta \) it holds that \(\mathbf{U}_{ n,M}^{\theta }\), \(W^\theta \), and \(\mathfrak {r}^\theta \) are independent,

-

(v)

for all \(n \in \mathbb {N}_0\) it holds that \( \mathbf{U}^\theta _{n, M} \), \( \theta \in \Theta \), are identically distributed, and

-

(vi)

for all \(\theta \in \Theta \), \(l\in \mathbb {N}\), \(i\in \mathbb {N}\), \(t\in [0,T)\), \(x\in \mathbb {R}^d\) it holds that

$$\begin{aligned} \tfrac{ F( \mathbf{U}_{l-1,M}^{(\theta ,-l,i)}) (\mathcal {R}^{(\theta , l,i)}_t,x+W_{\mathcal {R}^{(\theta , l,i)}_t}^{(\theta ,l,i)}-W_t^{(\theta ,l,i)}) }{\varrho (t,\mathcal {R}^{(\theta , l,i)}_t)} \left( 1 , \tfrac{ W_{\mathcal {R}^{(\theta , l,i)}_t}^{(\theta ,l,i)}- W^{(\theta , l, i)}_{t} }{ \mathcal {R}^{(\theta , l,i)}_t-t} \right) \end{aligned}\tag{40}$$

and

$$\begin{aligned} \tfrac{ F( \mathbf{U}_{l-1,M}^{(\theta ,l,i)}) (\mathcal {R}^{(\theta , l,i)}_t,x+W_{\mathcal {R}^{(\theta , l,i)}_t}^{(\theta ,l,i)}-W_t^{(\theta ,l,i)}) }{\varrho (t,\mathcal {R}^{(\theta , l,i)}_t)} \left( 1 , \tfrac{ W_{\mathcal {R}^{(\theta , l,i)}_t}^{(\theta ,l,i)}- W^{(\theta , l, i)}_{t} }{ \mathcal {R}^{(\theta , l,i)}_t-t} \right) \end{aligned}\tag{41}$$

are identically distributed.

Proof of Lemma 3.2

First, observe that (39), the hypothesis that for all \(M \in \mathbb {N}\), \(\theta \in \Theta \) it holds that \(\mathbf{U}^\theta _{0, M} = 0\), the fact that for all \(\theta \in \Theta \) it holds that \(W^\theta \) and \(\mathcal {R}^\theta \) are continuous random fields, the hypothesis that \(f\in C([0,T]\times \mathbb {R}^d \times \mathbb {R}\times \mathbb {R}^d, \mathbb {R})\), the hypothesis that \(g\in C(\mathbb {R}^d, \mathbb {R})\), the fact that \(\varrho |_{\{(s,t)\in [0,T)^2:s<t\}}\in C( \{(s,t)\in [0,T)^2:s<t\}, \mathbb {R}) \), and induction on \(\mathbb {N}_0\) establish Item (i). Next note that Item (i), the hypothesis that \(f\in C([0,T]\times \mathbb {R}^d \times \mathbb {R}\times \mathbb {R}^d, \mathbb {R})\), and, e.g., Beck et al. [2, Lemma 2.4] assure that for all \(n \in \mathbb {N}_0\), \(\theta \in \Theta \) it holds that \(F(\mathbf{U}^\theta _{n, M})\) is \( ( \mathcal {B}((0, T) \times \mathbb {R}^d ) \otimes \sigma (\mathbf{U}^\theta _{n, M}) )/ \mathcal {B}(\mathbb {R})\)-measurable. The hypothesis that for all \(M \in \mathbb {N}\), \(\theta \in \Theta \) it holds that \(\mathbf{U}^\theta _{0, M} = 0\), (39), the fact that for all \(\theta \in \Theta \) it holds that \(W^\theta \) is \( ( \mathcal {B}([0, T]) \otimes \sigma (W^\theta ) )/ \mathcal {B}(\mathbb {R})\)-measurable, the fact that for all \(\theta \in \Theta \) it holds that \(\mathcal {R}^\theta \) is \( ( \mathcal {B}([0, T)) \otimes \sigma (\mathfrak {r}^\theta ) )/ \mathcal {B}([0, T) )\)-measurable, and induction on \(\mathbb {N}_0\) hence prove Item (ii). In addition, note that Item (ii) and the fact that for all \(i,j,k,l, \in \mathbb {Z}\), \(\theta \in \Theta \) with \((i,j) \ne (k,l)\) it holds that \(((\mathfrak {r}^{(\theta ,i,j, \vartheta )}, W^{(\theta ,i,j, \vartheta )}))_{\vartheta \in \Theta }\) and \(((\mathfrak {r}^{(\theta ,k,l, \vartheta )}, W^{(\theta ,k,l, \vartheta )}))_{\vartheta \in \Theta }\) are independent prove Item (iii). 
Furthermore, observe that Item (ii) and the fact that for all \(\theta \in \Theta \) it holds that \((\mathfrak {r}^{(\theta , \vartheta )})_{\vartheta \in \Theta }\), \((W^{(\theta , \vartheta )})_{\vartheta \in \Theta }\), \(W^\theta \), and \(\mathfrak {r}^\theta \) are independent establish Item (iv). Next observe that the hypothesis that for all \(\theta \in \Theta \) it holds that \(\mathbf{U}^\theta _{0, M} = 0\), the hypothesis that \((W^\theta )_{\theta \in \Theta }\) are i.i.d., the hypothesis that \((\mathcal {R}^\theta )_{\theta \in \Theta }\) are i.i.d., Items (i)–(iii), Hutzenthaler et al. [45, Corollary 2.5], and induction on \(\mathbb {N}_0\) establish Item (v). Furthermore, observe that Item (ii), the fact that for all \(\theta \in \Theta \), \(l\in \mathbb {N}\), \(i\in \mathbb {N}\) it holds that \((\mathfrak {r}^{(\theta ,-l,i, \vartheta )})_{\vartheta \in \Theta }\), \((W^{(\theta ,-l,i, \vartheta )})_{\vartheta \in \Theta }\), \(W^{\theta ,l,i}\), and \(\mathfrak {r}^{\theta ,l,i}\) are independent, and, e.g., Hutzenthaler et al. [45, Lemma 2.3] imply that for every \(\theta \in \Theta \), \(l,i\in \mathbb {N}\), \(t\in [0,T)\), \(x\in \mathbb {R}^d\) and every bounded \({\mathcal {B}}(\mathbb {R}^{d+1})/{\mathcal {B}}(\mathbb {R})\)-measurable \(\psi :\mathbb {R}^{d+1}\rightarrow \mathbb {R}\) it holds that

This, Item (v), Item (ii), the fact that for all \(\theta \in \Theta \), \(l, i\in \mathbb {N}\) it holds that \((\mathfrak {r}^{(\theta ,l,i, \vartheta )})_{\vartheta \in \Theta }\), \((W^{(\theta ,l,i, \vartheta )})_{\vartheta \in \Theta }\), \(W^{\theta ,l,i}\), and \(\mathfrak {r}^{\theta ,l,i}\) are independent, and, e.g., Hutzenthaler et al. [45, Lemma 2.3] imply that for every \(\theta \in \Theta \), \(l,i\in \mathbb {N}\), \(t\in [0,T)\), \(x\in \mathbb {R}^d\) and every bounded \({\mathcal {B}}(\mathbb {R}^{d+1})/{\mathcal {B}}(\mathbb {R})\)-measurable \(\psi :\mathbb {R}^{d+1}\rightarrow \mathbb {R}\) it holds that

This establishes Item (vi). The proof of Lemma 3.2 is thus completed. \(\square \)
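
The index bookkeeping behind Items (ii)–(iv) can be illustrated with a small sketch. It only models which indices in \(\Theta =\cup _{n\in \mathbb {N}}\mathbb {Z}^n\) a given approximation \(\mathbf{U}^\theta _{n,M}\) may depend on, namely the strict extensions \((\theta ,\vartheta )\) of \(\theta \); it does not simulate any random variables. The function names `is_strict_extension` and `families_disjoint` are hypothetical and chosen here for illustration only.

```python
from typing import Tuple

Index = Tuple[int, ...]  # an element of Theta, i.e. a finite tuple of integers


def is_strict_extension(theta: Index, other: Index) -> bool:
    """Return True if `other` has the form (theta, vartheta) with non-empty vartheta."""
    return len(other) > len(theta) and other[: len(theta)] == theta


def families_disjoint(theta_a: Index, theta_b: Index) -> bool:
    """Return True if no index strictly extends both theta_a and theta_b.

    The two families of strict extensions overlap exactly when one index is a
    prefix of the other (equal indices included), so it suffices to compare
    the shorter index with the matching prefix of the longer one.
    """
    shorter, longer = sorted((theta_a, theta_b), key=len)
    return longer[: len(shorter)] != shorter


theta = (0,)
# Item (iii): (theta, 1, 2) and (theta, 1, 3) lead to disjoint index families.
print(families_disjoint(theta + (1, 2), theta + (1, 3)))  # True
# Item (iv): theta itself is not a strict extension of theta.
print(is_strict_extension(theta, theta))  # False
```

Disjoint index families translate into independence only through the hypotheses of Setting 3.1 that \((W^\theta )_{\theta \in \Theta }\) and \((\mathfrak {r}^\theta )_{\theta \in \Theta }\) are i.i.d. families that are independent of each other.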

Lemma 3.3

(Approximations are integrable) Assume Setting 3.1, let \(p\in (1,\infty )\), \(M \in \mathbb {N}\), \(x \in \mathbb {R}^d\), and assume for all \(q\in [1,p)\), \(t\in [0,T)\) that

Then

-

(i)

it holds for all \(\theta \in \Theta \), \(q\in [1,\infty )\), \(\nu \in \{1,2,\ldots , d+1\}\) that

$$\begin{aligned} \sup _{u\in (0,T]}\sup _{t\in [0,u)}\sup _{y\in \mathbb {R}^d} {\mathbb {E}}\!\left[ \big |\big (g(y+W^{\theta }_u-W^{\theta }_t)-g(y)\big ) \big \langle \mathbf {e}_\nu , \big ( 1 , \tfrac{ W^{\theta }_{u}- W^{\theta }_{t} }{ u - t } \big ) \big \rangle \big |^q\right] <\infty , \end{aligned}\tag{45}$$

-

(ii)

it holds for all \(n\in \mathbb {N}_0\), \(\theta \in \Theta \), \(q\in [1,p)\), \(t\in [0,T)\), \(\nu \in \{1,2,\ldots , d+1\}\) that

$$\begin{aligned}&\sup _{s\in [t,T)} \left| {\mathbb {E}}\!\left[ \left| (\mathbf{U}_{n,M}^{\theta }(s,x+W_{s}^\theta -W_{t}^\theta ))_\nu \right| ^q \right] \right. \nonumber \\&\left. \quad + {\mathbb {E}}\!\left[ \tfrac{ \big | \big (F(\mathbf{U}_{n,M}^{\theta })\big ) (\mathcal {R}^{\theta }_s,x+W_{\mathcal {R}^{\theta }_s}^{\theta }-W_t^{\theta }) \big |^q }{\left[ \varrho (s,\mathcal {R}^{\theta }_s)\right] ^q} \left| \big \langle \mathbf {e}_\nu , \big ( 1 , \tfrac{ W_{\mathcal {R}^{\theta }_s}^{\theta }- W^{\theta }_{s} }{ \mathcal {R}^{\theta }_s-s} \big ) \big \rangle \right| ^q \right] \right| <\infty , \end{aligned}\tag{46}$$

and

-

(iii)

it holds for all \(n\in \mathbb {N}\), \(\theta \in \Theta \), \(t\in [0,T)\) that

$$\begin{aligned} {\mathbb {E}}\!\left[ \mathbf{U}_{n,M}^{\theta }(t,x)\right]&={\mathbb {E}}\!\left[ g(x+W_T^{\theta }-W_t^{\theta }) \Big ( 1 , \tfrac{ W^{\theta }_{T}- W^{\theta }_{t} }{ T - t } \Big ) \right] \nonumber \\&\quad + {\mathbb {E}}\!\left[ \left( F( \mathbf{U}_{n-1,M}^{\theta })\right) \!(\mathcal {R}^{\theta }_t,x+W_{\mathcal {R}^{\theta }_t}^{\theta }-W_t^{\theta }) \Big ( 1 , \tfrac{ W^{\theta }_{\mathcal {R}^{\theta }_t}- W^{\theta }_{t} }{ {\mathcal {R}^{\theta }_t - t} } \Big ) \right] . \end{aligned}\tag{47}$$

Proof of Lemma 3.3

Observe that the Cauchy–Schwarz inequality, (36), Jensen’s inequality, and the fact that for all \(u\in (0,T]\), \(t\in [0,u)\), \(i\in \{1,2,\ldots ,d\}\), \(\theta \in \Theta \) it holds that \(W^{\theta ,i}_u-W^{\theta ,i}_t\) and \((u-t)^{1/2}T^{-1/2}W^{0,1}_T\) are identically distributed yield that for all \(\theta \in \Theta \), \(q\in [1,\infty )\), \(\nu \in \{1,2,\ldots , d+1\}\) it holds that

This proves Item (i). Next observe that Lemma 3.2 ensures that for all \(n\in \mathbb {N}_0\), \(\theta \in \Theta \) it holds that \(W^{\theta }\), \(\mathcal {R}^{\theta }\), and \(\mathbf{U}_{n,M}^{\theta }\) are independent continuous random fields. Combining this and, e.g., Hutzenthaler et al. [45, Lemma 2.3] with Hölder’s inequality (applied with \(p=\frac{p+q}{2q}\), \(q=\frac{p+q}{p-q}\) in the notation of Hölder’s inequality) and the fact that for all \(s\in [0,T]\), \(h\in (0,\infty )\), \(\theta \in \Theta \) it holds that \(W^{\theta ,1}_{s+h}-W^{\theta ,1}_s\) and \(h^{1/2}T^{-1/2}W^{0,1}_T\) are identically distributed demonstrates that for all \(t\in [0,T)\), \(s\in [t,T)\), \(n \in \mathbb {N}_0\), \(\theta \in \Theta \), \(q\in [1,p)\), \(\nu \in \{1,2,\ldots ,d+1\}\) it holds that

In the next step we prove (46) by induction on \(n\in \mathbb {N}_0\). For the base case \(n=0\) observe that (49), (44), and the fact that for all \(\theta \in \Theta \) it holds that \(\mathbf{U}^\theta _{0,M} = 0\) ensure that for all \(\theta \in \Theta \), \(q\in [1,p)\), \(t\in [0,T)\), \(\nu \in \{1,2,\ldots , d+1\}\) it holds that

This establishes (46) in the base case \(n=0\). For the induction step \(\mathbb {N}_0 \ni n-1 \rightsquigarrow n \in \mathbb {N}\) assume that there exists \(n\in \mathbb {N}\) which satisfies for all \(k \in \mathbb {N}_0 \cap [0, n)\), \(\theta \in \Theta \), \(q\in [1,p)\), \(t\in [0,T)\), \(\nu \in \{1,2,\ldots , d+1\}\) that

Observe that (39) and Jensen’s inequality ensure that for all \(\theta \in \Theta \), \(q\in [1,p)\), \(t\in [0,T)\), \(s \in [t, T)\), \(\nu \in \{1,2,\ldots , d+1\}\) it holds that

This, e.g., Hutzenthaler et al. [45, Corollary 2.5], Lemma 3.2, Item (i), and (51) yield that for all \(\theta \in \Theta \), \(q\in [1,p)\), \(t\in [0,T)\), \(\nu \in \{1,2,\ldots , d+1\}\) it holds that

Jensen’s inequality and (44) hence yield that for all \(\theta \in \Theta \), \(q\in [1,p)\), \(t\in [0,T)\) it holds that

This, (49), and (44) imply that for all \(\theta \in \Theta \), \(q\in [1,p)\), \(t\in [0,T)\), \(\nu \in \{1,2,\ldots , d+1\}\) it holds that

Combining this with (53) demonstrates that for all \(\theta \in \Theta \), \(q\in [1,p)\), \(t\in [0,T)\), \(\nu \in \{1,2,\ldots , d+1\}\) it holds that

Induction thus proves Item (ii). Next we prove Item (iii). Note that Item (ii), e.g., Hutzenthaler et al. [45, Corollary 2.5], Item (v) of Lemma 3.2, the fact that \((\mathcal {R}^{\theta },W^{\theta })\), \(\theta \in \Theta \), are identically distributed, e.g., Hutzenthaler et al. [45, Lemma 2.3], and the fact that for every \(t\in [0,T)\), \(s\in [t,T]\), \(\theta \in \Theta \) it holds that \({\mathbb {P}}(\mathcal {R}^{\theta }_t\le s)=\int _t^s\varrho (t,r)\,dr\) yield that for all \(n\in \mathbb {N}\), \(\theta \in \Theta \), \(t\in [0,T)\) it holds that

This establishes Item (iii). The proof of Lemma 3.3 is thus completed. \(\square \)
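
The role of the factor \(\frac{1}{\varrho (t,\mathcal {R}^{\theta }_t)}\) in expressions such as (40) can be made explicit by a short computation. As a sketch, assume that \(h:(t,T)\rightarrow \mathbb {R}\) is a measurable function with \(\int _t^T |h(s)|\,ds<\infty \) (the function \(h\) is a placeholder introduced here for illustration only). Since \({\mathbb {P}}(\mathcal {R}^{\theta }_t\le s)=\int _t^s\varrho (t,r)\,dr\), the random variable \(\mathcal {R}^{\theta }_t\) has the density \(\varrho (t,\cdot )\) on \((t,T)\), and since \(\rho \) takes values in \((0,\infty )\) it holds that

$$\begin{aligned} {\mathbb {E}}\!\left[ \tfrac{h(\mathcal {R}^{\theta }_t)}{\varrho (t,\mathcal {R}^{\theta }_t)}\right] =\int _t^T \tfrac{h(s)}{\varrho (t,s)}\,\varrho (t,s)\,ds =\int _t^T h(s)\,ds. \end{aligned}$$

In this way a time integral over \((t,T)\) is represented by the expectation of a single evaluation at a random time point.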

3.3 Error Analysis for MLP Approximations

Lemma 3.4

(Recursive bound for global error) Assume Setting 3.1, let \(p\in (1,\infty )\), \(M \in \mathbb {N}\), let \(S:\mathbb {N}_0 \times [0,T)\times \Omega \rightarrow [0,T)\) satisfy for all \(\theta \in \Theta \), \(n \in \mathbb {N}_0\), \(t\in [0,T)\) that \(S(0,t)=t\) and \(S(n+1,t)=\mathcal {R}^{(n)}_{S(n,t)}\), and assume for all \(q\in [1,p)\), \(t\in [0,T)\), \(x\in \mathbb {R}^d\) that

Then it holds for all \(n\in \mathbb {N}\), \(t\in [0,T)\), \(x\in \mathbb {R}^d\), \(\nu _0 \in \{1,2,\ldots , d+1\}\) that

Proof of Lemma 3.4

First, we analyze the Monte Carlo error. Item (i) of Lemma 3.3, Item (ii) of Lemma 3.3, and Item (vi) of Lemma 3.2 ensure that for all \(l\in \mathbb {N}\), \(x\in \mathbb {R}^d\), \(t\in [0,T)\), \(\nu \in \{1,2,\ldots ,d+1\}\) it holds that

Moreover, observe that Lemma 3.2 yields that

-

(a)

it holds for all \(x\in \mathbb {R}^d\), \(t\in [0,T)\), \(\nu \in \{1,2,\ldots ,d+1\}\) that

$$\begin{aligned} \big (g(x+W_T^{(0,0,-i)}-W_t^{(0,0,-i)})-g(x)\big ) \big \langle \mathbf {e}_\nu , \big ( 1 , \tfrac{ W^{(0,0,-i)}_{T}- W^{(0,0,-i)}_{t} }{ T - t } \big )\big \rangle ,\quad i\in \mathbb {N}_0, \end{aligned}\tag{61}$$

are independent random variables,

-

(b)

it holds for all \(x\in \mathbb {R}^d\), \(t\in [0,T)\), \(\nu \in \{1,2,\ldots ,d+1\}\) that

$$\begin{aligned} \tfrac{ (F( \mathbf{U}_{l,M}^{(0,l,i)})-\mathbb {1}_{\mathbb {N}}(l)F( \mathbf{U}_{l-1,M}^{(0,-l,i)})) (\mathcal {R}_t^{(0,l,i)},x+W_{\mathcal {R}_t^{(0,l,i)}}^{(0,l,i)}-W_t^{(0,l,i)}) }{\varrho (t,\mathcal {R}^{(0, l,i)}_t)} \Big \langle \mathbf {e}_\nu , \Big ( 1 , \tfrac{ W_{\mathcal {R}_t^{(0,l,i)}}^{(0,l,i)}-W_t^{(0,l,i)} }{ {\mathcal {R}_t^{(0,l,i)} - t} } \Big )\Big \rangle , \quad l,i\in \mathbb {N}_0, \end{aligned}\tag{62}$$

are independent random variables, and

-

(c)

it holds for all \(x\in \mathbb {R}^d\), \(t\in [0,T)\), \(\nu \in \{1,2,\ldots ,d+1\}\) that

$$\begin{aligned} \big (g(x+W_T^{(0,0,-i)}-W_t^{(0,0,-i)})-g(x)\big ) \big \langle \mathbf {e}_\nu , \big ( 1 , \tfrac{ W^{(0,0,-i)}_{T}- W^{(0,0,-i)}_{t} }{ T - t } \big )\big \rangle ,\quad i\in \mathbb {N}_0, \end{aligned}\tag{63}$$

and

$$\begin{aligned} \tfrac{ \left( F( \mathbf{U}_{l,M}^{(0,l,i)})-\mathbb {1}_{\mathbb {N}}(l)F( \mathbf{U}_{l-1,M}^{(0,-l,i)})\right) \! (\mathcal {R}_t^{(0,l,i)},x+W_{\mathcal {R}_t^{(0,l,i)}}^{(0,l,i)}-W_t^{(0,l,i)}) }{\varrho (t,\mathcal {R}^{(0, l,i)}_t)} \Big \langle \mathbf {e}_\nu , \Big ( 1 , \tfrac{ W_{\mathcal {R}_t^{(0,l,i)}}^{(0,l,i)}-W_t^{(0,l,i)} }{ {\mathcal {R}_t^{(0,l,i)} - t} } \Big )\Big \rangle , \quad l,i\in \mathbb {N}_0, \end{aligned}\tag{64}$$

are independent.

Combining this, (60), and (39) with the fact that for all \(N\in \mathbb {N}\), \(X_1, X_2, \ldots , X_N \in L^1({\mathbb {P}};\mathbb {R})\) with \(\forall \, i\in \{1,2,\ldots ,N\}\), \(j\in \{1,2,\ldots ,N\}\setminus \{i\}\), \(A,B\in {\mathcal {B}}(\mathbb {R}) :{\mathbb {P}}(\{X_i \in A\}\cap \{X_j\in B\})={\mathbb {P}}(X_i \in A){\mathbb {P}}(X_j\in B)\) it holds that \({{\text {Var}}}(\sum _{i=1}^NX_i)=\sum _{i=1}^N{{\text {Var}}}(X_i)\) implies that for all \(m\in \mathbb {N}\), \(x\in \mathbb {R}^d\), \(t\in [0,T)\), \(\nu \in \{1,2,\ldots ,d+1\}\) it holds that

The triangle inequality and (36) hence yield that for all \(m\in \mathbb {N}\), \(x\in \mathbb {R}^d\), \(t\in [0,T)\), \(\nu \in \{1,2,\ldots ,d+1\}\) it holds that

This and the triangle inequality ensure that for all \(m\in \mathbb {N}\), \(x\in \mathbb {R}^d\), \(t\in [0,T)\), \(\nu \in \{1,2,\ldots ,d+1\}\) it holds that

Next we analyze the time discretization error. Item (ii) of Lemma 3.3 ensures that for all \(m\in \mathbb {N}\), \(t\in [0,T)\), \(x\in \mathbb {R}^d\) it holds that

Combining this, (38), (37), Item (i) of Lemma 3.3, and Jensen’s inequality shows that for all \(m\in \mathbb {N}\), \(t\in [0,T)\), \(x\in \mathbb {R}^d\), \(\nu \in \{1,2,\ldots ,d+1\}\) it holds that

In the next step we combine the established bounds for the Monte Carlo error (see (67) above) and the time discretization error (see (69) above) to obtain a suitable bound for the overall approximation error. More formally, observe that (67) and (69) ensure that for all \(m\in \mathbb {N}\), \(t\in [0,T)\), \(x\in \mathbb {R}^d\), \(\nu \in \{1,2,\ldots , d+1\}\) it holds that

This shows that for all \(m\in \mathbb {N}\), \(t\in [0,T)\), \(x\in \mathbb {R}^d\), \(\nu \in \{1,2,\ldots , d+1\}\) it holds that

In the next step we iterate (71). More formally, we claim that for all \(n,k\in \mathbb {N}\), \(t\in [0,T)\), \(x\in \mathbb {R}^d\), \(\nu _0 \in \{1,2,\ldots , d+1\}\) it holds that

We now prove (72) by induction on \(k\in \mathbb {N}\). Observe that (71) establishes (72) in the base case \(k=1\). For the induction step \(\mathbb {N}\ni k\rightsquigarrow k+1\in \mathbb {N}\) assume that there exists \(k\in \mathbb {N}\) which satisfies that (72) holds for k. Observe that Item (iv) of Lemma 3.2 shows that for all \(m\in \mathbb {N}\) it holds that \(\mathbf{U}^0_{m,M}\), \(W^0\), and \((\mathfrak {r}^{(k)})_{k\in \mathbb {N}_0}\) are independent. Combining this and (71) yields that for all \(l_1\in \mathbb {N}\), \(x\in \mathbb {R}^d\), \(t\in [0,T)\), \(\nu _0,\nu _1,\ldots , \nu _{k} \in \{1,2,\ldots , d+1\}\) it holds that

This and the induction hypothesis establish (72) in the case \(k+1\). Induction thus proves (72). Next note that (72) (applied with \(k=n\) in the notation of (72)) demonstrates that for all \(n\in \mathbb {N}\), \(t\in [0,T)\), \(x\in \mathbb {R}^d\), \(\nu _0 \in \{1,2,\ldots , d+1\}\) it holds that

Combining this with the fact that for all \(n\in \mathbb {N}\), \(j\in \{0,1,\ldots , n-1\}\) it holds that

establishes (59). The proof of Lemma 3.4 is thus completed. \(\square \)
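
The random time points \(S(n,t)\) appearing in Lemma 3.4 follow the recursion \(S(0,t)=t\) and \(S(n+1,t)=\mathcal {R}^{(n)}_{S(n,t)}=S(n,t)+(T-S(n,t))\mathfrak {r}^{(n)}\). The following minimal sketch evaluates this recursion for given realizations of \(\mathfrak {r}^{(0)},\mathfrak {r}^{(1)},\ldots \), which are passed in as plain numbers in \((0,1)\); the function name `time_chain` is hypothetical and introduced for illustration only.

```python
from typing import List, Sequence


def time_chain(t: float, T: float, rs: Sequence[float]) -> List[float]:
    """Return [S(0,t), S(1,t), ..., S(n,t)] for given values r^(0), ..., r^(n-1).

    Uses S(0,t) = t and S(k+1,t) = S(k,t) + (T - S(k,t)) * r^(k).
    """
    if not 0.0 <= t < T:
        raise ValueError("need 0 <= t < T")
    chain = [t]
    for r in rs:
        if not 0.0 < r < 1.0:
            raise ValueError("each r must lie in (0, 1)")
        s = chain[-1]
        # Move a fraction r of the remaining distance towards T.
        chain.append(s + (T - s) * r)
    return chain


print(time_chain(0.0, 1.0, [0.5, 0.5, 0.5]))  # [0.0, 0.5, 0.75, 0.875]
```

Each step covers a fraction \(\mathfrak {r}^{(n)}\in (0,1)\) of the remaining distance to \(T\), which is why the time points are strictly increasing and stay below \(T\).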

Proposition 3.5

(Global approximation error) Assume Setting 3.1, let \(t \in [0,T)\), \(x\in \mathbb {R}^d\), \(\nu _0\in \{1,2,\ldots ,d+1\}\), \(M,n\in \mathbb {N}\), \(p\in [2,\infty )\), \(\alpha \in (\frac{p-2}{2(p-1)},\frac{p}{2(p-1)})\), \(\beta =\frac{\alpha }{2}-\frac{(1-\alpha )(p-2)}{2p}\), \(C\in \mathbb {R}\) satisfy that

and assume for all \(s\in (0,1)\) that \(\rho (s)=\frac{1-\alpha }{s^\alpha }\). Then

Proof of Proposition 3.5

Throughout this proof assume without loss of generality that

(otherwise (77) is clear), let \(S:\mathbb {N}_0 \times [0,T)\times \Omega \rightarrow [0,T)\) satisfy for all \(n \in \mathbb {N}_0\), \(t\in [0,T)\) that \(S(0,t)=t\) and \(S(n+1,t)=\mathcal {R}^{(n)}_{S(n,t)}\), and let \(\mathfrak C\in \mathbb {R}\) satisfy

Observe that the triangle inequality ensures that for all \(\nu \in \{1,2,\ldots ,d+1\}\), \(s\in [0,T)\) it holds that

Moreover, observe that (36) assures that for all \(j\in \{0,1,\ldots ,n-1\}\), \(\nu _1,\nu _2,\ldots ,\nu _j \in \{1,2,\ldots ,d+1\}\) it holds that

Next note that the hypothesis that \(p\in [2,\infty )\) and the fact that \(\alpha \in (\frac{p-2}{2(p-1)},\frac{p}{2(p-1)})\) imply that \(\frac{p}{2}\in [\alpha (p-1),\alpha (p-1)+1]\) and \(\alpha \in (0,1)\). This, (81), (80), and Corollary 2.5 (applied with \(p=2\) in the notation of Corollary 2.5) show that for all \(j\in \{0,1,\ldots ,n-1\}\), \(\nu _1,\nu _2,\ldots ,\nu _j \in \{1,2,\ldots ,d+1\}\) it holds that

The fact that \(\Gamma (\frac{p+1}{2})\ge \Gamma ( \frac{3}{2})=\frac{\sqrt{\pi }}{2}\), the fact that \(\frac{2(p-1)}{p}\ge 1\), and the fact that \(\alpha < 1\) prove that

Moreover, note that the fact that \(p\ge 2\) ensures that for all \(j\in \mathbb {N}_0\) it holds that \((ej)^{1/8}\le \left[ e\left( \tfrac{pj}{2}+1\right) \right] ^{1/8}\) and \(\Gamma (j+1)^{\alpha /2}\ge \Gamma (j+1)^{\beta }\). Combining this with (82) and (83) proves that for all \(j\in \{0,1,\ldots ,n-1\}\), \(\nu _1,\nu _2,\ldots ,\nu _j \in \{1,2,\ldots ,d+1\}\) it holds that

Corollary 2.5, the fact that \(p\ge 2\), the fact that \(\alpha >\frac{p-2}{2(p-1)}\), and the fact that \(\frac{2}{p}\Gamma (\frac{2}{p})\le 1\) prove that for all \(j\in \{0,1,\ldots ,n-1\}\), \(\nu _1,\nu _2,\ldots ,\nu _j \in \{1,2,\ldots ,d+1\}\) it holds that

Combining this with Hölder’s inequality and the fact that the random variables \(W^0\) and \(\mathfrak {r}^{(n)}\), \(n\in \mathbb {N}\), are independent proves that for all \(j\in \{0,1,\ldots ,n-1\}\), \(\nu _1,\nu _2,\ldots ,\nu _{j+1} \in \{1,2,\ldots ,d+1\}\) it holds that

Moreover, observe that for all \(j\in \{0,1,\ldots ,n-1\}\), \(\nu _1,\nu _2,\ldots ,\nu _{j+1} \in \{1,2,\ldots ,d+1\}\) it holds that

In the next step we intend to apply Lemma 3.4. For this we now verify the hypotheses of Lemma 3.4. More formally, observe that the fact that \(\sup _{s\in [t,T)} \Vert (F(0))(s,x+W^0_{s}-W^0_t) \Vert _{L^{2p/(p-2)}({\mathbb {P}};\mathbb {R})}<\infty \) and the fact that \(\tfrac{2p}{p-2}\ge 2\) ensure that for all \(r\in [1,2)\) it holds that

Moreover, observe that for all \(r\in [1,2)\) it holds that

Combining this, (88), and Lemma 3.4 with (84), (86), and (87) proves that

Moreover, observe that for all \(j\in \mathbb {N}_0\) it holds that

Combining this with (90) proves that

This, the fact that \(2\sqrt{\mathfrak C}\Vert L\Vert _1\le 2\sqrt{\mathfrak C}\max \{1,\Vert L\Vert _1\}\le C\), the fact that \(p\ge 2\), and the fact that \(C\ge 1\) imply that

Next note that for all \(j\in \mathbb {N}_0\) it holds that \(\sum _{i=0}^{n-1} \binom{n-1}{i} = 2^{n-1}\) and

Combining this with (92) assures that

This establishes (77). The proof of Proposition 3.5 is thus completed. \(\square \)
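
For the density \(\rho (s)=\frac{1-\alpha }{s^\alpha }\) assumed in Proposition 3.5, the random variables \(\mathfrak {r}^\theta \) can be simulated by inverse transform sampling, since \(\int _0^b \rho (s)\,ds=b^{1-\alpha }\) for \(b\in (0,1)\). The following sketch, which uses only the Python standard library, draws such samples and checks numerically that averaging \(h(\mathcal {R}_t)/\varrho (t,\mathcal {R}_t)\) approximates \(\int _t^T h(s)\,ds\), as the density \(\varrho (t,\cdot )\) of \(\mathcal {R}_t\) suggests. The function names, the test function \(h\), and the parameter values are assumptions made for illustration; this is not the MLP scheme (39) itself.

```python
import random
from typing import Callable


def sample_r(alpha: float, rng: random.Random) -> float:
    """Draw r in (0, 1) with density rho(s) = (1 - alpha) / s**alpha.

    The distribution function is b -> b**(1 - alpha), so inverting it at a
    uniform u gives r = u**(1 / (1 - alpha)).
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("need alpha in (0, 1)")
    u = rng.random()
    while u == 0.0:  # random() returns values in [0, 1); exclude 0
        u = rng.random()
    return u ** (1.0 / (1.0 - alpha))


def mc_time_integral(h: Callable[[float], float], t: float, T: float,
                     alpha: float, n_samples: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the integral of h over (t, T) using R_t samples."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        r = sample_r(alpha, rng)
        s = t + (T - t) * r  # realization of R_t = t + (T - t) * r
        # 1 / varrho(t, s) with varrho(t, s) = rho((s - t) / (T - t)) / (T - t)
        weight = (T - t) * r**alpha / (1.0 - alpha)
        total += h(s) * weight
    return total / n_samples


# Hypothetical check: the integral of h(s) = s over (0, 1) equals 1/2.
estimate = mc_time_integral(lambda s: s, 0.0, 1.0, 0.5, 20000, seed=1)
print(abs(estimate - 0.5) < 0.05)
```

The weight is computed from \(\mathfrak {r}\) directly rather than by dividing by \(\varrho \), which avoids a division by a very small number when a sample lies close to \(t\).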

4 Regularity Analysis for Solutions of Certain Differential Equations

The error analysis in Sect. 3.3 above provides upper bounds for the approximation errors of the MLP approximations in (39). The established upper bounds contain certain norms of the unknown exact solutions of the PDEs which we intend to approximate; see, e.g., the right-hand side of (77) in Proposition 3.5 in Sect. 3.3 above for details. In Lemma 4.2 below we establish suitable upper bounds for these norms of the unknown exact solutions of the PDEs which we intend to approximate. In our proof of Lemma 4.2 we employ certain a priori estimates for solutions of BSDEs which we establish in the essentially well-known result in Lemma 4.1 below (see, e.g., El Karoui et al. [22, Proposition 2.1 and Equation (2.12)] for results related to Lemma 4.1).

4.1 Regularity Analysis for Solutions of Backward Stochastic Differential Equations (BSDEs)

Lemma 4.1

Let \(T\in (0,\infty )\), \(t\in [0,T)\), \(d\in \mathbb {N}\), \(L,\mathfrak {L}\in [0,\infty )\), let \(\left\| \cdot \right\| :\mathbb {R}^d \rightarrow [0,\infty )\) be the standard norm on \(\mathbb {R}^d\), let \( \langle \cdot , \cdot \rangle :\mathbb {R}^d \times \mathbb {R}^d \rightarrow \mathbb {R} \) be the standard scalar product on \(\mathbb {R}^d\), let \((\Omega ,\mathcal {F},{\mathbb {P}})\) be a probability space with a normal filtration \((\mathbb {F}_s)_{s\in [t,T]}\), let \(f_1, f_2 :[t,T] \times \mathbb {R}\times \mathbb {R}^d \times \Omega \rightarrow \mathbb {R}\) be functions, assume for all \(s\in [t,T]\), \(i\in \{1,2\}\) that the function \([t,s] \times \mathbb {R}\times \mathbb {R}^d \times \Omega \ni (u,y,z, \omega ) \mapsto f_i(u,y,z,\omega )\in \mathbb {R}\) is \(({\mathcal {B}}([t,s]) \otimes \mathcal {B}(\mathbb {R}) \otimes \mathcal {B}(\mathbb {R}^d)\otimes \mathbb {F}_s)/\mathcal {B}(\mathbb {R})\)-measurable, assume that for all \(s\in [t,T]\), \(y,\mathfrak {y}\in \mathbb {R}\), \(z,\mathfrak {z}\in \mathbb {R}^d\) it holds \({\mathbb {P}}\)-a.s. that

let \(Y^1,Y^2:[t,T]\times \Omega \rightarrow \mathbb {R}\) and \(W:[t,T]\times \Omega \rightarrow \mathbb {R}^d\) be \((\mathbb {F}_s)_{s\in [t,T]}\)-adapted stochastic processes with continuous sample paths, assume that \((W_{s+t}-W_{t})_{s\in [0,T-t]}\) is a standard Brownian motion, let \(Z^k=(Z^{k,1},Z^{k,2}, \ldots , Z^{k,d}) :[t,T]\times \Omega \rightarrow \mathbb {R}^d\), \(k\in \{1,2\}\), be \((\mathbb {F}_s)_{s\in [t,T]}\)-adapted \((\mathcal {B}([t,T])\otimes \mathcal {F})\)/\(\mathcal {B}(\mathbb {R}^d)\)-measurable stochastic processes, assume that \(\sum _{i=1}^2{\mathbb {E}}\big [\sup _{s\in [t,T]}|Y^i_{s}|^2\big ]<\infty \) and \({\mathbb {P}}(\sum _{i=1}^2\int _t^T |f_i(s,Y^i_s,Z^i_s)|+\Vert Z^i_s\Vert ^2\,ds<\infty )=1\), and assume that for all \(s\in [t,T]\), \(i\in \{1,2\}\) it holds \({\mathbb {P}}\)-a.s. that

Then it holds \({\mathbb {P}}\)-a.s. that

Proof of Lemma 4.1

Throughout this proof assume without loss of generality that \(\sup _{s\in [t,T], y\in \mathbb {R}, z\in \mathbb {R}^d}\Vert f_1(s,y,z)-f_2(s,y,z)\Vert _{L^\infty ({\mathbb {P}};\mathbb {R})}<\infty \), let \(A:[t,T]\times \Omega \rightarrow \mathbb {R}\) and \(B=(B^1, B^2, \ldots , B^d):[t,T]\times \Omega \rightarrow \mathbb {R}^d\) satisfy for all \(s\in [t,T]\), \(j\in \{1,2,\ldots ,d\}\) that

and

and let \(\Gamma :[t,T]\times \Omega \rightarrow \mathbb {R}\) be a stochastic process with continuous sample paths which satisfies that for all \(s\in [t,T]\) it holds \({\mathbb {P}}\)-a.s. that \(\Gamma _s=e^{\int _t^s A_r-\frac{\Vert B_r\Vert ^2}{2} dr +\int _t^s\langle B_r,dW_r\rangle }\). Note that Itô’s formula implies that for all \(s\in [t,T]\) it holds \({\mathbb {P}}\)-a.s. that

Next observe that (97) ensures that for all \(u\in [t,T]\), \(i\in \{1,2\}\) it holds \({\mathbb {P}}\)-a.s. that \(Y^i_u = Y^i_t - \int _t^u f_i(r,Y^i_r,Z^i_r)\, dr + \int _t^u \langle Z^i_r, dW_r\rangle \). Combining this and (101) with Itô’s formula yields that for all \(u\in [t,T)\) it holds \({\mathbb {P}}\)-a.s. that

and

Moreover, note that (99) and (100) imply that for all \(s\in [t,T]\) it holds that

This, (102), and (103) assure that for all \(u\in [t,T]\) it holds \({\mathbb {P}}\)-a.s. that

Next let \(\tau _n:\Omega \rightarrow [t,T)\), \(n\in \mathbb {N}\), be the \((\mathbb F_s)_{s\in [t,T]}\)-stopping times which satisfy for all \(n\in \mathbb {N}\) that

Note that (105) and (106) assure that for all \(n\in \mathbb {N}\) it holds \({\mathbb {P}}\)-a.s. that

Moreover, observe that (96) ensures that \(\sup _{s\in [t,T]}\Vert B_s\Vert ^2\le \mathfrak {L}^2d\). The fact that \({\mathbb {E}}[ e^{-\int _t^T \frac{\Vert 2B_s\Vert ^2}{2} \,ds+\int _t^T\langle 2B_s,dW_s\rangle }]=1\) hence implies that

In addition, observe that (96) demonstrates that it holds \({\mathbb {P}}\)-a.s. that \(\sup _{s\in [t,T]}|A_s|\le L\). This, Doob’s martingale inequality, and (108) show that

Combining this with the Cauchy–Schwarz inequality and the assumption that \(\sum _{i=1}^2{\mathbb {E}}\big [\sup _{s\in [t,T]}|Y^i_{s}|^2\big ]<\infty \) proves that

Next observe that the fact that for all \(s\in [t,T]\) it holds that \(\Gamma _s\ge 0\) demonstrates that

This, (110), Lebesgue’s dominated convergence theorem, (107), the fact that \(Y^1\), \(Y^2\), and \(\Gamma \) are stochastic processes with continuous sample paths, and the fact that it holds \({\mathbb {P}}\)-a.s. that \(\lim _{n\rightarrow \infty }\tau _n=T\) ensure that it holds \({\mathbb {P}}\)-a.s. that

Next observe that the fact that \(\sup _{s\in [t,T]}|A_s|\le L\) demonstrates that for all \(s\in [t,T]\) it holds that

Combining this, (112), and the triangle inequality shows that it holds \({\mathbb {P}}\)-a.s. that

This proves (98). The proof of Lemma 4.1 is thus completed. \(\square \)
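
The identity \({\mathbb {E}}[ e^{-\int _t^T \frac{\Vert 2B_s\Vert ^2}{2} \,ds+\int _t^T\langle 2B_s,dW_s\rangle }]=1\) used in the proof above is the unit-expectation property of a stochastic exponential with bounded integrand. As an illustration only, the following Python sketch checks it numerically in the simplest special case, assuming \(d=1\) and a constant integrand \(B_s\equiv b\), so that the exponent equals \(-2b^2\tau +2bW_\tau \) with \(\tau =T-t\). The function name `expected_stochastic_exponential`, the parameter values, and the use of Gauss-Hermite quadrature are choices made for this sketch and are not part of the article.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss


def expected_stochastic_exponential(b, tau, n_nodes=60):
    """Approximate E[exp(-(2b)^2 * tau / 2 + 2b * W_tau)] for constant b and d = 1."""
    # Nodes and weights for the weight function exp(-z^2 / 2).
    nodes, weights = hermegauss(n_nodes)
    # Normalise so that the weights integrate against the standard normal density.
    weights = weights / np.sqrt(2.0 * np.pi)
    # W_tau has the same law as sqrt(tau) * Z with Z standard normal.
    w_tau = np.sqrt(tau) * nodes
    exponent = -0.5 * (2.0 * b) ** 2 * tau + 2.0 * b * w_tau
    return float(np.sum(weights * np.exp(exponent)))


if __name__ == "__main__":
    # Assumed illustrative values; the result should be close to 1.
    print(expected_stochastic_exponential(b=0.5, tau=1.0))
```

The sketch covers only the constant-coefficient case; the proof itself relies on the boundedness of \(B\) to obtain the same identity for the general process.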

4.2 Regularity Analysis for Solutions of Partial Differential Equations (PDEs)

Lemma 4.2

Let \(T\in (0,\infty )\), \(d\in \mathbb {N}\), \(\eta , L_0,L_1,\ldots ,L_{d},\mathfrak {L}_1, \mathfrak {L}_2, \ldots , \mathfrak {L}_d,K_1,K_2,\ldots ,K_d\in \mathbb {R}\), \(f\in C( [0,T]\times \mathbb {R}^d\times \mathbb {R}\times \mathbb {R}^d, \mathbb {R})\), \(g\in C(\mathbb {R}^d, \mathbb {R})\), \(u = ( u(t,x) )_{ (t,x) \in [0,T] \times \mathbb {R}^d }\in C^{1,2}([0,T]\times \mathbb {R}^d,\mathbb {R})\), let \({\left| \left| \left| \cdot \right| \right| \right| }:\mathbb {R}^{d+1}\rightarrow [0,\infty )\) be a norm, assume for all \(t\in [0,T]\), \(x=(x_1,x_2,\ldots , x_d)\), \(\mathfrak {x}=(\mathfrak {x}_1,\mathfrak {x}_2,\ldots ,\mathfrak {x}_d)\), \(z=(z_1,z_2,\ldots , z_d)\), \(\mathfrak {z}=(\mathfrak {z}_1,\mathfrak {z}_2,\ldots ,\mathfrak {z}_d) \in \mathbb {R}^d\), \(y,\mathfrak {y} \in \mathbb {R}\) that

let \((\Omega ,\mathcal {F},{\mathbb {P}})\) be a probability space, and let \(W:[0,T]\times \Omega \rightarrow \mathbb {R}^d\) be a standard Brownian motion. Then

(i) it holds for all \(s\in [0,T)\), \(x\in \mathbb {R}^d\) that

$$\begin{aligned}&{\mathbb {E}}\!\left[ {\big \vert \big \vert \big \vert g(x+W_{T-s})\big (1,\tfrac{W_{T-s}}{T-s} \big ) \big \vert \big \vert \big \vert } \right] \nonumber \\&\quad +{\mathbb {E}}\!\left[ \int _s^{T}{\big \vert \big \vert \big \vert \big [ f\big (t,x+W_{t-s},u(t,x+W_{t-s}),(\nabla _xu)(t,x+W_{t-s})\big )\big ] \big (1,\tfrac{W_{t-s}}{t-s} \big ) \big \vert \big \vert \big \vert } \,dt \right] <\infty , \end{aligned}$$ (118)

(ii) it holds for all \(s\in [0,T)\), \(x\in \mathbb {R}^d\) that

$$\begin{aligned}&(u(s,x),(\nabla _x u)(s,x))={\mathbb {E}}\!\left[ g(x+W_{T-s})\big (1,\tfrac{W_{T-s}}{T-s} \big )\right] \nonumber \\&\quad +{\mathbb {E}}\!\left[ \int _s^{T}\left[ f\big (t,x+W_{t-s},u(t,x+W_{t-s}),(\nabla _xu)(t,x+W_{t-s})\big )\right] \big (1,\tfrac{W_{t-s}}{t-s} \big ) \,dt\right] , \end{aligned}$$ (119)

(iii) it holds for all \(t\in [0,T]\), \(x=(x_1,x_2,\ldots , x_d)\), \(\mathfrak x=(\mathfrak {x}_1,\mathfrak {x}_2,\ldots , \mathfrak {x}_d)\in \mathbb {R}^d\) that

$$\begin{aligned} \left| u(t,x)-u(t,\mathfrak {x})\right| \le e^{L_0(T-t)}\Big (\textstyle \sum _{j=1}^d\displaystyle (K_j+(T-t)\mathfrak L_{j})|x_j-\mathfrak x_j|\Big ), \end{aligned}$$ (120)

(iv) it holds for all \(t\in [0,T)\), \(x\in \mathbb {R}^d\), \(i\in \{1,2,\ldots ,d\}\) that

$$\begin{aligned} \big | (\tfrac{\partial }{\partial x_i}u)(t,x)\big |\le e^{L_0(T-t)} (K_i+(T-t)\mathfrak {L}_{i}), \end{aligned}$$ (121)

and

(v) it holds for all \(x\in \mathbb {R}^d\), \(p\in [1,\infty )\) that

$$\begin{aligned}&\sup _{s\in [0,T]} \sup _{t\in [s,T]}\left( {\mathbb {E}}\!\left[ \left| u(t,x +W_t-W_s)\right| ^p\right] \right) ^{\!\nicefrac {1}{p}} \nonumber \\ {}&\quad \le e^{L_0T} \left[ \sup _{s\in [0,T]} ({\mathbb {E}}[|g(x+W_s)|^p])^{\!\nicefrac {1}{p}} +T\sup _{s,t\in [0,T]} ({\mathbb {E}}[|f(t,x+W_s,0,0)|^p])^{\!\nicefrac {1}{p}}\right. \nonumber \\&\qquad \left. +Te^{L_0T}\textstyle \sum _{j=1}^d \displaystyle L_{j} (K_j +T\mathfrak {L}_j) \right] . \end{aligned}$$ (122)
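
To illustrate the representation in Item (ii), the following Python sketch evaluates the right-hand side of (119) in a deliberately simple special case: it assumes \(d=1\) and \(f=0\), so that only the terminal-condition term \({\mathbb {E}}[ g(x+W_{T-s})(1,\tfrac{W_{T-s}}{T-s})]\) remains. The helper name `heat_value_and_gradient`, the test function \(g(y)=y^2\), and the use of Gauss-Hermite quadrature instead of Monte Carlo sampling are assumptions of this sketch and do not appear in the article. For \(g(y)=y^2\) a direct computation gives the value \(x^2+(T-s)\) and the derivative \(2x\), which the quadrature should reproduce up to rounding.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss


def heat_value_and_gradient(g, x, tau, n_nodes=40):
    """Approximate E[g(x + W_tau) * (1, W_tau / tau)] for d = 1 (case f = 0)."""
    # Nodes and weights for the weight function exp(-z^2 / 2).
    nodes, weights = hermegauss(n_nodes)
    # Normalise so that the weights represent a standard normal expectation.
    weights = weights / np.sqrt(2.0 * np.pi)
    # W_tau has the same law as sqrt(tau) * Z with Z standard normal.
    w_tau = np.sqrt(tau) * nodes
    values = g(x + w_tau)
    value = float(np.sum(weights * values))
    # The factor W_tau / tau turns the expectation into a derivative in x.
    gradient = float(np.sum(weights * values * w_tau / tau))
    return value, gradient


if __name__ == "__main__":
    T, s, x = 1.0, 0.25, 0.7  # assumed illustrative values
    value, gradient = heat_value_and_gradient(lambda y: y ** 2, x, T - s)
    print("value:", value, "closed form:", x ** 2 + (T - s))
    print("gradient:", gradient, "closed form:", 2 * x)
```

Note that the weight \(\tfrac{W_{T-s}}{T-s}\) has standard deviation \((T-s)^{-1/2}\), so the derivative component becomes harder to estimate by sampling as \(s\) approaches \(T\).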

Proof of Lemma 4.2

Throughout this proof let \(t\in [0,T)\), \(x=(x_1,x_2,\ldots , x_d)\), \(\mathfrak {x}=(\mathfrak {x}_1,\mathfrak {x}_2,\ldots ,\mathfrak {x}_d) \in \mathbb {R}^d\), let \(\left\| \cdot \right\| :\mathbb {R}^d \rightarrow [0,\infty )\) be the standard norm on \(\mathbb {R}^d\), let \( \langle \cdot , \cdot \rangle :\mathbb {R}^d \times \mathbb {R}^d \rightarrow \mathbb {R}\) be the standard scalar product on \(\mathbb {R}^d\), let \( F:C([0,T)\times \mathbb {R}^d,\mathbb {R}^{1+d}) \rightarrow C([0,T)\times \mathbb {R}^d,\mathbb {R}) \) and \(\mathbf{u}:[0,T)\times \mathbb {R}^d \rightarrow \mathbb {R}^{d+1}\) satisfy for all \(s\in [0,T)\), \(y\in \mathbb {R}^d\), \(\mathbf{v}\in C([0,T)\times \mathbb {R}^d,\mathbb {R}^{1+d})\) that \((F(\mathbf{v}))(s,y)=f(s,y,\mathbf{v}(s,y))\) and \(\mathbf{u}(s,y)=(u(s,y), (\nabla _y u)(s,y))\), and let \(Y,\mathfrak {Y}:[t,T]\times \Omega \rightarrow \mathbb {R}\) and \(Z,\mathfrak {Z}:[t,T]\times \Omega \rightarrow \mathbb {R}^d\) be stochastic processes which satisfy for all \(s\in [t,T]\) that

and \(\mathfrak {Z}_s=(\nabla _x u)(s,\mathfrak x +W_s-W_t)\). Observe that [47, Lemma 4.2] establishes Item (i) and Item (ii). Next we prove Item (iii). Note that Itô’s lemma yields that for all \(s\in [t,T]\) it holds \({\mathbb {P}}\)-a.s. that

and

Next note that (116) implies that there exists \(\lambda \in (\frac{1}{2},\infty )\) which satisfies that \(\sup _{s\in [0,T],\xi \in \mathbb {R}^d}\tfrac{|u(s,\xi )|}{1+\Vert \xi \Vert ^\lambda }<\infty \). Observe that Doob’s inequality implies that

and

Moreover, note that (115) implies that for all \(s\in [t,T]\), \(y,\mathfrak {y} \in \mathbb {R}\), \(z=(z_1,z_2,\ldots , z_d)\), \(\mathfrak {z}=(\mathfrak {z}_1,\mathfrak {z}_2,\ldots ,\mathfrak {z}_d) \in \mathbb {R}^d\) it holds \({\mathbb {P}}\)-a.s. that

and

Combining this with (124), (125), (126), (127), and Lemma 4.1 proves that

This establishes Item (iii). Next note that Item (iii) demonstrates that for all \(i\in \{1,2,\ldots ,d\}\) it holds that

This establishes Item (iv). In the next step we prove Item (v). Observe that Item (ii), Tonelli’s theorem, and the triangle inequality prove that for all \(r\in [0,T]\), \(s\in [r,T]\), \(p\in [1,\infty )\) it holds that

This and Jensen’s inequality show that for all \(r\in [0,T]\), \(s\in [r,T]\), \(p\in [1,\infty )\) it holds that

This, the triangle inequality, (115), and Item (iv) show that for all \(r\in [0,T]\), \(p\in [1,\infty )\) it holds that

In addition, note that the fact that \(\sup _{s\in [0,T],\xi \in \mathbb {R}^d}\tfrac{|u(s,\xi )|}{1+\Vert \xi \Vert ^\lambda }<\infty \) ensures that for all \(p\in [1,\infty )\) it holds that

This, (134), and Gronwall’s inequality yield that for all \(p\in [1,\infty )\) it holds that

The proof of Lemma 4.2 is thus completed. \(\square \)

5 Overall Complexity Analysis for MLP Approximation Methods

In this section we combine the findings of Sects. 3 and 4 to establish in Theorem 5.2 below the main approximation result of this article; see also Corollary 5.1 and Corollary 5.4 below. The i.i.d. random variables \(\mathfrak {r}^\theta :\Omega \rightarrow (0,1)\), \(\theta \in \Theta \), appearing in the MLP approximation methods in Corollary 5.1 (see (140) in Corollary 5.1), Theorem 5.2 (see (160) in Theorem 5.2), and Corollary 5.4 (see (175) in Corollary 5.4) are employed to approximate the time integrals in the semigroup formulations of the PDEs under consideration. One of the key ingredients of the MLP approximation methods which we propose and analyze in this article is the fact that the density of these i.i.d. random variables \(\mathfrak {r}^\theta :\Omega \rightarrow (0,1)\), \(\theta \in \Theta \), is equal to the function \((0,1) \ni s \mapsto \alpha s^{\alpha -1}\in \mathbb {R}\) for some \(\alpha \in ( 0, 1 )\), or equivalently, that these i.i.d. random variables satisfy for all \(\theta \in \Theta \), \(b \in (0,1)\) that \({\mathbb {P}}( \mathfrak {r}^{ \theta } \le b ) = b^\alpha \) for some \(\alpha \in (0,1)\). In particular, in contrast to previous MLP approximation methods studied in the scientific literature (see, e.g., [4, 45, 46]), it is crucial in this article to exclude the case where the random variables \(\mathfrak {r}^\theta :\Omega \rightarrow (0,1)\), \(\theta \in \Theta \), are continuously uniformly distributed on \((0,1)\) (corresponding to the case \(\alpha = 1\)). To make this aspect clearer to the reader, we provide in Lemma 5.3 below an explanation of why it is essential to exclude the continuous uniform distribution case \(\alpha = 1\).
Note that the random variable \(\mathbf{U}:\Omega \rightarrow \mathbb {R}^{d+1}\) in Lemma 5.3 coincides with a special case of the random fields in (160) in Theorem 5.2 (applied with \(g_d(x)=0\), \(f_d(s,x,y,z)=1\), \(M=1\), \(t=0\) for \(s\in [0,T)\), \(x,z \in \mathbb {R}^d\), \(y\in \mathbb {R}\), \(d\in \mathbb {N}\) in the notation of Theorem 5.2).
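
Random variables with the distribution function \(b\mapsto b^\alpha \) on \((0,1)\) described above can be generated from uniform random numbers by inverse transform sampling: if \(U\) is uniformly distributed on \((0,1)\), then \({\mathbb {P}}(U^{1/\alpha }\le b)={\mathbb {P}}(U\le b^\alpha )=b^\alpha \). The following Python sketch illustrates this; the function name `sample_r`, the random seed, and the parameter values are hypothetical choices for this illustration and are not taken from the article.

```python
import numpy as np


def sample_r(alpha, size, rng):
    """Draw samples with P(r <= b) = b**alpha by inverse transform sampling."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    # rng.random returns values in [0, 1); 1 - U lies in (0, 1], which avoids r = 0.
    u = 1.0 - rng.random(size)
    # If U is uniform, then P(U**(1/alpha) <= b) = P(U <= b**alpha) = b**alpha.
    return u ** (1.0 / alpha)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    alpha, b = 0.5, 0.25  # assumed illustrative values
    r = sample_r(alpha, size=200_000, rng=rng)
    print("empirical P(r <= b):", np.mean(r <= b), "target b**alpha:", b ** alpha)
```

For \(\alpha <1\) the density \(s\mapsto \alpha s^{\alpha -1}\) is unbounded near \(0\), so more samples fall close to \(0\) than under the uniform distribution, which is recovered for \(\alpha =1\).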

5.1 Quantitative Complexity Analysis for MLP Approximation Methods

Corollary 5.1

Let \(\left\| \cdot \right\| _1:(\cup _{n\in \mathbb {N}}\mathbb {R}^n)\rightarrow \mathbb {R}\) and \(\left\| \cdot \right\| _\infty :(\cup _{n\in \mathbb {N}}\mathbb {R}^n)\rightarrow \mathbb {R}\) satisfy for all \(n\in \mathbb {N}\), \(x=(x_1,x_2,\ldots ,x_n)\in \mathbb {R}^n\) that \(\Vert x\Vert _1=\sum _{i=1}^n |x_i|\) and \(\Vert x\Vert _\infty =\max _{i\in \{1,2,\ldots ,n\}}|x_i|\), let \(T,\delta \in (0,\infty )\), \({\varepsilon }\in (0,1]\), \(d \in \mathbb {N}\), \(L=(L_0,L_1,\ldots ,L_{d}) \in \mathbb {R}^{d+1}\), \(K=(K_1,K_2,\ldots ,K_d)\), \(\mathfrak L=(\mathfrak L_1,\mathfrak L_2,\ldots , \mathfrak L_d)\), \(\xi \in \mathbb {R}^d\), \(p\in (2,\infty )\), \(\alpha \in (\frac{p-2}{2(p-1)},\frac{p}{2(p-1)})\), \(\beta =\frac{\alpha }{2}-\frac{(1-\alpha )(p-2)}{2p}\), \(f\in C( [0,T]\times \mathbb {R}^d\times \mathbb {R}\times \mathbb {R}^d, \mathbb {R})\), \(g\in C(\mathbb {R}^d, \mathbb {R})\), let \(u = ( u(t,x) )_{ (t,x) \in [0,T] \times \mathbb {R}^d }\in C^{1,2}([0,T]\times \mathbb {R}^d,\mathbb {R})\) be an at most polynomially growing function, assume for all \(t\in (0,T)\), \(x=(x_1,x_2, \ldots ,x_d)\), \(\mathfrak x=(\mathfrak x_1,\mathfrak x_2, \ldots ,\mathfrak x_d)\), \(z=(z_1,z_2,\ldots ,z_d)\), \(\mathfrak {z}=(\mathfrak z_1, \mathfrak z_2, \ldots , \mathfrak z_d)\in \mathbb {R}^d\), \(y,\mathfrak {y} \in \mathbb {R}\) that

let \( F:C([0,T)\times \mathbb {R}^d,\mathbb {R}^{1+d}) \rightarrow C([0,T)\times \mathbb {R}^d,\mathbb {R}) \) satisfy for all \(t\in [0,T)\), \(x\in \mathbb {R}^d\), \(\mathbf{v}\in C([0,T)\times \mathbb {R}^d,\mathbb {R}^{1+d})\) that \((F(\mathbf{v}))(t,x)=f(t,x,\mathbf{v}(t,x))\), let \( ( \Omega , \mathcal {F}, {\mathbb {P}}) \) be a probability space, let \( \Theta = \cup _{ n \in \mathbb {N}} \mathbb {Z}^n \), let \( Z^{ \theta } :\Omega \rightarrow \mathbb {R}^d \), \( \theta \in \Theta \), be i.i.d. standard normal random variables, let \(\mathfrak {r}^\theta :\Omega \rightarrow (0,1)\), \(\theta \in \Theta \), be i.i.d. random variables, assume for all \(b\in (0,1)\) that \({\mathbb {P}}(\mathfrak {r}^0\le b)=b^{1-\alpha }\), assume that \((Z^\theta )_{\theta \in \Theta }\) and \((\mathfrak {r}^\theta )_{ \theta \in \Theta }\) are independent, let \( \mathbf{U}_{ n,M}^{\theta } = ( \mathbf{U}_{ n,M}^{\theta , 0},\mathbf{U}_{ n,M}^{\theta , 1},\ldots ,\mathbf{U}_{ n,M}^{\theta , d} ) :[0,T)\times \mathbb {R}^d\times \Omega \rightarrow \mathbb {R}^{1+d} \), \(n,M\in \mathbb {Z}\), \(\theta \in \Theta \), satisfy for all \( n,M \in \mathbb {N}\), \( \theta \in \Theta \), \( t\in [0,T)\), \(x \in \mathbb {R}^d\) that \( \mathbf{U}_{-1,M}^{\theta }(t,x)=\mathbf{U}_{0,M}^{\theta }(t,x)=0\) and

let \(({\text {RV}}_{n,M})_{(n,M)\in \mathbb {Z}^2}\subseteq \mathbb {Z}\) satisfy for all \(n,M \in \mathbb {N}\) that \({\text {RV}}_{0,M}=0\) and

and let \(C\in (0,\infty )\) satisfy that

Then there exists \(N\in \mathbb {N}\cap [2,\infty )\) such that

and

Proof of Corollary 5.1

Throughout the proof let \((\eta _{n,M})_{(n,M)\in \mathbb {N}^2}\subseteq \mathbb {R}\) satisfy for all \(n,M\in \mathbb {N}\) that

Note that Proposition 3.5 and Item (i) of Lemma 4.2 ensure that for all \(M,n \in \mathbb {N}\) it holds that

Combining this with Lemma 4.2 implies for all \(M,n \in \mathbb {N}\) that

Next observe that (137) and (138) demonstrate that

Combining this with (147) proves that \( \limsup _{n\rightarrow \infty }\eta _{n, \lfloor n^{2\beta } \rfloor } =0 \). Next let \(N\in \mathbb {N}\) satisfy that

and let \(\mathfrak {C}\in \mathbb {R}\) satisfy that

Note that the fact that \(C\ge \frac{1}{2}\) and (150) ensure that

Furthermore, observe that (147) and (150) demonstrate that

Combining this with (149) and (151) proves that

Moreover, observe that [45, Lemma 3.6] assures that for all \(n\in \mathbb {N}\) it holds that \({\text {RV}}_{n,\lfloor n^{2\beta } \rfloor }\le d(5\lfloor n^{2\beta } \rfloor )^n\). Hence, we obtain that

Note that the fact that \(2\beta \in (0,1)\) ensures that

Combining this and (153) with (154) proves that

This establishes (144). The proof of Corollary 5.1 is thus completed. \(\square \)
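
The fact that \(2\beta \in (0,1)\), which is used in the proof above, can be verified directly from the hypotheses \(p\in (2,\infty )\), \(\alpha \in (\frac{p-2}{2(p-1)},\frac{p}{2(p-1)})\), and \(\beta =\frac{\alpha }{2}-\frac{(1-\alpha )(p-2)}{2p}\) of Corollary 5.1. The following short computation is a sketch of one way to see this and uses only these hypotheses. Since \(\frac{\partial \beta }{\partial \alpha }=\frac{1}{2}+\frac{p-2}{2p}>0\), the quantity \(\beta \) is strictly increasing in \(\alpha \), and evaluating it at the two endpoints of the admissible interval for \(\alpha \) gives

$$\begin{aligned} \beta \big |_{\alpha =\frac{p-2}{2(p-1)}}&=\tfrac{p-2}{4(p-1)}-\tfrac{p}{2(p-1)}\cdot \tfrac{p-2}{2p}=0, \\ \beta \big |_{\alpha =\frac{p}{2(p-1)}}&=\tfrac{p}{4(p-1)}-\tfrac{p-2}{2(p-1)}\cdot \tfrac{p-2}{2p} =\tfrac{p^2-(p-2)^2}{4p(p-1)}=\tfrac{1}{p}. \end{aligned}$$

Hence \(\beta \in (0,\frac{1}{p})\) and therefore \(2\beta \in (0,\frac{2}{p})\subseteq (0,1)\).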

5.2 Qualitative Complexity Analysis for MLP Approximation Methods

Theorem 5.2

Let \(T,\delta ,\lambda \in (0,\infty )\), \(\alpha \in (0,1)\), \(\beta \in (\max \{\frac{1-2\alpha }{1-\alpha },0\},1-\alpha )\), let \(f_d \in C( [0,T]\times \mathbb {R}^d\times \mathbb {R}\times \mathbb {R}^d,\mathbb {R})\), \(d\in \mathbb {N}\), let \(g_d \in C( \mathbb {R}^d,\mathbb {R})\), \(d\in \mathbb {N}\), let \(\xi _d=(\xi _{d,1}, \xi _{d,2}, \ldots , \xi _{d,d}) \in \mathbb {R}^d\), \(d\in \mathbb {N}\), let \(L_{d,i}\in \mathbb {R}\), \(d,i \in \mathbb {N}\), let \(u_d = ( u_d(t,x) )_{ (t,x) \in [0,T] \times \mathbb {R}^d }\in C^{1,2}([0,T]\times \mathbb {R}^d,\mathbb {R})\), \(d\in \mathbb {N}\), be at most polynomially growing functions, let \( F_d :C([0,T)\times \mathbb {R}^d,\mathbb {R}^{1+d}) \rightarrow C([0,T)\times \mathbb {R}^d,\mathbb {R}) \), \(d\in \mathbb {N}\), be functions, assume for all \(d\in \mathbb {N}\), \(t\in [0,T)\), \(x=(x_1,x_2, \ldots ,x_d)\), \(\mathfrak x=(\mathfrak x_1,\mathfrak x_2, \ldots ,\mathfrak x_d)\), \(z=(z_1,z_2,\ldots ,z_d)\), \(\mathfrak {z}=(\mathfrak z_1, \mathfrak z_2, \ldots , \mathfrak z_d)\in \mathbb {R}^d\), \(y,\mathfrak {y} \in \mathbb {R}\), \(\mathbf{v}\in C([0,T)\times \mathbb {R}^d,\mathbb {R}^{1+d})\) that