Abstract

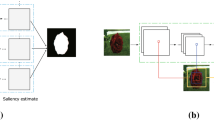

In recent years, salient object detection (SOD) has made significant progress with the help of convolutional neural networks (CNNs). Most state-of-the-art methods segment the salient object either by aggregating multilevel features from the CNN backbone or by adding a refinement module on top of the baseline network. However, these models suffer from simplicity bias: neural networks converge to global minima using simple features and remain invariant to more complex predictive features. Very few methods consider the neurophysiological behaviour of visual attention. According to Gestalt psychology, humans tend to perceive an object as a whole rather than focusing on its discrete elements. The law of Closure (closed contour) is one of the Gestalt axioms; it states that when an object's contour contains a discontinuity, we still perceive the object as continuous, completing the contour in a smooth pattern. This paper proposes a two-way learning network in which the Closure-guided Attention Network (CGAN) and the Coarse Saliency Network (CSN) jointly supervise the feature channels to mitigate simplicity bias. Furthermore, a channel-wise attention residual network is incorporated into the Closure-guided module to alleviate the scale-space problem and generate smooth object contours. Finally, the closure map from CGAN is fused with the coarse saliency map from the CSN to produce the salient object map. Experimental results on five benchmark datasets demonstrate significant improvements of our approach over state-of-the-art methods.
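The abstract names two mechanisms without specifying them: a channel-wise attention residual block and the fusion of the closure map with the coarse saliency map. As a rough illustration only, here is one common form of channel attention, the squeeze-and-excitation gate of Hu et al., wrapped in a residual connection, followed by a placeholder fusion step. This is not the authors' implementation: the residual form x + x·s, the bottleneck weights `w1`/`w2`, and the pixel-wise mean in the hypothetical `fuse_maps` helper are assumptions chosen for clarity, and plain Python lists stand in for framework tensors.

```python
import math
from typing import List

Matrix = List[List[float]]   # one H x W channel (or a single-channel map)
FeatureMap = List[Matrix]    # C channels, each H x W


def relu(v: float) -> float:
    return max(0.0, v)


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def matvec(w: Matrix, x: List[float]) -> List[float]:
    """Bias-free dense layer: returns the product w @ x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def channel_attention_residual(x: FeatureMap, w1: Matrix, w2: Matrix) -> FeatureMap:
    """SE-style channel attention with an identity shortcut (assumed form).

    x  : C x H x W feature map
    w1 : (C // r) x C reduction weights (r = reduction ratio)
    w2 : C x (C // r) expansion weights
    Returns x + x * s, where s holds one sigmoid gate per channel.
    """
    # Squeeze: global average pooling yields one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: bottleneck MLP, then a sigmoid to get gates in (0, 1).
    hidden = [relu(v) for v in matvec(w1, z)]
    s = [sigmoid(v) for v in matvec(w2, hidden)]
    # Re-weight each channel by its gate and add the identity path.
    return [[[v + v * s_c for v in row] for row in ch] for ch, s_c in zip(x, s)]


def fuse_maps(closure: Matrix, coarse: Matrix) -> Matrix:
    """Hypothetical fusion rule: pixel-wise mean of two maps in [0, 1]."""
    return [[(a + b) / 2.0 for a, b in zip(r1, r2)] for r1, r2 in zip(closure, coarse)]
```

For example, with a two-channel 2 x 2 input whose first channel is all ones and whose second is all zeros, and all-zero weights (`w1 = [[0.0, 0.0]]`, `w2 = [[0.0], [0.0]]`), every gate is sigmoid(0) = 0.5, so the first channel becomes 1.5 everywhere and the second stays 0. In a trained network `w1` and `w2` are learned, so channels whose pooled response matters for saliency receive gates near 1 and are amplified relative to the rest.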

Change history

08 October 2022

A Correction to this paper has been published: https://doi.org/10.1007/s00371-022-02680-2

Ethics declarations

Conflict of interest

We declare that we have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

The original online version of this article was revised: the name of the first affiliation was incorrect.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Das, D.K., Shit, S., Ray, D.N. et al. CGAN: closure-guided attention network for salient object detection. Vis Comput 38, 3803–3817 (2022). https://doi.org/10.1007/s00371-021-02222-2

DOI: https://doi.org/10.1007/s00371-021-02222-2