Abstract

In the context of the surveillance of the maritime traffic, a major challenge is the automatic identification of traffic flows from a set of observed trajectories, in order to derive good management measures or to detect abnormal or illegal behaviours for example. In this paper, we propose a new modelling framework to cluster sequences of a large amount of trajectories recorded at potentially irregular frequencies. The model is specified within a continuous time framework, being robust to irregular sampling in records and accounting for possible heterogeneous movement patterns within a single trajectory. It partitions a trajectory into sub-trajectories, or movement modes, allowing a clustering of both individuals’ movement patterns and trajectories. The clustering is performed using non parametric Bayesian methods, namely the hierarchical Dirichlet process, and considers a stochastic variational inference to estimate the model’s parameters, hence providing a scalable method in an easy-to-distribute framework. Performance is assessed on both simulated data and on our motivational large trajectory dataset from the automatic identification system, used to monitor the world maritime traffic: the clusters represent significant, atomic motion-patterns, making the model informative for stakeholders.

Similar content being viewed by others

1 Introduction

For the last 30 years, the tracking of movements of individuals or objects has been eased by the increasing development of several tracking devices, such as the Global Positioning System (GPS) that allows recording geographical positions through time. Such devices have led to large movement databases that store trajectory data, in which each point represents a position in space at a given time. These databases contain a great deal of knowledge and require analysis (Demšar et al., 2015) such as the extraction of movement patterns across diverse trajectories in order to perform human mobility or traffic monitoring (Li et al., 2007; Zheng et al., 2008), motion prediction (Sung et al., 2012), human action recognition (Yao et al., 2017) or detection of abnormal behavior in maritime routes (Vespe & Mazzarella, 2016).

A striking example of large movement database comes from the worldwide maritime traffic surveillance. This monitoring relies on several sources of data, in a rising context of maritime big data (Garnier & Napoli, 2016). Among these sources lies the Automatic Identification System (AIS), which automatically collects messages from vessels around the world, at a high frequency. AIS data basically consist in GPS-like data, together with the instantaneous speed and heading, and some vessel specific static information (Clazzer et al., 2014). An example of such data is presented in Fig. 1 (left), considering 6 months of AIS data of vessels steaming in the Ushant traffic separation scheme in Brittany, west of France (Fablet et al., 2017). These data are characterized by their diversity as they (1) are collected at different frequencies (2) have different lengths (3) are not necessarily regularly sampled (4) represent very different behaviors, but (5) share common trends or similar subparts (called hereafter movement modes).

(Left) AIS data in the Ushant traffic separation scheme (Brittany, France), gathered during 6 months (7M. GPS obs.). Source: CLS, Brest, France and ANR SESAME (Fablet et al., 2017) (Right) Three trajectories extracted from the dataset, sharing common movement modes (characterized by the directions West–South–West and South–South–West). The green (+ shaped points) trajectory, however, subsequently adopts another movement mode (South–South–East). The red (triangles) and blue (crosses) trajectories belong to the same cluster, which is different from the one of the green (+ shaped) trajectory

One major challenge in this context is the extraction of movement patterns emerging from the observed data, considering trajectories that share similar movement modes (see Fig. 1 (right)). This issue can be restated from a machine learning point of view as a large-scale clustering task involving the definition of clustering methods that can handle such complex data while being efficient on large databases, and that are able to both cluster trajectories as a whole and detect common sub-trajectories.

Regarding the problem of clustering entire trajectories, existing methods in the literature follow two main trends:

-

The first class of methods is a density-based clustering framework that relies on the definition of typical motions in the trajectories (Ester et al., 1996; Rinzivillo et al., 2008; Yang et al., 2020). Similarities between full trajectories are considered to take into account local details in the segments.

-

An other category of methods relies on distance computation between trajectories, clustering together trajectories that are similar. The most classical distances used in this context are Dynamic Time Warping (Sakoe & Chiba, 1978) or LCSS (Vlachos et al., 2002), among others.

-

The last class of methods is a rule-based clustering framework, that relies on pre-conceived decision rules to build each cluster (Vespe & Mazzarella, 2016). The main advantage of these methods is their simplicity, but they suppose the prior knowledge of decision rules, and afterwards make strong independence assumption on observed points, that might not hold in practice.

In a more parametric approach, one can aim at modelling the heterogeneous nature of movement data (Nathan, 2008) to define a proper normality model, whose structure is made of clusters.

In this context, trajectories would belong to a cluster, and, moreover, a trajectory could be the bringing together of different moving patterns or movement modes.

In this context, different trajectories may share similar movement modes in a given portion. To learn common sub-trajectories in the clustering process, Lee et al. (2007) propose a partition-and-group framework based on a dedicated distance. Wang et al. (2011b) propose the definition of a normality model for trajectories through an unsupervised clustering framework inspired by topic models (Steyvers & Griffiths, 2007). Initially developed in the field of text analysis, topic models aim at classifying documents depending on their main topics. The clustering approach then estimates the different topics in each document as well as clusters documents together regarding their topics. In the transposition of topic models to trajectory analysis, Wang et al. (2011b) consider trajectories as documents and quantized GPS observations (position and velocity) as words. It is assumed that each point of the trajectory belongs to a semantic region (a topic), called hereafter a movement mode. A movement mode is therefore a specific distribution to be estimated from trajectory data. Trajectories are then considered as a mixture of movement modes, and a trajectory cluster is a set of trajectories with a common mixture distribution. Estimation of both movement modes and trajectory clusters is performed on a discretized space within a Bayesian framework using Gibbs sampling. If this approach still assumes independence of GPS observations (conditionally to their semantic region), it takes into account different heterogeneity levels for movement data. However, it requires a quantization of the space whose influence over results is not investigated, and relies on Gibbs sampling for inference, which is known to be hardly scalable (Blei et al., 2017).

Nevertheless, none of the aforementionned methods are able to deal with large databases, neither consider the continuous nature of the data. For an in-depth review of spatial trajectory clustering algorithms, one can refer to Yuan et al. (2017) or (Zheng, 2015, Section 6).

Given the challenges mentionned above, we propose in this paper a novel model, based on topic models, to cluster large and complex trajectory datasets in a scalable framework.

Following these works, the approach proposed here distinguishes yet from existing works in the definition of GPS observations distributions as it is now assumed that (1) the distribution lives in a continuous space, avoiding the quantization of the (position/velocity) space, and (2) within a movement mode (or a topic), observations are not independent. Each movement mode is modeled through the bivariate velocity as a continuous time Gaussian process, namely the Ornstein Uhlenbeck Process (OUP) (Uhlenbeck & Ornstein, 1930), that has been proposed in movement ecology as a flexible framework to model the fundamental units of movement (Gurarie et al., 2017). Thanks to its continuous time formulation, the OUP allows handling irregularly sampled data. Moreover, as a Gaussian process, it accounts for observation autocorrelation. The unsupervised clustering is made in a non parametric Bayesian context using a Hierarchical Dirichlet Process framework (Teh et al., 2006). To perform movement mode estimation in a scalable approach, we use asymptotic properties of the OUP together with stochastic variational inference (Hoffman et al., 2013). Contrary to MCMC methods that obtain samples from the posterior distribution, the variational approach solves an optimization problem, allowing the use of distributed computation and stochastic optimization to scale and speed up inference (Blei et al., 2017).

The remainder of this paper is organized as follows: in Sect. 2, the hierarchical parametric framework that models trajectory data is fully specified, and the proposed scalable approach to estimate model parameters from data is described in Sect. 3. The method is evaluated in Sect. 4, both on simulated data sets, to assess inference performance, and on a new dataset of current AIS data that is released with the paper, to show the interest of our scalable approach in a realistic context.

2 Movement model

In this section, we define a parametric framework to model trajectory data, i.e. sequences of geographical positions recorded through time.

In the following, the fundamental modelling of movement is first described, considering the individual’s velocity process. Velocity is observed directly (as in the AIS context) or is at least computed from the successive geographical locations of the individuals. The positions and velocities of an individual are modeled as two continuous time processes in \({\mathbb {R}}^2\), denoted by \((X_t)_{t\ge 0}\) and \((V_t)_{t\ge 0}\). Following a common paradigm, we assume that a moving individual’s trajectory might be the collection of heterogeneous patterns (Nathan, 2008), namely the movement modes. Different movement modes along a trajectory refer to different ways of moving in terms of velocity distribution, reflecting different behaviors, activities, or routes. It is assumed that the set of possible movement modes of tracked individuals during their trajectories is countable, and that a given movement mode can be adopted by several individuals.

Then, the modelling framework aims to account for two levels of heterogeneity possibly present in trajectory data: (1) heterogeneity of an individual’s movement within a single trajectory, and (2) heterogeneity between observed trajectories of several individuals.

Fundamental unit of movement Similarly to Gurarie et al. (2017), a movement mode is assumed to be characterized by a specific correlated velocity model, defined in a continuous-time framework. Formally, during a time segment \([\tau _1; \tau _2]\), if an individual adopts the movement mode k, then its velocity process \((V_t)_{\tau _1 \le t \le \tau _2}\) is assumed to be the solution of the following Stochastic Differential Equation (SDE) (see Øksendal, 2003 for a formal definition of SDEs):

where:

-

\(\mu _k \in {\mathbb {R}}^2\) is the asymptotic mean velocity of the kth movement mode;

-

\(\Gamma _k\) is a \(2\times 2\) real-valued matrix, and is an autocorrelation parameter, modelling the recall force of the process towards the mean \(\mu _k\);

-

\(\Sigma _k\) is a \(2\times 2\) real-valued matrix, and is a diffusion term, modelling the variability of the process around the mean \(\mu _k\);

-

\(W_t\) is a bivariate standard Brownian motion, modelling the stochasticity of the individual’s velocity process;

-

\(v_{\tau _1}\) is the initial condition of the SDE: the individual’s velocity at time \(\tau _1\).

The solution to Eq. (1) is a well known continuous time stochastic process, the Ornstein Uhlenbeck Process (OUP) (Uhlenbeck & Ornstein, 1930), also known as the mean reverting process. This mean reverting property is controlled by the recall parameter \(\Gamma _k\) and makes the OUP suitable to describe movement modes of individuals, that are often characterized by a mean velocity regime, which is reached by rapid and brutal accelerations (or decelerations, see Fig. 2). The OUP satisfies the three following properties:

-

1.

\((V_t)_{\tau _1 \le t \le \tau _2}\) is a continuous time Markov process;

-

2.

\((V_t)_{\tau _1 \le t \le \tau _2}\) is a Gaussian process, i.e. the law of the random variable \(V_{t}\vert V_{\tau _1} = v_{\tau _1}\) is Gaussian, with explicit mean and covariance (given in “Appendix 1”);

-

3.

if both eigenvalues of \(\Gamma _k\) are positive, then \((V_t)_{t \ge \tau _1}\) is an asymptotically stationary Gaussian Process, i.e., the law of \(V_{t}\vert V_{\tau _1} = v_{\tau _1}\) satisfies:

$$\begin{aligned} V_{t}\vert V_{\tau _1}\overset{t\rightarrow \infty }{\longrightarrow } {\mathscr {N}}\left( \mu _k, \varLambda _k\right) , \end{aligned}$$(2)where \(\varLambda _k\) is the asymptotic covariance matrix of the OUP (given in “Appendix 1”).

As discussed in Gurarie et al. (2017), the OUP offers a flexible framework to model wide range of autocorrelated velocity processes, such as highly directed movements or rotational ones. Knowing the individual’s velocity process, its position process, starting at position \(x_{\tau _1}\), is obtained by deterministic integration over time:

The resulting process \((X_t)_{\tau _1\le t\le \tau _2}\) is known as an Integrated Ornstein Ulhenbeck Process (IOUP), which remains a Gaussian Process.

Five realizations of bivariate Ornstein Ulhenbeck Processes of parameters \(\mu \), \(\Gamma \) and \(\Sigma \), solution of the SDE Eq. (1), starting at position \(v_0 = (0, 0)\) (top right corner)

Within trajectories heterogeneity To account for heterogeneous ways of moving during a single trajectory, we assume that an individual’s trajectory in a time segment [0, T] is a sequence of IOUPs. Formally, there exists a sequence of times \(\tau _0 = 0< \tau _1<\cdots < \tau _L = T\) such that the trajectory is a sequence of L successive IOUPs, each IOUP being defined over a time segment \([\tau _l, \tau _{l+1}]\). A simulated example of such a sequence is shown on Fig. 3.

(Left) Simulation of a sequence of Ornstein Uhlenbeck Processes defining a trajectory. The red triangle marks the starting velocity. The trajectory is made of two movement modes (identified by their colors and line styles), characterized by two different sets of parameters for the velocity process from Eq. (1). (Right) Bivariate velocity processes, together with the instant \(\tau _1\) at which parameters change (vertical lines)

Therefore, a trajectory is characterized by (1) the movement modes adopted within the trajectory, (2) the time spent by the tracked individual within each movement mode and (3) the transitions from one movement mode to another. Here, we do not impose specific modelling for the last two points. Our first main goal is then to estimate the different movement modes within trajectories from a given dataset.

Between trajectories heterogeneity In addition to this segmentation problem, we assume that the dataset is composed of different (unknown) clusters of (entire) trajectories. A cluster is characterized by similar distributions over movement modes. In other words, two trajectories belong to the same cluster if they are composed of the same movement modes in similar proportions. This clustering problem is illustrated in Fig. 4. Our second main goal is then to cluster together such trajectories.

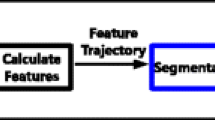

To summarize, the overall modelling framework for the two level clustering is depicted on Fig. 5.

Simulation of three different trajectories with the same model as in Fig. 3. The left panel shows the position processes, the right panel shows the velocity processes. All processes start from (0, 0). (Left) Two trajectories (in plain lines) are made of the same movement modes so they belong to the same cluster. The other trajectory (dashed line) has a different distribution over movement modes so it belongs to another cluster. (Right) The trajectories are plotted on the bivariate velocity space, showing the three possible movement modes. Two of them (red and blue) are shared by the two trajectory clusters

Modelling framework for the two level clustering of our movement data set (consisting in J trajectories). Equations for the Ornstein–Uhlenbeck dynamics are given in Sect. 2. Hidden movement mode dynamics are not fully specified, as a simplified independant model will be used for inference. Classical modelling framework would be a Markov chain or a semi Markov chain. Notations used in this figure are detailed in Sect. 3

3 Scalable two step clustering

The following three problems must be solved:

-

1.

Characterizing different movement modes present in the dataset;

-

2.

For each GPS observation, estimating in which movement modes it belongs;

-

3.

Clustering together trajectories that have the same distribution over movement modes.

In the previous section, we defined a realistic framework to model movement data, introducing a continuous time autocorrelated model over velocity process. The resulting framework therefore consists in a continuous time regime switching diffusion model. Estimating both parameters and movement mode assignation of data is in this case a statistical challenge, even in the case of a single trajectory (Patterson et al., 2017). For instance, Blackwell et al. (2016) use thinning Poisson process and MCMC methods to perform parameter estimation from movement data, resulting in highly time demanding approach. A big challenge for estimation methods therefore remains their scalability, especially in the dual clustering context we target here.

In order to perform scalable parameter inference and clustering of both trajectories and GPS observations (into movement modes), we adopt a pragmatic two-step approach that takes advantage of the inherent properties of the OUP and are described with more details hereafter:

- Step-1:

-

A first dual clustering is performed based on a simpler independent Gaussian mixture model, in order to estimate potential movement modes and trajectory clusters: it allows getting rid of within mode autocorrelation in the inference, and therefore eases the computations. The Gaussian hypothesis in this case is rather natural, as the OUP stationary distribution is Gaussian.

- Step-2:

-

Among the estimated movement modes, only those meeting a temporal consistency constraint are kept. Parameters of these consistent movement modes are then estimated, and used to reassign observations that were assigned to inconsistent movement modes. It ensures that only trajectory segments for which this stationary distribution was reached are kept to estimate movement modes.

Finally, we discuss the algorithmic complexity of the proposed two-steps procedure, justifying that the procedure remains tractable for large datasets while using a continuous time framework ine the movement modes modelling.

3.1 Step 1: simplified model based on an independence assumption

3.1.1 Hierarchical model

The data set is composed of J independent trajectories that we aim to cluster into C groups. This number of clusters is unknown and has to be inferred from data. Each trajectory j is a sequence of \(n_j\) velocities \((V_i^j)_{i = 1,\ldots , n_j}\).

For a trajectory j, we denote \(F_j = c\) if it belongs to the cluster c (\(1\le c \le C\)). We suppose that:

where \(w_c\) is an unknown parameter to be estimated and we denote \(\varvec{w}= \left( w_1,\ldots , w_C\right) \). In this first step, it is assummed that each cluster c is a Gaussian mixture distribution having K components and mixture weights \(\varvec{\pi }^c = \left( \pi _1^c,\ldots , \pi _K^c \right) \). Again, this number K is unknown and has to be inferred from data. Each mixture component models a movement mode. For each \(V_i^j\), we denote \(Z_i^j = k\) if the velocity belongs to the k-th movement mode. Conditionnally to the cluster assignation of the trajectory j, we therefore have:

We denote \(\varvec{\pi }= \left( \varvec{\pi }^1, \ldots , \varvec{\pi }^C \right) \) the set of all unknown movement modes weights to be estimated.

In this simplified model, conditionnally to their movement mode, all the velocities are mutually independent [as assumed in Wang et al. (2011b)]. This strong hypothesis eases the computation and ensures the scalability of the method as a first step, but will be relaxed in the second step. We therefore suppose that:

where \(\mu _k \in {\mathbb {R}}^2\) (resp. \(\varLambda _k \in {\mathscr {M}}_{2 \times 2}\)) is the mean velocity (resp. the precision matrix) of the k-th movement mode. The set of unknown movement parameters \(\left\{ (\mu _k, \varLambda _k)\right\} _{k=1 \ldots K}\) is denoted \({\mathscr {T}}\).

The movement parameter set \({\mathscr {T}}\), the trajectory clusters weights \(\varvec{w}\) and, for each cluster c, the movement mode weights \(\varvec{\pi }^c\) are the global variables [reusing the terminology used in Hoffman et al. (2013)] of the model that have to be estimated. Moreover, for each trajectory j, the cluster label \(F_j\) has to be estimated. Finally, for each observation \(V_i^j\), the movement mode label \(Z_i^j\) has to be estimated. Sets \(\varvec{F}= \left\{ F_j\right\} _{1\le j \le J}\) and \(\varvec{Z}= \left\{ Z_i^j\right\} _{1\le j \le J,~1 \le i \le n_j}\) are the local variables of the model to be estimated. The resulting hierarchical structure of this model is depicted on Fig. 6. The next section describes the scalable inference approach to estimate both local and global variables from the data.

Graphical representation of the hierarchical structure of the simplified model of Sect. 3.1

3.1.2 Bayesian estimation of the parameters using stochastic variational inference

In the first step, the inference of the local and global variables is made within a Bayesian context, considering unknown parameters as random variables. The inference therefore aims at obtaining the joint posterior distribution of local and global variables, denoted by \(p({\mathscr {T}}, \varvec{w}, \varvec{\pi }, \varvec{F}, \varvec{Z}\vert \varvec{V})\).

Together with the prior specification, a classical problem in Bayesian clustering is to set the number of clusters. In the following, we adopt a Bayesian non parametric approach by considering a possibly infinite set of movement modes and clusters in the data.

First, for a given movement k, we consider as prior distribution for \((\mu _k, \varLambda _k)\) a Gaussian–Whishart having hyperparameters \(m_0, \rho _0, \gamma _0\) and \(W_0\). This distribution is a common prior for Gaussian parameters as it provides nice conjugacy properties that we’ll use in our scalable purpose. The hyperparameters \(m_0\) and \(W_0\) are location hyperparameters for the mean and the precision matrix, while \(\rho _0\) and \(\gamma _0\) are concentration parameters, setting the amount of information carried by the prior.

As we do not specify a priori the number of possible movement modes, we assume that this number is infinite.

Each trajectory cluster c is therefore an infinite mixture distribution whose components are Gaussian distributions. The mixture weights prior is given by a stick breaking distribution, denoted by \(GEM(1, \beta )\), where \(\beta \) is a concentration hyperparameter (see Sethuraman, 1994 as well as “About the stick breaking construction” section of “Appendix” for details).

Finally, the amount of such clusters is itself infinite, and the prior distribution over cluster weights is again a stick breaking distribution \(GEM(1, \alpha )\) where \(\alpha \) is a concentration hyperparameter. The full prior specification is then given by the following equations:

The model depicted in Fig. 6 together with these priors is known as a Hierarchical Dirichlet Process (Teh et al., 2006). In this Bayesian context, the expression of the posterior density \(p({\mathscr {T}}, \varvec{w}, \varvec{\pi }, \varvec{F}, \varvec{Z}\vert \varvec{V})\) has no known analytical form. A classical way to obtain Bayesian estimators of quantities of interest would be alternatively to obtain samples from this posterior distribution. This can be done using MCMC methods, that can be implemented for Dirichlet processes using a Gibbs sampler (Neal, 2000), and were used in Wang et al. (2011b) for the inference in their discrete-space framework. However, it is known that the Gibbs sampler does not scale properly (Blei et al., 2017), and therefore could not be used in the context of this paper.

A more scalable alternative to this simulation-based approach is the use of variational inference (see Blei et al., 2017 for a recent review). Variational methods reduce the inference to an optimization problem by minimizing a divergence (typically the Kullback–Leibler divergence (Kullback & Leibler, 1951)) between the target posterior distribution and the members of a simpler family of distributions, the variational family. An appropriate and common variational family \({\mathscr {Q}}\) is the one which satisfies the mean field assumption, i.e. the set of q distributions that can be fully factorized. Our variational family is therefore such that:

The mean field inference problem reduces to find \(q^*\) such that:

where \({\mathbb {E}}_q[\cdot ]\) denotes the expectation with respect to the p.d.f. q. The right hand side of (4) is known as the evidence lower bound (ELBO), and can be computed for appropriate families of distributions \({\mathscr {Q}}\), and thus can be maximized.

In addition to this mean field property, the variational approximation of the posterior distribution is restricted to finite sets of parameters. Formally, the posterior distributions of the infinite cluster (resp. movement mode) weights are approximated by a distribution on a finite set of weights having \(C_{max}\) (resp. \(K_{max}\)) elements. Here, \(C_{max}\) and \(K_{max}\) are variational parameters given by the user. This variational approximation is known as the truncated stick breaking distribution (Hoffman et al., 2013) (see “Appendix 2” for details).

A known algorithm to compute \(q^*\) is the coordinate ascent variational inference (CAVI, Bishop, 2006). This iterative algorithm starts from an initial guess \(q^{(0)}\) for the optimal variational distribution, and successively updates each of its components by supposing the others known, and computes an expectation with respect to their distribution (Algorithm 1). For the model presented in Sect. 3.1 and the chosen prior distributions, all needed expectations can be computed explicitly (see “Appendix 2” for details).

As described in Hoffman et al. (2013), this algorithm can be seen as a gradient ascent, and is therefore suitable for stochastic approximations, resulting in the stochastic variational inference (SVI).

The estimation algorithm therefore reduces to:

-

1.

sample uniformly a batch from the data set,

-

2.

compute the expectations (as given in “Appendix 2”) using only this batch,

-

3.

update variational distributions using these expectations, as described in Hoffman et al. (2013).

This procedure can be performed online, therefore with no need of storing the data (Wang et al., 2011a). Performances of these stochastic methods are widely discussed in Wang et al. (2011a) and Hoffman et al. (2013).

It is worth noting here that all the needed computations can naturally be distributed (thanks to the independance simplification), as they are essentially a sum over simple operations involving single observations. Therefore, the SVI algorithm proposed here for the simplified model is widely scalable, unlike Gibbs sampling procedures.

As the optimization is done in a high dimensional space, and the algorithm only guarantees convergence to a local optimum, it is crucial to initialize the algorithm from different starting points, to ensure a good exploration of the space.Footnote 1 Again, different runs of the algorithm can be distributed.

3.2 Step 2: estimation of OUP parameters from clustering outputs

The previous section described the first step of our two-step approach to perform trajectory clustering and inference of movement modes. In this section, we define how the parameters of the movement modes, defined by Ornstein Uhlenbeck processes, are re-estimated from this first step.

This first step gives as an output the set of optimal variational distributions, namely:

-

\(q^*_{{\mathscr {T}}}\), the posterior distribution of \(\left\{ (\mu _k, \varLambda _k) \right\} _{1 \le k \le K_{max}}\), the parameters (under the Gaussian mixture assumption) of the \(K_{max}\) possible movement modes in the data set;

-

\(q^*_{\varvec{w}}\), the posterior distribution of the weights of the \(C_{max}\) possible trajectory clusters in the data set;

-

\(q^*_{\varvec{\pi }} = \left\{ q^*_{\varvec{\pi }^c}\right\} _{1\le c \le C_{max}}\) the posterior distribution of the weights of the \(K_{max}\) possible movement modes in each trajectory cluster c in the data set;

-

\(q^*_{\varvec{F}} = \left\{ q^*_{F_j}\right\} _{1\le j \le J}\) for each trajectory j, the posterior probability of being in each possible trajectory cluster;

-

\(q^*_{\varvec{Z}} = \left\{ q^*_{Z_i^j}\right\} _{1 \le j \le J, 1 \le i \le n_j}\) for each observation i of trajectory j, the posterior probability of being in each possible movement mode.

From the last two distributions, one can get estimators of clusters and movement modes present in the data. A classical estimator would be the maximum a posteriori (MAP), i.e. the cluster (resp. movement mode) label giving the maximum weight of the posterior multinomial distribution \(q^*_{F_j}\) (resp. \(q^*_{Z_i^j}\)).

In order to estimate the OUP parameters of the k-th movement mode, as defined in Sect. 2, a filter is applied on movement mode sequences based on their temporal consistency. Formally, for a trajectory j, let us consider a sequence of m successively recorded velocities \(V_{i_1}^j,\ldots ,V_{i_m}^j\), that were all estimated to belong to a same movement mode k. This sequence is said to be temporally consistent if its length \(t_{i_m} - t_{i_1}\) is larger than \(\delta \), a user chosen parameter, representing the minimal time lag for a movement mode. All consistent sequences estimated in a same movement mode are considered as independent realizations of OUP with common parameters. From the Markov and Gaussian properties of the OUP, the likelihood related to this data set can be easily maximized to obtain estimates of parameters \(\mu _k\), \(\Gamma _k\) and \(\Sigma _k\) (see “Appendix 1”). If an estimated movement mode k (i.e. a movement mode containing at least one observation) has no consistent sequence, this movement mode is considered as inconsistent. Finally, to refine the movement modes classification, observation sequences belonging to inconsistent movement modes are reallocated to the consistent movement mode whose parameters maximize their likelihood. The overall procedure is summarized in Algorithm 2.

Note that this second step depends on a parameter \(\delta \), which value is surely data dependent, but has a clear interpretation. Therefore, depending on the context, its value can easily be discussed with relevant experts or stakeholders.

The resulting consistent movement mode concept allows one to (1) have a good estimation of OUP parameters within a movement mode (as a consistent sequence will often be related to a large amount of points) and (2) filter out “noise” movement modes gathering few observations in a temporally inconsistent manner.

3.3 Algorithmic complexity

The goal of the two-step inference scheme is to make the approach tractable while still using fine-grained continuous time models inside movement modes.

Time complexity for our first step is at worse \(O(J \times t_{max} \times K_{max} + J \times C_{max})\) where \(t_{max}\) is the maximal number of time steps for a trajectory in the data set. It should be pointed out that our approach relies on a variational approximation of the stick breaking distribution, that imposes to set two parameters \(K_{max}\) and \(C_{max}\), as the maximal number of movement modes and trajectory clusters present in the data. These numbers should increase with n. It is worth noting that for our prior specification, a Dirichlet process of parameter \(\alpha \), the expected number of classes in a n data set is \(\alpha \log n\) (Ghosal & Van der Vaart, 2017, Proposition 4.8). The time complexity for this first step is hence \(O(\beta n \log n + \alpha J \log J)\) where \(n = \sum _{j}n_j\) is the total number of points in the data set.

Then, estimating OU parameters for a given movement mode in the second step of our process is linear in the number of observations assigned to this movement mode. Since this has to be done for all movement modes, complexity for this second step is linear in the total number of observations in the dataset.

Overall, computational complexity of the inference step is then quasilinear in n and, as discussed above, parts of the computations involved can be distributed. We show in Sect. 4 that these two properties allow tackling large scale datasets of GPS data in reasonable time.

4 Experiments

4.1 Experiments on simulated data

To ensure that the inference proposed in Sect. 3 suits the model defined in Sect. 2, a numerical experiment is performed on simulated data.

Simulation set up A data set of 40 trajectories containing overall 8000 observations is simulated, according to a model with 2 trajectory clusters, the two clusters being composed of respectively 2 and 3 movement modes.

Within each movement mode, velocities are drawn from an OUP whose parameters are movement mode dependent. All trajectories start at velocity (0, 0) and spend 50% of the whole time in the first movement mode before switching to a second movement mode. Trajectories of the first cluster spend then the remaining time in the second movement mode. The trajectories in the second cluster spend 12.5% in the second movement mode before switching to the third movement mode for the remaining time (37.5% of the overall time). Simulated data are shown in Fig. 7.

Movement mode estimation: results after the first step A first clustering is performed using the variational inference approach within a hierarchical Dirichlet process, as described in Sect. 3.1. The variational approximations of the stick breaking distributions are made with truncated breaking distribution with \(C_{max} = 5\) and \(K_{max} = 12\). Optimization is made independently from 200 starting points chosen randomly, performing 1000 iterations of the SVI, with a decreasing gradient step along iterations. The best estimate is chosen as the final point maximizing the ELBO defined in Eq. (4). The estimated movement mode for a velocity \(V_i^j\) is computed as the maximum a posteriori of the weights distribution \(q^*_{Z_i^j}\). It results that among the \(K_{max} = 12\) possible movement modes, only 5 of them contain at least one observation. Overall, 88.3% of the points are attributed to the correct movement mode (Table 1). However, two extra movement modes are estimated, corresponding to the transition phases towards the light blue movement mode and leaving from it (see Fig. 8).

It is worth noting here that this first phase is a continuous space version of the work of Wang et al. (2011b). This first phase of the presented approach, however, already improves on this baseline since it does not require to decide on a space quantization grid.

Movement mode estimation: results after the second step Including the second step described in Sect. 3.2, movement modes for inconsistent sequences are re-estimated. Using \(\delta \)-consistent sequences, OUP parameters are estimated and used to reallocate inconsistent ones. This results in a good reallocation of the problematic transitory phases having now an overall 96.1% good classification rate, as shown in Fig. 8 and Table 1.

One can see here that coming back to the OU property enables correct classification of transitory phases of the movement. This is due to the recall force in the OU that enables attaching any observed velocity that is not close to the mean velocity in the movement mode as soon as there is a trend for velocities to move toward that asymptotic mean.

Trajectory clusters estimation As expected on such a simple example, the trajectory cluster assignation is 100% right. It is worth noting here that it would not be the case on trickier examples (not shown here), for instance, when the clusters are distinguished by the order of movement modes sequence, which cannot be captured by the HDP for Gaussian mixtures used here.

Estimated movement modes (in the bivariate velocity space) after one step (left) and two steps (middle). The ground truth is shown on the right. After one step, 5 movement modes are present in data, after step 2, only three are remaining. The related contingency table for good labelling is shown on Table 1

4.2 Experiments on maritime traffic data

Dataset We now validate our clustering approach by analysing real data. The considered dataset records 6 months of AIS data of vessels steaming in the area of the Ushant traffic separation scheme (in Brittany, West of France). This is an area with one of the highest maritime traffic density in the world, with a clear separation scheme of two navigation lanes. Different kinds of vessels are sailing in the area, from cargos and tankers with high velocity and straight routes to sailboats or fishing vessels with lower speed and different sailing directions. As such, the area is highly monitored to avoid collision or grounding, and a better analysis and understanding of the different ship behaviors is of prime importance.

The whole trajectory dataset is shown on Fig. 1 and is available online.Footnote 2 It consists in 18,603 trajectories, gathering at all more than 7 millions GPS observations. Only trajectories having more than 30 points are kept, time lag between two consecutive observations ranges between 5 s and 15 h, with 95% of time lags below 3 min.

Two step movement modes estimation Model fit is performed considering a maximum of 90 movement modes and 30 trajectory clusters, using non-informative priors. 200 runs of 50,000 iterations for SVI are performed independently, each run taking approximately 8 h with SVI computations parallelized on a 8-core machine. The run leading to the highest ELBO is kept as the estimate.

The SVI leads to the estimation of 81 different movement modes containing (in the sense of maximum a posteriori probability) at least one observation, with 50 movement modes containing 95% of observations. The shapes (mean and covariance) of these movement modes are shown on Fig. 9. One can note that a lot of movement modes lead to a same steaming direction, but at different speeds. In this context, choosing more informative prior or adding a penalty on movement modes covariance parameters in the ELBO optimization could lead to a model with less movement modes.

Trajectory clusters estimation In addition to the movement modes estimated above, 29 (non-empty) trajectory clusters are identified. Three emblematic clusters are represented on Fig. 10, plotting 50 randomly chosen trajectories with the same colour code as in Fig. 9. The first two subfigures present the largest clusters (gathering respectively 18% and 12% of trajectories) and are composed of two preponderant movement modes: they represent the two main marine roads of the Ushant traffic separation scheme, the first one entering the English Channel, the second one exiting it. The third cluster gathers 5% of trajectories, and mostly puts together trips that are performed at low speed (below 5 knots), involving both sailing boats and fishing vessels.

50 trajectories of three estimated trajectory clusters, see the text for details. Colors correspond to those of Fig. 9

Comparison with other clustering method Our algorithm can be seen as an improved (continuous-space) version of the method presented in Wang et al. (2011b). As such, in order to validate the results of the clustering, we rather rely on a k-means algorithm using Dynamic Time Warping (DTW, Sakoe & Chiba, 1978) as a distance between trajectories considering them as sequences of 2D velocity vectors (using DTW Barycenter Averaging method from Petitjean et al. (2011) for centroid estimation). It is worth mentionning here that this method has a complexity at worse \(O(J C_{max}\times t_{max}^2)\) (where \(t_{max}\) is the maximal number of points for a trajectory in the data set). Thus, running this algorithm on the full dataset was too costly and we subsampled one tenth of the original dataset to feed the clustering. We used the implementation from the python library tslearn (Tavenard et al., 2017). We also run our algorithm on the same dataset and we obtain a Normalized Mutual Information between the clusterings equal to 0.63. The resulting five main clusters are presented in Fig. 2 in the “Appendix”, together with a confusion matrix between those five clusters. As no ground truth is available, we cannot determine which solution fits better but we note by a visual inspection of the clusters that the DTW-based clustering fails at differenciating ships that follow the same traffic lane (i.e. identifying sub-routes in the main traffic lanes). One should also note that, by assigning movement modes to the observations, our methods provides extra explanation for cluster assignments, compared to k-means that solely relies on inertia minimization. For instance, we show on Fig. 11 typical trajectories from two significant clusters (respectively 4th and 5th in terms of size). This example illustrates the relevance of our modelling framework in this context, where these two clusters differ in the initial route (one coming from southwest, the other from the south), thus differing in one movement mode, but then share two movement modes, for the rest of the route.

Typical trajectories from two estimated clusters. Trajectories starts at the south, and ends at the North East. In the first part of the trajectories, clusters are differentiated by the movement mode (South West to North East for on the left, South East to North West on the right). Then, all trajectories follow the same route

Interest of the post-filtering step The post filtering step was performed whith a \(\delta \) parameter of 2 h. In the present case, the main advantage of the second step, which consists in a re-estimate of inconsistent sequences, is to avoid the estimation of transitory movement modes. Figure 12 shows a trajectory belonging to the central cluster of Fig. 10. The transitory phase of the vessel, corresponding to its change of direction, is first assigned a specific movement mode at step 1 [as would be done by the baseline from Wang et al. (2011b)]. As this transition is brief and the corresponding observations can be considered consistent with the OUP attached with the blue movement mode, these observations are reassigned to that movement mode.

Example of result of the proposed second step in movement mode estimation. Both the velocity (left) and position (right) processes are shown (units are of no importance here). The red cross shows the first point of both processes. Colors correspond to different movement modes. The purple phase in the middle of the trajectory is inconsistent (as defined in the text). It is a transitory phase in the vessel’s velocity process. After the second step, it is assigned as the beginning of the second movement mode

5 Conclusion

In this work, we have defined a generic framework for the clustering of large trajectory data sets.

In our modelling, a trajectory is described by both the successive positions and velocities. Each trajectory is seen as a succession of movement modes in which a Ornstein Uhlenbeck process is used to model the velocity. A cluster of trajectories is then characterized by a its distribution over movement modes.

This framework is then specified in continuous time and space, which makes its formulation insensitive to GPS sampling, relaxing assumptions of previous models of the literature that are based on space quantization.

Inference is done in a two-step scalable approach using stochastic variational inference for a conjugate hierarchical Dirichlet process. This framework is easy to distribute as demonstrated experimentally, and has a quasilinear complexity in the number of observations.

Besides, we provide a dataset of several millions of observations in the AIS context to both validate our model and allow future competitive methods to compare on a real-world large-scale trajectory dataset. Note however that the proposed framework is generic and suits, to our opinion, to a wide range of trajectory data sets.

Future works include extension of our framework to model sojourn time in movement modes and/or transitions between modes. This would make anomaly detection possible, both at the observation level (abnormal observation given the estimated movement mode) and at the trajectory level (abnormal trajectory as an unlikely sequence of movement modes). Such an extended model could also be used as a fully generative model.

Notes

In this purpose also, it should be pointed out that any stochastic approach is better than the fully deterministic CAVI algorithm, as discussed in Hoos and Stützle (2004), for instance.

In our example, it was sampled uniformly in the continuous interval [80, 500].

In our example, it was sampled uniformly in the discrete interval [50, 450].

References

Bishop, C. (2006). Pattern recognition and machine learning. Information science and statistics. Berlin: Springer.

Blackwell, P., Niu, M., Lambert, M. S., & LaPoint, S. D. (2016). Exact Bayesian inference for animal movement in continuous time. Methods in Ecology and Evolution, 7(2), 184–195.

Blei, D., Kucukelbir, A., & McAuliffe, J. (2017). Variational inference: A review for statisticians. Journal of the American Statistical Association, 112, 859–877.

Clazzer, F., Munari, A., Berioli, M., & Blasco, F. L. (2014). On the characterization of AIS traffic at the satellite. In OCEANS 2014-TAIPEI (pp. 1–9). IEEE.

Demšar, U., Buchin, K., Cagnacci, F., Safi, K., Speckmann, B., Van de Weghe, N., et al. (2015). Analysis and visualisation of movement: An interdisciplinary review. Movement Ecology, 3(1), 5.

Ester, M., Kriegel, H. P., Sander, J., Xu, X., et al. (1996). A density-based algorithm for discovering clusters in large spatial databases with noise. KDD, 96(34), 226–231.

Fablet, R., Bellec, N., Chapel, L., Friguet, C., Garello, R., Gloaguen, P., Hajduch, G., Lefèvre. S., Merciol, F., Morillon, P., Morin, C., Simonin, M., Tavenard, R., Tedeschi, C., & Vadaine, R. (2017). Next step for big data infrastructure and analytics for the surveillance of the maritime traffic from AIS & Sentinel satellite data streams. In 2017 Conference on big data from space (BiDS’17) (pp. 371–374) poster.

Garnier, B., & Napoli, A. (2016). Exploiting the potential of the future “maritime big data”. In Maritime knowledge discovery and anomaly detection workshop.

Ghosal, S., & Van der Vaart, A. (2017). Fundamentals of nonparametric Bayesian inference (Vol. 44). Cambridge: Cambridge University Press.

Gurarie, E., Fleming, C. H., Fagan, W. F., Laidre, K. L., Hernández-Pliego, J., & Ovaskainen, O. (2017). Correlated velocity models as a fundamental unit of animal movement: Synthesis and applications. Movement Ecology, 5(1), 13.

Hoffman, M. D., Blei, D. M., Wang, C., & Paisley, J. (2013). Stochastic variational inference. The Journal of Machine Learning Research, 14(1), 1303–1347.

Hoos, H. H., & Stützle, T. (2004). Stochastic local search: Foundations and applications. Amsterdam: Elsevier.

Kullback, S., & Leibler, R. A. (1951). On information and sufficiency. The Annals of Mathematical Statistics, 22(1), 79–86.

Lee, J. G., Han, J., & Whang, K. Y. (2007). Trajectory clustering: a partition-and-group framework. In Proceedings of the 2007 ACM SIGMOD international conference on Management of data (pp. 593–604). ACM.

Li, X., Han, J., Lee, J. G., & Gonzalez, H. (2007). Traffic density-based discovery of hot routes in road networks. In International symposium on spatial and temporal databases (pp. 441–459). Springer.

Nathan, R. (2008). An emerging movement ecology paradigm. Proceedings of the National Academy of Sciences, 105(49), 19050–19051.

Neal, R. M. (2000). Markov chain sampling methods for Dirichlet process mixture models. Journal of Computational and Graphical Statistics, 9(2), 249–265.

Øksendal, B. (2003). Stochastic differential equations. In Stochastic differential equations (pp. 65–84). Springer.

Patterson, T., Parton, A., Langrock, R., Blackwell, P., Thomas, L., & King, R. (2017). Statistical modelling of individual animal movement: An overview of key methods and a discussion of practical challenges. Advances in Statistical Analysis, 101(4), 399–438.

Petitjean, F., Ketterlin, A., & Gançarski, P. (2011). A global averaging method for dynamic time warping, with applications to clustering. Pattern Recognition, 44(3), 678–693.

Rinzivillo, S., Pedreschi, D., Nanni, M., Giannotti, F., Andrienko, N., & Andrienko, G. (2008). Visually driven analysis of movement data by progressive clustering. Information Visualization, 7(3–4), 225–239.

Sakoe, H., & Chiba, S. (1978). Dynamic programming algorithm optimization for spoken word recognition. IEEE Transactions on Acoustics, Speech, and Signal Processing, 26(1), 43–49.

Sethuraman, J. (1994). A constructive definition of Dirichlet priors. Statistica Sinica, 4, 639–650.

Steyvers, M., & Griffiths, T. (2007). Probabilistic topic models. Handbook of Latent Semantic Analysis, 427(7), 424–440.

Sung, C., Feldman, D., & Rus, D. (2012). Trajectory clustering for motion prediction. In 2012 IEEE/RSJ international conference on intelligent robots and systems (IROS) (pp. 1547–1552). IEEE.

Tavenard, R., Faouzi, J., Vandewiele, G., Divo, F., Androz, G., Holtz, C., Payne, M., Yurchak, R., Rußwurm, M., Kolar, K., & Woods, E. (2017). tslearn: A machine learning toolkit dedicated to time-series data. https://github.com/rtavenar/tslearn.

Teh, Y. W., Jordan, M. I., Beal, M. J., & Blei, D. M. (2006). Hierarchical Dirichlet processes. Journal of the American Statistical Association, 101(476), 1566–1581. https://doi.org/10.1198/016214506000000302.

Uhlenbeck, G. E., & Ornstein, L. S. (1930). On the theory of the Brownian motion. Physical Review, 36(5), 823.

Vespe, M., & Mazzarella, F. (eds) (2016). Maritime knowledge discovery and anomaly detection workshop proceedings. https://doi.org/10.2788/025881.

Vlachos, M., Kollios, G., & Gunopulos, D. (2002). Discovering similar multidimensional trajectories. In Proceedings of the 18th international conference on data engineering (pp. 673–684).

Wang, C., Paisley, J., & Blei, D. (2011a). Online variational inference for the hierarchical Dirichlet process. In Proceedings of AISTATS (pp. 752–760).

Wang, X., Ma, K. T., Ng, G. W., & Grimson, W. E. L. (2011b). Trajectory analysis and semantic region modeling using nonparametric hierarchical Bayesian models. International Journal of Computer Vision, 95(3), 287–312.

Yang, Y., Cai, J., Yang, H., Zhang, J., & Zhao, X. (2020). Tad: A trajectory clustering algorithm based on spatial-temporal density analysis. Expert Systems with Applications, 139, 112846.

Yao, T., Wang, Z., Xie, Z., Gao, J., & Feng, D. D. (2017). Learning universal multiview dictionary for human action recognition. Pattern Recognition, 64, 236–244.

Yu, S. Z. (2010). Hidden semi-Markov models. Artificial Intelligence, 174(2), 215–243.

Yuan, G., Sun, P., Zhao, J., Li, D., & Wang, C. (2017). A review of moving object trajectory clustering algorithms. Artificial Intelligence Review, 47(1), 123–144.

Zheng, Y. (2015). Trajectory data mining: An overview. ACM Transactions on Intelligent Systems and Technology (TIST), 6(3), 29.

Zheng, Y., Li, Q., Chen, Y., Xie, X., & Ma, W. Y. (2008). Understanding mobility based on GPS data. In Proceedings of the 10th international conference on ubiquitous computing (pp. 312–321). ACM.

Acknowledgements

Authors would like to thank CLS (Collecte Localisation Satellites) and Erwan Guegueniat for providing the raw data that allowed building the AIS dataset used in this paper.

Funding

This work has been supported by DGA and ANR through (i) the ANR/Astrid SESAME project (ref: ANR-16-ASTR-0026) and (ii) the ANR MATS project (ref: ANR-18-CE23-0006).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Editors: Dino Ienco, Thomas Corpetti, Minh-Tan Pham, Sebastien Lefevre, Roberto Interdonato.

Appendices

Appendix 1: Characteristics of the Ornstein–Uhlenbeck process

Notations:

-

\(I_d\) is the \(d\times d\) identity matrix;

-

For a matrix A, \(A^T\) is the transposed matrix of A;

-

For two \(2\times 2\) matrices A and B, \(A \oplus B\) denotes the Kronecker sum of A and B, defined by: \(A \oplus B = A \otimes I_2 + I_2\otimes B\), where \(\otimes \) denotes the Kronecker product. Note that in our case, \(A \oplus B\) is a \(4\times 4\) matrix;

-

For a matrix \(A = \begin{pmatrix} a_1&{} a_3\\ a_2&{} a_4 \end{pmatrix},~\mathbf{vec} \left( A \right) \) denotes the vectorization of A, i.e. the vector \(\mathbf{vec} \left( A \right) = \begin{pmatrix} a_1\\ a_2\\ a_3\\ a_4 \end{pmatrix}\).

Transition density Suppose that the process \((V_t)_{\tau _1 \le t \le \tau _2}\) is solution of Eq. (1), then,

where \(\Delta = t - \tau _1\) and

Likelihood Let \({\mathbf {v}}\) be a sequence of observations \(v_{t_1} = v_{\tau _1}, v_{t_2}, \ldots ,v_{t_n} = v_{\tau _2}\), discrete time observations of a OUP starting at \(V_{\tau _1}\) and such that \(V_{\tau _1}\) is a random variable with p.d.f. (possibly depending on \(\mu _k, \Gamma _k\) and \(\Sigma _k\)) \(p_{\tau _1}(\cdot )\). Then the likelihood of the observed sequence is given by:

where \(\Delta _i = t_{i+1} - t_i\) and \(\phi _{k,\Delta _i}(v_{t_{i+1}})\) is the p.d.f. of a Gaussian distribution with mean \(m_{k, \Delta _i}^{v_{t_i}}\) and covariance \(\varLambda _{k,\Delta _i}\)

Stationary distribution From above, one can see that if both \(\text {e}^{-\Gamma _k\Delta }\) and \(\text {e}^{-(\Gamma _k \oplus \Gamma _k)\Delta }\) vanishes to 0 when \(\Delta \) increases, then the process \((V_t)_{t\ge \tau _1}\) is asymptotically stationary, and

where

The vanishing condition is satisfied when both eigenvalues of \(\Gamma _k\) are positives.

Appendix 2: Computation for variational inference

1.1 Prior specification

Overall, the variables prior distributions are defined as follows:

where \(GW(\cdot )\) denotes the Gaussian Wishart distribution, depending on 4 hyperparameters, \(GEM(\cdot )\) denotes the stick breaking distribution depending on 1 hyperparameter, and \(Mult(\cdot )\) denotes the multinomial distribution (with weights as parameters). The choice of these distributions is convenient as it leads to a conjugate framework for conditional distributions, i.e., the conditional distribution of a hidden variable given the observations and the other hidden variables.

1.2 About the stick breaking construction

To build a prior for an infinite sequence of weights summing to one, we use the stick breaking construction. The topic weights \(\varvec{\pi }^c\) are build using an hyperparameter \(\alpha \):

Weights \(\pi ^c_k\) are sampled similarly, with an hyperparameter \(\beta \):

In the following, we rather deal with the sequence of \(\varvec{\nu }\) and \(\varvec{\eta ^c}\), as there are i.i.d. samples. We have, for each c and each k:

1.3 Likelihood

Keeping the notation of the main text, the likelihood of an observation \({\mathbf {V}}_i^j\) is given by:

which results, for the complete data set \({\mathbf {V}}\)

The distribution of latent allocation vectors (or local variables) \(F_j\) and \(Z_j^i\) is given by an (infinite) multinomial distributions depending on weights \(\varvec{w}\) and \(\varvec{\pi }\):

1.4 Variational approximations of the posterior distributions

Let \({\mathscr {L}} = \left\{ \{Z_i^j\}, \{F_j\}, \varvec{\eta }, \varvec{\nu }, \varvec{\mu }, \varvec{\varLambda } \right\} \) be the set of (local and global) hidden variables. For any hidden variable U in \({\mathscr {L}}\) the corresponding \(q^*(U)\) is given by

The complete joint distribution can be split in simpler terms:

1.4.1 Optimal variational distribution for \(\nu \)

The variational approximation of the trajectory cluster is a truncated stick breaking distribution. It is therefore defined by \(C_{max}\) random variables, such that the first \(C_{max}-1\) are beta distributed, and the last one equals to one almost surely. A simple computation using Eqs. (7) and (8) shows that for the \(C_{max}-1\) first terms, the variational distribution of \(\nu _c\) is given by:

where \({\mathbf {1}}\) denotes the indicator function.

1.4.2 Optimal variational distribution for \(\eta \)

Similarly to the clustering weights, a truncated stick breaking distribution is used. For the cluster c and the movement mode \(1\le k\le K_{max} - 1\), we have:

where we have written \(F_j = \left( f_{j,1},\ldots , f_{j,C_{max}}\right) \).

1.4.3 Optimal variational distribution for \((\mu , \varLambda )\)

This computation is done in Bishop (2006). For each movement mode \(1\le k \le K_{max}\), the optimal variational distribution is a \(\mathscr {GW}(m_k, \rho _k, \gamma _k, W_k)\) with:

where

1.4.4 Optimal variational distribution for \(F_j\)

The computation results in \(q^*(F_j)\) being a multinomial distribution on the set \([[1,C_{max}]]\) with the c-th weight proportional to:

Here, one can see that

This expectation can therefore be computed using the two following properties of the beta distribution. If \(X~\sim ~{\mathscr {B}}eta(\alpha _1,~\alpha _2)\), then

-

1.

\((1-X) \sim {\mathscr {B}}eta(\alpha _2, \alpha _1)\);

-

2.

\({\mathbb {E}}[\log X] = \psi (\alpha _1) - \psi (\alpha _1 + \alpha _2)\), where \(\psi \) is the digamma function.

Of course, the same remarks hold for \({\mathbb {E}}_{q_{\varvec{\eta }}}[\log \pi _k^c]\).

1.4.5 Optimal variational distribution for \(Z_i^j\)

The computation results in \(q^*(Z_i^j)\) being a multinomial distribution on the set \([[1,K_{max}]]\) with the k-th weight proportional to:

Appendix 3: Simulation design for experiments

We consider a framework with K = 12 movement modes, and C = 10 trajectory clusters. Each trajectory cluster c is characterized by:

-

Its initial movement mode density (the law of the first movement mode chosen during the cluster) denoted by \(\varvec{\pi }^c_0 = (\varvec{\pi }^c_{0,1},\ldots ,\varvec{\pi }^c_{0,K})\);

-

Its transition densities between movement modes (the laws for the next movement mode after having been in a cluster k) denoted by \(\varvec{\pi }^c_0 = (\varvec{\pi }^c_{k,1},\ldots ,\varvec{\pi }^c_{k,K})\) for all \(1\le k \le K\);

A movement mode k is characterized by:

-

Its movement parameters \(\mu _k, \Gamma _k, \Sigma _k\), i.e. the OUP parameters;

-

Its sojourn time distribution, i.e. the distribution of time spent in this movement mode, denoted by \(d_k\). In our example, \(d_k\) is the p.d.f. of gamma distribution \({\mathscr {G}}(\Delta _k, \frac{1}{2})\) where \( \Delta _k\) is a movement mode-specific parameter.

For each trajectory \(1\le j \le 500\), we independently repeat the following procedure:

-

Final time SampleFootnote 3 a final time \(T^j\);

-

Number of observations SampleFootnote 4 a number of observed points \(n_j\);

-

Observation times Sample independently \(\upsilon _1,\ldots , \upsilon _{n_j}\) points from a uniform \(U[0,T^j]\), and sort them to set times as \(t_1 := \upsilon _{(1)},\ldots t_{n_j} = \upsilon _{(n_j)}\);

-

Trajectory cluster Sample a trajectory cluster c with known probabilities \(\varvec{w}= (w_1,\ldots , w_{10})\);

-

First movement mode Sample \(k_0\) with probabilities \(\varvec{\pi }_0^c\);

-

First duration Set \(\tau _0 = 0\); Sample \(\delta _0\) from \(d_{k_0}\), set \(\tau _1 = \tau _0 + \delta _0\);

-

First OUP sampling Set an initial value \(V_{0}\). Sample an OUP \((V_t)_{\tau _0 \le t \le \tau _1}\) starting at \(v_0\) at all observation times between \(\tau _0\) and \(\tau _1\) and at \(\tau _1\);

-

Set i = 1;

-

While \(\tau _i < T^j\)

-

Movement mode Sample \(k_i\) with probabilities \(\varvec{\pi }_{k_{i-1}}^c\);

-

Duration Sample \(\delta _i\) from \(d_{k_0}\), set \(\tau _{i +1} = \tau _i + \delta _i\);

-

OUP sampling Sample a OUP \((V_t)_{\tau _{i} \le t \le \tau _{i+1}}\) starting at \(V_{\tau _i}\) at all observation times between \(\tau _i\) and \(\tau _{i+1}\), and at \(\tau _{i+1}\);

-

Set i = i + 1;

-

The resulting process is a continuous time hidden semi-Markov model (Yu, 2010) whose emission densities are autoccorelated OU processes.

Appendix 4: Additional experimental results

Table 2 represents the clusters obtained by our algorithm and those of a DTW-based k-means and Table 3 compares the results. The clusters are ordered by the number of trajectories they contain. In Table 2, one may notice that the DTW-based k-means algorithm puts in the 2 first clusters trajectories that enter the traffic scheme from the South. One also notices a correspondence between the clusters 1,4 (our) \(\rightarrow \) 1,2 (DTW), 2 (our) \(\rightarrow \) 3 (DTW), 3 (our) \(\rightarrow \) 4 (DTW), 5 (our) \(\rightarrow \) 5 (DTW).

Rights and permissions

About this article

Cite this article

Gloaguen, P., Chapel, L., Friguet, C. et al. Scalable clustering of segmented trajectories within a continuous time framework: application to maritime traffic data. Mach Learn 112, 1975–2001 (2023). https://doi.org/10.1007/s10994-021-06004-8

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10994-021-06004-8