Abstract

Machine learning (ML) holds significant potential for predicting soil properties in geotechnical design but at the same time poses challenges, including those of how to easily examine the performance of an algorithm and how to select an optimal algorithm. This study first comprehensively reviewed the application of ML algorithms in modelling soil properties for geotechnical design. The algorithms were categorized into several groups based on their principles, and the main characteristics of these ML algorithms were summarized. After that six representative algorithms are further detailed and selected for the creation of a ML-based tool with which to easily build ML-based models. Interestingly, automatic determination of the optimal configurations of ML algorithms is developed, with an evaluation of model accuracy, application of the developed ML model to the new data and investigation of relationships between the input variables and soil properties. Furthermore, a novel ranking index is proposed for the model comparison and selection, which evaluates a ML-based model from five aspects. Soil maximum dry density is selected as an example to allow examination of the performance of different ML algorithms, the applicability of the tool and the model ranking index to determining an optimal model.

Similar content being viewed by others

1 Introduction

Estimation of soil physical and mechanical properties is an inevitable step in geotechnical design for achieving the trade-off between the cost and engineering safety, such as the estimation of bearing capacity and long-term deformation. Site-specific laboratory testing is the most direct way of obtaining soil properties. For a certain type of soils, empirical or semi-empirical correlations have been developed for use in predicting soil properties [1,2,3,4,5,6,7]. However, these conventional regression-based correlations have limited prediction capabilities and cannot uncover the essential interconnections between input variables and the studied soil properties, hindering the development of a general prediction model and the use of existing data.

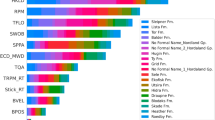

Machine learning (ML) provides novel insights into this issue because of its strong nonlinear fitting capability [8,9,10,11,12,13,14]. ML algorithms directly learn the relationships between the governing input variables and the studied soil properties [15,16,17,18]. In view of the large volume of research works regarding the application of ML algorithms to the prediction of soil properties, herein the well-known articles in the engineering, geology and methodology categories have been checked based on the Web of Science (Fig. 1). This type of research shows exponential growth and has prevailed since 2018 associated with the blossoming of ML.

The rapid development of ML has created a significant opportunity for predicting soil properties but at the same time poses challenges, such as how to easily assess an algorithm’s performance and how to select an optimal algorithm [19,20,21,22,23,24]. Thus, an easy-to-use tool is needed that can assist researchers or engineers in rapidly developing various ML-based models so that they can avoid having to learn ML from scratch. The development of such a tool would allow ready comparison of the performance of different ML-based models and selection of the optimal model based on a reasonable criterion.

This study first reviews the application of ML algorithms to predict soil properties, with more specified attention on six representative ML algorithms, i.e. genetic programming (GP), exponential polynomial regression (EPR), support vector regression (SVR), random forest (RF), feedforward neural network (FNN) and Monte Carlo dropout based artificial neural network (ANN_MCD), for application to prediction of soil properties. An easy-to-use ML-based modelling tool, a graphical user interface (GUI) platform so-called ErosMLM, is developed for use in building these ML-based models, with functions including automatic determination of optimal configurations of ML algorithms, evaluation of model accuracy, application of the developed ML-based model to new data and investigation of relationships between the input variables and soil properties. Meanwhile, a novel ranking index is proposed for model selection, which evaluates a ML-based model from five aspects, such as accuracy on the training set, accuracy on the testing set, ability to exploit and explore new data, monotonicity evaluation and model complexity. Soil maximum dry density is taken as an example when examining the developed tool, comparing model performance and selecting a model.

2 Review of ML Algorithms for Modelling of Soil Properties

2.1 Overview of Applied ML Algorithms

Linear and basic nonlinear regression methods aside, ML algorithms can be classified into different categories in geotechnical practice, as Table 1 shows. Symbolic regression algorithm-based models such as GP and EPR can be presented through an explicit formulation and thus are easy to use. SVR and tree-based algorithms such as RF are two types of classical ML algorithms that have shown excellent performance when handling high-dimensional data. FNN has an exceptional fitting capacity, particularly its network complexity can increase its adaptability to a high volume of data. A Bayesian-based model is characterized by uncertainty representation, in which the final prediction is assigned through an uncertainty evaluation. It should be noted that ANN_MCD was recently proposed for approximating Bayesian interference [25,26,27] and showed similarly excellent performance [28]. ANN_MCD is thus worth trying, because the uncertain algorithm is suitable for the reliability-based design in geotechnical engineering [29,30,31,32].

To this end, six ML algorithms – GP, EPR, SVR, RF, FNN and ANN_MCD (Fig. 2) – were ultimately selected and implemented for use in the development of ErosMLM. The principles of these algorithms are briefly introduced in the following sections. Their advantages or drawbacks are compared in the later sections.

2.2 Genetic Programming

GP is a type of symbolic regression method, consisting of terminals and functions [34, 48] (Fig. 2a). Terminals are conceptionally identical to the input variables X = (x1, x2, …, xn), and the function set F is a combination of various arithmetic operation such as {+ , –, × , /} [49]. Therefore, the output y of GP can be expressed using an explicit formulation using:

where c is a constant coefficient vector, which is generally obtained by the least square. The binary tree is the commonly used architecture in GP. The growth of a tree is controlled by the loss function, which is generally defined as the difference between the predicted and actual result. The tree evolves until obtaining the optimal structure that can minimize the loss value. Meanwhile, the operations during the evolution of the tree involve crossover and mutation. Crossover means the branch under a node has a probability of pc to be exchanged with different branches under other nodes, and the mutation means the arithmetic operation at each node has a probability of pm to be randomly changed.

2.3 Evolutionary Polynomial Regression

EPR is also a type of symbolic regression method [50] and its primary characteristic is that the original input variables are combined to form new transformed variables XT (Fig. 2b). Each transformed variable is the combination of original input variables, thereby the output y of EPR can be obtained using:

where E is an exponent matrix, and it is generally determined using meta-heuristic algorithms such as genetic algorithm and particle swarm optimization (PSO) [51,52,53]. The XT can be obtained by:

where n is the dimension of X. The dimension of XT (nt) and the values of elements in the exponent matrix are required to be prescribed, which are vitally important for the performance of EPR.

2.4 Support Vector Regression

SVR is characterized by structural risk minimization (Fig. 2c). For datasets that cannot be separated in the low-dimensional space, SVR can use kernel function to map datasets into a more high-dimensional space to find a hyperplane to separate all datasets [54]. The training of SVR is to find a hyperplane that can separate all datasets with a largest “gap”, which can be mathematically expressed using:

where m is a total of datasets X; ξ is the slack parameter, indicating the allowable error; C is the regularization parameter for imposing a penalty on error. The radial basis function is the commonly used kernel type, which can be obtained using:

where γ is the kernel coefficient.

2.5 Random Forest

Random forest (RF) is an ensemble ML algorithm, consisting of numerous decision trees (DTs) [55], in which each DT is an independent predictor (Fig. 2d). The final output of RF is the mean value of outputs generated by all DTs. Therefore, the most important hyper-parameters in RF are the number of DTs (ntree) and the number of features (mtry) at each node for dividing datasets. The number of datasets used for developing DT is determined using the bagging method [56], which generates a bootstrap set by sampling with replacement. In general, each bootstrap set based on bagging includes 2/3 datasets of the training set, and the remaining 1/3 referred to as out of the bag are used to estimate the error of DT [57].

2.6 Feedforward Neural Network

FNN is the most commonly used ML algorithm, consisting of input, hidden and output layers (Fig. 2e). The input data flows from the input layer to the output layer and the error is propagated from the output layer for updating all weights and biases until the difference between the predicted and actual results minimizes [58]. The weights and biases are generally updated using the gradient descend algorithm. The mathematic formulations of FNN can be expressed by:

where σ is the activation function; W and b are the weight matrix and bias vector, respectively; H is the output in the hidden layer.

2.7 Artificial Neural Network with Monte Carlo dropout

The predicted results of the formerly mentioned ML algorithms are deterministic, which means these algorithms can always output a fixed result as long as input a set of datasets (Fig. 2f). Gal and Ghahramani [25] thus proposed a novel theoretical framework that casts Monte Carlo dropout training in an ANN (ANN_MCD) to account for epistemic uncertainty. Dropout is a widely used overfitting prevention method [59], which inactivates each hidden neuron with a probability of p using:

In the conventional ANN, dropout only activates in the training phase, but in ANN_MCD, it activates in both training and testing phases. It means each hidden neuron has a fixed probability to be inactivated in the testing phase, i.e., the architecture of the ANN is not consistent. The output is different at each implementation, and this characteristic is used to represent model uncertainty. By performing stochastic calculation T times, the final output and uncertainty can be obtained using:

ANN_MCD has not been applying to model correlations of materials, but its output with an uncertainty evaluation is significant for the design and risk evaluation in civil engineering.

2.8 Summary and Suggestions

Based on the introduction of above mentioned six ML algorithms, each algorithm has its advantages and limitations (Table 2). The selection of an optimal ML algorithm thus depends on a comprehensive evaluation and detailed requirements of the studied issue.

3 Development of ML-based Modelling Tool

Considering the fast development in the ML domain, ML algorithms have been increasingly proposed, which leads to difficulties in advancing the understanding of these algorithms and applying them in engineering practice. To this end, the ErosMLM tool is developed, aiming to offer a platform to easily build ML-based models. The main interface is presented in Fig. 3a. It is programmed using Python language, and an open-source code tkinter is utilized for establishing a GUI framework. Four submodules are created: (1) “Develop” is designed to determine the optimal configuration of ML algorithms; (2) “Predict” is designed to directly use the existing ML-based models; (3) “Exit” is designed for the close of ErosMLM; and (4) “Help” is designed consisting of a manual for explaining the operation of ErosMLM. The development of this platform can be divided into six steps (see Fig. 3): (1) selecting a ML algorithm; (2) inputting the database in a required format; (3) preprocessing of datasets; (4) automatically determining the optimal configuration of the selected ML algorithm; (5) applying the ML-based model; (6) saving the results. The details of 3 key steps from 3 to 5 are presented as follows.

Introduction of ErosMLM: a main interface; b “Parametric analysis” module; c “Prediction on new data” module

3.1 Data Preprocessing

Herein, the former mentioned six algorithms can be selected. After selecting a ML algorithm, the implementation panel pops up. The grey value in each entry is the commonly used values, which guides users to assign values. The explanation of the label can also pop up when the cursor hovers over the label. The first step is to input datasets and implement data preprocessing (using the “Load file” button). The preprocessing of datasets involves the normalization, assignment of input and output dimensions and split of training and testing datasets. The min–max normalization method is set as the default (see Eq. (12)), in which all datasets are rescaled into the range (–1, 1) for eliminating the scaling effect of input variables. It only requires assigning the dimension of the output variable, thereafter the dimension of input variables can be automatically calculated. The split ratio specifies the proportion of datasets in the training set, which is generally set as 80%, and the data in the training and testing sets is determined by the random seed. It should be noted that additional processing procedures including k-fold cross-validation and batch size are implemented in the FNN and ANN_MCD algorithms, which are two commonly used strategies adopted in FNN and its variants for enhancing the robustness of the model and optimization process.

where xi, max and xi, min are the maximum and minimum values of the parameter xi, respectively; xnorm is the normalized value.

3.2 Automatic Determination of Optimal Configuration

Once completing the data pre-processing, the datasets are employed to develop a ML-based model. A necessary task but difficult for beginners for developing a ML-based model is to determine its hyper-parameters. In the submodule “Develop”, the grid search method is used to search the optimal combination of hyper-parameters because it can systematically search the whole studied space. The ranges of one or two hyper-parameters are pre-assigned, while the remaining hyper-parameters are fixed. The relationships between the performance of a ML-based model and the hyper-parameters can be comprehensively investigated under this condition. Herein, the coefficient of determination (R2) is the only applied indicator, because multiple indicators may trigger contradiction for the model selection and R2 is a suitable indicator to measure the agreement between the predicted and measured results.

where \(y_{i}^{a}\) and \(y_{i}^{p}\) are the actual and predicted values for the output variable, respectively; \(\bar{y}_{i}^{a}\) is the mean value of the output variable. The “Training” button is designed by clicking which, the training process can be activated. The optimal configurations are thereafter determined and shown in the “Output” panel. The relationships between the studied hyper-parameters and the model performance are shown in the bottom figures. The “Save” button is designed by clicking which, the optimal model, file and figures can be saved. Note that values shown in the panel can also be changed manually for advanced users or teaching/learning purpose.

3.3 Application of the Trained ML-based Model

Trigger of the “Main menu” button can return to the main interface, and then the users can enter the “Predict” submodule for applying the trained ML-based model. A module under the “Predict” is termed “Parametric analysis” to examine the monotonicity of the model (see Fig. 3b). Another module under the “Predict” is termed the “Prediction on new data” (see Fig. 3c).

3.3.1 Parametric Analysis

This module aims to investigate the relationships between the input variable and soil properties in the ML-based model. The first step is to load the original, new data file and the model. It should be noted that loading the original data file is to unify the upper and lower bounds with the original database, because the ML-based model is trained based on normalized value, which is a relative value. It is necessary the ensure the same criterion when implementing the normalization operation on the new data. As designed, after clicking the “Run” and “Save” button, the prediction results on the new datasets can be generated and saved.

Regarding the “Parametric analysis”, the preparation of new data files obeys the following three constraints required by the tool: (1) only the value of the studied variable varies at each set while the values of the remaining variables maintain unchanged; (2) for each studied variable, the number of data at each set is the same and the number of sets for each variable is also identical; and (3) the data for all variables are required to be prepared equally and the order of preparation is identical to the order of input variables.

It should be noted that the parametric analysis for the ML-based model is affected by the selection of input data. To obtain an objective result, herein a method is recommended. The distribution of each variable in the original dataset is known. Therefore, the values of the studied variable at the 20%, 30%, 40%, 50%, 60%, 70% and 80% percentile are selected, while the values of the remaining variables can be represented by their mean values (Num. of data = 7, Num. of sets = 1, see Fig. 3b), thereafter a set of data for investigating such studied variable is generated. The reason is that the relationship between the studied variable and the output variable may not be truly represented if the value of the input variable exceeds the main range (less than the value at 20% and larger than the value at 80% percentile). Herein, the values of the remaining variables can also be represented by several representative values (Num. of sets > 1), thereafter obtaining several different relationships between the studied variable and output variables, and the final result of the parametric analysis can be obtained by mean trend. A noteworthy concern is the constraint relations among variables should be satisfied.

3.3.2 Prediction on the New Data

In this module, users can load the trained ML-based model and apply it to new data. It aims to examine the exploitation and extrapolation ability of the trained ML-based model, because it still has a probability to meet unknown data that exceed the range of variables in the training set no matter how big the training set is. The results directly determine whether the trained model is sufficiently robust and can be further applied in engineering practice. The detailed implementation process can refer to the “Parametric analysis” module.

4 Comparative Study

The prediction of maximum dry density is taken as an example to examine the developed tool and compare the performance of different typical ML algorithms.

4.1 Data Source

The datasets used in this study are from Wang and Yin [60], who collected a total of 226 datasets, including gravel, sand and fines, from open literature. The six input variables were gravel content CG, sand content CS, fines content CF, liquid limit LL, plastic limit PL and compaction energy E, in addition to one output variable, maximum dry density ρd,max. Much research has revealed the strong relationships between these six input variables on ρd,max, so it is reasonable to use ML algorithms to develop prediction models of ρd,max based on these six variables. For this research, 80% of the data were randomly selected to train six ML-based models, with the remaining 20% used to test models.

4.2 Development of ML-based Models

All results presented in this section are obtained following the “Develop” section.

4.2.1 Genetic Programming

Figure 4a shows the initial settings of GP parameters for use in determining the optimal GP configuration. The value of pc is assigned in the range 0.1–0.9 with an interval of 0.1, and the value of pm is assigned in the range 0.05–0.2, with an interval of 0.05. The function set includes 14 commonly used arithmetic operations, with 6 operations used: {+ , –, × , /, √, log}. The population is set to 50 and the number of iterations is set to 1000, large enough to guarantee the convergence of loss value and the search for an optimal result. Mean square error (MSE) is set as the loss function, which can be formulated using

Figure 4b and c show R2 values for the training and testing sets, respectively, which are generated by GP using different combinations of pc and pm. There is no deterministic relationship between the value of pc or pm and the model performance. Models featuring pc = 0.1 or pm = 0.15 performed best with R2 values of 0.76 and 0.71 on the training and testing sets, respectively; the scatter plot is presented in Fig. 4d, compared with the actual results. The prediction performance of the GP-based model is poor when ρd,max is less than 1.5 or larger than 2.25, for which data are sparse. GP fails to capture the internal correlations in this area, owing to insufficient data, so that a large error is created.

4.2.2 Evolutionary Polynomial Regression

The initial settings of EPR are shown in Fig. 5a. The number of transformed terms in EPR varies from 1 to 15 with an interval of 1, and the corresponding R2 values are presented in Fig. 5b. To avoid complex formulations and overflow errors, the value of elements in the exponent matrix is limited to three discrete values [–1, 0, 1]. Besides, PSO is used to search for the optimal discrete values of elements in the exponent matrix, with MSE used as the loss function. Population size and the number of iterations are the same as the values assigned in the GP.

Figure 5b presents R2 values generated by the EPR-based model for various numbers of transformed terms. As the number of transformed terms increases, the accuracy of the training set improves. The accuracy is roughly saturated on the training set as the number of transformed terms reaches 9, but the model’s performance on the testing set still fluctuates. The optimal number of transformed terms is 10, which represents the ideal trade-off between accuracy and simplicity for this model. The corresponding R2 values on the training and testing sets are 0.92 and 0.87, respectively. The ρd,max predicted using the optimal EPR-based model shows excellent agreement with the actual ρd,max and clearly outperforms the GP-based model, particularly in predicting small values of ρd,max (Fig. 5c). For several data with large values, the EPR-based model still produces a discernible error.

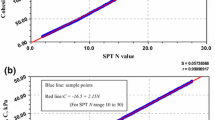

4.2.3 Support Vector Regression

Figure 6a presents the initial SVR settings. The loss function is MSE, and the values of C and γ increase by a constant factor of 2. Figures 6b and c present variations in model performance with various combinations of C and γ on the training and testing sets, respectively, clearly showing that the accuracy of the SVR-based model increases with C, because larger values of C impose a greater penalty for incorrect prediction; excessively large values of C may also create overfitting issues. Increasing values of γ also increase the model’s accuracy until it reaches the peak value, after which they can reduce its accuracy, indicating that an optimal value of γ in the kernel function can maximise SVR performance. The optimal combination of C and γ is 128 and 0.25, respectively, with corresponding R2 values on the training and testing sets of 0.95 and 0.89, respectively. All predicted data points are close to the line with a slope of 1, as Fig. 6d shows. The SVR-based model greatly improves prediction performance in comparison with explicit formulation-based models such as GP- and EPR-based models.

4.2.4 Random Forest

The initial settings of RF are presented in Fig. 7a. MSE is set as the loss function. The ntree increases from 10 to 80 with an interval of 10, and mtry increases from 1 to 6 (the dimension of input data). The performance of the RF-based model with various combinations of ntree and mtry on the training and testing sets is presented in Fig. 7b, c. It can be seen that the RF-based model shows excellent fitting ability in capturing the relationships between the input and output variables. The model developed based on random combinations of ntree and mtry can achieve excellent performance, which indicates the RF-based model is less sensitive to variations in hyper-parameters. The optimal ntree and mtry are 40 and 3, respectively, and the corresponding R2 values on the training and testing sets are 0.99 and 0.91, respectively. Figure 7d presents the scatter plot of the predicted and measured ρd,max. The data points are close to the line with a slope of 1, particularly on the training set, for which the predicted results are perfectly consistent with the actual results.

4.2.5 Feedforward Neural Network

The performance of the FNN-based model depends on numerous configurations. Herein, the effects of Nl and Nn on the performance of the FNN-based model are investigated, in which the number of hidden layers increases from 1 to 4 and the number of hidden neurons from 10 to 80. The remaining configurations are assigned by commonly used methods, as Fig. 8a shows. The activation function in the hidden layer is ReLU. Because the prediction of ρd,max is a regression issue, activation in the output layer is linear and the loss function is MSE. Because the performance of FNN relies heavily on initial weights and biases, the mean performance of a FNN-based model with 10 different initial weights and biases is used to represent the real performance of this model. The batch size is set as half of the training datasets.

The increasing complexity of the neural network can improve the fitting ability of the FNN, thereby showing higher accuracy on the training set. Simultaneously, it may induce a more severe overfitting issue, further reducing the accuracy on the testing set (see Fig. 8b and c). Finally, the model with Nl = 1 and Nn = 80 shows optimal performance, with R2 values on the training and testing sets of 0.96 and 0.86, respectively. Figure 8d presents the scatter plot; it can be seen that the predicted ρd,max shows excellent agreement with the actual ρd,max.

4.2.6 Artificial Neural Network with Monte Carlo Dropout

The implementation of ANN_MCD is similar to that of ANN. The configurations are identical to the FNN irrespective of the loss function and the activation function used in the output layer, which is assigned as the predefined default. Figure 9a presents the initial settings of ANN_MCD. Negative log-likelihood (NLL) is defined as the loss function for the uncertainty issue, intended to maximize the likelihood of approximating the actual result. Combined with k-fold cross-validation, the loss function can be formulated using

It should be noted that the dropout probability activates in the testing phase. Figures 9b and c show the performance of the ANN_MCD-based model with various combinations of Nl and Nn on the training and testing sets, respectively. Much as for the FNN-based model, the increasing complexity of the neural network improves accuracy on the training set, but an overfitting issue also arises after Nl exceeds 1. Finally, the model with Nl = 1 and Nn = 60 shows optimal performance, with R2 values on the training and testing sets of 0.95 and 0.86, respectively. The accuracy for the training set is less than for the results generated using the ANN-based model; the scatter plot is shown in Fig. 9d, which indicates that the uncertainty of the ANN_MCD sacrifices a certain degree of accuracy.

Based on the foregoing analysis results, the ML algorithms exhibit excellent potential for modelling correlations that involve soil properties. Table 3 summarizes the performance of the six ML algorithms on training and testing sets alike.

4.3 Parametric Analysis

To enhance the interpretability of the ML-based model, an investigation of what has been learnt by the ML-based model is needed. To this end, the relationships between the input and output variables in each ML-based model are revealed. Preparation of data obeys the constraints already mentioned. For each studied input variable, after obtaining its distribution in the original database, 7 values at the 20–80% integer percentile are extracted and form a set of data, with the values of the remaining input variables maintaining the individual mean value.

The results of the parametric analysis are presented in Fig. 10, conducted using the “Parametric analysis” module. The values of input as well as output variables are normalized by the individual maximum value, with their relationships roughly identical in six ML-based models. The relationships generated by the RF-based model are not as smooth as other ML algorithm-based models, because the output of RF is discrete owing to its computation theory [20, 40]. A slight difference is observed in the relationships of CS versus ρd,max and E versus ρd,max in SVR when the input variable value exceeds the range of most data in the database or the output of the model is not sensitive to the input variable. The relationships in the GP- and EPR-based models can be expressed by explicit formulations, thus showing the smoothest curves. The FNN- and ANN_MCD-based models also perform well at capturing the relationships between the input and output variables, showing a trend similar to that generated by the GP- and EPR-based model but exhibiting slight variation for CF versus ρd,max (FNN) and LL versus ρd,max (ANN_MCD).

Overall, ρd,max has a positive relationship with CG, CS and E and is negative to CF, LL and PL, consistent with the experimental observations. Increases in LL and PL generally means the increase in clay content, and the increase in CF induces the decrease in ρd,max [61]. The increase in E within a certain range can improve compactness and produce a larger ρd,max [62]. These results explain what has been learned using ML-based models, indicating their reasonableness.

4.4 Prediction on New Data

After the six ML-based models are well trained, they can be directly saved and applied to predict unknown data that are not exposed in the original database. This process can be implemented using the “Prediction on new data” module, with the intent of examining the exploitation and exploration abilities of ML-based models. An additional 19 data were collected from the literature [61, 63]. Figure 11 compares the results of given input variables to the six ML-based models with the actual results. Table 3 summarizes the values of R2 on the new data. The ranking of each model’s performance differs slightly from the results on the original training and testing sets, with the ANN_MCD-based model outperforming the RF-based model and showing the best performance. ANN_MCD is an uncertain algorithm, in which the predicted result is assigned through reliability evaluation. In other words, ANN_MCD knows what it does not know, allowing it to be reasonably deduced that ANN_MCD is more robust on unknown data. The FNN-based model also shows stable prediction performance on unknown data. The accuracy of the SVR- and RF-based models shows a clear decrease, indicating poor robustness, perhaps related to its failure to capture the relationships between some input parameters and the studied property. The GP- and EPR-based models still expose low prediction accuracy, relating to their limited mapping capability. Overall, the prediction accuracy of RF-, FNN- and ANN_MCD-based models on unknown data can be acceptable, offering a promising scenario for application.

5 Model Selection

Model selection has always garnered much attention in various domains, from different viewpoints. To the authors’ best knowledge, researchers have tended to approach its implementation from three aspects [64]: selection of (1) input variables, (2) algorithms or approaches, and (3) hyper-parameters of the applied algorithm or approach. Current methods and theories for model selection focus mainly on the first category, in which models with combinations of different input variables are developed and their performance are compared for selecting the optimal one [40]. In the second category, benchmark testing has generally been conducted to evaluate the performance of the selected algorithms or approaches [20]. In the third category, trial and error, grid search and hybrid algorithms have been the most commonly used methods [65].

In this study, the same input variables are used for all ML-based models, with their hyperparameters able to be directly determined by the search method built into the GUI platform. To this end, the model selection focuses on algorithm selection in this section. Researches have generally used error indicators to measure the performance of different ML-based models on the training and testing sets, a limited approach that cannot sufficiently evaluate the performance of a ML-based model. As already noted, this study has revealed several aspects of model performance, indicating that no model always outperforms all other models in all aspects. To overcome this issue, Zorlu et al. [66] developed a ranking method of comparing model performance, in which model performance for the training and testing sets are ranked by the values of error indicators. By summing the ranks on the training and tests, a final ranking of model performance can be produced and the optimal model identified. However, this ranking method is limited, because it merely accounts for model performance on the training and testing sets. Meanwhile, model performance on the training and testing sets was assigned the same weights, although performance on the testing set is more important.

Motivated by Zorlu et al. [66], a novel ranking index (RMS; see Eq. 16) is proposed with which to comprehensively and objectively evaluate the performance of various ML-based models from five aspects, i.e., accuracy on the training set, accuracy on the testing set, monotonicity, exploitation and exploration ability on new data, and model complexity that describes the model topology and the number of parameters. Meanwhile, the weights in the different aspects vary. This ranking index can be mathematically expressed by

where pTR, pTE, pPA, pEE and pMC are the weights of the aforementioned five aspects on the model selection, respectively, and RTR, RTE, RPA, REE and RMC are the rank of the performance of the studied model among all developed ML-based models in the aforementioned five aspects, respectively. Smaller RMS values indicate better performance, providing a direct method of model selection. Note that this is only a simple form for ranking index, more forms are also worth trying.

It should be noted that model complexity is a significant factor for the comparison of model performance. This study completes all implementations in the GUI, and because each ML-based model can be easily used by loading it, model complexity need not be considered. Based on the introduction in the former section, the most important factors for evaluating a ML-based model are its generalization ability on the unknown data and whether it can learn the correct relationships between the input variables and the studied soil property. Two such factors directly determine the applicability and the internal mechanism of a ML-based model. The model’s performance during the developing phase is less important, but performance on the testing set should draw more attention to the training set. Accordingly, pTR, pTE, pPA, pEE and pMC are ultimately assigned values of 0.1, 0.2, 0.3, 0.4 and 0, respectively. Table 3 provides detailed results for RTR, RTE, RPA and REE, with the final rank presented in its last column. ANN_MCD shows the best performance for modelling correlations of ρd,max, followed by models of FNN, RF, SVR, EPR and GP. Noted that this is only a simple example, values of weights can be changed according to the problem given.

6 Conclusions

This study first comprehensively reviewed the application of ML algorithms in modelling soil properties for geotechnical design. The algorithms were categorized into several groups based on their principles, and the main characteristics of these ML algorithms were summarized. Six representative ML algorithms were further detailed, including genetic programming (GP), evolutionary polynomial regression (EPR), support vector regression (SVR), random forest (RF), feedforward neural network (FNN) and artificial neural network integrated with Monte Carlo dropout (ANN_MCD).

These six algorithms were selected to develop a machine learning (ML)-based graphical user interface (GUI) platform. This GUI provides users with an easy-to-use tool for building a ML-based model, in which the entire process can be completed, including determination of optimal configurations for ML algorithms, evaluation of model accuracy, investigation of relationships between the input variables and soil properties, and application of the developed model to new data. The goal of developing this platform was to facilitate the application of ML algorithms to geotechnical design, but it is versatile and can be used in any domain.

In order to compare the performance of different ML algorithms, the soil maximum dry density ρd,max was selected as an example. Their performance on five aspects, accuracy on the training set, accuracy on the testing set, exploitation and exploration of the new data, parametric analysis and model complexity, was revealed. The RF-based model showed the highest accuracy on the training and testing sets, explicit formulation-based models (GP- and EPR-based) can capture the smoothest relationships between the input variables and the studied soil properties, and ANN-based models (FNN- and ANN_MCD-based) showed the greatest robustness on unknown data.

Considering that the ML-based model exhibited varying performance in different aspects, a novel ranking index was proposed with which to comprehensively evaluate the performance of various ML-based models on five aforementioned aspects, further facilitating model selection. ANN_MCD presented the optimal generalization ability for modelling correlations of ρd,max, followed by FNN, RF, SVR, EPR and GP.

Abbreviations

- b i :

-

Bias vector of the ith hidden layer

- c :

-

Constant coefficient vector

- C :

-

Regularization parameter

- E :

-

Exponent matrix

- F :

-

Function set

- gen :

-

Number of iterations

- H i :

-

Output of the ith hidden layer

- m :

-

Number of datasets

- mtry :

-

Number of features at each node

- n :

-

Dimension of input variables

- n t :

-

Dimension of transformed variables

- ntree :

-

Number of decision trees

- N :

-

Stochastic calculation times

- p :

-

Dropout probability

- p c :

-

Probability of crossover

- p m :

-

Probability of mutation

- pop :

-

Size of population

- r :

-

Bernoulli distribution with probability of p

- W i :

-

Weight matrix of the ith hidden layer

- x i, max :

-

Maximum value of the variable xi

- x i , min :

-

Minimum value of the variable xi

- x norm :

-

Normalized value of a dataset

- X = (x 1, x 2, …, x n):

-

Matrix of input variables

- XT :

-

Matrix of transformed variables

- y a i :

-

Actual value of the output variable

- y p i :

-

Predicted value of the output variable

- \(\bar{y}_{i}^{a}\) :

-

Mean value of the actual output variable

- y = (y 1, y 2, …, y n):

-

Output of the output layer

- γ :

-

Kernel coefficient

- ξ :

-

Slack parameter (default value: 0.1).

- σ :

-

Activation function

- \({\mathbb{E}}\) :

-

Mean value of output

References

Yin JH (1999) Properties and behaviour of Hong Kong marine deposits with different clay contents. Can Geotech J 36(6):1085–1095

Nagaraj TS, Murthy BRS (1986) A critical reappraisal of compression index equations. Géotechnique 36(1):27–32

Ouyang Z, Mayne PW (2019) Modified NTH method for assessing effective friction angle of normally consolidated and overconsolidated clays from piezocone tests. J Geotech Geoenviron Eng 145(10):04019067

Hattab M, Hammad T, Fleureau JM (2015) Internal friction angle variation in a kaolin/montmorillonite clay mix and microstructural identification. Géotechnique 65(1):1–11

Yoon GL, Kim BT, Jeon SS (2004) Empirical correlations of compression index for marine clay from regression analysis. Can Geotech J 41(6):1213–1221

Kootahi K (2017) Simple index tests for assessing the recompression index of fine-grained soils. J Geotech Geoenviron Eng 143(4):06016027

Hayden CP, Purchase-Sanborn K, Dewoolkar M (2018) Comparison of site-specific and empirical correlations for drained residual shear strength. Géotechnique 68(12):1099–1108

Zhang P, Jin Y. F, Yin Z. Y, Yang Y (2020) Random forest based artificial intelligent model for predicting failure envelopes of caisson foundations in sand. Appl Ocean Res 101:102223

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

Krizhevsky A, Sutskever I, Hinton G, ImageNet classification with deep convolutional neural networks, NIPS, 2012

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521:436–444

Shen S-L, Atangana Njock PG, Zhou A, Lyu H-M (2021) Dynamic prediction of jet grouted column diameter in soft soil using Bi-LSTM deep learning. Acta Geotech 16:303–315

Atangana Njock P.G., Shen S.-L., Zhou A., Lyu H.-M. (2020) Evaluation of soil liquefaction using AI technology incorporating a coupled ENN / t-SNE model. Soil Dyn Earthq Eng, 130:105988

Lin S.-S., Shen S.-L., Zhang N., Zhou A. (2021) Comprehensive environmental impact evaluation for concrete mixing station (CMS) based on improved TOPSIS method. Sustainable Cities and Society, 69:102838

Kohestani VR, Hassanlourad M (2016) Modeling the mechanical behavior of carbonate sands using artificial neural networks and support vector machines. Int J Geomech 16(1):04015038

Penumadu D, Zhao RD (1999) Triaxial compression behavior of sand and gravel using artificial neural networks (ANN). Comput Geotech 24:207–230

Zhang N., Shen S.-L., Zhou A., Jin Y.-F. (2021) Application of LSTM approach for modelling stress–strain behaviour of soil. Appl Soft Comput, 100:106959

Zhang P, Yin ZY (2021) A novel deep learning-based modelling strategy from image of particles to mechanical properties for granular materials with CNN and BiLSTM. Comput Meth Appl Mech Eng 382:113858

Zhang P, Wu HN, Chen R.P, Chan HT (2020) Hybrid meta-heuristic and machine learning algorithms for tunneling-induced settlement prediction: a comparative study. Tunnell Undergr Space Technol, 99:103383

Zhang P, Yin ZY, Jin YF, Chan T, Gao FP (2021) Intelligent modelling of clay compressibility using hybrid meta-heuristic and machine learning algorithms. Geosci Front 12(1):441–452

Qi CC, Tang XL (2018) Slope stability prediction using integrated metaheuristic and machine learning approaches: a comparative study. Comput Ind Eng 118:112–122

Feng Y, Cui N, Hao W, Gao L, Gong D (2019) Estimation of soil temperature from meteorological data using different machine learning models. Geoderma 338:67–77

Zhang P, Yin ZY, Jin YF (2021) State-of-the-art review of machine learning applications in constitutive modeling of soils. Arch Comput Method Eng. https://doi.org/10.1007/s11831-020-09524-z

Elbaz K, Shen SL, Zhou AN, Yin ZY, Lyu HM (2021) Prediction of disc cutter life during shield tunnelling with AI via incorporation of genetic algorithm into GMDH-type neural network. Engineering 7(2):238–251

Gal Y., Ghahramani Z. (2015) Dropout as a Bayesian approximation: representing model uncertainty in deep learning. arXiv:1506.02142

Blundell C., Cornebise J., Kavukcuoglu K., Wierstra D. (2015) Weight uncertainty in neural networks. arXiv:1505.05424v2

Graves A. (2011) Practical variational inference for neural networks. NIPS

Zhang P, Jin YF, Yin ZY (2021) Machine learning–based uncertainty modelling of mechanical properties of soft clays relating to time-dependent behavior and its application. Int J Numer Anal Methods Geomech. https://doi.org/10.1002/nag.3215

Tan F, Zhou W-H, Yuen K-V (2018) Effect of loading duration on uncertainty in creep analysis of clay. Int J Numer Anal Methods Geomech 42(11):1235–1254

Zhou WH, Tan F, Yuen KV (2018) Model updating and uncertainty analysis for creep behavior of soft soil. Comput Geotech 100:135–143

Tan F, Zhou W-H, Yuen K-V (2016) Modeling the soil water retention properties of same-textured soils with different initial void ratios. J Hydrol 542:731–743

Zhou W-H, Yuen K-V, Tan F (2014) Estimation of soil–water characteristic curve and relative permeability for granular soils with different initial dry densities. Eng Geol 179:1–9

Zhang W, Goh ATC (2016) Multivariate adaptive regression splines and neural network models for prediction of pile drivability. Geosci Front 7(1):45–52

Cheng Z.-L, Zhou W.-H, Garg A (2020) Genetic programming model for estimating soil suction in shallow soil layers in the vicinity of a tree. Eng Geol, 268:105506

Yin ZY, Jin YF, Huang HW, Shen SL (2016) Evolutionary polynomial regression based modelling of clay compressibility using an enhanced hybrid real-coded genetic algorithm. Eng Geol 210:158–167

Wang R, Zhang K, Wang W, Meng Y, Yang L, Huang H (2020) Hydrodynamic landslide displacement prediction using combined extreme learning machine and random search support vector regression model. Eur J Environ Civ Eng:1–13

Samui P, Sitharam TG (2008) Least-square support vector machine applied to settlement of shallow foundations on cohesionless soils. Int J Numer Anal Met 32(17):419–427

Ai L, Fang NF, Zhang B, Shi ZH (2013) Broad area mapping of monthly soil erosion risk using fuzzy decision tree approach: integration of multi-source data within GIS. Int J Geogr Inf Sci 27(6):1251–1267

Qi C, Fourie A, Zhao X (2018) Back-analysis method for stope displacements using gradient-boosted regression tree and firefly algorithm. J Comput Civil Eng 32(5):04018031

Zhang P, Yin Z.Y, Jin YF, Chan THT (2020) A novel hybrid surrogate intelligent model for creep index prediction based on particle swarm optimization and random forest. Eng Geol, 265:105328

Sanikhani H, Deo RC, Yaseen ZM, Eray O, Kisi O (2018) Non-tuned data intelligent model for soil temperature estimation: a new approach. Geoderma 330:52–64

Yamaç SS, Şeker C, Negiş H (2020) Evaluation of machine learning methods to predict soil moisture constants with different combinations of soil input data for calcareous soils in a semi arid area. Agric Water Manage, 234:106121

Chen RP, Zhang P, Kang X, Zhong ZQ, Liu Y, Wu HN (2019) Prediction of maximum surface settlement caused by EPB shield tunneling with ANN methods. Soils Found 59(2):284–295

Yilmaz I, Marschalko M, Bednarik M, Kaynar O, Fojtova L (2012) Neural computing models for prediction of permeability coefficient of coarse-grained soils. Neural Comput Appl 21(5):957–968

Kiefa MAA (1998) General regression neural networks for driven piles in cohesionless soils. J Geotech Geoenviron Eng 124(12):1177–1185

Feng X, Jimenez R (2015) Predicting tunnel squeezing with incomplete data using Bayesian networks. Eng Geol 195:214–224

Goh ATC, Kulhawy FH, Chua CG (2005) Bayesian neural network analysis of undrained side resistance of drilled shafts. J Geotech Geoenviron Eng 131(1):84–93

Koza JR (1992) Genetic programming: on the programming of computers by natural selection, MIT Press. MA, Cambridge

Sette S, Boullart L (2001) Genetic programming: principles and applications. Eng Appl Artif Intel 14:727–736

Giustolisi O, Savic DA (2006) A symbolic data-driven technique based on evolutionary polynomial regression. J Hydroinform 8(4):235–237

Jin YF, Yin ZY (2020) Enhancement of backtracking search algorithm for identifying soil parameters. Int J Numer Anal Methods Geomech 44(9):1239–1261

Jin YF, Yin ZY (2020) An intelligent multi-objective EPR technique with multi-step model selection for correlations of soil properties. Acta Geotech 15(8):2053–2073

Yin ZY, Jin YF, Shen JS, Hicher PY (2018) Optimization techniques for identifying soil parameters in geotechnical engineering: comparative study and enhancement. Int J Numer Anal Methods Geomech 42(1):70–94

Cortes C, Vapnik V (1995) Support-vector networks. Mach Learn 20:273–297

Breiman L (2001) Random forests. Mach Learn 45:5–32

Breiman L (1996) Bagging predictors. Mach Learn 24(2):123–140

Zhang P, Chen RP, Wu HN (2019) Real-time analysis and regulation of EPB shield steering using Random Forest. Automat Constr, 106:102860

Rumelhart DE, Hinton GE, Williams RJ (1986) Learning representations by back-propagating errors. Nature 323(9):533–536

Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R (2014) Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res 15:1929–1958

Wang HL, Yin ZY (2020) High performance prediction of soil compaction parameters using multi expression programming. Eng Geol, 276:105758

Ören AH (2014) Estimating compaction parameters of clayey soils from sediment volume test. Appl Clay Sci 101:68–72

Gurtug Y, Sridharan A (2004) Compaction behaviour and prediction of its characteristics of fine grained soils with particular reference to compaction energy. Soils Found 44(5):27–36

AI-Khafaji A.N. (1993) Estimation of soil compaction parameters by means of Atterberg limits. Q J Eng Geol 26:359–368

Luo G (2016) A review of automatic selection methods for machine learning algorithms and hyper-parameter values. Netw Model Anal Health Inform Bioinforma 5:18

Zhang P, Yin ZY, Jin YF, Ye GL (2020) An AI-based model for describing cyclic characteristics of granular materials. Int J Numer Anal Methods Geomech 44(9):1315–1335

Zorlu K, Gokceoglu C, Ocakoglu F, Nefeslioglu HA, Acikalin S (2008) Prediction of uniaxial compressive strength of sandstones using petrography-based models. Eng Geol 96(3–4):141–158

Acknowledgements

This research was financially supported by the Research Grants Council (RGC) of Hong Kong Special Administrative Region Government (HKSARG) of China (Grant No.: 15220221, R5037-18F).

Author information

Authors and Affiliations

Contributions

PZ: conceptualization, methodology, analysis, writing-review and original draft. Z-Yu Y: supervision, methodology, visualization, writing-review and editing. Y-F J: validation, visualization and editing.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that the work described has not been published before; that it is not under consideration for publication anywhere else; that its publication has been approved by all co-authors; that there is no conflict of interest regarding the publication of this article.

Data Availability Statement

(GUI download available: https://www.researchgate.net/publication/348617390_ErosMLM). All data used during the study are available from the corresponding author by request.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Zhang, P., Yin, ZY. & Jin, YF. Machine Learning-Based Modelling of Soil Properties for Geotechnical Design: Review, Tool Development and Comparison. Arch Computat Methods Eng 29, 1229–1245 (2022). https://doi.org/10.1007/s11831-021-09615-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11831-021-09615-5