Abstract

Gradient-based optimization algorithms can be studied from the perspective of limiting ordinary differential equations (ODEs). Motivated by the fact that existing ODEs do not distinguish between two fundamentally different algorithms—Nesterov’s accelerated gradient method for strongly convex functions (NAG-SC) and Polyak’s heavy-ball method—we study an alternative limiting process that yields high-resolution ODEs. We show that these ODEs permit a general Lyapunov function framework for the analysis of convergence in both continuous and discrete time. We also show that these ODEs are more accurate surrogates for the underlying algorithms; in particular, they not only distinguish between NAG-SC and Polyak’s heavy-ball method, but they allow the identification of a term that we refer to as “gradient correction” that is present in NAG-SC but not in the heavy-ball method and is responsible for the qualitative difference in convergence of the two methods. We also use the high-resolution ODE framework to study Nesterov’s accelerated gradient method for (non-strongly) convex functions, uncovering a hitherto unknown result—that NAG-C minimizes the squared gradient norm at an inverse cubic rate. Finally, by modifying the high-resolution ODE of NAG-C, we obtain a family of new optimization methods that are shown to maintain the accelerated convergence rates of NAG-C for smooth convex functions.

Similar content being viewed by others

1 Introduction

Machine learning has become one of the major application areas for optimization algorithms during the past decade. While there have been many kinds of applications, to a wide variety of problems, the most prominent applications have involved large-scale problems in which the objective function is the sum over terms associated with individual data, such that stochastic gradients can be computed cheaply, while gradients are much more expensive and the computation (and/or storage) of Hessians is often infeasible. In this setting, simple first-order gradient descent algorithms have become dominant, and the effort to make these algorithms applicable to a broad range of machine learning problems has triggered a flurry of new research in optimization, both methodological and theoretical.

We will be considering unconstrained minimization problems,

where f is a smooth convex function. Perhaps the simplest first-order method for solving this problem is gradient descent. Taking a fixed step size s, gradient descent is implemented as the recursive rule

given an initial point \(x_0\).

As has been known at least since the advent of conjugate gradient algorithms, improvements to gradient descent can be obtained within a first-order framework by using the history of past gradients. Modern research on such extended first-order methods arguably dates to Polyak [39], whose heavy-ball method incorporates a momentum term into the gradient step. This approach allows past gradients to influence the current step, while avoiding the complexities of conjugate gradients and permitting a stronger theoretical analysis. Explicitly, starting from an initial point \(x_{0},\; x_{1} \in {\mathbb {R}}^n\), the heavy-ball method updates the iterates according to

where \(\alpha > 0\) is the momentum coefficient. While the heavy-ball method provably attains a faster rate of local convergence than gradient descent near a minimum of f, it does not come with global guarantees. Indeed, [31] demonstrate that even for strongly convex functions the method can fail to converge for some choices of the step size.Footnote 1

The next major development in first-order methodology was due to Nesterov, who discovered a class of accelerated gradient methods that have a faster global convergence rate than gradient descent [34, 36]. For a \(\mu \)-strongly convex objective f with L-Lipschitz gradients, Nesterov’s accelerated gradient method (NAG-SC) involves the following pair of update equations:

given an initial point \(x_{0} = y_{0} \in {\mathbb {R}}^n\). Equivalently, NAG-SC can be written in a single-variable form that is similar to the heavy-ball method:

starting from \(x_{0}\) and \(x_{1} = x_{0} - \frac{2 s \nabla f(x_{0})}{1 + \sqrt{\mu s}}\). It is worthwhile mentioning that the Ravine method of Gelfand and Tsetlin is in a similar form [22]. Like the heavy-ball method, NAG-SC blends gradient and momentum contributions into its update direction, but defines a specific momentum coefficient \(\frac{1-\sqrt{\mu s}}{1+\sqrt{\mu s}}\). Nesterov also developed the estimate sequence technique to prove that NAG-SC achieves an accelerated linear convergence rate:

if the step size satisfies \(0 < s \le 1/L\). Moreover, for a (weakly) convex objective f with L-Lipschitz gradients, Nesterov defined a related accelerated gradient method (NAG-C) that takes the following form:

with \(x_{0} = y_{0} \in {\mathbb {R}}^n\). The choice of momentum coefficient \(\frac{k}{k+3}\), which tends to one, is fundamental to the estimate-sequence-based argument used by Nesterov to establish the following inverse quadratic convergence rate:

for any step size \(s \le 1/L\). Under an oracle model of optimization complexity, the convergence rates achieved by NAG-SC and NAG-C are optimal for smooth strongly convex functions and smooth convex functions, respectively [33]. For completeness, we remark that the convergence results for these accelerated methods can be carried over to the iterates \(y_k\)’s when the objective is smooth [36].

1.1 Gradient correction: small but essential

Throughout the present paper, we set \(\alpha = \frac{1 - \sqrt{\mu s}}{1 + \sqrt{\mu s}}\) and \(x_{1} = x_{0}-\frac{2s\nabla f(x_{0})}{1+\sqrt{\mu s}}\) to define a specific implementation of the heavy-ball method in (1.2). This choice of the momentum coefficient and the second initial point renders the heavy-ball method and NAG-SC identical except for the last (small) term in (1.4). Note that this choice of \(\alpha \) can take any value between 0 and 1. Despite their close resemblance, however, the two methods are in fact fundamentally different, with contrasting convergence results (see, for example, [14]). Notably, the former algorithm in general only achieves local acceleration, while the latter achieves acceleration method for all initial values of the iterate [31]. As a numerical illustration, Fig. 1 presents the trajectories that arise from the two methods when minimizing an ill-conditioned convex quadratic function. We see that the heavy-ball method exhibits pronounced oscillations throughout the iterations, whereas NAG-SC is monotone in the function value once the iteration counter exceeds 50.

This striking difference between the two methods can only be attributed to the last term in (1.4):

which we refer to henceforth as the gradient correction.Footnote 2 This term corrects the update direction in NAG-SC by contrasting the gradients at consecutive iterates. Although an essential ingredient in NAG-SC, the effect of the gradient correction is unclear from the vantage point of the estimate-sequence technique used in Nesterov’s proof. Accordingly, while the estimate-sequence technique delivers a proof of acceleration for NAG-SC, it does not explain why the absence of the gradient correction prevents the heavy-ball method from achieving acceleration for strongly convex functions.

A recent line of research has taken a different point of view on the theoretical analysis of acceleration, formulating the problem in continuous time and obtaining algorithms via discretization This can be done by taking continuous-time limits of existing algorithms to obtain ordinary differential equations (ODEs) that can be analyzed using the rich toolbox associated with ODEs, including Lyapunov functionsFootnote 3. For instance, [41] shows that

with initial conditions \(X(0) = x_0\) and \(\dot{X}(0) = 0\), is the exact limit of NAG-C (1.5) by taking the step size \(s \rightarrow 0\). Alternatively, the starting point may be a Lagrangian or Hamiltonian framework [43]. In either case, the continuous-time perspective not only provides analytical power and intuition, but it also provides design tools for new accelerated algorithms.

Unfortunately, existing continuous-time formulations of acceleration stop short of differentiating between the heavy-ball method and NAG-SC. In particular, these two methods have the same limiting ODE (see, for example, [44]):

and, as a consequence, this ODE does not provide any insight into the stronger convergence results for NAG-SC as compared to the heavy-ball method. As will be shown in Sect. 2, this is because the gradient correction, \(\frac{ 1 - \sqrt{\mu s} }{ 1 + \sqrt{\mu s} } s \left( \nabla f(x_{k}) - \nabla f(x_{k - 1}) \right) = O(s^{1.5})\), is an order of magnitude smaller than the other terms in (1.4) if \(s = o(1)\). Consequently, the gradient correction is not reflected in the low-resolution ODE (1.9) associated with NAG-SC, which is derived by simply taking \(s \rightarrow 0\) in both (1.2) and (1.4).

1.2 Overview of contributions

Just as there is not a single preferred way to discretize a differential equation, there is not a single preferred way to take a continuous-time limit of a difference equation. Inspired by dimensional-analysis strategies widely used in fluid mechanics in which physical phenomena are investigated at multiple scales via the inclusion of various orders of perturbations [38], we propose to incorporate \(O(\sqrt{s})\) terms into the limiting process for obtaining an ODE, including the (Hessian-driven) gradient correction \(\sqrt{s} \nabla ^{2} f(X) {\dot{X}}\) in (1.7). This will yield high-resolution ODEs that differentiate between the NAG methods and the heavy-ball method.

We list the high-resolution ODEs that we derive in the paper here:Footnote 4

-

(a)

The high-resolution ODE for the heavy-ball method (1.2):

$$\begin{aligned} {\ddot{X}}(t) +2 \sqrt{\mu } {\dot{X}}(t) + (1 + \sqrt{\mu s} ) \nabla f(X(t)) = 0,\nonumber \\ \end{aligned}$$(1.10)with \(X(0) = x_{0}\) and \({\dot{X}}(0) = - \frac{2\sqrt{s} \nabla f(x_{0})}{1 + \sqrt{\mu s}}\).

-

(b)

The high-resolution ODE for NAG-SC (1.3):

$$\begin{aligned} {\ddot{X}}(t) + 2 \sqrt{\mu } {\dot{X}}(t) + \sqrt{s} \nabla ^{2} f(X(t)) {\dot{X}}(t) + \left( 1 + \sqrt{\mu s} \right) \nabla f(X(t))= 0, \end{aligned}$$(1.11)with \(X(0) = x_{0}\) and \({\dot{X}}(0) = - \frac{2\sqrt{s} \nabla f(x_{0})}{1 + \sqrt{\mu s}}\).

-

(c)

The high-resolution ODE for NAG-C (1.5):

$$\begin{aligned} {\ddot{X}}(t) + \frac{3}{t} {\dot{X}}(t) + \sqrt{s} \nabla ^{2} f(X(t)) {\dot{X}}(t) + \left( 1 + \frac{3\sqrt{s}}{2t}\right) \nabla f(X(t)) = 0, \end{aligned}$$(1.12)for \(t \ge 3\sqrt{s}/2\), with \(X(3\sqrt{s}/2) = x_{0}\) and \({\dot{X}}(3\sqrt{s}/2) = -\sqrt{s} \nabla f(x_{0})\).

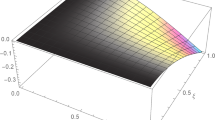

High-resolution ODEs are more accurate continuous-time counterparts for the corresponding discrete algorithms than low-resolution ODEs, thus allowing for a better characterization of the accelerated methods. This is illustrated in Fig. 2, which presents trajectories and convergence of the discrete methods, and the low- and high-resolution ODEs. For both NAGs, the high-resolution ODEs are in much better agreement with the discrete methods than the low-resolution ODEs.Footnote 5 Moreover, for NAG-SC, its high-resolution ODE captures the non-oscillatory pattern while the low-resolution ODE does not.

Top left and bottom left: trajectories and errors of NAG-SC and the heavy-ball method for minimizing \(f(x_{1}, x_{2}) = 5 \times 10^{-3} x_1^{2} + x_2^{2}\), from the initial value (1, 1), the same setting as Fig. 1. Top right and bottom right: trajectories and errors of NAG-C for minimizing \(f(x_{1}, x_{2}) = 2 \times 10^{-2}x_{1}^{2} + 5 \times 10^{-3} x_{2}^{2}\), from the initial value (1, 1). For the two bottom plots, we use the identification \(t = k\sqrt{s}\) between time and iterations for the x-axis

The three new ODEs include \(O(\sqrt{s})\) terms that are not present in the corresponding low-resolution ODEs (compare, for example, (1.12) and (1.8)). Note also that if we let \(s \rightarrow 0\), each high-resolution ODE reduces to its low-resolution counterpart. Thus, the difference between the heavy-ball method and NAG-SC is reflected only in their high-resolution ODEs—the gradient correction (1.7) of NAG-SC is preserved only in its high-resolution ODE in the form \(\sqrt{s} \nabla ^{2} f(X(t)) {\dot{X}}(t)\). This term, which we refer to as the (Hessian-driven) gradient correction, is connected with the discrete gradient correction by the approximate identity:

for small s, with the identification \(t = k \sqrt{s}\). The gradient correction \(\sqrt{s} \nabla ^{2} f(X) {\dot{X}}\) in NAG-C arises in the same fashion.Footnote 6 Interestingly, although both NAGs are first-order methods, their gradient corrections brings in second-order information from the objective function.

Despite being small, the gradient correction has a fundamental effect on the behavior of both NAGs, and this effect is revealed by inspection of the high-resolution ODEs. We provide two illustrations of this.

-

Effect of the gradient correction in acceleration Viewing the coefficient of \(\dot{X}\) as a damping ratio, the ratio \(2 \sqrt{\mu } + \sqrt{s} \nabla ^{2} f(X)\) of \(\dot{X}\) in the high-resolution ODE (1.11) of NAG-SC is adaptive to the position X, in contrast to the fixed damping ratio \(2\sqrt{\mu }\) in the ODE (1.10) for the heavy-ball method. To appreciate the effect of this adaptivity, imagine that the velocity \(\dot{X}\) is highly correlated with an eigenvector of \(\nabla ^{2} f(X)\) with a large eigenvalue, such that the large friction \((2 \sqrt{\mu } + \sqrt{s} \nabla ^{2} f(X)) \dot{X}\) effectively “decelerates” along the trajectory of the ODE (1.11) of NAG-SC. This feature of NAG-SC is appealing as taking a cautious step in the presence of high curvature generally helps avoid oscillations. Figure 1 and the left plot of Fig. 2 confirm the superiority of NAG-SC over the heavy-ball method in this respect. If we can translate this argument to the discrete case we can understand why NAG-SC achieves acceleration globally for strongly convex functions but the heavy-ball method does not. We will be able to make this translation by leveraging the high-resolution ODEs to construct discrete-time Lyapunov functions that allow maximal step sizes to be characterized for the NAG-SC and the heavy-ball method. The detailed analysis is given in Sect. 3.

-

Effect of gradient correction in gradient norm minimization We will also show how to exploit the high-resolution ODE of NAG-C to construct a continuous-time Lyapunov function to analyze convergence in the setting of a smooth convex objective with L-Lipschitz gradients. Interestingly, the time derivative of the Lyapunov function is not only negative, but it is smaller than \(-O(\sqrt{s} t^2 \Vert \nabla f(X)\Vert ^2)\). This bound arises from the gradient correction and, indeed, it cannot be obtained from the Lyapunov function studied in the low-resolution case by [41]. This finer characterization in the high-resolution case allows us to establish a new phenomenon:

$$\begin{aligned} \min _{0 \le i \le k} \left\| \nabla f(x_{i}) \right\| ^{2} \le O\left( \frac{L^{2}}{k^3}\right) . \end{aligned}$$That is, we discover that NAG-C achieves an inverse cubic rate for minimizing the squared gradient norm. By comparison, from (1.6) and the L-Lipschitz continuity of \(\nabla f\) we can only show that \(\left\| \nabla f(x_k) \right\| ^{2} \le O\left( L^{2}/k^2\right) \). See Sect. 4 for further elaboration on this cubic rate for NAG-C.

1.3 Related work

There is a long history of using ODEs to analyze optimization methods. Recently, the work of [41] has sparked a renewed interest in leveraging continuous dynamical systems to understand and design first-order methods and to provide more intuitive proofs for the discrete methods. Below is a rather incomplete review of recent work that uses continuous-time dynamical systems to study accelerated methods.

In the work of [13, 43, 44], Lagrangian and Hamiltonian frameworks are used to generate a large class of continuous-time ODEs for a unified treatment of accelerated gradient-based methods. Indeed, [43] extends NAG-C to non-Euclidean settings, mirror descent and accelerated higher-order gradient methods, all from a single “Bregman Lagrangian.” In [44], the connection between ODEs and discrete algorithms is further strengthened by establishing an equivalence between the estimate sequence technique and Lyapunov function techniques, allowing for a principled analysis of the discretization of continuous-time ODEs. Recent papers have considered symplectic [13] and Runge–Kutta [45] schemes for discretization of the low-resolution ODEs. Notably, there is a venerable line of work that studies inertial dynamics with a Hessian-driven term [1, 7, 9, 10]. In particular, [2] relates these ODEs to the analysis of associated optimization methods in both convex and non-convex settings, and [9] analyzes forward-backward methods using inertial dynamics with Hessian-driven terms, where the viscous damping coefficient is fixed. While the continuous-time limits considered in these works resemble closely with our ODEs, it is important to note that the Hessian-driven terms therein result from the second-order information of Newton’s method [9], and in contrast, the gradient correction entirely relies on the first-order information of Nesterov’s accelerated gradient method.

An ODE-based analysis of mirror descent has been pursued in another line of work by [28,29,30], delivering new connections between acceleration and constrained optimization, averaging and stochastic mirror descent.

In addition to the perspective of continuous-time dynamical systems, there has also been work on the acceleration from a control-theoretic point of view [11, 24, 25, 31] and from a geometric point of view [15]. See also [18, 19, 21, 23, 32, 37] for a number of other recent contributions to the study of the acceleration phenomenon.

1.4 Organization and notation

The remainder of the paper is organized as follows. In Sect. 2, we briefly introduce our high-resolution-ODE-based analysis framework. This framework is used in Sect. 3 to study the heavy-ball method and NAG-SC for smooth strongly convex functions. In Sect. 4, we turn our focus to NAG-C for a general smooth convex objective. In Sect. 5 we derive some extensions of NAG-C. We conclude the paper in Sect. 6 with a list of future research directions. Most technical proofs are deferred to the “Appendix”.

We mostly follow the notation of [36], with slight modifications tailored to the present paper. Let \({\mathcal {F}}_{L}^1( {\mathbb {R}}^{n})\) be the class of L-smooth convex functions defined on \({\mathbb {R}}^n\); that is, \(f \in {\mathcal {F}}^1_L\) if \(f(y) \ge f(x) + \left\langle \nabla f(x), y - x \right\rangle \) for all \(x, y \in {\mathbb {R}}^n\) and its gradient is L-Lipschitz continuous in the sense that \(\left\| \nabla f(x) - \nabla f(y) \right\| \le L \left\| x - y \right\| \), where \(\Vert \cdot \Vert \) denotes the standard Euclidean norm and \(L > 0\) is the Lipschitz constant. (Note that this implies that \(\nabla f\) is also \(L'\)-Lipschitz for any \(L' \ge L\).) The function class \({\mathcal {F}}_{L}^2({\mathbb {R}}^{n})\) is the subclass of \({\mathcal {F}}_{L}^1({\mathbb {R}}^{n})\) such that each f has a Lipschitz-continuous Hessian. For \(p = 1, 2\), let \({\mathcal {S}}_{\mu ,L}^p({\mathbb {R}}^{n})\) denote the subclass of \({\mathcal {F}}_{L}^p({\mathbb {R}}^{n})\) such that each member f is \(\mu \)-strongly convex for some \(0 < \mu \le L\). That is, \(f \in {\mathcal {S}}_{\mu , L}^p({\mathbb {R}}^{n})\) if \(f \in {\mathcal {F}}_{L}^p({\mathbb {R}}^{n})\) and \(f(y) \ge f(x) + \left\langle \nabla f(x), y - x \right\rangle + \frac{\mu }{2} \left\| y - x \right\| ^{2}\) for all \(x, y \in {\mathbb {R}}^{n}\). Note that this is equivalent to the convexity of \(f(x) - \frac{\mu }{2}\Vert x - x^\star \Vert ^2\), where \(x^\star \) denotes a minimizer of the objective f.

2 The high-resolution ODE framework

This section introduces a high-resolution ODE framework for analyzing gradient-based methods, with NAG-SC being a guiding example. Given a (discrete) optimization algorithm, the first step in this framework is to derive a high-resolution ODE using dimensional analysis, the next step is to construct a continuous-time Lyapunov function to analyze properties of the ODE, the third step is to derive a discrete-time Lyapunov function from its continuous counterpart and the last step is to translate properties of the ODE into that of the original algorithm. The overall framework is illustrated in Fig. 3.

An illustration of our high-resolution ODE framework. The three solid straight lines represent Steps 1, 2 and 3, and the two curved lines denote Step 4. The dashed line is used to emphasize that it is difficult, if not impractical, to construct discrete Lyapunov functions directly from the algorithms

Step 1: Deriving high-resolution ODEs Our focus is on the single-variable form (1.4) of NAG-SC. For any nonnegative integer k, let \(t_{k} = k\sqrt{s}\) and take the ansatz that \(x_k = X(t_k)\) for some sufficiently smooth curve X(t). Performing a Taylor expansion in powers of \(\sqrt{s}\), we get

We now use a Taylor expansion for the gradient correction, which gives

Multiplying both sides of (1.4) by \(\frac{1 + \sqrt{\mu s}}{1 - \sqrt{\mu s}} \cdot \frac{1}{s}\) and rearranging the equality, we can rewrite NAG-SC as

Next, plugging (2.13) and (2.14) into (2.15), we haveFootnote 7

which can be rewritten as

Multiplying both sides of the last display by \(1-\sqrt{\mu s}\), we obtain the following high-resolution ODE of NAG-SC:

where we ignore any O(s) terms but retain the \(O(\sqrt{s})\) terms (note that \((1 - \sqrt{\mu s})\sqrt{s} = \sqrt{s} + O(s)\)).

Our analysis is inspired by dimensional analysis [38], a strategy widely used in physics to construct a series of differential equations that involve increasingly high-order terms corresponding to small perturbations. In more detail, taking a small s, one first derives a differential equation that consists only of O(1) terms, then derives a differential equation consisting of both O(1) and \(O(\sqrt{s})\), and next, one proceeds to obtain a differential equation consisting of \(O(1), O(\sqrt{s})\) and O(s) terms. High-order terms in powers of \(\sqrt{s}\) are introduced sequentially until the main characteristics of the original algorithms have been extracted from the resulting approximating differential equation. Thus, we aim to understand Nesterov acceleration by incorporating \(O(\sqrt{s})\) terms into the ODE, including the (Hessian-driven) gradient correction \(\sqrt{s} \nabla ^{2} f(X) {\dot{X}}\) which results from the (discrete) gradient correction (1.7) in the single-variable form (1.4) of NAG-SC. We also show (see “Appendix A.1” for the detailed derivation) that this \(O(\sqrt{s})\) term appears in the high-resolution ODE of NAG-C, but is not found in the high-resolution ODE of the heavy-ball method.

As shown below, each ODE admits a unique global solution under mild conditions on the objective, and this holds for an arbitrary step size \(s > 0\). The solution is accurate in approximating its associated optimization method if s is small. To state the result, we use \(C^2(I; {\mathbb {R}}^n)\) to denote the class of twice continuously differentiable maps from I to \({\mathbb {R}}^n\) for \(I = [0, \infty )\) (the heavy-ball method and NAG-SC) and \(I = [1.5\sqrt{s}, \infty )\) (NAG-C).

Proposition 1

For any \(f \in {\mathcal {S}}_{\mu }^2({\mathbb {R}}^n) := \cup _{L \ge \mu } {\mathcal {S}}^2_{\mu ,L}({\mathbb {R}}^n)\), each of the ODEs (1.10) and (1.11) with the specified initial conditions has a unique global solution \(X \in C^2([0, \infty ); {\mathbb {R}}^n)\). Moreover, the two methods converge to their high-resolution ODEs, respectively, in the sense that

for any fixed \(T > 0\).

In fact, Proposition 1 holds for \(T = \infty \) because both the discrete iterates and the ODE trajectories converge to the unique minimizer when the objective is stongly convex.

Proposition 2

For any \(f \in {\mathcal {F}}^2({\mathbb {R}}^n) := \cup _{L > 0} {\mathcal {F}}^2_L({\mathbb {R}}^n)\), the ODE (1.12) with the specified initial conditions has a unique global solution \(X \in C^2([1.5\sqrt{s}, \infty ); {\mathbb {R}}^n)\). Moreover, NAG-C converges to its high-resolution ODE in the sense that

for any fixed \(T > 0\).

The proofs of the two propositions are standard in the theory of ordinary differential equations (see, e.g., the proofs of Theorems 1 and 2 in [41]) and thus are omitted.

Step 2: Analyzing ODEs using Lyapunov functions With these high-resolution ODEs in place, the next step is to construct Lyapunov functions for analyzing the dynamics of the corresponding ODEs, as is done in previous work [31, 41, 44]. For NAG-SC, we consider the Lyapunov function

The first and second terms \((1 + \sqrt{\mu s})\left( f(X) - f(x^{\star }) \right) \) and \(\frac{1}{4} \Vert {\dot{X}} \Vert ^{2}\) can be regarded, respectively, as the potential energy and kinetic energy, and the last term is a mix. For the mixed term, it is interesting to note that the time derivative of \({\dot{X}} + 2 \sqrt{\mu } (X - x^{\star }) + \sqrt{s} \nabla f(X)\) equals \(-(1+\sqrt{\mu s}) \nabla f(X)\).

From a dimensional analysis viewpoint, the step size s has dimension \([\mathrm {T}^{-2}]\), where \(\mathrm {T}\) denotes the time unit. Consequently, both \(\mu \) and L have the same dimension \([\mathrm {T}^2]\). Recognizing the assumptions imposed on the objective, which in particular give rise to \(\frac{\mu }{2} \Vert X - x^\star \Vert ^2 \le f(X) - f(x^\star ) \le \frac{L}{2} \Vert X - x^\star \Vert ^2\), one can readily show that every term in this Lyapunov function, such as \((1 + \sqrt{\mu s} )\left( f(X) - f(x^{\star }) \right) , \frac{1}{4} \Vert {\dot{X}} \Vert ^{2}, \frac{1}{4}\Vert \sqrt{s} \nabla f(X)\Vert ^2\) and any cross terms in the mixed energy, have dimension \([\mathrm {T}^2 \mathrm {L}^2]\), where the length unit \(\mathrm {L}\) is the dimension of X. Indeed, this dimensional analysis viewpoint in part formalizes the intuition for the construction of all Lyapunov functions in this paper.

The differentiability of \({\mathcal {E}}(t)\) will allow us to investigate properties of the ODE (1.11) in a principled manner. For example, we will show that \({\mathcal {E}}(t)\) decreases exponentially along the trajectories of (1.11), recovering the accelerated linear convergence rate of NAG-SC. Furthermore, a comparison between the Lyapunov function of NAG-SC and that of the heavy-ball method will explain why the gradient correction \(\sqrt{s}\nabla ^{2} f(X) {\dot{X}}\) yields acceleration in the former case. This is discussed in Sect. 3.1.

Step 3: Constructing discrete Lyapunov functions Our framework make it possible to translate continuous Lyapunov functions into discrete Lyapunov functions via a phase-space representation (see, for example, [3]). We illustrate the procedure in the case of NAG-SC. The first step is formulate explicit position and velocity updates:

where the velocity variable \(v_k\) is defined as \(v_k = \frac{x_{k+1} - x_k}{\sqrt{s}}\). The initial velocity is \(v_{0} = - \frac{2\sqrt{s}}{1 + \sqrt{\mu s}} \nabla f(x_{0})\). Interestingly, this phase-space representation has the flavor of symplectic discretization, in the sense that the update for \(x_k - x_{k-1}\) is explicit (it only depends on the last iterate \(v_{k-1}\)) while the update for \(v_{k} - v_{k - 1}\) is implicit (it depends on the current iterates \(x_k\) and \(v_{k}\), see [40]).

The representation (2.17) suggests translating the continuous-time Lyapunov function (2.16) into a discrete-time Lyapunov function of the following form:

by replacing continuous terms (e.g., \(\dot{X}\)) by their discrete counterparts (e.g., \(v_k\)). Akin to the continuous (2.16), here \(\mathbf {I}\), \(\mathbf {II}\), and \(\mathbf {III}\) correspond to potential energy, kinetic energy, and mixed energy, respectively. To better appreciate this translation, note that the factor \(\frac{1 + \sqrt{\mu s}}{1 - \sqrt{\mu s}}\) in \(\mathbf {I}\) results from the term \(\frac{1 + \sqrt{\mu s}}{1 - \sqrt{\mu s}} \sqrt{s}\nabla f( x_{k} )\) in (2.17). Likewise, \(\frac{ 2\sqrt{\mu }}{1 - \sqrt{\mu s}}\) in \(\mathbf {III}\) is from the term \(\frac{2\sqrt{\mu s}}{1 - \sqrt{\mu s}}v_{k}\) in (2.17). We use \(x_{k+1}\) in lieu of \(x_k\) as a reflection of the fact that the x variable takes a forward step in the phase-space representation of NAG-SC. The need for the final (small) negative term is technical; we discuss it in Sect. 3.2.

Step 4: Analyzing algorithms using discrete Lyapunov functions The last step is to map properties of high-resolution ODEs to corresponding properties of optimization methods. This step closely mimics Step 2 except that now the object is a discrete algorithm and the tool is a discrete Lyapunov function such as (2.18). Given that Step 2 has been performed, this translation is conceptually straightforward, albeit often calculation-intensive. For example, using the discrete Lyapunov function (2.18), we will recover the optimal linear rate of NAG-SC and gain insights into the fundamental effect of the gradient correction in accelerating NAG-SC. In addition, NAG-C is shown to minimize the squared gradient norm at an inverse cubic rate by a simple analysis of the decreasing rate of its discrete Lyapunov function.

3 Gradient correction for acceleration

In this section, we use our high-resolution ODE framework to analyze NAG-SC and the heavy-ball method. Section 3.1 focuses on the ODEs with an objective function \(f \in {\mathcal {S}}^2_{\mu , L}({\mathbb {R}}^{n})\), and in Sect. 3.2 we extend the results to the discrete case for \(f \in {\mathcal {S}}^1_{\mu , L}({\mathbb {R}}^{n})\). Throughout this section, the strategy is to analyze the two methods in parallel, thereby highlighting the differences between the two methods. In particular, the comparison will demonstrate the vital role of the gradient correction, namely \(\frac{ 1 - \sqrt{\mu s} }{ 1 + \sqrt{\mu s} } \cdot s \left( \nabla f(x_{k}) - \nabla f(x_{k - 1}) \right) \) in the discrete case and \(\sqrt{s} \nabla ^2 f(X) \dot{X}\) in the ODE case, in making NAG-SC an accelerated method.

3.1 The ODE case

The following theorem characterizes the convergence rate of the high-resolution ODE corresponding to NAG-SC.

Theorem 1

(Convergence of NAG-SC ODE) Let \(f \in {\mathcal {S}}^2_{\mu , L}({\mathbb {R}}^n)\). For any step size \(0 < s \le 1/L\), the solution \(X = X(t)\) of the high-resolution ODE (1.11) satisfies

The theorem states that the functional value f(X) tends to the minimum \(f(x^\star )\) at a linear rate. By setting \(s = 1/L\), we obtain \(f(X) - f(x^{\star }) \le 2L\left\| x_{0} - x^{\star } \right\| ^{2} \mathrm {e}^{- \frac{ \sqrt{\mu } t}{4}}\).

The proof of Theorem 1 is based on analyzing the Lyapunov function \({\mathcal {E}}(t)\) for the high-resolution ODE of NAG-SC. Recall that \({\mathcal {E}}(t)\) defined in (2.16) is

The next lemma states the key property we need from this Lyapunov function.

Lemma 1

(Lyapunov function for NAG-SC ODE) Let \(f \in {\mathcal {S}}^2_{\mu , L}({\mathbb {R}}^n)\). For any step size \(s > 0\), and with \(X = X(t)\) being the solution to the high-resolution ODE (1.11), the Lyapunov function (2.16) satisfies

The proof of this lemma is placed at the end of this subsection. In particular, the proof reveals that (3.19) can be strengthened to

The term \(\frac{\sqrt{s}}{2} ( \left\| \nabla f(X)\right\| ^{2} + {\dot{X}}^\top \nabla ^{2}f(X){\dot{X}} ) \ge 0\) plays no role at the moment, but Sect. 3.2 will shed light on its profound effect in the discretization of the high-resolution ODE of NAG-SC.

Proof of Theorem 1

Lemma 1 implies \(\dot{{\mathcal {E}}}(t) \le - \frac{\sqrt{\mu }}{4}{\mathcal {E}}(t)\), which amounts to \(\frac{\mathrm {d}}{\mathrm {d}t} \left( {\mathcal {E}}(t) \mathrm {e}^{\frac{\sqrt{\mu } t}{4}} \right) \le 0\). By integrating out t, we get

Recognizing the initial conditions \(X(0) = x_{0}\) and \(\dot{X}(0) = -\frac{2\sqrt{s} \nabla f(x_{0})}{1 + \sqrt{\mu s}}\), we write (3.20) as

Since \(f \in {\mathcal {S}}_{\mu , L}^{2}\), we have that \(\Vert \nabla f(x_0)\Vert \le L \Vert x_0 - x^\star \Vert \) and \(f(x_0) - f(x^\star ) \le L\Vert x_0 - x^\star \Vert ^2/2\). Together with the Cauchy–Schwarz inequality, the two inequalities yield

which is valid for all \(s > 0\). To simplify the coefficient of \(\left\| x_{0} - x^{\star } \right\| ^{2} \mathrm {e}^{- \frac{ \sqrt{\mu } t}{4}}\), note that L can be replaced by 1/s in the analysis since \(s \le 1/L\). It follows that

Furthermore, a bit of analysis reveals that

since \(\mu s \le \mu /L \le 1\), and this step completes the proof of Theorem 1. \(\square \)

We now consider the heavy-ball method (1.2). Recall that the momentum coefficient \(\alpha \) is set to \(\frac{1 - \sqrt{\mu s}}{1 + \sqrt{\mu s}}\). The following theorem characterizes the rate of convergence of this method.

Theorem 2

(Convergence of heavy-ball ODE) Let \(f \in {\mathcal {S}}_{\mu , L}^{2}({\mathbb {R}}^{n})\). For any step size \(0 < s \le 1/L\), the solution \(X = X(t)\) of the high-resolution ODE (1.10) satisfies

As in the case of NAG-SC, the proof of Theorem 2 is based on a Lyapunov function:

which is the same as the Lyapunov function (2.16) for NAG-SC except for the lack of the \(\sqrt{s} \nabla f(X)\) term. In particular, (2.16) and (3.21) are identical if \(s = 0\). The following lemma considers the decay rate of (3.21).

Lemma 2

(Lyapunov function for the heavy-ball ODE) Let \(f\in {\mathcal {S}}^{2}_{\mu , L}({\mathbb {R}}^n)\). For any step size \(s>0\), the Lyapunov function (3.21) for the high-resolution ODE (1.10) satisfies

The proof of Theorem 2 follows the same strategy as the proof of Theorem 1. In brief, Lemma 2 gives \({\mathcal {E}}(t) \le \mathrm {e}^{-\sqrt{\mu } t/4} {\mathcal {E}}(0)\) by integrating over the time parameter t. Recognizing the initial conditions

in the high-resolution ODE of the heavy-ball method and using the L-smoothness of \(\nabla f\), Lemma 2 yields

if the step size \(s \le 1/L\). Finally, since \(0 < \mu s \le \mu /L \le 1\), the coefficient satisfies \(\frac{1}{2} + \frac{3}{( 1 + \sqrt{\mu s} )^{3}} + \frac{2\mu s}{1 + \sqrt{\mu s}}< \frac{7}{2}\).

The proofs of Lemmas 1 and 2 share similar ideas. In view of this, we present only the proof of the former here, deferring the proof of Lemma 2 to “Appendix B.2”.

Proof of Lemma 1

Along the trajectories of (1.11), the Lyapunov function (2.16) satisfies

Furthermore, \(\left\langle \nabla f(X), X - x^{\star } \right\rangle \) is greater than or equal to both \(f(X) - f(x^\star ) + \frac{\mu }{2} \Vert X - x^\star \Vert ^2\) and \(\mu \Vert X - x^\star \Vert ^2\) due to the \(\mu \)-strong convexity of f. This yields

which together with (3.22) suggests that the time derivative of this Lyapunov function can be bounded as

Next, the Cauchy–Schwarz inequality yields

from which it follows that

Combining (3.23) and (3.24) completes the proof of the theorem. \(\square \)

Remark 1

The only inequality in (3.22) is due to the term \(\frac{\sqrt{s}}{2} (\left\| \nabla f(X)\right\| ^{2} + {\dot{X}}^{\top }\nabla ^{2} f(X){\dot{X}} )\), which is discussed right after the statement of Lemma 1. This term results from the gradient correction \(\sqrt{s} \nabla ^2 f(X) \dot{X}\) in the NAG-SC ODE. For comparison, this term does not appear in Lemma 2 in the case of the heavy-ball method as its ODE does not include the gradient correction and, accordingly, its Lyapunov function (3.21) is free of the \(\sqrt{s} \nabla f(X)\) term.

3.2 The discrete case

This section carries over the results in Sect. 3.1 to the two discrete algorithms, namely NAG-SC and the heavy-ball method. Here we consider an objective \(f \in {\mathcal {S}}_{\mu ,L}^1({\mathbb {R}}^n)\) since second-order differentiability of f is not required in the two discrete methods. Recall that both methods start with an arbitrary \(x_0\) and \(x_1 = x_0 - \frac{2 s\nabla f(x_0)}{1 + \sqrt{\mu s}}\).

Theorem 3

(Convergence of NAG-SC) Let \(f \in {\mathcal {S}}_{\mu ,L}^1({\mathbb {R}}^n)\). If the step size is set to \(s = 1/(4L)\), the iterates \(\{x_k\}_{k=0}^{\infty }\) generated by NAG-SC (1.3) satisfy

for all \(k \ge 0\).

In brief, the theorem states that \(\log (f(x_k) - f(x^\star )) \le - O(k\sqrt{\mu /L})\), which matches the optimal rate for minimizing smooth strongly convex functions using only first-order information [36]. More precisely, [36] shows that \(f(x_k) - f(x^\star ) = O((1 - \sqrt{\mu /L})^k)\) by taking \(s = 1/L\) in NAG-SC. Although this optimal rate of NAG-SC is well known in the literature, this is the first Lyapunov-function-based proof of this result.

As indicated in Sect. 2, the proof of Theorem 3 rests on the Lyapunov function \({\mathcal {E}}(k)\) from (2.18):

Recall that this functional is derived by writing NAG-SC in the phase-space representation (2.17). Analogous to Lemma 1, the following lemma gives an upper bound on the difference \({\mathcal {E}}(k+1) - {\mathcal {E}}(k)\).

Lemma 3

(Lyapunov function for NAG-SC) Let \(f \in {\mathcal {S}}_{\mu , L}^1({\mathbb {R}}^n)\). Taking any step size \(0 < s \le 1/(4L)\), the discrete Lyapunov function (2.18) with \(\{x_{k}\}_{k = 0}^{\infty }\) generated by NAG-SC satisfies

The form of the inequality ensured by Lemma 3 is consistent with that of Lemma 1. Alternatively, it can be written as \({\mathcal {E}}(k + 1) \le \frac{1}{1+\frac{\sqrt{\mu s}}{6}} {\mathcal {E}}(k)\). With Lemma 3 in place, we give the proof of Theorem 3.

Proof of Theorem 3

Given \(s = 1/(4L)\), we have

To see this, first note that

Combining these two inequalities, we get

which gives (3.25).

Next, we inductively apply Lemma 3, yielding

Recognizing the initial velocity \(v_{0} = - \frac{2\sqrt{s} \nabla f(x_{0})}{1 + \sqrt{\mu s}}\) in NAG-SC, one can show that

Taking \(s = 1/(4L)\) in (3.27), it follows from (3.25) and (3.26) that

Here the constant factor \(C_{\mu /L}\) is a short-hand for

which is less than five by making use of the fact that \(\mu /L \le 1\). This completes the proof. \(\square \)

We now turn to the heavy-ball method (1.2). Recall that \(\alpha = \frac{1-\sqrt{\mu s}}{1+\sqrt{\mu s}}\) and \(x_{1} = x_{0}-\frac{2s\nabla f(x_{0})}{1+\sqrt{\mu s}}\).

Theorem 4

(Convergence of heavy-ball method) Let \(f \in {\mathcal {S}}^1_{\mu ,L}({\mathbb {R}}^n)\). If the step size is set to \(s = \mu /(16L^2)\), the iterates \(\{x_k\}_{k=0}^{\infty }\) generated by the heavy-ball method satisfy

for all \(k \ge 0\).

The heavy-ball method minimizes the objective at the rate \(\log (f(x_k) - f(x^\star )) \le - O(k\mu /L)\), as opposed to the optimal rate \(-O(k\sqrt{\mu /L})\) obtained by NAG-SC. Thus, the acceleration phenomenon is not observed in the heavy-ball method for minimizing functions in the class \({\mathcal {S}}^1_{\mu ,L}({\mathbb {R}}^n)\). This difference is, on the surface, attributed to the much smaller step size \(s = \mu /(16L^2)\) in Theorem 4 as compared to the \(s = 1/(4L)\) step size in Theorem 3. Further discussion of this difference is given after Lemma 4.

In addition to allowing us to complete the proof of Theorem 4, Lemma 4 will shed light on why the heavy-ball method needs a more conservative step size. To state this lemma, we consider the discrete Lyapunov function defined as

which is derived by discretizing the continuous Lyapunov function (3.21) using the phase-space representation of the heavy-ball method:

Lemma 4

(Lyapunov function for the heavy-ball method) Let \(f \in {\mathcal {S}}_{\mu ,L}^{1}({\mathbb {R}}^n)\). For any step size \(s > 0\), the discrete Lyapunov function (3.28) with \(\{x_{k}\}_{k = 0}^{\infty }\) generated by the heavy-ball method satisfies

The proof of Lemma 4 can be found in “Appendix B.3”. To apply this lemma to prove Theorem 4, we need to ensure

A sufficient and necessary condition for (3.31) is

This is because \(\left\| \nabla f(x_{k + 1}) \right\| ^{2} \le 2L \left( f(x_{k + 1}) - f(x^{\star }) \right) \), which can be further reduced to an equality (for example, \(f(x) = \frac{L}{2} \Vert x\Vert ^2\)). Thus, the step size s must obey \(s = O\left( \frac{\mu }{L^2} \right) \). In particular, the choice of \(s = \frac{\mu }{16L^2}\) fulfills (3.32) and, as a consequence, Lemma 4 implies \({\mathcal {E}}(k+1) - {\mathcal {E}}(k) \le -\frac{\mu }{16 L} {\mathcal {E}}(k+1)\). The remainder of the proof of Theorem 4 is similar to that of Theorem 3 and is therefore omitted. As an aside, [39] uses \(s = 4 /(\sqrt{L} + \sqrt{\mu })^{2}\) for local accelerated convergence of the heavy-ball method. This choice of step size is larger than our step size \(s = \frac{\mu }{16 L^2}\), which yields a non-accelerated but global convergence rate.

The term \(\frac{s}{2} \left( \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} } \right) ^2 \left\| \nabla f(x_{k + 1}) \right\| ^{2}\) in (3.30) that arises from finite differencing of (3.28) is a (small) term of order O(s) and, as a consequence, this term is not reflected in Lemma 2. In relating to the case of NAG-SC, one would be tempted to ask why this term does not appear in Lemma 3. In fact, a similar term can be found in \({\mathcal {E}}(k+1) - {\mathcal {E}}(k)\) by taking a closer look at the proof of Lemma 3. However, this term is canceled out by the discrete version of the quadratic term \(\frac{\sqrt{s}}{2} (\left\| \nabla f(X)\right\| ^{2} + {\dot{X}}^\top \nabla ^{2}f(X){\dot{X}} )\) in Lemma 1 and is, therefore, not present in the statement of Lemma 3. Note that this quadratic term results from the gradient correction (see Remark 1). In light of the above, the gradient correction is the key ingredient that allows for a larger step size in NAG-SC, which is necessary for achieving acceleration.

Before finishing Sect. 3.2 by proving Lemma 3, we briefly remark on the proof strategies for Lemmas 3 and 4. One can rewrite the second lines of the phase-space representations (2.17) and (3.29) as

respectively, from which it is straightforward to obtain (3.35) below in the proof and (3.30). Notably, the derivation of (3.35) additionally relies on the fact that the dimension of the first term is the same as the Lyapunov function plus the gradient term.

Proof of Lemma 3

Using the Cauchy–Schwarz inequality, we have (see the definition of \(\mathbf {III}\) in (2.18))

which, together with the inequality

for \(f \in {\mathcal {S}}_{\mu , L}^{1}({\mathbb {R}}^n)\), shows that the Lyapunov function (2.18) satisfies

Take the following inequality as given for the moment:

which holds for \(s \le 1/(2L)\). Comparing the coefficients of the same terms in (3.33) for \({\mathcal {E}}(k+1)\) and (3.34), we conclude that the first difference of the discrete Lyapunov function (2.18) must satisfy

since \(s \le 1/(4L)\), as desired.

To complete the proof of this lemma, we now verify (3.34) below. First, we point out that

implies (3.34) for \(s \le 1/L\). With (3.35) in place, recognizing that

when the step size satisfies \(s \le 1/(2L) \le 1/L\), we have

Now, we conclude this section by deriving (3.35). Recall the discrete Lyapunov function (2.18),

Next, we evaluate the difference between \({\mathcal {E}}(k)\) and \({\mathcal {E}}(k + 1)\) by the three parts, \(\mathbf {I}\), \(\mathbf {II}\) and \(\mathbf {III}\) respectively.

-

For part \(\mathbf {I}\) (potential), using the convexity of the objective, we have

$$\begin{aligned}&\left( \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} } \right) \left( f(x_{k + 1}) - f(x^{\star }) \right) - \left( \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} } \right) \left( f(x_{k}) - f(x^{\star }) \right) \\&\quad \le \left( \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} } \right) \left[ \left\langle \nabla f(x_{k + 1}), x_{k + 1} - x_{k} \right\rangle - \frac{1}{2L} \left\| \nabla f(x_{k + 1}) - \nabla f(x_{k}) \right\| ^{2} \right] \\&\quad \le \underbrace{\left( \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} } \right) \sqrt{s} \left\langle \nabla f(x_{k + 1}), v_{k} \right\rangle }_{\mathbf {I}_{1}} \underbrace{- \frac{1}{2L} \left( \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s}} \right) \left\| \nabla f(x_{k + 1}) - \nabla f(x_{k}) \right\| ^{2}}_{\mathbf {I}_{2}}. \end{aligned}$$ -

For part \(\mathbf {II}\) (kinetic energy), using the phase representation of NAG-SC (2.17), we see that \(\frac{1}{4} \left\| v_{k + 1} \right\| ^{2} - \frac{1}{4} \left\| v_{k} \right\| ^{2} \equiv \frac{1}{2} \left\langle v_{k + 1} - v_{k}, v_{k + 1} \right\rangle - \frac{1}{4} \left\| v_{k + 1} - v_{k} \right\| ^{2}\) equals

$$\begin{aligned}&- \frac{\sqrt{\mu s} }{1 - \sqrt{\mu s}} \left\| v_{k + 1} \right\| ^{2} - \frac{\sqrt{s}}{2} \left\langle \nabla f(x_{k + 1}) - \nabla f(x_{k}), v_{k + 1} \right\rangle \\&\qquad - \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} } \cdot \frac{\sqrt{s}}{2} \left\langle \nabla f(x_{k + 1}), v_{k + 1} \right\rangle - \frac{1}{4} \left\| v_{k + 1} - v_{k} \right\| ^{2} \\&\quad = \underbrace{- \frac{\sqrt{\mu s} }{1 - \sqrt{\mu s}} \left\| v_{k + 1} \right\| ^{2}}_{\mathbf {II}_1} \\&\qquad \underbrace{- \frac{\sqrt{s}}{2} \cdot \frac{1 - \sqrt{\mu s} }{1 + \sqrt{\mu s} }\left\langle \nabla f(x_{k + 1}) - \nabla f(x_{k}), v_{k} \right\rangle }_{\mathbf {II}_2} \\&\qquad + \underbrace{ \frac{1 - \sqrt{\mu s} }{1 + \sqrt{\mu s} } \cdot \frac{s}{2} \left\| \nabla f(x_{k + 1}) - \nabla f(x_{k}) \right\| ^{2}}_{\mathbf {II}_{3}}\\&\qquad +\underbrace{ \frac{s}{2} \left\langle \nabla f(x_{k + 1}) - \nabla f(x_{k}), \nabla f(x_{k + 1}) \right\rangle }_{\mathbf {II}_4}\\&\qquad \underbrace{- \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} } \cdot \frac{\sqrt{s}}{2} \left\langle \nabla f(x_{k + 1}), v_{k + 1} \right\rangle }_{\mathbf {II}_{5}} \underbrace{- \frac{1}{4} \left\| v_{k + 1} - v_{k} \right\| ^{2}}_{\mathbf {II}_{6}}. \end{aligned}$$ -

For part \(\mathbf {III}\) (mixed energy), using the phase representation of NAG-SC (2.17), we have

$$\begin{aligned}&\frac{1}{4} \left\| v_{k + 1} + \frac{2\sqrt{\mu }}{1 - \sqrt{\mu s}} ( x_{k + 2} - x^{\star } ) + \sqrt{s} \nabla f(x_{k + 1})\right\| ^{2} \\&\qquad - \frac{1}{4} \left\| v_{k} + \frac{2\sqrt{\mu }}{1 - \sqrt{\mu s}} ( x_{k + 1} - x^{\star } ) + \sqrt{s} \nabla f(x_{k})\right\| ^{2} \\&\quad = \frac{1}{2} \left\langle - \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} } \sqrt{s} \nabla f(x_{k + 1}), \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} } v_{k + 1}\right. \\&\qquad \left. + \frac{2\sqrt{\mu }}{1 - \sqrt{\mu s} } (x_{k + 1} - x^{\star }) + \sqrt{s} \nabla f(x_{k + 1}) \right\rangle \\&\qquad - \frac{1}{4} \left( \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} }\right) ^{2} s \left\| \nabla f(x_{k + 1})\right\| ^{2} \\&\quad = \underbrace{- \frac{\sqrt{\mu s}}{1 - \sqrt{\mu s}} \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} } \left\langle \nabla f(x_{k + 1}), x_{k + 1}- x^{\star } \right\rangle }_{\mathbf {III}_{1}}\\&\qquad \underbrace{- \frac{1}{2} \left( \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} } \right) ^{2} \sqrt{s} \left\langle \nabla f(x_{k + 1}), v_{k + 1} \right\rangle }_{\mathbf {III}_{2}} \\&\qquad \underbrace{ - \frac{1}{2} \left( \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} } \right) s \left\| \nabla f(x_{k + 1})\right\| ^{2}}_{\mathbf {III}_{3}}\\&\qquad \underbrace{ - \frac{1}{4} \left( \frac{1 + \sqrt{\mu s} }{1 - \sqrt{\mu s} }\right) ^{2} s \left\| \nabla f(x_{k + 1})\right\| ^{2} }_{\mathbf {III}_{4}}. \end{aligned}$$

Now, we evaluate the difference of the discrete Lyapunov function (2.18) at \(k+1\) and k:

The term \((1/2)\mathbf {I}_{1} + \mathbf {II}_{5} + \mathbf {II}_{6} + \mathbf {III}_{4}\) is identical to

Using the phase representation of NAG-SC (2.17), we have

Note that \(\mathbf {IV} = (1/2)\mathbf {I}_{1} + \mathbf {III}_{2} + \mathbf {III}_{3}\). Then, using the phase representation of NAG-SC (2.17), we have

To proceed, note that \(\mathbf {II}_{4} + \mathbf {IV}_{1} + \mathbf {additional\;term}\) is a perfect square as this term is identical to

Combining \(\mathbf {II}_{4} + \mathbf {IV}_{1} + \mathbf {additional\;term}\), \(\mathbf {I}_{2} + \mathbf {II}_{3}\), we see that \((\mathbf {II}_{4} + \mathbf {IV}_{1} + \mathbf {additional\;term}) + ( \mathbf {I}_{2} + \mathbf {II}_{3})\) equals

Now, we obtain that the difference of Lyapunov function (2.18) obeys

Because \(\left\| \nabla f(x_{k + 1}) - \nabla f(x_{k})\right\| ^{2} \le L \left\langle \nabla f(x_{k + 1}) - \nabla f(x_{k}), x_{k + 1} - x_{k}\right\rangle \) for any \(f(x) \in {\mathcal {S}}_{\mu , L}^{1}({\mathbb {R}}^{n})\), we have

This completes the proof. \(\square \)

4 Gradient correction for gradient norm minimization

In this section, we extend the use of the high-resolution ODE framework to NAG-C (1.5) in the setting of minimizing an L-smooth convex function f. The main result is an improved rate of NAG-SC for minimizing the squared gradient norm. Indeed, we show that NAG-C achieves the \(O(L^2/k^3)\) rate of convergence for minimizing \(\Vert \nabla f(x_k)\Vert ^2\). To the best of our knowledge, this is the sharpest known bound for this problem using NAG-C without any modification. Moreover, we will show that the gradient correction in NAG-C is responsible for this rate and, as it is therefore unsurprising that this inverse cubic rate was not perceived within the low-resolution ODE frameworks such as that of [41].

4.1 The ODE case

We begin by studying the high-resolution ODE (1.12) corresponding to NAG-C with an objective \(f \in {\mathcal {F}}_{L}^2({\mathbb {R}}^{n})\) and an arbitrary step size \(s > 0\). For convenience, let \(t_0 = 1.5 \sqrt{s}\).

Theorem 5

Assume \(f \in {\mathcal {F}}_{L}^2({\mathbb {R}}^n)\) and let \(X = X(t)\) be the solution to the ODE (1.12). The squared gradient norm satisfies

for all \(t > t_0\).

By taking the step size \(s = 1/L\), this theorem shows that \(\inf _{t_0 \le u \le t} \left\| \nabla f(X(u))\right\| ^2 = O(\sqrt{L}/t^3)\), where the infimum operator is necessary as the squared gradient norm is generally not decreasing in t. In contrast, directly combining the convergence rate of the function value (see Corollary 1) and inequality \(\Vert \nabla f(X)\Vert ^2 \le 2L (f(X) - f(x^\star ))\) only gives a \(O(L/t^2)\) rate for squared gradient norm minimization. We remark that this inverse cubic rate is also found in an ODE for modeling Newton’s method [10].

The proof of the theorem is based on the continuous Lyapunov function

which reduces to the continuous Lyapunov function in [41] when setting \(s = 0\).

Lemma 5

Let \(f \in {\mathcal {F}}_{L}^2({\mathbb {R}}^n)\). The Lyapunov function defined in (4.36) with \(X = X(t)\) being the solution to the ODE (1.12) satisfies

for all \(t \ge t_0\).

The decreasing rate of \({\mathcal {E}}(t)\) as specified in the lemma is sufficient for the proof of Theorem 5. First, note that Lemma 5 readily gives

where the last step is due to the fact \({\mathcal {E}}(t) \ge 0\). Thus, it follows that

Recognizing the initial conditions of the ODE (1.12), we get

which together with (4.38) gives

This bound reduces to the one claimed by Theorem 5 by only keeping the first term \(\sqrt{s} (t^3 - t_0^3)/3\) in the denominator.

The gradient correction \(\sqrt{s} \nabla ^{2} f(X) {\dot{X}}\) in the high-resolution ODE (1.12) plays a pivotal role in Lemma 5 and is, thus, key to Theorem 5. As will be seen in the proof of the lemma, the factor \(\left\| \nabla f(X) \right\| ^{2}\) in (4.37) results from the term \(t\sqrt{s}\nabla f(X)\) in the Lyapunov function (4.36), which arises from the gradient correction in the ODE (1.12). In light of this, the low-resolution ODE (1.8) of NAG-C cannot yield a result similar to Lemma 5; furthermore, we conjecture that the \(O(\sqrt{L}/t^3)\) rate does applies to this ODE. Sect. 4.2 will discuss this point further in the discrete case.

In passing, it is worth pointing out that the analysis above applies to the case of \(s = 0\). In this case, we have \(t_0 = 0\), and (4.39) turns out to be \(\inf _{0 \le u \le t} \left\| \nabla f(X(u))\right\| ^{2} \le \frac{4L\left\| x_0 - x^\star \right\| ^2}{t^2}\). This result is similar to that of the low-resolution ODE in [41].Footnote 8

This section is concluded with the proof of Lemma 5.

Proof of Lemma 5

The time derivative of the Lyapunov function (4.36) obeys

Making use of the basic inequality \(f(x^{\star }) \ge f(X) + \left\langle \nabla f(X), x^{\star } - X \right\rangle + \frac{1}{2L} \left\| \nabla f(X) \right\| ^{2}\) for L-smooth f, the expression for \(\frac{\mathrm {d}{\mathcal {E}}}{\mathrm {d}t}\) above satisfies

\(\square \)

Noting that Lemma 5 shows \({\mathcal {E}}(t)\) is a decreasing function, we obtain:

by recognizing the initial conditions of the high-resolution ODE (1.12). This gives the following corollary.

Corollary 1

Under the same assumptions as in Theorem 5, for any \(t > t_0\), we have

4.2 The discrete case

We now turn to the discrete NAG-C (1.5) for minimizing an objective \(f \in {\mathcal {F}}_L^1({\mathbb {R}}^{n})\). Recall that this algorithm starts from any \(x_0\) and \(y_0 = x_0\). The discrete counterpart of Theorem 5 is as follows.

Theorem 6

Let \(f \in {\mathcal {F}}_{L}^1({\mathbb {R}}^n)\). For any step size \(0 < s \le 1/(3L)\), the iterates \(\left\{ x_{k} \right\} _{k = 0}^{\infty }\) generated by NAG-C obey

for all \(k \ge 0\). In additional, we have \(f(x_{k}) - f(x^{\star }) \le \frac{119 \left\| x_{0} - x^{\star } \right\| ^{2}}{s (k + 1)^{2}}\) for all \(k \ge 0\).

Remark 2

The convergence result of this theorem carries over effortlessly to the iterate sequence \(\{y_k\}_{k=0}^{\infty }\), since the smoothness of the objective ensures that the two iterates are sufficiently close due to the smoothness of the objective. It is important to note, however, that this equivalence is in general not true when applying proximal gradient methods to nonsmooth objectives [12]. While it is beyond the scope of this paper, we refer interested readers to [4, 8] for extensions to nonsmooth objectives.

Taking \(s = 1/(3L)\), Theorem 6 shows that NAG-C minimizes the squared gradient norm at the rate \(O(L^2/k^3)\). This theoretical prediction is in agreement with two numerical examples illustrated in Fig. 4. To our knowledge, the bound \(O(L^2/k^3)\) is sharper than any existing bounds in the literature for NAG-C for squared gradient norm minimization. In fact, the convergence result \(f(x_k) - f(x^\star ) = O(L/k^2)\) for NAG-C and the L-smoothness of the objective immediately give \(\Vert \nabla f(x_k)\Vert ^2 \le O(L^2/k^2)\). This well-known but loose bound can be improved by using a recent result from [8], which shows that a slightly modified version of NAG-C satisfies \(f(x_k) - f(x^\star ) = o(L/k^2)\) (see Sect. 5.2 for more discussion of this improved rate). This reveals \(\Vert \nabla f(x_k)\Vert ^2 \le o\left( \frac{L^{2}}{k^2} \right) \), which, however, remains looser than the bound of Theorem 6. In addition, the rate \(o(L^{2}/k^2)\) is not valid for \(k \le n/2\) and, as such, the bound \(o(L^{2}/k^2)\) on the squared gradient norm is dimension-dependent [8]. For completeness, the rate \(O(L^{2}/k^{3})\) can be achieved by introducing an additional sequence of iterates and a more aggressive step-size policy in a variant of NAG-C [23]. In stark contrast, our result shows that no adjustments are needed for NAG-C to yield an accelerated convergence rate for minimizing the gradient norm.

Scaled squared gradient norm \(s^2(k+1)^3 \min _{0 \le i \le k} \Vert \nabla f(x_i)\Vert ^2\) of NAG-C. In both plots, the scaled squared gradient norm stays bounded as \(k \rightarrow \infty \). Left: \(f(x) = \frac{1}{2}\left\langle Ax, x\right\rangle + \left\langle b, x\right\rangle \), where \(A = T' T\) is a \(500 \times 500\) positive semidefinite matrix and b is \(1 \times 500\). All entries of \(b, \; T \in {\mathbb {R}}^{500 \times 500}\) are i.i.d. uniform random variables on (0, 1), and \(\Vert \cdot \Vert _{2}\) denotes the matrix spectral norm. Right: \(f(x) = \rho \log \left\{ \sum \limits _{i = 1}^{200} \mathrm{exp}\left[ \left( \left\langle a_{i}, x\right\rangle - b_{i} \right) /\rho \right] \right\} \), where \(A = [a_{1}, \ldots , a_{200}]'\) is a \(200 \times 50\) matrix and b is a \(200 \times 1\) column vector. All entries of A and b are sampled i.i.d. from \({\mathcal {N}}(0,1)\) with \(\rho = 20\)

An \(\Omega (L^2/k^4)\) lower bound has been established by [35] as the optimal convergence rate for minimizing \(\Vert \nabla f\Vert ^2\) with access to only first-order information. (For completeness, “Appendix C” presents an exposition of this fundamental barrier.) In the same paper, a regularization technique is used in conjunction with NAG-SC to obtain a matching upper bound (up to a logarithmic factor). This method, however, takes as input the distance between the initial point and the minimizer, which is not practical in general [27].

Returning to Theorem 6, we present a proof of this theorem using a Lyapunov function argument. By way of comparison, we remark that Nesterov’s estimate sequence technique is unlikely to be useful for characterizing the convergence of the gradient norm as this technique is essentially based on local quadratic approximations. The phase-space representation of NAG-C (1.5) takes the following form:

for any initial position \(x_{0}\) and the initial velocity \(v_{0} = - \sqrt{s} \nabla f(x_{0})\). This representation allows us to discretize the continuous Lyapunov function (4.36) into

The following lemma characterizes the dynamics of this Lyapunov function. Its proof is relegated to “Appendix C”.

Lemma 6

Under the assumptions of Theorem 6, for all \(k \ge 0\) we have

Next, we provide the proof of Theorem 6.

Proof of Theorem 6

We start with the fact that

for \(k \ge 2\). To show this, note that it suffices to guarantee

which is self-evident since \(s \le 1/(3L)\) by assumption.

Next, by a telescoping-sum argument, Lemma 6 leads to the following inequalities for \(k \ge 4\):

where the second inequality is due to (4.42). To further simplify the bound, observe that

for \(k \ge 4\). Plugging this inequality into (4.44) yields

which gives

It is shown in “Appendix C.4” that \({\mathcal {E}}(3) \le {\mathcal {E}}(2) \le 119 \left\| x_{0} - x^{\star }\right\| ^{2}\), for \(s \le 1/(3L)\). As a consequence of this, (4.45) gives

For completeness, “Appendix C.4” proves, via a brute-force calculation, that \(\left\| \nabla f(x_0) \right\| ^{2}\), \(\left\| \nabla f(x_1) \right\| ^{2}\), \(\left\| \nabla f(x_2) \right\| ^{2}\), and \(\left\| \nabla f(x_3) \right\| ^{2}\) are all bounded above by the right-hand side of (4.46). This completes the proof of the first inequality claimed by Theorem 6.

For the second claim in Theorem 6, the definition of the Lyapunov function and its decreasing property ensured by (4.42) implies

for all \(k \ge 2\). “Appendix C.4” establishes that \(f(x_{0}) - f(x^{\star })\) and \(f(x_{1}) - f(x^{\star })\) are bounded by the right-hand side of (4.47). This completes the proof. \(\square \)

In passing, we remark that the gradient correction sheds light on the superiority of the high-resolution ODE over its low-resolution counterpart, just as in Sect. 3. Indeed, the absence of the gradient correction in the low-resolution ODE leads to the lack of the term \((k+1)s \nabla f(x_k)\) in the Lyapunov function (see Section 4 of [41]), as opposed to the high-resolution Lyapunov function (4.41). Accordingly, it is unlikely to carry over the bound \({\mathcal {E}}(k+1) - {\mathcal {E}}(k) \le - O(s^2 k^2 \Vert \nabla f(x_{k+1})\Vert ^2)\) of Lemma 6 to the low-resolution case and, consequently, the low-resolution ODE approach pioneered by [41] is insufficient to obtain the \(O(L^2/k^3)\) rate for squared gradient norm minimization.

5 Extensions

Motivated by the high-resolution ODE (1.12) of NAG-C, this section considers a family of generalized high-resolution ODEs that take the form

for \(t \ge \alpha \sqrt{s}/2\), with initial conditions \(X(\alpha \sqrt{s}/2) = x_{0}\) and \({\dot{X}}(\alpha \sqrt{s}/2) = - \sqrt{s} \nabla f(x_{0})\). As demonstrated in [6, 41, 42], the low-resolution counterpart (that is, set \(s = 0\)) of (5.48) achieves acceleration if and only if \(\alpha \ge 3\). Accordingly, we focus on the case where the friction parameter \(\alpha \ge 3\) and the gradient correction parameter \(\beta > 0\). An investigation of the case of \(\alpha < 3\) is left for future work.

By discretizing the ODE (5.48), we obtain a family of new accelerated methods for minimizing smooth convex functions:

starting with \(x_{0} = y_{0}\). The second line of the iteration is equivalent to

In Sect. 5.1, we study the convergence rates of this family of generalized NAC-C algorithms along the lines of Sect. 4. To further our understanding of (5.49), Sect. 5.2 shows that this method in the super-critical regime (that is, \(\alpha > 3\)) converges to the optimum faster than \(O(1/(sk^2))\). As earlier, the proofs of all the results follow the high-resolution ODE framework introduced in Sect. 2. Proofs are deferred to “Appendix D”. Finally, we note that Sect. 6 briefly sketches the extensions along this direction for NAG-SC.

5.1 Convergence rates

The theorem below characterizes the convergence rates of the generalized NAG-C (5.49).

Theorem 7

Let \(f \in {\mathcal {F}}^1_L({\mathbb {R}}^n), \alpha \ge 3\), and \(\beta > \frac{1}{2}\). There exists \(c_{\alpha ,\beta } > 0\) such that, taking any step size \(0 < s \le c_{\alpha ,\beta }/L\), the iterates \(\{x_k\}_{k=0}^{\infty }\) generated by the generalized NAG-C (5.49) obey

for all \(k \ge 0\). In addition, we have

for all \(k \ge 0\). The constants \(c_{\alpha ,\beta }\) and \(C_{\alpha ,\beta }\) only depend on \(\alpha \) and \(\beta \).

The proof of Theorem 7 is given in “Appendix D.1” for \(\alpha = 3\) and “Appendix D.1” for \(\alpha > 3\). This theorem shows that the generalized NAG-C achieves the same rates as the original NAG-C in both squared gradient norm and function value minimization. The constraint \(\beta > \frac{1}{2}\) reveals that further leveraging of the gradient correction does not hurt acceleration, but perhaps not the other way around (note that NAG-C in its original form corresponds to \(\beta = 1\)). It is an open question whether this constraint is a technical artifact or is fundamental to acceleration.

5.2 Faster convergence in the super-critical regime

We turn to the case in which \(\alpha > 3\), where we show that the generalized NAG-C in this regime attains a faster rate for minimizing the function value. The following proposition provides a technical inequality that motivates the derivation of the improved rate.

Proposition 3

Let \(f \in {\mathcal {F}}^1_L({\mathbb {R}}^n), \alpha > 3\), and \(\beta > \frac{1}{2}\). There exists \(c_{\alpha ,\beta }' > 0\) such that, taking any step size \(0 < s \le c_{\alpha ,\beta }'/L\), the iterates \(\{x_k\}_{k=0}^{\infty }\) generated by the generalized NAG-C (5.49) obey

where the constants \(c'_{\alpha , \beta }\) and \(C'_{\alpha , \beta }\) only depend on \(\alpha \) and \(\beta \).

In relating to Theorem 7, one can show that Proposition 3 in fact implies (5.50) in Theorem 7. To see this, note that for \(k \ge 1\), one has

where the second inequality follows from Proposition 3.

Proposition 3 can be thought of as a generalization of Theorem 6 of [41]. In particular, this result implies an intriguing and important message. To see this, first note that, by taking \(s = O(1/L)\), Proposition 3 gives

which would not be valid if \(f(x_{k}) - f(x^{\star }) \ge c L\left\| x_{0} - x^{\star } \right\| ^{2}/k^2\) for a constant \(c > 0\). Thus, it is tempting to suggest that there might exist a faster convergence rate in the sense that

This faster rate is indeed achievable as we show next, though there are examples where (5.51) and \(f(x_{k}) - f(x^{\star }) = O(L\left\| x_{0} - x^{\star } \right\| ^{2}/k^2)\) are both satisfied but (5.52) does not hold (a counterexample is given in “Appendx D.1”).

Theorem 8

Under the same assumptions as in Proposition 3, taking the step size \(s = c'_{\alpha ,\beta }/L\), the iterates \(\{x_k\}_{k=0}^{\infty }\) generated by the generalized NAG-C (5.49) starting from any \(x_0 \ne x^\star \) satisfy

Scaled error \(s(k+1)^2 (f(x_k) - f(x^\star ))\) of the generalized NAG-C (5.49) with various \((\alpha , \beta )\). The setting is the same as the left plot of Fig. 4, with the objective \(f(x) = \frac{1}{2} \left\langle Ax, x\right\rangle + \left\langle b, x\right\rangle \). The step size is \(s = 10^{-1}\Vert A\Vert _{2}^{-1}\). The left shows the short-time behaviors of the methods, while the right focuses on the long-time behaviors. The scaled error curves with the same \(\beta \) are very close to each other in the short-time regime, but in the long-time regime, the scaled error curves with the same \(\alpha \) almost overlap. The four scaled error curves slowly tend to zero

Scaled error \(s(k+1)^2 (f(x_k) - f(x^\star ))\) of the generalized NAG-C (5.49) with various \((\alpha , \beta )\). The setting is the same as the right plot of Fig. 4, with the objective \(f(x) = \rho \log \left\{ \sum \limits _{i = 1}^{200} \mathrm{exp}\left[ \left( \left\langle a_{i}, x\right\rangle - b_{i} \right) /\rho \right] \right\} \). The step size is \(s = 0.1\). This set of simulation studies implies that the convergence in Theorem 8 is slow for some problems

Figures 5 and 6 present several numerical studies concerning the prediction of Theorem 8. For a fixed dimension n, the convergence in Theorem 8 is uniform over functions in \({\mathcal {F}}^1 = \cup _{L > 0} {\mathcal {F}}_L^1\) and, consequently, is independent of the Lipschitz constant L and the initial point \(x_0\). In addition to following the high-resolution ODE framework, the proof of this theorem reposes on the finiteness of the series in Proposition 3. See “Appendices D.1” and “D.2” for the full proofs of the proposition and the theorem, respectively.

In the literature, [5, 8] use low-resolution ODEs to establish the faster rate \(o(1/k^2)\) for the generalized NAG-C (5.49) in the special case of \(\beta = 1\). In contrast, our proof of Theorem 8 is more general and applies to a broader class of methods. Notably, [5, 8] show in addition that the iterates of NAG-C converge in this regime (see also [17]).

In passing, we make the observation that Proposition 3 reveals that \(\sum _{k = 1}^{\infty } s k^2 \left\| \nabla f(x_{k})\right\| ^{2} \le \frac{C'_{\alpha , \beta }\left\| x_{0} - x^{\star }\right\| ^{2}}{s}\), which would not hold if \(\min _{0 \le i \le k} \Vert \nabla f(x_i)\Vert ^2 \ge c \Vert x_0 - x^\star \Vert ^2/(s^2 k^3)\) for all k and a constant \(c > 0\). In view of the above, it might be true that the rate of the generalized NAG-C for minimizing the squared gradient norm can be improved to \(\min _{0 \le i \le k} \Vert \nabla f(x_i)\Vert ^2 = o\left( \frac{\Vert x_0 - x^\star \Vert ^2}{s^2k^3} \right) \). We leave the proof or disproof of this asymptotic result for future research.

6 Discussion

In this paper, we have proposed high-resolution ODEs for modeling three first-order optimization methods—the heavy-ball method, NAG-SC, and NAG-C. These new ODEs are more faithful surrogates for the corresponding discrete optimization methods than existing ODEs in the literature, thus serving as a more effective tool for understanding, analyzing, and generalizing first-order methods. Using this tool, we identified a term that we refer to as “gradient correction” in NAG-SC and in its high-resolution ODE, and we demonstrate its critical effect in making NAG-SC an accelerated method, as compared to the heavy-ball method. We also showed via the high-resolution ODE of NAG-C that this method minimizes the squared norm of the gradient at a faster rate than expected for smooth convex functions, and again the gradient correction is the key to this rate. Finally, the analysis of this tool suggested a new family of accelerated methods with the same optimal convergence rates as NAG-C.

The aforementioned results are obtained using the high-resolution ODEs in conjunction with a new framework for translating findings concerning the amenable ODEs into those of the less “user-friendly” discrete methods. This framework encodes an optimization property under investigation into a continuous-time Lyapunov function for an ODE and a discrete-time Lyapunov function for the discrete method. As an appealing feature of this framework, the transformation from the continuous Lyapunov function to its discrete version is through a phase-space representation. This representation links continuous objects such as position and velocity variables to their discrete counterparts in a faithful manner, permitting a transparent analysis of the three discrete methods that we studied.

There are a number of avenues open for future research using the high-resolution ODE framework. First, the discussion of Sect. 5 can carry over to the heavy-ball method and NAG-SC, which correspond to the high-resolution ODE, \({\ddot{X}}(t) + 2 \sqrt{\mu } {\dot{X}}(t) + \beta \sqrt{s} \nabla ^{2} f(X(t)) {\dot{X}}(t) + \left( 1 + \sqrt{\mu s} \right) \nabla f(X(t))= 0\), with \(\beta = 0\) and \(\beta = 1\), respectively. This ODE with a general \(0< \beta < 1\) corresponds to a new algorithm that can be thought of as an interpolation between the two methods. It is of interest to investigate the convergence properties of this class of algorithms. Second, we recognize that new optimization algorithms are obtained in [43] by using different discretization schemes on low-resolution ODE. Hence, a direction of interest is to apply the techniques therein to our high-resolution ODEs and to explore possible appealing properties of the new methods. Third, the technique of dimensional analysis, which we have used to derive high-resolution ODEs, can be further used to incorporate even higher-order powers of \(\sqrt{s}\) into the derivation of ODEs. This might lead to further fine-grained findings concerning the discrete methods. Last, the powerful toolbox developed for inertial dynamics might provide further insight in the analysis of our high-resolution ODEs [1, 2, 7, 9, 10].

More broadly, we wish to remark on possible extensions of the high-resolution ODE framework beyond smooth convex optimization in the Euclidean setting. In the non-Euclidean case, it would be interesting to derive a high-resolution ODE for mirror descent [43]. This framework might also admit extensions to non-smooth optimization with proximal methods and stochastic optimization, where the ODEs are replaced, respectively, by differential inclusions and stochastic differential equations. Finally, recognizing that the high-resolution ODEs are well-defined for nonconvex functions, we believe that this framework will provide more accurate characterization of local behaviors of first-order algorithms near saddle points [20, 26]. On a related note, given the centrality of the problem of finding an approximate stationary point in the nonconvex setting [16], it is worth using the high-resolution ODE framework to explore possible applications of the faster rate for minimizing the squared gradient norm that we have uncovered.

Notes

The gradient correction for NAG-C is \(\frac{k}{k+3} \cdot s (\nabla f(x_k) - \nabla f(x_{k-1}))\), as seen from the single-variable form of NAG-C: \(x_{k+1} = x_k + \frac{k}{k+3}(x_k - x_{k-1}) - s \nabla f(x_k) - \frac{k}{k+3} \cdot s (\nabla f(x_k) - \nabla f(x_{k-1}))\).

One can think of the Lyapunov function as a generalization of the idea of the energy of a system. Then the method studies stability by looking at the rate of change of this measure of energy.

We note that the form of the initial conditions is fixed for each ODE throughout the paper. For example, while \(x_0\) is arbitrary, X(0) and \(\dot{X}(0)\) must always be equal to \(x_0\) and \(-2\sqrt{s} f(x_0)/(1+\sqrt{\mu s})\), respectively, in the high-resolution ODE of the heavy-ball method. This is in accordance with the choice of \(\alpha = \frac{1 - \sqrt{\mu s}}{1 + \sqrt{\mu s}}\) and \(x_{1} = x_{0}-\frac{2s\nabla f(x_{0})}{1+\sqrt{\mu s}}\).

Note that for the heavy-ball method, the trajectories of the high-resolution ODE and the low-resolution ODE are almost identical.

Henceforth, the dependence of X on t is suppressed when clear from the context.

Note that we use the approximation \(\frac{x_{k+1} + x_{k -1} - 2x_{k}}{s} = {\ddot{X}}(t_{k}) + O(s)\), whereas [41] relies on the low-accuracy Taylor expansion \(\frac{x_{k+1} + x_{k -1} - 2x_{k}}{s} = {\ddot{X}}(t_{k}) + o(1)\) in the derivation of the low-resolution ODE of NAG-C.

To see this, recall that [41] shows that \(f(X(t)) - f(x^\star ) \le \frac{2\Vert x_0 - x^\star \Vert ^2}{t^2}\), where \(X = X(t)\) is the solution to (4.39) with \(s = 0\). Using the L-smoothness of f, we get \(\Vert \nabla f(X(t))\Vert ^2 \le 2L(f(X(t)) - f(x^\star )) \le \frac{4L\Vert x_0 - x^\star \Vert ^2}{t^2}\).

References

Alvarez, F.: On the minimizing property of a second order dissipative system in Hilbert spaces. SIAM J. Control Optim. 38(4), 1102–1119 (2000)

Alvarez, F., Attouch, H., Bolte, J., Redont, P.: A second-order gradient-like dissipative dynamical system with Hessian-driven damping: application to optimization and mechanics. J. Math. Pures Appl. 81(8), 747–779 (2002)

Arnold, V.I.: Mathematical Methods of Classical Mechanics, vol. 60. Springer Science and Business Media, Berlin (2013)

Attouch, H., Cabot, A.: Convergence rates of inertial forward-backward algorithms. SIAM J. Optim. 28(1), 849–874 (2018)

Attouch, H., Chbani, Z., Peypouquet, J., Redont, P.: Fast convergence of inertial dynamics and algorithms with asymptotic vanishing viscosity. Math. Progr. 168(1–2), 123–175 (2018)

Attouch, H., Chbani, Z., Riahi, H.: Rate of convergence of the Nesterov accelerated gradient method in the subcritical case \(\alpha \le 3\). arXiv preprint arXiv:1706.05671 (2017)

Attouch, H., Maingé, P.-E., Redont, P.: A second-order differential system with Hessian-driven damping; application to non-elastic shock laws. Differ. Equ. Appl. 4(1), 27–65 (2012)

Attouch, H., Peypouquet, J.: The rate of convergence of Nesterov’s accelerated forward-backward method is actually faster than \(1/k^{2}\). SIAM J. Optim. 26(3), 1824–1834 (2016)

Attouch, H., Peypouquet, J., Redont, P.: A dynamical approach to an inertial forward-backward algorithm for convex minimization. SIAM J. Optim. 24(1), 232–256 (2014)

Attouch, H., Peypouquet, J., Redont, P.: Fast convex optimization via inertial dynamics with Hessian driven damping. J. Differ. Equ. 261(10), 5734–5783 (2016)

Badithela, A., Seiler P.: Analysis of the heavy-ball algorithm using integral quadratic constraints. In: 2019 American Control Conference (ACC), pp. 4081–4085, IEEE, (2019)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imag. Sci. 2(1), 183–202 (2009)

Betancourt, M., Jordan, M.I., Wilson, A.C.: On symplectic optimization. arXiv preprint arXiv:1802.03653, (2018)

Bubeck, S.: Convex optimization: algorithms and complexity. Found. Trends Mach. Learn. 8(3–4), 231–357 (2015)

Bubeck, S., Lee, Y.T., Singh, M.: A geometric alternative to Nesterov’s accelerated gradient descent. arXiv preprint arXiv:1506.08187, (2015)

Carmon, Y., Duchi, J.C., Hinder, O., Sidford, A.: Lower bounds for finding stationary points I. arXiv preprint arXiv:1710.11606, (2017)

Chambolle, A., Dossal, C.: On the convergence of the iterates of the “fast iterative shrinkage/thresholding algorithm”. J. Optim. Theory Appl. 166(3), 968–982 (2015)

Diakonikolas, J., Orecchia, L.: The approximate duality gap technique: a unified theory of first-order methods. arXiv preprint arXiv:1712.02485, (2017)

Drusvyatskiy, D., Fazel, M., Roy, S.: An optimal first order method based on optimal quadratic averaging. SIAM J. Optim. 28(1), 251–271 (2018)

Du, S.S., Jin, C., Lee, J.D., Jordan, M.I., Singh, A., Poczos, B.: Gradient descent can take exponential time to escape saddle points. In: Advances in Neural Information Processing Systems, pp. 1067–1077, (2017)

Flammarion, N., Bach, F.: From averaging to acceleration, there is only a step-size. In: Conference on Learning Theory, pp. 658–695, (2015)

Gelfand, I.M., Tsetlin, M.L.: The method of“ ravines” (Russian). Uspekhi Matematicheskikh Nauk (Progres in Mathematics) 17, 103–131 (1962)

Ghadimi, S., Lan, G.: Accelerated gradient methods for nonconvex nonlinear and stochastic programming. Math. Progr. 156(1–2), 59–99 (2016)

Hu, B., Lessard, L.: Control interpretations for first-order optimization methods. In: 2017 American Control Conference (ACC), pp. 3114–3119. IEEE, (2017)

Hu, B., Lessard, L.: Dissipativity theory for Nesterov’s accelerated method. arXiv preprint arXiv:1706.04381, (2017)

Jin, C., Ge, R., Netrapalli, P., Kakade, S.M., Jordan, M.I.: How to escape saddle points efficiently. In: International Conference on Machine Learning (ICML), New York, ACM Press, (2017)

Kim, D., Fessler, J.A.: Optimizing the efficiency of first-order methods for decreasing the gradient of smooth convex functions. arXiv preprint arXiv:1803.06600, (2018)

Krichene, W., Bartlett, P.L.: Acceleration and averaging in stochastic descent dynamics. In: Advances in Neural Information Processing Systems, pp. 6796–6806, (2017)

Krichene, W., Bayen, A., Bartlett, P.L.: Accelerated mirror descent in continuous and discrete time. In: Advances in Neural Information Processing Systems, pp. 2845–2853, (2015)

Krichene, W., Bayen, A., Bartlett, P.L.: Adaptive averaging in accelerated descent dynamics. In: Advances in Neural Information Processing Systems, pp. 2991–2999, (2016)

Lessard, L., Recht, B., Packard, A.: Analysis and design of optimization algorithms via integral quadratic constraints. SIAM J. Optim. 26(1), 57–95 (2016)