Abstract

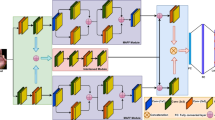

In this paper, a lightweight Intensive Feature Extrication Deep Network (ExtriDeNet) is proposed for precise hand gesture recognition (HGR). ExtriDeNet primarily consists of two blocks: the Intensive Feature Fusion Block (IFFB) and the Intensive Feature Assimilation Block (IFAB). IFFB incorporates filters at two scales, \(3\times 3\) and \(5\times 5\), to capture contextual features of hands. IFAB embeds the influential features of IFFB with two feature responses at opposite extremes, minute and high-level, drawn from two receptive fields generated by \(1\times 1\) and \(7\times 7\) filters, respectively. The combination of multiscale filters enriches the network with the most significant features and enhances its learnability. Thus, the proposed ExtriDeNet efficiently captures the distinctive features of different hand gesture classes and achieves high performance compared with state-of-the-art HGR approaches. The network is evaluated on the standard datasets MUGD, Finger Spelling, OUHands, NUS-I, NUS-II and HGR1 under both subject-dependent and subject-independent schemes.
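
To give a rough feel for what combining filter scales costs, the following sketch counts the learnable parameters of parallel convolution branches and checks that their outputs stay spatially aligned. It is not the published ExtriDeNet configuration: the 128-pixel input, the channel widths, fusion by concatenation, and feeding the IFFB output into the \(1\times 1\) and \(7\times 7\) branches are all assumptions made for illustration, and the helper names are hypothetical.

```python
# Illustrative sketch only. Input size, channel widths, and concatenation-based
# fusion are assumptions, not the configuration reported for ExtriDeNet.

def conv_params(kernel, c_in, c_out, bias=True):
    """Learnable parameters in one kernel x kernel convolution layer."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def same_padding(kernel):
    """Zero padding that preserves spatial size for an odd kernel at stride 1."""
    return (kernel - 1) // 2


def out_size(size, kernel, padding, stride=1):
    """Output width (or height) of a convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def parallel_branches(size, c_in, c_out, kernels):
    """Summarise parallel conv branches whose outputs are concatenated."""
    sizes = {out_size(size, k, same_padding(k)) for k in kernels}
    # Branches can only be fused if they agree on spatial size.
    assert len(sizes) == 1
    params = sum(conv_params(k, c_in, c_out) for k in kernels)
    return {"size": sizes.pop(), "channels": c_out * len(kernels), "params": params}


# IFFB-like stage: 3x3 and 5x5 branches on a hypothetical 128x128 RGB input.
iffb = parallel_branches(size=128, c_in=3, c_out=16, kernels=(3, 5))

# IFAB-like stage: 1x1 and 7x7 branches applied to the fused IFFB output
# (an assumed wiring, chosen only to make the example concrete).
ifab = parallel_branches(size=128, c_in=iffb["channels"], c_out=16, kernels=(1, 7))

print(iffb)
print(ifab)
```

Under these assumed widths, almost all of the second stage's parameters come from the \(7\times 7\) branch, while the \(1\times 1\) branch is nearly free. This is why the channel counts that feed the largest filter matter most when a multiscale design is meant to stay lightweight.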

Funding

Not applicable.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Bhaumik, G., Verma, M., Govil, M.C. et al. ExtriDeNet: an intensive feature extrication deep network for hand gesture recognition. Vis Comput 38, 3853–3866 (2022). https://doi.org/10.1007/s00371-021-02225-z

DOI: https://doi.org/10.1007/s00371-021-02225-z