Abstract

Ising machines based on nonlinear analog systems are a promising method to accelerate computation of NP-hard optimization problems. Yet, their analog nature is also causing amplitude inhomogeneity which can deteriorate the ability to find optimal solutions. Here, we investigate how the system’s nonlinear transfer function can mitigate amplitude inhomogeneity and improve computational performance. By simulating Ising machines with polynomial, periodic, sigmoid and clipped transfer functions and benchmarking them with MaxCut optimization problems, we find the choice of transfer function to have a significant influence on the calculation time and solution quality. For periodic, sigmoid and clipped transfer functions, we report order-of-magnitude improvements in the time-to-solution compared to conventional polynomial models, which we link to the suppression of amplitude inhomogeneity induced by saturation of the transfer function. This provides insights into the suitability of nonlinear systems for building Ising machines and presents an efficient way for overcoming performance limitations.

Similar content being viewed by others

Introduction

With the on-setting end of Moore’s law, we also start to see an end to the continuous growth of both performance and energy efficiency of conventional von-Neumann-based digital computers1, which is creating a particular challenge for performance and energy-intensive tasks such as optimization and machine learning2,3. Based on the well-known Ising spin model, Ising machines have emerged as a promising non-von-Neumann computing scheme that can accelerate computation of NP-hard optimization problems compared to conventional digital computers4,5. By mapping the cost function of optimization problems to an Ising Hamiltonian and implementing this Hamiltonian with a physical spin systems, calculation of optimal solutions can be achieved by the natural tendency of the spin system to evolve to its lowest energy state. Compared to conventional optimization algorithms such as simulated annealing, this natural analog computing concept can yield faster calculation and better energy efficiency6,7,8. Various types of Ising machines have been proposed based on optical, electronic, and quantum systems4,9,10,11,12,13. Optical systems, in particular, have attracted great attention due to their high analog bandwidth, favorable energy dissipation, and inherent parallelism7,14,15,16,17,18,19,20,21,22,23,24. Such systems have also been adapted into different physics-inspired algorithms that have demonstrated equal performance with state-of-the-art optimization algorithms25,26,27,28. A common feature among many of these Ising machines is that they are gain-dissipative nonlinear systems. Gain-dissipative systems generate spin states through bifurcation-induced bistability that results from the interplay of linear gain dynamics with a nonlinear transfer function29. Originally, nonlinear systems based on supercritical pitchfork bifurcations have been proposed, as they naturally incorporate the Ising model and have demonstrated efficient ground-state calculations for a variety of different optimization problems25,26,29,30,31. However, numerous Ising machine designs have since then demonstrated that a larger variety of different nonlinear systems can be used to implement Ising machines13,19,27.

This raises the fundamental question of what type of general nonlinear systems are capable of implementing Ising machines and which one is most suitable to achieve high computational performance in finding optimal solutions, i.e., short time-to-solution and high solution quality. A direct comparison between different Ising machines can be quite challenging though, due to the large differences in analog bandwidth and stability between different designs. Such engineering challenges have to lead to differing claims about the advantages of particular systems13,19,27, while little insight has been gained thus far into what features make a general nonlinear dynamical system suitable as an Ising machine. This is of particular interest since the analog nature of the spin system typically results in amplitude inhomogeneity, which is known to lead to an incorrect mapping of the spin system to the target Ising Hamiltonian and thus inhibits the ability to find optimal solutions29. While active feedback systems have been proposed to counteract this inhomogeneity25,26, such systems require dynamically control the gain of each individual spin, which creates a significant overhead and could negatively affect the analog bandwidth.

In order to effectively enhance the computational performance of analog spin systems, we consider the choice of the Ising machine’s nonlinear transfer function as an efficient way of mitigating amplitude inhomogeneity. By unifying different Ising machine concepts into a generalized nonlinear dynamical system that makes their computational performance directly comparable, we identify general features in the system’s nonlinear transfer function required for the implementation of Ising spins. Based on this, we simulate Ising machines with polynomial, periodic, sigmoid, and clipped functions. To understand the influence of the nonlinear transfer function on the computational performance of Ising machines, we perform various benchmarks of the different nonlinearities based on NP-hard MaxCut optimization problems. We find that, while conventional systems based on pitchfork normal forms are often unable to find optimal solutions due to amplitude inhomogeneity, clipped and sigmoid nonlinear transfer functions can reach higher solution qualities and yield order-of-magnitudes improvements in the time-to-solution for the same problems. We link this enhanced computational performance to the strong suppression of amplitude inhomogeneity by the nonlinear transfer function, which shows that errors induced by the analog system can in part be compensated by choosing an appropriate nonlinear system. Our findings propose a straightforward and efficient way for overcoming computational performance deterioration due to amplitude inhomogeneity and motivate that a much larger variety of physical system beyond the current state-of-the-art can be considered for future generations of Ising machines.

Results

Generalized Ising machine model

Ising machines are physical systems that implement coupled binary spins \({\sigma }_{i}=\left\{-1,1\right\}\), so that their energy or gain are equivalent to the Ising Hamiltonian

The spins are either in the spin up (σi = 1) or spin down state (σi = − 1) and are coupled through the symmetric spin coupling matrix Jij. In addition, biases bi can be applied to any spin. The computational capabilities of the Ising machines arise from the fact that the cost function of various NP-hard combinatorial optimization problems can be directly mapped to such an Ising Hamiltonian32 in a way that optimal solutions correspond to global energy minima of eq. (1). The natural tendency of Ising machines to evolve to their lowest energy configuration is then used to find optimal solutions. A crucial challenge in building Ising machines is to find physical systems with a high analog bandwidth that can implement large networks of spins. A common way to achieve this is by using gain-dissipative systems. These are nonlinear systems with an analog spin variable xi that exhibit a bifurcation structure with a symmetrical bistability.

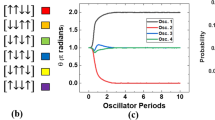

Figure 1a shows a typical bifurcation diagram of a bistable gain-dissipative system as a function of the bifurcation parameter. Below the bifurcation point, the system has only one stable fixed point S0 with an amplitude of xi(S0) = 0. Above the bifurcation point, this trivial fixed point becomes unstable and two new fixed points S1 and S2 emerge that lie symmetrically around S0. Figure 1b shows the time evolution of the spin amplitude xi when it is initially in the fixed point S0. When the system is below the bifurcation point (black curve), the amplitude xi is fluctuating around the trivial fixed point S0 due to the inherent noise of the system. Above the bifurcation point (orange and blue trace), the trivial fixed point becomes an unstable saddle, so that xi will either grow or decrease away from S0 until it ends up in one of the fixed points S1 or S2. This binary nature is exploited to implement the Ising model. By extracting the sign of the spin amplitude, xi can be mapped to an Ising spin through σi = sign(xi).

a Bifurcation diagram of a single gain-dissipative system as a function of the bifurcation parameter. Unstable fixed points are indicated by a dotted line. b Time evolution of a gain-dissipative system below the bifurcation point (black line) and above the bifurcation into the spin up (blue line) and spin down state (orange line) respectively. c Conceptual design of coupled gain-dissipative feedback systems to form an Ising machine. Spin states are generated in parallel gain-dissipative systems and coupled according to the coupling topology Jij. The states are then fed back to the gain-dissipative systems to close the feedback loop.

To implement the Ising Hamiltonian, Ising machines couple several of such analog Ising spins together. Figure 1c shows a schematic view of an Ising machine. Typically, an Ising machine is a continuous feedback system, where several bistable gain-dissipative systems are coupled with each other according to the spin coupling matrix Jij. The dynamics of such a feedback system can then be modeled by the dimensionless differential equation

Here, F is the nonlinear transfer function of the gain-dissipative systems and α and τ are the linear gain and the time delay of the feedback loop. The coupling between different spins occurs with the coupling strength β. To model noise, a Gaussian white noise term γζ is introduced with a zero mean and a standard deviation of γ. For simplicity, we neglect the time delay τ in the following. For optical and analog electronic systems in particular, this is often a reasonable assumption due to the short time of flight of light. The central questions that we are addressing in this work are how the nonlinear transfer function F has to be chosen in order to be suitable for Ising machines and how the particular choice of a nonlinear system affects the computational performance when solving optimization problems. In the following, we show how suitable dynamical systems can be constructed from general classes of nonlinear functions, namely polynomial, periodic, sigmoid, and clipped functions and we compare the computational performance of these different nonlinearities.

Ising machines based on polynomial functions

A basic way to generate an Ising spin system with polynomial transfer functions is the pitchfork normal form. The pitchfork normal form is inherent in various optical systems and has been used to describe Ising machines, e.g., for the classical approximation of degenerate optical parametric oscillators8,17,24, Kerr-nonlinear microring resonators21 and polariton condensates26. The nonlinear transfer function of Ising machines based on the supercritical pitchfork normal form is given by (arguments of F have been omitted for clarity)

and consists of a linear growth term with the linear gain α, a cubic saturation term and a coupling term with coupling strength β. In the following, we first consider the dynamics of the uncoupled system (β = 0). Figure 2a shows the right-hand side of eq. (3) at α = 1.1 for an isolated spin (β = 0) as a function of the spin amplitude xi. Characteristically, the transfer function contains three zero crossings, which correspond to three fixed points. S0 is at the origin and S1 and S2 symmetrically surround the origin. In between the fixed points, there is a local minimum to the left and a local maximum to the right of the origin, which results in an S-shaped transfer function. From linear stability analysis, it follows that the central fixed point is unstable, while the two surrounding fixed points are bistable.

a Nonlinear transfer function as a function of the spin amplitude for polynomial, sigmoid, periodic and clipped nonlinearities for isolated spins. b Bifurcation diagram and average saturation time for isolated spins for the nonlinearities in a as a function of the linear gain α. c Visualization of the inequality (5) for the case of homogenous spin amplitudes (δ = 0, solid line) and inhomogeneous amplitudes (δ > 0, dashed line). The dotted line indicates the left-hand-side of eq. (5) for an arbitrary α. Fixed points corresponding to global minima (ground states GSIsing) and local energy minima (excited states ESIsing) of the Ising model are indicated by circles. For homogeneous amplitudes, fixed points that lie below the dotted line fulfill the condition of eq. (5) and therefore exist.

The corresponding bifurcation diagram resulting from this transfer function is shown in the top panel of Fig. 2b. The uncoupled system possesses a pitchfork bifurcation with the bifurcation point at α = 1 (indicated by the red dashed line). Below the bifurcation point, only the trivial fixed point S0 is stable. Above the bifurcation point, the trivial solution becomes unstable and the two symmetrically bistable fixed points S1 and S2 arise. The amplitude of S1 and S2 is growing monotonically with α and scales as \(\left|{S}_{1,2}\right|\propto \sqrt{\alpha -1}\). In the bottom panel of Fig. 2b, we consider the dynamical timescale of this system as a function of α by measuring the saturation time tsat, i.e., the average time it takes the spin amplitude to grow/decrease to half of the fixed points’ amplitude. Directly at the bifurcation point, we observe critical slowing down of the temporal evolution of xi(t), where the saturation time increases exponentially towards the bifurcation33. This feature of the bifurcation can easily be understood by considering the shape of the transfer function F(xi(t)). As α approaches α = 1, the magnitude of the linear growth term in eq. (3) becomes vanishingly small so that the growth rate of the spin amplitude stagnates.

To understand the computational capabilities of Ising machines, we now consider a network of pitchfork normal forms that are coupled according to the coupling matrix Jij. The ability of eq. (3) to implement the Ising model (1) can be understood by deriving the Lyapunov function L({xi}). The Lyapunov function is a measure of the stability of a particular amplitude configuration {xi}, where stable configurations correspond to minima of L({xi}). For the Ising machine, the Lyapunov function is obtained by integrating the equation of motion (3) and summing over all spins:

In the case of homogeneous spin amplitudes (∣xi∣ = const.), we find a direct correspondence of the Lyapunov function to the Ising model. While the first two terms are constant regardless of the amplitude configuration, the last term is formally equivalent to the Ising Hamiltonian (1). By taking the sign of the spin amplitude \({\sigma }_{i}=\frac{| {x}_{i}| }{{x}_{i}}\), the Lyapunov function thus contains the same minima as the Ising model so that the ground state corresponds to the global minimum of L({xi}). Since by definition \(\frac{dL}{d{x}_{i}}=-F({x}_{i})\), the minima are stable fixed points of the coupled system. As we detail in the methods section, the condition for every fixed point to exist for homogeneous amplitudes is given by29

This inequality is visualized in Fig. 2c. The solid line depicts the r.h.s of eq. (5) as a function of the spin amplitude configuration {σi} for an exemplary Lyapunov function. The l.h.s. of eq. (5) for an arbitrary line gain α is indicated by the black dotted line. The inequality dictates that only fixed points corresponding to local minima below the dashed line exist. The Ising machine is, therefore, unable to reach any of the energy minima above the dotted line. This condition is exploited to effectively single out the ground state. As α increases from the region where only the trivial solution {xi} = 0 exists, the first non-trivial solution to exist is the ground state (GS), while suboptimal solutions are still nonexistent and can therefore be avoided.

However, it is important to note that the assumption of homogeneous amplitudes cannot be made in general29. Due to their analog nature, the spin amplitudes are inhomogeneously distributed around the fixed points with a standard deviation δ. As we show in the methods section, this leads to a modification of the coupling matrix Jij of the implemented Ising model so that it no longer corresponds to the intended target Hamiltonian (1). The dashed line in Fig. 2c exemplifies the influence of amplitude inhomogeneity (δ > 0) on the Lyapunov function. Compared to the homogeneous case (δ = 0), the inhomogeneity can induce a relative shift of the energy minima so that the ground state minimum is no longer the lowest energy configuration or is erased altogether. In this case, the lowest configuration corresponds to an excited state (ES), while the ground state can only be reached at much higher gain levels. This incorrect mapping of the target Ising model can thus significantly deteriorate or even diminish the probability of finding optimal solutions in optimization tasks. To mitigate this issue, a proposed approach is to modulate the gain of each individual spin individually to force the spins to one common amplitude25,26,34. However, this effectively doubles the number of dynamical equations and requires the addition of an active feedback system to perform the calculations of all gain coefficients, which can create a significant overhead and potentially reduce the analog bandwidth. In the following, we thus want to consider other types of nonlinearities and investigate how they can be used to directly reduce the negative effect of amplitude inhomogeneity.

Ising machines based on sigmoid functions

From the polynomial model (3), we find that the shape of the nonlinear transfer function is essential for generating analog Ising spins. Here, we investigate how such spin systems can be generated by mimicking the shape of the transfer function (3) with sigmoid functions. While sigmoid functions have so far not been considered for Ising machines, they are widely used in the context of Hopfield-Tank-networks and other neuromorphic systems to mimic the activation function of neurons35. Efficient ways of implementing them have been reported for both optical systems and electronic systems36,37,38,39,40,41. Sigmoid functions are characterized by an S-shaped nonlinearity and can be modeled by a variety of functions such as the logistic function or the Gompertz function. Here, we consider a sigmoid transfer function based on the hyperbolic tangent function

To facilitate a simple comparison to the polynomial model, we expand eq. (6) into a Taylor series to the third order for small spin amplitudes. As we derive in the methods section, in the weak coupling regime α ≫ β, this results in \(F({x}_{i})\approx (\alpha -1){x}_{i}-\frac{{\alpha }^{3}{x}_{i}^{3}}{3}+\beta {\sum }_{j}{J}_{ij}{x}_{j}\). Compared to the polynomial model, we recognize a close resemblance to eq. (3) with the same linear and nonlinear terms in xi as well as a linear coupling term. This suggests that the sigmoid model works as an approximation of the polynomial model when the system is close to the bifurcation point. We first consider the ability of the sigmoid model to implement uncoupled Ising spins (β = 0). When comparing the shape of this transfer function for an isolated spin in Fig. 2a for α = 1.1 to that of the polynomial model, we find close similarities in its shape and in the position of the fixed points. In the bifurcation diagram in Fig. 2b, we observe the same bifurcation point at α = 1 and a good agreement of the fixed points for α ≈ 1. For higher linear gain, the amplitude of the fixed points starts to deviate due to the different coefficient in the third-order polynomial term and due to the additional higher order terms. Particularly, the absolute amplitude of ∣S1,2∣ does not increase continuously but rather saturates for large α at ∣S1,2∣ → 1. Despite this, the saturation time in Fig. 2b agrees well with the polynomial model for all α. This is to be expected, since the saturation time tsat primarily depends on the linear growth term, which is identical between both models. When the spins are coupled, the linear coupling term ensures a good approximation of the Lyapunov function in eq. (4) to the Ising model for small amplitudes. While the additional higher-order terms can cause small deviations from the polynomial model, the linear coupling term remains dominant so that good mapping to the Ising model can be expected. Although the concept of using sigmoid functions has been considered in neural systems before35, its ability to generate a bistable bifurcation structure indicates that they are also inherently suitability for generating Ising machines.

Ising machines based on periodic functions

Periodic transfer functions form another set of nonlinearities that can be efficiently implemented with optical and electrical systems13,19. To generate an Ising spin system with periodic transfer functions, the general shape of the polynomial model (3) can be mimicked by appropriately shifting cosine or sine functions. In the following, we consider a nonlinear dynamical system based on a cos2 nonlinearity

The cos2 nonlinearity models Ising machines based on optical intensity modulators19 but is also equivalent to electronic oscillator-based Ising machines13. As with the sigmoid model, we expand the transfer function in a Taylor series to the third order. For small amplitudes and for the weak coupling regime, this results in \(F(x)\approx (\alpha -1)x-\frac{2{\alpha }^{3}{x}^{3}}{3}+\beta {\sum }_{j}{J}_{ij}{x}_{j}\). Similar to the sigmoid model, we find close resemblance to eq. (3), which suggests a good approximation close to the bifurcation point. Comparing the transfer function for an isolated spin to that of the polynomial model in Fig. 2a, we find that both systems closely resemble each other both in shape and in the position of the fixed points. In the bifurcation diagram in Fig. 2b, we observe good agreement with the polynomial model for the amplitude of the fixed points when the system is close to the bifurcation point. For higher values of α, the higher-order terms and the different scaling with α causes deviations. As for the sigmoid model, the absolute amplitude of the fixed points does not increase continuously but rather saturates at around ∣S1,2∣ → 0.5. Still, the saturation time tsat is identical to that of the polynomial model over the entire range of α in Fig. 2b, which is expected due to the matching linear growth term. For the coupled system, the linear coupling term ensures the correspondence of the Lyapunov function to the Ising model.

Ising machines based on clipped functions

As a last class of functions, we consider transfer functions that are clipped. Clipping is inherent in various electronic systems due to load limitations of components and has for example been observed in optoelectronic Ising machines19. Clipping has also been proposed as an efficient way to emulate Ising machines with digital hardware27. In the following, we consider a linear transfer function that is clipped to a maximum value of \(\left|{x}_{i}\right|\le 0.4\):

While the transfer function contains the same linear growth term and coupling term as the polynomial model, clipping is quite different from the nonlinear saturation terms discussed before. For isolated spins, the transfer function depicted in Fig. 2a only possesses one zero crossing and therefore only one fixed point. The function is discontinuous at the clipping levels with a sudden jump to zero beyond the clipping level, which pins the spins to the clipping level at ∣S1,2∣ = 0.4. This difference is clearly reflected in the bifurcation diagram in Fig. 2b. Although the clipped function possesses the same bifurcation point at α = 1 as the previous models for isolated spins, the amplitude of the fixed points does not increase or decrease with α, but rather immediately jumps to the clipping level at the bifurcation point. Hence, while the linear growth term and therefore the growth rate of the spin amplitude may be similar to the other models, the saturation amplitude can be quite different at the bifurcation. Setting the level to 0.4, therefore, ensures that the spin amplitude remains comparable to the other models at a gain close to the bifurcation point. As a consequence, the saturation time tsat in Fig. 2b remains very similar to that of the other models with the same critical slowing down at the bifurcation point. For the coupled system, the clipped model possesses the same linear coupling term as the polynomial model. Contrary to the sigmoid and periodic models, no additional nonlinear coupling terms are contained in the transfer function, which ensures the ability to implement the Ising model for homogeneous amplitudes.

Parameter optimization for inhomogeneous spin amplitudes

As described in the previous section, finding ground states with analog Ising machines follows the same approach for arbitrary Ising models in the case of homogenous spin amplitudes. Indeed, for homogeneous amplitudes, the ground state is the first and only solution to exist and can be reached by finding the bifurcation point, either by gradually increasing the gain or by analytical methods17,29. However, this simple scheme fails in general due to amplitude inhomogeneity. With amplitude inhomogeneity, the ground state may not always exist directly at the bifurcation point, which requires to scan the gain above the bifurcation point until the condition for existence is fulfilled. Furthermore, the ground state can become multistable with other excited states, which makes the ground-state search non-deterministic and requires to find operating regions with higher probability to find the ground state.

In order to optimize the performance of the Ising machines using the different transfer functions, we perform scans of the linear gain α, the coupling strength β and the noise strength γ. We optimize the performance in regard to the success rate Pa as well as the time-to-solution TTSa. The success rate Pa measures the probability of reaching a specific solution a (e.g., the ground state) at any point after initializing the Ising machine. The time-to-solution, defined as

measures the time required to reach the solution a with 99 percent probability. It is calculated from the success rate Pa and the average time Ta to reach that solution. Ta is calculated by tracking the energy during the evolution of the Ising machine and corresponds to the point where the solution a is first reached, either by converging or by a transient state. In Fig. 3a, we show exemplary success rates and time-to-solutions for reaching the ground state with the periodic model for a sweep of α and β. Here, Pa is estimated by repeatedly initializing the Ising machine and counting the number of instances in which the ground state has been reached. The implemented Ising model is the random graph g05100,5 contained in the Biq Mac graph library, which has a known ground state at EIsing = − 39742. We have estimated the bifurcation point of this graph from the point where the trivial solution becomes unstable, which is indicated in the parameter space by the red dashed line. Compared to the case of isolated spins in Fig. 2b, where the bifurcation point is at α = 1, the bifurcation point is shifted due to the coupling to the other spins. If β is below the bifurcation point, the success rate is zero as the system is unable to bifurcate and no solution besides the trivial one exists. The corresponding time-to-solution is thus undefined. The top panel of Fig. 3b shows an exemplary time series of the Ising energy in this parameter region for α = 0.8 and β = 0.01. At this point, the Ising energy randomly fluctuates around zero due to noise.

a Success rate and time-to-solution for the random graph g05100,5 for a scan of α and β. The pitchfork bifurcation point is indicated by the dashed line. The gray region indicates the points where the Ising machine is unable to converge to the ground state. b Exemplary time evolution of the Ising energy below the bifurcation point (top panel, corresponds to (i) in a) at α = 0.01 and above the bifurcation point at α = 0.15 for successful (middle panel, corresponds to (ii) in a) and unsuccessful (bottom panel) convergence to the ground state (GS). c Time-to-solution as a function of the coupling strength β for different noise γ strengths at α = 0.8. In (a) and (b), γ is fixed at γ = 0.005.

As β is increased to be directly above the bifurcation point, we observe that the success rate remains at zero. This indicates that the first solutions are excited states and that the ground state position is likely shifted due to amplitude inhomogeneity. Only for higher β, the success rate gradually increases and the fixed point corresponding to the ground state starts to exist. At this point, Pa is at around 10 percent, which indicates multistability with various other excited states. The middle panel of Fig. 3b shows an exemplary time evolution of the Ising energy for a successful calculation for α = 0.8 and β = 0.1. As the system is initialized, the Ising energy immediately decreases until the system eventually converges to a stable configuration at the ground state energy after t ≈ 70. As a comparison in Fig. 3b, we show a case in which the Ising machine reaches an excited state instead. After initially decreasing, the Ising energy converges to an energy of EIsing = − 349, which is only at 88 percent of the ground state. For higher β, the likelihood of this undesired convergence to excited states reduces and the success rate increases to around 40%. The corresponding time-to-solution decreases with this rising success rate from TTSGS ≈ 10000 to an optimum of TTSGS ≈ 1000, as fewer repeated runs of the Ising machine are required until the ground state is found. Eventually, for very high β, it becomes impossible again to reach the ground state and the success rate becomes zero. Figure 3a signifies the sensitivity of Ising machine performance to changes in α and β. For the g05100,5 graph, there is a clear gap between the bifurcation point and the region where the ground state can be found. Furthermore, the operating point with the lowest time-to-solution is for values of β that are further away from the point where the ground state first starts to exist. For each nonlinear transfer function, a sweep of α and β is, therefore, necessary to determine the optimal operating point.

We also consider the influence of the noise strength γ on the overall performance. Contrary to recent high noise level proposals for Ising machines with discrete spin systems43,44, we choose a noise level that is much smaller than the amplitude of the fixed points S1,2 ≫ γ, which corresponds to experimental realizations of analog Ising machines. This ensures that the noise is not strong enough to switch the configuration of individual spins and therefore guarantees that the Ising machine always converges to a stable configuration. The noise will therefore not directly influence the linear stability and the overall success rate. To assess whether the noise has any influence on the performance, we perform sweeps of γ over two orders of magnitude. In Fig. 3c, we measure the time-to-solution for different γ as a function of β for the g05100,5 graph at α = 0.8. Due to the non-deterministic nature of the ground state search, fluctuations of the time-to-solution within a factor of 2 around the average are observed for all noise levels. Interestingly, although γ is changed over two orders of magnitude, we cannot identify a clear change in the time-to-solution. We have verified this result for the different nonlinearities with various Ising models and parameter configurations and observe the same trend. We conclude that as long as the noise level is sufficiently small, we can assume that γ has a neglectable influence on the overall computational performance. In all following simulations, we have therefore fixed γ to a constant value of γ = 0.005.

Benchmark of different nonlinearities

To consider the effect of the nonlinear transfer function on the computational performance of Ising machines, we benchmark the different systems with various MaxCut optimization problems. MaxCut is a task to maximize the cut number

when separating a graph structure into two parts and is known to be an NP-hard problem45. For the benchmarks, we use instances contained in the Biq Mac and the SuiteSparse Matrix Collection libraries. From the Biq Mac library, we consider the g05N,m subset of random undirected graphs with an edge density of 50 percent, for which the ground states are known42. Similar to Fig. 3a, we test all 10 different instances for N = 60, N = 80, and N = 100 respectively by performing sweeps of α and β and measuring the time-to-solution to reach the ground state TTSGS. In Fig. 4, we consider the best time-to-solution that was achieved by the different nonlinearities during the scan of α and β. For the periodic, sigmoid and clipped system, the time-to-solution is shown as a ratio to the time-to-solution achieved by the polynomial model, whose absolute value is shown as a reference (absolute values for all models are given in the supplementary table 1). While all nonlinearities are able to converge to the ground state, we observe drastic differences for some specific problems. In these cases, the polynomial model typically performs worse than the other models with a time-to-solution that is one or two orders of magnitude slower, which is beyond the noise-induced fluctuations in Fig. 3c. We find that spins in all models still evolve on very similar timescales (similar to the isolated spins in Fig. 2b). However, we observe significantly lower success rates for the polynomial model that cause large differences in performance.

Time-to-solution of the periodic, sigmoid and clipped model relative to the polynomial model for the Biq Mac g05 MaxCut benchmark set with N = 60 (a), N = 80 (b), and N = 100 spins (c). The absolute value for the time-to-solution for the polynomial model is indicated by the numbers above the bars for the polynomial model.

To better understand these differences, we consider the Biq Mac instance g05100,5 as an example, where the time-to-solution differs by around one order of magnitude between the polynomial model and the other nonlinearities. In Fig. 5, we perform scans of the coupling strength β through the parameter space from below to above the bifurcation and analyze the fixed points that the systems converge to. We select α = 0.8, as it corresponds to a region where all models have been able to find the ground state with success rates close to the optimum during the scans of α and β. In the top panels, we calculate the success rate to reach the three highest cut values for the different models (Fig. 5a–d). We find that the polynomial model in Fig. 5a is unable to reach the ground state at any point in the scan. We have verified this by initializing the polynomial model in the correct ground state configuration and observe that the system instead converges to excited states of the implemented target Ising Hamiltonian. We have also tested other instances in the α − β parameter scan, where the ground state was reached by the polynomial model. We have found that these instances are transient states that pass through the ground state before converging to an excited state. The probability of reaching the ground state through these transient states is at just 2 percent per run and thus significantly lower than the success rate of any of the other model, hence causing the high TTS in Fig. 4. When considering the fixed points of the polynomial model, we are therefore unable to find any point in the parameter space at which the fixed point corresponding to the ground state exists. For the other models on the other hand, the ground state exists for increasing β and the systems are all able to converge to the optimal solution at a much higher success rate. We, therefore, find that the nonlinear transfer function can considerably affect the ability to correctly implemented the desired Ising model.

Success rate (top), standard deviation δ of the fixed point (middle), and amplitude distribution (bottom) as a function of β for the polynomial (a), sigmoid (b), periodic (c) and clipped model (d). The success rate shows the probability of reaching the three highest cut values with the ground state at C = 1436. The standard deviation and the amplitude distribution have been calculated once the system reaches a steady state and are the average over the three highest cut values. The implemented MaxCut problem is g05100,5 with α fixed at α = 0.8.

Since the failure of the mapping to the target Ising Hamiltonian is typically associated with amplitude inhomogeneity of the fixed point29, we quantify the amount of inhomogeneity for the different models. We measure the standard deviation \(\delta (| {x}_{i}^{* }| )\) of the absolute value of the amplitude \(| {x}_{i}^{* }|\) for the fixed points corresponding to the three highest cut values. In the middle panel of Fig. 5, we show \(\delta (| {x}_{i}^{* }| )\) as a function of β and compare it to the success rate in the upper row of Fig. 5 for each model (a–d). For the polynomial model, \(\delta (| {x}_{i}^{* }| )\) continuously increases with β and eventually doubles relative to the value at the bifurcation point. In the lower panel of Fig. 5a, we show exemplary amplitude distributions for the polynomial model, which are smoothly and broadly distributed around the fixed points of the isolated spins in Fig. 2b. We observe how the distribution becomes broader for high β as amplitude inhomogeneity increases. For the other nonlinearities however, this trend is entirely reversed. While all nonlinearities start at a similar level of amplitude inhomogeneity at the bifurcation, the sigmoid, periodic and clipped models all exhibit a decrease of \(\delta (| {x}_{i}^{* }| )\) with β. The distributions become squeezed for high β so that almost all of the spins become pinned to a level corresponding to the saturation levels in Fig. 2b and amplitude inhomogeneity mostly vanishes. This is particularly pronounced for the clipped models, where the distribution is the narrowest of all the transfer functions. When comparing \(\delta (| {x}_{i}^{* }| )\) with the success rate, we observe a clear correlation between the ability to find the ground state and the amount of amplitude inhomogeneity across the different models. As the inhomogeneity decreases, the implemented Ising model becomes closer to the target Hamiltonian and the ability to find the optimal solution is restored. Interestingly, while the amount of inhomogeneity is comparable between the different models, the success rate is not identical. Although the clipped model has an overall lower inhomogeneity, the success rate is highest for the periodic model. The lower success rate for the clipped model indicates that the spectrum of multistable excited states is different, either in the total number of states or in the size of their attractors. This shows that, while the suppression of inhomogeneity ensures the existence of the ground state at very similar values for β for the different models in Fig. 5, there can still be differences for the excited states. These differences are likely caused by the additional nonlinear terms in the Lyapunov function that are also discussed in previous sections and in the methods section.

Overall, we find that the suppression of amplitude inhomogeneity leads to an overall improvement of the time-to-solution over the polynomial model across the different problems in Fig. 4. Still, the computational performance advantage over the polynomial model only manifests itself for some of the problems. We attribute this to the varying difficulty of the different graphs contained in the Biq Mac library. For randomly generated graphs with spin numbers limited to N = 100, there is a rather high probability of generating easy instances that can be solved in polynomial time46. We expect these easy instances to be more robust against faulty mapping due to amplitude inhomogeneity, while the differences in computational performance are more pronounced for difficult problems. To test this, we perform MaxCut benchmarks with graphs contained in the SuiteSparse Matrix Collection47. Compared to the Biq Mac library, the SuiteSparse Matrix Collection is a collection of sparse graphs with both unweighted (Jij = − 1) as well as bimodal edges (\({J}_{ij}=\left\{-1,1\right\}\)). The library contains both random and geometric topologies with spin numbers between N = 800 and N = 5000. Many of the instances contained in the SuiteSparse Matrix Collection are considered difficult and exact solutions are often not known.

In Fig. 6a, we show the relative distance ΔC = 100(1 − C/Copt) in percent of the best solution obtained by the different nonlinearities C from the best-known value reported in literature Copt48,49. We find that all systems achieve solutions that are within just a few percent of or at the best known solution. Remarkably, this makes them comparable to state-of-the-art optimization methods such as simulated annealing or branch-and-bound algorithms without having to employ complex annealing schedules to further increase the solution quality. Considering the performance differences between the nonlinearities however, we can again observe that the polynomial model performs worse with an average distance of ΔCpoly = 1.3 percent from the best-known solution. The periodic and the clipped model achieve an average distances of ΔCper = 1.1 and ΔCclip = 0.7 respectively, while the best performance is achieved by the sigmoid nonlinearity with an average distance of ΔCsig = 0.6. This performance difference is especially striking for the set of bimodal problems with random connectivity and non-uniform node degree (G18, G19, G20, G21, G39, G40, G41, G42), where we observe improvements of up to four percent in the cut value over the polynomial model. For such bimodal problems, the probability of finding easy instances is significantly smaller than for unweighted graphs48, so that they can generally be assumed to be more difficult problems. We can thus observe a clear advantage in computational performance for such difficult problems that is gained by suppressing amplitude inhomogeneity through the nonlinear transfer function.

a Distance of the best solution obtained by the polynomial, sigmoid, periodic, and clipped model from the best known solution for the SuiteSparse Matrix Collection benchmark tasks. b Time-to-solution of the periodic, sigmoid and clipped model relative to the polynomial model. The absolute value for the time-to-solution for the polynomial model is indicated by the numbers above the bars for the polynomial model. In cases where the polynomial model reaches only 95 percent of the best solution, TTS95 is shown instead (indicated by brackets around the TTS). Cases, where the polynomial model fails to reach 95 percent of the best solution, are indicated by NA.

This advantage is also reflected in the best time-to-solution obtained by the different models, which is shown in Fig. 6b relative to the polynomial model (absolute values for all models are given in Supplementary Tables 2 and 3). Since the ground state is not always reached for all problems, we consider the time-to-solution to reach 98 percent of the ground state TTS98. For instances where the solution of the polynomial model is more than 2 percent away from the best-known solution, TTS95 is shown instead (indicated by brackets around the time-to-solution). Cases, where the solution quality of the polynomial model is more than five percent away from the optimum, are not considered in the following and indicated by TTS = NA in Fig. 6b. Similar to the Biq Mac library in Fig. 4, we find that the polynomial model performs worse on average, while the sigmoid and the clipped model perform the best. For various problems, improvements of up to three orders of magnitude in the time-to-solution are obtained over the polynomial model. The largest differences are observed for graphs with a random connectivity and non-uniform node density, which can generally be considered to contain more difficult instances46. This again indicates a link between problem hardness and susceptibility to amplitude inhomogeneity. For uniform node densities and non-random connections on the other hand, which typically contain more easy instances46, we observe a lower susceptibility to amplitude inhomogeneity.

Discussion

We show how different gain-dissipative Ising machine designs can be unified in a single nonlinear feedback system that is fully described by three dimensionless parameters. Based on the generic pitchfork normal form, we describe how analog Ising spins can be generated by mimicking the general shape of the nonlinear transfer function of the polynomial model and discuss the performance of Ising machines based on periodic, and clipped functions. By analyzing the Lyapunov function of the different nonlinear systems, we identify their ability to encode global energy minima of the Ising model as fixed points, whose stability is controlled by the linear gain. We find that different existing Ising machine concepts are in principal equally capable of implementing optimization problems and also demonstrate that sigmoid functions can be used as an alternative way of implementing analog spins that have not been considered in the context of Ising machines yet. Since the physical implementation of sigmoid functions has been investigated intensively as activation functions of artificial neurons, this creates an interesting link between Ising machines and neuromorphic computing concepts.

By performing benchmarks based on NP-hard MaxCut problems, we investigate the influence of the nonlinear transfer function on the quality of the solutions and the time to reach them. For both small and large-scale problems, we report significant differences in the computational performance for the different nonlinearities. While systems based on the pitchfork normal form may not be able to find the ground state, Ising machines using periodic, clipped, and sigmoid nonlinearities offer better solution quality and a shorter time-to-solution for the same problems. Compared to the polynomial model, we observe improvements of up to three orders of magnitude in the time-to-solution and up to four percent in the solution quality relative to the optimal solution. With all systems evolving at the same dynamical timescale, we identify faulty mapping to the target Ising Hamiltonian as the cause for these performance differences. Due to this faulty mapping, local minima of the Ising Hamiltonian are stabilized while the ground state solution becomes destabilized. We link these mapping errors to amplitude inhomogeneity, which is caused by the analog nature of the spin system. Periodic, sigmoid, and clipped transfer functions differ from the polynomial model in that they saturate for a large gain. This causes squeezing of the amplitude distribution and reduces inhomogeneity as the gain is increased. We observe a direct correlation between this reduced inhomogeneity and the ability to find optimal solutions, which leads us to conclude that suppression of amplitude inhomogeneity through the transfer function can significantly aid in enhancing the computational performance of analog spin systems.

This provides an intuitive explanation to some of the performance differences that have been reported for existing Ising machine concepts. In line with recent reports13,19,27, we find a clear computational advantage for systems with a saturable nonlinearity. The high sensitivity of computational performance to the nonlinear transfer function, therefore, strengthens the choice of such saturable nonlinearities for the design of analog Ising machines instead of systems based on pitchfork normal forms, while also motivating the search for other suitable nonlinear systems for future generations of Ising machines. Furthermore, saturable nonlinearities present an intriguing alternative to current approaches that aim to eliminate amplitude inhomogeneity by controlling the linear gain of each individual spin to force them to the same amplitude. While such systems have shown significant improvements in the solution quality25,26,50,51, the necessity to control the gain of each spin creates a significant overhead and requires the addition of an active feedback system to the analog Ising machine. Using the transfer function to pin the spins to the same level instead provides a completely passive alternative that could retain the speed advantage of a fully analog system. This approach is also compatible with recently proposed annealing schemes that could further enhance the solution quality48,52,53. Finally, beyond the considerations in this work, the sensitivity of computational performance of Ising machines to the shape of the transfer function could be further exploited to design nonlinear systems that are optimized for performance in specific optimization tasks. Combined with optical systems that are able to implement arbitrary nonlinear transfer functions37,38, this would bring Ising machines closer to becoming fast and efficient accelerators for difficult optimization tasks.

Methods

Condition for existence of fixed points

In the following, we show the derivation of condition eq. (5) both for homogeneous and inhomogeneous distributions of the fixed point amplitude \({x}_{i}^{* }\) for the polynomial model29. To consider both cases, we assume that \({x}_{i}^{* }\) can be written as \({x}_{i}^{* }=x{\sigma }_{i}\), where x is the absolute value of the homogeneous spin amplitude (x ≥ 0) and σi is the spin state. To introduce inhomogeneity, we include an additional pre-factor νi for each spin so that \({x}_{i}^{* }={\nu }_{i}x{\sigma }_{i}\). νi has a mean of \(\bar{{\nu }_{i}}=1\) and follows a distribution with the standard deviation δ. In case of a homogeneous amplitude distribution, δ = 0 and all νi are equal to one. The fixed points of the system can be found by setting the equation of motion equal to zero:

In the case of x > 0, summation over all spins leads to:

In order for a fixed point besides the trivial solution (x = 0) to exist, the r.h.s. has to be larger than zero (x > 0). This leads to the following inequality that describes the condition of existence for fixed points:

In the case of homogenous spin amplitudes (νj = νi), this corresponds to the inequality in eq. (5). The right-hand side of eq. (13) contains the Ising Hamiltonian (1) with the effective coupling matrix \(J^{\prime} ={J}_{ij}\frac{{\nu }_{j}}{{\nu }_{i}}\). This shows that for a homogeneous amplitude distribution, the implemented Hamiltonian is equivalent to the target Ising Hamiltonian, since \(J^{\prime} ={J}_{ij}\). For inhomogeneous amplitude distributions on the other hand, the implemented Ising Hamiltonian differs from the target Hamiltonian since every matrix element Jij is modified by a factor of \(\frac{{\nu }_{j}}{{\nu }_{i}}\).

Approximation of the nonlinear transfer function for the sigmoid and periodic models

To enable a direct comparison of the equations of motions for the periodic and sigmoid models against the polynomial model, eq. (6) and eq. (7) are approximated with polynomials. The polynomial approximation of the transfer function for the sigmoid model

follows from a third-order Taylor expansion. Here, we consider a multivariable Taylor series for the spin amplitude of the isolated system xi and the spins injected by coupling with other spins xj for small values (xi ≈ xj ≈ 0). For simplicity, we consider the sum of ∑jJijxj as a single variable. The resulting Taylor series to the third order is:

For the parameter scans of α and β, we assume that 0 ≤ α, β ≤ 1. Furthermore, we consider the weak coupling regime where α ≫ β. This means that the terms containing β contribute significantly less to the transfer function then the term only containing α, since α3 ≫ α2β ≫ αβ2 ≫ β3. While these higher-order terms can cause a deviation of the Lyapunov function for the intended Ising Hamiltonian, we can assume that these deviations are small in the weak coupling regime and that the linear coupling term β∑jJijxj is dominant. For the final transfer function, we, therefore, neglect the third-order terms in xi, xj containing β. In a similar fashion, the Taylor expansion of the transfer function for the periodic model results in:

As for the sigmoid model, we neglect the third-order terms in xi, xj containing β.

Numerical methods

Simulations of the time evolution for the differential equations (3), (6), (7), and (8) are performed using the Euler method. For the simulations, a stepwitdth of Δt = 0.1 is chosen. The number of total time steps is constant for all simulations and was chosen to be long enough to ensure convergence to a steady state (3000 iterations for the BiqMac library, 5000 for the SuiteSparse Matrix library). At the beginning of each simulation, the system is initialized in the trivial fixed point {xi} = 0 and left to evolve with α and β at constant values during the entire evolution. The time to reach a given solution (e.g., the ground state) for each simulation is evaluated by tracking the Ising energy during the evolution and taking the point when the system first reaches the desired solution (either by converging or by a transient state). For the scans of α and β, the parameters were varied in the range 0 ≤ α, β ≤ 1. For each parameter point, the success rate and the time-to-solution were assessed from 50 independent simulations.

Data availability

The authors declare that all relevant data are included in the manuscript. Additional data are available from the corresponding author upon reasonable request.

References

Theis, T. N. & Wong, H.-S. P. The end of Moore’s law: a new beginning for information technology. Comput. Sci. Eng. 19, 41–50 (2017).

Strubell, E., Ganesh, A., McCallum, A. Energy and policy considerations for deep learning in NLP. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics 1, 3645-3650 (2019).

Andrae, A. & Edler, T. On global electricity usage of communication technology: trends to 2030. Challenges 6, 117–157 (2015).

Johnson, M. W. et al. Quantum annealing with manufactured spins. Nature 473, 194–198 (2011).

Yamamoto, Y. et al. Coherent Ising machines- optical neural networks operating at the quantum limit. npj Quantum Inform. 3, 49 (2017).

Denchev, V. S. et al. What is the computational value of finite-range tunneling? Phys. Rev. X 6, 031015 (2016).

Inagaki, T. et al. A coherent Ising machine for 2000-node optimization problems. Science 354, 603–606 (2016).

Haribara, Y., Utsunomiya, S. & Yamamoto, Y. Computational principle and performance evaluation of coherent Ising machine based on degenerate optical parametric oscillator network. Entropy 18, 151 (2016).

Salathé, Y. et al. Digital quantum simulation of spin models with circuit quantum electrodynamics. Phys. Rev. X 5, 021027 (2015).

Barends, R. et al. Digitized adiabatic quantum computing with a superconducting circuit. Nature 534, 222–226 (2016).

Kim, K. et al. Quantum simulation of frustrated Ising spins with trapped ions. Nature 465, 590–593 (2010).

Yamaoka, M. et al. A 20k-spin Ising chip to solve combinatorial optimization problems with CMOS annealing. IEEE J. Solid-State Circuits 51, 303–309 (2016).

Chou, J., Bramhavar, S., Ghosh, S. & Herzog, W. Analog coupled oscillator based weigthed Ising machine. Sci. Rep. 9, 14786 (2019).

Utsonomiya, S., Takata, K. & Yamamoto, Y. Mapping of Ising models onto injection-locker laser systems. Opt. Express 19, 18091 (2011).

Shoji, T., Aihara, K. & Yamamoto, Y. Quantum model for coherent Ising machines: stochastic differential equations with replicator dynamics. Phys. Rev. A 96, 053833 (2017).

Babaeian, M. et al. A single shot coherent Ising machine based on a network of injection-locked multicore lasers. Nat. Commun. 10, 3516 (2019).

Wang, Z., Marandi, A., Wen, K., Byer, R. L. & Yamamoto, Y. Coherent Ising machine based on degenrate optical parametric oscillators. Phys. Rev. A 88, 063853 (2013).

McMahon, P. L. et al. A fully programmable 100-spin coherent Ising machine with all-to-all connections. Science 354, 614–617 (2016).

Böhm, F., Verschaffelt, G. & Van der Sande, G. A poor-man’s coherent Ising machine based on opto-electronic feedback system for solving optimization problems. Nat. Commun. 10, 3538 (2019).

Berloff, N. G. et al. Realizing the classical XY Hamiltonian in polariton simulators. Nat. Mater. 16, 1120–1126 (2017).

Tezak, N. et al. Integrated coherent Ising machines based on self-phase modulation in microring resonators. IEEE J. Sel. Top. Quantum Electron. 26, 5900115 (2020).

Pierangeli, D., Marcucci, G. & Conti, C. Large-scale photonic ising machine by spatial light modulation. Phys. Rev. Lett. 122, 213902 (2019).

Prahbu, M. et al. Accelerating recurrent Ising machines in photonic integrated circuits. Optica 7, 551 (2020).

Okawachi, Y. et al. Demonstration of chip-based coupled degenerate optical parametric oscillators for realizing nanophotonic spin-glass. Nat. Commun. 11, 4119 (2020).

Leleu, T., Yamamoto, Y., McMahon, P. & Aihara, K. Destabilization of local minima in analog spin systems by correction of amplitude heterogeneity. Phys. Rev. Lett. 122, 040607 (2020).

Kalinin, K. & Berloff, N. G. Global optimization of spin Hamiltonians with gain-dissipative systems. Sci. Rep. 8, 17791 (2018).

Tiunov, E. S., Ulanov, A. E. & Lvovsky, A. I. Annealing by stimulating the coherent Ising machine. Opt. Express 27, 10288 (2019).

Goto, H., Tatsumura, K. & Dixon, A. R. Combinatorial optimization by simulating adiabatic bifurcations in nonlinear Hamiltonian systems. Sci. Adv. 5, eaav2372 (2019).

Leleu, T., Yamamoto, Y., Utsunomiya, S. & Aihara, K. Combinatorial optimization using dynamical phase transitions in driven-dissipative systems. Phys. Rev. E 95, 022118 (2017).

Böhm, F. et al. Understanding dynamics of coherent Ising machines through simulation of large-scale 2D Ising models. Nat. Commun. 9, 5020 (2018).

Inagaki, T. et al. Large-scale Ising spin network based on degenerate parametric oscillators. Nat. Photonics 10, 415–419 (2016).

Lucas, A. Ising formulation of many NP-hard problems. Front. Phys. 2, 1–15 (2014).

Kuehn, C. A mathematical framework for critical transitions: bifurcations, fast-slow systems and stochastic dynamics. Phys. D: Nonlinear Phenom. 240, 1020–1035 (2011).

Kanao, T. & Goto, H. High-accuracy Ising machine using Kerr-nonlinear parametric oscillator with local four-body interaction. npj Quantum Inform. 7, 18 (2021).

Hopfield, J. J. & Tank, D. W. "Neural" computation of decisions in optimization problems. Biol. Cybern. 52, 141–152 (1985).

Miscuglio, M. et al. All-optical nonlinear activation function for photonic neural networks. Optical Mater. Express 8, 3851 (2018).

Williamson, I. A. D. et al. Reprogrammable electro-optic nonlinear activation functions for optical neural networks. IEEE J. Sel. Top. Quantum Electron. 26, 7700412 (2020).

Jha, A., Huang, C. & Prucnal, P. R. Reconfigurable all-optical nonlinear activation function for neuromorphic photonics. Opt. Lett. 45, 4819 (2020).

Mourgias-Alexandris, G. et al. An all-optical neuron with sigmoid activation function. Opt. Express 27, 9620 (2019).

Lu, C., Shi., B., Chen, L. Analogue circuit realization of a programmable sigmoidal function and its derivative for on-chip BP learning, In Proceedings of IEEE Asia-Pacific Conference on Circuits and System. Electronic Communication Systems (Cat. No.00EX394), 626–629 (2000).

Tsmots, I., Skorokhoda, O., Rabyk, V. Hardware implementation of sigmoid activation functions using FPGA. In Proceedings of IEEE 15th International Conference on the Experience of Designing and Application of CAD Systems (CADSM), 34–38 (2019).

Rendl, F., Rinaldi, G., Wiegele, A. A Branch and bound algorithm for max-cut based on combining semidefinite and polyhedral relaxations. In Integer Programming and Combinatorial Optimization, vol. 4513 LNCS, 295–309 (Springer Heidelberg, Berlin, Heidelberg, 2007).

Roques-Carmes, C. et al. Heuristic recurrent algorithm for photonic Ising machines. Nat. Commun. 11, 249 (2020).

Pierangeli, D., Marcucci, G., Brunner, D. & Conti, C. Noise-enhanced spatial-photonic Ising machine. Nanophotonics 9, 4109–4116 (2020).

Garey, M., Johnson, D. S. Computers and Intractability: A Guide to the Theory of NP-Completeness (W. H. Freeman & Co, New York, NY, USA 1990).

Kalinin, K., Berloff, N. G. Complexity continuum within Ising formulations of NP problems. http://arxiv.org/abs/2008.00466 (2020).

Davis, T. A. & Hu, Y. The University of Florida sparse matrix collection. ACM Trans. Math. Softw. 38, 1–25 (2011).

Wang, T., Roychowdhurry, J. OIM: oscillator-based ising machines for solving combinatorial optimization problems. In Lecture Notes in Computer Science (including Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 11493 LNCS, 232-256 (2019).

Ma, F. & Hao, J.-K. A multiple search operator heuristic for the max-k-cut problem. Ann. Oper. Res. 248, 365–403 (2017).

Leleu, T. et al. Scaling advantage of nonrelaxational dynamics for high-performance combinatorial optimization. http://arxiv.org/abs/2009.04084 (2020).

Goto, H. et al. High-performance combinatorial optimization based on classical mechanics. Sci. Adv. 7, eabe7953 (2021).

Pierangeli, D., Marcucci, G. & Conti, C. Adiabatic evolution on a spatial-photonic Ising machine. Optica 7, 1535 (2020).

Mills, K., Ronagh, P. & Tamblyn, I. Finding the ground state of spin Hamiltonians with reinforcement learning. Nat. Mach. Intell. 2, 509–517 (2020).

Acknowledgements

We acknowledge financial support from the Research Foundation Flanders (FWO) under the grants G028618N, G029519N, and G006020N as well as the Hercules Foundation and the Research Council of the Vrije Universiteit Brussel. T.V.V. would like to thank R. Beausoleil for his mentorship.

Author information

Authors and Affiliations

Contributions

F.B. performed the simulations and analyzed the data. F.B., T.V.V., G.V., and G.V.d.S. discussed the results and wrote the paper.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interest.

Additional information

Peer review information Communications Physics thanks the anonymous reviewers for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Böhm, F., Vaerenbergh, T.V., Verschaffelt, G. et al. Order-of-magnitude differences in computational performance of analog Ising machines induced by the choice of nonlinearity. Commun Phys 4, 149 (2021). https://doi.org/10.1038/s42005-021-00655-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s42005-021-00655-8

This article is cited by

-

Overdamped Ising machine with stochastic resonance phenomena in large noise condition

Nonlinear Dynamics (2024)

-

Recent progress on coherent computation based on quantum squeezing

AAPPS Bulletin (2023)

-

Large-scale coherent Ising machine based on optoelectronic parametric oscillator

Light: Science & Applications (2022)

-

Noise-injected analog Ising machines enable ultrafast statistical sampling and machine learning

Nature Communications (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.