Abstract

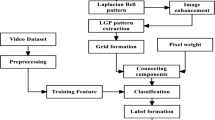

Water is present in a wide variety of environmental scenes, and its detection enables a broad range of applications, such as outdoor/indoor surveillance, maritime surveillance, scene understanding, content-based video retrieval, and automated driving of unmanned ground and aerial vehicles. However, detecting water is a delicate problem because it can appear in very different forms and can simultaneously exhibit several textural, chromatic, and reflectance properties. This diversity of appearances makes it difficult for general dynamic texture recognition methods to assign all water surfaces to a single class. In this work, we propose a new approach, better suited to this problem, for the recognition and segmentation of water in videos. The approach starts with a preprocessing phase that homogenizes the appearance of the different aquatic surfaces by eliminating differences in coloration, reflection, and illumination. In this phase, a pixel-wise comparison is introduced, leading to a unidirectional binarization. Two segmentation steps are then applied: a preliminary segmentation and a final segmentation. In the preliminary segmentation, candidate regions are generated and then classified using our spatiotemporal descriptor, which analyzes both the spatial and the temporal behavior of textures on a local scale through a sliding window. In the final segmentation, a superpixel segmentation is applied to regularize the classification results. The proposed approach has been tested on both the recent Video Water database and the DynTex database, and it has been compared with similar work and with other dynamic texture and material recognition methods. The results show that the proposed approach is more effective than these methods, and its low computational cost makes it suitable for real-time applications.
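The final segmentation step regularizes the preliminary classification with superpixels. The abstract does not state the regularization rule, so the sketch below assumes a simple majority vote: every pixel of a superpixel receives the label held by most of that superpixel's pixels. The function name `regularize_by_superpixels` and the input format (two equally sized 2-D lists, a binary water/non-water label map and a superpixel index map such as one produced by SLIC) are illustrative assumptions, not the authors' implementation.

```python
from collections import Counter, defaultdict
from typing import List


def regularize_by_superpixels(labels: List[List[int]],
                              superpixels: List[List[int]]) -> List[List[int]]:
    """Hypothetical sketch: replace each pixel's label with the majority
    label of the superpixel it belongs to (assumed rule, not the paper's)."""
    if len(labels) != len(superpixels) or any(
        len(a) != len(b) for a, b in zip(labels, superpixels)
    ):
        raise ValueError("label map and superpixel map must have the same shape")

    # Count how many pixels of each label fall inside each superpixel.
    votes = defaultdict(Counter)
    for label_row, sp_row in zip(labels, superpixels):
        for label, sp in zip(label_row, sp_row):
            votes[sp][label] += 1

    # Pick the most frequent label per superpixel; on a tie, the smaller label wins.
    winner = {
        sp: min(counts.items(), key=lambda kv: (-kv[1], kv[0]))[0]
        for sp, counts in votes.items()
    }

    # Paint every pixel with the winning label of its superpixel.
    return [[winner[sp] for sp in sp_row] for sp_row in superpixels]


# 1 = water, 0 = non-water (noisy preliminary classification)
labels = [
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [1, 1, 0, 0],
]
# Two superpixels: left half (id 0) and right half (id 1)
superpixels = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
print(regularize_by_superpixels(labels, superpixels))
# [[1, 1, 0, 0], [1, 1, 0, 0], [1, 1, 0, 0]]
```

The isolated misclassified pixels are absorbed by their superpixel's majority label, which is the smoothing effect that superpixel regularization aims for; a weighted vote using classifier confidences would be a natural variant.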

Ethics declarations

CONFLICT OF INTEREST

The authors declare that they have no conflict of interest.

COMPLIANCE WITH ETHICAL STANDARDS

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Anass Mançour-Billah was born and brought up in Morocco. He received his Bachelor of Engineering degree in Computer Science from the National School of Applied Sciences, Ibn Zohr University, Agadir, Morocco, in 2014. He is currently pursuing a Ph.D. in Image and Signal Processing at Ibn Zohr University. His research interests include image processing, computer vision, machine learning, and optimization.

Abdenbi Abenaou was born in Casablanca, Morocco. He graduated from the Bonch-Bruevich St. Petersburg State University of Telecommunications, Saint Petersburg, Russia, and obtained his Ph.D. degree in Technical Sciences from the St. Petersburg State Electrotechnical University “LETI” in 2005. He is currently a professor in the Department of Software Engineering at the National School of Applied Sciences, Agadir, Morocco. His research interests lie in the field of signal and image processing.

El Hassan Ait Laasri is an assistant professor of Electronics at Ibn Zohr University, Agadir, Morocco. His research interests lie in the field of instrumentation and signal and image processing, using both classical processing methods and artificial intelligence techniques. During his thesis, he worked on two monitoring systems: a structural health monitoring system (Italy) and a seismic monitoring system (Morocco). Several tasks were developed for these systems based on a variety of signal processing methods and artificial intelligence techniques, such as neural networks and fuzzy logic.

Driss Agliz was born and brought up in Morocco. He studied Physics and Chemistry and received his BSc degree (Licence Es-Sciences Physique) in Physics from Cadi Ayyad University, Marrakech, Morocco, in 1983. He then joined the University of Rennes I, France, where he obtained an MSc degree (DEA: Diplôme des Etudes Approfondies) in materials science in 1984. He continued his research and obtained a PhD degree from the University of Rennes I, France, in 1987. Dr. D. Agliz started his teaching career in 1987 as an Assistant Professor in the Faculty of Science at Ibn Zohr University, and he defended a “Doctorat d’Etat” thesis in 1997. He is currently a Full Professor at the National School of Applied Sciences, Ibn Zohr University, Agadir, Morocco. He has worked in several fields, such as signal processing and materials science, and he supervises PhD, MSc, and BSc students in the fields of signal processing and renewable energies.

About this article

Cite this article

Mançour-Billah, A., Abenaou, A., Laasri, E.H. et al. Water Recognition and Segmentation in the Environment Using a Spatiotemporal Approach. Pattern Recognit. Image Anal. 31, 295–312 (2021). https://doi.org/10.1134/S1054661821020127

DOI: https://doi.org/10.1134/S1054661821020127