Abstract

In this paper we propose a stable finite difference method for solving fractional reaction–diffusion systems in a two-dimensional domain. The space discretization is implemented by the weighted shifted Grünwald difference (WSGD), which results in a stiff system of nonlinear ordinary differential equations (ODEs). This system is solved by an efficient compact implicit integration factor (cIIF) method. The stability of the second-order cIIF scheme is proved in the discrete \(L^{2}\)-norm, and we also prove the second-order convergence of the proposed scheme. Numerical examples are given to demonstrate the accuracy, efficiency, and robustness of the method.


1 Introduction

The space-fractional reaction–diffusion equations, in which the integer-order differential operator is replaced by a corresponding fractional one, have gained great popularity due to their wide applications in physics [1], finance [2], and biology [3–6]. For many processes with anomalous diffusion, these fractional-order models provide a more adequate description than their integer-order counterparts. This is because fractional-order operators are nonlocal, so the models can describe phenomena that exhibit memory and hereditary properties [7].

In this paper, we consider the following two-dimensional space-fractional reaction–diffusion equation

where \(1<\alpha _{x},\alpha _{y}\leq 2\) are the space fractional orders and \(K_{x},K_{y}>0\) are the diffusion constants. The Riesz fractional derivatives \(\frac{\partial ^{\alpha _{x}}u}{\partial |x|^{\alpha _{x}}}\) and \(\frac{\partial ^{\alpha _{y}}u}{\partial |y|^{\alpha _{y}}}\) are defined as follows:

where \({}_{a}{D}_{x}^{\alpha _{x}}\) and \({}_{x}{D}_{b}^{\alpha _{x}}\) are the left and right Riemann–Liouville fractional derivatives, defined as

Here \(\Gamma (\cdot )\) denotes the standard Gamma function.
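For reference, the standard definitions of these operators for \(1<\alpha <2\) are recalled below; we assume the definitions referred to above coincide with them (the y-direction operators follow by exchanging the roles of x and y and of \([a,b]\) and \([c,d]\)). In the limit \(\alpha =2\) the Riesz derivative reduces to the classical second derivative \(\partial ^{2}u/\partial x^{2}\).

```latex
\begin{aligned}
\frac{\partial^{\alpha}u}{\partial|x|^{\alpha}}
  &= -\frac{1}{2\cos\frac{\pi\alpha}{2}}
     \left({}_{a}D_{x}^{\alpha}u + {}_{x}D_{b}^{\alpha}u\right),\\
{}_{a}D_{x}^{\alpha}u(x,y,t)
  &= \frac{1}{\Gamma(2-\alpha)}\frac{\partial^{2}}{\partial x^{2}}
     \int_{a}^{x}\frac{u(\xi,y,t)}{(x-\xi)^{\alpha-1}}\,d\xi,\\
{}_{x}D_{b}^{\alpha}u(x,y,t)
  &= \frac{1}{\Gamma(2-\alpha)}\frac{\partial^{2}}{\partial x^{2}}
     \int_{x}^{b}\frac{u(\xi,y,t)}{(\xi-x)^{\alpha-1}}\,d\xi.
\end{aligned}
```

The factor \(-1/(2\cos \frac{\pi \alpha }{2})\) is positive for \(1<\alpha \leq 2\), and it is the same factor that appears in the discrete operators \(\delta _{x}^{\alpha }\) and \(\delta _{y}^{\alpha }\) of Sect. 2.1.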

Owing to the applications of fractional differential equations in many fields, it is important to develop effective methods for these fractional models. Fractional differential equations have been solved and analyzed by various analytical methods, such as the monotone iterative method [8], the Green function method [9], the Laplace transform method [10], the homotopy perturbation transform method [11], and other methods [12–16]. However, the analytical solutions available for special fractional differential equations take the form of trigonometric series, which are very difficult to evaluate. For this reason, the development of numerical methods has become an important research topic.

In recent years, various classical numerical methods have been extended to fractional reaction–diffusion equations, such as the finite difference method (FDM) [17–20], the finite element method (FEM) [21–24], and the spectral method [25–27]. Finite difference methods are among the most popular numerical methods for fractional reaction–diffusion equations because they are easy to implement. Meerschaert and Tadjeran proposed the first-order shifted Grünwald formula in [18]. Building on this work, Tian et al. developed the weighted shifted Grünwald difference (WSGD) formulas [20]. Ortigueira first proposed the so-called fractional centered difference scheme in [19], and Çelik and Duman then analyzed this approximation and applied it to fractional diffusion equations [17].

The space discretization of a fractional reaction–diffusion equation leads to a system of nonlinear ordinary differential equations (ODEs). The need for accurate and efficient simulation of such systems has led many researchers to design various time discretization methods. The most notable among them is the alternating direction implicit (ADI) technique. However, because the fractional operators are nonlocal, the resulting discretization matrix is full, so the Thomas algorithm for tridiagonal matrices is no longer applicable. Moreover, ADI methods are limited to second-order accuracy in time. In this paper, an efficient compact implicit integration factor (cIIF) method [28, 29] is developed. By using a compact representation of the matrix that approximates the differential operator, the cIIF method applies matrix exponential operations sequentially in each spatial direction. As a result, the exponential matrices that must be computed and stored are only as large as those of the corresponding 1D problem. For two- or three-dimensional problems, the cIIF method is therefore more efficient in both storage and CPU time.
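To make the storage argument concrete, the sketch below compares the memory needed for dense double-precision matrix exponentials on the \(256\times 256\) grid used in Example 2. It assumes that a standard IIF method exponentiates the operator acting on the vectorized unknown, of size \((N_{x}-1)(N_{y}-1)\), whereas cIIF only needs the two one-dimensional exponentials; the function names are hypothetical.

```python
# Back-of-the-envelope storage comparison (assumptions: dense float64 storage,
# one exponential per operator, interior unknowns only).
BYTES_PER_DOUBLE = 8


def iif_storage_bytes(nx: int, ny: int) -> int:
    """Dense exponential of the full operator on the vectorized unknown."""
    n = (nx - 1) * (ny - 1)
    return n * n * BYTES_PER_DOUBLE


def ciif_storage_bytes(nx: int, ny: int) -> int:
    """Two dense 1D exponentials of sizes (nx-1)^2 and (ny-1)^2."""
    return ((nx - 1) ** 2 + (ny - 1) ** 2) * BYTES_PER_DOUBLE


if __name__ == "__main__":
    nx = ny = 256
    print(f"IIF : {iif_storage_bytes(nx, ny) / 1e9:.1f} GB")
    print(f"cIIF: {ciif_storage_bytes(nx, ny) / 1e6:.2f} MB")
```

For \(N_{x}=N_{y}=256\) this gives roughly 33.8 GB for the single full exponential versus about 1 MB for the two cIIF exponentials.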

The main contributions of this work are as follows:

- We provide an efficient approach for computing solutions of two-dimensional fractional reaction–diffusion equations, even with millions of grid points.
- We prove that the second-order cIIF scheme is stable in the discrete \(L^{2}\)-norm.
- We prove the second-order convergence of the proposed scheme, and numerical tests verify the theoretical analysis.

The rest of the paper is organized as follows. In Sect. 2 we introduce some notation, discretize the two-dimensional fractional reaction–diffusion equations into a system of nonlinear ODEs in matrix form, and present the cIIF time discretization scheme. In Sect. 3 we prove the stability and convergence of the proposed scheme. In Sect. 4 we solve various fractional reaction–diffusion equations to demonstrate the convergence rates and the efficiency of the proposed scheme. Finally, we summarize our conclusions in Sect. 5.

2 Numerical method

2.1 Compact finite difference method

The computational domain \(\Omega =[a,b]\times [c,d]\) is discretized by the grid points \((x_{j},y_{k})=(a+jh_{x},c+kh_{y})\), where \(h_{x}=(b-a)/N_{x}\), \(h_{y}=(d-c)/N_{y}\), \(0\leq j\leq N_{x}\), and \(0\leq k\leq N_{y}\). We first introduce the second-order WSGD method derived in [20] to approximate the Riemann–Liouville space fractional derivatives. The essential idea of this approximation is to use a weighted average of shifted Grünwald formulas to eliminate the low-order leading terms in the asymptotic expansion of the truncation error. The WSGD formulas for the left and right Riemann–Liouville fractional derivatives in the x-direction are defined as

for \(j=1,\dots ,N_{x}-1\), \(k=1,\dots ,N_{y}-1\). The second-order coefficients \(\omega _{k}^{(\alpha )}\) in (5) are given by

with

We refer the reader to the paper [20] for the detailed proof of the truncation error expression.
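The coefficients can be generated recursively. The sketch below assumes the \((p,q)=(1,0)\) variant of [20], i.e., \(\omega _{0}^{(\alpha )}=\frac{\alpha }{2}g_{0}^{(\alpha )}\) and \(\omega _{k}^{(\alpha )}=\frac{\alpha }{2}g_{k}^{(\alpha )}+\frac{2-\alpha }{2}g_{k-1}^{(\alpha )}\) for \(k\geq 1\), where \(g_{k}^{(\alpha )}=(-1)^{k}\binom{\alpha }{k}\) are the Grünwald weights. This variant is consistent with the one-point shift in the operator \(\delta _{x}^{\alpha }\) below, but the function name is ours.

```python
import numpy as np


def wsgd_weights(alpha: float, n: int) -> np.ndarray:
    """Return omega_0, ..., omega_n of the second-order WSGD formula.

    Assumes the (p, q) = (1, 0) weighting: lambda_1 = alpha/2, lambda_0 = (2 - alpha)/2.
    """
    g = np.empty(n + 1)
    g[0] = 1.0
    for k in range(1, n + 1):
        # Grünwald weights g_k = (-1)^k binom(alpha, k), via the standard recursion
        g[k] = (1.0 - (alpha + 1.0) / k) * g[k - 1]
    omega = np.empty(n + 1)
    omega[0] = 0.5 * alpha * g[0]
    omega[1:] = 0.5 * alpha * g[1:] + 0.5 * (2.0 - alpha) * g[:-1]
    return omega


if __name__ == "__main__":
    print(wsgd_weights(1.5, 5))
```

For \(\alpha =2\) the weights reduce to \(1,-2,1,0,\ldots \), the classical second-order central difference stencil.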

Based on the discretization of Riemann–Liouville fractional derivatives, the Riesz fractional derivatives in the x-direction can be discretized as

where \(\delta _{x}^{\alpha }u(x_{j},y_{k})= \frac{-1}{2\cos \frac{\pi \alpha }{2}}\frac{1}{h_{x}^{\alpha }} ( \sum_{l=0}^{j+1}\omega _{l}^{(\alpha )}u(x_{j-l+1},y_{k}) + \sum_{l=0}^{N_{x}-j+1}\omega _{l}^{(\alpha )}u(x_{j+l-1},y_{k}) )\). Similarly, the Riesz fractional derivative in the y-direction can be discretized as follows:

where \(\delta _{y}^{\alpha }u(x_{j},y_{k})= \frac{-1}{2\cos \frac{\pi \alpha }{2}}\frac{1}{h_{y}^{\alpha }} ( \sum_{l=0}^{k+1}\omega _{l}^{(\alpha )}u(x_{j},y_{k-l+1}) + \sum_{l=0}^{N_{y}-k+1}\omega _{l}^{(\alpha )}u(x_{j},y_{k+l-1}) )\).
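With homogeneous Dirichlet boundary values (\(u=0\) at \(j=0\) and \(j=N_{x}\)), the operator \(\delta _{x}^{\alpha }\) acts on the interior values as a symmetric matrix: the left sum contributes a lower Hessenberg Toeplitz matrix W with entries \(W_{jl}=\omega _{j-l+1}^{(\alpha )}\), and the right sum contributes \(W^{T}\). The sketch below assembles this matrix under the same \((p,q)=(1,0)\) weighting assumption as in [20]; the function names are hypothetical. Multiplying it by \(K_{x}\) gives a candidate for the matrix A used below.

```python
import numpy as np


def wsgd_weights(alpha: float, n: int) -> np.ndarray:
    """WSGD weights omega_0..omega_n, assuming the (p, q) = (1, 0) variant."""
    g = np.empty(n + 1)
    g[0] = 1.0
    for k in range(1, n + 1):
        g[k] = (1.0 - (alpha + 1.0) / k) * g[k - 1]
    omega = np.empty(n + 1)
    omega[0] = 0.5 * alpha * g[0]
    omega[1:] = 0.5 * alpha * g[1:] + 0.5 * (2.0 - alpha) * g[:-1]
    return omega


def riesz_wsgd_matrix(alpha: float, m: int, h: float) -> np.ndarray:
    """Matrix of delta^alpha on m interior nodes (zero Dirichlet values at both ends)."""
    omega = wsgd_weights(alpha, m)
    W = np.zeros((m, m))
    for j in range(m):
        # Left sum: node i enters row j with weight omega_{j-i+1}, for i <= j+1.
        for i in range(min(j + 2, m)):
            W[j, i] = omega[j - i + 1]
    coef = -1.0 / (2.0 * np.cos(np.pi * alpha / 2.0) * h**alpha)
    # The right sum is the mirror image of the left sum, i.e. W transposed.
    return coef * (W + W.T)


if __name__ == "__main__":
    D = riesz_wsgd_matrix(1.5, 63, 1.0 / 64)
    print(D.shape, np.allclose(D, D.T), np.linalg.eigvalsh(D).max())
```

For \(\alpha =2\) the result coincides with the standard matrix \(\mathrm{tridiag}(1,-2,1)/h^{2}\), and for \(1<\alpha \leq 2\) its eigenvalues are negative, in line with Lemma 3.1.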

Defining the grid function \(U_{j,k}(t)=u(x_{j},y_{k},t)\), the semidiscrete compact difference scheme for problem (1) can be written as

where \(R_{j,k}(t)=O(h^{2})\), with \(h=\max \{h_{x},h_{y}\}\), denotes the spatial truncation error. Omitting the truncation term \(R_{j,k}(t)\) and replacing the grid function \(U_{j,k}(t)\) with its numerical approximation \(u_{j,k}(t)\), we obtain the following difference scheme:

To apply a time discretization method to the ODEs (10), we now rewrite them in matrix form. We define the numerical solution in the following matrix form:

For a solution matrix \(\mathbf{U}\in \mathbb{R}^{(N_{x}-1)\times (N_{y}-1)}\), the discrete \(L^{2}\)-norm of U is defined as

Then we have

where \(\|\mathbf{U}\|_{F}\) is the Frobenius norm. The 2-norm of a matrix U is defined as \(\|\mathbf{U}\|_{2}=\sigma _{\max }(\mathbf{U})\), where \(\sigma _{\max }(\mathbf{U})\) is the largest singular value of U. If U is a normal matrix, then \(\|\mathbf{U}\|_{2}=\lambda _{\max }(\mathbf{U})\), where \(\lambda _{\max }(\mathbf{U})\) denotes the largest absolute value of the eigenvalues of U (the spectral radius).
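These norms are easy to evaluate numerically. The sketch below assumes the discrete \(L^{2}\)-norm takes the usual form \(\|\mathbf{U}\|^{2}=h_{x}h_{y}\sum_{j,k}u_{j,k}^{2}\), so that \(\|\mathbf{U}\|=\sqrt{h_{x}h_{y}}\,\|\mathbf{U}\|_{F}\); the helper names are hypothetical.

```python
import numpy as np


def discrete_l2_norm(U: np.ndarray, hx: float, hy: float) -> float:
    """sqrt(hx*hy*sum_jk u_jk^2), i.e. sqrt(hx*hy) times the Frobenius norm."""
    return float(np.sqrt(hx * hy) * np.linalg.norm(U, "fro"))


def two_norm(U: np.ndarray) -> float:
    """Matrix 2-norm: the largest singular value."""
    return float(np.linalg.norm(U, 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    U = rng.standard_normal((6, 4))
    print(discrete_l2_norm(U, 0.1, 0.2), two_norm(U))
    # For a normal (here symmetric) matrix, the 2-norm equals the spectral radius.
    S = U.T @ U - 3.0 * np.eye(4)
    print(two_norm(S), np.max(np.abs(np.linalg.eigvalsh(S))))
```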

Introducing the \(M\times M\) difference matrix \(\mathbf{D}_{M}^{\alpha }\) as follows:

the difference scheme (8) and (9) can be written in the following matrix form:

Let \(\mathbf{A}=K_{x}\mathbf{D}_{N_{x}-1}^{\alpha _{x}}\) and \(\mathbf{B}=K_{y}\mathbf{D}_{N_{y}-1}^{\alpha _{y}}\). Then the ODEs (10) can be written in the following matrix form:

where \(\mathbf{F}(\mathbf{U})\) is an \((N_{x}-1)\times (N_{y}-1)\) matrix with each element defined as \(F(u_{j,k})\).
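To see why the matrix form pays off, assume (15) has the standard cIIF form \(\frac{d\mathbf{U}}{dt}=\mathbf{A}\mathbf{U}+\mathbf{U}\mathbf{B}+\mathbf{F}(\mathbf{U})\) [28]. Stacking the columns of U into a vector turns the linear part into the large operator \(\mathbf{I}\otimes \mathbf{A}+\mathbf{B}^{T}\otimes \mathbf{I}\), whose two terms commute, so its exponential factors into the two small exponentials \(e^{\mathbf{A}\Delta t}\) and \(e^{\mathbf{B}\Delta t}\). The following NumPy/SciPy sketch checks both identities on random matrices; the helper names are ours.

```python
import numpy as np
from scipy.linalg import expm


def vec(X: np.ndarray) -> np.ndarray:
    """Column-stacking vectorization (Fortran order)."""
    return X.reshape(-1, order="F")


def kron_operator(A: np.ndarray, B: np.ndarray) -> np.ndarray:
    """Matrix L such that vec(A U + U B) = L vec(U)."""
    mx, my = A.shape[0], B.shape[0]
    return np.kron(np.eye(my), A) + np.kron(B.T, np.eye(mx))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    A = rng.standard_normal((4, 4))
    B = rng.standard_normal((3, 3))
    U = rng.standard_normal((4, 3))
    L = kron_operator(A, B)  # 12 x 12, versus the 4 x 4 and 3 x 3 factors
    dt = 0.1
    # The linear part of the matrix ODE equals the Kronecker operator on vec(U).
    print(np.allclose(vec(A @ U + U @ B), L @ vec(U)))
    # exp(L dt) vec(U) equals vec(e^{A dt} U e^{B dt}).
    print(np.allclose(expm(L * dt) @ vec(U), vec(expm(A * dt) @ U @ expm(B * dt))))
```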

2.2 Compact implicit integration factor method

Now we apply the cIIF method to solve (15). Let T be the final time, let \(\Delta t=T/N\) be the time step, and let \(t_{n}=n\Delta t\), \(0\leq n\leq N\). The numerical approximation of the solution matrix \((u_{j,k}(t))\) at time \(t_{n}\) is denoted by \(\mathbf{U}_{n}\). To construct the cIIF methods for (15), we multiply (15) by the integration factor \(e^{-\mathbf{A}t}\) from the left and by \(e^{-\mathbf{B}t}\) from the right, and then integrate over one time step from \(t_{n}\) to \(t_{n+1}\) to obtain

Then we approximate the integrand in (16) by using the (\(r-1\))th order Lagrange interpolation polynomial with interpolation points at \(t_{n+1},t_{n},\ldots ,t_{n-r+2}\), namely

where

The resulting scheme has truncation error \(O(\Delta t^{r})\), i.e., it is rth-order accurate in time. In this paper we take \(r=2\) and use the following second-order compact implicit integration factor (cIIF2) scheme:

The local truncation error of (18) can be written in matrix form as \(\mathbf{E}=(E_{jk})_{(N_{x}-1)\times (N_{y}-1)}\), with entries \(E_{jk}=O(\Delta t^{3}+\Delta t\,h^{2})\).
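A minimal sketch of the time stepping, assuming that (18) is the standard cIIF2 scheme of [28], \(\mathbf{U}_{n+1}=e^{\mathbf{A}\Delta t}(\mathbf{U}_{n}+\frac{\Delta t}{2}\mathbf{F}(\mathbf{U}_{n}))e^{\mathbf{B}\Delta t}+\frac{\Delta t}{2}\mathbf{F}(\mathbf{U}_{n+1})\), which follows from (16) by linear interpolation of the integrand at \(t_{n}\) and \(t_{n+1}\) (r = 2, i.e., the trapezoidal rule). The two exponentials are computed once, and the implicit nonlinear term is resolved here by simple fixed-point iteration, which converges when \(L\Delta t/2<1\) for a Lipschitz constant L. This solver choice and the function name are ours, not necessarily the authors'.

```python
import numpy as np
from scipy.linalg import expm


def ciif2_solve(U0, A, B, F, dt, nsteps, tol=1e-12, maxit=100):
    """Integrate dU/dt = A U + U B + F(U) with the (assumed) cIIF2 scheme."""
    EA = expm(A * dt)  # (Nx-1) x (Nx-1), computed once
    EB = expm(B * dt)  # (Ny-1) x (Ny-1), computed once
    U = np.array(U0, dtype=float)
    for _ in range(nsteps):
        # Explicit part: e^{A dt} (U_n + dt/2 F(U_n)) e^{B dt}
        G = EA @ (U + 0.5 * dt * F(U)) @ EB
        # Implicit part: solve V = G + dt/2 F(V) by fixed-point iteration
        V = G.copy()
        for _ in range(maxit):
            V_new = G + 0.5 * dt * F(V)
            converged = np.max(np.abs(V_new - V)) < tol
            V = V_new
            if converged:
                break
        U = V
    return U


if __name__ == "__main__":
    # Hypothetical setup: 1D second-difference matrices and F(u) = -u^2.
    m = 31
    h = 1.0 / (m + 1)
    lap = (np.diag(-2.0 * np.ones(m)) + np.diag(np.ones(m - 1), 1)
           + np.diag(np.ones(m - 1), -1)) / h**2
    x = np.linspace(h, 1.0 - h, m)
    U0 = np.outer(np.sin(np.pi * x), np.sin(np.pi * x))
    U1 = ciif2_solve(U0, 1e-2 * lap, 1e-2 * lap, lambda U: -U**2, dt=0.01, nsteps=100)
    print(U1.max())
```

Only \(e^{\mathbf{A}\Delta t}\) and \(e^{\mathbf{B}\Delta t}\) are stored, so the cost per step is dominated by two dense matrix products of the one-dimensional sizes.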

3 Stability and convergence

We now study the linear stability of the numerical scheme (18) when the reaction term \(f(u)\) satisfies a Lipschitz continuity condition. The analysis relies on the following lemmas.

Lemma 3.1

(See [30])

When \(1<\alpha \le 2\), the WSGD operator matrix \(\mathbf{D}_{M}^{\alpha }\) in (13) is a symmetric and negative definite matrix.

Lemma 3.2

Let A and B be arbitrary \(N\times N\) square matrices. We have the following inequalities:

Proof

Write the matrix B as \(\mathbf{B}=(\mathbf{b}_{1},\mathbf{b}_{2},\ldots ,\mathbf{b}_{N})\), where the \(\mathbf{b}_{i},i=1,2,\ldots ,N\), are the columns of B. Then we have

Taking square roots completes the proof. □
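A quick numerical sanity check of Lemma 3.2, assuming its inequalities are \(\|\mathbf{A}\mathbf{B}\|_{F}\leq \|\mathbf{A}\|_{2}\|\mathbf{B}\|_{F}\) and \(\|\mathbf{B}\mathbf{A}\|_{F}\leq \|\mathbf{B}\|_{F}\|\mathbf{A}\|_{2}\). The column argument in the proof does not actually require B to be square, which matters because the exponentials act on \((N_{x}-1)\times (N_{y}-1)\) matrices; the second inequality follows from the first by transposition. The helper name is hypothetical.

```python
import numpy as np


def frobenius_bound(A: np.ndarray, B: np.ndarray) -> tuple:
    """Return (||A B||_F, ||A||_2 ||B||_F); Lemma 3.2 says the first is <= the second."""
    lhs = np.linalg.norm(A @ B, "fro")
    rhs = np.linalg.norm(A, 2) * np.linalg.norm(B, "fro")
    return float(lhs), float(rhs)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    A = rng.standard_normal((5, 5))
    Phi = rng.standard_normal((5, 3))  # rectangular, like the error matrix
    C = rng.standard_normal((3, 3))
    print(frobenius_bound(A, Phi))  # left multiplication
    # Right multiplication via transposes: ||Phi C||_F = ||C^T Phi^T||_F
    print(frobenius_bound(C.T, Phi.T))
```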

Theorem 3.3

Assume that the function \(f(u)\) in (1) is globally Lipschitz continuous, i.e., there exists a constant \(L>0\) such that \(|f(u)-f(v)|\leq L|u-v|\) for all u and v. Then the second-order WSGD scheme coupled with the cIIF2 scheme (18) is unconditionally stable in the discrete \(L^{2}\)-norm.

Proof

Let \(\mathbf{U}^{1}_{n+1}\) and \(\mathbf{U}^{2}_{n+1}\) be two solutions of (18) corresponding to different initial data, and let \(\boldsymbol{\Phi }_{n+1}=\mathbf{U}^{1}_{n+1}-\mathbf{U}^{2}_{n+1}\). Then \(\boldsymbol{\Phi }_{n+1}\) satisfies

Taking the Frobenius norm of (19) and using Lemma 3.2 and the Lipschitz continuity of f, we obtain

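Since A and B are symmetric and negative definite (Lemma 3.1 with \(K_{x},K_{y}>0\)), the matrices \(e^{\mathbf{A}\Delta t}\) and \(e^{\mathbf{B}\Delta t}\) are symmetric with all eigenvalues in \((0,1)\). Because symmetric matrices are normal, their 2-norms equal their spectral radii, so \(\|e^{\mathbf{A}\Delta t}\|_{2}\leq 1\) and \(\|e^{\mathbf{B}\Delta t}\|_{2}\leq 1\). We get the following result from (20):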

Applying this estimate recursively, we have

From the above result (22), it can be concluded that

Then (23) yields the following result:

The proof is completed by applying (12). □
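The bound \(\|e^{\mathbf{A}\Delta t}\|_{2}\leq 1\) used in the proof relies on symmetry as well as negative definiteness. The sketch below (hypothetical helper name) checks that for a symmetric negative definite matrix \(\|e^{\mathbf{A}\Delta t}\|_{2}=e^{\lambda _{\max }\Delta t}<1\), and shows a non-normal matrix with both eigenvalues equal to \(-1\) whose exponential nevertheless has 2-norm greater than one.

```python
import numpy as np
from scipy.linalg import expm


def expm_two_norm(A: np.ndarray, dt: float) -> float:
    """2-norm of the matrix exponential e^{A dt}."""
    return float(np.linalg.norm(expm(A * dt), 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    M = rng.standard_normal((6, 6))
    A_sym = -(M @ M.T + np.eye(6))  # symmetric negative definite
    lam_max = np.linalg.eigvalsh(A_sym).max()
    print(expm_two_norm(A_sym, 0.1), np.exp(lam_max * 0.1))  # equal, and < 1

    # Non-normal counterexample: eigenvalues are both -1, yet the norm exceeds 1.
    A_nonnormal = np.array([[-1.0, 10.0], [0.0, -1.0]])
    print(expm_two_norm(A_nonnormal, 0.1))  # approx. 1.46
```

This is why Lemma 3.1, which guarantees that the WSGD matrices are symmetric, is needed in addition to negative definiteness.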

Theorem 3.4

Assume that the function \(f(u)\) in (1) is globally Lipschitz continuous. Then the second-order WSGD scheme coupled with the cIIF2 scheme (18) converges to the solution of the fractional problem (1) with order \(O(\Delta t^{2}+h^{2})\) in the discrete \(L^{2}\)-norm.

Proof

Let \(\boldsymbol{\widetilde{U}}\) and U denote the exact solution matrix of (1) and its numerical approximation, respectively. Substituting \(\boldsymbol{\widetilde{U}}\) into (18), we have

where \(\mathbf{E}=(E_{jk})_{(N_{x}-1)\times (N_{y}-1)}\) and \(E_{jk}=O(\Delta t^{3}+\Delta t\,h^{2})\). Denote \(\boldsymbol{\Psi }=\boldsymbol{\widetilde{U}}-\mathbf{U}\). Subtracting (18) from (25) yields the following error equation:

Since the exact initial condition is used, \(\boldsymbol{\Psi }_{0}=0\). Taking the Frobenius norm of (26) and applying Theorem 3.3, we obtain

by Lipschitz continuity. Here C denotes a positive constant independent of the discretization parameters, which may take different values at different occurrences. As in the proof of Theorem 3.3, we have

Applying this estimate recursively and using \(\boldsymbol{\Psi }_{0}=0\), we have

Multiplying both sides of the above inequality by \(h_{x}h_{y}\), we get \(\|\boldsymbol{\Psi }_{n}\|\leq CT(b-a)(d-c)(\Delta t^{2}+h^{2})\). This completes the proof. □

4 Numerical experiments

In this section, we demonstrate the performance of the proposed scheme on several test problems. First, to verify our theoretical analysis, we test the scheme on a nonlinear reaction–diffusion equation with a known exact solution. We then apply the scheme to the two-dimensional Riesz space-fractional Fitzhugh–Nagumo model, one of the simplest models for studying excitable media. In all numerical experiments, we use the same uniform spatial step size in both directions, i.e., \(h_{x}=h_{y}=h\). All computations were performed in Matlab on a ThinkPad computer with an Intel Core i3-3110 CPU and 4 GB of memory.

Example 1

We consider the following space-fractional nonlinear reaction–diffusion equation on the square \(\Omega =[0,1]^{2}\):

with \(F(u)=-u^{2}\). The exact solution of this equation is \(u=e^{-t}x^{2}(1-x)^{2}y^{2}(1-y)^{2}\), and the final time is \(T=1\). The source term \(f(x,y,t)\) is determined by the exact solution; see [31] for its explicit form.

We test the spatial and temporal accuracy of the second-order WSGD method coupled with the cIIF2 scheme. The orders of convergence in space and time are computed as \(q=\log _{2} (e(h)/e(h/2) )\) and \(p=\log _{2} (e(\Delta t)/e(\Delta t/2) )\), respectively, where \(e(\cdot )\) denotes the discrete \(L^{2}\) error. Table 1 displays the \(L^{2}\) errors of the WSGD scheme for different values of \(\alpha _{x}\) and \(\alpha _{y}\); the spatial order is computed with the small time step \(\Delta t=1\mathrm{E}-3\) so that the temporal error does not dominate. Table 2 lists the temporal errors and convergence orders of the cIIF2 scheme for different time steps and values of \(\alpha _{x}\) and \(\alpha _{y}\), with \(h=1/256\). Tables 1 and 2 show that the convergence rate is second order in both space and time, in good agreement with the theoretical analysis.
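The observed orders q and p can be computed directly from a sequence of errors obtained by successively halving h or \(\Delta t\). The sketch below uses the discrete \(L^{2}\) error \(\sqrt{h_{x}h_{y}}\,\|\mathbf{U}-\widetilde{\mathbf{U}}\|_{F}\); the error values in the example are synthetic, chosen only to illustrate the formula, and are not taken from Tables 1 or 2. The function names are hypothetical.

```python
import numpy as np


def discrete_l2_error(U_num, U_exact, hx, hy):
    """sqrt(hx*hy) * ||U_num - U_exact||_F over the interior grid."""
    diff = np.asarray(U_num, dtype=float) - np.asarray(U_exact, dtype=float)
    return float(np.sqrt(hx * hy) * np.linalg.norm(diff, "fro"))


def observed_orders(errors):
    """Orders log2(e(s)/e(s/2)) for errors measured with steps s, s/2, s/4, ..."""
    e = np.asarray(errors, dtype=float)
    return np.log2(e[:-1] / e[1:])


if __name__ == "__main__":
    synthetic = [4.0e-3, 1.0e-3, 2.5e-4]  # illustrative values with ratio 4
    print(observed_orders(synthetic))
```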

Example 2

Consider the following space fractional Fitzhugh–Nagumo model on a square \(\Omega =[0,2.5]^{2}\):

with \(\mu =0.1,\varepsilon =0.01,\beta =0.5,\gamma =1\), and \(\delta =0\). The Fitzhugh–Nagumo model is used to describe the propagation of the transmembrane potential in the nerve axon. The initial conditions in this example are taken as

with homogeneous Dirichlet boundary conditions.

The Fitzhugh–Nagumo model can generate stable patterns in the form of spiral waves. Initially, we set \(u=1\) in the lower-left quarter of the domain and \(w=0.1\) in the upper half. This perturbs the trivial state \((u,w)=(0,0)\); the resulting wavefront then curves and rotates clockwise, generating a spiral pattern.
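Because the explicit initial data are not reproduced here, the sketch below builds initial fields that follow the verbal description only: \(u=1\) in the lower-left quarter of \([0,2.5]^{2}\), \(w=0.1\) in the upper half, zero elsewhere, and zero on the boundary to match the homogeneous Dirichlet conditions. Whether the cut-offs are strict or inclusive, and the function name, are our assumptions.

```python
import numpy as np


def fhn_initial_state(n: int = 256, length: float = 2.5):
    """Hypothetical initial (u, w) on the (n+1) x (n+1) grid of [0, length]^2."""
    x = np.linspace(0.0, length, n + 1)
    X, Y = np.meshgrid(x, x, indexing="ij")  # X varies along axis 0, Y along axis 1
    half = length / 2.0
    u = np.where((X < half) & (Y < half), 1.0, 0.0)  # lower-left quarter
    w = np.where(Y >= half, 0.1, 0.0)                # upper half
    for arr in (u, w):
        # Homogeneous Dirichlet boundary values
        arr[0, :] = arr[-1, :] = 0.0
        arr[:, 0] = arr[:, -1] = 0.0
    return u, w


if __name__ == "__main__":
    u, w = fhn_initial_state()
    print(u.shape, u.sum(), w.max())
```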

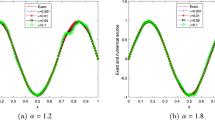

In our simulation, the computational domain is discretized using \(N_{x}=N_{y}=256\) points in each spatial direction. The time step is \(\Delta t=0.2\) and the final time is \(T=1000\). We first consider the integer-order Fitzhugh–Nagumo model (i.e., \(\alpha _{x}=\alpha _{y}=2\)). The spiral wave solutions for the two diffusion coefficients \(K_{x}=K_{y}=1\mathrm{E}-4\) and \(K_{x}=K_{y}=1\mathrm{E}-5\) are shown in Fig. 1. We find that the number of wavefronts increases as the diffusion coefficient decreases. Keeping \(K_{x}=K_{y}=1\mathrm{E}-4\) and reducing the fractional powers \(\alpha _{x}\) and \(\alpha _{y}\), we obtain stable rotating solutions for \(\alpha _{x}=\alpha _{y}=1.8\) (Fig. 2(a)) and \(\alpha _{x}=\alpha _{y}=1.5\) (Fig. 2(b)). As expected, the width of the excitation wavefront (red areas) decreases as \(\alpha _{x}\) and \(\alpha _{y}\) decrease, and so does the number of wavefronts. Note, however, that reducing the fractional power is not equivalent to decreasing the diffusion coefficient in the integer-order case, as can be clearly seen by comparing Figs. 1 and 2.

Fig. 1 Solutions of Example 2 with \(\alpha _{x}=\alpha _{y}=2\) for varying diffusion coefficient: (a) \(K_{x}=K_{y}=1\mathrm{E}-4\) and (b) \(K_{x}=K_{y}=1\mathrm{E}-5\)

Fig. 2 Solutions of Example 2 with \(K_{x}=K_{y}=1\mathrm{E}-4\) for varying fractional power: (a) \(\alpha _{x}=\alpha _{y}=1.8\) and (b) \(\alpha _{x}=\alpha _{y}=1.5\)

We next take different diffusion coefficients in the x- and y-directions, \(K_{x}=1\mathrm{E}-4\) and \(K_{y}=2.5\mathrm{E}-5\). Figure 3(a) displays the wave pattern at \(T=1000\); the smaller diffusion coefficient in the y-direction leads to an elliptical pattern. Next, we consider the anisotropic fractional powers \(\alpha _{x}=2, \alpha _{y}=1.7\) with \(K_{x}=K_{y}=1\mathrm{E}-4\). Figure 3(b) shows that superdiffusion in the y-direction likewise produces an elliptical spiral wave.

Fig. 3 Solutions of Example 2 for varying diffusion coefficient and fractional power: (a) \(\alpha _{x}=\alpha _{y}=2\) and \(K_{x}=1\mathrm{E}-4, K_{y}=2.5\mathrm{E}-5\), (b) \(\alpha _{x}=2, \alpha _{y}=1.7\) and \(K_{x}=K_{y}=1\mathrm{E}-4\)

5 Concluding remarks

In this paper, the weighted shifted Grünwald difference method is developed to solve the two-dimensional Riesz space-fractional nonlinear reaction–diffusion equation, with the time variable discretized by the compact implicit integration factor method. The advantage of this approach is that computing exponentials of large matrices is reduced to computing exponentials of significantly smaller matrices. We have rigorously proved that the compact implicit integration factor scheme is stable in the discrete \(L^{2}\)-norm and convergent with second-order accuracy. The WSGD method coupled with the cIIF method is applied to the fractional Fitzhugh–Nagumo model, and numerical experiments are provided to verify the theoretical analysis. The numerical results indicate that the proposed method is accurate, efficient, and robust for fractional reaction–diffusion equations. Given its efficiency and favorable stability properties, the extension of the cIIF method to fourth-order fractional parabolic equations (e.g., Cahn–Hilliard equations) will be explored in future work.

Availability of data and materials

Not applicable.

References

Obrecht, C., Saut, J.C.: Remarks on the full dispersion Davey–Stewartson systems. Commun. Pure Appl. Anal. 14(4), 1547–1561 (2015)

Wang, Z., Huang, X., Shi, G.: Analysis of nonlinear dynamics and chaos in a fractional order financial system with time delay. Comput. Math. Appl. 62(3), 1531–1539 (2011)

Wang, Z., Wang, X., Li, Y., Huang, X.: Stability and Hopf bifurcation of fractional-order complex-valued single neuron model with time delay. Int. J. Bifurc. Chaos 27(13), 1750209 (2018)

Kumar, S., Kumar, R., Cattani, C., Samet, B.: Chaotic behaviour of fractional predator-prey dynamical system. Chaos Solitons Fractals 135, 109811 (2020)

Ghanbari, B., Kumar, S., Kumar, R.: A study of behaviour for immune and tumor cells in immunogenetic tumour model with non-singular fractional derivative. Chaos Solitons Fractals 133, 109619 (2020)

Kumar, S., Kumar, A., Samet, B., Gómez-Aguilar, J.F., Osman, M.S.: A chaos study of tumor and effector cells in fractional tumor-immune model for cancer treatment. Chaos Solitons Fractals 141, 110321 (2020)

Goufo, E.F.D., Kumar, S., Mugisha, S.B.: Similarities in a fifth-order evolution equation with and with no singular kernel. Chaos Solitons Fractals 130, 109467 (2020)

Bai, Z., Zhang, S., Sun, S., Yin, C.: Monotone iterative method for fractional differential equations. Electron. J. Differ. Equ. 2016, Article ID 6 (2016)

Dong, X., Bai, Z., Zhang, S.: Positive solutions to boundary value problems of p-Laplacian with fractional derivative. Bound. Value Probl. 2017(1), 5 (2017)

Kumar, S., Nisar, K.S., Kumar, R., Cattani, C., Samet, B.: A new Rabotnov fractional-exponential function-based fractional derivative for diffusion equation under external force. Math. Meth. Appl. Sci. 43(7), 4460–4471 (2020)

Kumar, S., Ghosh, S., Samet, B., Goufo, E.F.D.: An analysis for heat equations arises in diffusion process using new Yang–Abdel–Aty–Cattani fractional operator. Math. Meth. Appl. Sci. 43(9), 6062–6080 (2020)

Kumar, S., Kumar, R., Agarwal, R.P., Samet, B.: A study of fractional Lotka–Volterra population model using Haar wavelet and Adams–Bashforth–Moulton methods. Math. Meth. Appl. Sci. 43(8), 5564–5578 (2020)

Kumar, S.: A new analytical modelling for fractional telegraph equation via Laplace transform. Appl. Math. Model. 38(13), 3154–3163 (2014)

Kumar, S., Rashidi, M.M.: New analytical method for gas dynamics equation arising in shock fronts. Comput. Phys. Commun. 185(7), 1947–1954 (2014)

Kumar, S., Kumar, A., Baleanu, D.: Two analytical methods for time-fractional nonlinear coupled Boussinesq–Burger’s equations arise in propagation of shallow water waves. Nonlinear Dyn. 85(2), 699–715 (2016)

Kumar, S., Kumar, D., Abbasbandy, S., Rashidi, M.M.: Analytical solution of fractional Navier–Stokes equation by using modified Laplace decomposition method. Ain Shams Eng. J. 5(2), 569–574 (2014)

Çelik, C., Duman, M.: Crank–Nicolson method for the fractional diffusion equation with the Riesz fractional derivative. J. Comput. Phys. 231(4), 1743–1750 (2012)

Meerschaert, M.M., Tadjeran, C.: Finite difference approximations for fractional advection–dispersion flow equations. J. Comput. Appl. Math. 172(1), 65–77 (2004)

Ortigueira, M.D.: Riesz potential operators and inverses via fractional centred derivatives. Int. J. Math. Math. Sci. 2006, 48391 (2006)

Tian, W., Zhou, H., Deng, W.: A class of second order difference approximations for solving space fractional diffusion equations. Math. Comput. 84(294), 1703–1727 (2015)

Bu, W., Tang, Y., Yang, J.: Galerkin finite element method for two-dimensional Riesz space fractional diffusion equations. J. Comput. Phys. 276, 26–38 (2014)

Duan, B., Zheng, Z., Cao, W.: Finite element method for a kind of two-dimensional space fractional diffusion equation with its implementation. Am. J. Comput. Math. 5(2), 135–157 (2015)

Deng, W.: Finite element method for the space and time fractional Fokker–Planck equation. SIAM J. Numer. Anal. 47(1), 204–226 (2008)

Yang, Z., Yuan, Z., Nie, Y., Wang, J., Zhu, X., Liu, F.: Finite element method for nonlinear Riesz space fractional diffusion equations on irregular domains. J. Comput. Phys. 330, 863–883 (2017)

Bueno-Orovio, A., Kay, D., Burrage, K.: Fourier spectral methods for fractional-in-space reaction diffusion equations. BIT Numer. Math. 54(4), 937–954 (2014)

Pindza, E., Owolabi, K.M.: Fourier spectral method for higher order space fractional reaction–diffusion equations. Commun. Nonlinear Sci. Numer. Simul. 40, 112–128 (2016)

Zayernouri, M., Karniadakis, G.E.: Fractional spectral collocation method. SIAM J. Sci. Comput. 36(1), 40–62 (2014)

Nie, Q., Wan, F.Y.M., Zhang, Y.T., Liu, X.F.: Compact integration factor methods in high spatial dimensions. J. Comput. Phys. 227(10), 5238–5255 (2008)

Zhang, R., Wang, Z., Liu, J., Liu, L.: A compact finite difference method for reaction–diffusion problems using compact integration factor methods in high spatial dimensions. Adv. Differ. Equ. 2018(1), 274 (2018)

Zhao, X., Sun, Z., Hao, Z.: A fourth-order compact ADI scheme for two-dimensional nonlinear space fractional Schrödinger equation. SIAM J. Sci. Comput. 36(6), 2865–2886 (2014)

Zeng, F., Liu, F., Li, C., Burrage, K., Turner, I., Anh, V.: A Crank–Nicolson ADI spectral method for a two-dimensional Riesz space fractional nonlinear reaction–diffusion equation. SIAM J. Numer. Anal. 52(6), 2599–2622 (2014)

Acknowledgements

The authors would like to thank the referees for their valuable comments and suggestions.

Funding

This work was partially supported by the National Natural Science Foundation of China (Grant No. 11971411) and the Natural Science Foundation of Liaoning Province (Grant No. 20180550996).

Author information


Contributions

The authors declare that the study was realized in collaboration with the same responsibility. All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.


Cite this article

Zhang, R., Li, M., Chen, B. et al. Stable finite difference method for fractional reaction–diffusion equations by compact implicit integration factor methods. Adv Differ Equ 2021, 307 (2021). https://doi.org/10.1186/s13662-021-03426-5
