Abstract

Peripersonal space (PPS) is a multisensory representation of the space near body parts that facilitates interactions with the close environment. Studies on non-human and human primates agree in showing that PPS is a body part-centered representation that guides actions. Because of these characteristics, growing confusion surrounds peripersonal and arm-reaching space (ARS), that is, the space one’s arm can reach. Despite neuroanatomical evidence favoring their distinction, no study has directly contrasted their respective extent and behavioral features. Here, in five experiments (N = 140), we found that PPS differs from ARS, as evidenced both by participants’ spatial and temporal performance and by its modeling. We mapped PPS and ARS using both their respective gold-standard tasks and a novel multisensory facilitation paradigm. Results show that: (1) PPS is smaller than ARS; (2) multivariate analyses of spatial patterns of multisensory facilitation predict participants’ hand locations within ARS; and (3) the multisensory facilitation map shifts isomorphically following hand positions, revealing hand-centered coding of PPS and therefore pointing to a functional similarity with the receptive fields of monkeys’ multisensory neurons. A control experiment further corroborated these results and additionally ruled out the orienting of attention as the driving mechanism for the increased multisensory facilitation near the hand. In sharp contrast, ARS mapping yields a larger spatial extent, with indistinguishable patterns across hand positions, cross-validating the conclusion that PPS and ARS are distinct spatial representations. These findings call for a refinement of theoretical models of PPS, which is relevant to constructs as diverse as self-representation, social interpersonal distance, and motor control.


Introduction

Seminal studies described multisensory neurons in primates’ fronto-parietal regions coding for the space surrounding the body, termed peripersonal space (PPS) (Colby et al., 1993; Graziano & Gross, 1993; Rizzolatti et al., 1981a, 1981b). These neurons display visual receptive fields anchored to tactile ones and protruding over a limited area (~5 to 30 cm) from specific body parts (e.g., the hand) (Graziano & Gross, 1993; Rizzolatti et al., 1981a, 1981b). Neuroimaging results in humans are in line with these findings: ventral and anterior intraparietal sulcus, ventral and dorsal premotor cortices and putamen integrate visual, tactile and proprioceptive signals, allowing for a body part-centered representation of space (Brozzoli et al., 2011, 2012). Behaviorally, visual stimuli modulate responses to touches of the hand more strongly when presented near compared to far from it (Farnè et al., 2005; Làdavas & Farnè, 2004; Serino et al., 2015; Spence et al., 2004), a mechanism proposed to subserve both defensive (de Haan et al., 2016; Graziano & Cooke, 2006) and acquisitive aims (Brozzoli et al., 2009, 2010; Brozzoli et al., 2014; De Vignemont & Iannetti, 2014; Patané et al., 2019).

As a multisensory interface guiding interactions with the environment, PPS shares some characteristics with the arm-reaching space (ARS), the space reachable by extending the arm without moving the trunk (Coello et al., 2008). In humans, ARS tasks typically require judging the reachability of a stimulus (Carello et al., 1989; Coello & Iwanow, 2006). Despite their anatomo-functional differences (Desmurget et al., 1999; Filimon, 2010; Lara et al., 2018; Pitzalis et al., 2013), some research on human PPS diverged from the original electrophysiological findings and conflated ARS and PPS (Coello et al., 2008; Iachini et al., 2014; Vieira et al., 2020). However, multisensory stimuli within ARS and close to the hand activate neural areas typically associated with PPS, whereas the same stimuli within ARS, but far from the hand, do not (Brozzoli et al., 2012; Graziano et al., 1994). To date, no behavioral study has directly contrasted these two spatial representations. The consequences of this conflation for spatial models of multisensory facilitation have so far been neglected, despite the crucial role it plays in sensorimotor control (Makin et al., 2017; Suminski et al., 2009, 2010) and in the study of the bodily self (Blanke et al., 2015; Makin et al., 2008).

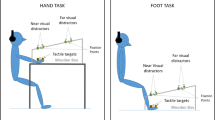

Here we leveraged empirical outcomes to disentangle two alternative theoretical models, hypothesizing that PPS and ARS are either identical or distinct spatial representations. To ensure a fair basis for comparison, and to allow clear alternative predictions, we set two prerequisites: (1) not to oppose PPS and ARS in the context of different functions, and (2) to test both spaces with reference to the same body part. Thus, in Experiment 1 we used a tactile detection task and computed multisensory (visuo-tactile) facilitation, a typical proxy of PPS extent. In Experiment 2 we used a reachability judgment task and computed the point of subjective equality (PSE), a typical estimate of ARS extent (Bourgeois & Coello, 2012). As visual and tactile stimuli were harmless and semantically neutral, our tasks were devoid of any defensive or social function. In addition, both PPS and ARS tasks were applied with reference to the hand, as PPS has been shown to be hand-centered (di Pellegrino et al., 1997) and what we can reach (ARS) is defined by how far our hand can reach (Coello & Iwanow, 2006), thus fulfilling the criteria for a fair comparison. Two additional experiments manipulated hand vision (visible or not) and position (close or distant), to progressively equate the reachability task to the multisensory conditions of Experiment 1.

Following this rationale, if PPS and ARS are identical, we should observe similar spatial extents from multisensory facilitation and reachability estimates. In addition, we should observe facilitation from all visual stimuli falling within ARS, independently of hand position. Conversely, if they are distinct, we should measure different spatial extents and observe multisensory facilitation only for stimuli near the hand, as a function of its position, resulting in specific and distinguishable spatial patterns of multisensory facilitation.

Experiment 1

Methods

Participants

We calculated our sample size with G*Power 3.1.9.2, setting the 10*2 (V-Position*Hand Position) within-subject interaction for a repeated-measures (RM) ANOVA, with a power of 0.85, α = 0.05, and a correlation of 0.5 between the measures. We assumed that the visuo-tactile effect size might be greater than the small audio-tactile one (Cohen's d = 0.2, i.e., f = 0.1) reported by Holmes and colleagues (Holmes et al., 2020). We thus considered a medium-low effect size (f = 0.20), which required at least 23 participants per study. All participants were right-handed, as assessed with the Edinburgh Handedness Inventory (mean score 82%). Twenty-seven participants (13 females; mean age = 26.12 years, range = 20–34; mean arm length = 79.41 ± 5.83 cm, measured from the acromion to the tip of the right middle finger) took part in Experiment 1.
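For reference, the conversion between the two effect-size metrics quoted above follows Cohen's standard relation for a contrast between two means, f = d/2. This is only a worked check of that conversion, not a reproduction of the G*Power computation:

$$ f = \frac{d}{2} = \frac{0.2}{2} = 0.1 $$

The value assumed for the power analysis (f = 0.20) is therefore twice this small benchmark.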

All participants reported normal or corrected-to-normal vision, normal tactile sensitivity, and no history of psychiatric disorders. They gave their informed consent before taking part in the study, which was approved by the local ethics committee (Comité d’Evaluation de l’Ethique de l’Inserm, n° 17-425, IRB00003888, IORG0003254, FWA00005831) and was carried out in accordance with the Declaration of Helsinki. Participants were paid 15 € each.

Stimuli and apparatus

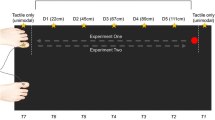

Visual stimuli were identical in both experiments. We used a projector (Panasonic PT-LM1E_C) to present a two-dimensional (2D) gray circle (RGB = 32, 32, 32) in one of ten positions, ranging from near to far from the body. The diameter of the gray circle was corrected for retinal size using the formula:

where 3 cm is the diameter of the circle, 57 cm is the distance from the eye at which 1° of visual angle roughly corresponds to 1 cm, and x is the distance of the center of the stimulus from the point at 57 cm. Visual stimulus duration was 500 ms. The fixation cross (2.5 cm) was projected along the body’s sagittal axis (see Fig. 1). The ten positions were calibrated such that the sixth one corresponded to each participant’s objective limit of reachability. We determined this limit before the experiment: participants kept their eyes closed, rested their head on a chinrest (30 cm high), and placed their right hand as far as possible on the table. Starting from the sixth position, four positions were computed beyond the reachable limit and five closer to the participant’s body, all 8 cm to the right of the body’s sagittal axis. Positions, uniformly separated by 9 cm, spanned 90 cm of space and were labelled V-P1 to V-P10, from the closest to the farthest (see Fig. 1).
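The layout arithmetic above can be sketched as follows. This is a minimal Python illustration, assuming distances are measured along the table from the same origin as the reach limit; all names are hypothetical. Because the exact retinal-size expression is not given here, `corrected_diameter` assumes the common linear scaling that keeps the visual angle of a 3 cm circle viewed at 57 cm constant, with x signed (positive beyond the 57 cm point); the authors' expression may differ.

```python
from __future__ import annotations

SPACING_CM = 9.0        # distance between adjacent positions (from the text)
REACH_INDEX = 6         # V-P6 is aligned with the participant's reach limit (from the text)
BASE_DIAMETER_CM = 3.0  # nominal circle diameter (from the text)
REFERENCE_CM = 57.0     # viewing distance at which 1 degree ~ 1 cm (from the text)


def stimulus_positions(reach_limit_cm: float) -> dict[str, float]:
    """Distance of V-P1..V-P10 from the origin, in cm.

    Five positions fall closer to the body than the reach limit and four beyond it.
    """
    return {
        f"V-P{i}": reach_limit_cm + (i - REACH_INDEX) * SPACING_CM
        for i in range(1, 11)
    }


def corrected_diameter(x_cm: float) -> float:
    """ASSUMED retinal-size correction (hypothetical form).

    Scales the 3 cm diameter linearly with viewing distance, where x_cm is the
    signed distance of the stimulus centre from the 57 cm point.
    """
    return BASE_DIAMETER_CM * (REFERENCE_CM + x_cm) / REFERENCE_CM


if __name__ == "__main__":
    for label, pos in stimulus_positions(60.0).items():
        print(f"{label}: {pos:.0f} cm")
```

For a participant whose reach limit lies 60 cm from the origin, V-P1 falls at 15 cm and V-P10 at 96 cm.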

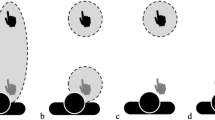

Fig. 1 Experimental setup across experiments. a Positions of the right hand, fixation cross, and visual stimuli. b and c The close-hand (b) and distant-hand (c) conditions. In both experiments, the visual stimuli (here displayed as gray circles) were projected one at a time, in one of the ten possible positions (from V-P1 to V-P10), corrected for retinal size (a–c). Tactile and visual stimuli were presented alone (unisensory) or synchronously coupled (multisensory). Overall, we adopted two unisensory conditions (tactile-only or visual-only stimulation) and a multisensory condition (visuo-tactile stimulation). To these, we added catch trials (neither visual nor tactile stimuli presented) to monitor participants’ compliance.

Tactile stimuli were brief electrocutaneous stimulations (100 μs, 400 mV) delivered to the right index finger via a constant-current stimulator (DS7A, DigiTimer, UK) through a pair of disposable electrodes (1.5 × 1.9 cm, Neuroline, Ambu, Denmark). Their intensity was determined through an ascending and a descending staircase procedure, respectively incrementing and decrementing the stimulation intensity to find the minimum intensity at which the participant detected 100% of the touches over ten consecutive stimulations. Intensity was further increased by 10% before the first and third experimental blocks.
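The threshold search can be illustrated with a small simulation. The sketch below is hypothetical: `detect` stands in for the participant (returning True when a single stimulation is felt), intensities are in arbitrary units, and combining the two runs by keeping the higher estimate is an assumption chosen so that the 100%-detection criterion holds for both runs; the text does not state how the runs were combined.

```python
from __future__ import annotations
from typing import Callable

# Hypothetical observer model: returns True if a single stimulation is detected.
Detector = Callable[[float], bool]


def detects_all(detect: Detector, intensity: float, n: int) -> bool:
    """True if all n consecutive stimulations at this intensity are detected."""
    return all(detect(intensity) for _ in range(n))


def ascending_run(detect: Detector, start: float, step: float,
                  n: int = 10, ceiling: float = 100.0) -> float:
    """Increase intensity until n/n stimulations are detected; return that level."""
    level = start
    while level <= ceiling:
        if detects_all(detect, level, n):
            return level
        level += step
    raise ValueError("no reliably detected intensity below the ceiling")


def descending_run(detect: Detector, start: float, step: float,
                   n: int = 10, floor: float = 0.0) -> float:
    """Decrease intensity while n/n stimulations are detected; return the last such level."""
    if not detects_all(detect, start, n):
        raise ValueError("starting intensity is not reliably detected")
    last_reliable = start
    level = start - step
    while level > floor and detects_all(detect, level, n):
        last_reliable = level
        level -= step
    return last_reliable


def threshold(detect: Detector, low: float, high: float, step: float, n: int = 10) -> float:
    # ASSUMPTION: keep the higher of the two estimates so both runs satisfy 100% detection.
    return max(ascending_run(detect, low, step, n),
               descending_run(detect, high, step, n))


def boost(intensity: float, percent: float = 10.0) -> float:
    """Raise the intensity by a percentage (10% in the text)."""
    return intensity * (1.0 + percent / 100.0)
```

With a deterministic simulated observer such as `lambda i: i >= 2.3`, both runs stop at 2.5 units when stepping by 0.5; a stochastic detector can be passed in the same way.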

Design and procedure

Participants performed a speeded tactile detection task. Tactile stimulation of their right index finger could be delivered alone or synchronously with a visual stimulus in one of the ten positions (see Fig. 1). Participants rested their head on the chinrest and kept their eyes on the fixation cross. Their right hand was placed on the table 16 cm to the right of the body’s sagittal axis, with the tip of the middle finger at V-P2 (hereafter close hand) or V-P6 (hereafter distant hand), in different blocks counterbalanced across participants (116 randomized trials per block): two blocks with the close hand and two with the distant hand. Given the spacing of the visual positions, the hand in the distant position covers positions V-P4 (wrist), V-P5, and V-P6 (tip of the middle finger), whereas the hand in the close position is flanked by positions V-P1 and V-P2 (see Fig. 1). Each hand condition included 16 visuo-tactile (VT) trials per position and 16 unimodal tactile trials (T trials). To ensure compliance with task instructions, there were also four unimodal visual trials per position (V trials) and 16 trials with no stimulation (N trials). Participants had to respond to the tactile stimulus as fast as possible by pressing a pedal with their right foot. The experiment lasted about 45 min.
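The trial counts above fit together as follows: per hand condition, 10 × 16 VT + 16 T + 10 × 4 V + 16 N = 232 trials, that is, two blocks of 116. The sketch below builds one randomized block under the assumption (not stated in the text) that each block contains exactly half of every trial type; all names are hypothetical.

```python
from __future__ import annotations
import random
from collections import Counter
from typing import Optional, Tuple

N_POSITIONS = 10
VT_PER_POSITION = 16   # per hand condition (from the text)
V_PER_POSITION = 4     # per hand condition (from the text)
T_TRIALS = 16          # per hand condition (from the text)
N_TRIALS = 16          # no-stimulation catch trials per hand condition (from the text)
BLOCKS_PER_HAND = 2

Trial = Tuple[str, Optional[int]]  # (trial type, visual position or None)


def make_block(rng: random.Random) -> list[Trial]:
    """One randomized block, ASSUMING each block holds half of every trial type."""
    trials: list[Trial] = []
    for pos in range(1, N_POSITIONS + 1):
        trials += [("VT", pos)] * (VT_PER_POSITION // BLOCKS_PER_HAND)
        trials += [("V", pos)] * (V_PER_POSITION // BLOCKS_PER_HAND)
    trials += [("T", None)] * (T_TRIALS // BLOCKS_PER_HAND)
    trials += [("N", None)] * (N_TRIALS // BLOCKS_PER_HAND)
    rng.shuffle(trials)  # randomize trial order within the block
    return trials


if __name__ == "__main__":
    block = make_block(random.Random(1))
    print(len(block), Counter(kind for kind, _ in block))
```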

Analyses

Both experiments adopted a within-subject design. When necessary, the Greenhouse-Geisser sphericity correction was applied. The first analyses focused on response accuracy. Four participants performed poorly (more than 2 SD from the group mean) and were excluded from further analyses.
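A minimal sketch of the 2-SD exclusion rule, assuming it is applied to per-participant hit rates and uses the sample standard deviation; the text specifies neither detail, and the participant IDs are hypothetical.

```python
from __future__ import annotations
from statistics import mean, stdev


def flag_outliers(accuracy: dict[str, float], n_sd: float = 2.0) -> list[str]:
    """IDs whose accuracy lies more than n_sd sample SDs from the group mean."""
    values = list(accuracy.values())
    m, sd = mean(values), stdev(values)
    return [pid for pid, acc in accuracy.items() if abs(acc - m) > n_sd * sd]
```

The flagged IDs would then be dropped before computing any facilitation index.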

To have a direct index of the proportion of multisensory facilitation over the unimodal tactile condition, we calculated the Multisensory Gain (MG):
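Given the definitions of TM and VT that follow, and assuming MG expresses the RT saving on a visuo-tactile trial as a proportion of the unimodal tactile baseline (the exact expression, including any percentage scaling, may differ in the original), the gain can be written as:

$$ \mathrm{MG} = \frac{T_M - VT}{T_M} $$

With this form, MG = 0 when a visuo-tactile response is as fast as the tactile baseline and MG > 0 when it is faster.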

TM was the average reaction time (RT) for unimodal tactile stimuli, and VT was the raw RT for a multisensory visuo-tactile stimulus. Larger MG values correspond to greater facilitation (namely, larger benefits for VT compared to T conditions). This measure is more rigorous than an absolute delta, as it corrects RTs for each participant’s speed in each visual position and each hand position (analyses on the delta RT are also reported in the Appendix – Experiment 1). Computing MG values per hand and stimulus position, we obtained two vectors of 10 MG values (from V-P1 to V-P10) for each participant: one for the close hand and one for the distant hand. We applied a multivariate support vector machine (SVM) approach (Vapnik, 1995) to test whether a data-driven classifier could reliably predict the position of the hand from the spatial pattern of MG. Using a linear kernel, the SVM was trained on N – 1 participants (leave-one-out strategy) and tested on the two vectors of the participant excluded from training. Overall accuracy was calculated as the sum of the correct predictions for both hand positions divided by the total number of predictions.
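The leave-one-participant-out classification can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' pipeline: MG is assumed to be (TM − VT)/TM per trial, and the linear SVM is a small from-scratch solver (full-batch subgradient descent on the L2-regularized hinge loss, with the bias folded into the weight vector and therefore also regularized) standing in for whatever library was actually used. The hyperparameters `lam`, `lr`, and `epochs` are arbitrary choices.

```python
from __future__ import annotations
from statistics import mean


def multisensory_gain(vt_rts: list[float], t_mean: float) -> float:
    """Mean MG over the VT trials of one position, ASSUMING MG = (TM - VT) / TM per trial."""
    return mean((t_mean - rt) / t_mean for rt in vt_rts)


def _dot(w: list[float], x: list[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def train_linear_svm(X: list[list[float]], y: list[int], lam: float = 0.01,
                     lr: float = 0.01, epochs: int = 500) -> list[float]:
    """Full-batch subgradient descent on the L2-regularized hinge loss.

    A constant 1.0 is appended to every vector, so the last weight acts as a
    (regularized) bias term. Labels must be +1 or -1.
    """
    Xa = [list(x) + [1.0] for x in X]
    n = len(Xa)
    w = [0.0] * len(Xa[0])
    for _ in range(epochs):
        grad = [lam * wj for wj in w]
        for xi, yi in zip(Xa, y):
            if yi * _dot(w, xi) < 1.0:  # inside the margin: hinge term is active
                for j, xij in enumerate(xi):
                    grad[j] -= yi * xij / n
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w


def predict(w: list[float], x: list[float]) -> int:
    return 1 if _dot(w, list(x) + [1.0]) >= 0 else -1


def leave_one_participant_out(close: list[list[float]], distant: list[list[float]]) -> float:
    """close[k] and distant[k] are participant k's 10-value MG profiles.

    Each fold trains on all other participants and tests on both vectors of
    the held-out one. Returns correct predictions / total predictions.
    """
    n_part = len(close)
    correct = 0
    for k in range(n_part):
        X: list[list[float]] = []
        y: list[int] = []
        for j in range(n_part):
            if j != k:
                X += [close[j], distant[j]]
                y += [1, -1]  # +1 = close hand, -1 = distant hand
        w = train_linear_svm(X, y)
        correct += predict(w, close[k]) == 1
        correct += predict(w, distant[k]) == -1
    return correct / (2 * n_part)
```

Accuracy is the number of correct predictions divided by 2N, as described above; comparing it with chance requires a separate test.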

To map multisensory facilitation more locally, we tested the MG values at each position against zero (Bonferroni-corrected t-tests) and performed a Hand (close vs. distant)*Position (V-P1 to V-P10) within-subject ANOVA.

To compare the shape of these multisensory facilitation maps, we first tested which function better fit the spatial pattern and, second, we cross-correlated the maps to test their shapes for isomorphism. MG values were fitted to sigmoidal and normal (Gaussian) curves, each limited to two parameters. Table 1 reports the curve-fitting formulas, implemented with the Curve Fitting Toolbox in MATLAB (version R2016b, MathWorks, Natick, MA, USA). Similar to previous work (Canzoneri et al., 2012; Serino et al., 2015), we considered a sigmoidal fit good when the data followed a descending slope, indicating a facilitation close to the body that fades away with increasing distance.

Next, we performed a cross-correlation analysis on MG values to evaluate the isomorphism of the facilitation curves for the two hand positions. Our prediction was that shifting the close-hand pattern of facilitation distally (i.e., towards the distant-hand position) should yield higher correlations, due to the overlap of the curves. We correlated the pattern of averaged MG values for all reachable stimuli (V-P1 to V-P6) in the close-hand condition with six averaged MG values from the distant-hand condition. The correlation was then recomputed for four incremental position shifts (distally, one position at a time), up to the last shift, where we correlated the V-P1 to V-P6 pattern for the close hand with the V-P5 to V-P10 pattern for the distant hand.
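The shifting procedure can be written compactly. In this hypothetical sketch, `close_mg` and `distant_mg` are group-averaged MG profiles over V-P1 to V-P10 (index 0 = V-P1); shift s correlates the close-hand values at V-P1 to V-P6 with the distant-hand values at V-P(1+s) to V-P(6+s), so s = 4 corresponds to the last comparison described above. Pearson's r is implemented directly to keep the example dependency-free.

```python
from __future__ import annotations
from math import sqrt


def pearson_r(a: list[float], b: list[float]) -> float:
    """Pearson correlation; returns NaN if either input has zero variance."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    norm = sqrt(sum((x - ma) ** 2 for x in a)) * sqrt(sum((y - mb) ** 2 for y in b))
    return cov / norm if norm > 0 else float("nan")


def shifted_correlations(close_mg: list[float], distant_mg: list[float],
                         window: int = 6, max_shift: int = 4) -> dict[int, float]:
    """r between close-hand V-P1..V-P6 and distant-hand V-P(1+s)..V-P(6+s), for s = 0..max_shift."""
    template = close_mg[:window]
    return {s: pearson_r(template, distant_mg[s:s + window])
            for s in range(max_shift + 1)}
```

The shift with the highest correlation can then be read off with `max(r, key=r.get)`; significance testing of each r is a separate step.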

Results

We tested the effect of VT stimulation over ten uniformly spaced positions, to obtain a fine-grained map of patterns of multisensory facilitation (validated in a pilot study). Participants performed accurately (90% hits, < 2% false alarms). First, the multivariate classifier was able to predict the two positions of the hand with an accuracy of 0.72 (33/46 correct classifications), with no bias for one hand position over the other (17/23 and 16/23 for the close and distant hands, respectively). This accuracy was significantly higher than chance (one-tailed binomial test p = 0.002). Hence, different patterns of multisensory facilitation were associated with different hand positions within the ARS.
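The chance-level comparison above can be reproduced with an exact one-tailed binomial computation, assuming a chance rate of 0.5 for the two-class problem. This minimal sketch uses only the standard library; for 33 correct out of 46 predictions it gives a p value of roughly 0.002, consistent with the value reported, and for the 18/50 accuracy reported for Experiment 2 it gives about 0.98.

```python
from math import comb


def binomial_p_greater(successes: int, trials: int, chance: float = 0.5) -> float:
    """Exact one-tailed p value: probability of at least `successes` correct under chance."""
    return sum(comb(trials, i) * chance ** i * (1 - chance) ** (trials - i)
               for i in range(successes, trials + 1))


if __name__ == "__main__":
    print(f"accuracy = {33 / 46:.2f}, p = {binomial_p_greater(33, 46):.4f}")
```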

A V-Position*Hand repeated-measures ANOVA (Fig. 2a) revealed a significant main effect of V-Position (F(5.85,128.71) = 3.52, p = 0.003, η2p = 0.14), further modulated by hand position, as indicated by the significant interaction (F(6.45,141.85) = 3.47, p = 0.002, η2p = 0.14). Tukey-corrected multiple t-test comparisons revealed faster responses in V-P2 than in V-P4 and in all the positions from V-P6 to V-P10 when the hand was close (all ps < 0.05 except V-P2 vs. V-P8, p = 0.054); responses were faster in V-P4 than in V-P1, V-P2, V-P3, V-P8, V-P9, and V-P10 when the hand was distant (all ps < 0.05). Critically, the MG was larger in V-P2 when the hand was close than when it was distant (p = 0.041). This pattern was reversed in V-P4, where the MG was larger when the hand was distant than when it was close (p = 0.022). No other differences were significant.
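As a consistency check, partial eta squared can be recovered from an F ratio and its degrees of freedom with the standard identity η2p = F·df1 / (F·df1 + df2). The Greenhouse-Geisser correction multiplies both degrees of freedom by the same ε, so the corrected values give the same result. Applied to the two effects above, it recovers the reported η2p = 0.14; the helper name is illustrative.

```python
def partial_eta_squared(f: float, df_effect: float, df_error: float) -> float:
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)


if __name__ == "__main__":
    # F values and (Greenhouse-Geisser-corrected) degrees of freedom reported above.
    print(round(partial_eta_squared(3.52, 5.85, 128.71), 2))  # V-Position main effect
    print(round(partial_eta_squared(3.47, 6.45, 141.85), 2))  # V-Position*Hand interaction
```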

Fig. 2 Different patterns of hand-centered multisensory facilitation within ARS. a Multisensory gain (MG) values along the ten visual positions, ranging from near to far space, for the distant (yellow) and the close (green) hand conditions. Higher values of MG represent stronger facilitation in terms of RT in the multisensory condition than in the unisensory tactile baseline (by definition, MG = 0). Error bars represent the standard error of the mean. Asterisks represent a significant difference (p < 0.05, corrected). b and c Number of trials reporting MG values greater than zero (unisensory tactile baseline) along the ten visual positions, ranging from near to far space, for the close (b) and the distant (c) hand conditions.

To identify where multisensory facilitation was significant at the single-position level, we ran a series of Bonferroni-corrected t-tests of the MG values against 0 (i.e., no facilitation). When the hand was close, the MG differed significantly from 0 in V-P2 and V-P3 (all ps < 0.05). In contrast, when the hand was distant, the MG differed significantly from 0 in V-P4 and V-P5 (all ps < 0.05) and marginally in V-P6 (p = 0.055). Figure 2 shows the number of trials reporting MG values greater than 0 with the hand close (2b) and distant (2c). The density peak shifted coherently with the position of the hand within ARS. Similar results were obtained by analyzing the delta RT for both the ANOVA and the t-tests (see Appendix – Experiment 1). Furthermore, the results of Experiment S1 show that this multisensory facilitation does not merely reflect attentional factors.

These findings highlight the hand-centered nature of the multisensory facilitation, which occurs in different locations depending on hand position. From this, one would expect (1) the facilitation to be maximal at the hand location and to decay with distance from it and (2) the bell-shaped pattern of facilitation to follow the hand when it changes position. To test the first prediction, we fitted our data with a Gaussian curve. To test the alternative hypothesis, namely that facilitation spreads over the whole ARS and decays when approaching the reachable limit, we compared the Gaussian fit with a sigmoidal fit (Canzoneri et al., 2012; Serino et al., 2015). The sigmoidal curve fit the data for only a limited number of participants (distant hand: 5/23 participants, 21.7%; close hand: 9/23 participants, 39.1%). In contrast, the Gaussian curve converged for a higher number of participants (distant hand: 14/23 participants, 60.9%; close hand: 15/23 participants, 65.2%).

The second prediction, that the bell-shaped facilitation should shift following the hand, was confirmed by estimating the position of the peak of the Gaussian curve for each hand position: with the hand close, the peak fell between V-P2 and V-P3 (2.34 ± 1.51); with the hand distant, it fell between V-P4 and V-P5 (4.15 ± 1.28). We then performed a cross-correlation analysis testing whether the curves for the two hand positions overlapped when considered in absolute terms. We reasoned that shifting the position of the hand within the ARS should produce an isomorphic facilitation around the new hand position. This implies that the maximum correlation between MG values should emerge when the close-hand curve is shifted distally, towards the distant-hand curve. We considered the first six MG values with the close hand (from V-P1 to V-P6, i.e., the reachable positions) and correlated this distribution with six MG values for the distant hand (Fig. 3). We found the maximum correlation (r = 0.94, p = 0.005) when shifting the close-hand curve distally by two positions. No other correlations were significant (all ps > 0.20).

Fig. 3 The spatial pattern of MG shifts and follows the hand within reaching space. Cross-correlation analysis obtained by distally shifting the pattern of MG values for all reachable positions with the hand close. Red colors represent higher MG values. Pearson’s r and p values are reported for all the correlations performed. The black grid highlights the only significant correlation (p < 0.05).

Discussion

The results of Experiment 1 clearly indicate that PPS and ARS are not superimposable. Yet we cannot exclude that a reachability judgment task might still capture some features of PPS. To investigate this possibility, we performed three experiments adopting this task and the same settings as Experiment 1. The results of Experiments S2 and S3 (Appendix) replicated well-established findings about the ARS, including the overestimation of its limit (Bourgeois & Coello, 2012; Carello et al., 1989). However, they failed to show any similarity with PPS, either in terms of absolute extent (ARS is larger than PPS) or in terms of position-dependent modulation (PPS is hand-centered, whereas ARS is not; see Appendix, S2 and S3). To allow a full comparison, in Experiment 2 we made the reachability judgment task as similar as possible to the tactile detection task, using the same hand positions and multisensory stimulations.

Experiment 2

Methods

Participants

Twenty-five (16 females; mean age = 24.44 years, range = 18–41; mean arm length = 78.46 ± 7.26 cm) participants matching the same criteria as Experiment 1 participated in Experiment 2.

Stimuli and apparatus

Visual and tactile stimuli were identical to those used in Experiment 1.

Design and procedure

We adapted the reachability judgment task to a multisensory setting by asking participants to perform reachability judgments while tactile stimuli were presented concurrently with the visual stimulus. Experiment 2 was meant to assess whether multisensory stimulation (in addition to having the hand visible and in the same positions as in Experiment 1) could induce hand-centered facilitation in reachability judgments and/or change the extent of the reachability limit. We employed the same settings as in Experiment 1 and applied the same tactile stimulation to the right index finger, placed in either the close or the distant position. In this case, however, the tactile stimulus was task-irrelevant. Overall, 160 randomized V and 160 randomized VT trials were presented for each hand position, administered in two blocks in randomized order. The order of hand positions was counterbalanced across participants.

Analyses

Similar to Experiment 1, we tested the classifier on the MG patterns and performed the same analyses on delta RTs and MG. The percentage of “reachable” responses per position was calculated and then fitted to sigmoidal and normal curves, as in Experiment 1, separately for each hand position and type of stimulation (unimodal visual vs. multisensory visuo-tactile). A Hand (close vs. distant)*Stimulation (visual vs. visuo-tactile)*Model (Gaussian vs. Sigmoid) ANOVA on RMSE (root mean square error) values assessed which model best fitted the data, both at the individual and at the group level. At both levels, the best-fitting model was the sigmoidal curve. We therefore analyzed the PSE and slope values in two separate repeated-measures ANOVAs with Hand (close vs. distant) and Stimulation (visual vs. visuo-tactile) as within-subject factors.
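The model comparison and PSE extraction can be sketched with a brute-force grid search. Table 1 is not reproduced here, so the two-parameter forms below are assumptions: a descending logistic for the proportion of “reachable” responses (equal to 0.5 at the PSE) and a unit-height Gaussian. Scoring each parameter pair by RMSE over a grid stands in for the least-squares routines of MATLAB's Curve Fitting Toolbox, and the grid ranges are arbitrary.

```python
from __future__ import annotations
from math import exp, sqrt
from typing import Callable, Sequence

Model = Callable[[float, float, float], float]


def sigmoid(x: float, pse: float, slope: float) -> float:
    """ASSUMED descending logistic: ~1 near the body, 0.5 at x = pse, ~0 far away."""
    return 1.0 / (1.0 + exp((x - pse) / slope))


def gaussian(x: float, mu: float, sigma: float) -> float:
    """ASSUMED unit-height Gaussian centred on mu."""
    return exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))


def rmse(model: Model, params: tuple[float, float],
         xs: Sequence[float], ys: Sequence[float]) -> float:
    return sqrt(sum((model(x, *params) - y) ** 2 for x, y in zip(xs, ys)) / len(xs))


def grid(start: float, stop: float, step: float) -> list[float]:
    """Evenly spaced values from start to stop inclusive."""
    n = int(round((stop - start) / step))
    return [start + i * step for i in range(n + 1)]


def grid_fit(model: Model, xs: Sequence[float], ys: Sequence[float],
             grid_a: Sequence[float], grid_b: Sequence[float]):
    """Return the (a, b) pair with the lowest RMSE, and that RMSE."""
    best = min(((a, b) for a in grid_a for b in grid_b),
               key=lambda p: rmse(model, p, xs, ys))
    return best, rmse(model, best, xs, ys)


if __name__ == "__main__":
    positions = list(range(1, 11))                        # V-P1..V-P10
    p_reach = [sigmoid(x, 6.7, 0.5) for x in positions]   # synthetic, noise-free data
    (pse, slope), err_sig = grid_fit(sigmoid, positions, p_reach,
                                     grid(1, 10, 0.1), grid(0.1, 3, 0.1))
    _, err_gau = grid_fit(gaussian, positions, p_reach,
                          grid(1, 10, 0.1), grid(0.1, 5, 0.1))
    print(f"PSE = {pse:.1f}, slope = {slope:.1f}, RMSE sigmoid = {err_sig:.3f}, Gaussian = {err_gau:.3f}")
```

A lower RMSE for the sigmoid identifies it as the better-fitting model for that participant and condition, and its first parameter is the PSE.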

Results

Participants were accurate (>90% hits, <2% false alarms). As in Experiment 1, we computed two vectors of MG values for each participant and applied the same data-driven classifier to discriminate the close from the distant hand. Prediction accuracy was lower than in Experiment 1 (0.36, 18/50 correct classifications) and not significantly higher than chance (one-tailed binomial test, p = 0.98), indicating that the classifier failed to distinguish between hand positions within ARS.

Moreover, the V-Position*Hand within-subject ANOVA on the MG did not reveal any significant effect (Hand: F(1,24) = 0.83, p = 0.37; V-Position: F(6.31,151.56) = 1.20, p = 0.31; Hand*V-Position: F(5.35,128.5) = 1.82, p = 0.11). However, the significant intercept (F(1,24) = 9.80, p = 0.005) confirmed the general facilitation produced by multisensory stimulation, with respect to the unisensory one. Multiple Bonferroni-corrected comparisons revealed that none of the positions presented an MG significantly different from 0 (all ps > 0.05) when the hand was close. V-P5 and V-P6 differed from 0 (all ps < 0.05) when the hand was distant. Similar results were obtained by analyzing the delta RT, both with ANOVA and with t-test (see Appendix – Experiment 2).

Reachability judgments were then fitted to sigmoidal and Gaussian curves. A within-subject ANOVA on the RMSE of these models was performed with a Model (sigmoidal vs. Gaussian)*Stimulation (visual vs. visuo-tactile)*Hand (close vs. distant) design. The sigmoidal curve provided the best fit, irrespective of stimulation type and hand position (main effect of Model: F(1,24) = 220.11, p < 0.001, η2p = 0.90). For each condition, we estimated the coefficients of the sigmoid, obtaining the PSE and the curve slope. A Hand (close vs. distant)*Stimulation (visual vs. visuo-tactile) within-subject ANOVA on PSE values revealed a main effect of stimulation type (F(1,24) = 4.38, p = 0.05, η2p = 0.15): the mean PSE was closer to the body in the unimodal visual (mean ± SE = 6.67 ± 0.17) than in the multisensory visuo-tactile condition (6.76 ± 0.18). The main effect of Hand (F(1,24) = 0.07, p = 0.79) and its interaction with Stimulation (F(1,24) = 3.49, p = 0.07) were not significant. Last, a Hand (close vs. distant)*Stimulation (visual vs. visuo-tactile) within-subject ANOVA on slope values revealed neither significant main effects (Hand: F(1,24) = 1.75, p = 0.20; Stimulation: F(1,24) = 0.35, p = 0.56) nor a significant interaction (Hand*Stimulation: F(1,24) = 0.27, p = 0.61) (Fig. 4).

Fig. 4 No hand-centered MG spatial patterns in a reachability judgment task. a Multisensory gain (MG) values along the ten positions, ranging from near to far space, for the close (green) and distant (yellow) hand conditions. Higher values of MG represent a stronger facilitation in terms of RT with respect to the unimodal visual baseline (by definition, MG = 0). Error bars represent the standard error of the mean. No significant differences between hand postures emerged. b PSE values calculated for both unimodal visual and multisensory visuo-tactile conditions for both hands. Error bars represent the standard error of the mean. Asterisks indicate a significant difference between unisensory and multisensory conditions (p < 0.05).

Discussion

We contrasted two theoretical views about PPS and ARS: one proposing that they are distinct, the other that they are the same. Our findings clearly argue against the latter, whether PPS and ARS are contrasted in terms of their spatial extent, using their respective gold-standard paradigms and measures, or in terms of their patterns of multisensory facilitation.

Owing to obvious differences between paradigms, we did not compare multisensory facilitation directly. Rather, we reasoned that, were PPS and ARS the same spatial representation, applying their typical paradigms to the same body part should yield similar results. Our visuo-tactile version of the reachability judgment task confirms previous findings on the extent and overestimation of ARS (Bootsma et al., 1992; Bourgeois & Coello, 2012; Carello et al., 1989; Coello & Iwanow, 2006), but its comparison with the PPS multisensory task yielded two main advances arguing against the PPS-ARS identity.

First, we observed that multisensory facilitation depends on hand position, peaking at the hand location and decreasing with distance from it. Notably, this near-hand facilitation effect is independent of attention orienting (see Appendix, S1 results). Thus, PPS is smaller than ARS, whether ARS is measured objectively (from V-P1 to V-P6) or subjectively (PSE). Were they superimposable, we should have observed faster RTs for all the reachable positions of visual stimulation. Both the classifier and the location-specific differences indicate instead that different spatial patterns of multisensory facilitation emerge for the close- and distant-hand positions, despite both being within the ARS limits. In addition, we found that the overestimation of ARS is not modulated by hand vision (see Appendix, Experiments S2 and S3) and is independent of hand position (Experiment 2 and Appendix, S3).

Second, our findings indicate that ARS is not hand-centered, whereas PPS is. In Experiment 2, adapting the reachability judgment task to a multisensory setting, the only significant effect was a general multisensory facilitation, spread over the ten positions tested: there was no modulation as a function of stimulus reachability or hand proximity, which, on the contrary, define PPS (Experiment 1). Therefore, ARS is not encoded in a hand-centered reference frame. Indeed, hand position was robustly classified from the distribution of MG in Experiment 1 (PPS), but not in Experiment 2 (ARS). Thus, the proximity of visual stimuli to the hand, not their reachability, predicts the increase in multisensory facilitation. Cross-correlation and univariate analyses further demonstrated that visual boosting of touch is hand-centered, following changes in hand position. In sum, here we show that (1) PPS does not cover the entire ARS, (2) ARS is not hand-centered, and (3) ARS is not susceptible to multisensory stimulation. Taken together, these results show that PPS and ARS are not superimposable. Previous neuroimaging (Brozzoli et al., 2011, 2012) and behavioral studies (di Pellegrino et al., 1997; Farnè et al., 2005; Serino et al., 2015) reported body part-centered multisensory facilitation within PPS. Here we reveal that the facilitation is isomorphically “anchored” to the hand: present in close positions when the hand is close, it shifts to farther positions when the hand is distant, without changing its “shape.” Notably, the facilitation pattern is well fitted by a Gaussian curve, similar to what is observed in non-human primate studies (Graziano et al., 1997) and in line with the idea of PPS as a “field,” gradually decaying around the hand (Bufacchi & Iannetti, 2018).

The amount of multisensory facilitation observed in Experiment 1 for the position closest to the trunk (V-P1, thus clearly within ARS) is also noteworthy. First, it is lower than that observed at the close-hand PPS peak (between V-P2 and V-P3) and, second, it is comparable to that obtained for all the out-of-reach positions (V-P7 to V-P10), irrespective of hand distance.

These findings are consistent with what one would predict from neurophysiological data. Studies on non-human primates requiring reaching movements performed with the upper limb found activations involving M1, PMv and PMd, parietal areas V6A and 5, and the parietal reach region (Buneo et al., 2016; Caminiti et al., 1990; Georgopoulos et al., 1982; Kalaska et al., 1983; Mushiake et al., 1997; Pesaran et al., 2006). In humans, ARS tasks require judging stimulus reachability (Carello et al., 1989; Coello et al., 2008; Coello & Iwanow, 2006; Rochat & Wraga, 1997) or performing reaching movements (Battaglia-Mayer et al., 2000; Caminiti et al., 1990, 1991; Gallivan et al., 2009). Brain activations underlying these tasks encompass M1, PMd, supplementary motor area, posterior parietal cortex, and V6A, as well as the anterior and medial IPS (Lara et al., 2018; Monaco et al., 2011; Pitzalis et al., 2013; see Filimon, 2010, for review). Therefore, despite some overlap in their respective fronto-parietal circuitry, PPS and ARS networks do involve specific and distinct neuroanatomical regions, in keeping with the behavioral differences reported here.

Unlike previous studies employing looming stimuli (Canzoneri et al., 2012; Finisguerra et al., 2015; Noel et al., 2015a, 2015b; Serino et al., 2015; but see Noel et al., 2020), we used “static” visual stimuli flashed together with tactile ones to avoid inflating estimates of multisensory facilitation. Looming stimuli with predictable arrival times induce foreperiod effects. Although these effects are not solely responsible for the boosting of touch, they may lead to overestimating the magnitude of the facilitation (Hobeika et al., 2020; Kandula et al., 2017). Most importantly, the findings of the attentional control experiment provide the first behavioral evidence that multisensory near-hand effects can be appropriately interpreted within the theoretical framework of peripersonal space coding. This study therefore offers a protocol for fine-grained mapping of PPS that is free from the biases discussed by Holmes et al. (2020).

In conclusion, this study provides an empirical and theoretical distinction between PPS and ARS. The two differ in both spatial extent and behavioral features, which warns against conflating them. A precise assessment of PPS is crucial because several researchers exploit its body part-centered nature as an empirical entry point to the study of the bodily self (Blanke et al., 2015; Makin et al., 2008; Noel et al., 2015a, 2015b). Moreover, our results have direct implications for the study of interpersonal space, defined as the distance that people maintain from others during social interactions. Several studies drew conclusions about interpersonal space using reachability tasks (Bogdanova et al., 2021; Cartaud et al., 2018; Iachini et al., 2014). The present findings make clear that such tasks do not warrant conclusions about PPS or about its relationship with interpersonal space. Instead, they highlight the need to investigate potential interactions between PPS and ARS. Such work could help to tune rehabilitation protocols or brain-machine interface algorithms for the sensorimotor control of prosthetic arms, for which multisensory integration appears crucial (Makin et al., 2007; Suminski et al., 2009, 2010).

References

Battaglia-Mayer, A., Ferraina, S., Mitsuda, T., Marconi, B., Genovesio, A., Onorati, P., Lacquaniti, F., & Caminiti, R. (2000). Early coding of reaching in the parietooccipital cortex. Journal of Neurophysiology, 83(4), 2374–2391. https://doi.org/10.1152/jn.2000.83.4.2374

Blanke, O., Slater, M., & Serino, A. (2015). Behavioral, Neural, and Computational Principles of Bodily Self-Consciousness. Neuron, 88(1), 145–166. https://doi.org/10.1016/j.neuron.2015.09.029

Bogdanova, O. V., Bogdanov, V. B., Dureux, A., Farnè, A., & Hadj-Bouziane, F. (2021). The peripersonal space in a social world. Cortex. https://doi.org/10.1016/j.cortex.2021.05.005

Bootsma, R. J., Bakker, F. C., Van Snippemberg, F. E. J., & Tdlohreg, C. W. (1992). The effects of anxiety on perceiving the reachability of passing objects. Ecological Psychology, 4(1), 1–16.

Bourgeois, J., & Coello, Y. (2012). Effect of visuomotor calibration and uncertainty on the perception of peripersonal space. Attention, Perception, & Psychophysics, 74, 1268–1283. https://doi.org/10.3758/s13414-012-0316-x

Brozzoli, C., Cardinali, L., Pavani, F., & Farnè, A. (2010). Action-specific remapping of peripersonal space. Neuropsychologia, 48(3), 796–802. https://doi.org/10.1016/j.neuropsychologia.2009.10.009

Brozzoli, C., Ehrsson, H. H., & Farnè, A. (2014). Multisensory representation of the space near the hand: From perception to action and interindividual interactions. Neuroscientist, 20(2), 122–135. https://doi.org/10.1177/1073858413511153

Brozzoli, C., Gentile, G., & Ehrsson, H. H. (2012). That’s Near My Hand! Parietal and Premotor Coding of Hand-Centered Space Contributes to Localization and Self-Attribution of the Hand. Journal of Neuroscience, 32(42), 14573–14582. https://doi.org/10.1523/JNEUROSCI.2660-12.2012

Brozzoli, C., Gentile, G., Petkova, V. I., & Ehrsson, H. H. (2011). fMRI Adaptation Reveals a Cortical Mechanism for the Coding of Space Near the Hand. Journal of Neuroscience, 31(24), 9023–9031. https://doi.org/10.1523/JNEUROSCI.1172-11.2011

Brozzoli, C., Pavani, F., Urquizar, C., Cardinali, L., & Farnè, A. (2009). Grasping actions remap peripersonal space. NeuroReport, 20(10), 913–917. https://doi.org/10.1097/WNR.0b013e32832c0b9b

Bufacchi, R. J., & Iannetti, G. D. (2018). An action field theory of peripersonal space. Trends in Cognitive Sciences, 22(12), 1076–1090.

Buneo, C. A., Jarvis, M. R., Batista, A. P., & Andersen, R. A. (2002). Direct visuomotor transformations for reaching. Nature, 416(6881), 632. https://doi.org/10.1038/416632a

Caminiti, R., Johnson, P. B., & Urbano, A. (1990). Making arm movements within different parts of space: dynamic aspects in the primate motor cortex. Journal of Neuroscience, 10(7), 2039–2058. https://doi.org/10.1523/JNEUROSCI.10-07-02039.1990

Caminiti, R., Johnson, P. B., Galli, C., Ferraina, S., & Burnod, Y. (1991). Making arm movements within different parts of space: the premotor and motor cortical representation of a coordinate system for reaching to visual targets. Journal of Neuroscience, 11(5), 1182–1197. https://doi.org/10.1523/JNEUROSCI.11-05-01182.1991

Canzoneri, E., Magosso, E., & Serino, A. (2012). Dynamic Sounds Capture the Boundaries of Peripersonal Space Representation in Humans. PLoS ONE, 7(9), e44306. https://doi.org/10.1371/journal.pone.0044306

Carello, C., Grosofsky, A., Reichel, F. D., Solomon, Y. H., & Turvey, M. T. (1989). Visually Perceiving What is Reachable. Ecological Psychology, 1(1), 27–54. https://doi.org/10.1207/s15326969eco0101

Cartaud, A., Ruggiero, G., Ott, L., Iachini, T., & Coello, Y. (2018). Physiological Response to Facial Expressions in Peripersonal Space Determines Interpersonal Distance in a Social Interaction Context. Frontiers in Psychology, 9, 657. https://doi.org/10.3389/fpsyg.2018.00657

Coello, Y., Bartolo, A., Amiri, B., Devanne, H., Houdayer, E., & Derambure, P. (2008). Perceiving what is reachable depends on motor representations: Evidence from a transcranial magnetic stimulation study. PLoS ONE, 3(8), e2862. https://doi.org/10.1371/journal.pone.0002862

Coello, Y., & Iwanow, O. (2006). Effect of structuring the workspace on cognitive and sensorimotor distance estimation: No dissociation between perception and action. Perception and Psychophysics, 68(2), 278–289. https://doi.org/10.3758/BF03193675

Colby, C. L., Duhamel, J. R., & Goldberg, M. E. (1993). Ventral intraparietal area of the macaque: anatomic location and visual response properties. Journal of Neurophysiology, 69(3), 902–914. https://doi.org/10.1152/jn.1993.69.3.902

de Haan, A. M., Smit, M., Van der Stigchel, S., & Dijkerman, H. C. (2016). Approaching threat modulates visuotactile interactions in peripersonal space. Experimental Brain Research, 234(7), 1875–1884. https://doi.org/10.1007/s00221-016-4571-2

de Vignemont, F., & Iannetti, G. D. (2014). How many peripersonal spaces? Neuropsychologia, 70, 327–334. https://doi.org/10.1016/j.neuropsychologia.2014.11.018

Desmurget, M., Epstein, C. M., Turner, R. S., Prablanc, C., Alexander, G. E., & Grafton, S. T. (1999). Role of the posterior parietal cortex in updating reaching movements to a visual target. Nature Neuroscience, 2(6), 563–567.

di Pellegrino, G., Làdavas, E., & Farnè, A. (1997). Seeing where your hands are. Nature, 388(6644), 730–730.

Farnè, A., Demattè, M. L., & Làdavas, E. (2005). Neuropsychological evidence of modular organization of the near peripersonal space. Neurology, 65(11), 1754–1758. https://doi.org/10.1212/01.wnl.0000187121.30480.09

Filimon, F. (2010). Human cortical control of hand movements: Parietofrontal networks for reaching, grasping, and pointing. The Neuroscientist, 16(4), 388–407. https://doi.org/10.1177/1073858410375468

Finisguerra, A., Canzoneri, E., Serino, A., Pozzo, T., & Bassolino, M. (2015). Moving sounds within the peripersonal space modulate the motor system. Neuropsychologia, 70, 421–428. https://doi.org/10.1016/j.neuropsychologia.2014.09.043

Gallivan, J. P., Cavina-Pratesi, C., & Culham, J. C. (2009). Is that within reach? fMRI reveals that the human superior parieto-occipital cortex encodes objects reachable by the hand. Journal of Neuroscience, 29(14), 4381–4391. https://doi.org/10.1523/jneurosci.0377-09.2009

Georgopoulos, A. P., Kalaska, J. F., Caminiti, R., & Massey, J. T. (1982). On the relations between the direction of two-dimensional arm movements and cell discharge in primate motor cortex. The Journal of Neuroscience, 2(11), 1527–1537.

Graziano, M. S. A., & Cooke, D. F. (2006). Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia, 44(13), 2621–2635. https://doi.org/10.1016/j.neuropsychologia.2005.09.011

Graziano, M. S. A., & Gross, C. (1993). A bimodal map of space: somatosensory receptive fields in the macaque putamen with corresponding visual receptive fields. Experimental Brain Research, 97(1), 96–109. https://doi.org/10.1007/BF00228820

Graziano, M. S. A., Hu, X. T., & Gross, C. G. (1997). Visuospatial properties of ventral premotor cortex. Journal of Neurophysiology, 77(5), 2268–2292. https://doi.org/10.1152/jn.1997.77.5.2268

Graziano, M. S. A., Yap, G., & Gross, C. (1994). Coding of visual space by premotor neurons. Science, 266(5187), 1054–1057. https://doi.org/10.1126/science.7973661

Hobeika, L., Taffou, M., Carpentier, T., Warusfel, O., & Viaud-Delmon, I. (2020). Capturing the dynamics of peripersonal space by integrating expectancy effects and sound propagation properties. Journal of Neuroscience Methods, 332, 108534. https://doi.org/10.1016/j.jneumeth.2019.108534

Holmes, N. P., Martin, D., Mitchell, W., Noorani, Z., & Thorne, A. (2020). Do sounds near the hand facilitate tactile reaction times? Four experiments and a meta-analysis provide mixed support and suggest a small effect size. Experimental Brain Research, 1–15. https://doi.org/10.1007/s00221-020-05771-5

Iachini, T., Coello, Y., Frassinetti, F., & Ruggiero, G. (2014). Body space in social interactions: A comparison of reaching and comfort distance in immersive virtual reality. PLoS ONE, 9(11), e111511. https://doi.org/10.1371/journal.pone.0111511

Kalaska, J. F., Caminiti, R., & Georgopoulos, A. P. (1983). Cortical mechanism related to the direction of two-dimensional arm movements: relations in parietal area 5 and comparisons with motor cortex. Experimental Brain Research, 51, 247–260.

Kandula, M., Van der Stoep, N., Hofman, D., & Dijkerman, H. C. (2017). On the contribution of overt tactile expectations to visuo-tactile interactions within the peripersonal space. Experimental Brain Research, 235(8), 2511–2522. https://doi.org/10.1007/s00221-017-4965-9

Làdavas, E., & Farnè, A. (2004). Visuo-tactile representation of near-the-body space. Journal of Physiology Paris, 98(1–3), 161–170. https://doi.org/10.1016/j.jphysparis.2004.03.007

Lara, A. H., Cunningham, J. P., & Churchland, M. M. (2018). Different population dynamics in the supplementary motor area and motor cortex during reaching. Nature Communications, 9(1). https://doi.org/10.1038/s41467-018-05146-z

Makin, T. R., de Vignemont, F., & Faisal, A. A. (2017). Neurocognitive considerations to the embodiment of technology. Nature Biomedical Engineering, 1(1), 1–3.

Makin, T. R., Holmes, N. P., & Ehrsson, H. H. (2008). On the other hand: Dummy hands and peripersonal space. Behavioural Brain Research, 191, 1–10. https://doi.org/10.1016/j.bbr.2008.02.041

Makin, T. R., Holmes, N. P., & Zohary, E. (2007). Is That Near My Hand? Multisensory Representation of Peripersonal Space in Human Intraparietal Sulcus. Journal of Neuroscience, 27(4), 731–740. https://doi.org/10.1523/JNEUROSCI.3653-06.2007

Monaco, S., Cavina-Pratesi, C., Sedda, A., Fattori, P., Galletti, C., & Culham, J. C. (2011). Functional magnetic resonance adaptation reveals the involvement of the dorsomedial stream in hand orientation for grasping. Journal of Neurophysiology, 106(5), 2248–2263. https://doi.org/10.1152/jn.01069.2010

Mushiake, H., Tanatsugu, Y., & Tanji, J. (1997). Neuronal Activity in the Ventral Part of Premotor Cortex During Target-Reach Movement is Modulated by Direction of Gaze. Journal of Neurophysiology, 78, 567–571.

Noel, J. P., Bertoni, T., Terrebonne, E., Pellencin, E., Herbelin, B., Cascio, C., ... & Serino, A. (2020). Rapid Recalibration of Peri-Personal Space: Psychophysical, Electrophysiological, and Neural Network Modeling Evidence. Cerebral Cortex, 30(9), 5088–5106.

Noel, J. P., Grivaz, P., Marmaroli, P., Lissek, H., Blanke, O., & Serino, A. (2015a). Full body action remapping of peripersonal space: The case of walking. Neuropsychologia, 70, 375–384. https://doi.org/10.1016/j.neuropsychologia.2014.08.030

Noel, J. P., Pfeiffer, C., Blanke, O., & Serino, A. (2015b). Peripersonal space as the space of the bodily self. Cognition, 144, 49–57. https://doi.org/10.1016/j.cognition.2015.07.012

Patané, I., Cardinali, L., Salemme, R., Pavani, F., & Brozzoli, C. (2019). Action planning modulates peripersonal space. Journal of Cognitive Neuroscience, 31(8), 1141–1154.

Pesaran, B., Nelson, M. J., & Andersen, R. A. (2006). Dorsal premotor neurons encode the relative position of the hand, eye, and goal during reach planning. Neuron, 51, 125–134. https://doi.org/10.1016/j.neuron.2006.05.025

Pitzalis, S., Sereno, M. I., Committeri, G., Fattori, P., Galati, G., Tosoni, A., & Galletti, C. (2013). The human homologue of macaque area V6A. NeuroImage, 82, 517–530. https://doi.org/10.1016/j.neuroimage.2013.06.026

Rizzolatti, G., Scandolara, C., Matelli, M., & Gentilucci, M. (1981a). Afferent properties of periarcuate neurons in macaque monkeys. I. Somatosensory responses. Behavioural Brain Research, 2(2), 125–146. https://doi.org/10.1016/0166-4328(81)90052-8

Rizzolatti, G., Scandolara, C., Matelli, M., & Gentilucci, M. (1981b). Afferent properties of periarcuate neurons in macaque monkeys. II. Visual responses. Behavioural Brain Research, 2(2), 147–163. https://doi.org/10.1016/0166-4328(81)90053-X

Rochat, P., & Wraga, M. (1997). An Account of the Systematic Error in Judging What Is Reachable. Journal of Experimental Psychology: Human Perception and Performance, 23(1), 199–212. https://doi.org/10.1037/0096-1523.23.1.199

Serino, A., Noel, J. P., Galli, G., Canzoneri, E., Marmaroli, P., Lissek, H., & Blanke, O. (2015). Body part-centered and full body-centered peripersonal space representations. Scientific Reports, 5, 1–14. https://doi.org/10.1038/srep18603

Spence, C., Pavani, F., & Driver, J. (2004). Spatial constraints on visual-tactile cross-modal distractor congruency effects. Cognitive, Affective and Behavioral Neuroscience, 4(2), 148–169. https://doi.org/10.3758/CABN.4.2.148

Suminski, A. J., Tkach, D. C., Fagg, A. H., & Hatsopoulos, N. G. (2010). Incorporating feedback from multiple sensory modalities enhances brain – Machine interface control. The Journal of Neuroscience, 30(50), 16777–16787. https://doi.org/10.1523/JNEUROSCI.3967-10.2010

Suminski, A. J., Tkach, D. C., & Hatsopoulos, N. G. (2009). Exploiting multiple sensory modalities in brain-machine interfaces. Neural Networks, 22(9), 1224–1234. https://doi.org/10.1016/j.neunet.2009.05.006

Vapnik, V. (1995). The nature of statistical learning theory. Springer-Verlag.

Vieira, J. B., Pierzchajlo, S. R., & Mitchell, D. G. V. (2020). Neural correlates of social and non-social personal space intrusions: Role of defensive and peripersonal space systems in interpersonal distance regulation. Social Neuroscience, 15(1), 36–51. https://doi.org/10.1080/17470919.2019.1626763

Acknowledgements

This work was supported by the Labex/Idex (ANR-11-LABX-0042) and by grants from the James S. McDonnell Foundation, ANR-16-CE28-0015-01, and ANR-10-IBHU-0003 to A.F. E.B. was supported by the European Union's Horizon 2020 research and innovation program (Marie Curie Actions) under grant agreement MSCA-IF-2016-746154 and by a grant from MIUR (Departments of Excellence DM 11/05/2017 n. 262) to the Department of General Psychology, University of Padova. C.B. was supported by a grant from the Swedish Research Council (2015-01717) and ANR-JC (ANR-16-CE28-0008-01). We thank S. Alouche, J.L. Borach, S. Chinel, A. Fargeot, and S. Terrones for administrative and informatics support, and F. Volland for customizing the setup.

Ethics declarations

Conflict of Interest

The authors declare no competing financial interests.

Additional information

Open Practices Statements

The data and materials for all experiments are available online at https://osf.io/8dnga/?view_only=b0a4a90d5a8a43bb8ab787b1adf7d5c0

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

ESM 1

(DOCX 1324 kb)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zanini, A., Patané, I., Blini, E. et al. Peripersonal and reaching space differ: Evidence from their spatial extent and multisensory facilitation pattern. Psychon Bull Rev 28, 1894–1905 (2021). https://doi.org/10.3758/s13423-021-01942-9

DOI: https://doi.org/10.3758/s13423-021-01942-9