Abstract

We deal with the orbit determination problem for hyperbolic maps. The problem consists in determining the initial conditions of an orbit and, eventually, other parameters of the model from some observations. We study the behaviour of the confidence region in the case of simultaneous increase in the number of observations and the time span over which they are performed. More precisely, we describe the geometry of the confidence region for the solution, distinguishing whether a parameter is added to the estimate of the initial conditions or not. We prove that the inclusion of a dynamical parameter causes a change in the rate of decay of the uncertainties, as suggested by some known numerical evidences.

Similar content being viewed by others

1 Introduction

This paper is concerned with the behaviour of the confidence region coming from an orbit determination process as the number of observation increases.

We recall that orbit determination consists of recovering information on some parameters (initial conditions or dynamical parameters) of a model given some observations and goes back to Gauss (1809). The solution, called nominal solution, relies on the least squares algorithm, and the confidence region summarises the uncertainties coming from the intrinsic errors in the observational process.

The problem under investigation is suggested by the numerical results in Serra et al. (2018), Spoto and Milani (2016) where some estimates are given for the cases of a map depending on a parameter and presenting both ordered and chaotic zones: the Chirikov standard map (Chirikov 1979) (see also Siegel and Moser 1971; Celletti et al. 2010 for its importance in Celestial Mechanics). The authors of Serra et al. (2018), Spoto and Milani (2016) constructed the observations by adding some noise to a true orbit of the map. Then, they set up an orbit determination process to recover the true orbit and observed the decay of the uncertainties as the number of observations grows. The experiments show that the result crucially depends on the dynamics and on whether the parameter is included in the orbit determination process or not. More precisely, if the observations come from an ordered zone (an invariant curve), then the uncertainties decrease polynomially both if the parameter is included or not. This behaviour was analytically proved to be true at least in the case where only the initial conditions are estimated in Marò (2020), using KAM techniques.

The numerical results coming from the chaotic case are more delicate. From the practical point of view, the problem of the so-called computability horizon occurs. This prevents orbit determination from being performed if the time span of the observations is too large and more sophisticated techniques must be employed, such as the multi-arc approach (Serra et al. 2018). Moreover, at least until the computability horizon, the uncertainties on the sole initial conditions decrease exponentially, while a polynomial decay is observed when the parameter is included in the orbit determination process.

In this paper, we give an analytical proof of this last result. We will consider a class of hyperbolic maps depending on a parameter. The existence of chaotic orbits, in the future or in the past, for these systems is given by the fact that all the Lyapunov exponents are supposed to be nonzero. Despite the above-described practical problem in computing a solution, we will always suppose that the least squares algorithm converges and gives a nominal solution. Hence, the estimates on the decay of the uncertainty are given asymptotically as the number of observations goes to infinity. To state the result, we recall that the confidence region is an ellipsoid in the space of the fit parameters and the uncertainties strictly depend on the size of the axes of such an ellipsoid.

We will prove that, in the case of estimating only the initial conditions, there exists a full measure set of possible nominal solutions for which all the axes of the related confidence ellipsoid decay exponentially. On the other hand, if the parameter is included in the orbit determination process, then there exists a full measure set of initial conditions and parameters for which the related confidence ellipsoid has an axis that decays strictly slower than exponentially.

Our analytical results are consistent with the numerical results in Serra et al. (2018), Spoto and Milani (2016). In the case of estimating the parameter, we cannot prove polynomial decay of the uncertainties; however, we will show that this occurs for a class of affine maps depending on a parameter. Perturbed automorphisms of the torus are representative of this class, including the famous Arnold’s Cat Map.

We conclude stressing the fact that chaotic orbit determination is a challenge for both space mission and impact monitoring. Actually, the accurate determination of orbits of chaotic NEOs is essential in the impact monitoring activity (Milani and Valsecchi 1999). Another interesting case is given by satellites. When their operating life finishes, they are left without control in safe orbits, governed only by the natural forces. It has been noticed that many parts of this region are chaotic (Rosengren et al. 2015), due to the perturbed motion of the Moon. It is then important to track and determine orbits of non-operating satellites that could crash into operating ones. Finally, the targets of many space missions include the determination of some unknown parameter. Typical examples are the ESA/JAXA BepiColombo mission to Mercury, the NASA JUNO and ESA JUICE missions to Jupiter that are performed in a chaotic environment (Lari and Milani 2019).

Moreover, these results are related to a conjecture posed by Wisdom in 1987 (see Wisdom 1987). Discussing the chaotic rotation state of Hyperion, it was proposed that “the knowledge gained from measurements on a chaotic dynamical system grows exponentially with the time span covered by the observations”. In particular, this was related to the information on dynamical parameters like the moments of inertia ratios.

The paper is organised as follows. In Sect. 2, we adapt the general description of the problem given in Marò (2020) to our situation and state our main results. Moreover, we briefly discuss our results as compared with the numerical simulations in Serra et al. (2018), Spoto and Milani (2016). Section 3 is dedicated to the proof of the result concerning the estimation of the sole initial conditions, while Sect. 4 is dedicated to the proof of the results in the case the parameter is included. Section 5 is dedicated to the study of a concrete example, and our conclusions are given in Sect. 6.

2 Statement of the problem and main results

2.1 Notation and preliminaries on Lyapunov exponents

The main tool of our approach to the orbit determination problem is the notion of Lyapunov exponents of a differentiable map, of which we now recall the definition and the main properties as stated in the Oseledets Theorem. Let \(f:X\rightarrow X\) be a diffeomorphism of a d-dimensional differentiable Riemannian manifold X endowed with a \(\sigma \)-algebra \(\mathcal {B}\) and an f-invariant probability measure \(\mu \). We recall that a measure is f-invariant if \(\mu (E) =\mu (f^{-1}(E))\) for all \(E\in \mathcal {B}\). We denote by F(x) the Jacobian matrix of f and, for \(n\in \mathbb {Z}\),by \(F^n(x)\) the Jacobian matrix of \(f^n\) which, by the chain rule, can be written as

Let us now introduce the Lyapunov exponents of f. Suppose that

Given \(x\in X\) and a vector \(v\in T_xX\), let us define

If \({\overline{\gamma }}_\pm (x,v) ={\underline{\gamma }}_\pm (x,v)\), we use the notation

In principle, the limits \(\gamma _\pm (x,v)\) depend on x and v. The following version of the classical theorem by Oseledets (1968, 1968) (see also Raghunathan 1979; Ruelle 1979) gives an answer to this problem. The interested reader can find more details on Lyapunov exponents in Barreira and Pesin (2013).

Theorem 1

(Oseledets) Let \(f:X\rightarrow X\) be a measure-preserving diffeomorphism of a d-dimensional differentiable Riemannian manifold X endowed with a \(\sigma \)-algebra \(\mathcal {B}\) and an f-invariant probability measure \(\mu \), and assume that the Jacobian matrix F(x) satisfies (2). Then, for \(\mu \)-almost every \(x\in X\) there exist numbers

with \(r(x)\le d\) and a decomposition

such that

-

(i)

for every \(v\in E_i(x)\), the \(\limsup \) and the \(\liminf \) in (3) coincide and \(\gamma _+(x,v) = -\gamma _-(x,v) = \gamma _i(x)\);

-

(ii)

the functions \(x\mapsto r(x),\gamma _i(x),E_i(x)\) are measurable and f-invariant;

-

(iii)

If f is ergodic, then \(r(x),\gamma _i(x),E_i(x)\) are \(\mu \)-a.e. constant;

-

(iv)

for \(\mu \)-a.e. \(x\in X\), the matrix

$$\begin{aligned} \Lambda (x) := \lim _{n\rightarrow \infty }\left[ (F^n(x))^TF^n(x)\right] ^{1/2n} \end{aligned}$$exists and \(\exp \gamma _1(x),\dots ,\exp \gamma _{r(x)}(x)\) are its eigenvalues.

Definition 1

The numbers \(\gamma _1(x),\dots ,\gamma _{r(x)}(x)\) given in Theorem 1 are the Lyapunov exponents of f at x, and for each \(\gamma _i(x)\), \(i=1,\dots ,r(x)\), the dimension of the corresponding vector space \(E_i(x)\) is called the multiplicity of the exponent.

Without loss of generality, one can assume that the diffeomorphism f is ergodic, so that the Lyapunov exponents and their multiplicities do not depend on x. The case of non-ergodic maps can be treated by the standard procedure of ergodic decomposition, obtaining similar results depending on the ergodic component to which the initial condition x belongs.

Definition 2

A diffeomorphism \(f:X\rightarrow X\) is called hyperbolic if it has no vanishing Lyapunov exponents.

In the particular case of Hamiltonian maps, or of maps preserving the volume form of a manifold, one can easily deduce that hyperbolic maps necessarily have positive Lyapunov exponents, so are chaotic. Moreover, by Theorem 1-(i), it follows that for a hyperbolic map either f or \(f^{-1}\) is chaotic. This is an important remark for our main results.

In the following, we consider diffeomorphisms depending on a parameter \(k\in K\subset \mathbb {R}\) and use the notation \(f_k:X\rightarrow X\). We also assume that the dependence on k is differentiable and that the probability measure \(\mu \) of the manifold X is \(f_k\)-invariant and the map is ergodic for all \(k\in K\). The Jacobian matrix of \(f_k^n\) with respect to x for \(n\in \mathbb {Z}\) is denoted by \(F^n_k(x)\) and can be written as in (1). Assuming that (2) is satisfied, we can apply Oseledets Theorem to \(f_k\) for all \(k\in K\) and find its Lyapunov exponents at \(\mu \)-a.e. \(x\in X\). Since the map \(f_k\) is differentiable also with respect to the parameter k, we can also consider the Jacobian matrix of \(f_k\) with respect to (x, k) which is denoted by \({\tilde{F}}^n_k(x)\) for \(n\in \mathbb {Z}\).

For the sake of simplicity, from now on we will only consider the case when X is an open domain of \(\mathbb {R}^d\).This restriction has the only purpose of simplifying the notations and the exposition. The results can be readily extended to the general setting by using the metric of a Riemannian manifold.

Being \(f_k\) a diffeomophism of a domain in \(\mathbb {R}^d\), we use the notation \(f_k(x) = ((f_k)_1(x),\dots ,(f_k)_d(x))\) for its components, so that its Jacobian matrix with respect to x reads

Analogously, the Jacobian matrix \({\tilde{F}}_k(x)\)reads

and we note that, for \(n\in \mathbb {Z}\), the matrix\({\tilde{F}}^n_k(x)\) can be written as

2.2 Statement of the problem

A general statement of the problem can be found in Marò (2020). For the sake of completeness, here we recall and adapt it to the present notations.

Consider a map \(f_k\) as in the previous section. Given an initial condition x, its orbit is completely determined by the iterations \(f_k^n(x)\) for \(n\in \mathbb {Z}\). Instead, let’s suppose that we have been observing the evolution of the state of a system modelled by \(f_k\) and that we have got the observations \((\bar{X}_n)\) for \(|n|\le N\). Following Milani and Gronchi (2010), we set up an orbit determination process to determine the unknown parameters. We consider two different scenarios.

-

(A)

Only the initial conditions x are unknown.

-

(B)

Both the initial conditions x and the parameter k are unknown.

In both cases, we search for the values of the parameters that best approximate, in the least squares sense, the given observations. We first define the residuals as

We stress that, even if the expressions coincide, in case (A) the residuals are defined in terms of a fixed k, whereas in case (B) the value of k is to be determined.

Subsequently, we call the least squares solution \(x_0\) in case (A), or \((x_0,k_0)\) in case (B), the (local) minimiser of the target function

We will not be concerned with the existence and computation of the minima. This is a very delicate task, solved via iterative schemes such as the Gauss–Newton algorithm and the differential corrections. These algorithms crucially depend on the choice of the initial conditions. See Gronchi et al. (2015), Ma et al. (2018) for some recent results on this topic for the asteroid and space debris cases. For the case of chaotic maps that we study in this paper, the problem is considered in Serra et al. (2018), Spoto and Milani (2016) where computational problems that occur for large N are treated with advanced techniques. In the following of this paper, we assume that the least squares solution \(x_0\), or \((x_0,k_0)\), exists and we refer to it as the nominal solutions.

In general, the observations \((\bar{X}_n)\) contain errors; hence, values of x, or of (x, k), that make the target function slightly bigger than the minimum value \(Q_k(x_0)\), or \({\tilde{Q}}(x_0,k_0)\), are acceptable. This leads to the definition of the confidence region as

where \(\sigma >0\) is an empirical parameter chosen depending on statistical properties and bounds the acceptable errors; the value of \(\sigma \) is irrelevant for our purposes, hence in the next sections we will set \(\sigma =1\). Expanding the target functions \(Q_k(x)\) and \({\tilde{Q}}(x,k)\) at the corresponding nominal solution up to second order, we get, using the notation introduced in (1) and (5),

and

Under the hypothesis that the residuals corresponding to the nominal solution are small, we can neglect the terms \(\xi _{n,k}^T(x_0)\) and \({\tilde{\xi }}_{n}^T(x_0,k_0)\). Then, we define the normal matrices as

and the associated covariance matrices as

Note that the matrices \(C_{N,k}(x)\) and \(\Gamma _{N,k}(x)\) defined for case (A) are \(d\times d\), while the matrices \({\tilde{C}}_{N}(x,k)\) and \({\tilde{\Gamma }}_{N}(x,k)\) defined for case (B) are \((d+1)\times (d+1)\). Moreover, the normal matrices are symmetric and positive definite since \(f_k\) is a diffeomorphism and the operators \(F_k(x)\) and \({\tilde{F}}_k(x)\) have maximum rank. Hence, the confidence regions can be approximated by the confidence ellipsoids given by

for case (A) and by

for case (B). The covariance matrices \(\Gamma _{N,k}(x)\) and \({\tilde{\Gamma }}_{N}(x,k)\) describe the corresponding confidence ellipsoids \(\mathcal {E}_{N,k}\) and \(\tilde{\mathcal {E}}_{N}\) since the axes of the ellipsoids are proportional to the square root of the eigenvalues of the corresponding matrix and are directed along the corresponding eigenvectors. Since the matrix \(\Gamma _{N,k}(x)\) is positive definite, its eigenvalues are all real and positive and we denote them by

Analogously, we denote by

the eigenvalues of \({\tilde{\Gamma }}_{N}(x,k)\).

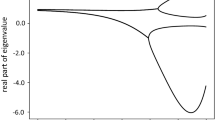

The regions \(\mathcal {E}\) represent the uncertainty of the nominal solution: the values inside \(\mathcal {E}\) are acceptable and the projections of \(\mathcal {E}\) on the axes represent the (marginal) uncertainties of the coordinates. See Fig. 1.

We remark that the normal and covariance matrices also have a probabilistic interpretation, see Milani and Gronchi (2010).

From the point of view of the applications (e.g. impact monitoring Milani and Valsecchi 1999), it is of fundamental importance to know the shape and the size of the confidence ellipsoid \(\mathcal {E}\). Hence, the question that we here address, stated in a broad sense, is the following:

Problem 1

Given a map \(f_k\) as in Sect. 2.1 and a nominal solution of the associated orbit determination process, describe the confidence ellipsoids for large N in cases (A) and (B).

Remark 1

The solution of the problem passes through the computation of the eigenvalues of the covariance matrices for large N. Note that they crucially depend on the dynamics, since we have to compute the linearisation of the system along an orbit.

2.3 Main results

In this paper, we consider Problem 1 for hyperbolic maps. We now state and comment the results, giving the proofs in Sects. 3 and 4.

For all \(k\in K\subset \mathbb {R}\), let \(f_k :X\rightarrow X\) be an ergodic hyperbolic diffeomorphism of an open domain \(X\subset \mathbb {R}^d\) with \(f_k\)-invariant probability measure \(\mu \), and assume that \(f_k\) satisfies (2).

For case (A), we have the following result

Theorem 2

Let \(\gamma _1,\dots ,\gamma _r\) be the Lyapunov exponents of \(f_k\), and let

For \(\mu \)-almost every \(x\in X\), the eigenvalues \(\lambda _{N,k}^{(i)}(x)\) of \(\Gamma _{N,k}(x)\) satisfy

for every \(i=1,\dots ,d\).

Theorem 2 shows that the axes of the confidence ellipsoid defined in (9) shrink exponentially fast with the number of observation. In fact, the lengths of the axes of \(\mathcal {E}_{N,k}(x)\) are the square roots of the eigenvalues of the corresponding covariance matrix \(\Gamma _{N,k}(x)\). Hence, the exponential rate of decay of the uncertainties is controlled by the Lyapunov exponents of the orbit corresponding to the nominal solution.

We now show how the result changes in case (B). We prove the following

Theorem 3

For \(\mu \)-almost every \(x\in X\) the largest eigenvalue \({\tilde{\lambda }}_{N}^{(d+1)}(x,k)\) of \({\tilde{\Gamma }}_{N}(x,k)\) is a positive number which decreases with N and satisfies

Thus, by Theorem 3, if the orbit determination problem includes the determination of the parameter k, the confidence ellipsoid defined in (9) has one of the axes which shrinks slower than any exponential. Since the uncertainties are the projection of the confidence ellipsoid on the direction of the parameters to be determined, in general the slow decay of this axis affects all the uncertainties, giving a lower bound to their speed of decay. In Sect. 5, we consider an example for which we can prove a more precise asymptotic behaviour for \({\tilde{\lambda }}_{N}^{(d+1)}(x,k)\).

Remark 2

By the proof of Theorem 3, we cannot exclude that \({\tilde{\lambda }}_{N}^{(d+1)}\) converges to a positive constant. However, this would imply the failure of the orbit determination process, since the confidence ellipsoid wouldn’t shrink to a point.

Remark 3

The methods used in Theorems 2 and 3 can be applied also to non-hyperbolic diffeomorphisms, showing a less than exponential decay of the uncertainties also in case (A). This problem was studied in Marò (2020) for nominal solutions living on invariant curves of exact symplectic twist maps of the cylinder, for which a sharp estimate for the rate of decay of the uncertainties was proved.

2.4 Comparison with the numerical results in Serra et al. (2018), Spoto and Milani (2016)

Our results in Theorems 2 and 3 are consistent with the numerical estimates in Serra et al. (2018), Spoto and Milani (2016). The authors considered a classical model in Celestial Mechanics: the well-known Chirikov Standard Map defined as \(f_k:\mathbb {T}^2\rightarrow \mathbb {T}^2\), \(f_k(x,y)=(\bar{x},\bar{y})\)

The data of an orbit determination process were produced adding a random Gaussian noise to the orbit with initial condition \((x_0,y_0)=(3,0)\) and \(k=0.5\). This initial condition is close to the hyperbolic fixed point and is likely giving rise to a chaotic orbit. The differential corrections algorithm is then performed both in cases (A) and (B).

Working in quadruple precision, numerical instability of the differential corrections occurs for the number of observations \(N\sim 300\). For the same number of iterations, it is computed the largest eigenvalue of the state transition matrix. A linear fit gives a Lyapunov indicator of \(+0.086\). It represents the largest Lyapunov exponent of the solution to which the differential corrections converge, i.e. the largest Lyapunov exponent of the nominal value.

To get a comparison with our results in case (A), we apply Theorem 2 to the Standard Map with initial condition given by the nominal value obtaining

hence assuming that the largest Lyapunov exponent coincides with the Lyapunov indicator. Hence, we expect the eigenvalues of the covariance matrix to shrink as

In the numerical simulations for case (A) performed in Serra et al. (2018), Spoto and Milani (2016), it was computed the standard deviation of the components x and y at every iteration, corresponding to the values \(\sigma _x\) and \(\sigma _y\) in Fig. 1. By a linear fit in logarithmic scale, the authors got the slopes \(-0.084\) for x and \(-0.083\) for y, deducing numerically the following decay of the uncertainties as function of N

Note that since the uncertainties \(\sigma _x,\sigma _y\) are proportional to the square root of the eigenvalues \(\lambda ^{(i)}_{N,0.5}\) of the covariance matrix, the estimate (12) coming from Theorem 2 is in perfect accordance with the numerical results (13) obtained in Serra et al. (2018), Spoto and Milani (2016). Thus, Theorem 2 represents a proof of the following conjecture posed in Spoto and Milani (2016) for case (A): “exponentially improving determination of the initial conditions only is possible, and the exponent appears to be very close to the opposite of the Lyapunov exponent.”

Concerning case (B), the numerical simulations give a decrease in the uncertainties of the form \(N^\alpha \), with different values of \(\alpha <-0.5\) for the parameters x, y, k. No quantitative conjecture is posed on the rate of decrease apart from being strictly less that exponential. This is consistent with our results in Theorem 3, and with the lower bound that we obtain for the decay of the uncertainties for the systems studied in Sect. 5.

3 Proof of Theorem 2

Let us fix \(k\in K\) and let \(x\in X\) be a point for which Oseledets Theorem holds. Consider the normal matrix \(C_{N,k}(x)\) as in (8) and, recalling that it is positive definite, denote by \(0<\delta _{N,k}^{(1)}(x)\le \dots \le \delta _{N,k}^{(d)}(x)\) its eigenvalues. Using Oseledets Theorem, for every \(i=1,\dots ,r\) let \(E_i\) be the vector spaces of the decomposition of \(T_xX \cong \mathbb {R}^d\), and write for a unit vector \(v\in E_i\)

By Oseledets Theorem and (3), for every \(\varepsilon >0\) there exist \(n_+=n_+(x)>0\) and \(n_-=n_-(x)<0\) such that

hence, from (14) we can choose \(\varepsilon \in (0,\gamma _*)\) and find \(\bar{N}(x):=\max \{n_+(x),-n_-(x)\}\) such that for all \(N>\bar{N}(x)\)

where

and in the left-hand side we have neglected the terms with \(e^{2(-|\gamma _i|-\varepsilon )|n|}\) for which the series converge. Hence, recalling the variational characterisation of the eigenvalues of a symmetric matrix, given \(\varepsilon \in (0,\gamma _*)\) there exists \(\bar{N}(x)\) such that for \(N>\bar{N}(x)\)

Analogously for \(N>\bar{N}(x)\), using \({{\tilde{c}}}_+(x):= c_+(x) + \max _i \sum _{n=0}^{+\infty }e^{2(-|\gamma _i|+\varepsilon )n}\),

Finally, using that by definition

we have that for every \(\varepsilon \in (0,\gamma _*)\)

Since \(\varepsilon \) is arbitrary, the theorem follows. \(\square \)

4 Proof of Theorem 3

Let us introduce the auxiliary diffeomorphism

Recalling (4), we can write the \((d+1)\times (d+1)\) Jacobian matrix G(x, k) of g with respect to (x, k) as follows

and for \(n\in \mathbb {Z}\) we denote by \(G^n(x,k)\) the Jacobian matrix of \(g^n\) with respect to (x, k). As in (1), by the chain rule it can be written as

Finally, we consider the auxiliary normal matrix

which is related to the normal matrix \({\tilde{C}}_N(x,k)\) as shown in the following lemma.

Lemma 1

For all \(n\in \mathbb {Z}\), it holds

so that for all \(N\ge 1\)

Proof

Formula (17) comes from the definitions noting that for \(n>0\)

and a similar formula holds for \(n<0\). Formula (18) is a straightforward consequence of (17). \(\square \)

We now study the Lyapunov exponents of g. First, we consider the measure \(\mu \times \delta _k\) on \(X\times K\) which is clearly g-invariant. Moreover, if \(f_k\) is ergodic, the same holds for g. Thus, under assumption (2) for \(f_k\) we can apply Oseledets Theorem to g, and obtain that for \(\mu \)-almost every \(x\in X\) the map g admits Lyapunov exponents \({\tilde{\gamma }}_1,\dots ,{\tilde{\gamma }}_{{\tilde{r}}}\) and an associated decomposition

In the following lemma, we describe the relation between the Lyapunov exponents of g and those of \(f_k\).

Lemma 2

Given g as above, we have \({\tilde{r}}=r+1\), and

Proof

First of all, if \(\gamma _i\) is a Lyapunov exponent of \(f_k\), then it is also a Lyapunov exponent of g with multiplicity not smaller, in the sense that \({\tilde{\gamma }}_i= \gamma _i\) and \(\dim E_i \le \dim {\tilde{E}}_i\) for all \(i=1,\dots ,r\). Actually, for every \(v\in \ E_i\) and for \(\mu \)-almost every \(x\in X\), using (17) we have

Then, we prove that the last exponent of g is zero. To this end, we recall that by Oseledets Theorem the eigenvalues of the matrix

are \(e^{\gamma _1}, e^{\gamma _2},\dots , e^{\gamma _{r}}\) with multiplicity \(\dim E_i\) and the eigenvalues of the matrix

are \(e^{{\tilde{\gamma }}_1}, e^{{\tilde{\gamma }}_2},\dots , e^{{\tilde{\gamma }}_{{\tilde{r}}}}\) with multiplicity \(\dim {\tilde{E}}_i\). Now, by (17), for every \(n\in \mathbb {Z}\)

so that \(\det {\tilde{\Lambda }}(x,k) = \det \Lambda _k(x)\) that is

But since the Lyapunov exponents of \(f_k\) are all different from zero and they are also Lyapunov exponents of g with multiplicity not smaller, we must have \({\tilde{r}}=r+1\), \({\tilde{\gamma }}_{{\tilde{r}}}=0\) and \(\dim {\tilde{E}}_{{{\tilde{r}}}}=1\). \(\square \)

The conclusion of the proof of Theorem 3 is a consequence of the following lemma. To state it, let us consider the normal matrix

and denote its eigenvalues by

Lemma 3

For \(\mu \)-almost every \(x\in X\), the smallest eigenvalue \({\tilde{\delta }}_{N}^{(1)}(x,k)\) of \({\tilde{C}}_{N}(x,k)\) is a positive number which increases with N and satisfies

Proof

First, \(\{{\tilde{\delta }}_{N}^{(1)}(x,k)\}_N\) is an increasing sequence of positive terms since

and \(v^T{\tilde{C}}_{N}(x,k)v\) is the sum of \(2N+1\) positive terms.

Let us now denote by \(v_0\in \mathbb {R}^{d+1}\) the unit vector corresponding to the vanishing Lyapunov exponent of g, so that

Hence, for every \(\varepsilon >0\) there exists \(\bar{n}\) such that \(\left| G^n(x,k)v_0 \right| ^2<e^{2\varepsilon |n|}\) for \(|n|>\bar{n}\), so that, for \(N>\bar{n}\)

for some constant c(x, k) independent on N.

We now use Lemma 1 to find an estimate for the eigenvalues of \({\tilde{C}}_{N}(x,k)\). From the variational characterisation of the eigenvalues and (18),

Hence, for every \(\varepsilon >0\) there exists a constant \(c_1(x,k)\) not depending on N such that

Since \(\varepsilon \) is arbitrary, the result is proved. \(\square \)

To finish the proof of Theorem 3 is now enough to recall that \({\tilde{\lambda }}_{N}^{(d+1)}(x,k) = [{\tilde{\delta }}_{N}^{(1)}(x,k)]^{-1}\) and apply Lemma 3. \(\square \)

5 An example

In this section, we present a class of maps for which the estimates on the eigenvalues of \(\Gamma _{N,k}\) and \({\tilde{\Gamma }}_N\) in Theorems 2 and 3 can be made explicit. Inspired by some computations presented in Milani and Baù, we consider the case of an affine hyperbolic diffeomorphism \(\mathcal {C}_k: \mathbb {T}^d\rightarrow \mathbb {T}^d\) of the d-dimensional torus \(\mathbb {T}^d = \mathbb {R}^d/\mathbb {Z}^d\). One example of this class for \(d=2\) is the well-known Arnold’s Cat Map.

Fixing a matrix \(A\in SL(d,\mathbb {Z})\) and a vector \(b\in \mathbb {R}^d\), we define

Since \(\det A =1\), the Lebesgue measure m is \(\mathcal {C}_k\)-invariant. Finally, we assume that A has no eigenvalues of modulus 1, since as shown below this implies that \(\mathcal {C}_k\) is hyperbolic. We denote by \(\delta _1,\dots ,\delta _d\) the eigenvalues of A.

The orbits of the map \(\mathcal {C}_k\) can be computed explicitly; more precisely, we have

Lemma 4

For every \(n\in \mathbb {Z}\), setting \(w=(\mathbb {1}-A)^{-1}b\),

for all \(x\in \mathbb {T}^d\).

Proof

The case \(n=0\) is trivial. For \(n\ne 0\), it follows directly noting that for \(n>0\)

\(\square \)

It is an easy consequence of this lemma that the matrices \(F^n_k(x)\) and \({\tilde{F}}^n_k(x)\) introduced in (4) and (5) are constant and independent on x and k. For the same reason, the Lyapunov exponents of \(\mathcal {C}_k\) are constant everywhere.

We now give Theorem 2 for maps \(\mathcal {C}_k\) under the assumption that A is symmetric. In this case, the result is much sharper, giving the exact exponential rate of decrease for all the eigenvalues of the covariance matrix \(\Gamma _{N,k}(x)\).

Proposition 1

Let \(\mathcal {C}_k: \mathbb {T}^d\rightarrow \mathbb {T}^d\) be defined as above with A symmetric, and let \(\gamma _1,\dots ,\gamma _d\) its Lyapunov exponents counted with multiplicity, that is, the exponents are not necessarily different. Then, the eigenvalues \(\lambda _{N,k}^{(i)}\) of the covariance matrix \(\Gamma _{N,k}\) satisfy

Proof

Since A is symmetric, there exists an orthonormal matrix P such that

with \(\delta _i \in \mathbb {R}\) for all \(i=1,\dots ,d\), and in particular, the Lyapunov exponents of \(\mathcal {C}_k\) are given by \(\gamma _i = \log |\delta _i|\). Since A has no eigenvalues of modulus 1, the map \(\mathcal {C}_k\) is hyperbolic (see Definition 2).

Now, from (22), the normal matrix satisfies

and its eigenvalues are

where we recall that the eigenvalues \(\delta _i\) are real. We conclude using that

and \(\gamma _i = \log |\delta _i|\). \(\square \)

We can say more also for the asymptotic behaviour of the largest eigenvalue \({\tilde{\lambda }}_N^{(d+1)}\) of the covariance matrix \({\tilde{\Gamma }}_N\) of the orbit determination problem in case (B). In Theorem 3, we proved that \({\tilde{\lambda }}_N^{(d+1)}\) decreases slower than exponentially, the lack of a precise estimate being due to the uncertainty on the speed of convergence to zero in (20). In the class of maps we are studying in this section, we can be much more precise on the asymptotic behaviour of \({\tilde{\lambda }}_N^{(d+1)}\).

Proposition 2

Let \(\mathcal {C}_k: \mathbb {T}^d\rightarrow \mathbb {T}^d\) be defined as above. The largest eigenvalue \({\tilde{\lambda }}_{N}^{(d+1)}\) of the covariance matrix \({\tilde{\Gamma }}_{N}\) for case (B) of the orbit determination problem satisfies

for all \(N\ge 1\).

Proof

Using the same notation of Sect. 4, we consider the auxiliary map \(g:\mathbb {T}^d\times K \rightarrow \mathbb {T}^d\times K\), \(g(x,k)=(\mathcal {C}_k(x),k)\), and recalling (17) and Lemma 4, its Jacobian matrix \(G^n\) takes for all \(n\in \mathbb {Z}\) the form

for all (x, k), where we recall that \(w =(\mathbb {1}-A)^{-1}b\) . We note that the eigenvalues of \(G^n\) are equal to \(\delta _1^{n},\dots ,\delta _d^n,1\), where \(\delta _1,\dots ,\delta _d\) are the eigenvalues of A. Actually, choosing \(v_i\) such that \(Av_i = \delta _iv_i\), then \(G^n(v_i,0)^T = (A^nv_i,0)^T=\delta _i^n(v_i,0)^T\), and, choosing the vector \(v_0=(w,1)\in \mathbb {R}^{d+1}\), we have

We thus get that for the normal matrix \(C^g_{N}(x,k)\) it holds

and for the normal matrix \({\tilde{C}}_{N}(x,k)\) of \(\mathcal {C}_k\) relative to case (B) of the orbit determination problem, we use (18) to write

Hence, from the variational characterisation of the eigenvalues of \({\tilde{C}}_{N}(x,k)\), we obtain that its smallest eigenvalue \({\tilde{\delta }}_N^{(1)}\) satisfies

and the result follows recalling that

\(\square \)

6 Conclusions and future work

We have considered the problem of orbit determination under the assumption that the number of observations grows simultaneously with the time span over which they are performed. Following the numerical results in Serra et al. (2018), Spoto and Milani (2016) we have studied the asymptotic rate of decay of the uncertainties as the number of observations grows.

We have considered the problem for hyperbolic maps, for which all the Lyapunov exponents are not zero, depending on a parameter, and we have treated separately the cases in which the parameter is included or not in the orbit determination procedure. We have analytically proved that if the parameter is not included then the uncertainties decrease exponentially, while if the parameter is included, then the uncertainties decrease strictly slower than exponentially. This is consistent with the numerical results and gives a proof of one of the main questions posed in Serra et al. (2018), Spoto and Milani (2016).

Together with the results in Marò (2020), which considered the ordered case (KAM scenario), this paper is a step forward the complete understanding of the numerical results.

References

Barreira, L., Pesin, Y.: Introduction to smooth ergodic theory. Graduate Studies in Mathematics, 148. American Mathematical Society, Providence (2013)

Chirikov, B.: A universal instability of many-dimensional oscillator systems. Phys. Rep. 52, 263 (1979)

Celletti, A., Di Ruzza, S., Lothka, C., Stefanelli, L.: Nearly-integrable dissipative systems and celestial mechanics. Eur. Phys. J. Spec. Top. 186, 33–66 (2010)

Gauss, C.F.: Theoria motus corporum coelestium in sectionibus conicis solem ambientium (Theory of the motion of the heavenly bodies moving about the sun in conic sections). Dover publications (1809/1963)

Gronchi, G.F., Baù, G., Marò, S.: Orbit determination with the two-body integrals: III. Cel. Mech. Dyn. Ast. 123, 105–122 (2015)

Lari, G., Milani, A.: Chaotic orbit determination in the context of the JUICE mission. Planet. Space Sci. 176, 104679 (2019)

Ma, H., Baù, G., Bracali Cioci, D., Gronchi, G.F.: Preliminary orbits with line-of-sight correction for LEO satellites observed with radar. Cel. Mech. Dyn. Ast. 130, 70 (2018)

Marò, S.: Orbit determination for standard-like maps: asymptotic expansion of the confidence region in regular zones. Cel. Mech. Dyn. Ast. 132, 40 (2020)

Milani, A., Baù, G.: Orbit determination for the Fibonacci dynamical system and generalizations: asymptotic behavior. https://drive.google.com/file/d/1q3RfvFOAUWCC163cQGnEO-X-eknuKsLV/view

Milani, A., Gronchi, G.F.: The Theory of Orbit Determination. Cambridge University Press, Cambridge (2010)

Milani, A., Valsecchi, G.B.: The asteroid identification problem II: target plane confidence boundaries. Icarus 140, 408–423 (1999)

Oseledets, V.I.: A multiplicative ergodic theorem. Lyapunov characteristic numbers for dynamical systems. Trudy Moskov. Mat. Obsc. 19, 176–210 (1968)

Oseledets, V.I.: English translation in trans. Moscow Math. Soc. 19, 197–231 (1968)

Raghunathan, M.S.: A proof of Oseledec’s multiplicative ergodic theorem. Israel J. Math. 32, 356–362 (1979)

Rosengren, A.J., Alessi, E.M., Rossi, A., Valsecchi, G.B.: Chaos in navigation satellite orbits caused by the perturbed motion of the Moon. MNRAS 449, 3522–3526 (2015)

Ruelle, D.: Ergodic theory on differentiable dynamical systems. IHES Publicationes Mathematiques 50, 275–320 (1979)

Siegel, C.L., Moser, J.K.: Lectures on Celestial Mechanics. Springer, Berlin (1971)

Serra, D., Spoto, F., Milani, A.: A multi-arc approach for chaotic orbit determination problems. Cel. Mech. Dyn. Ast. 130, 75 (2018)

Spoto, F., Milani, A.: Shadowing Lemma and chaotic orbit determination. Cel. Mech. Dyn. Ast. 124, 295–309 (2016)

Wisdom, J.: Urey Prize Lecture-Chaotic dynamics in the solar system. Icarus 72, 241–257 (1987)

Acknowledgements

This paper is dedicated to the memory of Andrea Milani who suggested the problem. We thank Gianluigi del Magno for discussions on the proof of Lemma 2. We would like to thank also the unknown referees for several valuable advice that improved the final version of the paper.

Funding

Open access funding provided by Università di Pisa within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was partially supported by the National Group of Mathematical Physics (GNFM-INdAM) through the project “Orbit Determination: from order to chaos” (Progetto Giovani 2019). This research is also part the authors’ activity within the UMI-DinAmicI community (www.dinamici.org) and the GNFM-INdAM, Italy.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Marò, S., Bonanno, C. Asymptotic behaviour of orbit determination for hyperbolic maps. Celest Mech Dyn Astr 133, 29 (2021). https://doi.org/10.1007/s10569-021-10026-8

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10569-021-10026-8