Abstract

We study a Lax pair in a 2-parameter Lie algebra in various representations. The overlap coefficients between the eigenfunctions of L and the standard basis are given in terms of orthogonal polynomials and orthogonal functions. Eigenfunctions of the operator L for a Lax pair for \(\mathfrak {sl}(d+1,\mathbb {C})\) are studied in certain representations.


1 Introduction

The link of the Toda lattice to three-term recurrence relations via the Lax pair after the Flaschka coordinate transform is well understood, see e.g. [2, 27]. We consider a Lax pair in a specific Lie algebra such that, in irreducible \(*\)-representations, the Lax operator is a Jacobi operator. A Lax pair is a pair of time-dependent matrices or operators L(t) and M(t) satisfying the Lax equation

\(\dot{L}(t) = [M(t), L(t)],\)

where \([\, , \, ]\) is the commutator and the dot represents differentiation with respect to time. The Lax operator L is isospectral, i.e. the spectrum of L is independent of time. A famous example is the Lax pair for the Toda chain in which L is a self-adjoint Jacobi operator,

where \(\{e_n\}\) is an orthonormal basis for the Hilbert space, and M is the skew-adjoint operator given by

In this case the Lax equation describes the equations of motion (after a change of variables) of a chain of interacting particles with nearest-neighbour interactions. Since L is isospectral, its eigenvalues are integrals of motion.
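Isospectrality can be illustrated numerically for the finite Toda chain. The sketch below assumes the standard conventions L e_n = a_n e_{n+1} + b_n e_n + a_{n-1} e_{n-1} and M e_n = a_n e_{n+1} - a_{n-1} e_{n-1} on a finite-dimensional space (the coefficient names a_n, b_n are ours), for which \(\dot{L} = [M,L]\) reduces to \(\dot{a}_n = a_n(b_n - b_{n+1})\) and \(\dot{b}_n = 2(a_{n-1}^2 - a_n^2)\). It integrates these equations with a fixed-step RK4 method (our choice) and checks that \(\mathrm{Tr}(L^k)\), k = 1, ..., 4, stays constant along the flow.

```python
"""Finite Toda chain as a Lax pair: Tr(L^k) is conserved along the flow.

Assumed conventions (illustrative only):
    L e_n = a_n e_{n+1} + b_n e_n + a_{n-1} e_{n-1},
    M e_n = a_n e_{n+1} - a_{n-1} e_{n-1},
so that Ldot = [M, L] reads a_n' = a_n (b_n - b_{n+1}), b_n' = 2 (a_{n-1}^2 - a_n^2).
"""


def lax_matrix(a, b):
    """Dense symmetric tridiagonal matrix with diagonal b and off-diagonal a."""
    n = len(b)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        L[i][i] = b[i]
    for i, ai in enumerate(a):
        L[i + 1][i] = L[i][i + 1] = ai
    return L


def matmul(X, Y):
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def trace_powers(a, b, kmax=4):
    """Return [Tr L, Tr L^2, ..., Tr L^kmax]."""
    L = lax_matrix(a, b)
    P, traces = L, []
    for _ in range(kmax):
        traces.append(sum(P[i][i] for i in range(len(b))))
        P = matmul(P, L)
    return traces


def rhs(a, b):
    """Right-hand side of the Toda equations, with a_{-1} = a_{N-1} = 0."""
    ext = [0.0] + list(a) + [0.0]          # ext[i] = a_{i-1}
    da = [a[i] * (b[i] - b[i + 1]) for i in range(len(a))]
    db = [2.0 * (ext[i] ** 2 - ext[i + 1] ** 2) for i in range(len(b))]
    return da, db


def rk4_step(a, b, h):
    """One classical Runge-Kutta step for the pair (a, b)."""
    def shift(x, dx, c):
        return [xi + c * di for xi, di in zip(x, dx)]

    k1 = rhs(a, b)
    k2 = rhs(shift(a, k1[0], h / 2), shift(b, k1[1], h / 2))
    k3 = rhs(shift(a, k2[0], h / 2), shift(b, k2[1], h / 2))
    k4 = rhs(shift(a, k3[0], h), shift(b, k3[1], h))

    def combine(x, idx):
        return [x[i] + h / 6 * (k1[idx][i] + 2 * k2[idx][i] + 2 * k3[idx][i] + k4[idx][i])
                for i in range(len(x))]

    return combine(a, 0), combine(b, 1)


a0, b0 = [1.0, 0.5, 0.8], [0.3, -0.2, 0.1, 0.0]   # sample initial data
a, b = a0, b0
for _ in range(2000):                               # integrate up to t = 2
    a, b = rk4_step(a, b, 1e-3)

before, after = trace_powers(a0, b0), trace_powers(a, b)
print("Tr L^k at t=0:", before)
print("Tr L^k at t=2:", after)
```

The coefficients a_n, b_n change noticeably over the time interval, while the traces agree up to the integration error.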

In this paper we define a Lax pair in a 2-parameter Lie algebra. In the special case of \(\mathfrak {sl}(2,\mathbb {C})\) we recover the Lax pair for the \(\mathfrak {sl}(2,\mathbb {C})\) Kostant Toda lattice, see [2, Sect. 4.6] and references given there. We give a slight generalization by allowing for a more general M(t). We discuss the solutions of the resulting differential equations in various representations of the Lie algebra. In particular, one obtains the classical relation to the Hermite, Krawtchouk, Charlier, Meixner, Laguerre and Meixner–Pollaczek polynomials from the Askey scheme of hypergeometric functions [16], for which the Toda modification, see [13, Sect. 2.8], remains in the same class of orthogonal polynomials. This corresponds to the results established by Zhedanov [29], who investigated the situation where L, M and \(\dot{L}\) act as three-term recurrence operators and close into a Lie algebra of dimension 3 or 4. In the current paper we explain Zhedanov's result starting from the other end. In Zhedanov's approach the condition of forming a low-dimensional Lie algebra forces the functions to factorize into a function of time t times a function of the place n; this factorization is immediate when the Lax pair is obtained by representing a Lie algebra element. The solutions of the Toda lattice arising in this way, i.e. those that factorize as functions of n and t, have also been obtained by Kametaka [15], stressing the hypergeometric nature of the solutions. The link to Lie algebras and Lie groups in Kametaka [15] is implicit, see especially [15, Part I]. The results and methods of the short paper by Kametaka [15] were later explained and extended by Okamoto [23]. In particular, Okamoto [23] gives the relation to the \(\tau \)-function formulation and the Bäcklund transformations.

Moreover, we extend to non-polynomial settings by considering representations of the corresponding Lie algebras in \(\ell ^2(\mathbb {Z})\), namely the principal unitary series of \(\mathfrak {su}(1,1)\) and the representations of \(\mathfrak {e}(2)\), the Lie algebra of the group of motions of the plane. In this way we find solutions to the Toda lattice equations labelled by \(\mathbb {Z}\). There is a (non-canonical) way to associate with recurrences on \(\ell ^2(\mathbb {Z})\) three-term recurrences for \(2\times 2\)-matrix valued polynomials, see e.g. [3, 18]. However, this does not lead to explicit \(2\times 2\)-matrix valued solutions of the non-abelian Toda lattice as introduced and studied in [4, 7] in relation to matrix valued orthogonal polynomials, see also [14] for an explicit example and the relation to the modification of the matrix weight. The general Lax pair for the Toda lattice in finite dimensions, as studied by Moser [22], can also be considered, and slightly extended, in the same way as an element of the Lie algebra \(\mathfrak {sl}(d+1,\mathbb {C})\). This involves t-dependent finite discrete orthogonal polynomials, which describe the action of L(t) in highest weight representations. We restrict to the symmetric powers of the fundamental representations; in this case the eigenfunctions can be described in terms of multivariable Krawtchouk polynomials following Iliev [12], who established them as overlap coefficients between natural bases for two different Cartan subalgebras. Similar group theoretic interpretations of these multivariable Krawtchouk polynomials have been established by Crampé et al. [5] and Genest et al. [8]. We briefly discuss the t-dependence of the corresponding eigenvectors of L(t).

In brief, in Sect. 2 we recall the 2-parameter Lie algebra as in [20] and define the Lax pair. In Sect. 3 we discuss \(\mathfrak {su}(2)\) and its finite-dimensional representations, and in Sect. 4 we discuss the case of \(\mathfrak {su}(1,1)\), covering both the discrete series representations and the principal unitary series representations. The latter lead to new solutions, in terms of orthogonal functions, of the Toda equations and of their generalization. These orthogonal functions are the overlap coefficients between the standard basis in the representations and the t-dependent eigenfunctions of the operator L. In Sect. 5 we look at the oscillator algebra as a specialization, and in Sect. 6 we consider the Lie algebra of the group of plane motions, leading to solutions in terms of Bessel functions. In Sect. 7 we indicate how the measures for the orthogonal functions involved have to be modified in order to give solutions of the coupled differential equations. For the Toda case related to orthogonal polynomials, this coincides with the Toda modification [13, Sect. 2.8]. Finally, in Sect. 8 we consider such a Lax pair for the higher rank Lie algebra \(\mathfrak {sl}(d+1,\mathbb {C})\) in specific finite-dimensional representations for which all weight spaces are 1-dimensional.

A question following up on Sect. 7 is whether the modification of the weight is of general interest, cf. [13, Sect. 2.8]. A natural question following up on Sect. 8 is what happens in other finite-dimensional representations, and in infinite-dimensional representations corresponding to non-compact real forms of \(\mathfrak {sl}(d+1,\mathbb {C})\), as is done in Sect. 4 for the case \(d=1\). We could also ask whether Racah polynomials, as the most general finite discrete orthogonal polynomials in the Askey scheme, can be associated with the construction of Sect. 8. Moreover, the relation to the interpretation in [19] suggests that it might be possible to extend the results to a quantum algebra setting, but this is still open.

This paper is dedicated to Richard A. Askey (1933–2019) who has done an incredible amount of fascinating work in the area of special functions, and who always had an open mind, in particular concerning relations with other areas. We hope this spirit is reflected in this paper. Moreover, through his efforts for mathematics education, Askey’s legacy will be long-lived.

2 The Lie algebra \(\varvec{\mathfrak g}(a,b)\)

Let \(a,b \in \mathbb {C}\). The Lie algebra \(\mathfrak g(a,b)\) is the 4-dimensional complex Lie algebra with basis H, E, F, N satisfying

For \(a,b \in \mathbb {R}\) there are two inequivalent \(*\)-structures on \(\mathfrak g(a,b)\) defined by

where \(\epsilon \in \{+,-\}\).

We define the following Lax pair in \(\mathfrak g(a,b)\).

Definition 2.1

Let \(r,s \in C^1 [0,\infty )\) and \(u \in C[0,\infty )\) be real-valued functions and let \(c \in \mathbb {R}\). The Lax pair \(L,M \in \mathfrak g(a,b)\) is given by

Note that \(L^*=L\) and \(M^*=-M\). Being a Lax pair means that \(\dot{L} = [M,L]\), which leads to the following differential equations.

Proposition 2.2

The functions r, s and u satisfy

Proof

From the commutation relations (2.1) it follows that

Since \([M,L]=\dot{L} = \dot{s}(t)(aH+bN) + \dot{r}(t) (E+E^*)\), the result follows. \(\square \)

Corollary 2.3

The function \(I(r,s)=\epsilon r^2+ (as+2c)s\) is an invariant.

Proof

Differentiating gives

which equals zero by Proposition 2.2. \(\square \)
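The special cases of Proposition 2.2 used in later sections (\(\dot{s} = 2ur\) for \(\mathfrak {su}(2)\); \(\dot{s} = -2ur\) and \(\dot{r} = -2us\) for \(\mathfrak {su}(1,1)\); \(\dot{r} = -2cu\) for \(\mathfrak b(1)\)) are all consistent with the system \(\dot{s} = 2\epsilon ur\), \(\dot{r} = -2u(as+c)\), for which \(\dot{I} = 2\epsilon r\dot{r} + 2(as+c)\dot{s} = 0\) identically. The sketch below assumes this system, integrates it with a fixed-step RK4 method for an illustrative choice of u (the function `u` is hypothetical), and checks that I is conserved. Conservation does not depend on the sign conditions imposed in the lemmas of later sections.

```python
"""Numerical check that I(r, s) = eps*r^2 + (a*s + 2c)*s is conserved.

Assumed system (consistent with the special cases stated in Sects. 3-7):
    s' = 2*eps*u*r,    r' = -2*u*(a*s + c).
"""
import math


def invariant(r, s, a, c, eps):
    return eps * r * r + (a * s + 2 * c) * s


def rhs(t, r, s, a, c, eps, u):
    ut = u(t, r)
    return -2.0 * ut * (a * s + c), 2.0 * eps * ut * r   # (r', s')


def integrate(r, s, a, c, eps, u, t_end=1.0, steps=2000):
    """Classical RK4 for (r, s) on [0, t_end]."""
    h, t = t_end / steps, 0.0
    for _ in range(steps):
        k1 = rhs(t, r, s, a, c, eps, u)
        k2 = rhs(t + h / 2, r + h / 2 * k1[0], s + h / 2 * k1[1], a, c, eps, u)
        k3 = rhs(t + h / 2, r + h / 2 * k2[0], s + h / 2 * k2[1], a, c, eps, u)
        k4 = rhs(t + h, r + h * k3[0], s + h * k3[1], a, c, eps, u)
        r += h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        s += h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        t += h
    return r, s


def u(t, r):
    """Hypothetical choice of u with sgn(u) = sgn(r); the Toda case is u = r."""
    return r * (1.0 + 0.5 * math.sin(t))


# (name, a, c, eps) for the cases treated in Sects. 3, 4 and 5
cases = [("su(2)", 1.0, 0.0, 1), ("su(1,1)", 1.0, 0.0, -1), ("b(1)", 0.0, 0.7, 1)]
drift = {}
for name, a, c, eps in cases:
    r0, s0 = 0.5, 1.0
    r1, s1 = integrate(r0, s0, a, c, eps, u)
    drift[name] = abs(invariant(r1, s1, a, c, eps) - invariant(r0, s0, a, c, eps))
print(drift)
```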

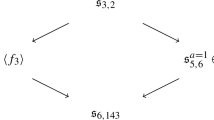

In the following sections we consider the Lax operator L in an irreducible \(*\)-representation of \(\mathfrak g(a,b)\), and we determine explicit eigenfunctions and the spectrum of L. We restrict to the following special cases of the Lie algebra \(\mathfrak g(a,b)\):

-

\(\mathfrak g(1,0) \cong \mathfrak {sl}(2,\mathbb {C})\oplus \mathbb {C},\)

-

\(\mathfrak g(0,1) \cong \mathfrak b(1)\) is the generalized oscillator algebra,

-

\(\mathfrak g(0,0) \cong \mathfrak e(2) \oplus \mathbb {C}\), with \(\mathfrak e(2)\) the Lie algebra of the group of plane motions.

These are the only essential cases, since \(\mathfrak g(a,b)\) is isomorphic as a Lie algebra to one of them, see [20, Sect. 2-5].

3 The Lie algebra \(\varvec{\mathfrak {su}}(2)\)

In this section we consider the Lie algebra \(\mathfrak g(a,b)\) from Sect. 2 with \((a,b)=(1,0)\) and \(\epsilon = +\), i.e. the Lie algebra \(\mathfrak {su}(2) \oplus \mathbb {C}\). The basis element N plays no role in this case, therefore we omit it. So we consider the Lie algebra with basis H, E, F satisfying commutation relations

and the \(*\)-structure is defined by \(H^*=H, E^*=F\).

The Lax pair (2.2) is given by

where (without loss of generality) we set \(c=0\). The differential equations for r and s from Proposition 2.2 read in this case

and the invariant in Corollary 2.3 is given by \(I(r,s)=r^2+s^2\).

Lemma 3.1

Assume \({{\,\mathrm{sgn}\,}}(u(t))={{\,\mathrm{sgn}\,}}(r(t))\) for all \(t>0\), \(s(0)>0\) and \(r(0)>0\). Then \(s(t)>0\) and \(r(t)>0\) for all \(t>0\).

Proof

Since \({{\,\mathrm{sgn}\,}}(u)={{\,\mathrm{sgn}\,}}(r)\), we have \(ur \ge 0\), so it follows from \(\dot{s} = 2 ur\) that s is increasing. Since (r(t), s(t)) in phase space lies on the curve \(I(r,s)=I(r(0),s(0))\), which is a circle around the origin, it follows that r(t) and s(t) remain positive. \(\square \)

Throughout this section we assume that the conditions of Lemma 3.1 are satisfied, so that r(t) and s(t) are positive. Note that if we change the condition on r(0) to \(r(0)<0\), then \(r(t)<0\) for all \(t>0\).
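For the Toda choice u = r (cf. Remark 7.2) the equations can be solved explicitly, which makes Lemma 3.1 concrete. The derivation below assumes \(\dot{s} = 2ur\), as in the proof of Lemma 3.1, together with \(\dot{r} = -2us\), which is the equation forced by the invariant \(r^2+s^2 = C^2\):

```latex
\begin{aligned}
\dot{s} &= 2r^2 = 2(C^2 - s^2)
  &&\Longrightarrow\quad s(t) = C\tanh(2Ct + \tau_0), \quad \tanh(\tau_0) = s(0)/C,\\
r(t) &= \sqrt{C^2 - s(t)^2} = C\,\mathrm{sech}(2Ct + \tau_0)
  &&\Longrightarrow\quad \dot{r} = -2C^2\,\mathrm{sech}(2Ct+\tau_0)\tanh(2Ct+\tau_0) = -2rs,\\
p(t) &= \tfrac12 + \tfrac{s(t)}{2C} = \bigl(1 + e^{-4Ct - 2\tau_0}\bigr)^{-1}.
\end{aligned}
```

Both functions stay positive, with s(t) increasing to C and r(t) decreasing to 0 as \(t\to \infty \); the Krawtchouk parameter p(t) of Theorem 3.2 is then a logistic function of t.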

For \(j \in \frac{1}{2}\mathbb {N}\) let \(\ell ^2_j\) be the \((2j+1)\)-dimensional complex Hilbert space with standard orthonormal basis \(\{e_n \mid n=0,\ldots ,2j\}\). An irreducible \(*\)-representation \(\pi _j\) of \(\mathfrak {su}(2)\) on \(\ell ^2_j\) is given by

where we use the notation \(e_{-1}=e_{2j+1}=0\). In this representation the Lax operator \(\pi _j(L)\) is the Jacobi operator

We can diagonalize the Lax operator \(\pi _j(L)\) using orthonormal Krawtchouk polynomials [16, Sect. 9.11], which are defined by

where \(N\in \mathbb {N}\), \(0<p<1\) and \(n,x \in \{0,1,\ldots ,N\}\). The three-term recurrence relation is

with the convention \(K_{-1}(x) = K_{N+1}(x)=0\). The orthogonality relations read

Theorem 3.2

Define for \(x \in \{0,\ldots ,2j\}\)

where \(p(t) = \frac{1}{2} + \frac{s(t)}{2C}\) and \(C = \sqrt{s^2 + r^2}\). For \(t>0\) let \(U_t: \ell ^2_j \rightarrow \ell ^2(\{0,\ldots ,2j\}, W_t)\) be defined by

then \(U_t\) is unitary and \(U_t \circ \pi _j(L(t)) \circ U_t^* = M(2C(j-x))\).

Here M denotes the multiplication operator given by \([M(f)g](x) = f(x)g(x)\).

Proof

From (3.2) and the recurrence relation of the Krawtchouk polynomials we obtain

where

The last identity implies

so that

Then we find that the eigenvalue is

Since \(s^2+r^2\) is constant, the result follows. \(\square \)
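Theorem 3.2 can be checked numerically at a fixed time. The sketch assumes \(\pi _j(L)e_n = r\sqrt{(n+1)(2j-n)}\,e_{n+1} + 2s(n-j)\,e_n + r\sqrt{n(2j-n+1)}\,e_{n-1}\); this is our assumed form, and the paper's normalization of \(\pi _j\) may differ from it by signs or by the ordering of the basis. It generates the orthonormal Krawtchouk polynomials from the normalized form of the recurrence in [16, Sect. 9.11], \(xk_n = -a_nk_{n+1} + b_nk_n - a_{n-1}k_{n-1}\) with \(a_n = \sqrt{p(1-p)(n+1)(N-n)}\) and \(b_n = pN + n(1-2p)\), checks orthonormality for the binomial weight, and checks that \(v_n = k_n(x)\) with \(p = \frac12 + \frac{s}{2C}\) is an eigenvector with eigenvalue \(2C(j-x)\). It uses `math.comb` (Python 3.8 or later).

```python
"""Check of Theorem 3.2 under an assumed form of pi_j(L)."""
import math


def krawtchouk_orthonormal(x, p, N):
    """k_0(x), ..., k_N(x) from the normalized Krawtchouk recurrence
    x k_n = -a_n k_{n+1} + b_n k_n - a_{n-1} k_{n-1},
    a_n = sqrt(p(1-p)(n+1)(N-n)),  b_n = pN + n(1-2p)."""
    a = [math.sqrt(p * (1 - p) * (n + 1) * (N - n)) for n in range(N)]
    k = [1.0]
    for n in range(N):
        b_n = p * N + n * (1 - 2 * p)
        prev = a[n - 1] * k[n - 1] if n > 0 else 0.0
        k.append(((b_n - x) * k[n] - prev) / a[n])
    return k


def lax_action(v, r, s, j):
    """Assumed action: e_n -> r sqrt((n+1)(2j-n)) e_{n+1} + 2s(n-j) e_n + r sqrt(n(2j-n+1)) e_{n-1}."""
    N = int(round(2 * j))
    out = []
    for n in range(N + 1):
        val = 2 * s * (n - j) * v[n]
        if n < N:
            val += r * math.sqrt((n + 1) * (N - n)) * v[n + 1]
        if n > 0:
            val += r * math.sqrt(n * (N - n + 1)) * v[n - 1]
        out.append(val)
    return out


r, s, j = 0.6, 0.8, 2.5                  # sample values of r(t), s(t) at a fixed time
N, C = int(round(2 * j)), math.hypot(r, s)
p = 0.5 + s / (2 * C)                    # p(t) as in Theorem 3.2

K = [krawtchouk_orthonormal(x, p, N) for x in range(N + 1)]   # K[x][n] = k_n(x)
w = [math.comb(N, x) * p ** x * (1 - p) ** (N - x) for x in range(N + 1)]

# Orthonormality: sum_x w(x) k_n(x) k_m(x) = delta_{nm}
gram_err = max(abs(sum(w[x] * K[x][n] * K[x][m] for x in range(N + 1)) - (n == m))
               for n in range(N + 1) for m in range(N + 1))

# Eigenvector check: pi_j(L) (k_n(x))_n = 2C(j - x) (k_n(x))_n
eig_err = max(abs(lv - 2 * C * (j - x) * kv)
              for x in range(N + 1)
              for lv, kv in zip(lax_action(K[x], r, s, j), K[x]))
print(gram_err, eig_err)
```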

4 The Lie algebra \(\varvec{\mathfrak {su}}(1,1)\)

In this section we consider representations of \(\mathfrak g(a,b)\) with \((a,b)=(1,0)\) and \(\epsilon =-\), i.e. the Lie algebra \(\mathfrak {su}(1,1) \oplus \mathbb {C}\). We omit the basis element N again. The commutation relations are the same as in the previous section. The \(*\)-structure in this case is defined by \(H^*=H\) and \(E^*=-F\).

The Lax pair (2.2) is given by

where we set \(c=0\) again. The functions r and s satisfy

and the invariant is given by \(I(r,s)=s^2-r^2\).

Lemma 4.1

Assume \({{\,\mathrm{sgn}\,}}(u(t))=-{{\,\mathrm{sgn}\,}}(r(t))\) for all \(t>0\), \(s(0)>0\) and \(r(0)>0\). Then \(s(t)>0\) and \(r(t)>0\) for all \(t>0\).

Proof

The proof is similar to the proof of Lemma 3.1, where in this case \(I(r,s)=I(r(0),s(0))\) describes a hyperbola or a straight line. \(\square \)

Throughout this section we assume that the assumptions of Lemma 4.1 are satisfied.

We consider two families of irreducible \(*\)-representations of \(\mathfrak {su}(1,1)\). The first family is the positive discrete series representations \(\pi _k\), \(k>0\), on \(\ell ^2(\mathbb {N})\). The actions of the basis elements on the standard orthonormal basis \(\{e_n \mid n \in \mathbb {N}\}\) are given by

We use the convention \(e_{-1}=0\).

The second family of representations we consider is the principal unitary series representation \(\pi _{\lambda ,\varepsilon }\), \(\lambda \in -\frac{1}{2}+i\mathbb {R}_+\), \(\varepsilon \in [0,1)\) with \((\lambda ,\varepsilon ) \ne (-\frac{1}{2},\frac{1}{2})\), on \(\ell ^2(\mathbb {Z})\). The actions of the basis elements on the standard orthonormal basis \(\{ e_n \mid n \in \mathbb {Z}\}\) are given by

Note that both representations \(\pi _k\) and \(\pi _{\lambda ,\varepsilon }\) as given above are unbounded representations. The operators \(\pi (X)\), \(X \in \mathfrak {su}(1,1)\), are densely defined operators on their representation space, where we take as a dense domain the set of finite linear combinations of the standard orthonormal basis \(\{e_n\}\).

Remark 4.2

The Lie algebra \(\mathfrak {su}(1,1)\) has two more families of irreducible \(*\)-representations: the negative discrete series and the complementary series. The negative discrete series representation \(\pi _k^-\), \(k>0\), can be obtained from the positive discrete series representation \(\pi _k\) by setting

where \(\vartheta \) is the Lie algebra isomorphism defined by \(\vartheta (H)=-H\), \(\vartheta (E)=F\), \(\vartheta (F)=E\).

The complementary series are defined in the same way as the principal unitary series, but the labels \(\lambda ,\varepsilon \) satisfy \(\varepsilon \in [0,\frac{1}{2})\), \(\lambda \in (-\frac{1}{2},-\varepsilon )\) or \(\varepsilon \in (\frac{1}{2},1)\), \(\lambda \in (-\frac{1}{2}, \varepsilon -1)\).

The results obtained in this section about the Lax operator in the positive discrete series and principal unitary series representations can easily be extended to these two families of representations.

4.1 The Lax operator in the positive discrete series

The Lax operator L acts in the positive discrete series representation as a Jacobi operator on \(\ell ^2(\mathbb {N})\) by

The operator \(\pi _k(L)\) can be diagonalized using explicit families of orthogonal polynomials. We distinguish three cases, according to whether the invariant \(s^2-r^2\) is positive, zero or negative. These cases correspond to hyperbolic, parabolic and elliptic elements, and the eigenvalues and eigenfunctions behave differently in each class, cf. [19].

4.1.1 Case 1: \(s^2-r^2>0\)

In this case eigenfunctions of \(\pi _k(L)\) can be given in terms of Meixner polynomials. The orthonormal Meixner polynomials [16, Sect. 9.10] are defined by

where \(\beta >0\) and \(0<c<1\). They satisfy the three-term recurrence relation

Their orthogonality relations are given by

Theorem 4.3

Let

where \(c(t) \in (0,1)\) is determined by \(\frac{s}{r}=\frac{1+c}{2\sqrt{c}}\), or equivalently \(c(t) = e^{-2 {{\,\mathrm{arccosh}\,}}(\frac{s(t)}{r(t)})}\). Define for \(t>0\) the operator \(U_t:\ell ^2(\mathbb {N}) \rightarrow \ell ^2(\mathbb {N},W_t)\) by

then \(U_t\) is unitary and \(U_t\circ \pi _k(L(t)) \circ U_t^* = M(2C(x+k))\) where \(C = \sqrt{s^2-r^2}\).

Proof

The proof runs along the same lines as the proof of Theorem 3.2. The condition \(s^2-r^2>0\) implies \(s/r>1\), so there exists a \(c = c(t) \in (0,1)\) such that

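It follows from the three-term recurrence relation for Meixner polynomials that \(r^{-1}\pi _k(L)\) has eigenvalues \(\frac{(1-c)(x+k)}{\sqrt{c}}\), \(x \in \mathbb {N}\). Write \(c=e^{-2a}\) with \(a>0\); then \(\frac{ 1+c }{2\sqrt{c}}= \cosh (a)\) and \(\frac{1-c}{2\sqrt{c}} = \sinh (a)\), so that, using \(r\cosh (a) = s\),

\(\frac{r(1-c)(x+k)}{\sqrt{c}} = 2r\sinh (a)(x+k) = 2(x+k)\sqrt{r^2\cosh ^2(a)-r^2} = 2C(x+k),\)

where \(C = \sqrt{s^2 -r^2}\). \(\square \)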
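The matching of parameters in Theorem 4.3 can be checked numerically row by row. The sketch assumes the positive discrete series action \(\pi _k(L)e_n = r\sqrt{(n+1)(n+2k)}\,e_{n+1} + 2s(n+k)\,e_n + r\sqrt{n(n+2k-1)}\,e_{n-1}\) (our assumed form; the paper's conventions may differ by signs). It generates orthonormal Meixner polynomials with \(\beta = 2k\) from the normalized recurrence of [16, Sect. 9.10], \(xm_n = -a_nm_{n+1} + b_nm_n - a_{n-1}m_{n-1}\) with \(a_n = \sqrt{c(n+1)(n+\beta )}/(1-c)\) and \(b_n = (n+(n+\beta )c)/(1-c)\), and checks that \(v_n = (-1)^n m_n(x)\) satisfies \(\pi _k(L)v = 2C(x+k)v\). The alternating sign is needed because the normalized recurrence has negative off-diagonal entries.

```python
"""Check of Theorem 4.3 under an assumed form of pi_k(L) (case s^2 - r^2 > 0)."""
import math


def meixner_orthonormal(x, beta, c, nmax):
    """m_0(x), ..., m_nmax(x) from the normalized Meixner recurrence
    x m_n = -a_n m_{n+1} + b_n m_n - a_{n-1} m_{n-1},
    a_n = sqrt(c (n+1)(n+beta)) / (1-c),  b_n = (n + (n+beta) c) / (1-c)."""
    a = [math.sqrt(c * (n + 1) * (n + beta)) / (1 - c) for n in range(nmax)]
    m = [1.0]
    for n in range(nmax):
        b_n = (n + (n + beta) * c) / (1 - c)
        prev = a[n - 1] * m[n - 1] if n > 0 else 0.0
        m.append(((b_n - x) * m[n] - prev) / a[n])
    return m


def lax_rows(v, r, s, k, rows):
    """Rows 0..rows-1 of the assumed pi_k(L) applied to v (needs len(v) > rows)."""
    out = []
    for n in range(rows):
        val = 2 * s * (n + k) * v[n] + r * math.sqrt((n + 1) * (n + 2 * k)) * v[n + 1]
        if n > 0:
            val += r * math.sqrt(n * (n + 2 * k - 1)) * v[n - 1]
        out.append(val)
    return out


r, s, k, nmax = 0.6, 1.0, 0.75, 15
C = math.sqrt(s * s - r * r)                     # = 0.8
c = math.exp(-2 * math.acosh(s / r))             # = 1/9 for these values
ratio_err = abs((1 + c) / (2 * math.sqrt(c)) - s / r)

worst = 0.0
for x in range(5):                               # x labels the eigenvalue 2C(x + k)
    m = meixner_orthonormal(x, 2 * k, c, nmax)
    v = [(-1) ** n * mn for n, mn in enumerate(m)]
    Lv = lax_rows(v, r, s, k, nmax)
    lam = 2 * C * (x + k)
    scale = max(abs(t) for t in v)
    worst = max(worst, max(abs(Lv[n] - lam * v[n]) for n in range(nmax)) / scale)
print(ratio_err, worst)
```

Only finitely many rows of the infinite Jacobi matrix are tested, which suffices because each row involves only three consecutive entries of v.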

4.2 Case 2: \(s^2-r^2=0\)

In this case we need the orthonormal Laguerre polynomials [16, Sect. 9.12], which are defined by

They satisfy the three-term recurrence relation

and the orthogonality relations are

The set \(\{L_n \mid n \in \mathbb {N}\}\) is an orthonormal basis for the corresponding weighted \(L^2\)-space.

Using the three-term recurrence relation for the Laguerre polynomials we obtain the following result.

Theorem 4.4

Let

and let \(U_t:\ell ^2(\mathbb {N}) \rightarrow L^2([0,\infty ),W_t(x)dx)\) be defined by

then \(U_t\) is unitary and \(U_t \circ \pi _k(L(t)) \circ U_t^{*} = M(x)\).
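In the case s = r the recurrence matches that of the Laguerre polynomials with \(\alpha = 2k-1\). The sketch assumes the same action \(\pi _k(L)e_n = r\sqrt{(n+1)(n+2k)}\,e_{n+1} + 2s(n+k)\,e_n + r\sqrt{n(n+2k-1)}\,e_{n-1}\) as an illustration (not necessarily the paper's sign conventions), generates orthonormal Laguerre polynomials from \(y\,l_n = -\sqrt{(n+1)(n+\alpha +1)}\,l_{n+1} + (2n+\alpha +1)\,l_n - \sqrt{n(n+\alpha )}\,l_{n-1}\) [16, Sect. 9.12], and checks that \(v_n = (-1)^n l_n(x/r)\) satisfies \(\pi _k(L)v = xv\) for a few points x of the spectrum \([0,\infty )\).

```python
"""Check of Theorem 4.4 under an assumed form of pi_k(L) (case s = r)."""
import math


def laguerre_orthonormal(y, alpha, nmax):
    """l_0(y), ..., l_nmax(y) from the normalized Laguerre recurrence
    y l_n = -a_n l_{n+1} + (2n + alpha + 1) l_n - a_{n-1} l_{n-1},
    a_n = sqrt((n + 1)(n + alpha + 1))."""
    a = [math.sqrt((n + 1) * (n + alpha + 1)) for n in range(nmax)]
    l = [1.0]
    for n in range(nmax):
        prev = a[n - 1] * l[n - 1] if n > 0 else 0.0
        l.append(((2 * n + alpha + 1 - y) * l[n] - prev) / a[n])
    return l


def lax_rows(v, r, s, k, rows):
    """Rows 0..rows-1 of the assumed pi_k(L) applied to v (needs len(v) > rows)."""
    out = []
    for n in range(rows):
        val = 2 * s * (n + k) * v[n] + r * math.sqrt((n + 1) * (n + 2 * k)) * v[n + 1]
        if n > 0:
            val += r * math.sqrt(n * (n + 2 * k - 1)) * v[n - 1]
        out.append(val)
    return out


r = s = 0.7                                      # case s^2 - r^2 = 0
k, nmax = 0.75, 20
worst = 0.0
for x in (0.3, 1.7, 4.2):                        # sample points of the spectrum [0, inf)
    l = laguerre_orthonormal(x / r, 2 * k - 1, nmax)
    v = [(-1) ** n * ln for n, ln in enumerate(l)]   # sign flip makes off-diagonals positive
    Lv = lax_rows(v, r, s, k, nmax)
    scale = max(abs(t) for t in v)
    worst = max(worst, max(abs(Lv[n] - x * v[n]) for n in range(nmax)) / scale)
print("max relative residual:", worst)
```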

4.3 Case 3: \(s^2-r^2<0\)

In this case we need the orthonormal Meixner–Pollaczek polynomials [16, Sect. 9.7] given by

where \(\lambda >0\) and \(0<\phi <\pi \). The three-term recurrence relation for these polynomials is

and the orthogonality relations read

The set \(\{P_n \mid n \in \mathbb {N}\}\) is an orthonormal basis for the weighted \(L^2\)-space.

Theorem 4.5

For \(\phi (t) = \arccos (\frac{s(t)}{r(t)})\) let

and let \(U_t : \ell ^2(\mathbb {N}) \rightarrow L^2(\mathbb {R},W_t(x)dx)\) be defined by

then \(U_t\) is unitary and \(U_t \circ \pi _k(L(t)) \circ U_t^{*} = M(-2Cx)\), where \(C = \sqrt{r^2-s^2}\).

Proof

The proof is similar to the previous ones. Using the three-term recurrence relation for the Meixner–Pollaczek polynomials it follows that the generalized eigenvalue of \(r^{-1}\pi _k(L)\) is \(- 2x \sin (\phi )\), where \(\phi \in (0,\pi )\) is determined by \(-\frac{s}{r} = \cos \phi \). Then

from which the result follows. \(\square \)

4.4 The Lax operator in the principal unitary series

The action of the Lax operator L in the principal unitary series as a Jacobi operator on \(\ell ^2(\mathbb {Z})\) is given by

Again we distinguish between the cases where the invariant \(s^2-r^2\) is either positive, negative or zero.

4.4.1 Case 1: \(s^2-r^2>0\)

The Meixner functions [11] are defined by

for \(x,n \in \mathbb {Z}\) and \(c \in (0,1)\). The parameters \(\lambda \) and \(\varepsilon \) are the labels from the principal unitary series. The Meixner functions satisfy the three-term recurrence relation

and the orthogonality relations read

The set \(\{ m_n \mid n \in \mathbb {Z}\}\) is an orthonormal basis for the weighted \(L^2\)-space.

Theorem 4.6

For \(t>0\) let

where \(c(t) \in (0,1)\) is determined by \(\frac{s(t)}{r(t)}=\frac{1+c(t)}{2\sqrt{c(t)}}\), or equivalently \(c(t) = e^{-2 {{\,\mathrm{arccosh}\,}}(\frac{s(t)}{r(t)})}\). Define \(U_t:\ell ^2(\mathbb {Z}) \rightarrow \ell ^2(\mathbb {Z},W_t)\) by

then \(U_t\) is unitary and \(U_t \circ \pi _{\lambda ,\varepsilon }(L(t)) \circ U_t^* = M(2C(x+\varepsilon ))\), where \(C = \sqrt{s^2 - r^2}\).

4.4.2 Case 2: \(s^2-r^2=0\)

In this case we need Laguerre functions [10] defined by

where \(x\in \mathbb {R}\), \(n \in \mathbb {Z}\), and U(a; b; z) is Tricomi’s confluent hypergeometric function, see e.g. [25, (1.3.1)], for which we use its principal branch with branch cut along the negative real axis. The Laguerre functions \(\{\psi _n \mid n \in \mathbb {Z}\}\) form an orthonormal basis for \(L^2(\mathbb {R},w(x)dx)\) where

The three-term recurrence relation reads

Theorem 4.7

Let

and let \(U_t : \ell ^2(\mathbb {Z}) \rightarrow L^2(\mathbb {R},W_t(x)dx)\) be defined by

then \(U_t\) is unitary and \(U_t \circ \pi _{\lambda ,\varepsilon }(L(t)) \circ U_t^{*} = M(-x)\).

4.4.3 Case 3: \(s^2-r^2<0\)

The Meixner–Pollaczek functions [17, Sect. 4.4] are defined by

Define

where \(\overline{f}(x) = \overline{f(x)}\) and

Let \(L^2(\mathbb {R},W(x)dx)\) be the Hilbert space consisting of functions \(\mathbb {R}\rightarrow \mathbb {C}^2\) with inner product

where \(f^t(x)\) denotes the conjugate transpose of \(f(x) \in \mathbb {C}^2\). The set \(\{({\begin{matrix}u_n \\ \overline{u_n} \end{matrix}}) \mid n \in \mathbb {Z}\}\) is an orthonormal basis for \(L^2(\mathbb {R},W(x)dx)\). The three-term recurrence relation for the Meixner–Pollaczek functions is

The function \(\overline{u_n}\) satisfies the same recurrence relation.

Theorem 4.8

For \(\phi (t) = \arccos (\frac{s(t)}{r(t)})\) let

and let \(U_t : \ell ^2(\mathbb {Z}) \rightarrow L^2(\mathbb {R},W_t(x;\lambda ,\varepsilon )dx)\) be defined by

then \(U_t\) is unitary and \(U_t \circ \pi _{\lambda ,\varepsilon }(L(t)) \circ U_t^{*} = M(2Cx)\), where \(C = \sqrt{r^2-s^2}\).

Note that the spectrum of \(\pi _{\lambda ,\varepsilon }(L(t))\) has multiplicity 2.

Remark 4.9

Transferring a three-term recurrence on \(\mathbb {Z}\) to a three-term recurrence for \(2\times 2\) matrix orthogonal polynomials, see [3, Sect. VII.3] and [18, Sect. 3.2], does not lead to an example of the nonabelian Toda lattice [4, 7, 14].

5 The oscillator algebra \(\varvec{\mathfrak b}(1)\)

\(\mathfrak b(1)\) is the Lie \(*\)-algebra \(\mathfrak g(a,b)\) with \((a,b)=(0,1)\) and \(\epsilon =+\). Then \(\mathfrak b(1)\) has a basis E, F, H, N satisfying

The \(*\)-structure is defined by \(H^*=H\), \(N^*=N\), \(E^*=F\). The Lax pair L, M is given by

The differential equations for s and r are in this case given by

and the invariant is \(r^2+2cs\).

Lemma 5.1

Assume \({{\,\mathrm{sgn}\,}}(u(t))={{\,\mathrm{sgn}\,}}(r(t))\) for all \(t>0\), \(s(0)>0\) and \(r(0)>0\). Then \(s(t)>0\) and \(r(t)>0\) for all \(t>0\).

Proof

The proof is similar to the proof of Lemma 4.1, where in this case \(I(r,s)=I(r(0),s(0))\) describes a parabola (\(c \ne 0\)) or a straight line (\(c=0\)). \(\square \)

Throughout this section we assume the conditions of Lemma 5.1 are satisfied.
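For the Toda choice u = r the equations for \(\mathfrak b(1)\) can be integrated explicitly. The derivation below assumes \(\dot{r} = -2cu\) and \(\dot{s} = 2ru\) (the forms used in Sect. 7.3; the second is forced by the invariant \(r^2+2cs\)) and \(c \ne 0\):

```latex
\begin{aligned}
\dot{r} &= -2c\,r &&\Longrightarrow\quad r(t) = r(0)\,e^{-2ct},\\
\dot{s} &= 2r^2 = 2r(0)^2 e^{-4ct} &&\Longrightarrow\quad s(t) = s(0) + \frac{r(0)^2}{2c}\bigl(1 - e^{-4ct}\bigr),\\
r(t)^2 + 2c\,s(t) &= r(0)^2 + 2c\,s(0) &&\text{(the invariant is indeed constant).}
\end{aligned}
```

Here r(t) stays positive and s(t) is increasing, in line with Lemma 5.1; letting \(c\rightarrow 0\) gives a constant r and \(s(t) = s(0) + 2r(0)^2t\).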

There is a family of irreducible \(*\)-representations \(\pi _{k,h}\), \(h>0\), \(k\ge 0\), on \(\ell ^2(\mathbb {N})\) defined by

The action of the Lax operator on the basis of \(\ell ^2(\mathbb {N})\) is given by

For the diagonalization of \(\pi _{k,h}(L)\) we distinguish between the cases \(c \ne 0\) and \(c = 0\).

5.1 Case 1: \(c \ne 0\)

In this case we need the orthonormal Charlier polynomials [16, Sect. 9.14], which are defined by

where \(a>0\) and \(n,x \in \mathbb {N}\). The orthogonality relations are

and \(\{C_n \mid n \in \mathbb {N}\}\) is an orthonormal basis for the corresponding \(L^2\)-space. The three-term recurrence relation reads

Theorem 5.2

For \(t>0\) define

and let \(U_t:\ell ^2(\mathbb {N}) \rightarrow L^2(\mathbb {N}, W_t)\) be defined by

Then \(U_t\) is unitary and \(U_t \circ \pi _{k,h}(L(t)) \circ U_t^{*} = M(2c(x+k) + Ch)\), where \(C=\frac{1}{2c}r^2+s\).

Proof

The action of L can be written in the following form:

and recall that \(\frac{1}{2c}r^2+ s\) is constant. The result then follows from comparing with the three-term recurrence relation for the Charlier polynomials. \(\square \)

5.2 Case 2: \(c=0\)

In this case \(\dot{r}=0\), so r is a constant function. We use the orthonormal Hermite polynomials [16, Sect. 9.15], which are given by

They satisfy the orthogonality relations

and \(\{H_n \mid n \in \mathbb {N}\}\) is an orthonormal basis for \(L^2(\mathbb {R}, e^{-x^2}dx/\sqrt{\pi })\). The three-term recurrence relation is given by

Theorem 5.3

For \(t>0\) define

and let \(U_t:\ell ^2(\mathbb {N}) \rightarrow L^2(\mathbb {R};w_t(x;h)\,dx)\) be defined by

then \(U_t\) is unitary and \(U_t \circ \pi _{k,h}(L(t)) \circ U_t^{*} = M(x)\).

Proof

We have

which corresponds to the three-term recurrence relation for the Hermite polynomials. \(\square \)

6 The Lie algebra \(\varvec{\mathfrak {e}}(2)\)

We consider the Lie algebra \(\mathfrak g(a,b)\) with \(a=b=0\) and \(\epsilon =+\). As in the case of \(\mathfrak {sl}(2,\mathbb {C})\), we omit the basis element N. The remaining Lie algebra is \(\mathfrak e(2)\) with basis H, E, F satisfying

and the \(*\)-structure is determined by \(E^*=F, \quad H^*=H\).

The Lax pair is given by

with \(\dot{r} = -2cu\).

\(\mathfrak e(2)\) has a family of irreducible \(*\)-representations \(\pi _k\), \(k>0\), on \(\ell ^2(\mathbb {Z})\) given by

This defines an unbounded representation. As a dense domain we use the set of finite linear combinations of the basis elements.

Assume \(c \ne 0\). The Lax operator \(\pi _k(L(t))\) is a Jacobi operator on \(\ell ^2(\mathbb {Z})\) given by

For the diagonalization of \(\pi _k(L)\) we use the Bessel functions \(J_n\) [1, 28] given by

with \(z \in \mathbb {R}\) and \(n \in \mathbb {Z}\). They satisfy the Hansen–Lommel type orthogonality relations, which follow from [1, (4.9.15), (4.9.16)]

and the set \(\{J_{\cdot -n}(z) \mid n \in \mathbb {Z}\}\) is an orthonormal basis for \(\ell ^2(\mathbb {Z})\). A well-known recurrence relation for \(J_n\) is

which is equivalent to

Theorem 6.1

For \(t>0\) define \(U_t: \ell ^2(\mathbb {Z}) \rightarrow \ell ^2(\mathbb {Z})\) by

then \(U_t\) is unitary and \(U_t \circ \pi _k(L(t)) \circ U_t^{*} = M(2cm)\).
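Theorem 6.1 rests on two Bessel identities: the recurrence \(J_{n-1}(z) + J_{n+1}(z) = \frac{2n}{z}J_n(z)\) and the orthogonality \(\sum _n J_n(z)J_{n+q}(z) = \delta _{q,0}\). The sketch computes \(J_n\) from its power series and checks both. For the eigenvalue \(2cm\) it uses a hypothetical Jacobi operator \(Te_n = \beta e_{n+1} + 2cn\,e_n + \beta e_{n-1}\); the constant \(\beta \) is our placeholder for the off-diagonal coefficient of \(\pi _k(L(t))\), and the diagonal \(2cn\) is likewise an assumption. With \(z = -\beta /c\), the recurrence gives \((Tv)_n = 2cm\,v_n\) for \(v_n = J_{n-m}(z)\).

```python
"""Bessel-function identities behind Theorem 6.1 (illustrative sketch)."""
import math


def bessel_j(n, z, terms=60):
    """J_n(z) for integer n from the power series; J_{-n}(z) = (-1)^n J_n(z)."""
    if n < 0:
        return (-1) ** (-n) * bessel_j(-n, z, terms)
    term = (z / 2) ** n / math.factorial(n)
    total = term
    for i in range(terms):
        term *= -(z / 2) ** 2 / ((i + 1) * (i + 1 + n))
        total += term
    return total


# Orthonormality of {J_{. - m}(z)}: sum_n J_n(z) J_{n+q}(z) = delta_{q,0}
z0 = 1.5
orth_err = max(abs(sum(bessel_j(n, z0) * bessel_j(n + q, z0) for n in range(-40, 41)) - (q == 0))
               for q in range(4))

# Hypothetical T e_n = beta e_{n+1} + 2 c n e_n + beta e_{n-1};
# with z = -beta / c, v_n = J_{n-m}(z) satisfies T v = 2 c m v.
c, beta, m = 0.5, 0.9, 2
z = -beta / c
v = {n: bessel_j(n - m, z) for n in range(-31, 32)}
eig_err = max(abs(beta * (v[n + 1] + v[n - 1]) + 2 * c * n * v[n] - 2 * c * m * v[n])
              for n in range(-30, 31))
print(orth_err, eig_err)
```

The truncation to finitely many n is harmless here because \(J_n(z)\) decays faster than geometrically in |n| for fixed z.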

Finally, let us consider the completely degenerate case \(c=0\). In this case r is also a constant function, so there are no differential equations to solve. We can still diagonalize the (degenerate) Lax operator, which is now independent of time.

Theorem 6.2

Define \(U:\ell ^2(\mathbb {Z}) \rightarrow L^2[0,2\pi ]\) by

then U is unitary and \(U \circ \pi _k(L)\circ U^* = M(2kr \cos (x))\).
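The diagonalization in Theorem 6.2 is a Fourier series argument. Assuming that for c = 0 the operator acts as \(\pi _k(L)e_n = kr(e_{n+1}+e_{n-1})\) (our reading, consistent with the stated eigenvalue \(2kr\cos (x)\)), the vector with entries \(v_n(x) = e^{inx}\) satisfies, entrywise,

```latex
\bigl(\pi_k(L)\,v(x)\bigr)_n = kr\bigl(e^{i(n-1)x} + e^{i(n+1)x}\bigr)
  = kr\bigl(e^{-ix} + e^{ix}\bigr)e^{inx} = 2kr\cos(x)\,e^{inx}.
```

Since \(\{e^{inx}/\sqrt{2\pi } \mid n \in \mathbb {Z}\}\) is an orthonormal basis of \(L^2[0,2\pi ]\), one natural choice is \((Ue_n)(x) = e^{inx}/\sqrt{2\pi }\); the paper's U may differ from this by a conjugation or a phase.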

7 Modification of orthogonality measures

In this section we briefly investigate the orthogonality measures from the previous sections in case the Lax operator L(t) acts as a finite or semi-infinite Jacobi matrix. In these cases the functions \(U_te_n\) are t-dependent orthogonal polynomials and we see that the weight function \(W_t\) of the orthogonality measure for \(U_t e_n\) is a modification of the weight function \(W_0\) in the sense that

where \(K_t\) is independent of x. The modification function m(t) depends on the functions s or r, which (implicitly) depend on the function u. We show how the choice of u affects the modification function m.

Theorem 7.1

There exists a constant K such that

Remark 7.2

In the Toda-lattice case, \(u(t) = r(t)\), this gives back the well-known modification function \(m(t) = e^{Kt}\), see e.g. [13, Theorem 2.8.1].
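For \(\mathfrak {su}(2)\) the Toda case can be checked numerically. The Krawtchouk weight \(\binom{2j}{x}p^x(1-p)^{2j-x}\) depends on x through \((p/(1-p))^x\), so \(W_t(x)/W_0(x)\) equals an x-independent factor times \(\exp (x\,[\log \frac{p(t)}{1-p(t)} - \log \frac{p(0)}{1-p(0)}])\). The sketch assumes \(\dot{s} = 2ur\) and \(\dot{r} = -2us\) with u = r, integrates them with RK4, and checks that the log-odds grow linearly with slope 4C, i.e. \(W_t(x) = K_t\,e^{4Ctx}\,W_0(x)\), an exponential modification as in the remark.

```python
"""su(2), Toda case u = r: the Krawtchouk weight changes by K_t * exp(4 C t x)."""
import math


def rhs(r, s):
    u = r                                  # Toda choice (Remark 7.2)
    return -2 * u * s, 2 * u * r           # (r', s'), assumed su(2) equations


def log_odds(r, s):
    """log(p / (1 - p)) with p = 1/2 + s/(2C) as in Theorem 3.2."""
    C = math.hypot(r, s)
    p = 0.5 + s / (2 * C)
    return math.log(p / (1 - p))


r, s = 0.8, 0.6                            # sample initial values, C = 1
C = math.hypot(r, s)
h = 1e-4
samples = [(0.0, log_odds(r, s))]
for i in range(1, 5001):                   # classical RK4 up to t = 0.5
    k1 = rhs(r, s)
    k2 = rhs(r + h / 2 * k1[0], s + h / 2 * k1[1])
    k3 = rhs(r + h / 2 * k2[0], s + h / 2 * k2[1])
    k4 = rhs(r + h * k3[0], s + h * k3[1])
    r += h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
    s += h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
    if i % 1000 == 0:
        samples.append((i * h, log_odds(r, s)))

# A constant slope 4C means W_t(x) = K_t * exp(4 C t x) * W_0(x).
slopes = [(y1 - y0) / (t1 - t0) for (t0, y0), (t1, y1) in zip(samples, samples[1:])]
print(slopes)
```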

Theorem 7.1 can be checked for each case by a straightforward calculation: we express m as a function of s and r,

where \(A_0\) is a normalizing constant such that \(m(0)=1\). Then differentiating and using the differential equations for r and s we can express \(\dot{m}/ m\) in terms of u and r.
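As a worked instance of this recipe for \(\mathfrak {su}(2)\), assume the Krawtchouk weight \(W_t(x) = \binom{2j}{x}p(t)^x(1-p(t))^{2j-x}\) with \(p = \frac12 + \frac{s}{2C}\), together with \(\dot{s} = 2ur\) and \(\dot{r} = -2us\) (the second forced by the invariant \(r^2+s^2=C^2\)). Then \(W_t(x)/W_0(x)\) depends on x only through \((p/(1-p))^x\), and

```latex
\begin{aligned}
\frac{p}{1-p} &= \frac{C+s}{C-s} = \Bigl(\frac{C+s}{r}\Bigr)^2,\\
\frac{d}{dt}\log\frac{C+s}{r} &= \frac{\dot s}{C+s} - \frac{\dot r}{r}
  = \frac{2ur}{C+s} + \frac{2us}{r}
  = \frac{2u\,(r^2 + s^2 + Cs)}{r\,(C+s)} = \frac{2uC}{r},\\
\frac{W_t(x)}{W_0(x)} &= K_t \exp\Bigl(4Cx\int_0^t \frac{u(\tau)}{r(\tau)}\,d\tau\Bigr).
\end{aligned}
```

For u = r this reduces to \(K_t\,e^{4Ctx}\), an exponential modification as in Remark 7.2.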

7.1 \(\varvec{\mathfrak {su}}(2)\)

From Theorem 3.2 we see that

with \(C = \sqrt{s^2+r^2}\). Differentiating with respect to t and using the relation \(\dot{s}(t) = 2 u(t) r(t)\) then gives

7.2 \(\varvec{\mathfrak {su}}(1,1)\)

For \(s^2-r^2>0\) Theorem 4.3 shows that

Then from \(\dot{s}(t) = -2u(t)r(t)\) and \(\dot{r}(t) = -2u(t)s(t)\) it follows that

where \(C = \sqrt{s^2-r^2}\).

For \(s^2-r^2=0\) Theorem 4.4 shows that

Then using \(\dot{r}(t) = -2u(t)r(t)\), which is \(\dot{r}(t) = -2u(t)s(t)\) with \(s=r\), it follows that

For \(s^2-r^2<0\) it follows from Theorem 4.5 that

Then from \(\dot{s}(t) = -2u(t)r(t)\) and \(\dot{r}(t) = -2u(t)s(t)\) it follows that

where \(C = \sqrt{r^2-s^2}\).

7.3 \(\varvec{\mathfrak b}(1)\)

For \(c \ne 0\) we see from Theorem 5.2 that

The relation \(\dot{r}(t) = -2c u(t)\) then leads to

For \(c=0\) Theorem 5.3 shows that

Note that \(r=r(t)\) is constant in this case. Then \(\dot{s}(t) = 2ru(t)\) leads to

Remark 7.3

The result from Theorem 7.1 is also valid for the orthogonal functions from Theorems 4.6 and 4.8, i.e. for L(t) acting as a Jacobi operator on \(\ell ^2(\mathbb {Z})\) in the principal unitary series for \(\mathfrak {su}(1,1)\) in the cases \(r^2-s^2 \ne 0\). However, there is no similar modification function in the other cases where L(t) acts as a Jacobi operator on \(\ell ^2(\mathbb {Z})\). Furthermore, the corresponding recurrence relations for the functions on \(\mathbb {Z}\) can be rewritten as recurrence relations for \(2\times 2\) matrix orthogonal polynomials, but in none of these cases is the modification of the weight function of the form in Theorem 7.1.

8 The case of \(\varvec{\mathfrak {sl}}(d+1,\varvec{\mathbb {C}}\,)\)

We generalize the situation of the Lax pair for the finite-dimensional representation of \(\mathfrak {sl}(2,\mathbb {C})\) to the higher rank case of \(\mathfrak {sl}(d+1,\mathbb {C})\). Let \(E_{i,j}\) be the matrix units forming a basis for \(\mathfrak {gl}(d+1,\mathbb {C})\), where we label \(i,j\in \{0,1,\ldots , d\}\). We put \(H_i= E_{i-1,i-1}-E_{i,i}\), \(i\in \{1,\ldots , d\}\); these elements span the Cartan subalgebra of \(\mathfrak {sl}(d+1,\mathbb {C})\).

8.1 The Lax pair

Proposition 8.1

Let

and assume that the functions \(u_i\) and \(r_i\) are non-zero for all i and

then the Lax pair condition \(\dot{L}(t)=[M(t),L(t)]\) is equivalent to

Note that we can write it uniformly

assuming the convention that \(s_0(t)=s_{d+1}(t)=0\), which we adopt for the remainder of this section. The Toda case follows by taking \(u_i=r_i\) for all i, see [2, 22].

Proof

The proof essentially follows as in [2, Sect. 4.6], but since the situation is slightly more general we present the proof, see also [22, Sect. 5]. A calculation in \(\mathfrak {sl}(d+1,\mathbb {C})\) gives

and the last term needs to vanish, since this term occurs neither in L(t) nor in its derivative \(\dot{L}(t)\). Now the stated coupled differential equations correspond to \(\dot{L}=[M,L]\). \(\square \)

Remark 8.2

Taking the representation of the Lax pair for the \(\mathfrak {su}(2)\) case in the \((d+1)\)-dimensional representation as in Sect. 3, we get, with \(d=2j\), as an example

Then the coupled differential equations of Proposition 8.1 are equivalent to (3.1).

Let \(\{e_n\}_{n=0}^d\) be the standard orthonormal basis for \(\mathbb {C}^{d+1}\), the natural representation of \(\mathfrak {sl}(d+1,\mathbb {C})\). Then L(t) is a t-dependent tridiagonal matrix. Moreover, we assume that \(r_i\) and \(s_i\) are real-valued functions for all i, so that L(t) is self-adjoint in the natural representation.

Lemma 8.3

Assume that the conditions of Proposition 8.1 hold. Let the polynomials \(p_n(\cdot ;t)\) of degree \(n \in \{0,1,\ldots , d\}\) in \(\lambda \) be generated by the initial value \(p_0(\lambda ;t)=1\) and the recursion

Let the set \(\{ \lambda _0, \ldots , \lambda _d\}\) be the zeroes of

In the natural representation L(t) has simple spectrum \(\sigma (L(t))= \{ \lambda _0, \ldots , \lambda _d\}\) which is independent of t, and \(\sum _{r=0}^d \lambda _r=0\) and

Note that with the choice of Remark 8.2, the polynomials in Lemma 8.3 are Krawtchouk polynomials, see Theorem 3.2. Explicitly,

where \(C=\sqrt{r^2(t)+s^2(t)}\) is invariant, see Theorem 3.2 and its proof.

Proof

In the natural representation we have

as a Jacobi operator with non-zero off-diagonal entries \(r_i(t)\). So the spectrum of L(t) is simple, and it is time independent, since (L(t), M(t)) is a Lax pair. We can generate the corresponding eigenvectors as \(\sum _{n=0}^d p_n(\lambda ;t) e_n\), where the recursion follows from this expression for L(t). The eigenvalues are then determined by the final equation, and since \(\mathrm {Tr}(L(t))=0\) we have \(\sum _{i=0}^d \lambda _i=0\). \(\square \)
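Lemma 8.3 and the orthogonality of Q(t) can be checked numerically at a fixed time. The sketch assumes the natural-representation form \(L e_n = r_{n+1}e_{n+1} + (s_{n+1}-s_n)e_n + r_ne_{n-1}\) with \(r_0=r_{d+1}=0\) and \(s_0=s_{d+1}=0\), which is consistent with \(L = \sum _i s_iH_i + \ldots \) for \(H_i = E_{i-1,i-1}-E_{i,i}\) and with the decomposition \(DS + D_0 + S^*D\) in the proof of Lemma 8.4, but is an assumption here. The eigenvalues are located by Sturm-sequence bisection (a standard method, our choice), the \(p_n(\lambda _j)\) come from the recursion, and \(w_j = 1/\sum _n p_n(\lambda _j)^2\) makes the columns of Q unit vectors. The checks are \(\sum _j\lambda _j = 0\), the final equation of the recursion, and \(QQ^T = I\) (the dual orthogonality).

```python
"""Lemma 8.3 numerically, for an assumed form of L(t) in the natural representation:
    L e_n = r_{n+1} e_{n+1} + (s_{n+1} - s_n) e_n + r_n e_{n-1},
with r_0 = r_{d+1} = 0 and s_0 = s_{d+1} = 0 (so L is traceless)."""
import math


def lax_natural(r, s):
    """Diagonal (d_0..d_d) and off-diagonal (r_1..r_d) of L; r, s have length d."""
    s_ext = [0.0] + list(s) + [0.0]
    diag = [s_ext[n + 1] - s_ext[n] for n in range(len(r) + 1)]
    return diag, list(r)


def count_below(diag, off, x):
    """Sturm count: number of eigenvalues < x, from the LDL^T pivots of L - x I."""
    count, q = 0, 1.0
    for n, dn in enumerate(diag):
        q = dn - x - (off[n - 1] ** 2 / q if n > 0 else 0.0)
        if q == 0.0:
            q = 1e-300                     # avoid division by zero in the next pivot
        if q < 0:
            count += 1
    return count


def eigenvalues(diag, off, iters=100):
    """All eigenvalues, in increasing order, by bisection in the Gershgorin interval."""
    ext = [0.0] + list(off) + [0.0]
    lo0 = min(dn - abs(ext[n]) - abs(ext[n + 1]) for n, dn in enumerate(diag)) - 1.0
    hi0 = max(dn + abs(ext[n]) + abs(ext[n + 1]) for n, dn in enumerate(diag)) + 1.0
    eigs = []
    for k in range(len(diag)):
        lo, hi = lo0, hi0
        for _ in range(iters):
            mid = 0.5 * (lo + hi)
            if count_below(diag, off, mid) > k:
                hi = mid
            else:
                lo = mid
        eigs.append(0.5 * (lo + hi))
    return eigs


def p_values(lam, diag, off):
    """p_0(lam), ..., p_d(lam) from lam p_n = r_{n+1} p_{n+1} + d_n p_n + r_n p_{n-1}."""
    p = [1.0]
    for n in range(len(diag) - 1):
        prev = off[n - 1] * p[n - 1] if n > 0 else 0.0
        p.append(((lam - diag[n]) * p[n] - prev) / off[n])
    return p


r = [0.7, 1.1, 0.4]                         # sample r_1..r_d > 0, here d = 3
s = [0.5, -0.3, 0.9]                        # sample s_1..s_d
diag, off = lax_natural(r, s)
lams = eigenvalues(diag, off)
P = [p_values(lam, diag, off) for lam in lams]            # P[j][i] = p_i(lambda_j)
w = [1.0 / sum(v * v for v in col) for col in P]           # normalizes the columns of Q
size = len(diag)
Q = [[P[j][i] * math.sqrt(w[j]) for j in range(size)] for i in range(size)]

# Final equation of the recursion: lam p_d = d_d p_d + r_d p_{d-1}
final_err = max(abs((lam - diag[-1]) * p[-1] - off[-1] * p[-2]) for lam, p in zip(lams, P))
# Q Q^T = I is the dual orthogonality sum_j p_m(lam_j) p_n(lam_j) w_j = delta_{mn}
dual_err = max(abs(sum(Q[m][j] * Q[n][j] for j in range(size)) - (m == n))
               for m in range(size) for n in range(size))
print(lams, abs(sum(lams)), final_err, dual_err)
```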

Let \(P(t) = \bigl (p_i(\lambda _j;t)\bigr )_{i,j=0}^d\) be the corresponding matrix of eigenvectors, so that

Since L(t) is self-adjoint in the natural representation, we find

and the matrix \(Q(t) = \bigl (p_i(\lambda _j;t)\sqrt{w_j(t)} \bigr )_{i,j=0}^d\) is unitary. As \(r_i\) and \(s_i\) are real-valued and the eigenvalues of the self-adjoint operator L(t) are real, we have \(\overline{p_n(\lambda _s;t)} = p_n(\lambda _s;t)\), so that Q(t) is a real matrix, hence orthogonal. We will assume moreover that the \(r_i\) are positive functions. Since Q(t) is orthogonal, the dual orthogonality relations to (8.2) hold as well:

Note that the \(w_r(t)\) are essentially time-dependent Christoffel numbers [26, Sect. 3.4]. By [22, Sect. 2], see also [6, Thm. 2], the eigenvalues and the \(w_r(t)\)’s determine the operator L(t), and in case of the Toda lattice, i.e. \(u_i(t) = r_i(t)\), the time evolution corresponds to linear first order differential equations for the Christoffel numbers [22, §3].
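Both the orthogonality of Q(t) and the statement that the eigenvalues together with the \(w_r(t)\) determine L(t) can be checked numerically at a fixed time. Unitarity of Q(t) forces \(w_j = 1/\sum_n p_n(\lambda_j;t)^2\). In the sketch, L is then rebuilt from \((\lambda_j, w_j)\) by the Lanczos algorithm with full reorthogonalisation. Lanczos is a standard method for this inverse problem that we substitute here; it is not claimed to be the method of [22] or [6]. The random test matrix and all function names are hypothetical.

```python
import numpy as np


def poly_values(x, b, a):
    """p_0(x), ..., p_d(x) from the rows of L v = x v, with p_0 = 1."""
    d = len(b) - 1
    p = np.zeros(d + 1)
    p[0] = 1.0
    for n in range(d):
        prev = a[n - 1] * p[n - 1] if n > 0 else 0.0
        p[n + 1] = ((x - b[n]) * p[n] - prev) / a[n]
    return p


def lanczos_jacobi(lam, w):
    """Jacobi matrix with positive off-diagonal whose spectral measure at e_0
    is sum_j w_j delta_{lam_j}; assumes sum(w) == 1 and distinct lam."""
    m = len(lam)
    A = np.diag(lam)
    V = np.zeros((m, m))
    V[:, 0] = np.sqrt(w)
    alpha, beta = np.zeros(m), np.zeros(m - 1)
    for k in range(m):
        z = A @ V[:, k]
        alpha[k] = V[:, k] @ z
        z -= V[:, : k + 1] @ (V[:, : k + 1].T @ z)   # full reorthogonalisation
        if k < m - 1:
            beta[k] = np.linalg.norm(z)
            V[:, k + 1] = z / beta[k]
    return np.diag(alpha) + np.diag(beta, 1) + np.diag(beta, -1)


rng = np.random.default_rng(1)
d = 4
a = rng.uniform(0.5, 1.5, size=d)
b = rng.normal(size=d + 1)
b -= b.mean()
L = np.diag(b) + np.diag(a, 1) + np.diag(a, -1)
lam = np.linalg.eigvalsh(L)

P = np.column_stack([poly_values(x, b, a) for x in lam])  # P[i, j] = p_i(lam_j)
w = 1.0 / np.sum(P**2, axis=0)                          # forced by unitarity of Q
Q = P * np.sqrt(w)                                      # Q[i, j] = p_i(lam_j) sqrt(w_j)

L_rebuilt = lanczos_jacobi(lam, w)
print("max |L - L_rebuilt| =", np.abs(L - L_rebuilt).max())
```

Since \(p_0=1\), the 0-th row of Q is \((\sqrt{w_0},\ldots,\sqrt{w_d})\), so \(\sum_j w_j=1\). The reconstruction works because a Jacobi matrix with positive off-diagonal entries is uniquely determined by its spectral measure \(\sum_j w_j\delta_{\lambda_j}\) at \(e_0\).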

Since the spectrum is time-independent, the invariants for the system of Proposition 8.1 are given by the coefficients of the characteristic polynomial of L(t) in the natural representation. The characteristic polynomial can be computed from the three-term recurrence for the corresponding monic polynomials, see [13, Sect. 2.2] and [22, §2], which is the same computation as the one determining the eigenvalues in Lemma 8.3. For a Lax pair the traces \(\mathrm {Tr}(L(t)^k)\), \(k\in \mathbb {N}\), are invariants, and the invariant for \(k=1\) is trivial since L(t) is traceless. In this way we obtain d invariants, \(\mathrm {Tr}(L(t)^k)\), \(k\in \{2,\ldots , d+1\}\), which by Newton's identities are equivalent to the d non-trivial coefficients of the characteristic polynomial.
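The invariance of \(\mathrm{Tr}(L(t)^k)\) can be observed by integrating a Lax equation numerically. The sketch assumes the Toda case \(u_i=r_i\) and takes M(t) to be the strictly upper triangular part of L(t) minus its strictly lower triangular part. This skew-symmetric choice reproduces Moser's Toda equations, but it may differ in sign convention from the M(t) of Proposition 8.1. The classical Runge–Kutta integrator is our own choice and preserves the invariants only up to discretisation error.

```python
import numpy as np


def toda_M(L):
    """Skew-symmetric Toda matrix: strict upper part of L minus strict lower part."""
    return np.triu(L, 1) - np.tril(L, -1)


def lax_rhs(L):
    """Right hand side of the Lax equation dL/dt = [M, L]."""
    M = toda_M(L)
    return M @ L - L @ M


def rk4(L, h, steps):
    """Classical fourth order Runge-Kutta integration of the Lax equation."""
    for _ in range(steps):
        k1 = lax_rhs(L)
        k2 = lax_rhs(L + 0.5 * h * k1)
        k3 = lax_rhs(L + 0.5 * h * k2)
        k4 = lax_rhs(L + h * k3)
        L = L + (h / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
    return L


def trace_invariants(L):
    """Tr(L^k) for k = 2, ..., d+1, where L is (d+1) x (d+1)."""
    m = L.shape[0]
    return np.array([np.trace(np.linalg.matrix_power(L, k)) for k in range(2, m + 1)])


rng = np.random.default_rng(2)
d = 4
a = rng.uniform(0.5, 1.5, size=d)
b = rng.normal(size=d + 1)
b -= b.mean()
L0 = np.diag(b) + np.diag(a, 1) + np.diag(a, -1)
L1 = rk4(L0, h=1e-3, steps=2000)    # L(t) at t = 2

print("Tr(L^k) at t=0:", trace_invariants(L0))
print("Tr(L^k) at t=2:", trace_invariants(L1))
```

The matrix itself changes along the flow, while it stays symmetric and tridiagonal and its spectrum and power traces stay fixed.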

Lemma 8.4

With the convention that \(r_n\) and \(s_n\) are zero for \(n\notin \{1,\ldots ,d\}\) we have the invariants

Proof

Write \(L(t) = DS + D_0 + S^*D\) with \(D=\mathrm {diag}(r_0(t), r_1(t),\ldots , r_d(t))\), \(S:e_n\mapsto e_{n+1}\) the shift operator and \(S^*:e_n\mapsto e_{n-1}\) its adjoint (with the convention \(e_{-1}=e_{d+1}=0\) and \(r_0(t)=0\)), and \(D_0\) the diagonal part of L(t). Then

and only the terms with the same number of S and \(S^*\) in the expansion contribute to the trace, since the other terms have zero diagonal. The cyclicity of the trace then allows us to collect terms, and we get

and this gives the result, since \((SD_0S^*)_{n,n}= (D_0)_{n-1,n-1}\). \(\square \)
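Carrying out this bookkeeping for \(k=2\) and \(k=3\), with \(\beta_n=(D_0)_{n,n}\), gives \(\mathrm{Tr}(L^2)=\sum_{n=0}^d\beta_n^2+2\sum_{n=1}^d r_n^2\) and \(\mathrm{Tr}(L^3)=\sum_{n=0}^d\beta_n^3+3\sum_{n=1}^d r_n^2(\beta_{n-1}+\beta_n)\). These expressions use the diagonal entries \(\beta_n\) rather than the \(s_n\) of Lemma 8.4, so they are our restatement, not a copy of the lemma. The sketch checks them, together with the decomposition \(L=DS+D_0+S^*D\) and \((SD_0S^*)_{n,n}=(D_0)_{n-1,n-1}\), against direct matrix powers for random data.

```python
import numpy as np

rng = np.random.default_rng(4)
d = 5
r = np.concatenate(([0.0], rng.uniform(0.5, 1.5, size=d)))  # r_0 = 0, then r_1..r_d
beta = rng.normal(size=d + 1)
beta -= beta.mean()                                           # traceless diagonal D_0

D = np.diag(r)
D0 = np.diag(beta)
S = np.eye(d + 1, k=-1)        # shift: S e_n = e_{n+1}, S e_d = 0
L = D @ S + D0 + S.T @ D       # L = D S + D_0 + S^* D

tr2 = np.sum(beta**2) + 2 * np.sum(r[1:] ** 2)
tr3 = np.sum(beta**3) + 3 * np.sum(r[1:] ** 2 * (beta[:-1] + beta[1:]))

print(np.trace(L @ L), tr2)
print(np.trace(L @ L @ L), tr3)
```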

Lemma 8.4 is not used in the sequel; we have included it to indicate the analogue of Corollary 2.3.

We can continue this and find e.g.

8.2 Action of L(t) in representations

We relate the eigenvectors of L(t) in some explicit representations of \(\mathfrak {sl}(d+1)\) to multivariable Krawtchouk polynomials, following Iliev [12].

Let \(N\in \mathbb {N}\), and let \(\mathbb {C}_N[x]=\mathbb {C}_N[x_0,\ldots , x_d]\) be the space of homogeneous polynomials of degree N in \(d+1\) variables. Then \(\mathbb {C}_N[x]\) is an irreducible representation of \(\mathfrak {sl}(d+1)\) and of \(\mathfrak {gl}(d+1)\) via \(E_{i,j} \mapsto x_i \frac{\partial }{\partial x_j}\); it is the highest weight representation with highest weight \(N\omega _1\), \(\omega _1\) being the first fundamental weight for type \(A_d\). The monomial \(x^\rho = x_0^{\rho _0}\cdots x_d^{\rho _d}\), \(|\rho |=\sum _{i=0}^d\rho _i=N\), is an eigenvector of \(H_i\), namely \(H_i\cdot x^\rho = (\rho _{i-1}-\rho _i)x^\rho \). So the monomials form a basis of joint eigenvectors of the Cartan subalgebra spanned by \(H_1,\ldots , H_d\), and each joint eigenspace, i.e. each weight space, is 1-dimensional. The representation \(\mathbb {C}_N[x]\) is unitary for the inner product

and it gives a unitary representation of \(SU(d+1)\) as well.

Then the eigenfunctions of L(t) in \(\mathbb {C}_N[x]\) are \(\tilde{x}^\rho \), where

since Q(t) changes from eigenvectors for the Cartan subalgebra to eigenvectors for the operator L(t), cf. [12, Sect. 3]. It corresponds to the action of \(SU(d+1)\) (and of \(U(d+1)\)) on \(\mathbb {C}_N[x]\). Since Q(t) is unitary, we have

We recall the generating function for the multivariable Krawtchouk polynomials as introduced by Griffiths [9], see [12, §1]:

where \(\rho '= (\rho _1,\ldots , \rho _d) \in \mathbb {N}^d\), and similarly for \(\sigma '\). We consider \(P(\rho ',\sigma ')\) as polynomials in \(\sigma '\in \mathbb {N}^d\) of degree \(\rho '\) depending on \(U=(u_{i,j})_{i,j=1}^d\), see [12, §1].

Lemma 8.5

The eigenvectors of L(t) in \(\mathbb {C}_N[x]\) are

for \(u_{i,j} = \frac{Q(t)_{j,i}}{Q(t)_{0,i}}= p_j(\lambda _i;t)\), \(1 \le i,j\le d\) in (8.5), and \(L(t) \tilde{x}^\rho = (\sum _{i=0}^d \lambda _i \rho _i )\tilde{x}^\rho \). The eigenvalue follows from the conjugation with the diagonal element \(\Lambda \).

From now on we assume this value for \(u_{i,j}, 1 \le i,j \le d\). Explicit expressions for \(P(\sigma ',\rho ')\) in terms of Gelfand hypergeometric series are due to Mizukawa and Tanaka [21], see [12, (1.3)]. See also Iliev [12] for an overview of special and related cases of the multivariable Krawtchouk polynomials.

Proof

Observe that

and \(Q(t)_{0,i}= \sqrt{w_i(t)}\) is non-zero. Expanding \(\tilde{x}^\rho \) using (8.5) and \(Q(t)_{i,j} = p_i(\lambda _j;t) \sqrt{w_j(t)}\) now gives the result. \(\square \)
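The eigenvalue statement of Lemma 8.5 rests on two facts: the linear forms \(\tilde{x}_i=\sum_j Q(t)_{j,i}x_j\) are eigenvectors of L(t) in \(\mathbb{C}_1[x]\), and \(E_{i,j}\mapsto x_i\partial/\partial x_j\) acts by derivations. The sketch assumes \(\tilde{x}^\rho=\prod_i\tilde{x}_i^{\rho_i}\) with this \(\tilde{x}_i\), which is how we read the change of basis by Q(t). It checks \(L(t)\tilde{x}^\rho=(\sum_i\lambda_i\rho_i)\tilde{x}^\rho\) for all \(|\rho|=N\), storing polynomials as dictionaries from exponent tuples to coefficients. The helper names and the random test matrix are hypothetical.

```python
import itertools

import numpy as np


def poly_mul(f, g):
    """Product of polynomials stored as {exponent tuple: coefficient}."""
    h = {}
    for ef, cf in f.items():
        for eg, cg in g.items():
            e = tuple(i + j for i, j in zip(ef, eg))
            h[e] = h.get(e, 0.0) + cf * cg
    return h


def act(A, f):
    """Action of the matrix A = sum A[i, j] E_{i,j} via E_{i,j} -> x_i d/dx_j."""
    m = A.shape[0]
    h = {}
    for e, c in f.items():
        for j in range(m):
            if e[j] == 0:
                continue
            for i in range(m):
                ne = list(e)
                ne[j] -= 1
                ne[i] += 1
                key = tuple(ne)
                h[key] = h.get(key, 0.0) + A[i, j] * e[j] * c
    return h


def tilde_monomial(Q, rho):
    """prod_i (sum_j Q[j, i] x_j) ** rho[i]."""
    m = Q.shape[0]
    f = {(0,) * m: 1.0}
    for i, power in enumerate(rho):
        linear = {tuple(int(k == j) for k in range(m)): Q[j, i] for j in range(m)}
        for _ in range(power):
            f = poly_mul(f, linear)
    return f


rng = np.random.default_rng(5)
d, N = 2, 3
a = rng.uniform(0.5, 1.5, size=d)
b = rng.normal(size=d + 1)
b -= b.mean()
L = np.diag(b) + np.diag(a, 1) + np.diag(a, -1)
lam, Q = np.linalg.eigh(L)
Q = Q * np.sign(Q[0])            # make Q[0, j] = sqrt(w_j) > 0

residuals = []
for rho in itertools.product(range(N + 1), repeat=d + 1):
    if sum(rho) != N:
        continue
    f = tilde_monomial(Q, rho)
    g = act(L, f)
    mu = float(np.dot(lam, rho))
    keys = set(f) | set(g)
    residuals.append(max(abs(g.get(k, 0.0) - mu * f.get(k, 0.0)) for k in keys))
print("max residual:", max(residuals))
```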

By the orthogonality (8.4) of the eigenvectors of L(t) we find

where we use that all entries of Q(t) are real. The second orthogonality relation follows by duality, and these relations correspond to [12, Cor. 5.3].

In the case \(N=1\) we find \(P(f_i',f_j')= p_i(\lambda _j;t)\), where \(f_i=(0,\ldots , 0, 1,0,\ldots , 0)\in \mathbb {N}^{d+1}\) with the 1 on the i-th spot.

Lemma 8.6

For all \(\rho , \tau \in \mathbb {N}^{d+1}\) with \(|\rho |=|\tau |\) we have for the P from Lemma 8.5 the recurrence

Note that Lemma 8.6 does not follow from [12, Theorem 6.1].

Proof

Apply Lemma 8.5 to expand \(\tilde{x}^\rho \) in \(L(t)\tilde{x}^\rho = (\sum _{i=0}^d \lambda _i\rho _i) \tilde{x}^\rho \), and use the explicit expression of L(t) and the corresponding action. Compare the coefficient of \(x^\tau \) on both sides to obtain the result. \(\square \)

Remark 8.7

In the context of Remark 8.2 and (8.1) we have that the \(u_{i,j}\) are Krawtchouk polynomials. Then the left hand side in (8.5) is related to the generating function for the Krawtchouk polynomials, see [16, (9.11.11)], i.e. the case \(d=1\) of (8.5). Putting \(z_j = (\frac{p}{1-p})^{-\frac{1}{2} j} \left( {\begin{array}{c}d\\ j\end{array}}\right) ^{\frac{1}{2}}w^j\), we see that in this situation \(\sum _{j=0}^d u_{i,j} z_j\) corresponds to \((1+w)^{d-i} (1- \frac{1-p(t)}{p(t)}w)^i\). Using this in the generating function, the left hand side of (8.5) gives a generating function for Krawtchouk polynomials. Comparing the powers of \(w^k\) on both sides gives

The left hand side is, up to a normalization, the overlap coefficient of L(t) in the \(\mathfrak {sl}(2,\mathbb {C})\) case for the representation of dimension \(Nd+1\), see Sect. 3. Indeed, the composition \(\mathfrak {sl}(2,\mathbb {C}) \to \mathfrak {sl}(d+1,\mathbb {C}) \to \text {End}(\mathbb {C}_N[x])\) yields a reducible representation of \(\mathfrak {sl}(2,\mathbb {C})\), and the vector \(x^{(0,\ldots , 0,N)}\) is a highest weight vector of \(\mathfrak {sl}(2,\mathbb {C})\) for the highest weight dN. Restricting to the irreducible subrepresentation generated by this vector then gives the above connection.
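Remark 8.7 relies on the generating function [16, (9.11.11)] for the Krawtchouk polynomials \(K_n(x;p,N)={}_2F_1(-n,-x;-N;1/p)\), namely \((1-\frac{1-p}{p}w)^x(1+w)^{N-x}=\sum_{n=0}^N\binom{N}{n}K_n(x;p,N)w^n\) for \(x\in\{0,\ldots,N\}\). The sketch checks this identity in exact rational arithmetic by comparing the coefficients of \(w^n\) on both sides. The parameter values are arbitrary.

```python
from fractions import Fraction
from math import comb, factorial


def poch(a, k):
    """Pochhammer symbol (a)_k = a (a+1) ... (a+k-1)."""
    result = Fraction(1)
    for i in range(k):
        result *= a + i
    return result


def krawtchouk(n, x, p, N):
    """K_n(x; p, N) = 2F1(-n, -x; -N; 1/p) as a terminating sum."""
    return sum(
        poch(-n, k) * poch(-x, k) / (poch(-N, k) * factorial(k)) * (1 / p) ** k
        for k in range(min(n, x) + 1)
    )


def generating_coeff(n, x, p, N):
    """Coefficient of w^n in (1 - (1-p)/p * w)^x (1 + w)^(N-x)."""
    c = -(1 - p) / p
    return sum(comb(x, j) * c**j * comb(N - x, n - j) for j in range(min(n, x) + 1))


p = Fraction(1, 3)
N = 5
for x in range(N + 1):
    row = [comb(N, n) * krawtchouk(n, x, p, N) for n in range(N + 1)]
    assert row == [generating_coeff(n, x, p, N) for n in range(N + 1)]
print("generating function verified for N =", N)
```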

8.3 t-Dependence of multivariable Krawtchouk polynomials

Let \(L(t) v(t) = \lambda v(t)\). Taking the t-derivative gives \(\dot{L}(t)v(t) + L(t)\dot{v}(t) =\lambda \dot{v}(t)\), since \(\lambda \) is independent of t, and using the Lax equation \(\dot{L}=[M,L]\) we obtain \(\bigl (L(t)-\lambda \bigr )\bigl (\dot{v}(t)-M(t)v(t)\bigr )=0\). Since L(t) has simple spectrum, \(\dot{v}(t)-M(t)v(t)\) is a multiple of v(t), i.e. \(\dot{v}(t)=M(t)v(t)+c\,v(t)\) for some constant c depending on the eigenvalue \(\lambda \) and t. Note that this differs from [24, Lemma 2].

For the case \(N=1\) we get

with the convention that \(u_0(t)=u_{d+1}(t)=0\), \(p_{-1}(\lambda _r;t)=0\). So

and comparing the coefficients of \(x_0\), using that \(p_0(\lambda _r;t)=1\) is independent of t, we find \(c(t,\lambda _r) = - p_1(\lambda _r;t)u_1(t)\). So we have obtained the following proposition.

Proposition 8.8

The polynomials satisfy

for all eigenvalues \(\lambda _r\) of L(t), \(r\in \{0,\ldots , d\}\).
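Proposition 8.8 can be tested by finite differences in the Toda case. As an illustration only, the sketch assumes the skew-symmetric choice M(t) = (strict upper part of L(t)) minus (strict lower part of L(t)). It normalises the eigenvectors so that their 0-th entry is \(p_0=1\) and compares a central difference quotient of v(t) with \(M(t)v(t)+c\,v(t)\), where c is fixed by requiring the 0-th entry to stay constant. With this M one gets \(c=-r_1(t)p_1(\lambda;t)\), in line with \(c(t,\lambda_r)=-p_1(\lambda_r;t)u_1(t)\) for \(u_1=r_1\). The random test matrix and helper names are hypothetical.

```python
import numpy as np


def toda_M(L):
    """Assumed Toda choice: strict upper part of L minus strict lower part."""
    return np.triu(L, 1) - np.tril(L, -1)


def lax_rhs(L):
    """dL/dt = [M, L]."""
    M = toda_M(L)
    return M @ L - L @ M


def rk4_step(L, h):
    """One classical Runge-Kutta step for the Lax equation (h < 0 goes backwards)."""
    k1 = lax_rhs(L)
    k2 = lax_rhs(L + 0.5 * h * k1)
    k3 = lax_rhs(L + 0.5 * h * k2)
    k4 = lax_rhs(L + h * k3)
    return L + (h / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)


def normalised_eigvecs(L):
    """Eigenvectors as columns (ascending eigenvalues), scaled so that entry 0 is 1."""
    _, U = np.linalg.eigh(L)
    return U / U[0, :]


rng = np.random.default_rng(3)
d = 3
a = rng.uniform(0.5, 1.5, size=d)
b = rng.normal(size=d + 1)
b -= b.mean()
L0 = np.diag(b) + np.diag(a, 1) + np.diag(a, -1)

h = 1e-5
V0 = normalised_eigvecs(L0)
Vdot = (normalised_eigvecs(rk4_step(L0, h)) - normalised_eigvecs(rk4_step(L0, -h))) / (2 * h)

MV = toda_M(L0) @ V0
c = -MV[0, :]                 # forced by the 0-th entry p_0 = 1 being constant in t
predicted = MV + V0 * c       # column r: M v_r + c_r v_r
print("max deviation:", np.abs(Vdot - predicted).max())
```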

Note that for \(0 \le n<d\) we have

as a polynomial identity. Indeed, for \(n=0\) this is trivially satisfied. For \(1\le n<d\) the right hand side is a polynomial of degree n, and not of degree \(n+1\), since the coefficient of \(\lambda ^{n+1}\) vanishes because of the relation on \(u_i\) and \(r_i\) in Proposition 8.1. So this is an identity between polynomials of degree at most \(n<d+1\) which holds at the \(d+1\) distinct eigenvalues \(\lambda _0,\ldots ,\lambda _d\), and hence it is a polynomial identity.

For the Krawtchouk polynomials of Remark 8.2, writing out this identity and simplifying gives

where the left hand side is related to the derivative. Note that the derivative of p(t) cancels against factors of u(t), see Theorem 3.2 and its proof and Sect. 7.

In order to obtain a similar expression for the multivariable t-dependent Krawtchouk polynomials we need to assume that the spectrum of L(t) acting in \(\mathbb {C}_N[x]\) is simple, i.e. we assume that for \(\rho ,\tilde{\rho } \in \mathbb {N}^{d+1}\) with \(|\rho |=|\tilde{\rho }|\) we have that \(\sum _{i=0}^d \lambda _i(\rho _i-\tilde{\rho }_i)=0\) implies \(\rho =\tilde{\rho }\). Assuming this we calculate, using Proposition 8.1,

using the notation \(W_\rho (t) = \prod _{i=0}^d w_i(t)^{\frac{1}{2} \rho _i}\) and \(f_i=(0,\ldots ,0,1, 0,\ldots , 0)\in \mathbb {N}^{d+1}\), with the 1 at the i-th spot. Now the t-derivative of \(\tilde{x}^\rho \) is

and it remains to determine the constant C in \(M(t) \tilde{x}^\rho - C\tilde{x}^\rho = \frac{\partial }{\partial t}\tilde{x}^\rho \). We determine C by looking at the coefficient of \(x_0^N\) using \(P(0, \rho ')= P((N,0,\ldots ,0)',\rho ')=1\). This gives \(C= N u_1(t)W_\rho (t)^{-1} - \frac{\partial }{\partial t} \ln W_\rho (t)\). Comparing the coefficients of \(x^\tau \) on both sides gives the following result.

Theorem 8.9

Assume that L(t) acting in \(\mathbb {C}_N[x]\) has simple spectrum. The t-derivative of the multivariable Krawtchouk polynomials satisfies

for all \(\rho ,\tau \in \mathbb {N}^{d+1}\), \(|\tau |=|\rho |=N\).
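The simplicity hypothesis of Theorem 8.9 concerns only the numbers \(\sum_i\lambda_i\rho_i\), \(|\rho|=N\), so it can be checked by enumerating the weights \(\rho\). The eigenvalue vectors in the sketch are hypothetical examples. For equally spaced eigenvalues, which is what we expect from the \(\mathfrak{sl}(2,\mathbb{C})\) setting of Remark 8.2, the hypothesis already fails for \(d=2\), \(N=2\): the weights \(\rho=(1,0,1)\) and \(\rho=(0,2,0)\) give the same eigenvalue.

```python
import itertools

import numpy as np


def weights(N, m):
    """All rho in N^m with |rho| = N."""
    return [rho for rho in itertools.product(range(N + 1), repeat=m) if sum(rho) == N]


def has_simple_spectrum(lam, N, tol=1e-9):
    """True if the numbers sum_i lam_i rho_i, |rho| = N, are pairwise distinct."""
    values = sorted(float(np.dot(lam, rho)) for rho in weights(N, len(lam)))
    return all(hi - lo > tol for lo, hi in zip(values, values[1:]))


generic = np.array([-1.3, 0.2, 1.1])        # traceless, not equally spaced
equally_spaced = np.array([-1.0, 0.0, 1.0])
for N in (1, 2, 3):
    print(N, has_simple_spectrum(generic, N), has_simple_spectrum(equally_spaced, N))
```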

References

Andrews, G.E., Askey, R., Roy, R.: Special Functions, Encycl. Math. Appl., vol. 71. Cambridge Univ. Press (1999)

Babelon, O., Bernard, D., Talon, M.: Introduction to Classical Integrable Systems. Cambridge Univ. Press, Cambridge (2003)

Berezanskiĭ, Ju.M.: Expansions in Eigenfunctions of Selfadjoint Operators, Translations of Math. Monographs, vol. 17. AMS (1968)

Bruschi, M., Manakov, S.V., Ragnisco, O., Levi, D.: The nonabelian Toda lattice-discrete analogue of the matrix Schrödinger spectral problem. J. Math. Phys. 21, 2749–2753 (1980)

Crampé, N., van de Vijver, W., Vinet, L.: Racah problems for the oscillator algebra, the Lie algebra \(\mathfrak{sl}_n\), and multivariate Krawtchouk polynomials. Ann. Henri Poincaré 21, 3939–3971 (2020)

Deift, P., Nanda, T., Tomei, C.: Ordinary differential equations and the symmetric eigenvalue problem. SIAM J. Numer. Anal. 20, 1–22 (1983)

Gekhtman, M.: Hamiltonian structure of non-abelian Toda lattice. Lett. Math. Phys. 46, 189–205 (1998)

Genest, V.X., Vinet, L., Zhedanov, A.: The multivariate Krawtchouk polynomials as matrix elements of the rotation group representations on oscillator states. J. Phys. A 46, 505203 (2013)

Griffiths, R.C.: Orthogonal polynomials on the multinomial distribution. Aust. J. Statist. 13, 27–35 (1971)

Groenevelt, W.: Laguerre functions and representations of \(su(1,1)\). Indag. Math. (N.S.) 14, 329–352 (2003)

Groenevelt, W., Koelink, E.: Meixner functions and polynomials related to Lie algebra representations. J. Phys. A 35, 65–85 (2002)

Iliev, P.: A Lie-theoretic interpretation of multivariate hypergeometric polynomials. Compos. Math. 148, 991–1002 (2012)

Ismail, M.E.H.: Classical and Quantum Orthogonal Polynomials in One Variable, Encycl. Math. Appl., vol. 98. Cambridge Univ. Press (2005)

Ismail, M.E.H., Koelink, E., Román, P.: Matrix valued Hermite polynomials, Burchnall formulas and non-abelian Toda lattice. Adv. Appl. Math. 110, 235–269 (2019)

Kametaka, Y.: On the Euler–Poisson–Darboux equation and the Toda equation. I, II. Proc. Jpn. Acad. Ser. A 60, 145–148, 181–184 (1984)

Koekoek, R., Lesky, P.A., Swarttouw, R.: Hypergeometric Orthogonal Polynomials and Their q-analogues, Springer Monographs in Math. Springer (2010)

Koelink, E.: Spectral theory and special functions. In: Álvarez-Nodarse, R., Marcellán, F., Van Assche, W. (eds.) Laredo Lectures on Orthogonal Polynomials and Special Functions, Adv. Theory Spec. Funct. Orthogonal Polynomials, pp. 45–84. Nova Sci. Publ. (2004)

Koelink, E.: Applications of spectral theory to special functions. In: Cohl, H.S., Ismail, M.E.H. (eds.) Lectures on Orthogonal Polynomials and Special Functions, Lecture Notes of the London Math. Soc., vol. 464, pp. 131–212. Cambridge Univ. Press (2021)

Koelink, H.T., Van Der Jeugt, J.: Convolutions for orthogonal polynomials from Lie and quantum algebra representations. SIAM J. Math. Anal. 29, 794–822 (1998)

Miller Jr., W.: Lie Theory and Special Functions, Math. in Science and Engineering, vol. 43. Academic Press (1968)

Mizukawa, H., Tanaka, H.: \((n+1, m+1)\)-hypergeometric functions associated to character algebras. Proc. Am. Math. Soc. 132, 2613–2618 (2004)

Moser, J.: Finitely many mass points on the line under the influence of an exponential potential—an integrable system, pp. 467–497. In: Moser, J. (ed.) Dynamical Systems, Theory and Applications, Lecture Notes in Phys, vol. 38. Springer (1975)

Okamoto, K.: Sur les échelles associées aux fonctions spéciales et l’équation de Toda. J. Fac. Sci. Univ. Tokyo Sect. IA Math. 34, 709–740 (1987)

Peherstorfer, F.: On Toda lattices and orthogonal polynomials. J. Comput. Appl. Math. 133, 519–534 (2001)

Slater, L.J.: Confluent Hypergeometric Functions. Cambridge Univ. Press, Cambridge (1960)

Szegő, G.: Orthogonal Polynomials, 4th edn., Colloquium Publ., vol. 23. AMS (1975)

Teschl, G.: Almost everything you always wanted to know about the Toda equation. Jahresber. Deutsch. Math.-Verein. 103, 149–162 (2001)

Watson, G.N.: A Treatise on the Theory of Bessel Functions. Cambridge Univ. Press, Cambridge (1944)

Zhedanov, A.S.: Toda lattice: solutions with dynamical symmetry and classical orthogonal polynomials. Theor. Math. Phys. 82, 6–11 (1990)

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Dedicated to the memory of Richard Askey.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Groenevelt, W., Koelink, E. Orthogonal functions related to Lax pairs in Lie algebras. Ramanujan J 61, 445–474 (2023). https://doi.org/10.1007/s11139-021-00424-9
