Abstract

We consider the Vlasov–Poisson system with repulsive interactions. For initial data a small, radial, absolutely continuous perturbation of a point charge, we show that the solution is global and disperses to infinity via a modified scattering along trajectories of the linearized flow. This is done by an exact integration of the linearized equation, followed by the analysis of the perturbed Hamiltonian equation in action-angle coordinates.


1 Vlasov–Poisson Near a Point Charge

This article is devoted to the study of the time evolution and asymptotic behavior of a three dimensional gas of charged particles (a plasma) that interact with a point charge. Under suitable assumptions this system can be described via a measure M on \({\mathbb {R}}^3_{x}\times {\mathbb {R}}^3_v\) that is transported by the long-range electrostatic force field created by the charge distribution, resulting in the Vlasov–Poisson system

Since this equation is rotationally invariant, the Dirac mass \(M_{eq}=\delta =\delta _{(0,0)}(x,v)\) is a formal stationary solution and we propose to investigate its stability. We consider initial data of the form \(M=q_c\delta +q_g\mu ^2_0dxdv\), where \(q_c>0\) is the charge of the Dirac mass and \(q_g>0\) is the charge per particle of the gas, which results in purely repulsive interactions. We track the singular and the absolutely continuous parts of a solution as \(M(t)=q_c\delta _{({\bar{x}}(t),{\bar{v}}(t))}+q_g\mu ^2(t)dxdv\), which formally yields the coupled system

where \(\lambda =q_g^2/(\epsilon _0m_g)>0\), \(q=q_cq_g/(2\pi \epsilon _0 m_g)>0\), \({\overline{q}}=q_cq_g/(\epsilon _0 m_c)>0\) are positive constants.

1.1 Main result

Our main result concerns (1.2) with radial initial data, where the point charge is located at the origin. In this case, the densities are considered with respect to the reference measure \(\delta (x\wedge v)\cdot dxdv\) instead of the Lebesgue measure dxdv. For sufficiently small initial charge distributions \(\mu \) we establish the existence and uniqueness of global, strong solutions and we describe their asymptotic behavior as a modified scattering dynamic. While our full result can be most adequately stated in more adapted “action-angle” variables (see Theorem 1.6 below), for the sake of readability we begin here by giving a (weaker, slightly informal) version in standard Cartesian coordinates:

Theorem 1.1

Given any radial initial data \(\mu _0\in C^1_c({\mathbb {R}}^*_+\times {\mathbb {R}})\), there exists \(\varepsilon ^*>0\) such that for any \(0<\varepsilon <\varepsilon ^*\), there exists a unique global strong solution of (1.2) with initial data

Moreover, the electric field decays pointwise and there exists an asymptotic profile \(\mu _\infty \in L^2({\mathbb {R}}^*_+\times {\mathbb {R}})\) and a Lagrangian map \((\mathcal {R},\mathcal {V}):{\mathbb {R}}_+^*\times {\mathbb {R}}\times {\mathbb {R}}\rightarrow {\mathbb {R}}_+^*\times {\mathbb {R}}\) along which the density converges pointwise

Remark 1.2

(1) Our main theorem is in fact much more precise and requires fewer assumptions, but is better stated in adapted “action angle” variables. We refer to Theorem 1.6.

(2) The Lagrangian map can be written in terms of an asymptotic “electric field profile” \(\mathcal {E}_\infty \):

$$\begin{aligned} \begin{aligned} \mathcal {R}(r,\nu ,t)&=t\sqrt{\nu ^2+\frac{q}{r}}-\frac{rq}{2(q+r\nu ^2)}\ln (t)+\lambda \mathcal {E}_\infty (\sqrt{\nu ^2+\frac{q}{r}})\ln (t)+O(1),\\ \mathcal {V}(r,\nu ,t)&=\sqrt{\nu ^2+\frac{q}{r}}-\frac{rq}{2(q+r\nu ^2)}\frac{1}{t}+O(\frac{\ln t}{t^2}). \end{aligned} \end{aligned}$$

The first term corresponds to conservation of the energy along trajectories, the second term comes from a linear correction and the third term on the first line comes from a nonlinear correction to the position. This can be compared with the asymptotic behavior close to vacuum in [21, 31] by setting \(q=0\).

1.1.1 Prior work

In the absence of a point charge, the Vlasov–Poisson system has been extensively studied and we only refer to [2, 15, 25, 33, 35] for references on global wellposedness and dispersion analysis, to [8, 13, 21, 31] for more recent results describing the asymptotic behavior, to [14, 34] for book references and to [3] for a historical review.

The presence of a point charge introduces singular electric fields and significantly complicates the analysis. Nevertheless, global existence and uniqueness of strong solutions when the support of the density is separated from the point charge has been established in [27], see also [5] and references therein, while global existence of weak solutions for more general support was proved in [10] with subsequent improvements in [23, 24, 28]. We also refer to [9] where “Lagrangian solutions” are studied and to [6, 7] for works in the case of attractive interactions. Concentration, creation of a point charge and subsequent lack of uniqueness were studied in a related system for ions in 1d, see [26, 36]. To the best of our knowledge, there are no works concerning the asymptotic behavior of such solutions.

The existence and stability of other equilibriums has been considered for the Vlasov–Poisson system with repulsive interactions, most notably in connection to Landau damping [1, 4, 12, 18, 30]. In the case of Vlasov–Poisson with attractive interactions, there are many more equilibriums and their linear and nonlinear (in)stability have been studied [16, 17, 22, 29, 32], but the analysis of asymptotic stability is very challenging. We also refer to [20] which studies the stability of a Dirac mass in the context of the 2d Euler equation.

1.1.2 Our approach

In previous works on (1.2), the Lagrangian approach allows one to integrate the solutions along characteristics but faces the problem of a singular electric field, while a purely Eulerian method leads to poor control of the solutions, which makes it difficult to study the asymptotic behavior. In this paper, we introduce a different method based on the decomposition of the Hamiltonian to rewrite (1.2) as

where the linearized Hamiltonian \(\mathcal {H}_0\) is given in (2.1) and the nonlinear Hamiltonian \(\mathcal {H}_{pert}\) corresponds to the self-generated electrostatic potential (1.17). In short, our approach combines a Lagrangian analysis of the linearized problem with an Eulerian PDE framework in the nonlinear analysis, all the while respecting the symplectic structure. This amounts to considering solutions as superpositions of measures on each trajectory of the linearized flow instead of measures on the whole phase space.

On a technical level, one faces the two difficulties of a singular transport field and the nonlinearity separately: the singular electric field created by the point charge is present in the linearized equation coming from \(\mathcal {H}_0\), which is integrated exactly. The nonlinearity comes from the perturbed Hamiltonian \(\mathcal {H}_{pert}\), but this leads to a simple nonlinear equation, with a nonlinearity which is smoothing.

More precisely, in a first step we analyze the characteristic equations of the linear problem associated to (1.2). These turn out to be the classical ODEs of the Kepler problem, which can be integrated in adapted “action-angle” coordinates. In these, the geometry of the characteristic curves is straightened and the linear flow is solved explicitly as a linear map. To treat the nonlinear problem, we conjugate by the linear flow and study the resulting unknown in an Eulerian, \(L^2\) based PDE framework, based on energy estimates as in our recent work on the vacuum case [21]. This allows us to propagate the required regularity and moments to obtain a global strong solution. Moreover, we can readily identify the asymptotic dynamic: in a mixing type mechanism, the dependence on the “angles” is eliminated from the asymptotic electrostatic fields, and the scattering of solutions is modified by a field defined in terms of the “actions”.

We remark on some features and context of our techniques.

(1) Since the system (1.2) is Hamiltonian and we solve the linearized system through a canonical change of unknown (i.e. a diffeomorphism respecting the symplectic structure), the nonlinear problem becomes quite simple after conjugation, see (1.16).

(2) The moments we propagate are conserved by the linearized flow, unlike the physical moments in \(\langle r\rangle \), \(\langle v\rangle \). In fact, even in the nonlinear problem it is quite direct to globally propagate moments in action-angle variables, which already gives the existence of global weak solutions.

(3) The asymptotic dynamic is easy to exhibit in action-angle variables through inspection of the formulas for the asymptotic electrostatic fields (see (1.18)).

(4) It is notable that we do not require any separation between the point charge and the continuous distribution \(\mu \), addressing a question raised in [10, p. 376] (see also [27]).

(5) We expect the methods presented here, based on integration of the linearized equation through “action-angle” coordinates, to be broadly applicable, both for local existence of rough solutions and especially for the analysis of long time behavior whenever the linearized equation corresponds to a completely integrable ODE without closed trajectories. This should include a large number of radial problems for plasmas since \(1+1\) Hamiltonian ODEs can be integrated by phase portrait.

(6) The usefulness of action-angle variables for the Vlasov–Poisson equation was already exhibited in [11, 16, 19] where the authors produce a large class of 1d BGK-type waves which are linearly stable.

1.1.3 Remarks on the physical setup

Our primary interest here is to study a gas of ions or electrons interacting with a (similarly) charged particle, subject only to electrostatic forces. In this case, up to rescaling, we may assume that \(\lambda =1\) in (1.2).

Taking into account gravitational effects, we may also consider the more general case of a large charged and massive point particle with mass \(m_c\) and charge \(q_c\) interacting with a gas of small particles with mass-per-particle \(m_g\) and charge-per-particle \(q_g\) subject to both gravitational and electrostatic forces. In this case, our result holds whenever the principal gas-point particle interaction is repulsive, i.e. when (in appropriate physical units)

whereas (due to the small data assumption on the gas at initial time) the gas-gas interaction may be repulsive \((\lambda =1)\) or attractive \((\lambda =-1\)) depending on the sign of \((q_g)^2-(m_g)^2\).

The situation that is beyond the scope of our analysis is the case when the inequality in (1.3) is reversed and some trajectories of the linearized system are closed. Note that in this case, even the local existence theory is incomplete.

1.2 Overview and ideas of the proof

To clarify the passage to the radially symmetric setting, we denote here by \((x,p)\in {\mathbb {R}}^3\times {\mathbb {R}}^3\) the three dimensional phase space variables. We note that in the particular case of radial initial conditions,

the Dirac mass in (1.2) does not move (i.e. \({\bar{x}}(t)={\bar{p}}(t)=0\)) and the continuous particle distribution \(\mu (t)\) of the solution is a radial function. The equations (1.2) reduce to the following system for \(\mu ({x},{p},t)\):

Per a slight abuse of notation with \(r:=\left|x\right|\), \(\varrho (r,t)=\varrho (x,t)\), by radiality the electric field \(E:=-\nabla _{x}\psi \) of the ensemble can be computed as

1.2.1 The “radial” phase space

Since as discussed the equations (1.2) are invariant under spherical symmetry and we will work with spherically symmetric data, it is more convenient to work on the phase space \((r,v)\in {\mathbb {R}}_{+}^*\times {\mathbb {R}}\) (rather than \((x, p)\in {\mathbb {R}}^3\times {\mathbb {R}}^3\)). Note that \(r^2v^2drdv\) is the natural measure corresponding to that of radially symmetric functions on \({\mathbb {R}}^3\times {\mathbb {R}}^3\), and hence we will work with the new density

This is chosen such that the (conserved) mass is the square of the \(L^2\) norm of both \(\varvec{\mu }\) on \({\mathbb {R}}_+^*\times {\mathbb {R}}\) and \(\mu \) on \({\mathbb {R}}^3\times {\mathbb {R}}^3\), i.e. we have

Moreover, the equations for \(\varvec{\mu }\) simply read

This equation is Hamiltonian and can be equivalently written as

which leads to the conservation of energy

1.2.2 The linearized system

In order to study (1.9), we first consider the linearized equation for a function f(r, v, t):

This linear transport equation can be solved directly via its characteristic equations

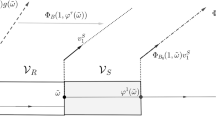

One recognizes here the classical Kepler problem in the radial setting, which can be integrated using generalized “action angle” coordinates \((\theta , a)\) (see also Fig. 1).
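The Kepler-type dynamics can be explored numerically. The following minimal Python sketch assumes that the radial characteristic system takes the form \(\dot r=v\), \(\dot v=q/(2r^2)\). This form is an assumption, chosen because it conserves \(v^2+q/r\) and is consistent with the \(1/t\) velocity correction quoted in Remark 1.2. The function names (`kepler_rhs`, `rk4_step`, `integrate`, `action`) are hypothetical. The sketch integrates a single trajectory with a classical fourth order Runge–Kutta scheme, so that the conservation of \(a=\sqrt{v^2+q/r}\) and the convergence of \(v\) towards \(a\) can be inspected.

```python
import math


def kepler_rhs(r, v, q):
    # Assumed radial characteristic ODE: dr/dt = v, dv/dt = q / (2 r^2).
    return v, q / (2.0 * r * r)


def rk4_step(r, v, q, dt):
    # One classical Runge-Kutta (RK4) step for the assumed ODE.
    k1r, k1v = kepler_rhs(r, v, q)
    k2r, k2v = kepler_rhs(r + 0.5 * dt * k1r, v + 0.5 * dt * k1v, q)
    k3r, k3v = kepler_rhs(r + 0.5 * dt * k2r, v + 0.5 * dt * k2v, q)
    k4r, k4v = kepler_rhs(r + dt * k3r, v + dt * k3v, q)
    r_new = r + dt * (k1r + 2.0 * k2r + 2.0 * k3r + k4r) / 6.0
    v_new = v + dt * (k1v + 2.0 * k2v + 2.0 * k3v + k4v) / 6.0
    return r_new, v_new


def integrate(r0, v0, q, t_final, n_steps):
    # Integrate from time 0 to t_final with a fixed step size.
    dt = t_final / n_steps
    r, v = r0, v0
    for _ in range(n_steps):
        r, v = rk4_step(r, v, q, dt)
    return r, v


def action(r, v, q):
    # Conserved quantity of the assumed ODE: a = sqrt(v^2 + q / r).
    return math.sqrt(v * v + q / r)
```

For instance, an incoming particle with \((r_0,v_0)=(1,-0.5)\) and \(q=1\) first approaches the charge, turns around at \(r_{min}=q/a^2\), and then escapes. Under the stated assumption, its velocity at a large time \(t\) can be compared with \(a-q/(2a^2t)\).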

Lemma 1.3

There exists a canonical transformation to “action-angle” coordinates:

with inverse \((R(\theta ,a),V(\theta ,a))\), such that f solves (1.11) if and only if

solves the free streaming equation

1.2.3 Nonlinear analysis

Since (1.14) can be solved directly, we conjugate by this change of variables to stabilize the linearized system, thus defining \(\gamma \) as follows:

Since the change of variable preserves the symplectic structure, we find that the full nonlinear problem (1.9) is equivalent to

Here the potential can be expressed in action angle coordinates as follows:

Remark 1.4

While the trajectories of the linear equation (2.2) are straight lines in action-angle variables, in physical variables they correspond to an incoming ray followed by an outgoing one traced at varying velocities.

For the nonlinear problem this creates extra challenges, as interactions can occur over vastly disparate spatial scales. As Fig. 2 below illustrates, in some regimes the evolution \(R(\theta +ta,a)\) is not a simple function of \((\theta ,a)\), from which one of the two variables can be recovered once the other is known (see also Lemma 2.5 below).

It remains to study solutions to (1.16). The dispersion mechanism is accounted for through the conjugation with the linearized flow and we hope to show that this picture remains true when we add the remaining nonlinear contribution, i.e. we expect that solutions to (1.16) do not change too much over time. We first use a bootstrap argument to propagate strong norms, which suffices to obtain global existence and decay of the electric field:

Proposition 1.5

There exists \(\varepsilon ^*\) such that for all \(0<\varepsilon _0\le \varepsilon _1\le \delta <\varepsilon ^*\), the following holds. Let \(\gamma \) be a solution to (1.16) with initial data \(\gamma _0\) on \(0\le t\le T\) and assume that for \(0\le t\le T\),

then in fact

This in turn allows us to investigate the asymptotic behavior. It can easily be formally deduced once one observes that, given the bounds propagated by the bootstrap, on the support of the density, one has

so that one expects that \({\widetilde{\Psi }}\) is asymptotically independent of \(\theta \):

As a consequence, (1.16) becomes a perturbation of a shear equation:

which can easily be integrated. This can be made rigorous under appropriate assumptions on the initial data and it leads to our main result.
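As a toy illustration of why a shear equation is easy to integrate, consider the model equation \(\partial _t\gamma +\frac{c}{t}W(a)\partial _\theta \gamma =0\) for \(t\ge 1\). Here the constant \(c\) and the profile \(W\) are hypothetical stand-ins and not the exact field of the paper. Along each line of fixed \(a\) the transport speed depends only on \(t\), so the solution is the initial profile shifted by \(cW(a)\ln t\), which mirrors the logarithmic correction in Remark 1.2. The Python sketch below (with hypothetical names `shear_solution` and `pde_residual`) builds this solution and evaluates the residual of the model equation by central finite differences.

```python
import math


def shear_solution(gamma_init, W, c):
    # Solution of the model equation d_t g + (c / t) W(a) d_theta g = 0, t >= 1,
    # with g(theta, a, 1) = gamma_init(theta, a): a logarithmic shear in theta.
    def gamma(theta, a, t):
        return gamma_init(theta - c * W(a) * math.log(t), a)

    return gamma


def pde_residual(gamma, W, c, theta, a, t, h=1e-5):
    # Central finite-difference residual of the model shear equation.
    d_t = (gamma(theta, a, t + h) - gamma(theta, a, t - h)) / (2.0 * h)
    d_theta = (gamma(theta + h, a, t) - gamma(theta - h, a, t)) / (2.0 * h)
    return d_t + (c / t) * W(a) * d_theta
```

The residual should vanish up to discretization error for any smooth choice of `gamma_init` and `W`.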

Theorem 1.6

There exists \(\varepsilon _0>0\) such that any initial data \(\gamma _0\) satisfying

leads to a unique global solution \(\gamma (t)\in C^1_tL^2_{\theta ,a}\cap C^0_tH^1_{\theta ,a}\) of (1.16) with an electric field \((\partial _\theta {\widetilde{\Psi }},\partial _a{\widetilde{\Psi }})\) which decays in time. In addition, there exists \(\gamma _\infty \in L^2_{\theta ,a}\cap C^0_\theta L^2_a\) such that

where

This implies Theorem 1.1 upon setting \(\mu _\infty (r,\nu ):=\gamma _\infty (\Theta (r,\nu ),\mathcal {A}(r,\nu ))\). The expansion of the asymptotic dynamics in Remark 1.2 is then obtained via our analysis of the action angle variables in Sect. 2.1 below.

We expect that the number of moments in (1.19) can be significantly reduced. It is interesting that the decay of the electric field can be obtained under much weaker assumptions (see Lemma 3.1), but it is unclear to us how much asymptotic information can be recovered in this case.

1.3 Organization of the paper

In Sect. 2, we study the ODE associated to the linearized equation and establish a number of geometric results and bounds on relevant quantities for the nonlinear problem. In Sect. 3, we study the nonlinear equation; we establish the moment bootstrap for weak solutions in Sect. 3.1.1, the derivative bootstrap for strong solutions in Sect. 3.1.2 and prove the modified scattering in Sect. 3.2 by first obtaining a weak-strong limit for scattering data in Sect. 3.2.1 and finally the convergence of the particle density in Sect. 3.2.2.

We emphasize that one can obtain decay of moments for weak solutions in a self-contained way using only the results of Sects. 2.1, 2.2 and 3.1.1.

2 Linearized Equation

The goal of this section is to integrate the linearized problem (2.2) and to prove various estimates for the corresponding transfer functions. Lemma 1.3 follows easily from Lemma 2.1 below.

2.1 Straightening the linear characteristics

The linearization of (1.10) at \(\varvec{\mu }=0\) is the Hamiltonian differential equation associated to

namely

This is now a linear transport equation, which can be integrated easily once we know the trajectories of the corresponding ODE:

2.1.1 Radial trajectories

Since we consider a \(1+1\) Hamiltonian system (2.3), the trajectories can be integrated by phase portrait. Letting \(\mathcal {A}=\sqrt{\mathcal {H}_0}\), we can explicitly integrate the resulting equation

by starting the “clock” \(\theta =0\) at the periapsis (i.e. the point of closest approach): Let

where \(G:(1,\infty )\rightarrow {\mathbb {R}}\) and \(H:{\mathbb {R}}_+\rightarrow (1,\infty )\) satisfy

These functions and related ones are studied in more detail in Sect. 2.1.2. We can now solve the linear problem (2.2) via a canonical change of variable:

Lemma 2.1

The change of variables

in (2.4) defines a canonical diffeomorphism of the phase space (r, v) (with inverse \((R(\theta ,a),V(\theta ,a))\) as in (2.4)), which linearizes the flow in the sense that for the flow map \(\Phi ^t(r,v)\) associated to the Hamiltonian ODEs (2.3) we have

Moreover, we have

Proof

That \((\Theta ,\mathcal {A})\) and (R, V) are inverse can be checked directly once one observes that \(r_{min}\) is consistent: \(r_{min}(\mathcal {A}(r,v))=r_{min}(r,v)\) and \(r_{min}(a)=r_{min}(R(a,\theta ),V(a,\theta ))\). It is direct to check that \(\mathcal {A}\) is conserved along the flow. Moreover,

The first term on the right hand side gives \(\mathcal {A}\), and the second vanishes since when \(v=0\), \(G(r/r_{min})=G(1)=0\), while direct computations show that the bracket in the last term vanishes. In addition, the same computations show that

which shows that the transformation preserves the symplectic form and hence has Jacobian 1. \(\quad \square \)
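The unit Jacobian can be checked numerically in a simple model. The Python sketch below assumes the explicit forms \(\mathcal {A}(r,v)=\sqrt{v^2+q/r}\), \(r_{min}=q/\mathcal {A}^2\) and \(\Theta (r,v)=\mathrm {sign}(v)\,r_{min}\,G(r/r_{min})\) with \(G(s)=\sqrt{s(s-1)}+\ln (\sqrt{s}+\sqrt{s-1})\). These formulas are assumptions that match the standard time-of-flight integral of the Kepler problem and satisfy \(G(1)=0\); they may differ from (2.4) in normalization. The names `to_action_angle` and `jacobian_det` are hypothetical. The determinant of the map \((r,v)\mapsto (\Theta ,\mathcal {A})\) is approximated by central finite differences.

```python
import math


def G(s):
    # Assumed structure function: G(1) = 0 and G'(s) = 1 / sqrt(1 - 1/s) for s > 1.
    return math.sqrt(s * (s - 1.0)) + math.log(math.sqrt(s) + math.sqrt(s - 1.0))


def to_action_angle(r, v, q):
    # Assumed change of variables (r, v) -> (theta, a).
    a = math.sqrt(v * v + q / r)
    r_min = q / (a * a)
    s = 1.0 + r * v * v / q  # equals r / r_min, written so that s >= 1 in floating point
    theta = math.copysign(r_min * G(s), v)
    return theta, a


def jacobian_det(r, v, q, h=1e-5):
    # Central finite-difference approximation of det d(theta, a) / d(r, v).
    th_rp, a_rp = to_action_angle(r + h, v, q)
    th_rm, a_rm = to_action_angle(r - h, v, q)
    th_vp, a_vp = to_action_angle(r, v + h, q)
    th_vm, a_vm = to_action_angle(r, v - h, q)
    dth_dr = (th_rp - th_rm) / (2.0 * h)
    da_dr = (a_rp - a_rm) / (2.0 * h)
    dth_dv = (th_vp - th_vm) / (2.0 * h)
    da_dv = (a_vp - a_vm) / (2.0 * h)
    return dth_dr * da_dv - dth_dv * da_dr
```

Away from \(v=0\), where the finite differences would straddle the sign change, the computed determinant should be close to 1 for both incoming (\(v<0\)) and outgoing (\(v>0\)) points.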

2.1.2 Study of the structure functions

In this subsection, we study the geometric functions that arise from the change of variable in Lemma 2.1. These are independent of assumptions on the solutions (Fig. 3).

Fig. 3. Plots of the functions G and H of Lemma 2.2

Lemma 2.2

The functions G and H are almost linear

In addition, we note the asymptotic behavior of G and its inverse

Proof of Lemma 2.2

Since G can be integrated explicitly, we easily obtain the first line in (2.8) from (2.5), and the second line follows from the fact that \(H=G^{-1}\). Now (2.9) follows by expanding the expression for G. \(\quad \square \)
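The near-linearity of \(G\) and \(H\) can be illustrated numerically. The Python sketch below assumes the closed form \(G(s)=\sqrt{s(s-1)}+\ln (\sqrt{s}+\sqrt{s-1})\), i.e. the antiderivative of \(1/\sqrt{1-1/s}\) vanishing at \(s=1\). This is an assumption that may differ from (2.5) by normalization. The inverse \(H=G^{-1}\) is computed by bisection, using that \(G\) is increasing with \(G(1)=0\) and \(G(1+x)\ge x\). Under this assumption, expanding \(G\) gives \(G(s)=s+\frac{1}{2}\ln s+\ln 2-\frac{1}{2}+O(1/s)\).

```python
import math


def G(s):
    # Assumed closed form: G(1) = 0 and G'(s) = 1 / sqrt(1 - 1/s) for s > 1.
    return math.sqrt(s * (s - 1.0)) + math.log(math.sqrt(s) + math.sqrt(s - 1.0))


def H(x, iterations=100):
    # Inverse of G on [0, infinity) by bisection on the bracket [1, 1 + x].
    lo, hi = 1.0, 1.0 + x
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if G(mid) < x:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def G_asymptotic(s):
    # Leading terms of the large-s expansion of the assumed G.
    return s + 0.5 * math.log(s) + math.log(2.0) - 0.5
```

Comparing `G(s)` with `G_asymptotic(s)` for large `s`, and `H(x)` with `x - 0.5 * math.log(x)`, shows that both functions differ from the identity only by logarithmic terms.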

In addition, we will frequently consider first and second order derivatives.

Lemma 2.3

We have explicit formulas for the first order derivatives

and the formulas for the second order derivatives

so that

Proof of Lemma 2.3

The formulas follow from direct calculations using that

together with Lemma 2.2 to control the behavior of H. \(\quad \square \)

2.1.3 Kinematics of linear trajectories

Here we collect a few estimates on the behavior of the trajectories of (2.3). Using (2.7), we see that the linearized flow is simple in the action-angle variables. For simplicity of notation, given a function \(F(\theta ,a)\) in phase space, we will denote by

its evolution under the linear flow. This is a slight abuse of notation since the transformation depends on time; however all our estimates will be instantaneous so this should not lead to confusion. Since we will show that in action-angle variables, the new density is (almost) stable, we expect that the main role will be played by trajectories starting from the “bulk region” \(\mathcal {B}\) defined by

We start with a few simple observations. By definition, we have a universal lower bound for R,

but in the bulk region, one can be more precise.

Lemma 2.4

We have the following control on \({\widetilde{R}}\):

and

In addition, we have a more precise control in the bulk: when \((\theta ,a)\in \mathcal {B}\), we have that

and in particular, the change of variable \(a\mapsto {\widetilde{R}}(\theta ,a)\) is well behaved.

Proof of Lemma 2.4

The bounds (2.13) and (2.14) follow from Lemma 2.3 and the formulas

Now, in the bulk, we observe that \(ta/2\le \vert \theta +ta\vert \le 2ta\), and \(ta^3\gg 1\) so that by (2.8) we have \(ta/2\le {\widetilde{R}}(\theta +ta,a)\le 2ta\). The other bounds follow directly. \(\quad \square \)

Since the interaction involves quantities defined in the physical space, it will be useful to understand how to relate them to phase space variables (Fig. 4). The next lemma is concerned with solutions of the equation

for fixed t and r.

Fig. 4. The different regions of Lemma 2.5

Lemma 2.5

Let

for some fixed small constant \(c>0\) and define the regions

In the region \(\mathcal {R}_0\), we see that for any \(\theta \), there exists at most one \(a=\aleph (\theta ;r,t)\) solution of (2.15). In addition, we have that

In the region \(\mathcal {R}_j\), \(j\in \{1,2\}\), for each choice of a, there exists exactly one \(\theta =\tau _j(a;r,t)\) solution of (2.15). In addition, we see that

while on \(\mathcal {R}_2\), we see that

Proof

We can rewrite (2.15) as

We start with \(\mathcal {R}_0\) and denote \(a^*=\sqrt{q/r}\), \(\vartheta =\theta +ta\) and \(x=a^2\vert \vartheta \vert /q\) so that we are considering the equation

Now let \(a=a^*\sqrt{1+h^2}\) for some \(h\ge 0\). We have that by (2.9) and Lemma 2.3

From this we see that if h is small enough, \(0\le h\le c\), there exists a unique solution x to \(E_1=0\), and this solution satisfies \(h\le x\le 3h\). In addition, if \(h\le c/(q(a^*)^3t)\), there holds that \(\partial _a{\widetilde{R}}\lesssim -1/(q(a^*)^3)\).

Now in region \(\mathcal {R}_j\), \(j\in \{1,2\}\), we see that

and in particular, using (2.19), we find that

In addition, we have that

and the other bounds in (2.17) follow directly from the definitions.

Finally, the last statement in (2.18) follows from the formula for \(\partial _a{\widetilde{R}}\) in (2.20): Note that \(\vartheta /\left|\vartheta \right|=1\) and, letting \(x=b\) be the bound where the term in parentheses vanishes, when \(0\le x\le 2b\) there holds that \(q\le a^2r\le H(2b)\), while for \(x\ge 2b\) we see that both terms have the same sign and \(H^{\prime \prime }\ge 0\), so that

\(\square \)

2.2 Electric field and potential

Given an instantaneous density distribution \(\mu (r,v,t)\), it is useful to introduce the “physical potential” \(\mathbf{\Psi }\) of the associated electric field as in (1.9), explicitly given as

when \(\gamma (\vartheta ,\alpha ,t)\) is the corresponding density distribution in action-angle coordinates as in (1.15). Then (1.17) can be rewritten as \({\widetilde{\Psi }}(\theta ,a,t)=\mathbf{\Psi }({\widetilde{R}}(\theta ,a),t)\). This allows us to obtain formulas for the derivatives of \({\widetilde{\Psi }}\) in action angle variables in terms of the electric field \(\mathbf{E}\), the local mass \(\mathbf{m}\) and the density \(\varvec{\varrho }\) (compare also (1.9)):

Then for \(\beta \in \{a,\theta \}\) we have

We note that the local mass has a trivial uniform bound

but this can be made more precise.

Lemma 2.6

We can decompose \(\mathbf{m}\) as

where we have that for any \(\ell ,\kappa >0\)

Proof of Lemma 2.6

The decomposition corresponds to localizing in and out of the bulk zone defined in (2.11). Thus

Using Lemma 2.4, we see that

while using (2.12),

\(\square \)

We will use the following consequences:

Proposition 2.7

There holds that

and

Proof of Proposition 2.7

Using (2.12) and (2.13), we find that

and we can use (2.25) with \(\ell =3/2\), \(\kappa =2\). For (2.27), we use (2.13) to get that

From (2.25) with \(\ell =2\), \(\kappa =\frac{5}{2}\) we obtain

Similarly, if \(\frac{a^2}{q}{\widetilde{R}}(\theta ,a)\ge t^\frac{1}{2}\), we can use (2.25) (\(\ell =\frac{1}{2}\), \(\kappa =1\)) to get

On the other hand, it follows from Lemma 2.4 that if \(q\le a^2{\widetilde{R}}(\theta ,a)\le t^\frac{1}{2}\), then \((\theta ,a)\in \mathcal {B}^c\), and we use that

which gives (2.27).\(\quad \square \)

2.2.1 Study of the density

Controlling derivatives of \(\gamma \) requires estimates on the density; these are obtained in a similar way to the mass (see Lemma 2.6), but are more involved.

Lemma 2.8

The density can be decomposed into two terms,

where for \(\kappa ,\sigma \ge 0\),

The key observation is that the estimate for \(\varvec{\varrho }_s\) only involves at most one copy of the large term \(\Vert a\partial _a\gamma \Vert _{L^2_{\theta ,a}}\).

Proof of Lemma 2.8

We can decompose into two regions

where \(\varphi _{\ge 1}(x)\) denotes a smooth function supported on \(\{x\ge 1/10\}\) and equal to 1 for \(x\ge 1/2\).

Study of \(\partial _r\mathbf{m}^1\). This contains the main term. Integrating by parts, we observe that

where

From Lemma 2.4 we recall that in the bulk region \({\widetilde{R}}\sim at\), and thus

while on the other hand, using (2.12) and (2.11),

Direct computations using Lemma 2.4 show that

Separating the contribution of the bulk and outside as in the proof of Lemma 2.6, we find that

while using (2.12) and (2.11) yields

Study of \(\partial _r\mathbf{m}^2\). We now consider

The main observation is that thanks to Lemma 2.4, we have that

The Dirac measure restricts to the set studied in Lemma 2.5 and we decompose accordingly

and using (2.16), we see that

Integrating \(\gamma ^2(\vartheta ,\aleph )\aleph ^{3+2\kappa }=\int _0^{\aleph }\partial _\alpha (\gamma ^2(\vartheta ,\alpha )\alpha ^{3+2\kappa }) d\alpha \) from \(0\le \alpha \le \aleph \) (note that \(0\le \alpha \le a\) and \(a\in \mathcal {B}^c\) implies that \(\alpha \in \mathcal {B}^c\)), we can estimate

Using (2.17) and integrating over \(\vartheta \ge \tau _1\), we see that

Similarly, using (2.18) we obtain

This finishes the proof with \(\varvec{\varrho }_s=\varvec{\varrho }_s^1+\varvec{\varrho }_s^2\) and \(\varvec{\varrho }_n=M^{1,1}+M^{1,2}+M^{2,0}+M^{2,1}+M^{2,2}\). \(\quad \square \)

Remark 2.9

As can be seen from the proof of Lemma 2.8, we only need positive moments in a to control the area outside of the bulk where \(\left|\theta \right|\ge a t\). Such moments in a could be replaced by moments in \(\theta \), and thus positive weights in a are not necessary for our result.

Proposition 2.10

There holds that

and

where

Proof of Proposition 2.10

The most important term is the term with mixed derivative (see Sect. 3.11). We recall from (2.23) that

On the one hand, using Lemma 2.4, we see that

and this leads to an acceptable contribution using Lemmas 2.6 and 2.8. We now turn to

Using Lemma 2.4 and (2.12), we see that

and this term can be handled as before using Lemmas 2.6 and 2.8. Finally, we compute that

Using (2.13) and (2.14), we find that

and that

Using Lemma 2.6, we find that

while using Lemma 2.8, we find that

This finishes the proof. \(\quad \square \)

3 Nonlinear Analysis

In this section we consider the full nonlinear equation (1.16),

We first establish global existence of strong solutions via a bootstrap in Sect. 3.1, then we demonstrate the modified scattering asymptotics in Sect. 3.2. This establishes Proposition 1.5 and Theorem 1.6.

3.1 Bootstraps and global existence

We first propagate global bounds using energy estimates. The key property we will use is that the integral of a Poisson bracket vanishes. Commuting with appropriate operators gives the equations

The key in the bootstrap estimates is that one can easily propagate moments in \(a\) and that the terms with slowest decay involve only these moments (see \(\mathbf{m}_s\) in (2.25) and \(\varvec{\varrho }_s\) in (2.28)). Interestingly, we will see in Sect. 3.1.1 that the moments can be bootstrapped on their own, allowing global bounds on weak solutions. These moment bounds allow us to propagate another bootstrap for higher regularity. For simplicity, we only propagate the first order derivatives in Sect. 3.1.2.

3.1.1 Moment Bootstrap

It turns out that control of the moments can be bootstrapped independently of any derivative bound.

Lemma 3.1

Let \(p\ge 2\) and assume that \(\gamma \) solves (1.16) on an interval \(0\le t\le T\) and assume that

then there holds that

Proof of Lemma 3.1

The moments can be readily estimated. By (3.2) we have that, for \(q\in {\mathbb {R}}\)

Using (2.26), the bootstrap assumptions (3.3) and Gronwall's inequality, we find that

Similarly, for \(q\ge 0\), using (3.2) and (2.27), we find that

and we can again apply Gronwall's estimate. \(\quad \square \)

3.1.2 Control on the derivatives

We now show that we can obtain strong solutions by bootstrapping control of derivatives. It turns out that we will also need some moments of first derivatives. Given a weight function \(\omega (\theta ,a)\), we define

and we compute that

We will need this when

More generally, one can consider \(\omega _{p,q}:=\theta ^pa^q\) for \(p\in {\mathbb {N}}_{0}\) and \(q\in {\mathbb {Z}}\). Then the properties we need are

and that the set of weights \(\mathcal {I}=\{\omega _{p,q}\}_{p,q}\) we consider satisfies the induction property

where we make the notational convention that \(\omega _{p,q}=0\) if \(p<0\). We call such sets of weights compatible.

Proposition 3.2

Let \(\mathcal {I}\) be a compatible finite set of weights. Assume that \(\gamma \) solves (1.16) for \(0\le t\le T\) and satisfies for any weight

Then the following stronger bounds hold for any weights

In particular, the case of \(\omega =1\) gives the result of Proposition 1.5.

Proof of Proposition 3.2

Writing \(\omega =\omega _{p,q}\) for simplicity of notation, using (3.5) we find that

where we have used \(\vert a\partial _a\omega ^{(j)}\vert \lesssim \omega ^{(j)}\) from (3.7). The first two terms on each right hand side lead directly to a Gronwall bootstrap using (2.26) and (2.30). The last is not present when \(p=0\). If \(p\ge 1\), we may use the induction property (3.7) with (2.27) to proceed as follows:

and we see that all terms lead to (3.10). Similarly, we compute that

Here the only new term is the second one on the right hand side. For this term, we use (2.31) to get

and since we have already controlled the \(\theta \) derivative, we may use (3.10) to obtain that

which gives an acceptable contribution with Gronwall’s estimate. \(\quad \square \)

3.2 Asymptotic behavior

The analysis in this section is partially inspired by [21, 31].

3.2.1 Weak-strong limit and asymptotic electric field

Before we obtain strong convergence of the particle distribution, we first need weak convergence of “asymptotic functions” which are defined in terms of averages along linearized trajectories. Given a bounded measurable function \(\tau \), we define

The following Lemma states that these averages converge.

Lemma 3.3

Assume that \(\gamma \) solves (1.16) for \(0\le t\le T\) and satisfies the conclusions of Proposition 3.1 for \(p=2\) and the conclusions of Proposition 3.2. Given any bounded function \(\tau (a)\), the limit

exists and satisfies

Proof of Lemma 3.3

Using (1.16), we see that

Proposition 2.7, Proposition 2.10 and (3.9) then show that \(\langle \tau \rangle (t)\) is Cauchy as \(t\rightarrow \infty \). Integrating the time derivative then gives the bound (3.11). \(\quad \square \)
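
The last step can be sketched as follows, under the assumption (made only for this illustration) that the time derivative obeys a bound of the form \(\vert \frac{d}{dt}\langle \tau \rangle (t)\vert \le C\,t^{-1-\delta }\) for \(t\ge 1\), with hypothetical constants \(C>0\) and \(\delta >0\). Then for \(1\le t_1\le t_2\),

\[
\left| \langle \tau \rangle (t_2)-\langle \tau \rangle (t_1)\right| \le C\int _{t_1}^{t_2}s^{-1-\delta }\,ds\le \frac{C}{\delta }\,t_1^{-\delta },
\]

so that \(\langle \tau \rangle (t)\) is Cauchy, and letting \(t_2\rightarrow \infty \) gives a rate of convergence \(t_1^{-\delta }\) to the limit. The choice \(\delta =\frac{1}{4}\) would be consistent with the rate \(t^{-\frac{1}{4}}\) quoted at the end of the proof of Lemma 3.4.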

The convergence of the scattering data allows us to define the asymptotic electric potential and electric field

Informally, we expect that

Under our assumptions we can prove the following:

Lemma 3.4

Under the assumptions of Lemma 3.3, there holds that

where \(\mathcal {B}_*\) is a smaller version of the bulk

Proof of Lemma 3.4

The first estimate follows from the uniform bound

We now turn to the second estimate. Recall from the proof of Proposition 2.7 that

where we have used Lemma 2.4. Furthermore, with \(\mathcal {E}(a,t):=a^{-2}\iint {\mathfrak {1}}_{\alpha \le a}\gamma ^2(\vartheta ,\alpha ,t)\), we have

where

Note that, by (2.9), there holds

so that on the support of \(\mathcal {B}_*\)

for some universal constant \(C>0\). Therefore we have

so that, using (3.9),

Finally, using (3.12) with Lemma 2.6 and Lemma 2.8,

Since by (3.11) we have that \(\left|\mathcal {E}(a,t)-\mathcal {E}_\infty (a)\right|\lesssim \varepsilon _1^4 t^{-\frac{1}{4}}\), this concludes the proof. \(\quad \square \)

3.2.2 Strong limit

We can now correct the trajectories to get a strong limit and prove our main theorem.

Proof of Theorem 1.6

Under these conditions, we may apply Proposition 3.1 and Proposition 3.2 to propagate global bounds on the moments and derivatives with the weights in (3.6). Lemma 3.3 justifies the existence of \(\mathcal {E}_\infty \) and we have the estimates in Lemma 3.4. Let

We claim that \(\sigma \) converges to a limit \(\sigma _\infty \) in \(L^2_{\theta ,a}\). Indeed we compute that

We directly obtain that

while using Lemma 3.4, we find that

This establishes (1.20). In addition, the bounds from Proposition 3.2 give uniform bounds on \(\partial _\theta \gamma \) in \(L^2_{\theta ,a}\), which carries over to \(\gamma _\infty \). Finally (1.21) follows from \(L^2_{\theta ,a}\) convergence.

Finally, the uniqueness of solutions follows from a Gronwall estimate on the \(L^2_{\theta ,a}\)-norm of the difference of two solutions \(\gamma _j\), \(j=1,2\). Starting with (1.16), one computes that

and since the second term is a perfect Poisson bracket, we deduce that

where \({\widetilde{\Psi }}_j\) is the potential field corresponding to \(\gamma _j\), \(j=1,2\). Now using (2.23), the bounds for \({\widetilde{R}}\), \(\partial _\theta {\widetilde{R}}\), \(\partial _a{\widetilde{R}}\) in (2.12)–(2.13), and the fact that \(\mathbf{m}\) is quadratic in \(\gamma \), it follows that

and we can conclude the Gronwall argument. \(\quad \square \)
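
The cancellation of a perfect Poisson bracket used in the uniqueness argument can be illustrated by the following generic identity. Here \(g\) and \(H\) are placeholder functions of \((\theta ,a)\), the sign convention \(\{f,h\}:=\partial _\theta f\,\partial _ah-\partial _af\,\partial _\theta h\) is an assumption made for this sketch, and we assume enough regularity and decay that all boundary terms vanish:

\[
\iint g\,\{g,H\}\,d\theta \,da=\frac{1}{2}\iint \{g^2,H\}\,d\theta \,da=\frac{1}{2}\iint \Big [\partial _\theta \big (g^2\,\partial _aH\big )-\partial _a\big (g^2\,\partial _\theta H\big )\Big ]\,d\theta \,da=0.
\]

Applied with \(g\) the difference of two solutions, an identity of this type shows that the transport of the difference by a common Hamiltonian does not contribute to the evolution of its \(L^2_{\theta ,a}\)-norm.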

Notes

Here the initial continuous density \(f_0=\mu _0^2\) is assumed to be non-negative, a condition which is then propagated by the flow and allows us to work with functions \(\mu \) in an \(L^2\) framework rather than a general non-negative function f in \(L^1\) – see also our previous work [21] for more on this.

In these formulas, \(\epsilon _0\) is the vacuum permittivity, \(m_g\) the inertia of a gas particle and \(m_c\) the inertia of the point charge.

Here we slightly abuse notation and write \(\mu (r,\nu ,t)\) to denote the density of \(\mu \) in the same “radial variables” as the initial data, see Sect. 1.2.

Here we consider densities with respect to the measure \(\sigma _{rad}:=\delta (x\wedge p)dxdp\). This is a strong notion of radial solutions. A weaker notion would be to consider functions which are jointly invariant under rotations, i.e. a density of the form \(\mu =\mu (\vert x\vert ,\vert p\vert ,\ell )\), where \(\ell =x\times p\) is the microscopic angular momentum. We refer e.g. to [31] for the study of such solutions.

Action-angle variables traditionally refer to the case when the trajectories are bounded.

References

Arroyo-Rabasa, A., Winter, R.: Debye screening for the stationary Vlasov–Poisson equation in interaction with a point charge. Commun. Partial Diff. Equ. (2021). https://doi.org/10.1080/03605302.2021.1892754

Bardos, C., Degond, P.: Global existence for the Vlasov–Poisson equation in \(3\) space variables with small initial data. Annales de l’Institut Henri Poincaré. Analyse Non Linéaire 2(2), 101–118 (1985)

Bardos, C., Mauser, N.J.: Kinetic equations: a French history. European Mathematical Society. Newsletter, 109, 10–18 (2018). Translation of the French original

Bedrossian, J., Masmoudi, N., Mouhot, C.: Landau damping in finite regularity for unconfined systems with screened interactions. Commun. Pure Appl. Math. 71(3), 537–576 (2018)

Caprino, S., Marchioro, C.: On the plasma-charge model. Kinetic Related Models 3(2), 241–254 (2010)

Caprino, S., Marchioro, C., Miot, E., Pulvirenti, M.: On the attractive plasma-charge system in 2-d. Commun. Partial Differ. Equ. 37(7), 1237–1272 (2012)

Chen, J., Zhang, X., Wei, J.: Global weak solutions for the Vlasov–Poisson system with a point charge. Math. Methods Appl. Sci. 38(17), 3776–3791 (2015)

Choi, S.-H., Kwon, S.: Modified scattering for the Vlasov–Poisson system. Nonlinearity 29(9), 2755–2774 (2016)

Crippa, G., Ligabue, S., Saffirio, C.: Lagrangian solutions to the Vlasov–Poisson system with a point charge. Kinetic Related Models 11(6), 1277–1299 (2018)

Desvillettes, L., Miot, E., Saffirio, C.: Polynomial propagation of moments and global existence for a Vlasov-Poisson system with a point charge. Annales de l’Institut Henri Poincaré. Analyse Non Linéaire, 32(2), 373–400 (2015)

Faou, E., Horsin, R., Rousset, F.: On linear Landau damping around inhomogeneous stationary states of the Vlasov-HMF model. arXiv preprint, arXiv:2105.02484 (2021)

Faou, E., Rousset, F.: Landau damping in Sobolev spaces for the Vlasov-HMF model. Arch. Ration. Mech. Anal. 219, 887–902 (2016)

Flynn, P., Ouyang, Z., Pausader, B., Widmayer, K.: Scattering map for the Vlasov–Poisson system. arXiv preprint, arXiv:2101.01390 (2021)

Glassey, R.T.: The Cauchy Problem in Kinetic Theory. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (1996)

Griffin-Pickering, M., Iacobelli, M.: Recent developments on the well-posedness theory for Vlasov-type equations. arXiv preprint, arXiv:2004.01094 (2020)

Guo, Y., Lin, Z.: The existence of stable BGK waves. Commun. Math. Phys. 352(3), 1121–1152 (2017)

Guo, Y., Strauss, W.A.: Nonlinear instability of double-humped equilibria. Annales de l’Institut Henri Poincaré. Analyse Non Linéaire, 12(3), 339–352 (1995)

Han-Kwan, D., Nguyen, T.T., Rousset, F.: Asymptotic stability of equilibria for screened Vlasov–Poisson systems via pointwise dispersive estimates. arXiv preprint, arXiv:1906.05723 (2019)

Horsin, R.: Comportement en temps long d’équations de type Vlasov : études mathématiques et numériques. Ph.D. thesis, 2017. Thèse de doctorat dirigée par E. Faou, et F. Rousset, Mathématiques et Applications, Rennes 1 (2017)

Ionescu, A., Jia, H.: Axi-symmetrization near point vortex solutions for the 2D Euler equation. Commun. Pure Appl. Math. (2019). https://doi.org/10.1002/cpa.21974

Ionescu, A.D., Pausader, B., Wang, X., Widmayer, K.: On the asymptotic behavior of solutions to the Vlasov-Poisson system. International Mathematics Research Notices, to appear, arXiv preprint, arXiv:2005.03617 (2020)

Lemou, M., Méhats, F., Raphael, P.: The orbital stability of the ground states and the singularity formation for the gravitational Vlasov Poisson system. Arch. Ration. Mech. Anal. 189(3), 425–468 (2008)

Li, D., Zhang, X.: On the 3-D Vlasov–Poisson system with point charges: global solutions with unbounded supports and propagation of velocity-spatial moments. J. Differ. Equ. 263(10), 6231–6283 (2017)

Li, D., Zhang, X.: Asymptotic growth bounds for the 3-D Vlasov–Poisson system with point charges. Math. Methods Appl. Sci. 41(9), 3294–3306 (2018)

Lions, P.-L., Perthame, B.: Propagation of moments and regularity for the \(3\)-dimensional Vlasov–Poisson system. Inventiones Mathematicae 105(2), 415–430 (1991)

Majda, A.J., Majda, G., Zheng, Y.X.: Concentrations in the one-dimensional Vlasov-Poisson equations. I. Temporal development and non-unique weak solutions in the single component case. Physica D Nonlinear Phenomena 74(3–4), 268–300 (1994)

Marchioro, C., Miot, E., Pulvirenti, M.: The Cauchy problem for the 3-D Vlasov–Poisson system with point charges. Arch. Ration. Mech. Anal. 201(1), 1–26 (2011)

Miot, E.: A uniqueness criterion for unbounded solutions to the Vlasov–Poisson system. Commun. Math. Phys. 346(2), 469–482 (2016)

Mouhot, C.: Stabilité orbitale pour le système de Vlasov-Poisson gravitationnel (d’après Lemou-Méhats-Raphaël, Guo, Lin, Rein et al.). Number 352, pages Exp. No. 1044, vii, 35–82. 2013. Séminaire Bourbaki. Vol. 2011/2012. Exposés 1043–1058

Mouhot, C., Villani, C.: On Landau damping. Acta Math. 207(1), 29–201 (2011)

Pankavich, S.: Exact large time behavior of spherically-symmetric plasmas. arXiv preprint, arXiv:2006.11447 (2020)

Penrose, O.: Electrostatic instabilities of a uniform non-Maxwellian plasma. Phys. Fluids 3(2), 258–265 (1960)

Pfaffelmoser, K.: Global classical solutions of the Vlasov–Poisson system in three dimensions for general initial data. J. Differ. Equ. 95(2), 281–303 (1992)

Rein, G.: Collisionless kinetic equations from astrophysics—the Vlasov–Poisson system. In: Handbook of Differential Equations: Evolutionary Equations. Vol. III, Handb. Differ. Equ., pp. 383–476. Elsevier/North-Holland, Amsterdam (2007)

Schaeffer, J.: Global existence of smooth solutions to the Vlasov–Poisson system in three dimensions. Commun. Partial Differ. Equ. 16(8–9), 1313–1335 (1991)

Zheng, Y.X., Majda, A.: Existence of global weak solutions to one-component Vlasov–Poisson and Fokker–Planck–Poisson systems in one space dimension with measures as initial data. Commun. Pure Appl. Math. 47(10), 1365–1401 (1994)

Acknowledgements

The authors would like to thank Y. Guo and P. Flynn for interesting and stimulating discussions. They would also like to thank the diligent referees for their careful reading and valuable comments and suggestions. B. P. was supported in part by NSF Grant DMS-1700282.

Funding

Open Access funding provided by EPFL Lausanne.

Additional information

Communicated by A. Ionescu.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pausader, B., Widmayer, K. Stability of a Point Charge for the Vlasov–Poisson System: The Radial Case. Commun. Math. Phys. 385, 1741–1769 (2021). https://doi.org/10.1007/s00220-021-04117-8

DOI: https://doi.org/10.1007/s00220-021-04117-8