Abstract

In this article we present a quantitative central limit theorem for the stochastic fractional heat equation driven by a general Gaussian multiplicative noise, including the cases of space–time white noise and of white-colored noise with spatial covariance given by the Riesz kernel or by a bounded integrable function. We show that the spatial average over a ball of radius R, after suitable renormalization, converges as R tends to infinity towards a Gaussian limit in the total variation distance. We also provide a functional central limit theorem. Our results thereby extend recently proved results for the stochastic heat equation to the case of the fractional Laplacian and to the case of general noise.


1 Introduction

In this article we consider the stochastic fractional heat equation

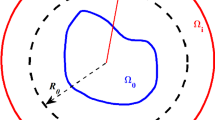

with initial condition \(u(0, x)\equiv 1\). Here \(\sigma \) is assumed to be a Lipschitz continuous function with the property \(\sigma (1)\ne 0\) and \(- (-\Delta )^{\frac{\alpha }{2}}\) is the fractional Laplace operator.

The fractional Laplace operator can be viewed as a generalization of spatial derivatives and classical Sobolev spaces to fractional-order derivatives and fractional Sobolev spaces. Together with the associated equations, it has numerous applications in different fields, including fluid dynamics, quantum mechanics, and finance, to name just a few. For detailed discussions and different equivalent definitions, see [10] and the references therein.
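For orientation, we recall the Fourier-multiplier form of one of these equivalent definitions. We write it with the normalization \({\mathcal {F}}f(\xi ) = \int _{{\mathbb {R}}^d} e^{-i\xi \cdot x}f(x)dx\), which is an assumption on our part. This form is what makes the Fourier description of the Green kernel in Sect. 2 natural:

$$\begin{aligned} {\mathcal {F}}\big [(-\Delta )^{\frac{\alpha }{2}}f\big ](\xi ) = \vert \xi \vert ^{\alpha }\,{\mathcal {F}}f(\xi ), \quad f\in {\mathcal {S}}({\mathbb {R}}^d),\ \alpha \in (0,2]. \end{aligned}$$

For \(\alpha =2\) this reduces to \({\mathcal {F}}[-\Delta f](\xi ) = \vert \xi \vert ^2{\mathcal {F}}f(\xi )\), so that \(-(-\Delta )^{\frac{\alpha }{2}}\) is then the classical Laplacian \(\Delta \).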

In the present article we provide a general existence and uniqueness result for equation (1.1) that covers many different choices of the (Gaussian) random perturbation \({\dot{W}}\). We note that in this context, existence and uniqueness of the solution to (1.1) can be deduced from the general results of [9] (although [9] assumes that the spatial covariance of \({\dot{W}}\) has a spectral density). However, for the reader’s convenience we state and prove the existence and uniqueness result in our particular setting. Our main contribution is to provide quantitative limit theorems in a general context. These results cover three important situations: when \({\dot{W}}\) is a standard space–time white noise; when \({\dot{W}}\) is a white-colored noise, i.e. a Gaussian field that behaves as a Wiener process in time and has a non-trivial spatial covariance given by the Riesz kernel of order \(\beta <\min (\alpha ,d)\); and when \({\dot{W}}\) is a white-colored noise with spatial covariance given by an integrable and bounded function \(\gamma \).

Our results continue the line of research initiated in [12, 13], where a similar problem was considered for the stochastic heat equation on \({\mathbb {R}}\) (respectively \({\mathbb {R}}^d\)) driven by a space–time white noise (respectively by a noise with spatial covariance given by the Riesz kernel). In this sense we extend the results presented in [12, 13], since their main theorems can be recovered from ours by simply plugging in \(\alpha =2\). Our methods of proof are similar to those of these two references. However, we stress that in our case the fine properties of the heat kernel are not at our disposal, and hence one has to be more careful in the computations. In particular, our main technical contribution is the bound for the norm of the Malliavin derivative (cf. Proposition 5.2), which differs from the classical Laplacian case. Moreover, we provide a general approach to obtaining such bounds, based on the boundedness properties of the convolution operator with the spatial covariance \(\gamma \) (see Proposition 3.2) together with the semigroup property and some integrability of the Green kernel.

In the related literature, we also mention [8], which studies the stochastic wave equation on \({\mathbb {R}}^d\). There, the driving noise is a Gaussian multiplicative noise that is white in time and colored in space, with the spatial correlation described by the Riesz kernel. Our results thus complement the above-mentioned works on the stochastic heat and wave equations.

The rest of the paper is organised as follows. In Sect. 2 we describe and discuss our main results. In particular, we provide the existence and uniqueness result for the solution, and provide quantitative central limit theorems for the spatial average in the mentioned particular cases. In Sect. 3 we recall some preliminaries, including some basic facts on Stein’s method and Malliavin calculus that are used to prove our results, together with some basic facts on the Green kernel related to the fractional heat equation, and a key inequality proved in Proposition 3.2. Proofs of our main results are provided in Sects. 4 and 5.

2 Main results

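In this section we introduce and discuss our main results concerning equation (1.1). Throughout the article, we assume that \({\dot{W}}\) is a centered Gaussian noise with covariance given (formally) by

$$\begin{aligned} {\mathbb {E}}\big [{\dot{W}}(t,x){\dot{W}}(s,y)\big ] = \delta _0(t-s)\gamma (x-y), \end{aligned}$$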

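where \(\delta _0\) denotes the Dirac delta function and \(\gamma \) is a nonnegative and nonnegative definite symmetric measure. The spectral measure \({\widehat{\gamma }}(d\xi )\) is defined as the Fourier transform of the measure \(\gamma \) in the sense of tempered distributions, \({\widehat{\gamma }} = {\mathcal {F}}\gamma \). By the Bochner-Schwartz theorem, \({\widehat{\gamma }}\) is again a nonnegative tempered measure.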

The existence of the solution to (1.1) is guaranteed if a fractional version (2.4) of Dalang’s condition is satisfied. In particular, this is the case in all examples mentioned in the introduction.

We next introduce the Green kernel (or fundamental solution) associated to the operator \(- (-\Delta )^{\frac{\alpha }{2}}\), where \(\alpha \in (0,2]\). This kernel, denoted in the sequel by \(G_{\alpha }\), is defined via its Fourier transform

$$\begin{aligned} {\mathcal {F}} G_{\alpha }(t,\cdot )(\xi ) = e^{-t\vert \xi \vert ^{\alpha }}, \quad t>0,\ \xi \in {\mathbb {R}}^d \end{aligned}$$(2.2)

(here and in the sequel, \(\vert \cdot \vert \) denotes the Euclidean norm). Explicit formulas for \(G_\alpha (t,x)\) are known only in the special cases \(\alpha = 1\) (the Poisson kernel) and \(\alpha =2\) (the heat kernel). Nevertheless, the kernel \(G_\alpha (t,x)\) has many desirable properties. Those needed for our purposes are recorded in Sect. 3.
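To make (2.2) concrete, the following sketch evaluates \(G_\alpha \) numerically in dimension \(d=1\). It assumes the convention \({\mathcal {F}}f(\xi )=\int e^{-i\xi x}f(x)dx\), under which (2.2) inverts to \(G_\alpha (t,x) = \frac{1}{\pi }\int _0^\infty \cos (\xi x)e^{-t\xi ^\alpha }d\xi \). The function names and the truncation parameters are our own illustrative choices. The sketch compares the result with the heat kernel (\(\alpha =2\)) and the Poisson kernel \(t/(\pi (t^2+x^2))\) (\(\alpha =1\)), and checks the scaling property recorded in Sect. 3 for a non-explicit value \(\alpha =1.5\).

```python
import math


def green_kernel_1d(t, x, alpha, xi_max=60.0, n=60_000):
    """Approximate G_alpha(t, x) for d = 1 from its Fourier transform exp(-t|xi|^alpha).

    Inversion gives G_alpha(t, x) = (1/pi) * int_0^inf cos(xi*x) exp(-t xi^alpha) d xi.
    The integral is truncated to [0, xi_max] (adequate when t * xi_max**alpha is large)
    and evaluated with the composite trapezoidal rule on n subintervals.
    """
    h = xi_max / n
    total = 0.0
    for k in range(n + 1):
        xi = k * h
        value = math.cos(xi * x) * math.exp(-t * xi ** alpha)
        total += 0.5 * value if k in (0, n) else value
    return h * total / math.pi


def heat_kernel(t, x):
    """Explicit kernel for alpha = 2 (equation u_t = u_xx)."""
    return math.exp(-x * x / (4.0 * t)) / math.sqrt(4.0 * math.pi * t)


def poisson_kernel(t, x):
    """Explicit kernel for alpha = 1."""
    return t / (math.pi * (t * t + x * x))


# alpha = 2 and alpha = 1 against the explicit kernels
print(green_kernel_1d(1.0, 0.7, 2.0), heat_kernel(1.0, 0.7))
print(green_kernel_1d(1.0, 0.7, 1.0), poisson_kernel(1.0, 0.7))

# scaling G(t, x) = t^(-1/alpha) G(1, t^(-1/alpha) x) for a non-explicit alpha
alpha, t, x = 1.5, 2.0, 0.9
c = t ** (-1.0 / alpha)
print(green_kernel_1d(t, x, alpha), c * green_kernel_1d(1.0, c * x, alpha))
```

The cosine form uses only the symmetry of \(e^{-t|\xi |^\alpha }\). For small t a larger `xi_max` would be needed.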

Similarly to the classical stochastic heat equation, the solution to the stochastic equation (1.1) can be expressed in terms of \(G_{\alpha }\). That is, the mild solution is a measurable random field \(\left( u(t,x), t\ge 0, x \in {\mathbb {R}}^d \right) \) which satisfies

$$\begin{aligned} u(t,x) = 1 + \int _0^t\int _{{\mathbb {R}}^d} G_{\alpha }(t-s,x-y)\sigma (u(s,y))W(ds,dy), \end{aligned}$$(2.3)

where the stochastic integral is understood in the Walsh sense [21]. The following existence and uniqueness result holds. Since the claim follows as a special case of [9, Theorem 1.2] whenever \({\hat{\gamma }}(d\xi )\) is absolutely continuous, neither the result nor condition (2.4) is surprising.

Theorem 2.1

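Suppose that the Fourier transform \(\widehat{\gamma }= {\mathcal {F}} \gamma \) satisfies the fractional Dalang condition:

$$\begin{aligned} \int _{{\mathbb {R}}^d} \frac{{\widehat{\gamma }}(d\xi )}{\beta + \vert \xi \vert ^{\alpha }} < \infty \end{aligned}$$(2.4)

for some (and hence for all) \(\beta >0\). Then Eq. (1.1) admits a unique mild solution given by (2.3). Moreover, for any \(p\ge 1\) and any \(T>0\) we have

$$\begin{aligned} \sup _{t\in [0,T]}\sup _{x\in {\mathbb {R}}^d} {\mathbb {E}}\vert u(t,x)\vert ^p < \infty . \end{aligned}$$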

Remark 1

We present our results only for the initial condition \(u(0, x)\equiv 1\), which simplifies our presentation and notation. We stress, however, that with a little extra effort our results could be extended to more general initial conditions. In fact, our existence result can be generalised to cover even initial conditions given by measures (satisfying suitable conditions). In that case the mild solution is given by

$$\begin{aligned} u(t,x) = \int _{{\mathbb {R}}^d}G_\alpha (t,x-y)u_0(dy) + \int _0^t\int _{{\mathbb {R}}^d} G_{\alpha }(t-s,x-y)\sigma (u(s,y))W(ds,dy), \end{aligned}$$

where \(u_0(dy)\) denotes the initial measure. Here one needs to impose conditions on \(u_0(dy)\) as well, in addition to (2.4). In particular, the integral \(\int _{{\mathbb {R}}^d}G_\alpha (t,x-y)u_0(dy)\) above should exist. For a detailed exposition of this topic in the case of the stochastic heat equation (\(\alpha =2\)), we refer to [4]. Similarly, in the spirit of [13, Corollary 3.3], our approximation results can be generalised to the case \(u(0,x) = f(x)\) under suitable assumptions on the function f, once a comparison principle is established.

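Throughout the article, for a function f and a (signed) measure \(\mu \) we denote by \(f*\mu \) the convolution defined by

$$\begin{aligned} (f*\mu )(x) = \int _{{\mathbb {R}}^d} f(x-y)\mu (dy), \end{aligned}$$

provided it exists. If \(\mu \) is absolutely continuous, then \(d\mu (x) = \mu (x)dx\) for some function \(\mu \), and we recover the classical convolution of integrable functions

$$\begin{aligned} (f*\mu )(x) = \int _{{\mathbb {R}}^d} f(x-y)\mu (y)dy. \end{aligned}$$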

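If \(\mu \) can be viewed as a function, the well-known Young convolution inequality states that for \(\frac{1}{p} + \frac{1}{q} = \frac{1}{r}+1\) with \(1\le p, q\le r\le \infty \), we have

$$\begin{aligned} \Vert f*\mu \Vert _{L^{r}({\mathbb {R}}^d)} \le \Vert f\Vert _{L^{p}({\mathbb {R}}^d)}\Vert \mu \Vert _{L^{q}({\mathbb {R}}^d)}. \end{aligned}$$(2.6)

In particular, taking \(q=1\) and \(r=p\) gives us, for any \(p\ge 1\),

$$\begin{aligned} \Vert f*\mu \Vert _{L^{p}({\mathbb {R}}^d)} \le \Vert f\Vert _{L^{p}({\mathbb {R}}^d)}\Vert \mu \Vert _{L^{1}({\mathbb {R}}^d)}. \end{aligned}$$(2.7)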
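A quick way to see (2.7) at work is its discrete analogue on the integers with counting measure. There, the same inequality \(\Vert f*\mu \Vert _{\ell ^p}\le \Vert f\Vert _{\ell ^p}\sum _j|\mu _j|\) holds by Minkowski's inequality. The sketch below checks it for a few exponents using arbitrary illustrative data. It is only an illustration of the inequality and plays no role in the proofs. The helper names are hypothetical.

```python
def convolve(f, mu):
    """Full discrete convolution: (f * mu)[k] = sum_j f[k - j] * mu[j]."""
    out = [0.0] * (len(f) + len(mu) - 1)
    for i, fi in enumerate(f):
        for j, mj in enumerate(mu):
            out[i + j] += fi * mj
    return out


def lp_norm(v, p):
    """l^p norm of a finite sequence, 1 <= p < infinity."""
    return sum(abs(x) ** p for x in v) ** (1.0 / p)


def young_gap(f, mu, p):
    """||f||_p * sum_j |mu_j| minus ||f * mu||_p; nonnegative by the discrete (2.7)."""
    return lp_norm(f, p) * sum(abs(m) for m in mu) - lp_norm(convolve(f, mu), p)


f = [1.0, -2.0, 0.5, 3.0, -1.0]
mu = [0.2, 0.5, 0.3]          # a discrete probability measure: total mass 1
for p in (1.0, 1.5, 2.0, 4.0):
    print(p, young_gap(f, mu, p))
```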

More generally, if \(\mu \) is a finite measure, a simple mollification argument shows that (2.7) remains valid with \(\Vert \mu \Vert _{L^{1}({\mathbb {R}}^d)}\) replaced by \(\mu ({\mathbb {R}}^d)\) (see, e.g. [1, Proposition 3.9.9]). Finally, for \(0<\beta < d\) we denote by \(I_{d-\beta }\) the Riesz potential defined by

$$\begin{aligned} I_{d-\beta } f(x) = (f*K_{d-\beta })(x) = \int _{{\mathbb {R}}^d} f(x-y)\vert y\vert ^{-\beta }dy, \end{aligned}$$

where \(K_{d-\beta }(y) = |y|^{-\beta }\). More generally, the Riesz potential \(I_{d-\beta } \mu \) of a measure \(\mu \) is defined through the convolution

$$\begin{aligned} I_{d-\beta }\mu = K_{d-\beta }*\mu . \end{aligned}$$

In order to simplify our notation, for \(\beta =d\) we define \(I_{d-\beta }\) simply as the identity operator.

We also provide approximation results for the spatial average over a Euclidean ball of radius R, denoted by \(B_R\). For these purposes we require more refined information on the covariance \(\gamma \) than the general condition (2.4).

Assumption 2.2

We assume that \(\gamma \) is given by the Riesz potential \(\gamma = I_{d-\beta } \mu \), where \(0<\beta \le d\) and \(\mu \) is a finite symmetric measure. Moreover, one of the following conditions holds:

- (i) \(\beta < \alpha \wedge d\).
- (ii) \(\beta =d=1\) and \(\alpha >1\).
- (iii) \(\beta =d \ge \alpha \) and \(\mu =\gamma \) is absolutely continuous, i.e. \(d\gamma (x)= \gamma (x)dx\), with \(\gamma \in L^r({\mathbb {R}}^d)\) for some \(r>\frac{d}{\alpha }\). In addition, if \(r>2\), we impose Dalang’s condition (2.4).

Remark 2

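Condition \(\beta <\alpha \) in Case (i) implies that Dalang’s condition (2.4) is satisfied. Indeed, we recall that the Fourier transform \({\widehat{\mu }}\) of a finite measure \(\mu \) is a bounded continuous function. Consequently, by recalling the convolution theorem \(\widehat{f *\mu } = {\widehat{f}}{\widehat{\mu }}\) and the fact that the Riesz potential \(I_{d-\beta }\) is a Fourier multiplier, with \({\widehat{K}}_{d-\beta }(\xi ) = c_{d,\beta }|\xi |^{\beta -d}\) for some constant \(c_{d,\beta }>0\), we obtain

$$\begin{aligned} \int _{{\mathbb {R}}^d} \frac{{\widehat{\gamma }}(d\xi )}{1+\vert \xi \vert ^{\alpha }} = c_{d,\beta }\int _{{\mathbb {R}}^d} \frac{\vert \xi \vert ^{\beta -d}{\widehat{\mu }}(\xi )}{1+\vert \xi \vert ^{\alpha }}d\xi \le c_{d,\beta }\Vert {\widehat{\mu }}\Vert _{L^{\infty }({\mathbb {R}}^d)}\int _{{\mathbb {R}}^d} \frac{\vert \xi \vert ^{\beta -d}}{1+\vert \xi \vert ^{\alpha }}d\xi < \infty , \end{aligned}$$

where the last integral is finite because its integrand behaves like \(|\xi |^{\beta -d}\) near the origin (integrable since \(\beta >0\)) and like \(|\xi |^{\beta -d-\alpha }\) at infinity (integrable since \(\beta <\alpha \)). From this we deduce (2.4). Dalang’s condition (2.4) clearly holds in Case (ii), since then \({\widehat{\gamma }}={\widehat{\mu }}\) is bounded and \(\int _{{\mathbb {R}}}(1+|\xi |^{\alpha })^{-1}d\xi <\infty \) for \(\alpha >1\). Finally, in Case (iii) with \(r\le 2\), the Hausdorff-Young inequality gives \({\widehat{\gamma }}\in L^{\frac{r}{r-1}}({\mathbb {R}}^d)\). Condition (2.4) then follows from Hölder’s inequality, since \((1+|\xi |^{\alpha })^{-1}\in L^{r}({\mathbb {R}}^d)\) for \(r>\frac{d}{\alpha }\).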
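The finiteness claim in Case (i) can also be checked numerically. In polar coordinates,

\(\int _{{\mathbb {R}}^d}|\xi |^{\beta -d}(1+|\xi |^{\alpha })^{-1}d\xi = \omega _{d-1}\int _0^\infty r^{\beta -1}(1+r^{\alpha })^{-1}dr\), where \(\omega _{d-1}=2\pi ^{d/2}/\Gamma (d/2)\).

For \(0<\beta <\alpha \), the radial integral equals \(\pi /(\alpha \sin (\pi \beta /\alpha ))\) by the substitution \(v=r^\alpha \) and the classical identity \(\int _0^\infty v^{s-1}(1+v)^{-1}dv=\pi /\sin (\pi s)\). For \(\beta \ge \alpha \) it diverges at infinity. The sketch below compares the two sides using a quadrature of our own choosing: the substitution \(r=e^u\) followed by the trapezoidal rule. The function names are hypothetical.

```python
import math


def radial_integral(beta, alpha, half_width=200.0, n=100_000):
    """Trapezoidal approximation of int_0^inf r**(beta-1) / (1 + r**alpha) dr.

    With r = exp(u) the integrand becomes exp(beta*u) / (1 + exp(alpha*u)),
    which is smooth and decays exponentially in both directions when
    0 < beta < alpha, so truncating to [-half_width, half_width] is harmless.
    """
    h = 2.0 * half_width / n
    total = 0.0
    for k in range(n + 1):
        u = -half_width + k * h
        # Same value as exp(beta*u) / (1 + exp(alpha*u)), without forming exp(alpha*u).
        value = 1.0 / (math.exp(-beta * u) + math.exp((alpha - beta) * u))
        total += 0.5 * value if k in (0, n) else value
    return h * total


def radial_closed_form(beta, alpha):
    """pi / (alpha * sin(pi * beta / alpha)), valid for 0 < beta < alpha."""
    return math.pi / (alpha * math.sin(math.pi * beta / alpha))


def riesz_dalang_integral(d, beta, alpha):
    """omega_{d-1} times the radial integral, omega_{d-1} = 2 pi^(d/2) / Gamma(d/2)."""
    omega = 2.0 * math.pi ** (d / 2) / math.gamma(d / 2)
    return omega * radial_closed_form(beta, alpha)


for beta, alpha in [(0.5, 1.5), (1.0, 2.0), (0.3, 0.8)]:
    print(beta, alpha, radial_integral(beta, alpha), radial_closed_form(beta, alpha))
```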

Remark 3

By carefully examining our proof, one can see that our results remain valid provided that \(\gamma = I_{d-\beta }\mu \) satisfies Dalang’s condition and the statement of Proposition 3.2 holds for a suitable number 2q.

Case (ii) covers the case of the space–time white noise, where \(\gamma \) is given by the Dirac delta \(\gamma (y) = \delta _0(y)\). The case \(\gamma (y) = |y|^{-\beta }\) corresponds to the noise with spatial correlation given by the Riesz kernel, studied in the heat equation case \(\alpha =2\) in [13]. In our terminology, this is included in Case (i) where \(\gamma = I_{d-\beta }\delta _0\).

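Recall that the total variation distance between random variables F and G (or between their probability distributions) is given by

$$\begin{aligned} d_{TV}(F,G) = \sup _{B\in {\mathcal {B}}({\mathbb {R}})}\big \vert P(F\in B) - P(G\in B)\big \vert , \end{aligned}$$

where \({\mathcal {B}}({\mathbb {R}})\) denotes the Borel \(\sigma \)-algebra of \({\mathbb {R}}\).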
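On a finite state space, the supremum defining \(d_{TV}\) is attained at the event \(B=\{p>q\}\) and equals half the \(\ell ^1\) distance between the probability vectors. This is the discrete analogue of \(d_{TV}=\frac{1}{2}\int |f-g|\) for densities f and g. The brute-force sketch below confirms this on a small example with made-up probability vectors. The helper names are hypothetical.

```python
from itertools import combinations


def tv_sup_over_events(p, q):
    """Brute-force sup_B |P(B) - Q(B)| over all subsets B of {0, ..., n-1}."""
    n = len(p)
    best = 0.0
    for k in range(n + 1):
        for event in combinations(range(n), k):
            diff = sum(p[i] for i in event) - sum(q[i] for i in event)
            best = max(best, abs(diff))
    return best


def tv_half_l1(p, q):
    """Closed form on a finite space: (1/2) * sum_i |p_i - q_i|."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


p = [0.1, 0.4, 0.2, 0.3]       # illustrative probability vectors
q = [0.25, 0.25, 0.25, 0.25]
print(tv_sup_over_events(p, q), tv_half_l1(p, q))   # both equal 0.2 up to rounding
```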

Our first main results concern the following two quantitative central limit theorems for the spatial average of the solution.

Theorem 2.3

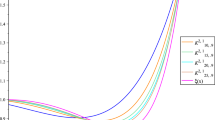

Let \(\gamma \) satisfy Assumption 2.2 and let u be the solution to the stochastic fractional heat equation (1.1). Then for every \(t>0\) there exists a constant C, depending solely on t, \(\alpha \), \(\sigma \), and the covariance \(\gamma \), such that

where \(Z\sim N(0,1)\) is a standard normal random variable, and \(\sigma _R^2 = \mathrm{Var} \big ( \int _ {B_R} [ u(t,x) - 1 ]\,dx \big ) \sim R^{2d-\beta }\), as \(R\rightarrow \infty \).

Remark 4

While we have stated our result only for a ball \(B_R\) centered at the origin, we stress that exactly the same arguments allow one to replace \(B_R\) by another body \(RA_0 = \{Ra : a \in A_0\}\). This affects only the normalization constants. Moreover, as in the heat case (cf. [13, Remark 3]), one can also let the center \(a_R\) of the ball vary with R. This fact follows easily from the spatial stationarity of the solution.

Following the spirit of the mentioned references, we also provide a functional version of Theorem 2.3.

Theorem 2.4

Let \(\gamma \) satisfy Assumption 2.2 and let u be the solution to the stochastic fractional heat equation (1.1). Then

as \(R\rightarrow \infty \), where Y is a standard Brownian motion, the weak convergence takes place in the space of continuous functions C([0, T]), and \(\varrho (s)\) is given as follows:

- If \(\beta < d\), then \(\varrho (s) = \sqrt{\mu \left( {\mathbb {R}}^d\right) \int _{B_1^2}|x-x'|^{-\beta }dxdx'}{\mathbb {E}}[\sigma (u(s,y))]\), where, by stationarity, \({\mathbb {E}}[\sigma (u(s,y))]\) does not depend on y.
- If \(\beta =d\), then \(\varrho (s) = \sqrt{|B_1|\int _{{\mathbb {R}}^d} {\mathbb {E}}\left[ \sigma (u(s,0))\sigma (u(s,z))\right] d\mu (z)}\).

Note that \(\varrho \) depends on \(\alpha \) through the solution u(s, y); see Remark 6.

Remark 5

We prove later (see Lemma 5.5) that

Under our initial condition \(u(0,x) \equiv 1\), we may hence apply the arguments of [8, Lemma 3.4] to see the equivalence

Hence \(\sigma (1)\ne 0\) is a natural condition that guarantees \(\sigma _R>0\) for all \(R>0\). Note also that \(\sigma (1)\ne 0\) is necessary to exclude the trivial solution \(u(t,x)\equiv 1\). Indeed, if \(\sigma (1)=0\), the Picard iteration started from \(u_0\equiv 1\) stays identically equal to 1.

Example 1

Suppose \(\mu = \delta _0\) and let \(\beta = d = 1\) and \(\alpha >1\). This case corresponds to the space–time white noise, and now

In the case \(\alpha =2\), we thus recover the results of [12].
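For instance, substitute \(\mu =\delta _0\) and \(d=1\) into the formula for \(\varrho \) in the case \(\beta =d\) of Theorem 2.4, and use \(|B_1| = |[-1,1]| = 2\). Direct substitution then gives

$$\begin{aligned} \varrho (s) = \sqrt{|B_1|\int _{{\mathbb {R}}} {\mathbb {E}}\left[ \sigma (u(s,0))\sigma (u(s,z))\right] \delta _0(dz)} = \sqrt{2\,{\mathbb {E}}\left[ \sigma ^2(u(s,0))\right] }. \end{aligned}$$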

Example 2

Suppose \(\beta <d\) and let \(\mu = \delta _0\). This case corresponds to the white-colored case with the spatial covariance given by the Riesz kernel. Now

and consequently, for \(\alpha = 2\) we obtain the results of [13].
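In dimension \(d=1\), Assumption 2.2 (i) requires \(0<\beta <1\) and \(\beta <\alpha \). In that case the constant in the formula for \(\varrho \) in Theorem 2.4 (case \(\beta <d\)) can be computed explicitly; the following elementary computation is added for illustration. Writing \(z=x-x'\), one has \(\int _{[-1,1]^2}h(x-x')dxdx' = \int _{-2}^{2}(2-|z|)h(z)dz\), hence

$$\begin{aligned} \int _{B_1^2}|x-x'|^{-\beta }dxdx' = \int _{-2}^{2}(2-|z|)|z|^{-\beta }dz = 2\int _0^2 (2-z)z^{-\beta }dz = \frac{2^{3-\beta }}{(1-\beta )(2-\beta )}. \end{aligned}$$

Since \(\mu ({\mathbb {R}})=1\), this gives \(\varrho (s) = \left( \frac{2^{3-\beta }}{(1-\beta )(2-\beta )}\right) ^{1/2}{\mathbb {E}}[\sigma (u(s,y))]\). As a sanity check, letting \(\beta \rightarrow 0\) in the constant gives \(4=|B_1|^2\).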

Remark 6

We emphasize that the additional parameter \(\alpha \) associated with the fractional operator does not affect the above results, except for the constants that depend on the solution u. Indeed, the renormalization rate and the rate in the total variation distance are, up to multiplicative constants, the same as in the case \(\alpha =2\) corresponding to the classical stochastic heat equation. Similarly, the limiting normal distribution in Theorem 2.3 and the limiting time-changed Brownian motion in Theorem 2.4 are of the same form as in the case \(\alpha =2\). Since \(G_\alpha \) is intimately connected to a stable Lévy process, this might appear surprising, as one might expect stable limiting laws. However, the Gaussian form of the limiting distribution stems from the Gaussian nature of the noise \({\dot{W}}\). The Green kernel \(G_\alpha (t,x)\) (associated to a stable process), by contrast, is simply a deterministic function with suitable scaling in the time variable t and sufficient integrability in the spatial variable x. One could instead expect stable limiting laws, even in the case \(\alpha =2\) where \(G_\alpha \) is the (Gaussian) heat kernel, if the noise \({\dot{W}}\) is driven by a suitable Lévy process.

3 Preliminaries

In this section we present some preliminaries that are required for the proofs of our main theorems. In particular, we recall some facts on Malliavin calculus and Stein’s method together with some basic properties of the fractional Green kernel. Finally, in Proposition 3.2 we present a basic inequality that allows us to derive a bound for the Malliavin derivative.

3.1 Malliavin calculus and Stein’s method

We start by introducing the Gaussian noise that governs the stochastic fractional heat equation (1.1).

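Denote by \(C_{c}^{\infty }\left( [0, \infty ) \times {\mathbb {R}}^d\right) \) the class of \(C^{\infty }\) functions on \( [0, \infty ) \times {\mathbb {R}}^d\) with compact support. We consider a Gaussian family of centered random variables

$$\begin{aligned} \big (W(\varphi ), \varphi \in C_{c}^{\infty }\left( [0, \infty ) \times {\mathbb {R}}^d\right) \big ) \end{aligned}$$

on some complete probability space \(\left( \Omega , {\mathcal {F}}, P\right) \) such that

$$\begin{aligned} {\mathbb {E}}\big [W(\varphi )W(\psi )\big ] = \int _0^{\infty }\int _{{\mathbb {R}}^{2d}}\varphi (s,x)\psi (s,y)\gamma (x-y)\,dx\,dy\,ds. \end{aligned}$$(3.1)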

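We stress again that, in general, \(\gamma \) is not a function, and hence (3.1) should be understood as

$$\begin{aligned} {\mathbb {E}}\big [W(\varphi )W(\psi )\big ] = \int _0^{\infty }\int _{{\mathbb {R}}^{d}}\varphi (s,x)\big [\psi (s,\cdot )*\gamma \big ](x)\,dx\,ds. \end{aligned}$$(3.2)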

We denote by \({\mathfrak {H}}\) the Hilbert space defined as the closure of \(C_{c}^{\infty }\left( [0, \infty ) \times {\mathbb {R}}^d\right) \) with respect to the inner product (3.1). By density, we obtain an isonormal process \((W(\varphi ), \varphi \in {\mathfrak {H}})\), which consists of a Gaussian family of centered random variables such that, for every \(\varphi , \psi \in {\mathfrak {H}}\),

$$\begin{aligned} {\mathbb {E}}\big [W(\varphi )W(\psi )\big ] = \langle \varphi ,\psi \rangle _{{\mathfrak {H}}}. \end{aligned}$$

The Gaussian family \((W(\varphi ), \varphi \in {\mathfrak {H}})\) is usually called a white-colored noise because it behaves as a Wiener process with respect to the time variable \(t\in [0, \infty )\) and it has a spatial covariance given by the measure \(\gamma \).

Let us introduce the filtration associated to the random noise W. For \(t>0\), we denote by \({\mathcal {F}}_{t}\) the sigma-algebra generated by the random variables \(W(\varphi )\), with \(\varphi \in {\mathfrak {H}}\) having its support included in the set \([0, t]\times {\mathbb {R}}^d\). For every random field \((X(s,y), s\ge 0, y\in {\mathbb {R}}^d)\), jointly measurable and adapted with respect to the filtration \(\left( {\mathcal {F}}_{t}\right) _{t\ge 0} \), satisfying

$$\begin{aligned} {\mathbb {E}}\Vert X\Vert _{{\mathfrak {H}}}^2 < \infty , \end{aligned}$$(3.3)

we can define stochastic integrals with respect to W of the form

$$\begin{aligned} \int _0^{\infty }\int _{{\mathbb {R}}^d} X(s,y)W(ds,dy) \end{aligned}$$

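in the sense of Dalang-Walsh (see [6, 21]). This integral satisfies the Itô-type isometry

$$\begin{aligned} {\mathbb {E}}\left[ \left( \int _0^{\infty }\int _{{\mathbb {R}}^d} X(s,y)W(ds,dy)\right) ^2\right] = {\mathbb {E}}\Vert X\Vert _{{\mathfrak {H}}}^2. \end{aligned}$$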

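The Dalang-Walsh integral also satisfies the following version of the Burkholder-Davis-Gundy inequality: for any \(t\ge 0\) and \(p\ge 2\),

$$\begin{aligned} \left\| \int _0^{t}\int _{{\mathbb {R}}^d} X(s,y)W(ds,dy)\right\| _{p}^2 \le c_p \int _0^{t}\int _{{\mathbb {R}}^{2d}} \Vert X(s,y)X(s,z)\Vert _{\frac{p}{2}}\gamma (y-z)\,dy\,dz\,ds, \end{aligned}$$(3.4)

where \(\Vert \cdot \Vert _p\) denotes the norm in \(L^p(\Omega )\), \(c_p\) is a constant depending only on p, and the spatial integral is understood as in (3.2).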

Let us next describe the basic tools from Malliavin calculus needed in this work. We introduce \(C_p^{\infty }({\mathbb {R}}^n)\) as the space of smooth functions with all their partial derivatives having at most polynomial growth at infinity, and \({\mathcal {S}}\) as the space of simple random variables of the form

$$\begin{aligned} F = f(W(h_1),\ldots ,W(h_n)), \end{aligned}$$

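where \(f\in C_p^{\infty }({\mathbb {R}}^n)\) and \(h_i \in {\mathfrak {H}}\), \(1\le i \le n\). Then the Malliavin derivative DF is defined as the \({\mathfrak {H}}\)-valued random variable

$$\begin{aligned} DF = \sum _{i=1}^n \frac{\partial f}{\partial x_i}(W(h_1),\ldots ,W(h_n))h_i. \end{aligned}$$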

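For any \(p\ge 1\), the operator D is closable as an operator from \(L^p(\Omega )\) into \(L^p(\Omega ; {\mathfrak {H}})\). Then \({\mathbb {D}}^{1,p}\) is defined as the completion of \({\mathcal {S}}\) with respect to the norm

$$\begin{aligned} \Vert F\Vert _{1,p} = \left( {\mathbb {E}}\vert F\vert ^p + {\mathbb {E}}\Vert DF\Vert _{{\mathfrak {H}}}^p\right) ^{\frac{1}{p}}. \end{aligned}$$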

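The adjoint operator \(\delta \) of the derivative is defined through the duality formula

$$\begin{aligned} {\mathbb {E}}[F\delta (u)] = {\mathbb {E}}\langle DF, u\rangle _{{\mathfrak {H}}}, \end{aligned}$$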

valid for any \(F \in {\mathbb {D}}^{1,2}\) and any \(u\in \mathrm{Dom} \, \delta \subset L^2(\Omega ; {\mathfrak {H}}) \). The operator \(\delta \) is also called the Skorokhod integral since, in the case of the standard Brownian motion, it coincides with an extension of the Itô integral introduced by Skorokhod (see, e.g. [11, 19]). In our context, any adapted random field X which is jointly measurable and satisfies (3.3) belongs to the domain of \(\delta \), and \(\delta (X)\) coincides with the Walsh integral:

$$\begin{aligned} \delta (X) = \int _0^{\infty }\int _{{\mathbb {R}}^d} X(s,y)W(ds,dy). \end{aligned}$$

This allows us to represent \(u(t,x)-1\), where u is the solution to (1.1), as a Skorokhod integral (cf. (2.3)).

The proofs of our main results are based on the Malliavin-Stein approach, introduced by Nourdin and Peccati in [16] (see also the book [17]). In particular, we apply the following result to obtain a rate of convergence in the total variation distance (see [20] and also [12, 18]).

Proposition 3.1

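If F is a centered random variable in the Sobolev space \({\mathbb {D}}^{1,2}\) with unit variance such that \(F = \delta (v)\) for some \({\mathfrak {H}}\)-valued random variable v belonging to the domain of \(\delta \), then, with \(Z \sim N (0,1)\),

$$\begin{aligned} d_{TV}(F,Z) \le 2\sqrt{\mathrm{Var}\left( \langle DF, v\rangle _{{\mathfrak {H}}}\right) }. \end{aligned}$$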

3.2 On fractional Green kernel

We recall some useful properties of the kernel \(G_{\alpha }\) defined through (2.2). For details, we refer to [2, 5, 7].

- (1) For every \(t>0\), \(G_{\alpha }(t, \cdot ) \) is the density of a d-dimensional Lévy stable process at time t. In particular, we have

$$\begin{aligned} \int _{{\mathbb {R}}^d} G_{\alpha }(t,x)dx=1. \end{aligned}$$(3.8)

- (2) For every \(t>0\), the kernel \(G_{\alpha }(t,x)\) is real valued, positive, and symmetric in x.
- (3) The kernel \(G_{\alpha } \) satisfies the semigroup property, i.e.

$$\begin{aligned} G_{\alpha } (t+s, x)= \int _{{\mathbb {R}}^{d}} G_{\alpha } (t, z ) G_{\alpha } (s, x-z) dz \end{aligned}$$(3.9)

for all \(s,t>0\) and \(x\in {\mathbb {R}}^d\).

- (4) \(G_{\alpha }\) is infinitely differentiable with respect to x, with all the derivatives bounded and converging to zero as \(\vert x\vert \rightarrow \infty \). Moreover, we have the scaling property

$$\begin{aligned} G_{\alpha }(t,x)= t^ {-\frac{d}{\alpha } } G_{\alpha }(1, t ^ {-\frac{1}{\alpha }}x). \end{aligned}$$(3.10)

- (5) For \(\alpha \in (0,2)\), there exist two constants \(0<K_{\alpha }' < K_{\alpha } \) such that

$$\begin{aligned} K' _{\alpha } \frac{1}{\left( 1+ \vert x\vert \right) ^ {d+\alpha }}\le \left| G_{\alpha } (1,x)\right| \le K_{\alpha } \frac{1}{ \left( 1+ \vert x\vert \right) ^ {d+\alpha }} \end{aligned}$$(3.11)

for all \(x\in {\mathbb {R}}^d\). Together with the scaling property, this further translates into

$$\begin{aligned} K' _{\alpha } \frac{t^{-\frac{d}{\alpha }}}{\left( 1+ \vert t^{-\frac{1}{\alpha }}x\vert \right) ^ {d+\alpha }}\le \left| G_{\alpha } (t,x)\right| \le K_{\alpha } \frac{t^{-\frac{d}{\alpha }}}{ \left( 1+ \vert t^{-\frac{1}{\alpha }}x\vert \right) ^ {d+\alpha }}. \end{aligned}$$(3.12)

For \(\alpha =2\), \(G_2(1,\cdot )\) is a Gaussian density, so that only the upper bounds in (3.11) and (3.12) remain valid.
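As a concrete sanity check of (3.10), (3.11) and (3.12), consider \(d=1\) and \(\alpha =1\). There \(G_1\) is the explicit Poisson (Cauchy) kernel \(G_1(t,x)=t/(\pi (t^2+x^2))\), whose Fourier transform is \(e^{-t|\xi |}\). The identity \((1+x)^2/(1+x^2) = 1+2x/(1+x^2)\in [1,2]\) for \(x\ge 0\) shows that one may take \(K_1'=1/\pi \) and \(K_1=2/\pi \). The sketch below, our own illustration with hypothetical names, confirms this on a grid and checks the scaling property.

```python
import math


def poisson_kernel(t, x):
    """G_1(t, x) for d = 1: the Poisson (Cauchy) kernel t / (pi * (t^2 + x^2))."""
    return t / (math.pi * (t * t + x * x))


# Ratio G_1(1, x) * (1 + |x|)^(d + alpha) with d = alpha = 1; by symmetry x >= 0 suffices.
grid = [k * 0.001 for k in range(200_001)]           # x in [0, 200]
ratios = [poisson_kernel(1.0, x) * (1.0 + x) ** 2 for x in grid]
K_lower, K_upper = min(ratios), max(ratios)
print(K_lower, 1 / math.pi)    # lower constant, attained at x = 0
print(K_upper, 2 / math.pi)    # upper constant, attained at x = 1

# Scaling (3.10) in d = alpha = 1: G_1(t, x) = t^(-1) * G_1(1, x / t).
t, x = 0.5, 0.3
print(poisson_kernel(t, x), poisson_kernel(1.0, x / t) / t)
```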

3.3 A basic inequality

The following proposition contains an inequality that plays a fundamental role in the proof of the estimates of the p-norm of the Malliavin derivative.

Proposition 3.2

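Suppose that the covariance \(\gamma \) satisfies Assumption 2.2. Then there exists a number \(2q \in \left( 1,\frac{2d}{2d-\alpha } \wedge \frac{d+\alpha }{d}\right) \) such that for any functions \(f,g \in L^{2q}({\mathbb {R}}^d)\) we have

$$\begin{aligned} \int _{{\mathbb {R}}^d} f(x)\left[ g*\gamma \right] (x)dx \le C\Vert f\Vert _{L^{2q}({\mathbb {R}}^d)}\Vert g\Vert _{L^{2q}({\mathbb {R}}^d)} \end{aligned}$$(3.13)

for a constant C that does not depend on f and g.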

Remark 7

The requirement \(2q < \frac{2d}{2d-\alpha }\) ensures that

$$\begin{aligned} \kappa := \frac{2d}{\alpha }\left( 1-\frac{1}{2q}\right) < 1, \end{aligned}$$(3.14)

while the requirement \(2q < \frac{d+\alpha }{d}\) ensures that \(G_\alpha ^{\frac{1}{2q}}(1,x)\) is integrable. Note also that \(\frac{d+\alpha }{d} \le \frac{2d}{2d-\alpha }\) if and only if \(d\le \alpha \). Since \(\alpha \le 2\), this can happen only in the one-dimensional case \(d=1\) (with \(\alpha \ge 1\)) or in the heat case \(\alpha =2\) with \(d=1,2\). In the latter case, however, \(G_\alpha ^{\frac{1}{2q}}(1,x)\) is integrable regardless of the value of 2q. Consequently, our results apply in that case as well under the condition \(2q \in \left( 1,\frac{2d}{2d-2}\right) \).
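The equivalence behind (3.14), and the reason why \(\kappa <1\) is the relevant condition, can be seen from the following elementary computation. It is a sketch of ours using only the scaling property (3.10), stated for \(2d>\alpha \):

$$\begin{aligned} 2q<\frac{2d}{2d-\alpha }&\iff \frac{1}{2q}>1-\frac{\alpha }{2d} \iff \frac{2d}{\alpha }\left( 1-\frac{1}{2q}\right) <1,\\ \Vert G_{\alpha }(t,\cdot )\Vert _{L^{2q}({\mathbb {R}}^d)}^2&= \left( t^{-\frac{2qd}{\alpha }+\frac{d}{\alpha }}\Vert G_{\alpha }(1,\cdot )\Vert _{L^{2q}({\mathbb {R}}^d)}^{2q}\right) ^{\frac{1}{q}} = t^{-\kappa }\Vert G_{\alpha }(1,\cdot )\Vert _{L^{2q}({\mathbb {R}}^d)}^2. \end{aligned}$$

Thus \(t\mapsto \Vert G_{\alpha }(t,\cdot )\Vert _{L^{2q}({\mathbb {R}}^d)}^2\) is integrable near \(t=0\) precisely when \(\kappa <1\).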

Proof of Proposition 3.2

We decompose the proof into the three possible cases from Assumption 2.2:

- Case (i): Taking \(2q=\frac{2d}{2d-{\beta }}\) (recall \(\beta < \alpha \wedge d\)) and using Hölder’s inequality, we obtain

$$\begin{aligned} \int _{{\mathbb {R}}^{d}} f(x) [ g*\gamma ] (x) dx \le \Vert f\Vert _{L^{2q}({\mathbb {R}}^d)} \Vert g*\gamma \Vert _{L^{2q/(2q-1)}({\mathbb {R}}^d)}. \end{aligned}$$

Notice that \(g*\gamma =( I_{d-\beta } g) * \mu \). Therefore, it follows from (2.7) that

$$\begin{aligned} \Vert g*\gamma \Vert _{L^{2q/(2q-1)}({\mathbb {R}}^d)} \le \mu ({\mathbb {R}}^d) \Vert I_{d-\beta } g \Vert _{L^{2q/(2q-1)}({\mathbb {R}}^d)}. \end{aligned}$$

We then conclude the proof by applying the following Hardy-Littlewood-Sobolev inequality (see e.g. [15] and references therein) with \(p=2q=\frac{2d}{2d-\beta }\) and \(r=\frac{2q}{2q-1}=\frac{2d}{\beta }\): for \(1<p<r<\infty \) satisfying \(\frac{1}{r} = \frac{1}{p} - \frac{d-\beta }{d}\), we have

$$\begin{aligned} \Vert I_{d-\beta } g\Vert _{L^{r}({\mathbb {R}}^d)} \le C\Vert g\Vert _{L^{p}({\mathbb {R}}^d)}. \end{aligned}$$(3.15)

This choice of 2q is admissible. Indeed, \(2q<\frac{2d}{2d-\alpha }\) because \(\beta <\alpha \), and \(2q<\frac{d+\alpha }{d}\) because this is equivalent to \(d(\alpha -\beta )+\alpha (d-\beta )>0\).

- Case (ii): Suppose \(\beta =d\). By Young’s inequality (2.7) and Hölder’s inequality, we get

$$\begin{aligned} \int _{{\mathbb {R}}^d} f({y}) \left[ g *\gamma \right] (y)dy \le \Vert f\Vert _{L^{2}({\mathbb {R}}^d)}\Vert g *\gamma \Vert _{L^{2}({\mathbb {R}}^d)} \le C \Vert f\Vert _{L^{2}({\mathbb {R}}^d)}\Vert g \Vert _{L^{2}({\mathbb {R}}^d)}. \end{aligned}$$

Consequently, one can always choose \(q=1\) in (3.13). However, then \(2q < \frac{2d}{2d-\alpha }\wedge \frac{d+\alpha }{d}\) if and only if \(\alpha > d\). Taking into account the fact \(\alpha \in (0,2]\), this forces \(d=1\) and \(\alpha >1\). In conclusion, in the one-dimensional case and for \(\alpha >1\) we obtain the estimate (3.13) with \(q=1\), which completes the proof of Case (ii).

- Case (iii): Let \(\beta =d\ge \alpha \) and suppose that \(\gamma \) is absolutely continuous with a density \(\gamma \in L^r({\mathbb {R}}^d)\), where \(r>\frac{d}{\alpha }\). In this case we choose \(2q=\frac{2r}{2r-1}\). Clearly \(2q>1\). Moreover, the condition \(r>\frac{d}{\alpha }\) implies \(2q < \frac{2d}{2d-\alpha }\), and \(\frac{2d}{2d-\alpha } \le \frac{d+\alpha }{d}\) because \(d \ge \alpha \). Finally, since \(\frac{2q}{2q-1}=2r\) and \(\frac{1}{2q}+\frac{1}{r}=1+\frac{1}{2r}\), Hölder’s inequality and Young’s inequality (2.6) give us

$$\begin{aligned} \int _{{\mathbb {R}}^d}{\int _{{\mathbb {R}}^d}} f(x) g(y) \gamma (x-y) dx dy \le \Vert f\Vert _{L^{2q}({\mathbb {R}}^d)} \Vert g\Vert _{L^{2q}({\mathbb {R}}^d)}\Vert \gamma \Vert _{L^{r}({\mathbb {R}}^d)}. \end{aligned}$$

\(\square \)
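The exponent bookkeeping in the three cases above can be double-checked with exact rational arithmetic. The following sketch is a verification aid, not part of the proof, and its function names are hypothetical. It computes the choice of 2q made in each case, checks that this choice lies in \(\left( 1,\frac{2d}{2d-\alpha }\wedge \frac{d+\alpha }{d}\right) \), and evaluates \(\kappa \) from (3.14).

```python
from fractions import Fraction as Fr


def upper_bound_2q(d, alpha):
    """Right end of the admissible interval (1, 2d/(2d - alpha) ∧ (d + alpha)/d)."""
    return min(Fr(2 * d) / (2 * d - alpha), Fr(d + alpha) / d)


def kappa(d, alpha, two_q):
    """kappa = (2d/alpha) * (1 - 1/(2q)), cf. (3.14)."""
    return Fr(2 * d) / alpha * (1 - 1 / two_q)


def exponent_2q(case, d, alpha, beta=None, r=None):
    """The choice of 2q made in the proof of Proposition 3.2."""
    if case == "i":      # beta < alpha ∧ d
        return Fr(2 * d) / (2 * d - beta)
    if case == "ii":     # beta = d = 1, alpha > 1
        return Fr(2)
    if case == "iii":    # beta = d >= alpha, gamma in L^r with r > d/alpha
        return Fr(2 * r) / (2 * r - 1)
    raise ValueError(f"unknown case {case!r}")


def check(case, d, alpha, **kw):
    """Return (2q, kappa); raise ValueError if 2q or kappa is not admissible."""
    alpha = Fr(alpha)
    two_q = exponent_2q(case, d, alpha, **kw)
    if not 1 < two_q < upper_bound_2q(d, alpha):
        raise ValueError(f"2q = {two_q} not admissible in case {case}")
    k = kappa(d, alpha, two_q)
    if not k < 1:
        raise ValueError(f"kappa = {k} >= 1 in case {case}")
    return two_q, k


print(check("i", d=3, alpha=Fr(3, 2), beta=1))   # (Fraction(6, 5), Fraction(2, 3))
print(check("ii", d=1, alpha=Fr(3, 2)))          # (Fraction(2, 1), Fraction(2, 3))
print(check("iii", d=2, alpha=1, r=3))           # (Fraction(6, 5), Fraction(2, 3))
```

In these cases \(\kappa \) simplifies to \(\beta /\alpha \) in Case (i), to \(1/\alpha \) in Case (ii), and to \(d/(\alpha r)\) in Case (iii). The example parameters were chosen so that all three equal 2/3. Calling `check("ii", d=1, alpha=1)` raises, in line with the requirement \(\alpha >1\) in Case (ii).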

4 Proof of Theorem 2.1

For \(t\ge 0\), we denote

Taking the Fourier transform and using (2.2), we see that I(t) can equally be given by

Suppose now that \(\gamma \) satisfies (2.4). For \(\beta >0\), we define a function \(\Upsilon (\beta )\) by

Clearly, \(\Upsilon \) is non-negative, decreasing in \(\beta \), and \(\lim _{\beta \rightarrow \infty } \Upsilon (\beta )=0\).

Before proving Theorem 2.1 we introduce the following technical lemma that can be viewed as a fractional version of [4, Lemma 2.5].

Lemma 4.1

Let I(t) be given by (4.1) and, for given \(\iota >0\), let \(h_n\) be defined recursively by \(h_0(t) = 1\), and for \(n\ge 1\)

Then for any \(p\ge 1\) and any fixed \(T<\infty \), the series

converges uniformly in \(t\in [0,T]\).

Proof

By the same argument as in the proof of [4, Lemma 2.5], we get, for any \(\beta >0\), that

By choosing \(\beta \) large enough, we have \(\iota \Upsilon (\beta )\le 1/2\) and, as in [4], choosing the smallest such \(\beta \) gives \(H(\iota ,1,t) \le \exp (Ct)\) for some constant C depending on \(\Upsilon \) and \(\iota \). Similarly, for the general case \(p>1\) we may apply Hölder’s inequality to get

where \(\frac{1}{p} + \frac{1}{q}=1\). Hence, similar arguments show that \(H(\iota ,p,t)\le \exp (Ct)\) and, in particular, that the series in (4.3) converges. \(\square \)

Equipped with Lemma 4.1, we are now able to prove Theorem 2.1.

Proof of Theorem 2.1

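Define the standard Picard iterations by setting \(u_{0}(t,x)=1\) and, for \(n\ge 1\),

$$\begin{aligned} u_{n}(t,x) = 1 + \int _0^t\int _{{\mathbb {R}}^d} G_{\alpha }(t-s,x-y)\sigma (u_{n-1}(s,y))W(ds,dy). \end{aligned}$$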

By induction, we can easily show that for every \(n\ge 0\), \(u_{n}(t, x)\) is well-defined and, for every \(p\ge 2\) and \(\beta >0\), we have

This in turn shows that

To see (4.4), we first observe that it is clearly true for \(n=0\). Suppose now that it holds for some n. We have

and, for every \(p\ge 2\), by using (3.3) and (3.4),

By using the Lipschitz assumption on \(\sigma \) and the induction hypothesis we get

Hence

and we obtain

Applying similar arguments together with Hölder’s inequality for

gives us

By standard arguments, it suffices to consider the case of an equality. In this case, it follows from Lemma 4.1 that \(\sum _{n\ge 1} H_{n} (t)^ {\frac{1}{p}}\) converges uniformly on [0, T]. Consequently, the sequence \(u_{n}\) converges in \(L^ {p}(\Omega ) \), uniformly on \([0, T] \times {\mathbb {R}}^{d} \), and its limit satisfies (2.3). The uniqueness follows in a similar way, and the stationarity of the solution with respect to the space variable is a consequence of the proof of Lemma 18 in [6].

\(\square \)

5 Proofs of Theorems 2.3 and 2.4

In this section we prove Theorems 2.3 and 2.4. The key ingredient for the proofs is to bound the Malliavin derivative of the solution to (1.1) by a quantity involving the Green kernel associated to the fractional operator (2.2). Once a suitable bound is established, it suffices to study the asymptotic variance and follow the ideas presented in [12, 13]. We divide this section into four subsections. In the first one we study the (bound for the) Malliavin derivative of the solution, and in the second we study the correct normalization rate. The last two subsections are devoted to the proofs of Theorem 2.3 and Theorem 2.4.

5.1 Bound for the Malliavin derivative

We begin by providing a linear equation for the Malliavin derivative of the solution. The claim follows from (2.3), and the proof is rather standard. For this reason we omit the details.

Proposition 5.1

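Let u be the mild solution to (1.1). Then for every \(t\in (0, T]\), \(p\ge 2\) and \(x \in {\mathbb {R}}^d \), the random variable u(t, x) belongs to the Sobolev space \({\mathbb {D}}^{1,p}\) and its Malliavin derivative satisfies, for \(0<s<t\) and \(y\in {\mathbb {R}}^d\),

$$\begin{aligned} D_{s,y}u(t,x) = G_{\alpha }(t-s,x-y)\sigma (u(s,y)) + \int _s^t\int _{{\mathbb {R}}^d} G_{\alpha }(t-r,x-z)\Sigma (r,z)D_{s,y}u(r,z)W(dr,dz), \end{aligned}$$(5.1)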

where \(\Sigma (r, z) \) is an adapted and bounded (uniformly with respect to r and z) stochastic process that coincides with \(\sigma ' (u(r, z)) \) whenever \(\sigma \) is differentiable.

The following result provides a bound for the p-norm of the Malliavin derivative of the solution.

Proposition 5.2

Suppose that \(\gamma \) satisfies Assumption 2.2 and recall (see (3.14)) that \(\kappa = \frac{2d}{\alpha }\left( 1-\frac{1}{2q}\right) \), where q is from Proposition 3.2. Then for every \(0<s<t<T\), for every \(x, y \in {\mathbb {R}}^d \), and for every \(p\ge 2\) we have

Proposition 5.2 is based on the following lemma, whose proof is postponed to the appendix.

Lemma 5.3

Suppose that \(\gamma \) satisfies Assumption 2.2 and assume that \(g: [0, T]\times {\mathbb {R}}^d\rightarrow {{\mathbb {R}}}\) is a non-negative function satisfying, for every \(t\in [0, T] \) and \({x} \in {\mathbb {R}}^d \),

Then

where \(\kappa = \frac{2d}{\alpha }\left( 1-\frac{1}{2q}\right) \) and q is from Proposition 3.2.

Remark 8

By carefully examining the proof of Lemma 5.3, one observes that the statement remains valid as long as, for 2q determined through Proposition 3.2 and depending solely on the covariance \(\gamma \), we have

for some constant C and parameter \(\kappa < 1\), and \(G_\alpha \) satisfies the semigroup property (3.9). This encodes the required connection between the density \(G_\alpha \) of the associated stable process and the covariance \(\gamma \). Indeed, the above requirements mean that the improved integrability induced by the convolution with \(\gamma \) is sufficient to compensate for the low integrability (or the scaling in the time variable t) of the kernel \(G_\alpha \). As such, we could consider more general Green kernels G in place of \(G_\alpha \) in Lemma 5.3. For example, G can be taken to be the density of a more general Lévy process. In this case, we obtain Proposition 5.2 provided that G satisfies the above conditions.

Proof of Proposition 5.2

In a standard way we can show that, for every \(t\in (0, T]\) and \(x \in {\mathbb {R}}^d \), the random variable u(t, x) belongs to the Sobolev space \({\mathbb {D}}^{1,p}\) for all \(p\ge 2\) and its Malliavin derivative satisfies (5.1). Moreover, using the Burkholder-Davis-Gundy inequality (3.4) we obtain that, for any \(p\ge 2\),

To conclude the proof, it suffices to apply Lemma 5.3 with \(\theta =t-r, \eta = x-z \), and

\(\square \)

For later use we also record the following simple technical fact.

Lemma 5.4

Suppose \(2q \in \left( 1,\frac{2d}{2d-\alpha } \wedge \frac{d+\alpha }{d}\right) \). Then

where \(\kappa \) is defined in (3.14).

Proof

By the scaling property (3.10) we get

where, by (3.11),

since \(\frac{d+\alpha }{2q}>d\). \(\square \)
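The following scaling computation illustrates how (3.10) and (3.11) combine in estimates of this type. It is a sketch based only on these two properties, not necessarily the exact form of the estimate in Lemma 5.4. By (3.10) and the substitution \(y=t^{-\frac{1}{\alpha }}x\),

$$\begin{aligned} \int _{{\mathbb {R}}^d} G_{\alpha }(t,x)^{\frac{1}{2q}}dx = t^{-\frac{d}{2q\alpha }}\int _{{\mathbb {R}}^d} G_{\alpha }\big (1,t^{-\frac{1}{\alpha }}x\big )^{\frac{1}{2q}}dx = t^{\frac{d}{\alpha }\left( 1-\frac{1}{2q}\right) }\int _{{\mathbb {R}}^d} G_{\alpha }(1,y)^{\frac{1}{2q}}dy = t^{\frac{\kappa }{2}}\int _{{\mathbb {R}}^d} G_{\alpha }(1,y)^{\frac{1}{2q}}dy. \end{aligned}$$

By the upper bound in (3.11), the last integral is at most \(K_\alpha ^{\frac{1}{2q}}\int _{{\mathbb {R}}^d}(1+|y|)^{-\frac{d+\alpha }{2q}}dy\). This is finite exactly because \(\frac{d+\alpha }{2q}>d\).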

5.2 Asymptotic behavior of the covariance

Let us use the following notation. For fixed \(t>0,\) we define

The constant \(k_\beta \), for \(\beta \le d\), is defined by

Set

and

When \(\beta =d\), we put

and the following lemma justifies the fact that \(\nu _\alpha \) is well-defined. The proof is postponed to the end of this subsection.

Lemma 5.5

Suppose that \(\gamma \) satisfies Assumption 2.2 with \(\beta =d\) and let \(\Psi \) be given by (5.6). Then for every \(s\ge 0\) we have

In particular, \(\nu _\alpha \) given by (5.8) is well-defined. Moreover, for every \(s\in [0,T]\) we have

The following theorem provides the correct renormalization as well as the limiting covariance.

Theorem 5.6

Suppose that \(\gamma \) satisfies Assumption 2.2. Then

Before we proceed to the proof of Theorem 5.6, we present a couple of technical lemmas.

Lemma 5.7

Suppose that \(\gamma \) satisfies Assumption 2.2. Then for any bounded function \(s\mapsto \theta (s)\) we have, as \(R\rightarrow \infty \),

where \(k_\beta \) is defined in (5.5).

Proof

Recall that, formally, by (3.2) we have

Since clearly \(\varphi _R(t-s,\bullet ) \in L^1({\mathbb {R}}^d) \cap L^{\infty }({\mathbb {R}}^d)\), it follows from Young’s inequality (2.7) and Hardy-Littlewood-Sobolev’s inequality (3.15) that \(\varphi _R(t-s,\bullet ) *I_{d-\beta } *\mu \in L^2({\mathbb {R}}^d)\). Hence we obtain, by taking a Fourier transform and using Plancherel’s theorem, that

where \(c_{d,\beta } =1\) for \(\beta =d\). By recalling that

where \(J_{\frac{d}{2}}\) denotes the Bessel function of the first kind of order d/2, we obtain

leading to

Since \({\widehat{\mu }} \in L^{\infty }({\mathbb {R}}^d)\), we have \(\sup _{R>0}e^{-2(t-s)R^{-\alpha }|\xi |^{\alpha }}{\widehat{\mu }}\left( \frac{\xi }{R}\right) < \infty \). Moreover, \(J_{\frac{d}{2}}^2(|\xi |) = O(|\xi |^{-1})\) as \(|\xi |\rightarrow \infty \) and \(J_{\frac{d}{2}}^2(|\xi |) \sim c_d|\xi |^d\) as \(|\xi |\rightarrow 0\) (here we use the standard Landau notation \(O(\cdot )\), and \(f\sim g\) means \(\frac{f}{g} \rightarrow 1\)). Hence, in polar coordinates with \(r=|\xi |\), the integrand \(|\xi |^{\beta -2d}J_{\frac{d}{2}}^2(|\xi |)\) contributes a factor of order \(r^{\beta -1}\) near \(r=0\) and \(O(r^{\beta -d-2})\) as \(r\rightarrow \infty \), both integrable for \(0<\beta \le d\), so that \( \int _{{\mathbb {R}}^d} |\xi |^{\beta -2d}J_{\frac{d}{2}}^2(|\xi |)d\xi < \infty . \) This, together with the boundedness of \(\theta (s)\), allows us to use the dominated convergence theorem and therefore, as \(R\rightarrow \infty \),

The result now follows from \({\widehat{\mu }}(0) = \mu ({\mathbb {R}}^d)\) together with the fact that

for \(\beta <d\) and, for \(\beta =d\), we have

Indeed, the validity of (5.10) can be seen from

while the validity of (5.9) can be seen from

This completes the proof. \(\square \)
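The proof of Lemma 5.7 relies on the classical formula for the Fourier transform of the indicator of the unit ball in terms of \(J_{\frac{d}{2}}\). With the convention \({\widehat{f}}(\xi )=\int _{{\mathbb {R}}^d}e^{-ix\cdot \xi }f(x)dx\) (an assumption on our part; the normalization behind (3.2) may differ by a constant factor), it reads \(\widehat{{\mathbf {1}}_{B_1}}(\xi )=(2\pi )^{\frac{d}{2}}|\xi |^{-\frac{d}{2}}J_{\frac{d}{2}}(|\xi |)\). The Python sketch below checks this numerically in dimensions \(d=1\) and \(d=3\), where \(J_{\frac{1}{2}}\) and \(J_{\frac{3}{2}}\) have closed forms. The helper names are hypothetical and only these two dimensions are covered.

```python
import math


def simpson(f, a, b, n=2000):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    h = (b - a) / n
    s = f(a) + f(b)
    for k in range(1, n):
        s += (4 if k % 2 else 2) * f(a + k * h)
    return s * h / 3.0


def ball_ft_numeric(r, d):
    """Fourier transform of the indicator of the unit ball at |xi| = r, by quadrature."""
    if d == 1:
        # int_{-1}^{1} e^{-i x r} dx; the sine part vanishes by symmetry.
        return simpson(lambda x: math.cos(x * r), -1.0, 1.0)
    if d == 3:
        # Radial form: int_0^1 4 pi rho^2 * sin(rho r) / (rho r) d rho.
        return simpson(lambda rho: 4.0 * math.pi * rho * math.sin(rho * r) / r, 0.0, 1.0)
    raise ValueError("only d = 1 and d = 3 are implemented")


def bessel_j_half_integer(nu, r):
    """Closed forms of the Bessel functions J_{1/2} and J_{3/2}."""
    if nu == 0.5:
        return math.sqrt(2.0 / (math.pi * r)) * math.sin(r)
    if nu == 1.5:
        return math.sqrt(2.0 / (math.pi * r)) * (math.sin(r) / r - math.cos(r))
    raise ValueError("only nu = 1/2 and nu = 3/2 are implemented")


def ball_ft_bessel(r, d):
    """Right-hand side (2 pi)^{d/2} r^{-d/2} J_{d/2}(r)."""
    return (2.0 * math.pi) ** (d / 2) * r ** (-d / 2) * bessel_j_half_integer(d / 2, r)


for d in (1, 3):
    for r in (0.5, 3.0, 7.5):
        print(d, r, ball_ft_numeric(r, d), ball_ft_bessel(r, d))
```

The same closed form also shows the small-argument behaviour used above: for \(d=1\), \(J_{\frac{1}{2}}^2(r)=\frac{2}{\pi r}\sin ^2 r\sim \frac{2}{\pi }r\) as \(r\rightarrow 0\).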

Lemma 5.8

Suppose that \(\gamma \) satisfies Assumption 2.2 and \(\beta <d\). Then

Proof

As in the proof of Theorem 3.1 in [13], we can write, via the Clark-Ocone formula,

where

Hence, by applying Proposition 5.2, we obtain the estimate

We prove the claim by an argument based on uniform integrability. We know that \(\gamma = I_{d-\beta } *\mu \). Therefore,

where \(2q= \frac{2d}{2d-\beta }\). Making the change of variables \(u=s-r\), \(\xi =y-z\) and \(\xi '=y-z'\), we can write

For any fixed \(\xi , \xi ' ,w \in {\mathbb {R}}^d\), clearly, \(\vert y-y'-\xi -\xi ' -w\vert ^{- \beta }\) tends to zero as \(|y-y'|\) tends to infinity. Taking into account that

to show that \(\lim _{|y-y'| \rightarrow \infty } T_1(s, y, y' ) =0\), it suffices to check that

for some \(\beta '>\beta \). Making a change of variables, we can write

Applying Hölder’s inequality and the Hardy-Littlewood-Sobolev inequality (3.15) yields

which is finite provided that \(\beta '\) is sufficiently close to \(\beta \). This concludes the proof. \(\square \)

Proof of Theorem 5.6

For notational simplicity, we only consider the case \(r=t\) while the case of general \(t,r\in [0,T]\) follows in a similar way. Using (2.3) and (5.4), we can write

Hence, by (3.3), we get

Let us begin with the case \(\beta <d\). In view of Lemma 5.7 together with the boundedness of \(\theta ^2_{\alpha } (s)\), it suffices to show that

Now by Lemma 5.8 we know that for every \(\varepsilon >0\) there exists \(K>0\) such that, for every \(s\in [0, t]\) and every \(y, y'\) with \( \vert y- y' \vert \ge K\),

By using \(\gamma = I_{d-\beta } *\mu \), we split \( T_{R}= T_{R,1}+ T_{R,2}, \) where

and

On the region \(|y'-y|\le K, 0\le s \le T\) the quantity \(\Psi (s, y-y ')- \theta ^2_{\alpha } (s)\) is uniformly bounded. Together with the semigroup property and (3.8), this allows us to estimate

as \(R\rightarrow \infty \), since clearly here we have

For the term \(T_{R,2}\), we apply (5.12) to get

The change of variables \(x-y= \theta \), \(x'-y' =\theta '\), \(x=R\xi \) and \(x'= R\xi '\) yields

which is bounded by \(C\varepsilon \) because \(\sup _{z\in {\mathbb {R}}^d} \int _{B_1} |y-z| ^{-\beta } dy <\infty \). Since \(\varepsilon >0\) is arbitrary, the desired limit (5.11) follows. This verifies the claim for the case \(\beta < d\).
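The finiteness of \(\sup _{z\in {\mathbb {R}}^d} \int _{B_1} |y-z| ^{-\beta } dy\) used above can be checked directly. A short argument (ours, given for completeness) splits \(B_1\) according to whether \(|y-z|\le 1\) or \(|y-z|>1\); on the second set the integrand is at most 1:

\[ \int _{B_1}|y-z|^{-\beta }\,dy \le \int _{\{|w|\le 1\}}|w|^{-\beta }\,dw + |B_1| = \sigma _{d-1}\int _0^1 r^{d-1-\beta }\,dr + |B_1| = \frac{\sigma _{d-1}}{d-\beta } + |B_1|, \]

where \(\sigma _{d-1}=\frac{2\pi ^{d/2}}{\Gamma (d/2)}\) denotes the surface area of the unit sphere in \({\mathbb {R}}^d\). The right-hand side does not depend on z and is finite precisely because \(\beta <d\).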

Next, let \(\beta =d\). Since, for fixed \(s>0\), the function \(y \mapsto \Psi (s,y)\) is bounded and now \(\gamma = \mu \) is a finite measure, we may regard \({\tilde{\gamma }}_s(dy) = \Psi (s,y)\gamma (dy)\) as a signed measure. Considering its positive and negative parts separately, we may use exactly the same arguments as in the proof of Lemma 5.7 and get

where now

This verifies the claim for \(\beta =d\) as well, and hence the proof is completed. \(\square \)

We end this subsection by proving Lemma 5.5.

Proof of Lemma 5.5

Denote

Since T(s, y) is also a bounded function, we may follow the proofs of Theorem 5.6 and Lemma 5.7 to obtain

where now, as \(R\rightarrow \infty \),

and

By the very definition, we have

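and since T(s, y) and \(\gamma (y)\) are both covariance functions, they are positive semidefinite. Consequently, by the Schur product theorem, the product \(T(s,y)\gamma (y)\) is again positive semidefinite, that is, a covariance function. By Bochner's theorem it follows that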

for all \(\xi \in {\mathbb {R}}^d\) and, in particular, for \(\xi = 0\). Now

The claim follows from this together with the observations \(\Psi (0,z)= \theta _\alpha ^2(0)\) for all \(z\in {\mathbb {R}}^d\) and \(\widehat{T(s,\bullet )\gamma (\bullet )}(0) \ge 0\) for all \(s\ge 0\). \(\square \)
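The positivity argument in the proof of Lemma 5.5 uses the fact that the pointwise product of two positive semidefinite functions is positive semidefinite, which, when \(\gamma \) is a function, is the Schur product theorem applied to the matrices \((T(s,x_i-x_j))_{i,j}\) and \((\gamma (x_i-x_j))_{i,j}\). The Python sketch below illustrates only this finite-dimensional statement. The kernels \(e^{-h^2}\) and \(e^{-|h|}\) on \({\mathbb {R}}\) and the grid of points are stand-ins of our choosing, not the functions T and \(\gamma \) of the text, and positive definiteness is tested through a Cholesky factorization.

```python
import math


def gram(kernel, points):
    """Gram matrix K[i][j] = kernel(x_i - x_j) of a stationary covariance kernel."""
    return [[kernel(x - y) for y in points] for x in points]


def is_positive_definite(matrix, tol=1e-12):
    """Cholesky factorization; returns False as soon as a pivot is not positive."""
    n = len(matrix)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                pivot = matrix[i][i] - s
                if pivot <= tol:
                    return False
                low[i][i] = math.sqrt(pivot)
            else:
                low[i][j] = (matrix[i][j] - s) / low[j][j]
    return True


def hadamard(a, b):
    """Entrywise (Schur) product of two square matrices."""
    return [[x * y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def gaussian(h):
    """Illustrative covariance exp(-h^2) (stand-in, not from the text)."""
    return math.exp(-h * h)


def laplace(h):
    """Illustrative covariance exp(-|h|) (stand-in, not from the text)."""
    return math.exp(-abs(h))


points = [0.7 * i for i in range(8)]
A, B = gram(gaussian, points), gram(laplace, points)
print(is_positive_definite(A), is_positive_definite(B), is_positive_definite(hadamard(A, B)))
# prints: True True True
```

Passing from positive semidefiniteness to \(\widehat{T(s,\bullet )\gamma (\bullet )}\ge 0\) is then the content of Bochner's theorem.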

5.3 Proof of Theorem 2.3

We start with the following result that we will utilise in the case \(\beta <d\).

Lemma 5.9

Suppose that \(0<\beta<\alpha < 2\wedge d\). For every \( t>0\) we have

Proof

Using the estimate (3.12), we have

The estimate (5.13) follows from this, because one can show that

\(\square \)

Proof of Theorem 2.3

Let \(\varphi _R\) be given by (5.4). By the same arguments as in the proof of [13, Theorem 1.1] (see pp. 7178-7180), using Proposition 3.1, Theorem 5.6 and Proposition 5.1, we get \(d_{TV}(F_R,Z)\le 2(A_1+A_2)\), where

and

We begin with the case \(\beta =d\), which is simpler. For the term \(A_1\) in this case, we use the trivial bound \(\varphi _{R} (t-s, y')\varphi _{R} (t-s, {\tilde{y}})\varphi _{R} (t-s, {\tilde{y}}') \le 1\), integrate in the variables \(y'\) and \({\tilde{y}}'\), perform the change of variables \(y\mapsto y-z\) and \({\tilde{y}} \mapsto {\tilde{y}}-z\) in the integrals with respect to \(y,{\tilde{y}}\), and then integrate with respect to \(z'\), z, and finally with respect to y and \({\tilde{y}}\). Together with Lemma 5.4, this leads to

Treating the term \(A_2\) with similar arguments completes the proof for the case \(\beta =d\). Suppose next that \(\beta <d\), and let us again first treat the term \(A_1\). We can bound \(A_1\) as follows:

The change of variables \(x_1-y=\theta _1\), \( x_2-y' =\theta _2\), \(x_3 -{\tilde{y}} =\theta _3\), \(x_4-{\tilde{y}}' =\theta _4\), \(z-y= \eta _1\) and \({\tilde{z}} - {\tilde{y}} = \eta _2\), yields

Integrating in the variables \(\theta _2\) and \(\theta _3\) and using the estimate (5.13), we can write

The change of variables \(x_i =R \xi _i \), \(i=1,2,3,4\) yields

Taking into account that

and that, by Lemma 5.4,

we conclude that

Treating the term \(A_2\) similarly verifies the case \(\beta <d\) as well, completing the whole proof. \(\square \)

5.4 Proof of Theorem 2.4

In order to prove Theorem 2.4, it suffices to prove tightness and the convergence of the finite-dimensional distributions. For the latter we can proceed as in [13], together with the arguments of the proof of Theorem 2.3. Tightness is ensured by the following result and Kolmogorov’s criterion.
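For the reader's convenience, we recall one standard form of Kolmogorov's tightness criterion (often called the Kolmogorov-Chentsov criterion). It is stated here in general form, and \(X_R\) is a generic placeholder rather than notation from the text: a family \(\{X_R(t), t\in [0,T]\}\), \(R>0\), of continuous processes such that \(\{X_R(0)\}\) is tight is tight in \(C([0,T])\) provided there exist constants \(p>0\), \(\delta >0\) and C, independent of R, such that

\[ {\mathbb {E}}\big [|X_R(t)-X_R(s)|^p\big ]\le C\,|t-s|^{1+\delta }, \qquad 0\le s<t\le T. \]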

Proposition 5.10

Let u(t, x) be the solution to (1.1). Then for any \(0\le s < t\le T\) and any \(p\ge 1\) there exists a constant \(C=C(p,T)\) such that

Proof

Let \( \Theta _{x,t,s}\) be given by

We have, for \(0<s<t<T\),

Now the Burkholder inequality implies that, for every \(p\ge 1\),

Hence it remains to show that

By taking the Fourier transform, we obtain \(K_{R}(t,s)\le C (I_{1}+ I_{2}),\) where

and

Using \(e ^{-2(t-r)\vert \xi \vert ^{\alpha }}\le 1\) and

leads to

This concludes the proof. \(\square \)

Theorem 2.4 follows by the arguments of the proof of [13, Theorem 1.3] together with Proposition 5.10. The details, although rather lengthy, rely directly on the arguments used above and are therefore left to the reader.

References

V. Bogachev. Measure Theory. Springer-Verlag, Berlin, 2007

L. Chen and R. Dalang. Moments, intermittency and growth indices for nonlinear stochastic fractional heat equation. Stoch. Partial Differ. Equ. Anal. Comput., 3(3): 360–397, 2015

L. Chen and J. Huang. Comparison principle for stochastic heat equation on \({\mathbb{R}} ^ {d}\). Ann. Probab., 47(2): 989–1035, 2018

L. Chen and K. Kim. Nonlinear stochastic heat equation driven by spatially colored noise: moments and intermittency. Acta Math. Sci., 39: 645–668, 2019

L. Chen, Y. Hu and D. Nualart. Regularity and strict positivity of densities for the nonlinear stochastic heat equation. To appear in Mem. Amer. Math. Soc., 2018

R. Dalang. Extending the martingale measure stochastic integral with applications to spatially homogeneous S.P.D.E.’s. Electron. J. Probab., 4(6): 29 pp., 1999

L. Debbi and M. Dozzi. On the solutions of nonlinear stochastic fractional partial differential equations in one spatial dimension. Stoch. Proc. Appl., 115: 1761–1781, 2005

F. Delgado-Vences, D. Nualart and G. Zheng. A central limit theorem for the stochastic wave equation with fractional noise. Ann. Inst. H. Poincaré Probab. Statist., 56(4): 3032–3042, 2020

M. Foondun and D. Khoshnevisan. On the stochastic heat equation with spatially-colored random forcing. Trans. Amer. Math. Soc., 365: 409–458, 2013

N. Garofalo. Fractional thoughts. In: New Developments in the Analysis of Nonlocal Operators, Contemp. Math., 723: 1–135. Amer. Math. Soc., Providence, RI, 2019

B. Gaveau and P. Trauber. L’intégrale stochastique comme opérateur de divergence dans l’espace fonctionnel. J. Funct. Anal., 46: 230–238, 1982

J. Huang, D. Nualart and L. Viitasaari. A central limit theorem for the stochastic heat equation. Stochastic Process. Appl., 130: 7170–7184, 2020

J. Huang, D. Nualart, L. Viitasaari and G. Zheng. Gaussian fluctuations for the stochastic heat equation with colored noise. Stoch. Partial Differ. Equ. Anal. Comput., 8: 402–421, 2020

T. Komatsu. On the martingale problem for generators of stable processes with perturbations. Osaka J. Math., 21: 113–132, 1984

E.H. Lieb. Sharp constants in the Hardy-Littlewood-Sobolev and related inequalities. Ann. of Math., 118: 349–374, 1983

I. Nourdin and G. Peccati. Stein’s method on Wiener chaos. Probab. Theory Related Fields, 145(1): 75–118, 2009

I. Nourdin and G. Peccati. Normal Approximations with Malliavin Calculus: From Stein’s Method to Universality. Cambridge Tracts in Mathematics, 192. Cambridge University Press, Cambridge, 2012

D. Nualart and E. Nualart. Introduction to Malliavin Calculus. IMS Textbooks, Cambridge University Press, 2018

D. Nualart and E. Pardoux. Stochastic calculus with anticipating integrands. Probab. Theory Related Fields, 78: 535–581, 1988

D. Nualart and H. Zhou. Total variation estimates in the Breuer-Major theorem. To appear in Ann. Inst. H. Poincaré Probab. Statist., 2018

J.B. Walsh. An introduction to stochastic partial differential equations. In: École d’Été de Probabilités de Saint-Flour XIV, 1984, Lecture Notes in Math., 1180: 265–439. Springer, Berlin, 1986

Acknowledgements

We would like to thank two anonymous referees for their valuable comments.

Funding

Open access funding provided by Aalto University.

Additional information

O. Assaad and C.A. Tudor were supported in part by the Labex CEMPI (ANR-11-LABX-0007-01).

Appendix: Proof of Lemma 5.3

Proof of Lemma 5.3

As in [3] (see the proofs of Lemmas 2.4 and 3.1), it suffices to prove the bound (5.3) in the case when (5.2) is an equality. Let \(g_{n}, n\ge 0\) be a sequence defined iteratively by setting \(g_{0} (t,x)= G_{\alpha } (t, x)\), and for \(n\ge 0\)

Denote \(\kappa = \frac{2d}{\alpha } - \frac{d}{q\alpha }\). We prove by induction that for every \(n\ge 0\),

For \(n=0\), taking into account that \(\alpha +d \ge \frac{ \alpha + d}{2q}\), \(\frac{\kappa }{2} = \frac{d}{\alpha }- \frac{d}{2q\alpha }\), and \(2q>1\), we can use the estimate (3.12),

Hence (6.1) is true for \(n=0\).

Suppose that (6.1) holds for n. Denoting \(c_j = \frac{ \Gamma ^{j}(1-\kappa )}{\Gamma \big ( (j+1)(1-\kappa )\big )} \) and by the induction hypothesis,

The inequality (3.13) with \(g(y)=f(y)= G_{\alpha }(t-s,x-y)G_{\alpha }^{\frac{1}{2q}}(s, y)\) allows us to estimate

The scaling and asymptotic properties of the kernel \(G _{\alpha }\) imply that

Taking into account that \((\alpha +d)2q \ge \alpha +d\), we obtain

Therefore, by the semigroup property

Substituting (6.4) and (6.2) into (6.3) yields

Finally, it follows from (6.1)

This finishes the proof. \(\square \)
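The constants \(c_j\) appearing in the induction are of Mittag-Leffler type. Assuming, as our reading of the induction step (its displayed estimates are not reproduced here), that passing from n to \(n+1\) produces the Beta integral \(B\big (1-\kappa ,(n+1)(1-\kappa )\big ) = \frac{\Gamma (1-\kappa )\Gamma ((n+1)(1-\kappa ))}{\Gamma ((n+2)(1-\kappa ))}\), the identity \(c_n B\big (1-\kappa ,(n+1)(1-\kappa )\big ) = c_{n+1}\) explains the form of \(c_j\). Moreover, \(\frac{c_{j+1}}{c_j} = \frac{\Gamma (1-\kappa )\Gamma ((j+1)(1-\kappa ))}{\Gamma ((j+2)(1-\kappa ))}\rightarrow 0\), so \(\sum _j c_j x^j\) converges for every \(x>0\), which is the kind of summability needed to conclude from (6.1). The Python sketch below, with hypothetical helper names, checks both facts numerically for a few values of \(\kappa \in (0,1)\), working in log scale to avoid overflow.

```python
import math


def log_c(j, kappa):
    """log c_j, where c_j = Gamma(1 - kappa)^j / Gamma((j + 1)(1 - kappa)), 0 < kappa < 1."""
    a = 1.0 - kappa
    return j * math.lgamma(a) - math.lgamma((j + 1) * a)


def log_beta(x, y):
    """log of the Beta function B(x, y) = Gamma(x) Gamma(y) / Gamma(x + y)."""
    return math.lgamma(x) + math.lgamma(y) - math.lgamma(x + y)


def recursion_holds(j, kappa):
    """Check c_j * B(1 - kappa, (j + 1)(1 - kappa)) = c_{j+1}, in log scale."""
    a = 1.0 - kappa
    lhs = log_c(j, kappa) + log_beta(a, (j + 1) * a)
    return math.isclose(lhs, log_c(j + 1, kappa), rel_tol=1e-12, abs_tol=1e-9)


def partial_sum(x, kappa, n_terms):
    """Partial sum of sum_j c_j x^j, a Mittag-Leffler type series (x > 0)."""
    if x <= 0:
        raise ValueError("x must be positive")
    return math.fsum(math.exp(log_c(j, kappa) + j * math.log(x)) for j in range(n_terms))


# Adding further terms no longer changes the partial sums.
print(partial_sum(1.0, 0.5, 100), partial_sum(1.0, 0.5, 200))
```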

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Assaad, O., Nualart, D., Tudor, C.A. et al. Quantitative normal approximations for the stochastic fractional heat equation. Stoch PDE: Anal Comp 10, 223–254 (2022). https://doi.org/10.1007/s40072-021-00198-7

DOI: https://doi.org/10.1007/s40072-021-00198-7

Keywords

- Stochastic fractional heat equation

- Fractional Laplacian

- Central limit theorem

- Malliavin calculus

- Stein’s method