Abstract

A single dynamical system with time-delayed feedback can emulate networks. This property of delay systems made them extremely useful tools for Machine-Learning applications. Here, we describe several possible setups, which allow emulating multilayer (deep) feed-forward networks as well as recurrent networks of coupled discrete maps with arbitrary adjacency matrix by a single system with delayed feedback. While the network’s size can be arbitrary, the generating delay system can have a low number of variables, including a scalar case.

Similar content being viewed by others

1 Introduction

Systems with time-delays, or delay differential equations (DDE), play an important role in modeling various natural phenomena and technological processes [1,2,3,4,5,6,7,8]. In optoelectronics, delays emerge due to finite optical or electric signal propagation time between the elements [9,10,11,12,13,14,15,16,17,18,19,20]. Similarly, in neuroscience, propagation delays of the action potentials play a crucial role in information processing in the brain [21,22,23,24,25,26,27,28].

Machine Learning is another rapidly developing application area of delay systems [29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47]. It is shown recently that DDEs can successfully realize a reservoir computing setup, theoretically [41,42,43,44, 46, 48,49,50], and implemented in optoelectronic hardware [30, 32, 39]. In time-delay reservoir computing, a single DDE with either one or a few variables is used for building a ring network of coupled maps with fixed internal weights and fixed input weights. In a certain sense, the network structure emerges by properly unfolding the temporal behavior of the DDE. In this paper, we explain how such an unfolding appears, not only for the ring network as in reservoir computing but also for arbitrary networks of coupled maps. In [51], a training method is proposed to modify the input weights while the internal weights are still fixed.

Among the most related previous publications, Hart and collaborators unfold networks with arbitrary topology from delay systems [42, 48]. Our work extends their results in several directions, including varying coupling weights and applying it to a broader class of delay systems. The networks constructed by our method allow for a modulation of weights. Hence, they can be employed in Machine-Learning applications with weight training. In our recent paper [50], we show that a single DDE can emulate a deep neural network and perform various computational tasks successfully. More specifically, the work [50] derives a multilayer neural network from a delay system with modulated feedback terms. This neural network is trained by gradient descent using back-propagation and applied to machine-learning tasks.

As follows from the above-mentioned machine-learning applications, delay models can be effectively used for unfolding complex network structures in time. Our goal here is a general description of such networks. While focusing on the network construction, we do not discuss details of specific machine-learning applications such as, e.g., weights training by gradient descent, or specific tasks.

The structure of the paper is as follows. In Sect. 2, we derive a feed-forward network from a DDE with modulated feedback terms. Section 3 describes a recurrent neural network. In Sect. 4, we review a special but practically important case of delay systems with a linear instantaneous part and nonlinear delayed feedback containing an affine combination of the delayed variables; originally, these results have been derived in [50].

The clock cycle intervals \(I_\ell \) and sub-intervals \(I_{\ell ,n}\). The node \(x^\ell _n\) (blue dot) is defined by the value of the solution x(t) of system (1) (blue line) at the time point \(t=(\ell -1)T+n\theta \). The modulation function \(\mathcal {M}_d(t)\) is a step function with constant values \(v^\ell _{d,n}\) on the intervals \(I_{\ell ,n}\)

2 From delay systems to multilayer feed-forward networks

2.1 Delay systems with modulated feedback terms

Multiple delays are required for the construction of a network with arbitrary topology by a delay system [42, 48, 50]. In such a network, the connection weights are emulated by a modulation of the delayed feedback signals [50]. Therefore, we consider a DDE of the following form:

with D delays \(\tau _1,\dots ,\tau _D\), a nonlinear function f, a time-dependent driving signal z(t), and modulation functions \(\mathcal {M}_1(t), \ldots , \mathcal {M}_D(t)\).

System (1) is a non-autonomous DDE, and the properties of the functions \(\mathcal {M}_d(t)\) and z(t) play an important role for unfolding a network from (1). To define these properties, a time quantity \(T>0\) is introduced, called the clock cycle. Further, we choose a number N of grid points per T-interval and define \(\theta := T/N\). We define the clock cycle intervals

which we split into smaller sub-intervals

see Fig. 1. We assume the following properties for the delays and modulation functions:

- Property (I)::

-

The delays satisfy \(\tau _d = n_d \theta ,\ d=1,\ldots ,D\) with natural numbers \(0< n_1< \cdots< n_D < 2N\). Consequently, it holds \(0< \tau _1< \cdots< \tau _D < 2T\).

- Property (II)::

-

The functions \(\mathcal {M}_d(t)\) are step functions, which are constant on the intervals \(I_{\ell ,n}\). We denote these constants as \(v^\ell _{d,n}\), i.e.,

$$\begin{aligned} \mathcal {M}_d(t) = v^\ell _{d,n} \quad \text {for} \quad t\in I_{\ell ,n}. \end{aligned}$$

In the following sections, we show that one can consider the intervals \(I_\ell \) as layers with N nodes of a network arising from the delay system (1) if the modulation functions \(\mathcal {M}_d(t)\) fulfill certain additional requirements. The nth node of the \(\ell \)-th layer is defined as

which corresponds to the solution of the DDE (1) at time point \((\ell - 1)T + n\theta \). The solution at later time points \(x^{\ell '}_{n'}\) with either \(\ell '>\ell \) or \(n'>n\) for \(\ell '=\ell \) depends, in general, on \(x^\ell _n\), thus, providing the interdependence between the nodes. Such dependence can be found explicitly in some situations. The simplest way is to use a discretization for small \(\theta \), and we consider such a case in the following Sect. 2.2. Another case, when \(\theta \) is large, can be found in [50].

Let us remark about the initial state for DDE (1). According to the general theory [2], to solve an initial value problem, an initial history function \(x_0(s)\) must be provided on the interval \(s \in [-\tau _D, 0]\), where \(\tau _D\) is the maximal delay. In terms of the nodes, one needs to specify \(x_n^\ell \) for \(n_D\) “history” nodes. However, the modulation functions \(\mathcal {M}_d(t)\) can weaken this requirement. For example, if \(\mathcal {M}_d(t) = 0\) for \(t \le \tau _d\), then it is sufficient to know the initial state \(x(0) = x_0^1 =x_0\) at a single point, and we do not require a history function at all. In fact, the latter special case has been employed in [50] for various machine-learning tasks.

2.2 Disclosing network connections via discretization of the DDE

Here, we consider how a network of coupled maps can be derived from DDE (1). Since the network nodes are already introduced in Sect. 2.1 as \(x_n^\ell \) by Eq. (2), it remains to describe the connections between the nodes. Such links are functional connections between the nodes \(x_n^\ell \). Hence, our task is to find functional relations (maps) between the nodes.

For simplicity, we restrict ourselves to the Euler discretization scheme since the obtained network topology is independent of the chosen discretization. Similar network constructions by discretization from ordinary differential equations have been employed in [52,53,54].

We apply a combination of the forward and backward Euler method: the instantaneous system states of (1) are approximated by the left endpoints of the small-step intervals of length \(\theta \) (forward scheme). The driving signal z(t) and the delayed system states are approximated by the right endpoints of the step intervals (backward scheme). Such an approach leads to simpler expressions. We obtain

for \(n = 2,\ldots ,N\), where \(t^\ell _n := (\ell - 1)T + n\theta \), and

for the first node in the \(I_\ell \)-interval.

According to Property (I), the delays satisfy \(0<\tau _d<2T\). Therefore, the delay-induced feedback connections with target in the interval \(I_\ell \) can originate from one of the following intervals: \(I_\ell \), \(I_{\ell -1}\), or \(I_{\ell -2}\). In other words: the time points \(t^\ell _n - \tau _d\) can belong to one of these intervals \(I_{\ell }\), \(I_{\ell -1}\), \(I_{\ell -2}\). Formally, it can be written as

We limit the class of networks to multilayer systems with connections between the neighboring layers. Such networks, see Fig. 4b, are frequently employed in machine-learning tasks, e.g., as deep neural networks [50, 55,56,57,58]. Using (5), we can formulate a condition for the modulation functions \(\mathcal {M}_d(t)\) to ensure that the delay terms \(x(t-\tau _d)\) induce only connections between subsequent layers. For this, we set the modulation functions’ values to zero if the originating time point \(t^\ell _n - \tau _d\) of the corresponding delay connection does not belong to the interval \(I_{\ell -1}\). This leads to the following assumption on the modulation functions:

Property (III): The modulation functions \(\mathcal {M}_d(t)\) vanish at the following intervals:

In the following, we assume that condition (III) is satisfied.

Expressions (3)–(4) contain the interdependencies between \(x_n^\ell \), i.e., the connections between the nodes of the network. We explain these dependencies and present them in a more explicit form in the following. Our goal is to obtain the multilayer network shown in Fig. 4b.

2.3 Effect of time-delays on the network topology

Network connections induced by one time-delay \(\tau _d\). a Connections induced by \(\tau _d<T\). b \(\tau _d = T\). c \(\tau _d > T\). Multiple delays \(\tau _1, \ldots ,\tau _D\) result in a superposition of parallel patterns as shown in Fig. 4b

Taking into account property (III), the node \(x^\ell _n\) of layer \(I_{\ell }\) receives a connection from a node \(x^{\ell -1}_{n-n'_d}\) of layer \(I_{\ell -1}\), where \(n'_d:=n_d-N\). Two neighboring layers are illustrated in Fig. 2, where the nodes in each layer are ordered vertically from top to bottom. Depending on the size of the delay, we can distinguish three cases.

-

(a)

For \(\tau _d<T\), there are \(n_d\) “upward” connections as shown in panel Fig. 2a.

-

(b)

For \(\tau _d=T\), there are \(n_d=N\) “horizontal” delay-induced connections, i.e. connections from nodes of layer \(\ell -1\) to nodes of layer \(\ell \) with the same index, see Fig. 2b.

-

(c)

For larger delays \(\tau _d>T\), there are \(2N-n_d\) “downward” delay-induced connections, as shown in Fig. 2c.

In all cases, the connections induces by one delay \(\tau _d\) are parallel. Since the delay system possesses multiple delays \(0< \tau _1< \ldots< \tau _D < 2T\), the parallel connection patterns overlap, as illustrated in Fig. 4b, leading to a more complex topology. In particular, a fully connected pattern appears for \(D = 2N-1\) and \(\tau _d = \theta d\), i.e. \(\tau _1 = \theta ,\ \tau _2 = 2\theta , \ldots , \tau _D = D\theta = (2N-1)\theta \).

2.4 Modulation of connection weights

With the modulation functions satisfying property (III), the Euler scheme (3)–(4) simplifies to the following map:

where Eq. (6) implies \(v^{\ell }_{d,n} = 0\) if \(n-n'_d < 1\) or \(n-n'_d > N\). In other words, the dependencies at the right-hand side of (7)–(8) contain only the nodes from the \(\ell -1\)-th layer. Moreover, the numbers \(v^\ell _{d,n}\) determine the strengths of the connections from \(x^{\ell -1}_{n-n'_d}\) to \(x^\ell _n\) and can be considered as network weights. By reindexing, we can define weights \(w^\ell _{nj}\) connecting node j of layer \(\ell -1\) to node n of layer \(\ell \). These weights are given by the equation

and define the entries of the weight matrix \(W^\ell = (w^\ell _{nj}) \in \mathbb {R}^{N\times (N+1)}\), except for the last column, which is defined below and contains bias weights. The symbol \(\delta _{nj}\) is the Kronecker delta, i.e. \(\delta _{nj} = 1\) if \(n=j\), and \(\delta _{nj} = 0\) if \(n\ne j\).

Coupling matrix \(W^\ell \) between the hidden layers \(\ell - 1\) and \(\ell \), see Eq. (9)–(10). The nonzero weights are arranged along the diagonals, and equal \(v^\ell _{d,n}\). The position of the diagonals is determined by the corresponding delay \(\tau _d\). If \(\tau _d = T = N\theta \), then the main diagonal contains the entries \(v^\ell _{d,1},\ldots ,v^\ell _{d,N}\) (shown in yellow). If \(\tau _d = n_d\theta < T\), then the corresponding diagonal lies above the main diagonal and contains the values \(v^\ell _{d , 1}, \ldots , v^\ell _{d , n_d}\) (red). If \(\tau _d = n_d\theta > T\), then the corresponding diagonal lies below the main diagonal and contains the values \(v^\ell _{d , n_d-N+1}, \ldots , v^\ell _{d, N}\) (blue). The last column of the matrix contains the bias weights (gray)

The time-dependent driving function z(t) can be utilized to realize a bias weight \(b^\ell _n\) for each node \(x^\ell _n\). For details, we refer to Sect. 2.5. We define the last column of the weight matrix \(W^\ell \) by

The weight matrix is illustrated in Fig. 3. This matrix \(W^\ell \) is in general sparse, where the degree of sparsity depends on the number D of delays. If \(D=2N-1\) and \(\tau _d = d\theta , \ d=1,\ldots ,D\), we obtain a dense connection matrix. Moreover, the positions of the nonzero entries and zero entries are the same for all matrices \(W^2, \ldots , W^L\), but the values of the nonzero entries are in general different.

2.5 Interpretation as multilayer neural network

The map (7)–(8) can be interpreted as the hidden layer part of a multilayer neural network provided we define suitable input and output layers.

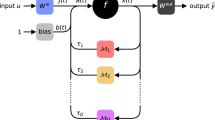

Implementing a multilayer neural network by delay system (1). a The system state is considered at discrete time points \(x^\ell _{n} := x((\ell - 1)T +n\theta )\). The intervals \(I_\ell \) correspond to layers. Due to delayed feedback, non-local connections emerge (color lines). b shows the resulting neural network

The input layer determines how a given input vector \(u\in \mathbb {R}^{M+1}\) is transformed to the state of the first hidden layer \(x(t),\ t\in I_1\). The input \(u\in \mathbb {R}^{M+1}\) contains M input values \(u_1,\ldots ,u_M\) and an additional entry \(u_{M+1}=1\). To ensure that \(x(t),\ t\in I_1\) depends on u and the initial state \(x(0)=x_0\) exclusively, and does not depend on a history function \(x(s),\ s < 0\), we set all modulation functions to zero on the first hidden layer interval. This leads to the following

Property (IV): The modulation functions satisfy

The dependence on the input vector \(u\in \mathbb {R}^{M+1}\) can be realized by the driving signal z(t).

Property (V): The driving signal z(t) on the interval \(I_1\) is the step function given by

where \(f^\mathrm {in}(W^{\mathrm {in}} u)\in \mathbb {R}^N\) is the preprocessed input, \(W^{\mathrm {in}}\in \mathbb {R}^{N\times (M+1)}\) is an input weight matrix, and \(f^\mathrm {in}\) is an element-wise input preprocessing function. For example, \(f^\mathrm {in}(a)=\tanh (a)\) was used in [50].

As a result, the following holds for the first hidden layer

which is just a system of ordinary differential equations, which requires an initial condition at a single point \(x(0)=x_0\) for solving it in positive time. This yields the coupled map representation

For the hidden layers \(I_2,I_3,\dots \), the driving function z(t) can be used to introduce a bias as follows.

Property (VI): The driving signal z(t) on the intervals \(I_\ell \), \(\ell \ge 2\), is the step function given by

Assuming the properties (I)–(VI), Eqs. (7)–(8) imply

Let us finally define the output layer, which transforms the node states \(x^L_1,\ldots , x^L_n\) of the last hidden layer to an output vector \(\hat{y} \in \mathbb {R}^P\). For this, we define a vector \(x^L := (x^L_1, \ldots , x^L_N, 1)^\mathrm {T} \in \mathbb {R}^{N+1}\), an output weight matrix \(W^\mathrm {out}\in \mathbb {R}^{P\times (N+1)}\), and an output activation function \(f^\mathrm {out}:\mathbb {R}^P \rightarrow \mathbb {R}^P\). The output vector is then defined as

Figure 4 illustrates the whole construction process of the coupled maps network; it is given by Eqs. (15)–(21).

We summarize the main result of section 2.

3 Constructing a recurrent neural network from a delay system

System (1) can also be considered as recurrent neural network. To show this, we consider the system on the time interval [0, KT], for some \(K\in \mathbb {N}\), which is divided into intervals \(I_k :=((k-1)T,kT], \ k = 1, \ldots ,K\). We use k instead of \(\ell \) as index for the intervals to make clear that the intervals do not represent layers. The state x(t) on an interval \(I_k\) is interpreted as the state of the recurrent network at time k. More specifically,

is the state of node n at the discrete time k. The driving function z(t) can be utilized as an input signal for each k-time-step.

Property (VII): z(t) is the \(\theta \)-step function with

where \(u(k),\ k = 1, \ldots ,K\) are \((M+1)\)-dimensional input vectors, \(W^\mathrm {in} \in \mathbb {R}^{N\times (M+1)}\) is an input weight matrix, \(f^\mathrm {in}\) is an element-wise input preprocessing function. Each input vector u(k) contains M input values \(u_1(k),\ldots ,u_M(k)\) and a fixed entry \(u_{M+1}(k):=1\) which is needed to include bias weights in the last column of \(W^\mathrm {in}\).

The main difference of the Property (VII) from (VI) is that it allows for the information input through z(t) in all intervals \(I_k\). Another important difference is related to the modulation functions, which must be T-periodic to implement a recurrent network. This leads to the following assumption.

Property (VIII): The modulation functions \(\mathcal {M}_d(t)\) are T-periodic \(\theta \)-step functions with

Note that the value \(v_{d,n}\) is independent on k due to periodicity of \(\mathcal {M}_d(t)\). When assuming the Properties (I), (III), (IV), (VII), and (VIII), the map equations (7)–(8) become

and can be interpreted as a recurrent neural network with the input matrix \(W^\mathrm {in}\) and the internal weight matrix \(W = (w_{nj}) \in \mathbb {R}^{N\times N}\) defined by

Recurrent network obtained from DDE (1) with two delays. The delays \(\tau _1<T\) and \(\tau _2>T\) induce connections with opposite direction (color arrows). Moreover, the nodes of the recurrent layer a linearly locally coupled (black arrows). All nodes of the recurrent layers are connected to the input and output layer

When we choose the number of delays to be \(D=2N-1\), we can realize any given connection matrix \(W\in \mathbb {R}^{N\times N}\). For that we need to choose the delays \(\tau _d = d\theta , \ d=1,\ldots ,2N-1\). Consequently there are \(D=2N-1\) modulation functions \(\mathcal {M}_d(t)\) which are step functions with values \(v_{d,n}\). In this case, Eq. (28) provides for all entries \(w_{nj}\) of W exactly one corresponding \(v_{d,n}\). Therefore, the arbitrary matrix W can be realized by choosing appropriate step heights for the modulation functions. In the setting of Sect. 3, the resulting network is an arbitrary recurrent network.

Summarizing, the main message of Sect. 3 is as follows.

4 Networks from delay systems with linear instantaneous part and nonlinear delayed feedback

Particularly suitable for the construction of neural networks are delay systems with a stable linear instantaneous part and a feedback given by a nonlinear function of an affine combination of the delay terms and a driving signal. Such DDEs are described by the equation

where \(\alpha > 0\) is a constant time scale, f is a nonlinear function, and

Ref. [8] studied this type of equation for the case \(D=1\), i.e. for one delay.

An example of (29) is the Ikeda system [59] where \(D=1\), i.e. a(t) consists of only one scaled feedback term \(x(t-\tau )\), signal z(t), and the nonlinear function \(f(a)=\sin (a)\). This type of dynamics can be applied to reservoir computing using optoelectronic hardware [33]. Another delay dynamical system of type (29), which can be used for reservoir computing, is the Mackey–Glass system [30], where \(D=1\) and the nonlinearity is given by \(f(a)=\eta a /(1+|a|^p)\) with constants \(\eta , p > 0\). In the work [50], system (29) is used to implement a deep neural network.

Even though the results of the previous sections are applicable to (29)–(30), the special form of these equations allows for an alternative, more precise approximation of the network dynamics.

4.1 Interpretation as multilayer neural network

It is shown in [50] that one can derive a particularly simple map representation for system (29) with activation signal (30). We do not repeat here the derivation, and only present the resulting expressions. By applying a semi-analytic Euler discretization and the variation of constants formula, the following equations connecting the nodes in the network are obtained:

for the first hidden layer. The hidden layers \(\ell = 2, \ldots ,L\) are given by

The output layer is defined by

where \(f^\mathrm {out}\) is an output activation function. Moreover,

where \(u_{M+1}:=1\) and \(x^\ell _{N+1} := 1\), for \(\ell =1,\ldots ,L\).

One can also formulate the relation between the hidden layers in a matrix form. For this, we define

Then, for \(\ell = 2,\ldots ,L\), Eqs. (33)–(34) become

where f is applied component-wise. By subtracting \(A x^\ell \) from both sides of Eq. (41) and multiplication by the matrix

we obtain a matrix equation describing the \(\ell \)th hidden layer

The neural network (31)–(39) obtained from delay system (29)–(30) can be trained by gradient descent [50]. The training parameters are the entries of the matrices \(W^\mathrm {in}\) and \(W^\mathrm {out}\), the step heights of the modulation functions \(\mathcal {M}_d(t)\), and the bias signal b(t).

4.2 Network for large node distance \(\theta \)

In contrast to the general system (1), the semilinear system (29) with activation signal (30) does not only emulate a network of nodes for small distance \(\theta \). It is also possible to choose large \(\theta \). In this case, we can approximate the nodes given by Eq. (2) by the map limit

up to exponentially small terms.

The reason for this limit behavior lies in the nature of the local couplings. Considering Eq. (29), one can interpret the parameter \(\alpha \) as a time scale of the system, which determines how fast information about the system state at a certain time point decays while the system is evolving. This phenomenon is related to the so-called instantaneous Lyapunov exponent [60,61,62], which equals \(-\alpha \) in this case. As a result, the local coupling between neighboring nodes emerges when only a small amount of time \(\theta \) passes between the nodes. Hence, increasing \(\theta \) one can reduce the local coupling strength until it vanishes up to a negligibly small value. For a rigorous derivation of Eq. (44), we refer to [50].

The apparent advantage of the map limit case is that the obtained network matches a classical multilayer perceptron. Hence, known methods such as gradient descent training via the classical back-propagation algorithm [63] can be applied to the delay-induced network [50].

The downside of choosing large values for the node separation \(\theta \) is that the overall processing time of the system scales linearly with \(\theta \). We need a period of time \(T=N\theta \) to process one hidden layer. Hence, processing a whole network with L hidden layers requires the time period \(LT=LN\theta \). For this reason, the work [50] provides a modified back-propagation algorithm for small node separations to enable gradient descent training of networks with significant local coupling.

5 Conclusions

We have shown how networks of coupled maps with arbitrary topology and arbitrary size can be emulated by a single (possibly even scalar) DDE with multiple delays. Importantly, the coupling weights can be adjusted by changing the modulations of the feedback signals. The network topology is determined by the choice of time-delays. As shown previously [30, 33, 34, 39, 50], special cases of such networks are successfully applied for reservoir computing or deep learning.

As an interesting conclusion, it follows that the temporal dynamics of DDEs can unfold arbitrary spatial complexity, which, in our case, is reflected by the topology of the unfolded network. In this respect, we shall mention previously reported spatio-temporal properties of DDEs [7, 64,65,66,67,68,69,70,71,72]. These results show how in some limits, mainly for large delays, the DDEs can be approximated by partial differential equations.

Further, we remark that similar procedures have been used for deriving networks from systems of ordinary differential equations [52,53,54]. However, in their approach, one should use an N-dimensional system of equations for implementing layers with N nodes. This is in contrast to the DDE case, where the construction is possible with just a single-variable equation.

As a possible extension, a realization of adaptive networks using a single node with delayed feedback would be an interesting open problem. In fact, the application to deep neural networks in [50] realizes an adaptive mechanism for the adjustment of the coupling weights. However, this adaptive mechanism is specially tailored for DNN problems. Another possibility would be to emulate networks with dynamical adaptivity of connections [73]. The presented scheme can also be extended by employing delay differential-algebraic equations [74, 75].

References

G. Stepan, Retarded dynamical systems: stability and characteristic functions (Longman Scientific & Technical, Harlow, 1989)

J.K. Hale, S.M.V. Lunel, Introduction to Functional Differential Equations (Springer, New York, 1993)

O. Diekmann, S. M. Verduyn Lunel, S. A. van Gils, H.-O. Walther, Delay Equations, Vol. 110. In Applied Mathematical Sciences (Springer, New York, 1995)

T. Erneux, Applied Delay Differential Equations, Vol. 3. In Surveys and Tutorials in the Applied Mathematical Sciences (Springer, New York, 2009)

H. L. Smith, An Introduction to Delay Differential Equations with Applications to the Life Sciences, Vol. 57 . In Texts in Applied Mathematics (Springer, New York, 2010)

T. Erneux, J. Javaloyes, M. Wolfrum, S. Yanchuk, Chaos Interdiscip. J. Nonlinear Sci. 27, 114201 (2017)

S. Yanchuk, G. Giacomelli, J. Phys A. Math. Theor. 50, 103001 (2017)

T. Krisztin, Period. Math. Hung. 56, 83–95 (2008)

A.G. Vladimirov, D. Turaev, Phys. Rev. A 72, 033808 (2005)

H. Erzgräber, B. Krauskopf, D. Lenstra, SIAM, J. Appl. Dyn. Syst. 5, 30–65 (2006)

O. D’Huys, R. Vicente, T. Erneux, J. Danckaert, I. Fischer, Chaos 18, 37116 (2008)

R. Vicente, I. Fischer, C.R. Mirasso, Phys. Rev. E (Stat. Nonlinear Soft Matter Phys.) 78, 66202 (2008)

B. Fiedler, S. Yanchuk, V. Flunkert, P. Hövel, H.-J.J. Wünsche, E. Schöll, Phys. Rev. E 77, 066207 (2008)

M. Wolfrum, S. Yanchuk, P. Hövel, E. Schöll, Eur. Phys. J. Spec. Top. 191, 91–103 (2011)

S. Yanchuk, M. Wolfrum, SIAM J. Appl. Dyn. Syst. 9, 519–535 (2010)

M.C. Soriano, J. García-Ojalvo, C.R. Mirasso, I. Fischer, Rev. Mod. Phys. 85, 421–470 (2013)

N. Oliver, T. Jüngling, I. Fischer, Phys. Rev. Lett. 114, 123902 (2015)

M. Marconi, J. Javaloyes, S. Barland, S. Balle, M. Giudici, Nat. Photon. 9, 450–455 (2015)

D. Puzyrev, A.G. Vladimirov, S.V. Gurevich, S. Yanchuk, Phys. Rev. A 93, 041801 (2016)

S. Yanchuk, S. Ruschel, J. Sieber, M. Wolfrum, Phys. Rev. Lett. 123, 053901 (2019)

J. Foss, J. Milton, J. Neurophysiol. 84, 975–985 (2000)

J. Wu, Introduction to Neural Dynamics and Signal Transmission Delay (Walter de Gruyter, Berlin, 2001)

E.M. Izhikevich, Neural Comput. 18, 245–282 (2006)

G. Stepan, Philos. Trans. R. Soc. Lond. A Math. Phys. Eng. Sci. 367, 1059–1062 (2009)

G. Deco, V. Jirsa, A.R. McIntosh, O. Sporns, R. Kotter, Proc. Natl. Acad. Sci. 106, 10302–10307 (2009)

P. Perlikowski, S. Yanchuk, O.V. Popovych, P.A. Tass, Phys. Rev. E 82, 036208 (2010)

O.V. Popovych, S. Yanchuk, P.A. Tass, Phys. Rev. Lett. 107, 228102 (2011)

M. Kantner, S. Yanchuk, Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 371, 20120470 (2013)

H. Paugam-Moisy, R. Martinez, S. Bengio, Neurocomputing 71, 1143–1158 (2008)

L. Appeltant, M.C. Soriano, G. van der Sande, J. Danckaert, S. Massar, J. Dambre, B. Schrauwen, C.R. Mirasso, I. Fischer, Nat. Commun. 2, 468 (2011)

R. Martinenghi, S. Rybalko, M. Jacquot, Y.K. Chembo, L. Larger, Phys. Rev. Lett. 108, 244101 (2012)

L. Appeltant, PhD thesis, Vrije Universiteit Brussel, Universitat de les Illes Balears (2012)

L. Larger, M.C. Soriano, D. Brunner, L. Appeltant, J.M. Gutierrez, L. Pesquera, C.R. Mirasso, I. Fischer, Opt. Express 20, 3241–3249 (2012)

D. Brunner, M. Soriano, C. Mirasso, I. Fischer, Nat. Commun. 4, 1364 (2013)

J. Schumacher, H. Toutounji, G. Pipa, In Artificial Neural Networks and Machine Learning: Proceedings of the 23rd International Conference on Artificial Neural Networks, 26–33 (Springer, Berlin, 2013)

H. Toutounji, J. Schumacher, G. Pipa, IEICE Proc. Ser. 1, 519–522 (2014)

L. Grigoryeva, J. Henriques, L. Larger, J.-P.P. Ortega, Sci. Rep. 5, 1–11 (2015)

B. Penkovsky, PhD thesis, Université Paris-Sud 11 (2017)

L. Larger, A. Baylón-Fuentes, R. Martinenghi, V.S. Udaltsov, Y.K. Chembo, M. Jacquot, Phys. Rev. X 7, 1–14 (2017)

K. Harkhoe, G. Van der Sande, Photonics 6, 124 (2019)

F. Stelzer, A. Röhm, K. Lüdge, S. Yanchuk, Neural Netw. 124, 158–169 (2020)

J.D. Hart, L. Larger, T.E. Murphy, R. Roy, Philos. Trans. R. Soc. A. Math. Phys. Eng. Sci. 377, 20180123 (2019)

F. Köster, S. Yanchuk, K. Lüdge, Preprint at arXiv:2009.07928 (2020)

F. Köster, D. Ehlert, K. Lüdge, Cognit. Comput. 1–8 (2020)

A. Argyris, J. Cantero, M. Galletero, E. Pereda, C.R. Mirasso, I. Fischer, M.C. Soriano, IEEE J. Sel. Top. Quantum Electron. 26, 1–9 (2020)

M. Goldmann, F. Köster, K. Lüdge, S. Yanchuk, Chaos Interdiscip. J. Nonlinear Sci. 30, 93124 (2020)

C. Sugano, K. Kanno, A. Uchida, IEEE J. Sel. Top. Quantum Electron. 26, 1–9 (2020)

J.D. Hart, D.C. Schmadel, T.E. Murphy, R. Roy, Chaos Interdiscip. J. Nonlinear Sci. 27, 121103 (2017)

L. Keuninckx, J. Danckaert, G. Van der Sande, Cognit. Comput. 9, 315–326 (2017)

F. Stelzer, A. Röhm, R. Vicente, I. Fischer, S. Yanchuk, Preprint at arXiv:2011.10115 (2020)

M. Hermans, J. Dambre, P. Bienstman, IEEE Trans. Neural Netw. Learning Syst. 26, 1545–1550 (2015)

E. Haber, L. Ruthotto, Inverse Probl. 34, 014004 (2017)

Y. Lu, A. Zhong, Q. Li, B. Dong, in Beyond Finite Layer Neural Networks: Bridging Deep Architectures and Numerical Differential Equations, Vol. 80. Proceedings of Machine Learning Research, 3276–3285 (PMLR, 2018)

R.T.Q. Chen, Y. Rubanova, J. Bettencourt, D.K. Duvenaud, In Proceedings of the 32nd International Conference on Neural Information Processing Systems, 6572–6583 (Curran Associates Inc., Red Hook, 2018)

C.M. Bishop, Pattern Recognition and Machine Learning (Springer, New York, 2006)

I. Goodfellow, Y. Bengio, A. Courville, Deep Learning (MIT Press, Cambridge, 2016)

Y. Lecun, Y. Bengio, G. Hinton, Nature 521, 436–444 (2015)

J. Schmidhuber, Neural Netw. 61, 85–117 (2015)

K. Ikeda, Opt. Commun. 30, 257–261 (1979)

S. Heiligenthal, T. Dahms, S. Yanchuk, T. Jüngling, V. Flunkert, I. Kanter, E. Schöll, W. Kinzel, Phys. Rev. Lett. 107, 234102 (2011)

S. Heiligenthal, T. Jüngling, O. D’Huys, D.A. Arroyo-Almanza, M.C. Soriano, I. Fischer, I. Kanter, W. Kinzel, Phys. Rev. E 88, 12902 (2013)

W. Kinzel, Philos. Trans. R. Soc. Math. Phys. Eng. Sci. 371, 20120461 (2013)

D. Rumelhart, G.E. Hinton, R.J. Williams, Nature 323, 533–536 (1986)

F.T. Arecchi, G. Giacomelli, A. Lapucci, R. Meucci, Phys. Rev. A 45, R4225–R4228 (1992)

G. Giacomelli, R. Meucci, A. Politi, F.T. Arecchi, Phys. Rev. Lett. 73, 1099–1102 (1994)

G. Giacomelli, A. Politi, Phys. Rev. Lett. 76, 2686–2689 (1996)

M. Bestehorn, E.V. Grigorieva, H. Haken, S.A. Kaschenko, Physica D 145, 110–129 (2000)

G. Giacomelli, F. Marino, M.A. Zaks, S. Yanchuk, EPL (Europhysics Letters) 99, 58005 (2012)

S. Yanchuk, G. Giacomelli, Phys. Rev. Lett. 112, 1–5 (2014)

S. Yanchuk, G. Giacomelli, Phys. Rev. E 92, 042903 (2015)

S. Yanchuk, L. Lücken, M. Wolfrum, A. Mielke, Discrete Continuous Dyn. Syst. A 35, 537–553 (2015)

I. Kashchenko, S. Kaschenko, Commun. Nonlinear Sci. Numer. Simul. 38, 243–256 (2016)

R. Berner, E. Schöll, S. Yanchuk, SIAM J. Appl. Dyn. Syst. 18, 2227–2266 (2019)

P. Ha, V. Mehrmann, BIT Numer. Math. 56, 633–657 (2016)

B. Unger, Electron. J. Linear Algebra 34, 582–601 (2018)

Acknowledgements

This work was funded by the “Deutsche Forschungsgemeinschaft” (DFG) in the framework of the project 411803875 (S.Y.) and IRTG 1740 (F.S.).

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Stelzer, F., Yanchuk, S. Emulating complex networks with a single delay differential equation. Eur. Phys. J. Spec. Top. 230, 2865–2874 (2021). https://doi.org/10.1140/epjs/s11734-021-00162-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1140/epjs/s11734-021-00162-5