Abstract

In this paper, we propose an approach to reinsurance optimization for a non-life multi-line insurer based on a simulation model that combines alternative reinsurance treaties. Within the Solvency II framework, the model maximises both the solvency ratio and the portfolio performance under user-defined constraints. Data visualisation aids the interpretation of the numerical results and, together with the concept of the Pareto frontier, supports the selection of the optimal reinsurance program. We show in the case study that the methodology can be easily restructured to deal with multi-objective optimization and, finally, we compare the programs selected under each proposed problem.

1 Introduction

The recent introduction of new risk-based reporting and solvency frameworks is encouraging non-life insurers to focus much more than previously on the risk, value, and capital management of their portfolios. These new risk-based frameworks offer more incentive to develop risk management strategies that align with risk tolerance and optimize economic value on a risk-adjusted basis. Among possible tools of risk mitigation, reinsurance is one of the key drivers for non-life insurers. As non-life insurance companies adapt their business models to modern risk management frameworks, which can include customised reinsurance solutions of increasing complexity, reinsurance companies are also adapting their offerings to meet the evolving needs of their non-life insurance clients. In this framework, the selection of the optimal reinsurance design is a relevant task. Indeed, as is well known, the choice of a desirable reinsurance program rests on a trade-off. On the one hand, treaties reduce the risk exposure of the insurer and hence stabilize the business (see, e.g., [3] for a recent overview). On the other hand, they affect the potential profits. Naturally, the optimal solution depends on the chosen objective and constraints. For instance, key elements are the criteria used to quantify the performance of the retained portfolio as well as the pricing rule applied by the reinsurer. In the literature, the analysis of optimal reinsurance treaties can be traced back to the seminal paper of [11] and has been an active research field both for academics and practitioners since then. Over the following decades, there were many contributions in the field, generalizing classical results for more intricate optimality criteria and/or more general premium principles. In particular, in [33], the authors show that stop-loss and truncated stop-loss are optimal solutions depending on the pricing rules of both the insurer and the reinsurer.
Optimal reinsurance under premium principles based on mean and variance of the reinsurer’s share of the total claim amount is analysed in [40]. In [35], the authors, assuming that the premium calculation principle is a convex functional and that some other quite general conditions are fulfilled, study the relationship between maximizing the adjustment coefficient and maximizing the expected utility of wealth for the exponential utility function, both with respect to the retained risk of the insurer. In [41], the authors deal with the general problem of optimal insurance contracts design in the presence of multiple insurance providers. New reinsurance premium principles that minimize the expected weighted loss functions and balance the trade-off between the reinsurer’s shortfall risk and the insurer’s risk exposure are provided in [15]. Prompted by the recent insurance regulatory developments aiming at the harmonisation of risk assessment procedures, considerable attention has turned to embedding value at risk (VaR) and tail value at risk (TVaR) risk measures in the study of optimal reinsurance models (see, e.g. [8, 36]). In this field, the optimal risk management strategy of an insurance company subject to regulatory constraints is investigated in [10]. An optimal stop-loss reinsurance contract under VaR and TVaR is instead studied in [13]. Results are then extended in [14] providing the optimal ceded loss functions in a class of increasing convex ceded loss functions. The problem has been reexamined in [17] by introducing a simpler and more transparent approach based on intuitive geometric arguments. In [32], explicit forms of optimal contracts are derived in the case of absolute deviation and truncated variance risk measures. These interesting approaches mainly focus on the identification of the optimal program by minimizing specific tail risk measures under different premium principles.

We approach this topic in a different way, by introducing a flexible and efficient multi-objective simulation-based optimization framework to better represent the reward-to-risk trade-off between portfolio performance and risks for a multi-line non-life insurance company under the Solvency II Directive. In particular, we aim at identifying optimal reinsurance programs that jointly maximise the solvency ratio and the profitability under specific constraints. Additionally, a Pareto frontier is used to discard inefficient reinsurance treaties and, via the definition of the unique minimal convex hull, we further restrict the set of optimal strategies that the company could pursue to reach its objectives. It is noteworthy that the use of the Pareto frontier on optimal reinsurance from a risk-sharing perspective has already been analysed in the literature. In [12], the authors analyse the necessary and sufficient conditions for a reinsurance contract to be Pareto-optimal and characterize all Pareto-optimal reinsurance contracts under more general model assumptions. Explicit forms of the Pareto-optimal reinsurance contracts are obtained under the expected value premium principle. A set of Pareto-optimal insurance contracts is studied in [6], where the risk is covered by multiple insurance companies. The authors in [37] study the Pareto-optimal reinsurance policies, where both the insurer’s and the reinsurer’s risks and returns are considered. The risks of the insurer and the reinsurer, as well as the reinsurance premium, are determined by specific distortion risk measures with different distortion operators. Pareto optimality of insurance contracts is also explored in [5]. However, our proposal differs from this existing literature by providing an approach that accounts for both the empirical characteristics of the insurance company and the framework designed by the Solvency II directive.
Additionally, this methodology appears as a suitable tool to select optimal reinsurance programs considering a wide range of opportunities. Moreover, our approach is more similar in spirit to that of [4], where the optimal risk position of an insurance group is explored by considering intra-group transfers. In particular, the authors obtain an optimal share of premiums and liability transfers in order to minimize the total amount of the technical provisions and minimum capital requirement, based on the methodology provided by Quantitative Impact Study 5. Also in this case, we differ from this approach because our aim is to provide a multi-objective optimization framework that considers both risk and return. Moreover, our proposal deals with a possible partial internal model, which makes it possible to overcome some of the limitations implied by the standard formula for non-life underwriting risk (e.g. lognormal assumption, absence of size factor, safety loadings neglected in the computation of capital requirement, etc.).

Multi-objective optimization in a reward-to-risk framework has been mainly explored in the field of portfolio selection (see, e.g., [24, 50]). In the reinsurance field, a novel approach is introduced in [44] to find optimal combinations of different types of reinsurance contracts. The authors introduce a mean-variance criterion to solve this task and to compare alternative multi-objective evolutionary algorithms (MOEAs). In contrast to most MOEAs designed to solve multi-objective reinsurance optimization problems that rely on population metaheuristics, [45] proposes a different evolutionary strategy that uses the mutation operator as the main search mechanism. In this case the authors aim at finding the optimal combination of treaties that minimizes both the loading of the reinsurance company and the VaR of the retained losses for a specific business line. Although our proposal also deals with multi-objective optimization, we embed the problem in a Solvency II framework, we provide a higher degree of flexibility that makes it possible to test alternative and complex reinsurance programs (such as those with reinstatements or umbrella coverages) and we also treat multi-line insurance companies. In particular, the proposed optimization can be seen as a tool to explore and deeply understand the main trade-offs involved in the construction of a reinsurance program. A numerical analysis has been developed in order to test the flexibility of our proposal on a multi-line non-life insurance company. The approach proved to be effective in capturing the effects of simple or articulated reinsurance programs on both risk measure and profitability. Once the gross of reinsurance scenario is simulated, a very large set of treaty combinations can be tested in short computational times, making the approach affordable in practice.
Then, via the unique minimal convex hull, we limit the optimal strategies that the company could pursue to a restricted number, while the data visualisation provides a significant support for the comparison of the results.

The paper is organised as follows. Section 2 describes the general framework we deal with and introduces the risk and reward indicators considered. Furthermore, the multi-objective optimization problem is provided. The methodological environment used to model the aggregate claim amount distribution, the dependence between lines of business and the characteristics of the reinsurance programs involved are described in Sect. 3. In Sect. 4 we perform an empirical analysis of the multi-objective portfolio selection problems and discuss the results. We also report the pseudo-code of the developed algorithms, so that the numerical results are fully reproducible. In particular, in Sects. 4.4 and 4.5 the specific effects of reinstatements and umbrella coverages are explored. Conclusions follow.

2 Model

We consider here a multi-line non-life insurance company with L lines of business (LoBs) at the end of time t and we focus only on the premium risk component of the capital requirement.

Focusing on a 1-year time-horizon, as prescribed by the Solvency II directive for capital assessment [28], we define the random variable (r.v.) \(U_{t+1}\), that denotes the amount of own funds at time \(t+1\) as:

where \(u_{t}\) is the deterministic amount of own funds at the end of time t. The term \((b_{i,t+1}-X_{i,t+1}-e_{i,t+1})\) is the gross technical profit of LoB i, defined as the difference between next-year earned premiums \(b_{i,t+1}\) and incurred aggregate claims amount \(X_{i,t+1}\) plus expenses \(e_{i,t+1}\). It is worth pointing out that both next-year premiums and expenses are here assumed deterministic. This assumption only serves to simplify the model: the proposed approach also holds when both amounts are treated as random variables. Finally, in formula (1) the technical profit earns a financial return at a rate j, where cash-in and cash-out are assumed uniformly distributed over the year. The financial return is also considered deterministic here, in order to exclude the market risk component from the valuation.
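
Collecting the terms just described, formula (1) can be written in the following form. The half-year factor reflects the uniform distribution of cash flows over the year; that the opening own funds \(u_{t}\) accrue at the full annual rate j is an assumption made here, not something stated in the text.

\[ U_{t+1} = u_{t}\,(1+j) + \sum _{i=1}^{L}\left( b_{i,t+1}-X_{i,t+1}-e_{i,t+1}\right) (1+j)^{1/2} \]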

As is well known, the insurance company can enter into a reinsurance contract in order to mitigate its risk (see, e.g. [16, 21]) for the year \(t+1\) and we assume that, for each LoB, the company has at its disposal a finite number (denoted with r) of treaties in the market. In other words, for each LoB, the company has a number \(r+1\) of possible choices, given by the r available alternative treaties and by the possibility that no reinsurance is selected by the company.

Hence, given L LoBs, we have \((r+1)^{L}\) possible choices for the company. To this end, we introduce a set \(\mathcal {M}=\left\{ m_{1}, \ldots ,m_{(r+1)^{L}} \right\}\), with \((r+1)^{L}\) elements, where each element m indicates a possible choice (i.e. which treaty has been selected for each LoB).
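
As a minimal sketch of how the set \(\mathcal {M}\) can be enumerated, the snippet below builds every combination of one choice per LoB. The treaty labels and the function name are hypothetical, and `None` is used here to stand for the "no reinsurance" option.

```python
from itertools import product

def enumerate_programs(treaties_per_lob):
    """Return every program m as a tuple with one choice per LoB.

    `None` stands for "no reinsurance", so each LoB has r + 1 options.
    """
    options = [[None] + list(treaties) for treaties in treaties_per_lob]
    return list(product(*options))

# Hypothetical labels: r = 2 treaties available for each of L = 3 LoBs.
treaties = [["QS", "XL"]] * 3
programs = enumerate_programs(treaties)
print(len(programs))  # (r + 1) ** L = 27
```

The count grows exponentially in L, which is why the discretisation of the treaty parameters matters for computational times.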

Therefore, we can rewrite the own funds defined in formula (1) as:

where the new component in formula (2) considers the effect on the technical profit of the reinsurance treaties selected by the insurance company and, therefore, depends on m. In particular, it is defined as the difference between the amount of premiums paid to the reinsurance company \(b^{r}_{i,t+1}\) and the aggregate claim amount paid by the reinsurer \(X^{r}_{i,t+1}\) plus the commissions \(C^{r}_{i,t+1}\) paid by the reinsurer in proportional treaties.
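
Under the description above, formula (2) can be written in the following form, where the dependence of the ceded quantities on the selected program m is made explicit. As a labelled assumption, the opening own funds \(u_{t}\) are taken to accrue at the full annual rate j, and the half-year factor on the technical profit follows from cash flows being uniformly distributed over the year.

\[ U_{t+1}(m) = u_{t}\,(1+j) + \sum _{i=1}^{L}\left[ \left( b_{i,t+1}-X_{i,t+1}-e_{i,t+1}\right) - \left( b^{r}_{i,t+1}(m)-X^{r}_{i,t+1}(m)-C^{r}_{i,t+1}(m)\right) \right] (1+j)^{1/2} \]

The sign convention agrees with the retained claims \(X_{i,t+1}-X^{r}_{i,t+1}\) used for the net aggregate amount: ceded premiums reduce the profit, while recoveries and commissions increase it.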

Given this framework, our aim is to select the optimal combination \(m^{*}\) for the insurance company by considering the effects on both the profitability and the risk. The aim is the definition of an optimization criterion that takes into account and connects the most crucial performance indexes and the most realistic assumptions altogether. Hence, to evaluate the effect we start considering two classical indicators.

Concerning risk mitigation, the simplest solution is to detect the mix of reinsurance contracts m that minimizes the coefficient of variation \(CV(S^{n}_{t+1}(m))=\dfrac{\sigma (S^{n}_{t+1}(m))}{\mathbb {E}(S^{n}_{t+1}(m))}\) of the r.v. \(S^{n}_{t+1}(m)=\sum _{i=1}^{L}(X_{i,t+1}-X^{r}_{i,t+1})\). Although the analysis of the effects of a treaty on the volatility is crucial and could also be of interest for a direct comparison with the risk mitigation factors defined by the Solvency II standard formula (see, e.g., the non-proportional factor defined in [29] and analysed in [18, 19]), the coefficient of variation gives only a partial view of the risk profile of an insurer. For instance, it does not consider the effect on the skewness of the distribution or the presence of possible sliding commissions in proportional treaties.

Hence, to have a more complete view of the impacts in terms of risk mitigation, we focus on the solvency position of the insurance company by searching for the combination m that maximizes the solvency ratio \(sr_{t}(m)=\dfrac{u_{t}}{scr_{t}(m)}\). The denominator considers the premium risk solvency capital requirement (\(scr_{t}\)) derived by a partial internal model and it is computed as:

where we apply a VaR evaluated at the 99.5% confidence level, as prescribed by the Solvency II directive [28]. The approach can be easily adapted by varying the risk measure or the confidence level, for instance using a TVaR at 99% as in the Swiss Solvency Test (see [27, 30]).

In terms of profitability, our purpose is to maximize the expected ROE defined as:

Therefore, we define a multi-objective portfolio optimization problem with specific constraints. These constraints reflect the fact that the Solvency II Directive requires a minimum capital to be held by EU-based insurance companies in order to guarantee a target level for the ruin probability over a specified period. Additionally, a minimum level of profitability could be required by the stakeholders. Hence, we define the problem as follows:

\[ \max _{m\in \mathcal {M}}\left\{ sr_{t}(m),\; \mathbb {E}(ROE_{t+1}(m))\right\} \tag{3} \]

subject to:

\[ \mathbb {E}(ROE_{t+1}(m))\ge \rho , \qquad sr_{t}(m)\ge \xi , \qquad CV(S^{n}_{t+1}(m))\le \chi \tag{4} \]

where \(\rho \in [0,\infty )\) and \(\xi \in [1,\infty )\) are the minimum expected profitability and the minimum solvency ratio defined by the company, while \(\chi \in (0,\infty )\) is the maximum level of volatility that the insurance company can tolerate. For instance, the insurer could require that the net coefficient of variation be lower than or equal to the net volatility factor provided by the standard formula. Alternatively, a reduction of the coefficient of variation when moving from the gross to the net of reinsurance position could be imposed.

The choice of considering two risk measures simultaneously, \(sr_{t}(m)\) and \(CV(S^{n}_{t+1}(m))\), is mainly driven by their different meaning: the solvency ratio mainly captures the risk behaviour in extreme cases (a 1-in-200-year event, according to the 99.5% confidence level), while the coefficient of variation also describes the volatility around the expected losses.
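
To illustrate how the three indicators can be read off a set of simulated scenarios, the following sketch computes them empirically. Two points are assumptions made only for this example: the capital requirement is taken as the VaR of the one-year loss \(u_{t}-U_{t+1}(m)\), and the expected ROE as \(\mathbb {E}(U_{t+1}(m)-u_{t})/u_{t}\). The function names are hypothetical.

```python
import math
import statistics

def empirical_quantile(values, p):
    """Smallest order statistic with at least a fraction p of the sample at or below it."""
    ordered = sorted(values)
    k = max(math.ceil(p * len(ordered)) - 1, 0)
    return ordered[k]

def indicators(u_t, own_funds_sims, net_claims_sims, level=0.995):
    """Solvency ratio, expected ROE and net CV from simulated scenarios.

    Assumption: the SCR is the `level`-VaR of the one-year loss u_t - U_{t+1}.
    """
    losses = [u_t - u for u in own_funds_sims]
    scr = empirical_quantile(losses, level)
    sr = u_t / scr
    expected_roe = (statistics.fmean(own_funds_sims) - u_t) / u_t
    cv = statistics.pstdev(net_claims_sims) / statistics.fmean(net_claims_sims)
    return sr, expected_roe, cv
```

A program m is feasible when the returned triple satisfies the three thresholds \(\xi\), \(\rho\) and \(\chi\) respectively.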

The concept of Pareto frontier is then introduced to discard inefficient reinsurance treaties from the optimization. Given the set of feasible treaties \(\mathcal {M}^{*} \subseteq \mathcal {M}\) that satisfy constraints (4), we define the set \(\mathcal {O}^*\subseteq \mathcal {M}^*\) of reinsurance treaties that are not strictly dominated by any other treaty in \(\mathcal {M}^*\). According to the problem (3), a feasible treaty \(m^{'}\in \mathcal {M}^*\) is said to (Pareto) dominate another solution \(m^{''} \in \mathcal {M}^*\) if

\[ sr_{t}(m^{'})\ge sr_{t}(m^{''}) \quad \text {and} \quad \mathbb {E}(ROE_{t+1}(m^{'}))\ge \mathbb {E}(ROE_{t+1}(m^{''})) \tag{5} \]

with at least one strict inequality satisfied. The use of the Pareto frontier has a significant impact on reducing the number of considered reinsurance treaties when passing from \(\mathcal {M}^{*}\) to \(\mathcal {O}^{*}\).
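
The dominance rule can be sketched as a simple filter over the feasible programs. The code below is an illustrative quadratic-time version, with hypothetical (solvency ratio, expected ROE) pairs; it is not the implementation used for the case study.

```python
def dominates(a, b):
    """True if point a = (sr, roe) Pareto-dominates b under joint maximisation."""
    return a[0] >= b[0] and a[1] >= b[1] and (a[0] > b[0] or a[1] > b[1])

def pareto_front(points):
    """Keep the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]

# Hypothetical (solvency ratio, expected ROE) pairs for feasible programs.
feasible = [(1.2, 0.14), (1.5, 0.12), (1.4, 0.11), (1.8, 0.10), (1.8, 0.09)]
print(pareto_front(feasible))
# [(1.2, 0.14), (1.5, 0.12), (1.8, 0.1)]
```

Here (1.4, 0.11) is discarded because (1.5, 0.12) is better on both indicators, and (1.8, 0.09) because (1.8, 0.10) offers the same solvency ratio with a higher return.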

Furthermore, we introduce the concept of convex hull to detect the reinsurance treaties with the most interesting risk-return trade-offs. A convex hull of a given set \(\mathcal {Y}\) is defined as the unique minimal convex set containing \(\mathcal {Y}\). In particular, given that the problem (3) is a double maximization, we compute on \(\mathcal {O}^{*}\) the so-called upper convex hull, which is composed of the upward-facing points only. We define the set of treaties belonging to the upper convex hull as \(\mathcal {C}^{*}\subseteq \mathcal {O}^{*}\). Under problem (3), the upper convex hull is characterized by an interesting property: given \(\mathcal {O}^{*}\), let \(\mathcal {C}^{*}=\left\{ m_{1}, \ldots ,m_{q}\right\}\) (with \(q\ge 2\)) be its upper convex hull, where \(sr_{t}(m_{1})< \cdots < sr_{t}(m_{q})\); then for \(s=1,\ldots ,q-1\)

\[ m_{s+1}=\underset{m\in \mathcal {O}^{*}}{\arg \max }\; \frac{\mathbb {E}(ROE_{t+1}(m))-\mathbb {E}(ROE_{t+1}(m_{s}))}{sr_{t}(m)-sr_{t}(m_{s})} \tag{6} \]

subject to the constraint \(sr_{t}(m)> sr_{t}(m_{s})\). In other words, when we move from a program \(m_{s}\) of the convex hull to another Pareto efficient program with a greater solvency ratio, the best trade-off between \(sr_{t}(m)\) and \(\mathbb {E}(ROE_{t+1}(m))\) is achieved by selecting the next program \(m_{s+1}\) on the convex hull. Trivially, if the upper convex hull is linear, every \(m_{w}\) with \(s<w\le q\) also returns the best trade-off, but at a different scale. In the numerical part we deal with problem (3): we consider a set \(\mathcal {M}\) of alternative reinsurance programs, identify the set of feasible solutions \(\mathcal {M}^{*}\), and then use the concept of Pareto frontier and Eq. (6) to identify strategic reinsurance programs for the insurance company. In particular, we computed the convex hull with the chull function from the built-in R package grDevices, slightly modified in order to return either the upper or the lower convex hull.
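
The case study relies on R's chull; purely as an illustration, the sketch below swaps in Andrew's monotone chain algorithm, written in Python, to extract the upper convex hull of a Pareto set and to check the property stated in Eq. (6). The data points and function names are hypothetical.

```python
def cross(o, a, b):
    """Cross product of OA and OB; negative means a clockwise (right) turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def upper_hull(points):
    """Upper convex hull of 2-D points, returned by increasing first coordinate."""
    hull = []
    for p in sorted(set(points)):
        # Drop the last vertex while it lies on or below the chord to p.
        while len(hull) >= 2 and cross(hull[-2], hull[-1], p) >= 0:
            hull.pop()
        hull.append(p)
    return hull

def slope(a, b):
    """Change in expected ROE per unit of additional solvency ratio."""
    return (b[1] - a[1]) / (b[0] - a[0])

# Hypothetical Pareto set of (solvency ratio, expected ROE) pairs.
front = [(1.2, 0.14), (1.4, 0.135), (1.5, 0.12), (1.8, 0.10), (2.0, 0.04)]
hull = upper_hull(front)

# Eq. (6): the next hull vertex maximises the slope among programs with higher sr.
for s in range(len(hull) - 1):
    candidates = [m for m in front if m[0] > hull[s][0]]
    best = max(candidates, key=lambda m: slope(hull[s], m))
    assert best == hull[s + 1]
```

In this example the program (1.5, 0.12) is Pareto efficient but lies below the chord joining its neighbours, so it is excluded from \(\mathcal {C}^{*}\).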

Note that the optimization problem can be easily restructured by switching \(\mathbb {E}(ROE_{t+1}(m))\) or \(sr_{t}(m)\) with \(CV(S^{n}_{t+1}(m))\) in both (3) and (5). Of course the optimization target in (3) would be the minimization of \(CV(S^{n}_{t+1}(m))\), and the condition in (5) would be \(CV(S^{n}_{t+1}(m^{'}))\le CV(S^{n}_{t+1}(m^{''}))\), since a dominating program \(m^{'}\) must have the lower coefficient of variation. In addition, by replacing \(\mathbb {E}(ROE_{t+1}(m))\) with \(CV(S^{n}_{t+1}(m))\) in (6), we need to determine the lower convex hull instead of the upper one when passing from \(\mathcal {O}^{*}\) to \(\mathcal {C}^{*}\), and formula (6) becomes a minimization. These alternative problems will also be analysed in the numerical part.

3 Methodological environment

To solve problem (3)–(4), we introduce a methodological environment. In particular, a classical frequency-severity model (see, e.g., [9, 23]) is applied to model the distribution of gross aggregate claims amount \(X_{i,t+1}\) of each LoB. In other words, we can define the r.v. \(X_{i,t+1}\) as:

\[ X_{i,t+1}=\sum _{h=1}^{K_{i,t+1}}Z_{h,i,t+1} \tag{7} \]

where

- \(K_{i,t+1}\) is the r.v. claim counts. As usually provided in the literature (see [23]), the number of claims distribution is the Poisson law (\(K_{i,t+1} \sim Poi(n_{i,t+1} \cdot Q_{i,t+1})\)), with an expected number of claims \(n_{i,t+1}\) affected by a structure variable \(Q_{i,t+1}\).

- \(Q_{i,t+1}\) is a mixing variable (or contagion parameter) (see [34, 42]) and it describes the parameter uncertainty on the number of claims. It is assumed that \(\mathbb {E}(Q_{i,t+1})=1\) and that the random variable is defined only for positive values. In the numerical analysis, a Gamma distribution will be assumed for \(Q_{i,t+1}\).

- \(Z_{h,i,t+1}\) is the amount of claim h (severity). As usual, \(Z_{1,i,t+1},Z_{2,i,t+1}, \ldots ,\) are mutually independent and identically distributed random variables, each independent of the number of claims \(K_{i,t+1}\).

In order to focus only on premium risk, we are considering next year incurred losses that can be covered by using earned premiums. We define earned premiums \(b_{i,t+1}= \mathbb {E}\left[ X_{i,t+1}\right] \left( 1+\lambda _{i} \right) +\mathbb {E}\left[ e_{i,t+1}\right]\), where \(\lambda _{i}\) is the safety loading coefficient. It is noteworthy that we are excluding next-year payments for claims already occurred at the valuation date because such random variable is treated in reserve risk evaluation. Furthermore, previous assumptions are based on a mixed compound Poisson process that is a classical methodology used in literature and in practice to quantify the capital requirement for premium risk (see, e.g. [23]).
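
A minimal sketch of the mixed compound Poisson simulation for a single LoB is given below, using only the Python standard library. The parameter values are hypothetical and deliberately small; the Poisson sampler (Knuth's multiplication method) is a choice made for this example and is adequate only for moderate expected claim counts.

```python
import math
import random

def poisson(rng, lam):
    """Knuth's multiplication sampler; suitable for moderate `lam` only."""
    threshold, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1

def simulate_claims(rng, n, sigma_q, mu, sigma):
    """One scenario for a LoB: the list of individual claim amounts.

    Q ~ Gamma with mean 1 and standard deviation sigma_q,
    K | Q ~ Poisson(n * Q), Z ~ LogNormal(mu, sigma).
    """
    q = rng.gammavariate(1.0 / sigma_q**2, sigma_q**2)  # shape, scale
    k = poisson(rng, n * q)
    return [rng.lognormvariate(mu, sigma) for _ in range(k)]

rng = random.Random(2024)
scenarios = [simulate_claims(rng, n=20, sigma_q=0.1, mu=0.0, sigma=0.5)
             for _ in range(5000)]
aggregate = [sum(claims) for claims in scenarios]  # X_{i,t+1} per scenario
```

Keeping the individual claims of each scenario, rather than only their sum, is what later allows per-risk treaties to be applied to the same simulations.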

Total claims amount gross of reinsurance, \(S^{g}_{t+1}=\sum _{i=1}^{L}X_{i,t+1}\), for the whole portfolio is then computed by taking into account the dependence between lines of business. Since we deal with \(L>2\) and we also want to consider tail dependencies, which can be crucial to fully capture the benefit of reinsurance contracts, we apply Vine copulas (see, e.g., [20, 38]). As is well known, thanks to Sklar’s theorem (see, e.g., [25, 48, 49]), the modelling of the marginal distributions can be conveniently separated from the dependence modelling in terms of the copula. However, while a rich variety of copula families is available and well investigated for the bivariate case (see [39, 43]), standard multivariate families such as Archimedean copulas can lack the flexibility needed to model accurately the dependence among a larger number of variables. Vine copulas do not suffer from this limitation, because they build flexible dependence structures out of bivariate copulas. Therefore, the copula aggregation is divided into blocks of bivariate aggregations, in such a way that each block can be characterized by a different choice of copula and parameters. In other words, an aggregation tree is defined (see, e.g., [46]). Vines thus combine the advantages of multivariate copula modelling, that is the separation of marginal and dependence modelling, and the flexibility of bivariate copulas (see [2] for a description of statistical inference techniques for the two classes of canonical (C-) and D-vines). C- and D-vine copulas have been very successful in many applications, mainly, but not exclusively, in risk management, finance and insurance. For instance, in [1, 31] the authors showed the good performance of vine copulas compared to alternative multivariate copulas.

Very briefly, C-vines are characterized by a unique node that is connected to all the others. The structure is star-shaped and, for instance, in four dimensions we have three trees. Note that, given the starting configuration of the first tree, there is not a unique way in which the following trees can be structured. On the other hand, D-vine copulas are characterized by a linear structure. Unlike the C-vine, once the first tree is chosen, the following trees are uniquely defined. In fact, by setting the starting sequence of nodes, there is only one way to aggregate. Trivially, in a 3-dimensional case, there is no difference between a C-vine and a D-vine (for recent overviews of the vine methodology, see [20, 22]).

Additionally, we define the set of possible treaties that the insurance company has at its disposal on the market. In particular, we assume for the generic LoB i that the following contracts are available for the year \(t+1\):

1. a Quota Share treaty with a ceding percentage \(\alpha _{i}\) and reinsurance commissions \(C^{r}_{i}\);

2. an Excess of Loss per risk with deductible \(D_{i}\) and limit \(L_{i}\), denoted with \(L_{i}\) xs \(D_{i}\). The reinstatements related to the layer are assumed free and unlimited for now. Under this type of reinsurance, for each claim h of amount \(Z_{h,i}\) the reinsurer pays an amount equal to \(\min \left( \max \left( Z_{h,i}-D_{i},0\right) ,L_{i}\right)\);

3. a combined treaty, where the Quota Share treaty is applied after the Excess of Loss.
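
The three contract types can be sketched on a list of individual claims as follows. The function names and figures are hypothetical; reinstatements are treated as free and unlimited, and in the combined treaty the Quota Share acts on the claims net of the Excess of Loss recoveries.

```python
def xl_recovery(claim, deductible, limit):
    """Reinsurer's payment on one claim under a `limit xs deductible` layer."""
    return min(max(claim - deductible, 0.0), limit)

def net_aggregate(claims, deductible=None, limit=None, alpha=0.0):
    """Aggregate retained claims for one LoB and one scenario.

    The Excess of Loss (if any) is applied claim by claim first; the
    Quota Share then cedes a share `alpha` of what remains.
    """
    if deductible is not None:
        retained = sum(z - xl_recovery(z, deductible, limit) for z in claims)
    else:
        retained = sum(claims)
    return (1.0 - alpha) * retained

# Hypothetical scenario: three claims, layer 2,000,000 xs 1,000,000, 20% ceded.
claims = [300_000.0, 1_200_000.0, 5_000_000.0]
print(net_aggregate(claims, deductible=1_000_000, limit=2_000_000, alpha=0.2))
```

In this scenario the layer recovers 200,000 on the second claim and its full limit of 2,000,000 on the third, leaving 4,300,000 before the Quota Share is applied.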

To generate the set of available reinsurance programs \(\mathcal {M}\), the mathematical domain of the reinsurance parameters has been discretised for both Quota Share and Excess of Loss treaties. This operation is required in order to deal with a finite number of programs m. An adequate discretisation helps providing realistic reinsurance programs, while keeping relatively small computational times.

To price Excess of Loss treaties the standard deviation pricing principle has been applied, where for the generic LoB i the reinsurance premium \(b^{r,xol}_{i,t+1}\) is equal to

where \(X^{r,xol}_{i,t+1}\) is the aggregate claim amount paid by the reinsurer due to the Excess of Loss treaty in force. As well known, reinsurance treaties can be priced using alternative methodologies and this topic has been widely explored in the literature. The aim of this part is to introduce a general structure for the development of the case study. Obviously the same approach can be easily applied considering an alternative pricing principle.
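
As an illustration of the standard deviation principle, the premium can be estimated from the simulated ceded amounts as their mean plus a loading proportional to their standard deviation. The name `loading` and its value are hypothetical placeholders, not the calibration adopted in the case study.

```python
import statistics

def sd_principle_premium(ceded_sims, loading):
    """Mean of the simulated ceded claims plus `loading` times their standard deviation."""
    return statistics.fmean(ceded_sims) + loading * statistics.pstdev(ceded_sims)

# Hypothetical ceded aggregate amounts X^{r,xol} over four scenarios.
ceded = [0.0, 0.0, 0.0, 4.0]
premium = sd_principle_premium(ceded, loading=0.5)
```

Because high layers are hit rarely, their ceded amounts have a small mean but a comparatively large standard deviation, so the loading term tends to dominate the price as the deductible rises.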

Since in the combined treaties the Quota Share is applied after the Excess of Loss, the proportional treaty is characterized by the following premium

The previous formula is motivated by the fact that we expect a lower Quota Share premium if an Excess of Loss already mitigates part of the underlying risk. Trivially, the overall reinsurance premium for the generic LoB i is defined as \(b^{r}_{i,t+1}=b^{r,xol}_{i,t+1}+b^{r,qs}_{i,t+1}\). Obviously in case only Quota Share is in force, we apply formula (9) assuming \(b^{r,xol}_{i,t+1}=0\).

4 Numerical application

4.1 General framework and gross of reinsurance results

In this section, we test the proposed approach using data of an Italian insurance company. Data have been modified for confidentiality reasons. For the sake of simplicity, we assume that the company operates only in the following LoBs:

1. Motor third party liability (MTPL);

2. General third party liability (GTPL);

3. Motor own damage (MOD).

The main parameters of the company are reported in Table 1. In particular, for each LoB i, the single claim amount \(Z_{h,i,t+1}\) is distributed according to a \(LogNormal(\mu _{i,t+1},\sigma _{i,t+1})\) and each claim is covered up to a policy limit \(pl_{i,t+1}\). Some key parameters, such as the standard deviation of the structure variable \(\sigma (Q_{i,t+1})\), the safety loading and the expense ratio \(ER_{i,t+1}\), have been calibrated using market data (see [7]).

In terms of annual tariff premiums (last column in Table 1), this insurer is approximately the tenth-largest Italian insurer in each LoB. Therefore, given the distribution of premiums across the market, the company can be regarded as a medium-sized insurer. Looking at the parameters, we note that MTPL is the largest LoB in the portfolio but, due to high competitiveness in the market, its safety loading coefficient is only \(1.2\%\). GTPL is instead characterized by a larger and more volatile claim-size distribution and, at the same time, it is the least diversified LoB due to a low value of \(E(K_{GTPL,t+1})\). For MOD, a higher profitability is expected notwithstanding a lower relative volatility.

A Monte Carlo simulation procedure is initially performed using the parameters reported in Table 1. Particular care is given to storing the simulated large claims, which will be fundamental for the reinsurance applications in the next steps. To this end, Algorithm 1 describes the procedure followed to generate the aggregate claim amount for each LoB.

Thanks to the simulation structure provided by Algorithm 1, the aggregate claims amount \(X^{n,xol}_{i,t+1}\) net of a generic Excess of Loss \(L_{i}\) xs \(D_{i}\) can be easily computed on the same simulations by applying Algorithm 2.

The main characteristics for each LoB are listed in Table 2. Additionally, the gross capital requirement for premium risk is computed, for the moment, separately for each LoB.

The lowest coefficient of variation is observed for MTPL, due to both a higher diversification and the lowest variability of the structure variable. However, the marginal capital requirement is the highest among the three lines because of the relevance in the portfolio. On the other hand, in relative terms, the highest capital is absorbed by GTPL.

Since empirical evidence of dependence between LoBs is not available, the aggregation is achieved through the use of a Vine copula, where the parameters are calibrated according to the correlation matrix provided by the Solvency II Commission Delegated Regulation [29] (see Table 3).

In particular, since we deal with a 3-dimensional scenario, a C-Vine copula is equivalent to a D-Vine one. The chosen aggregation structure is graphically represented with the following trees. Each pair is aggregated by applying a mirror Clayton copula (see [46]), whose parameters have been derived by exploiting the well-known relation between the correlation coefficient and Kendall’s tau.
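
The text does not spell out which relation between the correlation coefficient and Kendall's tau is used. As an assumption for illustration, the sketch below takes \(\tau =\frac{2}{\pi }\arcsin (\rho )\), which is exact for elliptical dependence, and then inverts the Clayton identity \(\tau =\theta /(\theta +2)\); the latter also holds for the mirror (survival) Clayton copula, since mirroring leaves Kendall's tau unchanged. The input value is illustrative.

```python
import math

def kendall_tau_from_correlation(rho):
    """tau = (2 / pi) * arcsin(rho); an assumed mapping, exact for elliptical copulas."""
    return 2.0 / math.pi * math.asin(rho)

def clayton_theta_from_tau(tau):
    """Invert tau = theta / (theta + 2), i.e. theta = 2 * tau / (1 - tau)."""
    return 2.0 * tau / (1.0 - tau)

rho = 0.5  # illustrative correlation between two LoBs
tau = kendall_tau_from_correlation(rho)   # approximately 1/3
theta = clayton_theta_from_tau(tau)       # approximately 1
```

Working through Kendall's tau rather than the linear correlation keeps the calibration invariant to the (skewed) marginal distributions of the aggregate claims.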

Recalling the results of Algorithm 1, we need to reorder the simulation structure according to the copula sample, where the gross of reinsurance aggregate claims amount \(X_{i,t+1}^g\) is used as ordering index. The Vine copula simulation algorithm is implemented in the R package VineCopula (see [47]).
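
The reordering step can be sketched as a rank-matching operation: within each LoB, the simulated scenarios are sorted by their gross aggregate amount and then placed so that their ranks coincide with the ranks of the corresponding column of the copula sample. The function name and the record layout (gross amount, stored large claims) are hypothetical.

```python
def reorder_by_copula(records, copula_column, key=lambda record: record):
    """Rearrange `records` so that their ranks under `key` match the ranks of `copula_column`."""
    ordered = sorted(records, key=key)
    # positions[rank] is the scenario index holding the rank-th smallest copula draw.
    positions = sorted(range(len(copula_column)), key=copula_column.__getitem__)
    result = [None] * len(ordered)
    for rank, position in enumerate(positions):
        result[position] = ordered[rank]
    return result

# Hypothetical LoB with three scenarios: (gross aggregate amount, stored large claims).
records = [(5.0, []), (1.0, []), (9.0, [4.0])]
u_column = [0.2, 0.9, 0.5]  # one column of a copula sample
print(reorder_by_copula(records, u_column, key=lambda r: r[0]))
# [(1.0, []), (9.0, [4.0]), (5.0, [])]
```

Moving whole records, rather than only the aggregate amounts, keeps the stored large claims attached to their scenario, so that per-risk treaties can still be applied after the aggregation.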

Table 4 reports the main characteristics of the distribution of aggregate losses for the whole portfolio. Furthermore, the total capital requirement is equal to roughly 78 million euro, with a saving of approximately 7% because of diversification between LoBs. It is worth pointing out that the solvency ratio is roughly 117% and the expected ROE is around 14%. The financial return rate j is assumed equal to 0 for simplicity.

Starting from the framework gross of reinsurance reported in Table 4, we test alternative reinsurance strategies that the insurance company can pursue. To do this, we set the constraints (4) in problem (3) as follows:

In this specific setting, the aim of the insurance company is to obtain a significant increase of the solvency ratio because of the reinsurance. Additionally, the constraints assume a minimum level of profitability equal to 10% and a total net volatility not higher than the gross one. The set \(\mathcal {M}\) is composed of all possible combinations obtained by covering the risk with an Excess of Loss treaty, a Quota Share or a combined treaty based on an interplay between a proportional and a non-proportional treaty (with a Quota Share after the Excess of Loss). Both the retention of the Quota Share and the limits of the layer covered by the Excess of Loss have been discretised in order to deal with a finite number of possible reinsurance treaties. In particular, the following choices have been made:

- for the Quota Share treaties, the retention \(1-\alpha _{i}\) varies in the interval [0; 1] with a step of \(5\%\), so as to consider retentions that are likely to be negotiated between insurer and reinsurer;

- for the Excess of Loss treaties, in this initial analysis we do not consider subsequent layers, reinstatements, aggregate limits or aggregate deductibles. This choice has been made in order to reduce the computational times and the size of \(\mathcal {M}\). However, Sect. 4.4 focuses specifically on consecutive layers and reinstatements;

- the deductible \(D_{i}\) is defined in the range \(\left[ 500,000;2,000,000\right]\) with a step of 250,000. The lower limit has been set to fix a reasonable attachment point for the treaty and to prevent the layer from over-fitting the simulations by protecting extreme events only at a low price;

- the limit \(L_{i}\) has been discretised with a step of 2,000,000 to study how far the layer should extend. More precisely, due to the presence of the policy limit \(pl_{i,t+1}\), the range of \(L_{i}\) is \(\left[ 2,000,000; pl_{i,t+1} - D_{i} \right]\).
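The discretisation above can be sketched in Python as follows. This is only an illustration under stated assumptions: the function names are hypothetical, the policy limit of 10,000,000 used in the usage note is an invented value (the paper's \(pl_{i,t+1}\) is not given in this section), and the sketch keeps only limits that fall on the 2,000,000 grid, which may differ from how the authors treat the upper end of the range.

```python
def quota_share_retentions(step_pct=5):
    """Retentions 1 - alpha on [0, 1] with a 5% step (21 values)."""
    return [k / 100 for k in range(0, 101, step_pct)]


def xol_layers(policy_limit,
               d_min=500_000, d_max=2_000_000, d_step=250_000,
               l_min=2_000_000, l_step=2_000_000):
    """All (deductible, limit) pairs on the grid described in the text.

    policy_limit is a hypothetical input standing for pl_{i,t+1}.
    The limit never exceeds policy_limit - deductible.
    """
    layers = []
    for deductible in range(d_min, d_max + 1, d_step):
        for limit in range(l_min, policy_limit - deductible + 1, l_step):
            layers.append((deductible, limit))
    return layers
```

For instance, `xol_layers(10_000_000)` returns 28 layers (7 deductibles, each with 4 admissible limits), and every layer can then be combined with each of the 21 Quota Share retentions.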

Since MOD is a short-tailed LoB, the application of an Excess of Loss does not contribute significantly to mitigating extreme losses. For the sake of brevity, results related to Excess of Loss treaties for this line of business are not reported in the following.

When pricing Excess of Loss treaties, we assume that the insurance market competitiveness is in line with the reinsurance one. Therefore, recalling Eq. (8), the standard deviation premium principle has been calibrated as follows:

Instead, when pricing Quota Share, we will assume that the reinsurer requires an additional compensation by keeping a fixed \(5\%\) of the expense commissions, such that

Hence, the reinsurance commissions are assumed deterministic.

Now we have all the elements to compute the results net of reinsurance by applying the different reinsurance programs to the simulations of Algorithm 3. Since each Excess of Loss contract appears in several programs of \(\mathcal {M}\) and the Quota Share is always applied afterwards, it is preferable to calculate in a separate step the aggregate claims amount \(X_{i,t+1}^{n,xol}\) and the reinsurance premium \(b_{i,t+1}^{r,xol}\) for each unique Excess of Loss treaty. The application of Quota Share contracts is then straightforward, since both ceded losses and ceded premiums are derived from the ceding percentage \(\alpha _i\).
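The two-step logic described here (Excess of Loss first, computed once per unique layer, then Quota Share) can be sketched in Python for a single simulated year of one LoB. The function names and the dictionary cache are illustrative assumptions and do not reproduce Algorithm 4; premiums are left out for brevity.

```python
def xol_recovery(claim, deductible, limit):
    """Amount paid by the reinsurer on a single claim under `limit xs deductible`."""
    return min(max(claim - deductible, 0.0), limit)


def net_claims_by_xol(claims, layers):
    """Aggregate claims net of Excess of Loss, computed once per unique layer.

    claims: individual claim amounts of one simulated year.
    layers: iterable of (deductible, limit) pairs, possibly with repetitions.
    """
    return {
        (d, l): sum(z - xol_recovery(z, d, l) for z in claims)
        for (d, l) in set(layers)
    }


def apply_quota_share(net_of_xol, ceded_share):
    """Quota Share applied after the Excess of Loss: retain (1 - alpha)."""
    return (1.0 - ceded_share) * net_of_xol
```

With claims of 100,000, 1,500,000 and 6,000,000 and a layer 2,000,000 xs 1,000,000, the amount net of Excess of Loss is 5,100,000, and a ceded share of 25% leaves 3,825,000 with the insurer.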

The last and crucial step of the calculations consists mainly of combining the Algorithm 4 outputs claimsXoL and premiumsXoL according to the programs contained in \(\mathcal {M}\). Note that the operations involved in Algorithm 5 to calculate the vector of aggregate claims amount net of reinsurance \(S^{n}_{t+1}\) are not time-consuming.

We acknowledge that the proposed algorithms can be further optimised in terms of computational time. However, they already provide the results in a reasonable amount of time: given the aforementioned discretisations and assumptions, the most time-consuming part of the process is Algorithm 5, which takes 12 minutes to compute all the combinations of treaties. The code is written in R, with the help of the Rcpp package (see [26]), and was run on an Intel Pentium 4415U 2.30 GHz processor with 8 GB of RAM. The pseudo-code presented in this paper is kept simple in order to be easier to understand. Moreover, the proposal allows us to use the same results for different optimization problems, as shown in Sect. 4.3.

4.2 Profitability and capital requirement optimization under volatility constraint

Recalling the proposed optimization problem (3), it is possible to analyse graphically each reinsurance scheme from Algorithm 5, as depicted in Fig. 1. Since the aim is to maximise both the \(\mathbb {E}(ROE_{t+1}(m))\) and \(sr_{t}(m)\), the reinsurance programs we aim for are the ones in the upper-right corner of the figure. Only the points displayed in orange satisfy the set of constraints (10).

Fig. 1. Behaviour of alternative reinsurance programs in terms of expected ROE and solvency ratio. The scale varies with respect to the coefficient of variation net of reinsurance. Points displayed in orange satisfy the set of constraints (10)

Fig. 2. We display a close-up of the orange dots of Fig. 1. Moreover, yellow dots represent the set of non-dominated reinsurance programs. Orange points are the strategies belonging to the upper convex hull frontier

Then, in Fig. 2 a close-up of the orange dots of Fig. 1 is presented. As shown by the color gradient of \(CV(S^{n}_{t+1}(m))\), the treaties with the lowest coefficient of variation are the ones in the lower-left corner. Using the concept of Pareto frontier, the set of non-dominated reinsurance programs \(\mathcal {O}^{*}\) is identified and shown in yellow and in orange. In particular, the three orange points are the ones belonging to the upper convex hull frontier \(\mathcal {C}^{*}\subseteq \mathcal {O}^{*}\) and, in ascending order of \(sr_{t}(m)\), are structured as summarized in Table 5.
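The selection of \(\mathcal {O}^{*}\) and \(\mathcal {C}^{*}\) can be sketched in Python as follows. This is a generic illustration rather than the authors' code: each program is reduced to a pair (solvency ratio, expected ROE), both to be maximised, the function names are hypothetical, and the hull is obtained with the standard monotone chain scan.

```python
def pareto_front(points):
    """Non-dominated points when both coordinates are maximised."""
    front = []
    best_y = float("-inf")
    # Sweep from the largest x; a point survives only if its y
    # improves on every point with an x at least as large.
    for x, y in sorted(set(points), key=lambda p: (-p[0], -p[1])):
        if y > best_y:
            front.append((x, y))
            best_y = y
    return sorted(front)


def cross(o, a, b):
    """Cross product of vectors o->a and o->b (positive for a left turn)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def upper_hull(points):
    """Upper convex hull, scanned from left to right (monotone chain)."""
    hull = []
    for p in sorted(set(points)):
        # Drop the last point while it lies on or below the segment hull[-2] -> p
        while len(hull) >= 2 and cross(hull[-2], hull[-1], p) >= 0:
            hull.pop()
        hull.append(p)
    return hull
```

Calling `upper_hull(pareto_front(points))` on the feasible programs returns a subset of the Pareto frontier, mirroring the inclusion \(\mathcal {C}^{*}\subseteq \mathcal {O}^{*}\).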

Note that \(\alpha _{MOD}\) is not specified in Table 5 since the optimal reinsurance programs never apply Quota Share for MOD (i.e. \(\alpha _{MOD}=0\) for all three cases). This fact is justified by the high profitability and low riskiness that characterize this line, and, therefore, ceding such LoB would be inefficient for the insurance company. The results in Table 5 (see last three columns) show some peculiarities:

- in each program the coefficient of variation is very close to the one gross of reinsurance, which is equal to \(7.69\%\). Hence, the volatility constraint is met at its upper bound, and the resulting programs optimize the trade-off between \(sr_{t}(m)\) and \(\mathbb {E}(ROE_{t+1}(m))\) without any significant reduction of \(CV(S^{n}_{t+1}(m))\);

- all the programs rely heavily on the Quota Share of MTPL to adjust \(sr_{t}(m)\), since ceding such LoB generates a more diversified risk portfolio;

- in conjunction with the increase of \(\alpha _{MTPL}\), the Excess of Loss layer of GTPL shifts towards more frequent risks to provide more coverage;

- the upper convex hull frontier is almost linear graphically;

- there is no program on the convex frontier with \(sr_{t}(m)\approx \,160\%\) because of the gap between the second and the third orange point. Under this optimization, an insurer aiming for such an \(sr_{t}(m)\) target would therefore select a reinsurance program on the Pareto frontier.

4.3 Alternative optimizations

In this section, we modify the optimization problem while keeping the set of constraints unchanged. In particular, we focus here on the minimization of the underlying riskiness captured by both \(sr_{t}(m)\) and \(CV(S^{n}_{t+1}(m))\), and we keep a minimum level of profitability equal to 10%. Therefore, we rewrite the problem as

subject to (10).

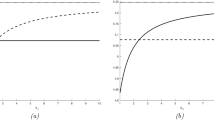

As mentioned before, we use the same results from Algorithm 5, while changing the axes in the data visualisation. The reinsurance programs are represented in Fig. 3. Trivially, we now aim for the lower-right corner of the figure. As before, the orange dots represent the programs that satisfy the constraints, and they are characterized by a spiky shape. The Pareto frontier significantly reduces the number of programs to be considered, as shown in Fig. 4.

Fig. 3. Behaviour of alternative reinsurance programs in terms of solvency ratio and coefficient of variation. The scale varies with respect to the expected ROE net of reinsurance. Points displayed in orange satisfy the set of constraints (10)

Fig. 4. We display a close-up of the orange dots of Fig. 3. Moreover, yellow dots represent the set of non-dominated reinsurance programs. Orange points are the strategies belonging to the lower convex hull frontier, with the tangent one in red

In this scenario, the lower convex hull has been computed and, unlike in the previous optimization, it is not almost linear. We can therefore build a line that connects the leftmost and rightmost points of the Pareto frontier, and determine which Pareto reinsurance program is tangent to it. The tangent program offers useful insight into the most interesting trade-offs. The programs on the convex frontier, ordered by values of \(sr_{t}(m)\) and with the tangent program in bold, are reported in Table 6.
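One possible reading of the tangency criterion, which is an assumption since the text does not spell it out, is that the tangent program is the frontier point touched by a line parallel to the chord joining the leftmost and rightmost frontier points, i.e. the point farthest from that chord. A minimal Python sketch under this assumption (the function name `tangent_program` is hypothetical):

```python
def tangent_program(frontier):
    """Frontier point farthest from the chord joining its two extreme points.

    frontier: list of (x, y) pairs, e.g. (solvency ratio, coefficient of variation).
    The absolute cross product is proportional to the distance from the chord,
    so the selected point does not depend on how the two axes are scaled.
    """
    pts = sorted(frontier)
    a, b = pts[0], pts[-1]

    def offset(p):
        return abs((b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]))

    # In case of ties, the leftmost of the farthest points is returned
    return max(pts, key=offset)
```

For a frontier made of the points (150, 700), (160, 705), (170, 720) and (180, 760), the sketch selects (170, 720).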

The profit constraint has been met in its lower bound, with an expected ROE just slightly higher than \(10\%\). In fact, the optimal trade-off between the two risk measures comes at the cost of reducing as much as possible the expected profit. The \(5^{\text {th}}\) program also appeared in the previous optimization (see Table 5), since it almost meets the upper bound of volatility constraint and it is characterized by the highest solvency ratio among the subset of programs.

The tangent program relies on a solid protection with an Excess of Loss on GTPL and it does not use any Quota Share on such LoB to avoid sharing profit. At the same time, the optimal program combines on MTPL a Quota Share with a retention of \(70\%\) and an Excess of Loss that does not cover extreme losses above 7.25 million, due to their low occurrence.

Alternatively, we assume that the insurance company is willing to achieve a minimum solvency ratio, while maximizing the expected profit and minimizing the claims volatility. This kind of scenario is of interest in actuarial practice and is worth analysing. The optimization problem can be rewritten as:

subject to (10).

As in the previous cases, the numerical constraints are applied, and we are able to select the programs depicted in orange in Fig. 5.

Fig. 5. Behaviour of alternative reinsurance programs in terms of expected ROE and coefficient of variation. The scale varies with respect to the solvency ratio net of reinsurance. Points displayed in orange satisfy the set of constraints (10)

Fig. 6. We display a close-up of the orange dots of Fig. 5. Moreover, yellow dots represent the set of non-dominated reinsurance programs. Orange points are the strategies belonging to the lower convex hull frontier, with the tangent one in red

In Fig. 6, the programs on the convex frontier are determined. We summarize the characteristics of these programs in Table 7, ordered by values of \(\mathbb {E}(ROE_{t+1}(m))\), and with the tangent program in bold.

In this case, all the programs that lie on the convex frontier have a solvency ratio close to the lower bound of the constraint. With respect to previous results, the tangent program relies less on Quota Share and more on Excess of Loss for MTPL. Indeed, such a treaty provides a lower saving of capital but also a smaller reduction of profitability. The tangent program achieves results very similar to those of the \(4^{\text {th}}\) program; the only difference lies in which LoB receives more Excess of Loss cover and which less.

Up to now, we have analysed three different reinsurance optimization criteria under the assumed constraints. However, it is interesting to explore what happens when the number of possible reinsurance programs is strongly limited because the constraints are too binding. To this end, we raise the solvency ratio constraint, so that the new constraints of problem (12) are set as follows:

As shown in Fig. 8, the convex frontier is composed of only two programs, summarized in Table 8.

Fig. 7. Behaviour of alternative reinsurance programs in terms of expected ROE and coefficient of variation. The scale varies with respect to the solvency ratio net of reinsurance. Points displayed in orange satisfy the set of constraints (13)

Fig. 8. We display a close-up of the orange dots of Fig. 7. Moreover, yellow dots represent the set of non-dominated reinsurance programs. Orange points are the strategies belonging to the lower convex hull frontier

It is interesting to note that the first program in Table 8 already appeared as the tangent program when dealing with the optimization problem (11). In fact, this program is characterized by an interesting trade-off between volatility and solvency ratio, while satisfying the profitability constraint at its lower bound. Since only two programs are available under the current conditions, we are not able to perform a meaningful comparison. One idea is to investigate whether these two programs remain a valid choice under a less binding profitability constraint, such as:

Fig. 9. Behaviour of alternative reinsurance programs in terms of expected ROE and coefficient of variation. The scale varies with respect to the solvency ratio net of reinsurance. Points displayed in orange satisfy the set of constraints (14)

Fig. 10. We display a close-up of the orange dots of Fig. 9. Moreover, yellow dots represent the set of non-dominated reinsurance programs. Orange points are the strategies belonging to the lower convex hull frontier, with the tangent one in red

Now that more programs are considered, a proper convex frontier is determined in Fig. 10, and confirms that the first program of Table 8 is now the tangent one, as shown in Table 9.

If we obtained a different tangent program in Table 9, the main problem would be linked to the constraints being too binding: by considering a wider range of reinsurance programs, the insurance company would be able to select a better trade-off.

The \(5^{th}\) program of Table 9 is really similar to the tangent one, except for \(L_{GTPL}\) that covers large claims up to the policy limit \(pl_{GTPL,t+1}\). Since in practice the distribution of extreme losses might be underestimated in the fitting procedure, opting for a wider Excess of Loss layer can be a valid choice.

4.4 Reinstatement optimization

In this section, we investigate the impact of reinstatements on the optimization problem (3). We briefly recall the main characteristics of reinstatements. Under an Excess of Loss treaty \(L_i\) xs \(D_i\) applied to the generic LoB i, the reinsurer pays for the \(h\)-th claim \(Z_{h,i,t+1}\) an amount \(Z_{h,i,t+1}^{r,xol}\) equal to \(\min (\max (Z_{h,i,t+1}-D_i,0),L_i)\). In the presence of an annual aggregate deductible \(AAD_i\) and an annual aggregate limit \(AAL_i\), the aggregate claim amount \(X_{i,t+1}^{r,xol}\) paid by the reinsurer for such Excess of Loss treaty is calculated as follows:

When reinstatements are present, \(AAL_i\) is given as an integer multiple of the limit \(L_i\), such that \(AAL_i= (N_i+1)L_i\), where \(N_i\) is the number of reinstatements available. The reinsurance premium for such an Excess of Loss depends on whether the reinstatements are free or paid. In the case of free reinstatements, the premium is simply calculated on the basis of the risk underlying a layer \(L_i\) xs \(D_i\) with an aggregate layer \((N_i+1)L_i\) xs \(AAD_i\). With paid reinstatements, the insurer pays an additional reinstatement premium to reinstate the layer whenever a claim reduces its capacity. The premium of the \(n^{\text {th}}\) reinstatement is paid pro rata of the claims to the layer and it is expressed as a percentage \(c_{n,i}\) of the base premium \(b_{i,t+1}^{r,base}\) initially paid for the layer. To clarify the concept, the \(n^{\text {th}}\) reinstatement covers the amount

where \(\left( \frac{R_{n,i}}{L_i}\right)\) represents the proportion of layer reinstated. Therefore, since the premium for the \(n^{th}\) reinstatement is defined as \(\left( c_{n,i} b_{i,t+1}^{r,base} \frac{R_{n,i}}{L_i}\right)\), the total premium is

In the previous optimization problems, we always assumed the absence of Annual Aggregate Deductibles and Limits by imposing \(AAD_{i}=0\), \(N_{i}=\infty\) and \(c_{n,i}=0\) \(\forall n=1,\ldots ,N\) and \(\forall i\). To study the reinstatements’ effects in the proposed optimization framework, we modify the Excess of Loss treaties presented in Table 5 for both MTPL and GTPL, such that:

- given the generic LoB i, the Excess of Loss layer \(L_i\) xs \(D_i\) is split into two sequential layers, denoted as \(L_{i,1}\) xs \(D_i\) and \((L_i-L_{i,1})\) xs \((D_i+L_{i,1})\). This choice has been made since assuming the presence of only one layer might neglect possible advantages provided by the reinstatements. In the computations \(L_{i,1}\) has been discretised with a step of 1,000,000 in the range \([0;L_i)\), where we trivially consider the single layer \(L_i\) xs \(D_i\) when \(L_{i,1}=0\);

- subsequently, for each new layer we may introduce reinstatements, both paid and free, with \(N\in \left\{ 0,1,2,\infty \right\}\). In the case of paid reinstatements, \(c_{n,i} \in \left\{ 0.5 , 1\right\}\) as commonly found in practice, and we assume \(c_{1,i} = c_{2,i}=\cdots =c_{N,i}\) when \(N\ge 2\). Trivially, \(c_{n,i}=0\) \(\forall i\) for free reinstatements;

- to determine the initial reinsurance premium \(b_{i,t+1}^{r,base}\) for each resulting layer, the standard deviation pricing principle has been applied (see, e.g., [51] for further details on the methodology) with the same calibration used in the previous sections.
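The reinstatement mechanics recalled in this section can be sketched in Python for one simulated year and one layer. The sketch rests on assumptions that the text does not fully confirm: the aggregate recovery is taken as the layer claims reduced by \(AAD_i\) and capped at \(AAL_i=(N_i+1)L_i\), and the \(n^{\text {th}}\) reinstatement premium is charged pro rata on the layer claims falling between \((n-1)L_i\) and \(nL_i\), which is the usual market convention. All function and argument names are hypothetical.

```python
def layer_claims(claims, deductible, limit):
    """Sum of per-claim recoveries min(max(Z - D, 0), L) before aggregate terms."""
    return sum(min(max(z - deductible, 0.0), limit) for z in claims)


def aggregate_recovery(claims, deductible, limit, aad=0.0, n_reinst=None):
    """Aggregate amount paid by the reinsurer.

    n_reinst=None stands for an unlimited number of reinstatements (no AAL);
    otherwise AAL = (n_reinst + 1) * limit.
    """
    after_aad = max(layer_claims(claims, deductible, limit) - aad, 0.0)
    if n_reinst is None:
        return after_aad
    return min(after_aad, (n_reinst + 1) * limit)


def total_premium(claims, deductible, limit, base_premium, rates, aad=0.0):
    """Base premium plus pro rata reinstatement premiums.

    rates[n - 1] is the percentage c_n of the n-th reinstatement
    (0.0 for a free reinstatement); len(rates) is the number of reinstatements.
    """
    consumed = max(layer_claims(claims, deductible, limit) - aad, 0.0)
    premium = base_premium
    for n, c in enumerate(rates, start=1):
        # Part of the layer reinstated for the n-th time (assumed convention)
        reinstated = min(max(consumed - (n - 1) * limit, 0.0), limit)
        premium += c * base_premium * reinstated / limit
    return premium
```

With two claims of 3,000,000 and 5,000,000 on a layer 2,000,000 xs 1,000,000 and one reinstatement paid at 100%, the reinsurer pays 4,000,000 and the insurer pays twice the base premium.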

Fig. 11. Behaviour of alternative reinsurance programs in terms of expected ROE and coefficient of variation that satisfy the set of constraints (10). The scale varies with respect to the solvency ratio net of reinsurance. Yellow dots represent the set of non-dominated reinsurance programs and orange points are the strategies belonging to the upper convex hull frontier. The points displayed in red are the reinsurance programs with the presence of different reinstatements structures generated starting from the programs in orange

To add the reinstatements in Algorithm 5, we need to calculate the stochastic reinsurance premiums in premiumsXoL as vectors. Due to the presence of sequential layers, we also need to compute such premiums twice. The resulting reinsurance programs that satisfy the set of constraints (10) are represented with red points in Fig. 11. We observe a trade-off between two different effects:

1. since the standard deviation premium principle is sub-additive, the reinsurance premium increases when we split a layer into two consecutive layers. Therefore, since we are not introducing the counterparty default risk in the calculations, we expect a reduction in the profitability of the insurer while its underlying risk net of reinsurance remains unchanged;

2. by introducing a finite value of AAL and number of reinstatements, the reinsurance premiums are reduced on average and the insurer is more exposed to extreme risk due to possible layer exhaustion and stochastic reinsurance premiums.

For these reasons the generated reinsurance programs are characterized by a lower solvency ratio than their respective starting orange point of Fig. 11. We might also observe that some red dots are located on top of the convex frontier, showing that the insurer can actually slightly optimize the trade-off between \(sr_{t}(m)\) and \(\mathbb {E}(ROE_{t+1}(m))\) of the reinsurance program with adequate layer splits and reinstatements. This further optimization is clearly not possible when applied to a reinsurance program that satisfies the solvency ratio constraint in its lower bound, like the leftmost orange point of Fig. 11. Therefore, we ignore the latter and, recalling the results of Table 5, the reinsurance programs that will be optimized are presented in Table 10.

To avoid overloading the tables with numerical results, we will present one reinstatement optimization for each reinsurance treaty present in Table 10. In particular, we choose the alternative programs that lie on top of the convex frontier that optimize the trade-off between \(sr_{t}(m)\) and \(\mathbb {E}(ROE_{t+1}(m))\). Both the optimized programs presented in Table 11 modify the MTPL layer while leaving untouched the GTPL one. For this reason, the information regarding the latter LoB is not included.

In particular, we can see from Table 11 that the MTPL layer of the first program has been split into two consecutive layers, each with one paid reinstatement. Instead, the MTPL layer of the second program is not split, but indicates that a paid reinstatement might be preferable to an unlimited AAL. In both the optimized programs, the resulting changes in \(CV(S^{n}_{t+1}(m))\), \(sr_{t}(m)\) and \(\mathbb {E}(ROE_{t+1}(m))\) are minimal when compared to the respective starting point.

4.5 Umbrella case study

Since we dealt with finite aggregate limits and reinstatements, an interesting analysis could be characterized by the introduction of an Umbrella treaty to avoid horizontal exhaustion of the layers. Before diving into the numerical results, we will briefly describe the characteristics of such reinsurance treaty to give more context to the subject. The main purpose of an Umbrella is to protect the insurance company from risks that are not covered by other reinsurance contracts. For example, an Umbrella coverage might include catastrophe claims or other particular risks that are excluded by the reinsurance scheme. Another common use is to increase the protection of Excess of Loss treaties in two alternative scenarios:

- vertical exhaustion occurs when a claim exceeds the amount \(D_i+L_i\) of the Excess of Loss applied to the LoB i. In this case, the Umbrella covers the amount exceeding the layer. Contractually speaking, when the vertical exhaustion clause is present, the perimeter of the Umbrella can be defined as a subset of the risks covered by the associated Excess of Loss layer. For example, given an Excess of Loss that covers the GTPL LoB, the Umbrella that protects its vertical exhaustion might include the medical liability risks only for commercial reasons;

- horizontal exhaustion occurs when the aggregate claim amount \(X_{i,t+1}^{r,xol}\) transferred to the reinsurer reaches the aggregate limit \(AAL_i\), leaving the insurer unprotected. In this scenario, the Umbrella protects the insurer from those claims that fall in the layer when the latter is already fully consumed, providing an additional coverage.

A single Umbrella coverage can protect multiple LoBs at the same time and its perimeter can be defined in several ways, for example by including the horizontal exhaustion clause only, which is the particular case that we analyse since connected with the previous section where we introduced finite aggregate limits. More precisely, we study how an Umbrella affects the optimization when applied to all the Excess of Loss treaties with finite AAL for both MTPL and GTPL.

To price such a contract, we apply the standard deviation premium principle with \(\beta _{umb}=35\%\). From a risk theory perspective, when an Umbrella is applied to multiple LoBs, the dependence between them is a strong driver of the volatility of the underlying risk, and therefore, in our case, of the price of the treaty. Since two consecutive layers with finite AAL can be present for both MTPL and GTPL, defining a closed mathematical formula to compute the standard deviation of such a complex risk is a tough challenge. For this reason, we derive the standard deviation from the simulations in each scenario where the Umbrella has been tested.
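As an illustration of pricing from simulations, the following Python sketch assumes the usual form of the standard deviation premium principle, i.e. expected ceded loss plus a loading \(\beta\) times its standard deviation; the exact calibration of Eq. (8) is not reproduced here, and the function name is hypothetical.

```python
from statistics import mean, pstdev


def std_dev_premium(simulated_ceded_losses, beta):
    """Premium = mean + beta * standard deviation, both estimated
    empirically from the simulated ceded losses (population formula)."""
    return mean(simulated_ceded_losses) + beta * pstdev(simulated_ceded_losses)
```

Because the ceded losses of the Umbrella are simulated jointly across LoBs, the dependence between them is automatically reflected in the empirical standard deviation.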

To better understand the math behind the Umbrella, given \(G_i\) Excess of Loss layers for the generic LoB i, we define the amount

where \(D_{i,g}\) and \(L_{i,g}\) are respectively the deductible and the limit of the \(g^{th}\) layer, with \(g=1, \ldots , G_i\). Trivially, \(D_i = D_{i,1}\) and \(L_i = \sum _{g=1}^{G_i} L_{i,g}\) when the layers are sequential, i.e. \(D_{i,g}=D_{i,g-1}+L_{i,g-1}\) for \(g>1\). When layers with finite \(AAL_{i,g}\) are present, a portion of the claims might exceed the layer capacity; on aggregate, this portion can be defined as

where, in absence of an Umbrella, \(X_{i,t+1}^{r,out}\) is a loss for the insurer. Given that the Umbrella contracts that we are considering cover every Excess of Loss layer available in the program, an Umbrella with annual aggregate deductible \(AAD_{umb}\) and limit \(AAL_{umb}\) covers the amount

In our analysis we assume that two different Umbrella treaties are available in the market: one with medium coverage (\(AAL_{umb}=5,000,000\)) and another with high coverage (\(AAL_{umb}=20,000,000\)), both with \(AAD_{umb}=0\). As seen before, with the introduction of an adequate finite \(AAL_i\) the insurer can achieve an improvement in the expected profitability at the cost of worsening both CV and sr. With the subsequent introduction of an Umbrella, we instead expect to observe an effect in the opposite direction: the trade-off between a finite \(AAL_i\) and an Umbrella covering horizontal exhaustion might provide some interesting results. We remark that the scope of this section is to investigate the main effects of an Umbrella in terms of profitability and solvency ratio, without focusing too much on optimizing the trade-off with the introduction of such treaty.
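The horizontal exhaustion cover can be sketched in Python for one simulated year. The sketch assumes that the amount exceeding the capacity of a layer is the part of the layer claims (after the aggregate deductible) above its \(AAL_{i,g}\), and that a single Umbrella applies its own \(AAD_{umb}\) and \(AAL_{umb}\) to the sum of these amounts over all covered layers and LoBs. Function names are hypothetical.

```python
def exhausted_amount(layer_claims_total, aal, aad=0.0):
    """Layer claims left uncovered once the aggregate limit is consumed."""
    return max(layer_claims_total - aad - aal, 0.0)


def umbrella_recovery(exhausted_by_layer, aal_umb, aad_umb=0.0):
    """Amount paid by the Umbrella on the total horizontally exhausted losses."""
    total_out = sum(exhausted_by_layer)
    return min(max(total_out - aad_umb, 0.0), aal_umb)
```

For example, exhausted amounts of 5,000,000 and 2,000,000 on two layers lead to a recovery of 5,000,000 under the medium-coverage Umbrella and of 7,000,000 under the high-coverage one.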

Fig. 12. Behaviour of alternative reinsurance programs in terms of expected ROE and coefficient of variation that satisfy the set of constraints (10). The scale varies with respect to the solvency ratio net of reinsurance. Yellow dots represent the set of non-dominated reinsurance programs and orange points are the strategies belonging to the upper convex hull frontier. The points displayed in red are the reinsurance programs with the presence of different reinstatements structures generated starting from the programs in orange, characterized by an additional Umbrella cover with \(AAL=5,000,000\)

Both Umbrella contracts have been applied to those programs that had at least one Excess of Loss with finite \(AAL_{i,g}\) and that were generated according to the set of criteria specified in the previous section. Recalling the results of Table 11, the programs that were slightly more efficient than the respective starting program are characterized by solid reinstatements, such that an Umbrella never activates according to the simulations. Hence, we excluded from the current analysis all the programs whose protection was unaffected by the addition of an Umbrella. The resulting programs with \(AAL_{umb}=5,000,000\) are represented with red dots in Fig. 12. We can observe that many programs generated by the rightmost optimal program, depicted in orange, lie on top of the convex frontier when the Umbrella is applied. This effect is reasonable since, recalling Table 10, the second program covers more GTPL than the first, creating the opportunity to benefit from the Umbrella price (\(\beta _{umb}<\beta _{GTPL}\)). One of these reinsurance programs is shown in Table 12.

Compared to Table 11, the program shown in Table 12 relies in this scenario on a finite AAL also for GTPL, so that the extreme losses that exceed \(AAL_{GTPL,1}\) are covered by the Umbrella. On the opposite side, the second GTPL layer is characterized by \(AAL_{GTPL,2}=\infty\), probably due to the fact that the Umbrella’s limited capacity is able to cover in a proper manner just the losses coming from the first GTPL layer and the MTPL one. In general, the presented program is just slightly different from the starting program in terms of coefficient of variation, solvency ratio and expected ROE net of reinsurance.

Fig. 13. Behaviour of alternative reinsurance programs in terms of expected ROE and coefficient of variation that satisfy the set of constraints (10). The scale varies with respect to the solvency ratio net of reinsurance. Yellow dots represent the set of non-dominated reinsurance programs and orange points are the strategies belonging to the upper convex hull frontier. The points displayed in red are the reinsurance programs with the presence of different reinstatements structures generated starting from the programs in orange, characterized by an additional Umbrella cover with \(AAL=20,000,000\)

We now analyse the impact of the Umbrella with a higher coverage (i.e. \(AAL_{umb}=20,000,000\)). With such capacity, the Umbrella is able to cover every exceeding loss in our simulations. Therefore, at first glance, one might expect that the solvency ratio and the coefficient of variation of the aggregate claims amount remain constant when passing from the starting program to the modified one with such Umbrella. However, as displayed in Fig. 13, this is not always the case: if we focus on the central orange point and on its respective alternatives in red, some programs are characterized by a different (and lower) solvency ratio. The reason behind this effect is the presence of stochastic reinstatement premiums, which worsen both the solvency ratio and the coefficient of variation. Also, note that every red point in the middle is now dominated by the original program.

An alternative program from the rightmost orange program of Fig. 13 is displayed in Table 13. Compared to the results presented in Table 12, the program is characterized by the same layer split, but relies more on reinstatements due to the greater Umbrella’s capacity. In addition, the solvency ratio has decreased, while keeping almost untouched the coefficient of variation and the expected ROE. As briefly mentioned before, two opposite effects are present:

- the presence of reinstatements implies higher expected profit and higher risk due to volatile reinsurance premiums;

- the Umbrella improves the solvency ratio and the coefficient of variation, while it reduces the expected ROE.

In this particular scenario, the trade-off does not add any particular value for the insurer. It is worth mentioning that, for \(\beta _{umb}\ge 40\%\), every alternative program with the Umbrella lies below the convex frontier. Therefore, since the optimization is strongly price sensitive for the Umbrella, such treaty should not be considered solely to achieve a better risk-return trade-off. In fact, protecting the horizontal exhaustion of the Excess of Loss layers might be an interesting choice when the assumptions regarding the claim-size distribution are too unreliable.

5 Conclusions

In this paper, we propose an alternative approach to tackle multi-objective portfolio optimization problems under specific constraints. We apply this optimization framework in the portfolio selection process of an EU-based non-life insurance company that aims both to remunerate shareholders’ capital and to comply, in terms of solvency ratio, with the Solvency II Directive. In particular, we investigate the solutions to the proposed risk-return reinsurance optimization of a multi-line non-life insurer through a simulation model. Combinations of Excess of Loss and Quota Share contracts have been tested on three lines of business of an Italian insurance company. The concepts of Pareto efficient frontier and convex hull support the selection of the most appealing reinsurance structures under the user-defined constraints. Moreover, data visualisation aids the comprehension of the numerical results.

In addition, two alternative optimization problems have also been analysed, and most of the selected programs exhibit very similar structures. The results reveal that the Quota Share plays a major role in balancing the risk portfolio since one of the lines of business (Motor Third Party Liability) is predominant. A trade-off between proportional and non-proportional reinsurance is present in order to reach the desired goals. In fact, the Excess of Loss deductible and limit depend on how much Quota Share has been bought, and vice-versa. For each selected program no reinsurance is applied to the Motor Other Damage business because of the high profit and the moderate risk.

In the last sections, reinstatements and consecutive Excess of Loss layers have been introduced, revealing that an appropriate combination of reinstatements and layers might slightly improve the effects of a reinsurance program. Subsequently, in order to cover losses coming from the horizontal exhaustion of the layers, two different Umbrella treaties have been analysed.

Particular care must be taken when building assumptions on both claim-size distribution and reinsurance pricing. In fact, the chosen reinsurance program might cost more or less than initially estimated once the actual quote is obtained. Meanwhile, an imprecise risk estimation might lead to biased decisions.

The model optimizes risk and return indicators over a 1-year time horizon, which, in the case of the solvency ratio, is consistent with the Solvency II framework. Further research may involve projections over a multi-year horizon, including the opportunity to adjust the reinsurance program along the way. In addition, since an insurance company already has its own reinsurance program, the proposed optimization model can be adapted to investigate only programs that differ slightly from the reference one. The analysis would thereby identify, with shorter computational times, the most effective modifications to the current reinsurance program.
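One possible way to restrict the search to programs close to the reference one is to move each treaty parameter by at most a fixed number of grid steps. The sketch below is only an illustration of this idea: the parameter names, the grids, and the `max_steps` argument are hypothetical.

```python
from itertools import product
from typing import Dict, List


def neighbours(reference: Dict[str, float],
               grids: Dict[str, List[float]],
               max_steps: int = 1) -> List[Dict[str, float]]:
    """List the programs whose parameters lie within `max_steps` grid
    positions of the reference program (the reference itself is included)."""
    names = list(grids)
    options = []
    for name in names:
        grid = grids[name]
        i = grid.index(reference[name])  # the reference must lie on the grid
        lo = max(i - max_steps, 0)
        hi = min(i + max_steps, len(grid) - 1)
        options.append(grid[lo:hi + 1])
    return [dict(zip(names, combo)) for combo in product(*options)]


# Hypothetical grids for one line of business.
grids = {
    "qs_cession": [0.0, 0.1, 0.2, 0.3, 0.4],
    "xl_deductible": [250_000.0, 500_000.0, 750_000.0, 1_000_000.0],
}
reference = {"qs_cession": 0.2, "xl_deductible": 250_000.0}

local_programs = neighbours(reference, grids)
print(len(local_programs))  # 6, against 20 programs in the full grid
```

Only the programs in `local_programs` would then need to be simulated, which is where the saving in computational time would come from.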

Notes

For further details on this topic see [19].

We denote random variables with capital letters.

For the sake of simplicity, we assume that all treaties can be applied to all lines of business. Otherwise the number of possible combinations decreases.

We also report information about the treaties with \(sr_{t}(m)\ge 145\%\) and \(\mathbb {E}(ROE_{t+1}(m))\ge 8.5\%\) to visualise how binding the set of constraints is.

References

Aas K, Berg D (2009) Models for construction of multivariate dependence: a comparison study. Eur J Financ 15(7–8):639–659

Aas K, Czado C, Frigessi A, Bakken H (2009) Pair-copula constructions of multiple dependence. Insur Math Econ 44:182–198

Albrecher H, Beirlant J, Teugels JL (2017) Reinsurance: actuarial and statistical aspects. Wiley, New York

Asimit AV, Badescu AM, Haberman S, Kim E-S (2016) Efficient risk allocation within a non-life insurance group under Solvency II Regime. Insur Math Econ 66:69–76

Asimit AV, Bignozzi V, Cheung KC, Hu J, Kim E-S (2017) Robust and Pareto Optimality of Insurance Contracts. Eur J Oper Res 4:20

Asimit AV, Boonen TJ (2017) Insurance with Multiple Insurers: A Game-Theoretic Approach. Eur J Oper Res 267(2):778–790

Associazione Nazionale fra le Imprese Assicuratrici. L’assicurazione Italiana 2018-2019. Technical report, ANIA, 2019

Balbás A, Balbás B, Heras A (2009) Optimal reinsurance with general risk measures. Insur Math Econ 44(3):374–384

Beard RE, Pentikäinen T, Pesonen E (1984) Risk theory. Chapman & Hall, London

Bernard C, Tian W (2009) Optimal reinsurance arrangements under tail risk measures. J Risk Insur 20:20

Borch K (1960) An attempt to determine the optimum amount of stop loss reinsurance. In: Transactions of the 16th international congress of actuaries, pp 597–610

Cai J, Liu H, Wang R (2017) Pareto-optimal reinsurance arrangements under general model settings. Insur Math Econ 77:24–37

Cai J, Tan KS (2007) Optimal retention for a stop-loss reinsurance under the VAR and CTE risk measures. ASTIN Bull J IAA 37(1):93–112

Cai J, Tan KS, Weng C, Zhang Y (2008) Optimal reinsurance under VAR and CTE risk measures. Insur Math Econ 43(1):185–196

Cai J, Wang Y (2019) Reinsurance premium principles based on weighted loss functions. Scand Actuarial J 2019(10):903–923

de L. Centeno M (1995) The effect of the retention limit on risk reserve. ASTIN Bull 25:1

Cheung KC (2010) Optimal reinsurance revisited—a geometric approach. ASTIN Bull J IAA 40(1):221–239

Clemente GP (2018) The effect of non-proportional reinsurance: a revision of Solvency II standard formula. Risks 6(2):50

Clemente GP, Savelli N (2017) Actuarial improvements of standard formula for non-life underwriting risk. Insurance regulation in the European Union. Springer, Berlin, pp 223–243

Kurowicka D, Joe H (2010) Dependence modeling: vine copula handbook

Coutts SM, Thomas T (1997) Capital and risk and their relationship to reinsurance programmes. In: Proceedings of 5th international conference on insurance solvency and finance

Czado C (2010) Copula theory and its applications, chapter pair-copula constructions of multivariate copulas. Springer, Berlin, pp 93–109

Daykin C, Pentikäinen T, Pesonen M (1994) Practical risk theory for actuaries. Chapman & Hall, London

Doumpos M, Zopounidis C (2020) Multi-objective optimization models in finance and investments. J Glob Optim 76(2):243–244

Durante F, Fernández-Sánchez J, Sempi C (2013) A topological proof of Sklar’s theorem. Appl Math Lett 26(9):945–948

Eddelbuettel D, Francois R, Allaire JJ, Ushey K, Kou Q, Russell N, Bates D, Chambers J (2021) Rcpp: seamless R and C++ integration. R package version 1.0.6

Eling M, Gatzert N, Schmeiser H (2008) The Swiss Solvency Test and its market implications. Geneva Pap 33:418–439

European Commission. Directive 2009/138/EC of the European Parliament and of the Council of 25 November 2009 on the taking-up and pursuit of the business of Insurance and Reinsurance (Solvency II). Technical report, European Commission, 2009

European Commission. Commission Delegated Regulation (EU) 2015/35 supplementing Directive 2009/138/EC of the European Parliament and of the Council on the taking-up and pursuit of the business of Insurance and Reinsurance (Solvency II) 10 of October 2014 (published on Official Journal of the EU, vol. 58, 17 January 2015). Technical report, European Commission, 2014

Federal Office of Private Insurance (2004) White Paper of the Swiss Solvency Test. Technical report, FOPI

Fischer M, Köck C, Schlüter S, Weigert F (2009) An empirical analysis of multivariate copula models. Quant Financ 9(7):839–854

Gajek L, Zagrodny D (2004) Optimal reinsurance under general risk measures. Insur Math Econ 34(2):227–240

Gajek L, Zagrodny D (2004) Reinsurance arrangements maximizing insurer’s survival probability. J Risk Insur 71(3):421–435

Gisler A (2009) The insurance risk in the SST and in Solvency II: modelling and parameter estimation. In: Proceedings ASTIN colloquium

Guerra M, de L. Centeno M (2008) Optimal reinsurance policy: the adjustment coefficient and the expected utility criteria. Insur Math Econ 42(2):529–539

Guerra M, de L. Centeno M (2012) Are quantile risk measures suitable for risk-transfer decisions? Insur Math Econ 50(3):446–461

Jiang W, Hong H, Ren J (2017) On Pareto-optimal reinsurance with constraints under distortion risk measures. Eur Actuarial J 8:2

Joe H (1996) Families of m-variate distributions with given margins and m(m-1)/2 bivariate dependence parameters. Inst Math Stat Hayward 28:120–141

Joe H (2001) Multivariate models and dependence concepts. Chapman & Hall/CRC, Boca Raton

Kaluszka A (2001) Optimal reinsurance under mean-variance premium principles. Insur Math Econ 28(1):61–67

Malamud S, Rui H, Whinston A (2016) Optimal reinsurance with multiple tranches. J Math Econ 65:71–82

Meyers G, Shenker N (1982) Parameter uncertainty in the collective risk model. Technical report, Casualty Actuarial Society Discussion Paper Program

Nelsen RB (2010) An introduction to copulas. Springer, Berlin

Oesterreicher I, Mitschele A, Schlottmann F, Seese D (2006) Comparison of multi-objective evolutionary algorithms in optimizing combinations of reinsurance contracts. In: GECCO ’06

Román S, Villegas AM, Villegas JG (2018) An evolutionary strategy for multiobjective reinsurance optimization. J Oper Res Soc 69(10):1661–1677

Savelli N, Clemente GP (2011) Hierarchical structures in the aggregation of premium risk for insurance underwriting. Scand Actuarial J 2011(3):193–213

Schepsmeier U, Stoeber J, Brechmann EC, Graeler B, Nagler T, Erhardt T (2015) VineCopula: statistical inference of vine copulas. R package version 1.6.1

Sklar A (1959) Fonctions de répartition à n dimensions et leurs marges. Publ Inst Stat Univ Paris 8:229–231

Sklar A (1996) Random variables, distribution functions, and copulas: a personal look backward and forward. Lect Notes Monograph Ser 20:1–14

Steuer RE, Qi Y, Hirschberger M (2007) Suitable-portfolio investors, nondominated frontier sensitivity, and the effect of multiple objectives on standard portfolio selection. Ann Oper Res 152(1):297–317

Sundt B (1991) On excess of loss reinsurance with reinstatements. Bull Swiss Assoc Actuaries 20:1

Acknowledgements

The authors wish to thank the Editor and three anonymous referees for their careful reading and the suggestions that helped to improve the quality of the paper.

Funding

Open access funding provided by Università Cattolica del Sacro Cuore within the CRUI-CARE Agreement.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zanotto, A., Clemente, G.P. An optimal reinsurance simulation model for non-life insurance in the Solvency II framework. Eur. Actuar. J. 12, 89–123 (2022). https://doi.org/10.1007/s13385-021-00281-2
