Discrete Dynamics of Dynamic Neural Fields

- Department of Mathematics, Trinity University, San Antonio, TX, United States

Large and small cortexes of the brain are known to contain vast amounts of neurons that interact with one another. They thus form a continuum of active neural networks whose dynamics are yet to be fully understood. One way to model these activities is to use dynamic neural fields which are mathematical models that approximately describe the behavior of these congregations of neurons. These models have been used in neuroinformatics, neuroscience, robotics, and network analysis to understand not only brain functions or brain diseases, but also learning and brain plasticity. In their theoretical forms, they are given as ordinary or partial differential equations with or without diffusion. Many of their mathematical properties are still under-studied. In this paper, we propose to analyze discrete versions dynamic neural fields based on nearly exact discretization schemes techniques. In particular, we will discuss conditions for the stability of nontrivial solutions of these models, based on various types of kernels and corresponding parameters. Monte Carlo simulations are given for illustration.

1. Introduction

To model a large amount of cells randomly placed together with a uniform volume density and capable of supporting various simple forms of activity, including plane waves, spherical and circular waves, and vortex effects, Beurle (1956) proposed a continuous model consisting of a partial differential equation, that can capture some neurons behaviors like excitability at the cortical level. Amari (1977) and Wilson and Cowan (1972) subsequent modifications did allow for new features such as inhibition. This essentially gave birth to the theory of dynamic neural fields (DNFs) with far reaching applications. The literature on the DNFs is rather rich, and the trends nowadays are toward cognition, plasticity, and robotics. In cognitive neuroscience for instance, DNFs have enabled analyses of Electroencephalograms (Nunez and Srinivasan, 2006), short term memory (Camperi and Wang, 1998), visual hallucinations (Ermentrout and Cowan, 1979; Tass, 1995), visual memory (Schmidt et al., 2002). Durstewitz et al. (2000) have proposed neurocomputational models of working memory using single layer DNFs and shown that the temporal dynamics they obtained compare well with the in vivo observations. Simmering et al. (2008) and Perone and Simmering (2019) used DNFs to achieve spatial cognition by successfully simulating infants' performance in some tasks. Quinton and Goffart (2017) used nonlinearities in DNFs to propose a unifying model for goal directed eye-movements that allow for the implementation of qualitatively different mechanisms including memory formation and sensorimotor coupling. Plasticity in neuroscience can be thought of as the ability of the brain to adapt to external activities by modifying some of its structure. Connections between plasticity and DNFs have been made in studies on intrinsic plasticity, see Strub et al. (2017) or parallel works such as Neumann and Steil (2011), Pozo and Goda (2010), and the references therein. Robotics has been a great niche for DNFs with the works of Bicho et al. (2000), Erlhagen and Schöner (2001), Erlhagen and Bicho (2006), and Bicho et al. (2010).

To recall the theoretical aspects of DNFs, let Ω ⊆ ℝd be a manifold where d is positive integer. In the presence of neurons located on Ω at time t arranged on L layers, the average potential function Vk(xk, t) is often used to understand the continuous field on the kth layer. Vk(xk, t) is the average membrane potential of the neuron located at position xk at time t of the kth layer. When L = 1, V(xk, t) can also be understood as the synaptic input or activation at time t of a neuron at position or direction xk. It satisfies the equation (see Amari, 1977) which is given as

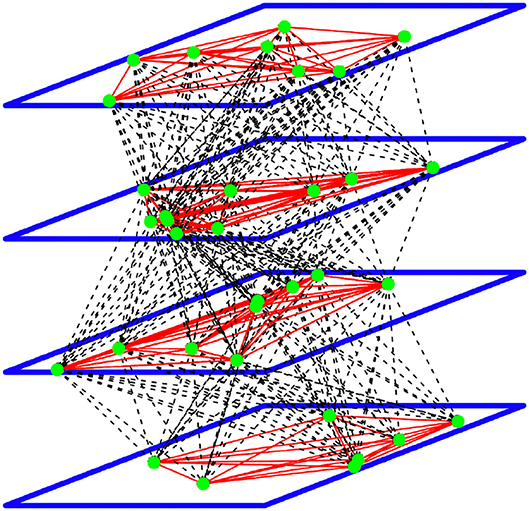

where Wkl(xk, xℓ) is the intensity of the connection between a neuron located at position xk on the kth layer with a neuron a position xℓ on the ℓth layer, G[Vℓ(xℓ, t)] is the pulse emission rate (or activity) at time t of the neuron located at position xℓ on the ℓth layer. G is often chosen as a monotone increasing function. Sk(xk, t) represents the intensity of the external stimulus at time t arriving on the neuron at position xk on the kth layer, see Figure 1 below.

Figure 1. A representation of the DNFs with four layers and 34 neurons. The green dots are the neurons with intra-layer connections represented by the red lines, and inter-layer connections represented by the dashed lines.

Most of the mentions of discrete systems involving DNFs are of Euler forward type discretization—see Erlhagen and Bicho (2006), Quinton and Goffart (2017), and Beim and Hutt (2014)—with simulations as the main objective. Stability analysis of solutions of DNFs in the sense of discrete dynamical systems are either understudied or often ignored. Beim and Hutt (2014) studied an heterogeneous aspect of DNFs and found existence of attractors and saddle nodes for solutions of (1). There are also discussion of DNFs as neural networks. For instance, Sussilo and Barack (2013) discussed continuous time recurrent neural network (RNN) as differential equation of the form

where Vk is an M dimensional state of activations and G(Vj) are the firing rates, and represents the external stimulus received from I inputs uj and synaptic weights Bkj. The weights Lkj are selected from a normal distribution. In a sense, the Equation (1) can be considered a continuous time (recurrent neural networks) RNN. Authors such as Elman (1990) and Williams and Zipser (1990), and most recently, Durstewitz (2017)—see Equation (9.8) therein, have discussed discrete time RNN formulated as a difference equation of the form

where G is a monotone increasing function, Wi0 is a basic activity level for the neuron at position xi, the Wij's are weights representing the connection strength between neuron at position xi and neuron at position xj, and is some external stimulus at time n arriving on the neuron at position xi. So essentially, after discretization, (1) may yield not only a discrete time dynamical system, but also a RNN. In fact, Jin et al. (2021) considered a discrete equivalent in the space for Equation (1) for L = 2 while maintaining the time as continuous and discussed it in the context of unsupervised learning which could help understand plasticity in the brain. The two main goals of this paper are:

1. to propose a discrete dynamic neural field model that is both numerically convergent and dynamically consistent. The model is based on nearly exact discretization techniques proposed in Kwessi et al. (2018) that ensure that the discrete system obtained preserves the dynamics of the continuous system it originated from.

2. to propose a rigorous and holistic theoretical framework to study and understand the stability of dynamic neural fields.

The model we propose has the advantage of being general and simple enough to implement. It also captures different generalizations of DNFs such as predictive neural fields (PNFs) and active neural fields (ANFs). We further show that the proposed model is in effect a Mann-type (see Mann, 1953) local iterative process which admits weak-type solutions under appropriate conditions. The model can also be written as a recurrent neural network. Stability analysis shows that in some case, the model proposed can be studied using graph theory.

The remainder of the paper is organized as follows: in section 2, we propose our nearly exact discretization scheme for DNFs. In section 3.1, we make a brief overview of the essential notions for the stability analysis of discrete dynamical systems, in section 3.2, we discuss the existence on nontrivial fixed solutions for DDNFs, in sections 3.3 and 3.4, we discuss stability analysis for a one and multiple layers discrete DNFs. In section 3.5, we propose simulations and their interpretation for neural activity and in section 4, we make our concluding remarks.

2. Materials and Methods

2.1. Discrete Schemes for DNFs

For sake of simplification, we will use the following notations: given a positive integer L, and integers 1 ≤ k, ℓ ≤ L, Mk, Mℓ, we consider indices 1 ≤ ik ≤ Mk, 1 ≤ jℓ ≤ Mℓ. We put

where hn = tn+1 − tn, and is the time scale of the field. We obviously assume that all the layers of the domain Ω have the same time scale. |Ω| represents the size of the field Ω that henceforth we will assume to be finite. xi represents a point on the kth layer and yj a point on the ℓth layer. Mk represents the number of interconnected neurons on the kth layer of the domain Ω, and represents the spatial discretization step on the kth layer of the domain Ω. When L = 1, we will adopt the notations and . Henceforth, for sake of clarity and when needed, we will represent vectors or matrices in bold.

To obtain a discrete model for DNFs, we are going to use nearly exact discretization schemes (NEDS) (see Kwessi et al., 2018). We note that according to this reference, Equation (1) is of type T1, therefore, the left-hand-side will be discretized as

For the right-hand-side, we write a Riemann sum for the integral in Equation (1), over the domain Ω as

This means that, for a given L ∈ ℕ, for integers 1 ≤ k ≤ L, Mk, and 1 ≤ ik ≤ Mk, a discrete dynamic neural field (DDNFs) model can be written as

Staying within the confines of the layers' architecture proposed by Amari (1977), in a DDNFs with L ≥ 3, we observe that the activity on neurons located on the bottom (or top) layer depends on the interconnected neurons on that layer and their connections to the layer above (or below). First, we define

Middle layers are connected to two layers, one above and one below. This means that at time tn, a neuron at position xi on the first layer receives external input , intra-layer signals from M1 neurons on the first layer, and inter-layer signals from M2 neurons on the second layer. Likewise, a neuron at position xi on the Lth layer receives external input , inter-layer signals from ML−1 neurons on the (L − 1)th layer, and intra-layer signals from ML neurons on the Lth layer. For 1 < k < L, we have if ℓ ∉ Lk and if ℓ ∈ Lk. This means that at time t = tn, a neuron at position xi on the kth layer receives external input , inter-layer signals from Mk−1 neurons on the (k − 1)th layer, intra-layer signals from Mk neurons on the kth layer, and inter-layer signals from Mk+1 neurons on the (k + 1)th layer.

Further, Equation (5) has a matrix notation which helps with the study of stability analysis. Indeed, let and d = L × M, let X = (X1, X2, ⋯, XL) ∈ Ω, where for 1 ≤ k ≤ L, Xk = {(xk1, xk2, ⋯, xkM)} represents the positions of Mk neurons on the kth layer. We are assuming without loss of generality that xki = 0 if Mk < i ≤ M to have a full L × M matrix. Now consider the matrix

formed by the column vectors . In this case, Equation (5) can be written in a matrix form as

with an element-wise notation

for 1 ≤ i ≤ M and for 1 ≤ k ≤ L. For more complex layers' architectures, the interested reader can refer to De Domenico et al. (2013) and the references therein, where tensor products are used instead of matrices.

2.2. Pulse Emission Rate Functions

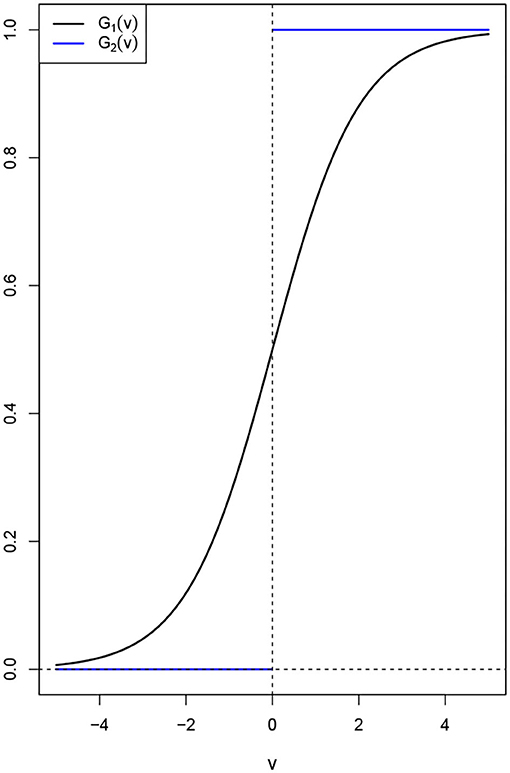

Amari (1977) proved the existence of nontrivial solutions for DNFs when G is the Heaviside function. Further studies have shown that in fact this is true for a large classes of functions G. As we stated earlier, functions G satisfying the Hammerstein conditions G(v) ≤ μ1v + μ2 are good candidates. However most practitioners use either the Heaviside function or the sigmoid function. For some θ > 0 and v0 ≥ 0, the sigmoid function, is defined as , and Heaviside function is defined as G2(v, v0) = I(v0, ∞)(v), see Figure 2 below. Here IA is the indicator function and is given as IA(x) = 1 if x ∈ A and IA(x) = 0 otherwise. This shows that the assumption that G be nonnegative and increasing is not unrealistic. In fact we can transform many functions to bear similar properties of the Heaviside and sigmoid functions, see for instance Kwessi and Edwards (2020).

REMARK 1. We observe that the choice of the sigmoid activation function is widely preferred in the literature for its bounded nature. Another reason is the fact that it is also suitable when the are binary, that is, they may take values 0, 1, where 0 and 1 represents, respectively active and non active neurons at time n. In this case, would represent the probability that there is an activity on neuron at position xi at time n + 1.

2.3. Connection Intensity Functions

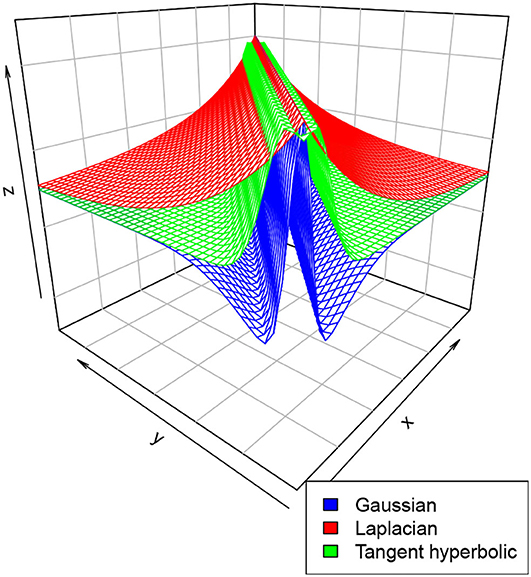

There are a variety of connection intensities functions (or kernels) that one can choose from. This include a Gaussian kernel , the Laplacian kernel defined as , or the hyperbolic tangent kernel Thyp(x, y, β, r) = 1 + tanh(βxT · y + r) where ∥·∥ is a norm in ℝd. Note that as defined, the hyperbolic tangent kernel is not symmetric, however, for a suitable choice of β and r, one may obtain a nearly symmetric kernel. Indeed, if r < 0 and β is close to zero, we have Thyp(x, y, β, r) ≈ (1 + tanh(r))e−β∥x−y∥2, see for instance Lin and Lin (2003). This kernel is very useful in non-convex problems and support vector machines.

In practice however, it is very common to select the function W(x, y) as the difference between either of the functions above, which results in a “Mexican hat” shaped function, that is, either , or , or . Figure 3 below is an illustration of these kernels for selected parameters. This choice will ensure that neurons within the same cell assembly are connected reciprocally by high synaptic weights σ+, whereas neurons not belonging to the same assembly are connected by low synaptic weights σ− (see for instance Durstewitz et al., 2000; Quinton and Goffart, 2017; Wijeakumar et al., 2017; Jin et al., 2021).

3. Existence and Stability of Nontrivial Solutions of the DDNFs

3.1. Stability Analysis

We recall the essential notions for the stability analysis of a discrete dynamical system Vn+1 = F(Vn) where F : ℝd → ℝ is continuously differentiable map.

DEFINITION 2.

(i) A point V∗ is said to be a fixed point for F if F(V∗) = V∗.

(ii) The orbit of a point V is defined as with .

(iii) The spectral radius ρ(A) a matrix A is a maximum of its absolute eigenvalues.

(iv) A fixed point V∗ for F is said to be stable if there exists a neighborhood of V∗ whose trajectories are arbitrarily close to V∗, that is, for all ϵ > 0, .

(v) A fixed point V∗ for F is said to be attracting if there exists a neighborhood of V∗ whose trajectories converge to V∗, that is, for all ϵ > 0, .

(vi) A fixed point V∗ for F is said to be asymptotically stable if it is both stable and attracting.

(v) A fixed point V∗ for F is said to be unstable if it is not stable.

THEOREM 3. Let Vn+1 = F(Vn) be a discrete dynamical system with a fixed point V∗ and let A = JF(V∗) be its Jacobian matrix.

1. If ρ(A) < 1, then V∗ is asymptotically stable.

2. If ρ(A) > 1, then V∗ is unstable.

3. If ρ(A) = 1, then V∗ may be stable or unstable.

3.2. Existence of Nontrivial Fixed Solutions for the DDNFs Model

A nontrivial solution for the DNFs is a non constant solution for which the left-hand side of Equation (1) is equal to zero. For the DDNFs, it can be formulated in the following way: for a given xi on the kth layer, the DDNFs in (5) has a nontrivial fixed point if for all n ≥ 1, we have . This is satisfied when

The right-hand side of Equation (7) is a Riemann sum for

So the existence of a nontrivial fixed point for the continuous DNFs Equation (1) implies the existence of a non trivial fixed solution for the DDNFs. According to Hammerstein (1930), such a nontrivial solution exists if W(x, y) is symmetric positive definite and G satisfies G(v) ≤ μ1v + μ2, where 0 < μ1 < 1, μ2 > 0 are constants.

We will use a different approach to prove existence of nontrivial solution of DNFs. Let be a measure space. We will consider the space L1(S) of equivalent classes of integrable functions on S, endowed with the norm , where dx = μ(dx).

DEFINITION 4. A stochastic kernel W is a measurable function W : S × S → ℝ such that

1. W(x, y) ≥ 0, ∀x, y ∈ S,

2. .

DEFINITION 5. A density function f is an element of L1(S) such that f : S → ℝ such that .

DEFINITION 6. A Markov operator P on L1(S) is a positive linear operator that preserves the norm, that is, P : L1(S) → L1(S) is linear such that

1. Pf ≥ 0 if f ≥ 0 and f ∈ L1(S),

2. ∥Pf∥ = ∥f∥.

REMARK 7.

We note that f ≥ 0 (and Pf ≥ 0) represents equivalent classes of integrable functions on S that are greater or equal to 0 except on a set of measure zero and thus the inequality should be understood almost everywhere.

We also note that when an operator P satisfies the second condition above, it is sometimes referred to as a non-expansive operator.

THEOREM 8. (Corollary 5.2.1–Lasota and Mackey, 2004). Let be measure space and P : L1(S) → L1(S) be a Markov operator. If for some density function f, there is g ∈ L1(S) such that Pnf ≤ g, for all n, then

Now we use Definitions 4, 5, and 6 and Theorem 8 above to state our result on the existence of nontrivial fixed solution of DNFs.

THEOREM 9. Let . Consider a DNFs with no external stimulus [S(x) = 0]. If W(x, y) is positive and bounded, then the DNFs (and consequently the DDNFs) has at least one nontrivial fixed solution V∗.

PROOF. Let be the Borel σ-algebra on S generated by the family and μ be the associated Borel measure. A nontrivial solution V(x) for the DNFs satisfies the equation

Consider the linear operator P defined on S as

Clearly, P is a Markov operator and we have

with W1(x, y) = W(x, y) and for n ≥ 1. Moreover, by the positivity and boundedness of W, there exists M > 0 such that for a density function f, we have Pnf(x) ≤ M, ∀n ≥ 1. By the above Theorem, there exists a density f∗ such that Pf∗ = f∗. We conclude by observing that f∗(x) = G(V∗(x)) = V∗(x) for some function V∗(x) defined on Ω and thus a nontrivial solution for the DNFs and DDNFs exists. That is, V∗ is a fixed point of G.

One major limitation of the above theorem is that it only applies to positive connectivity functions W(x, y) which would exclude a wide range of DNFs, for example, competing DNFs use almost exclusively negative weight functions as well as DNFs for working memories, see for instance Zibner et al. (2011). One remedy could be to prove the existence of weak fixed solutions of (5) using iterative processes without a positivity restriction. Indeed, let Ω be a nonempty, closed and convex subset of l2, the space of infinite sequences v = (v1, v2, ⋯) such that . We recall that l2 is a Hilbert space with the inner product .

DEFINITION 10. Consider a map T : Ω → l2. Suppose there is constant C such that ∥T(v1) − T(v2)∥2 ≤ C∥v1 − v2∥2. Then

1. T is called a Lipschitz map if C > 0.

2. T is called a contraction map if 0 < C < 1.

3. T is called a non-expansive map if C = 1.

DEFINITION 11. Let T : Ω → l2 be either a contraction or a non-expansive map. A Mann-type iterative process is a difference equation on Ω defined as

THEOREM 12. Suppose Ω is a subset of l2 and W(x, y) is bounded by W0. If G(v) is Lipschitz with Lipschitz constant K such that W0C ≤ 1, then the DDNFs (5) is a Mann-type iterative process with at least one weak solution.

PROOF. Here, we denote Vn to mean Vn(X) introduced earlier. We start by rewriting certain quantities in vector and matrix form. Let d = L × M where as above, and let . Let and , where as above, we complete the vectors with zeros for Mℓ<j ≤ M. Then we have the dot product

Similarly, let and . We also have the dot product

Now we consider the matrix formed by block matrices for 1 ≤ k ≤ L. We observe that (5) is a Mann-type process written as

where T is the linear map on Ω defined as T(Vn) = Γ · G(Vn)+Sn. It follows that for Un, Vn ∈ Ω

Since by hypothesis CW0 ≤ 1, T is either a contraction or a non-expansive map. It is known (see Mann, 1953) that in this case, the Mann-type iterative process (9) has at least one weak solution V∗, that is, there exists V∗ ∈ Ω such that for all V ∈ l2.

The second equation in (9) is a generalization of Equation (7) in Quinton and Goffart (2017) and according to their description, it can be considered a predictive neural field (PNF) where T(Vn) is an internal projection corresponding to the activity required to cancel the lag of the neural field Vn for a specific hypothetically observed trajectory, by exciting the field in the direction of the motion, ahead of the current peak location, and inhibiting the field behind the peak.

3.3. Stability Analysis of Hyperbolic Fixed Solutions

To discuss stability analysis for the DDNFs in (5), we are going to separate the analysis into a single-layer system and a multiple-layers system. For simplification purposes, we consider the following notations when necessary:

If L = 1 and for any M1 > 0, we will write and .

If L > 1 and Mk = Mℓ = 1 for all 1 ≤ k, ℓ ≤ L, we will write .

3.3.1. Single-Layer DDNFs Model

Let xi ∈ Ω. Suppose that there are M neurons interconnected and connected to the neuron located at xi. Let Wij be the intensity of the connection between the neuron at xi and the neuron at xj. Let

be the weighted average rate of change of pulse emissions received or emitted by the neuron at xi, where is a nontrivial solution of Equation (5) with L = 1. Some authors like Lin et al. (2009) specify the type of connection, by dividing the connectivities or synaptic strengths Wij into a group of receptive signals called “feed backward” and a group of emission signals called “feed forward.” Here we make no such specification. Recall that . Henceforth, we fix hn = h > 0 for all n so that .

THEOREM 13. Let Ω ⊆ ℝ be a bounded region. Let M be a positive integer. Let x = (xi)1≤i≤M be a vector of M interconnected points on Ω via a connectivity function W(·, ·).

Let V∗(x) = (Vi,∗)1≤i≤M be a nontrivial fixed point of the DDNFs on Ω.

PROOF. We have . Let xi ∈ Ω and let Vi,∗ be a nontrivial fixed point of the DDNFs with one layer. Let 1 ≤ j ≤ M and xj ∈ Ω. The DDNFs with one layer in Equation (5) can be written as

and element-wise as

The function f : ℝM → ℝ is a continuously differentiable function since the function G : ℝ → ℝ is, and given 1 ≤ i, p ≤ M, we have that

where δij is the Kronecker symbol with δij = 1 if i = j and δij = 0 if i ≠ j. It follows that

We define the adjacency matrix as the Jacobian matrix A = Jf[V∗(x)] where A = (aij) with . A is a M × M matrix and let ρ(A) be its spectral radius. Therefore, we have

Since 0 < α < 1, the last equality is equivalent to

It follows that if , then we have that and consequently, V∗(x) is asymptotically stable.

REMARK 14.

The condition links the size of the domain Ω and other parameters such as the rate of change of the pulse emission function G, the connectivity function W(x, y) and the number of connected neurons M. Indeed, . Therefore, achieving requires . This means that to ensure stability of the fixed solution V∗(x), it is enough to have weighted rates of change of pulse emission be bounded by 1 for each neuron. This condition is obviously true if the neuron at xi is not connected with the neuron at xj since Wij would be zero. In fact the latter corresponds to the trivial fixed solution V∗(x) = 0.

3.3.2. Special Case of Single Layer Model With a Heaviside Pulse Emission Function

Suppose the pulse emission function G(x) is the Heaviside function G2(v, v0). There are two possible approaches to discuss the stability of the nontrivial solution. First, we observe that the derivative of G2(v, v0) exists in the sense of distribution and is given as where δ(v0) is the Dirac measure at v0. It can be written as if v ≠ v0 and if v = v0. This means that for a nontrivial solution, the adjacency matrix A defined above is reduced to the diagonal matrix A = diag(α). It follows that ρ(A) = α < 1 and the nontrivial solution V∗(x) is asymptotically stable. Another approach is to note that Equation (5) with one layer (L = 1) can be written as

where

with

Moreover, we have

Suppose that for all n ∈ ℕ and for all x ∈ Ω, Sn(x) and W(x, y) are bounded by say S and W, respectively. Let Ym = (1 − α)[S + |Ω|W]. For all 1 ≤ i ≤ M, for xi ∈ Ω, and n ∈ ℕ we have |Yi,n+1| ≤ Ym, and

This shows that exists and defines the state of the field overtime. If , then the field settles to an inhibitory phase overtime, and if , the field settles in an excitatory phase overtime. In general Si,n = ν+Ui,n where ν is a negative real number referred to as the resting level that ensures that the DNFs produce no output in the absence of external input Ui,n. If Ui,n ≡ 0 and V0(x) < 0 for all x ∈ Ω, then , since λi,n−k = 0 for all k = 0, ⋯, n and for all 1 ≤ i ≤ M. Hence we will have , see section 3.5.1 below for an illustration.

3.4. Multiple-Layers DDNFs Model

Let 1 ≤ k, m ≤ L. Consider the DDNFs with L layers given above and let xi, xp on the kth layer.

Consider a fixed point solution . Given a layer 1 ≤ k ≤ L and a neuron at position xi on it, there are two sources of variability for the potential : firstly, the variability due to the Mk neurons on the kth layer connected to the neuron at position xi, and secondly, the variability due to the neurons located on possibly two layers (k − 1) and (k + 1) connected to the kth layer. Therefore, let 1 ≤ m ≤ L and let xq be a point on the mth layer. By the chain rule, we have

where the variability on the kth layer is

From Equation (7), we obtain the variability due to layers k − 1 and k + 1:

Let . We recall that . Now we consider a M × M super-adjacency matrix , where A(k,m) is a Mk × Mm block matrix defined as , where with

and

Consequently, A(k,m) = 0 if m ∉ Lk, where 0 represents the zero matrix. Therefore, the super-adjacency matrix can be written as

Clearly, A is a block tridiagonal matrix that reduces to a block diagonal matrix if the pulse emission rate function G is the Heaviside function and to single M × M diagonal matrix if L = 1. There is a rich literature on the subject of tridiagonal matrices and the research is still on-going. There is no close form formula for the eigenvalues of A since they are not obvious to calculate, except for some special cases.

3.4.1. Special Case: Single Neuron Multiple-Layers DDNFs

It special case that is worth mentioning since all L layers have exactly one neuron and they are interconnected. In fact in this case, Mk = 1, βk = |Ω| for 1 ≤ k ≤ L, and the super adjacency matrix reduces to an L × L tridiagonal matrix

where from Equations (11) and (12), one has

We observe that K(k,k) is the weighted rate of change of the pulse emission function on the kth layer, and K(k,k+1) can be regarded as the transitional weighted rate of change of the pulse emission function from layer k to layer k + 1. Likewise, K(k−1,k) is the transitional weighted rate of change of the pulse emission function from layer k − 1 to layer k. From Molinari (2008), the eigenvalues of A are given by the equation

Suppose further that a1 = a2 = ⋯ = aL = a, b1 = b2 = ⋯, bL−1 = b, and c1 = c2 = ⋯cL−1 = c. This means that the weighted rates of change of the pulse emission function for 1 ≤ k ≤ L, for 1 ≤ k ≤ L − 1, and for 2 ≤ k ≤ L. From Kulkarni et al. (1999), the eigenvalues of A will given by

Now for 1 ≤ k ≤ L, put

We therefore have the following result.

THEOREM 15. Suppose we are in the presence of a DDNFs with L layers with M = 1 point each, interconnected via a positive connectivity function W and a rate pulse emission rate function G whose rate of change is identical on all layers. Then

1. The fixed point V∗(X) is asymptotically stable if and only if .

2. The fixed point V∗(X) is unstable if and only if .

3. The fixed point V∗(X) is maybe stable or unstable if .

PROOF. By hypothesis, a = α + (1 − α)|Ω|K0, b = [α + (1 − α)|Ω|K0]|Ω|K1, and c = [α + (1 − α)|Ω|K0]|Ω|K2, for some K0, K1, K2 > 0. For 1 ≤ k ≤ L, the eigenvalues are given as

From Thereom 3, the proof is complete.

REMARK 16.

1. It is worth observing that in case L = 1 and M = 1, then K1 = K2 = 0 and thus μ1 = α + (1 − α)|Ω|K0. We see that |μ1 < 1 if |Ω|K0 < 1 yielding the condition obtained in the one-layer-model.

2. We also note that in fact and therefore the stability condition links all the parameters of system.

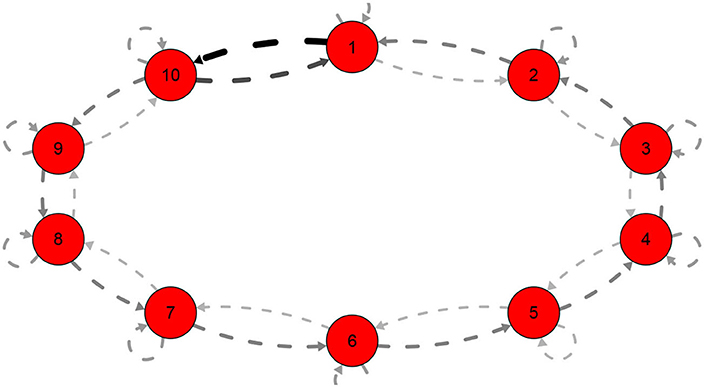

3. This special cases is also important in that we can imagine a large number of neurons supposedly placed on individual layers and interconnected in a forward or backward manner only and each receiving external input. In case the first and last neurons are connected so that the system forms a weighted closed graph, like in Figure 4, where the thickness represents the weights.

Then the super-adjacency matrix given as

According to Molinari (2008), the eigenvalues are given by

3.4.2. Special Case: Single Neuron Two-Layer DDNFs

We now discuss a single neuron two-layer DDNFs. In this case, we still have Mk = 1 for k = 1, 2 and L = 2. It follows that the super-adjacency matrix is given as where from Equations (11) and (12), we have

Let

We have the following stability result.

THEOREM 17. Consider a two-layer DDNFs with one neuron each. Then the nontrivial solution V∗(X) is asymptotically stable if and if the following conditions are satisfied:

1. 0 < θ < 1,

2. μ < 1,

3. .

PROOF. By the determinant-trace analysis, see for instance Elaydi (2007), it is enough to prove that the above conditions are equivalent to

The determinant of the super-adjacency matrix is

Also

Therefore since θ < 1 and μ < 1, we have that det(A) < 1. Let . The discriminant of P(α) is . Therefore P(α) is positive if Δ < 0 with θ > 0, that is, with θ > 0.

REMARK 18.

1. We note that the inequality θ < 1 must be strict, otherwise the third condition in the theorem would be false. To see why, observe that θ = 1 ⇒ L = 1 thus K(2,2) = 0. Hence and μ = αK(1,1). Therefore, , that is, which is impossible since the latter would be equivalent to saying ((α − |Ω|)K(1,1))2 < 0.

2. We observe that the condition 0 < θ < 1 requires |Ω|2K(1,2)K(2,1) < 1. What is striking about it is that is achieved if , similarly to Remark 14 above.

3.5. Simulations

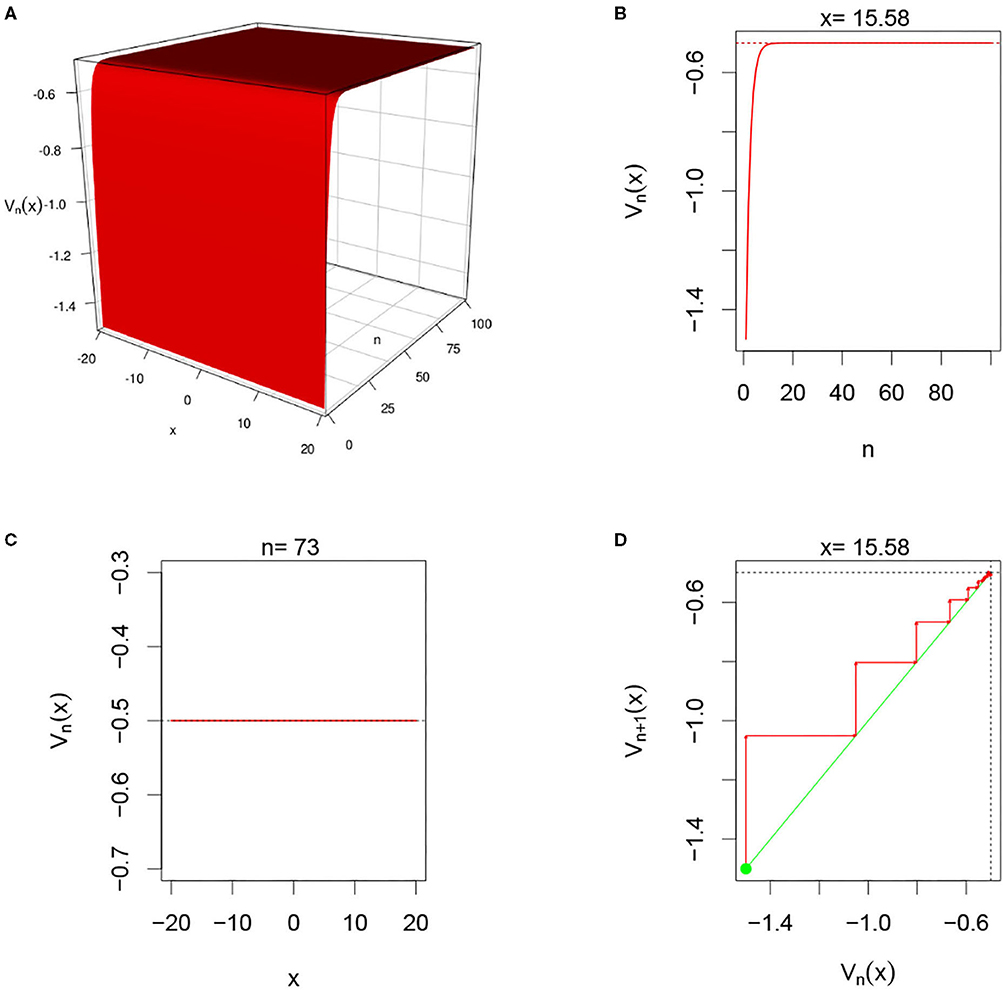

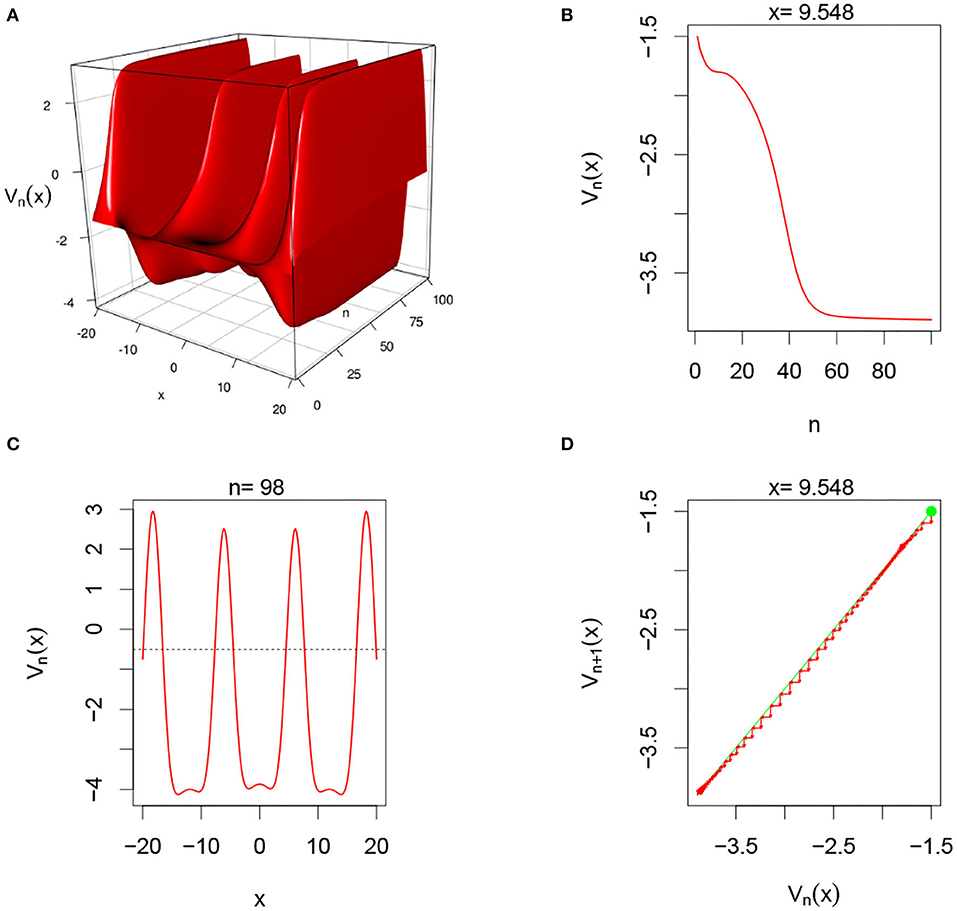

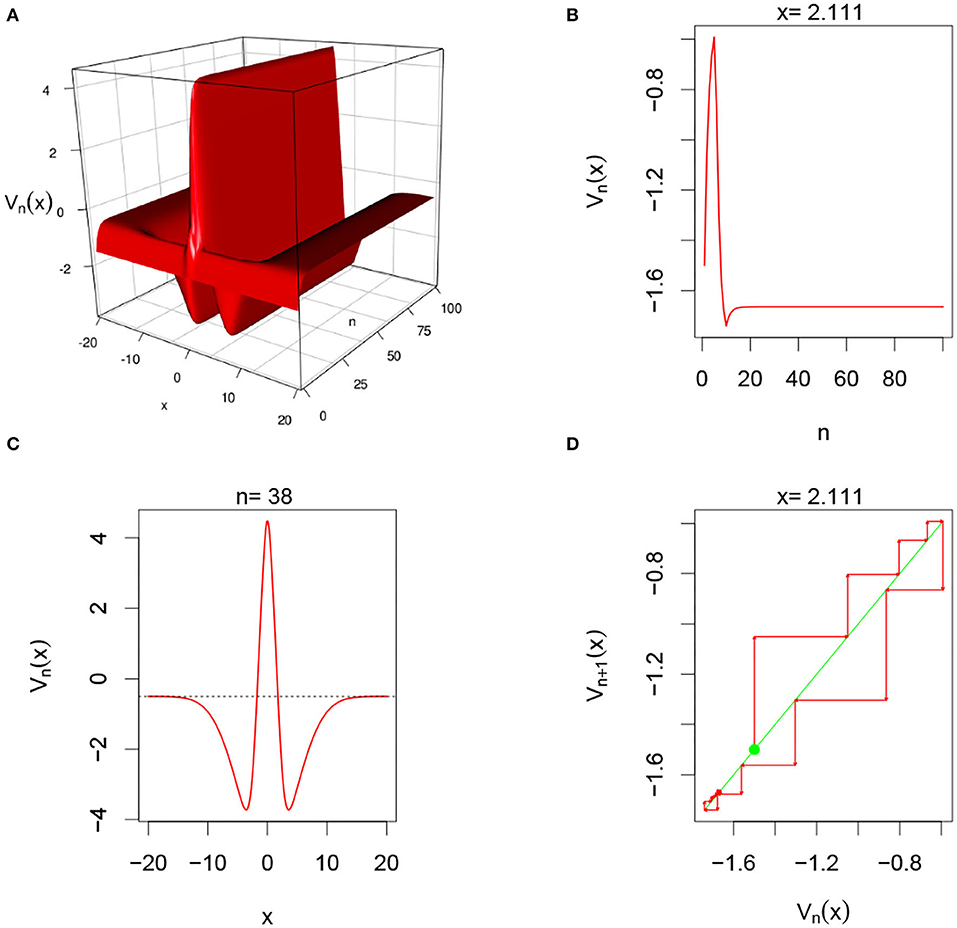

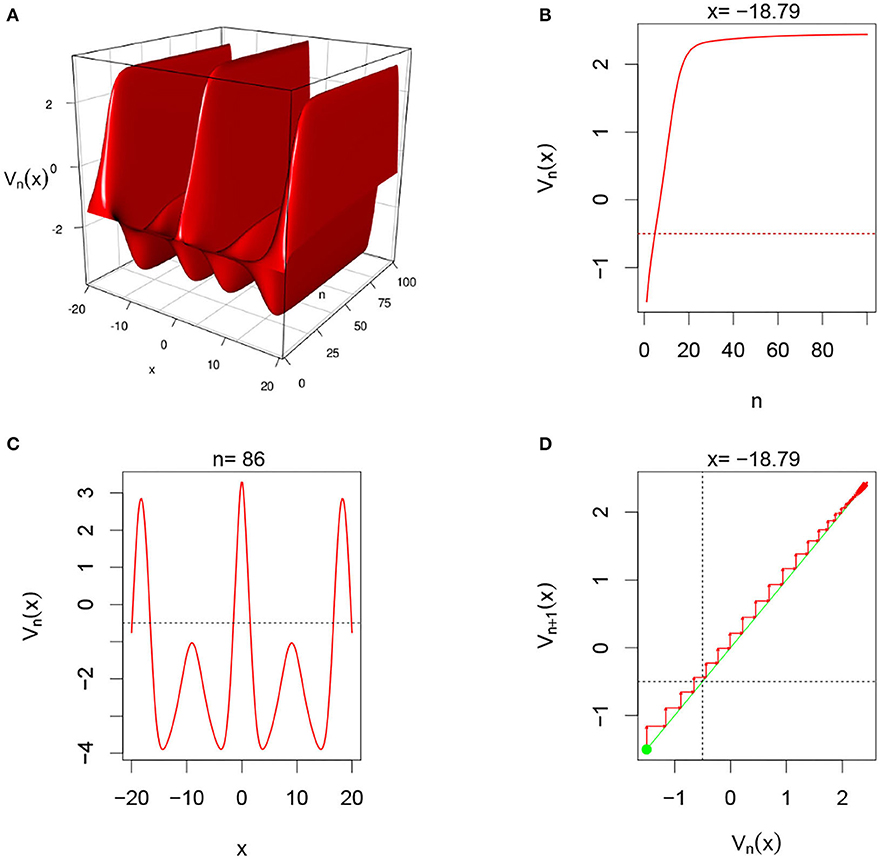

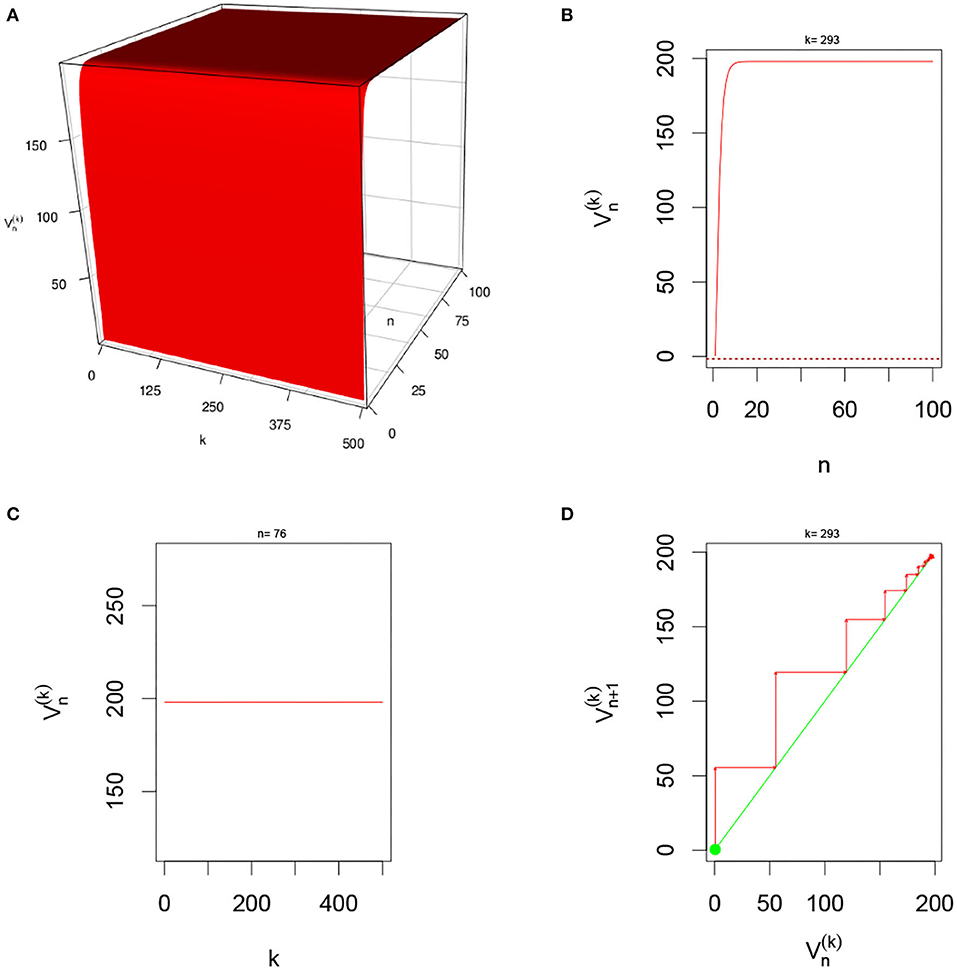

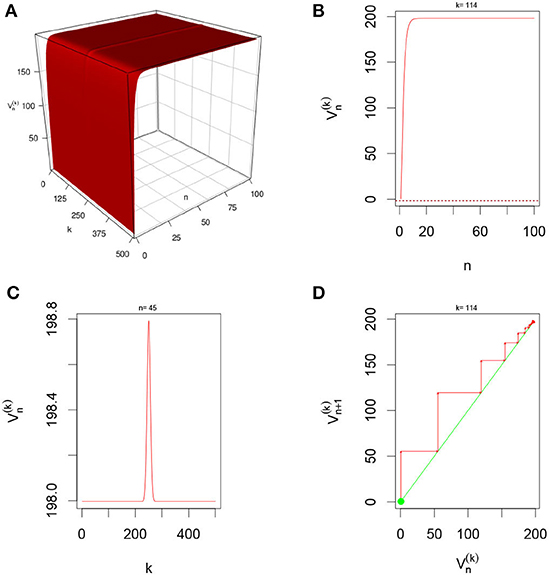

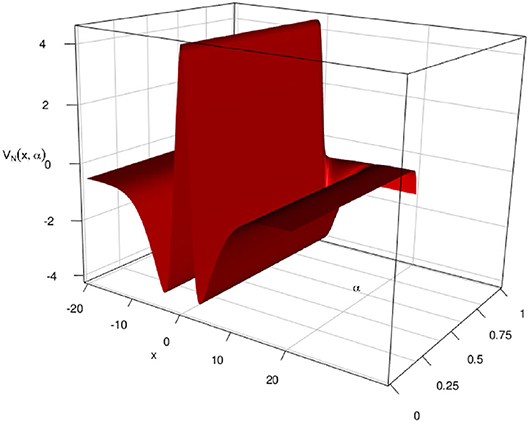

There are multiple parameters that affect the stability of a fixed point solution to the DDNFs: the choice of the kernel function W(x, y), the pulse emission rate function G, parameters such the size of the field |Ω|, the time scale function ϕ(h), and the number of points per layer M, and the length of time N. In these simulations, we will consider the Ω = [−20, 20], h = 0.8, N = 100, M = 200, and the , with , and σ2 = 4.5. We select a resting phase ν = −0.5. To initialize our dynamical system, we will choose Vi, 0 = −1.5 for i = 1, 2, ⋯, M. In Figures 5–8, below, (a) is the three dimension representation of Vn(x): = V(x, n), (b) represents Vn(x) as a function of n for a given x value, (c) represents Vn(x) as function of x for a given n value, and (d) represents the cobweb diagram for a given x value, namely a plot of Vn+1(x) vs. Vn(x) for which the green dot represents the starting point V0(x) = −1.5 and the red arrows connecting the points with coordinates [Vn(x), Vn(x)] and [Vn(x), Vn+1(x)] to show the evolution of the dynamical system overtime. Convergence occurs when the arrows stop evolving, and oscillations occurs when they circle around indefinitely. In the multi-layer case, we let L = 250.

Figure 5. Here Ui,n = 0 and G(v) = G1(v). (A–D) We observe from all graphs that Vn(x) quickly settles to the resting level ν = −0.5 confirming the theoretical results in section 3.3.2.

3.5.1. Single-Layer With Heaviside Function and No External Input

In this simulation, we consider single-layer DDNFs with Ui,n = 0 with a Heaviside pulse emission function, see Figure 5 below.

3.5.2. Single-Layer With Sigmoid Function and No External Input

In this simulation, we consider single-layer DDNFs with Ui,n = 0 but with a sigmoid pulse emission function, see Figure 6 below.

Figure 6. Here Ui,n = 0 and G(v) = G2(v). From (A), we observe that system alternating between inhibitory and excitatory phases. (B,D) Show that for fixed x = 9.548, the system converges overtime. In (C), we clearly see the oscillatory regime of the system in the space domain for fixed n = 98.

3.5.3. Single-Layer With Heaviside Function With Gaussian External Input

In this simulation, we consider single-layer DDNFs with a Heaviside Pulse emission function with a Gaussian external input , see Figure 7 below.

Figure 7. Here and G(v) = G1(v). From (A), we observe that system has an excitatory phase around 0 and an inhibitory phase farther away. (B,D) Show that for fixed x = 2.111, the system converges overtime. In (C), we clearly observe a high level excitation occurring around 0, and the system settling back to its resting phase farther away from 0 for fixed n = 38.

3.5.4. Single-Layer With Sigmoid Function With Gaussian External Input

In this simulation, we consider single-layer DDNFs with a Sigmoid Pulse emission function with a Gaussian external input , see Figure 8 below.

Figure 8. Here and G(v) = G2(v). This case is similar to the second case above. From (A), we observe that system also alternates between inhibitory and excitatory phases. (B,D) Show that for fixed x = −18.79, the system converges overtime. In (C), we clearly the oscillatory regime of the system in the space domain for fixed n = 86. The system in this case is more frequently inhibitory than excitatory.

3.5.5. Multiple-Layer With Sigmoid Function With No External Input

In this simulation, we consider Multiple-layer DDNFs with a Sigmoid pulse emission rate function with no external input, Ui,n = 0, see Figure 9 below.

Figure 9. Here Ui,n = 0 and G(v) = G2(v) with L = 200 and M = 1 neuron per layer. From (A), we observe that system quickly excitatory and settles into constant state overtime. (B,D) Show that for fixed k = 293, the system converges overtime. In (C), we clearly see the constant state for n = 76.

3.5.6. Multiple-Layer With Sigmoid Function With Gaussian External Input

In this simulation, we consider Multiple-layer DDNFs with a Sigmoid pulse emission rate function with a Gaussian external input, Ui,n = 0, see Figure 10 below.

Figure 10. Here and G(v) = G2(v) with L = 200 and M = 1 neuron per layer. From (A), we observe that system is quickly excitatory and settles into constant “Gaussian” state overtime. (B,D) Show that for fixed k = 114, the system converges overtime. In (C), we clearly see the “Gaussian” state for n = 45 with peaks located at around L = 250.

3.5.7. Effect of α

In this simulation, we consider VN(x, α) where N = 100 for different values of α. Since the convergence to the fixed solution is quick, we expect VN(x, α) to be independent of α at n = N = 100, in that any cross-section of the three dimensional plot VN(x, α) should be the same. This is shown in Figure 11 below for a G(v) = G1(v) and .

Figure 11. Here and G(v) = G2(v) with L = 1 and M = 200 neuron per layer. We see that the cross-sections are as in Figure 7 above and the “flaps” at the end represent the case where α = 1, which amounts to the constant case.

4. Discussion

In this paper, we have proposed a discrete model for dynamical neural fields. We have proposed another proof of the existence of nontrivial solutions for dynamic neural fields. We have discussed its stability of nontrivial solutions of dynamic neural field for some particular cases. The simulations we propose capture very well the notion of stability on dynamic neural field. Below are some observations drawn from this analysis.

1. We observe that the cases of no external input Ui,n with one layer and multiple neurons and multiple layers with one neuron each are very similar, albeit the shape of the potential function . In fact, this is not surprising since multiple layers with one neurons each amount to one layer with neurons connected like in a graph.

2. An important observation is that of the stability conditions depend on the knowledge of a nontrivial solution V∗ for the DDNFs. Two things to note about V∗(X) are:

(a) We can not obtain V∗(X) in a close form. We may however rely on estimates like the one proposed in Kwessi (2021). Even in the latter case, using an estimate may proved delicate because of limited accuracy.

(b) If one cannot use a true value for V∗(X), one may use the properties of the pulse emission functions such as the Heaviside or sigmoid functions that are in most cases in applications bounded.

3. We note the size of the domain Ω is an important parameter for the shape of .

4. As for the types of kernels, we note that Gaussian and Laplacian kernel tends to produce similar results that are sometimes very different from those produced by hyperbolic tangent kernels.

5. We note that the choice of α = e−h is similar to an Euler forward discretization with where dt ≪ τ. This choice is made for simplification purposes and also out of an abundance of caution due to the fact for certain one-dimension systems, the Euler forward discretization have been shown not to always preserve the true dynamics from the ordinary differential equation, see for instance Kwessi et al. (2018).

6. Another important note is that the parameter α = 1 was not found to be a bifurcation parameter, thus the bifurcation diagram will not be given for sake of simplicity.

7. We acknowledge that in the two special cases discussed for multiple-layers DDNFs, so far, at least theoretically, the results apply only to positive connectivity functions W(x, y) because we rely on results previously established in the current literature. Simulations however suggest that they may also extend to negative connectivity functions and it would a worthwhile exercise to see how to establish these results theoretically.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author/s.

Author Contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Amari, S.-I. (1977). Dynamics of pattern formation in lateral-inhibition type neural fields. Biol. Cybern., 27, 77–87. doi: 10.1007/BF00337259

Beim, P. G., and Hutt, A. (2014). Attractor and saddle node dynamics in heterogeneous neural fields. EPJ Nonlin. Biomed. Phys. EDP Sci. 2:2014. doi: 10.1140/epjnbp17

Beurle, R. L. (1956). Properties of a mass of cells capable of regenerating pulses. Philos. Trans. R. Soc. Lond. B 240. 55–94.

Bicho, E., Louro, L., and Erlhangen, W. (2010). Integrating verbal and non-verbal communication in adynamic neural field for human-robot interaction. Front. Neurorobot. 4:5. doi: 10.3389/fnbot.2010.00005

Bicho, E., Mallet, P., and Schöner, G. (2000). Target representation on an autonomous vehicle with low-levelsensors. Int. J. Robot. Res. 19, 424–447. doi: 10.1177/02783640022066950

Camperi, M., and Wang, X.-J. (1998). A model of visuospatial short-term memory in prefrontal cortex: recurrent network and cellular bistability. J. Comput. Neurosci. 4, 383–405. doi: 10.1023/A:1008837311948

De Domenico, M., Solé-Ribalta, A., Cozzo, E., Kivelä, M., Moreno, Y., Porter, M. A., et al. (2013). Mathematical formulation of multilayer networks. Phys. Rev. X 3:041022. doi: 10.1103/PhysRevX.3.041022

Durstewitz, D. (2017). Advanced Data Analysis in Neuroscience. Bernstein Series in Computational Neuroscience. Cham: Springer.

Durstewitz, D., Seamans, J. K., and Sejnowski, T. J. (2000). Neurocomputational models of working memory. Nat. Neurosci. 3(Suppl.), 1184–1191. doi: 10.1038/81460

Erlhagen, W., and Bicho, E. (2006). The dynamics neural field approach to cognitive robotics. J. Neural Eng. 3, R36–R54. doi: 10.1088/1741-2560/3/3/R02

Erlhagen, W., and Schöner, G. (2001). Dynamic field theory of movement preparation. Psychol. Rev. 109, 545–572. doi: 10.1037/0033-295x.109.3.545

Ermentrout, G. B., and Cowan, J. D. (1979). A mathematical theory of visual hallucination patterns. Biol. Cybern. 34, 137–150. doi: 10.1007/BF00336965

Hammerstein, A. (1930). Nichtlineare integralgleichungen nebst anwendungen. Acta Math. 54, 117–176. doi: 10.1007/BF02547519

Jin, D., Qin, Z., Yang, M., and Chen, P. (2021). A novel neural modelwith lateral interactionfor learning tasks. Neural Comput. 33, 528–551. doi: 10.1162/neco_a_01345

Kulkarni, D., Scmidt, D., and Tsui, S. K. (1999). Eigen values of tridiagonal pseudo-toeplitz matrices. Linear Algeb. Appl. 297, 63–80.

Kwessi, E. (2021). A consistent estimator of nontrivial stationary solutions of dynamic neural fields. Stats 4, 122–137. doi: 10.3390/stats4010010

Kwessi, E., and Edwards, L. (2020). “Artificial neural networks with a signed-rank objective function and applications,” in Communication in Statistics–Simulation and Computation. 1–26. doi: 10.1080/03610918.2020.1714659

Kwessi, E., Elaydi, S., Dennis, B., and Livadiotis, G. (2018). Nearly exact discretization of single species population models. Nat. Resour. Model. 31:e12167. doi: 10.1111/nrm.12167

Lasota, A., and Mackey, M. C. (2004). Chaos, Fractals, and Noise. Applied Mathematical Sciences. New York, NY: Springer-Verlag.

Lin, H.-T., and Lin, C.-J. (2003). A study on sigmoid kernels for svm and the training of non-psd kernels by smotype methods. Neural Comput. 13, 2119–2147.

Lin, K. K., Shea-Brown, E., and Young, L.-S. (2009). Spike-time reliability of layered neural oscillator networks. J. Comput. Neurosci. 27, 135–160. doi: 10.1007/s10827-008-0133-3

Molinari, L. G. (2008). Determinant of block tridiagonal matrices. Linear Algeb. Appl. 429, 2221–2226. doi: 10.1016/j.laa.2008.06.015

Neumann, K., and Steil, J. J. (2011). “Batch intrinsic plasticity for extreme learning machines,” in International Conference on Artificial Neural Networks (Berlin: Springer), 339–346.

Nunez, P. L., and Srinivasan, R. (2006). Electric Fields of the Brain: The Neurophysics of EEG, 2nd Edn. Oxford University Press. doi: 10.1093/acprof:oso/9780195050387.001.0001

Perone, A., and Simmering, V. R. (2019). Connecting the dots: finding continuity across visuospatial tasks and development. Front. Psychol. 2019:1685. doi: 10.3389/fpsyg.2019.01685

Pozo, K., and Goda, Y. (2010). Unraveling mechanisms of homeostatic synaptic plasticity. Neuron 66, 337–351. doi: 10.1016/j.neuron.2010.04.028

Quinton, J. C., and Goffart, L. (2017). A unified dynamic neural field model of goal directed eye-movements. Connect. Sci. 30, 20–52. doi: 10.1080/09540091.2017.1351421

Schmidt, B. K., Vogel, E. K., Woodman, G. F., and Luck, S. J. (2002). Voluntary and automatic attentional control of visual working memory. Percept. Psychophys. 64, 754–763. doi: 10.3758/bf03194742

Simmering, A. R., Schutte, V. R., and Spencer, J. P. (2008). Generalizing the dynamic field theory of spatial cognition across real and developmental time scales. Brain Res. 1202, 68–86. doi: 10.1016/j.brainres.2007.06.081

Strub, C., Schöner, G., Wörgötter, F., and Sandamirskaya, Y. (2017). Dynamic neural fields with intrinsic plasticity. Front. Comput. Neurosci. 11:74. doi: 10.3389/fncom.2017.00074

Sussilo, D., and Barack, O. (2013). Opening the black box: low-dimensional dynamics in high-dimensional recurrent neural networks. Neural Comput. 25, 626–649. doi: 10.1162/NECO_a_00409

Tass, P. (1995). Cortical pattern formation during visual hallucinations. J. Biol. Phys. 21, 177–210.

Wijeakumar, S., Ambrose, J. P., Spencer, J. P., and Curtu, R. (2017). Model-based functional neuroimaging using dynamic neural fields: an integrative cognitive neuroscience approach. J. Math. Psychol. 76(Pt B), 212–235. doi: 10.1016/j.jmp.2016.11.002

Williams, R. J., and Zipser, D. (1990). A learning algorithm for continually running fully recurrent neural networks. Neural Comput. 1, 256–263.

Wilson, H. R., and Cowan, J. D. (1972). Excitatory and inhibitory interactions in localized populations ofmodel neurons. Biophys. J. 12, 1–24. doi: 10.1016/S0006-3495(72)86068-5

Keywords: dynamic neural fields, discrete, stability, simulations, neurons

Citation: Kwessi E (2021) Discrete Dynamics of Dynamic Neural Fields. Front. Comput. Neurosci. 15:699658. doi: 10.3389/fncom.2021.699658

Received: 23 April 2021; Accepted: 02 June 2021;

Published: 08 July 2021.

Edited by:

Feng Shi, Amazon, United StatesReviewed by:

Jeremy Fix, CentraleSupélec, FranceFlora Ferreira, School of Sciences, University of Minho, Portugal

Copyright © 2021 Kwessi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Eddy Kwessi, ekwessi@trinity.edu

Eddy Kwessi

Eddy Kwessi