Abstract

We present strongly convergent explicit and semi-implicit adaptive numerical schemes for systems of semi-linear stochastic differential equations (SDEs) where both the drift and diffusion are not globally Lipschitz continuous. Numerical instability may arise either from the stiffness of the linear operator or from the perturbation of the nonlinear drift under discretization, or both. Typical applications arise from the space discretization of an SPDE, stochastic volatility models in finance, or certain ecological models. Under conditions that include montonicity, we prove that a timestepping strategy which adapts the stepsize based on the drift alone is sufficient to control growth and to obtain strong convergence with polynomial order. The order of strong convergence of our scheme is (1 − ε)/2, for ε ∈ (0,1), where ε becomes arbitrarily small as the number of finite moments available for solutions of the SDE increases. Numerically, we compare the adaptive semi-implicit method to a fully drift-implicit method and to three other explicit methods. Our numerical results show that overall the adaptive semi-implicit method is robust, efficient, and well suited as a general purpose solver.

Similar content being viewed by others

1 Introduction

Consider the d-dimensional semi-linear stochastic differential equation (SDE) of Itô type

where T > 0, \(A\in \mathbb {R}^{d\times d}\), \(f:\mathbb {R}^{d}\to \mathbb {R}^{d}\), \(g:\mathbb {R}^{d}\to \mathbb {R}^{d\times m}\), and W is an m-dimensional Wiener process. We suppose that the drift coefficient f and the diffusion coefficient g together satisfy polynomial bounds and a monotone condition permitting g to grow superlinearly as long as that growth is countered sufficiently strongly by f. Global Lipschitz bounds are not required. For example, consider f(x) = −x2 with g(x) = x3/2 or f(x) = −x5 with g(x) = x2. Such applications arise in finance: for example, the Lewis stochastic volatility model [17] which has a polynomial diffusion coefficient of order 3/2. It was shown in [11] that the explicit Euler-Maruyama method with constant stepsize fails to converge in the strong sense to solutions of (1) if either the drift or the diffusion coefficients grow superlinearly. Also, as noted in [4], fixed stepsize schemes may need to use very small stepsizes when the SDE being solved is stiff. We address these issues here by a semi-implicit scheme with adaptive timestepping.

In [14], a class of timestepping strategies, referred to as admissible, was motivated for the numerical discretization of SDEs where the drift satisfies a one-sided Lipschitz coefficient and the diffusion satisfies a global Lipschitz bound. An admissible strategy uses the present value of the numerical trajectory to select the next timestep to avoid spuriously large drift responses. This is distinct from the error control approach in (for example) [4, 5, 13].

Timesteps selected by an admissible strategy are subject to upper and lower limits \(h_{\max \limits }\) and \(h_{\min \limits }\) in a fixed ratio ρ, with \(h_{\max \limits }\) serving as a convergence parameter and \(h_{\min \limits }\) serving to ensure that the simulation completes in a reasonable time. If the strategy attempts to select a timestep smaller than \(h_{\min \limits }\), then a backstop method is applied instead over a single step of length \(h_{\min \limits }\). It was proved in [14] that the explicit Euler-Maruyama method over a random mesh generated by an admissible timestepping strategy is strongly convergent in \(h_{\max \limits }\) with order 1/2. The proof relied upon pth-moment bounds on the supremum of solutions of the underlying SDE. Note also the adaptive approach in [6] which is consistent with the admissibility condition of [14].

Here, we examine more general SDEs and consider simultaneously both explicit and semi-implicit Euler-Maruyama schemes. Due to the monotone condition on the drift and diffusion terms, our analysis must contend with only a finite number of available bounded SDE moments (see for example the estimates provided by parts (i) and (ii) of Lemma 4). Unlike in [14], we characterize precisely the backstop scheme and integrate it into the analysis in a way that is compatible with taking a random number of timesteps. In this way, we show that a class of admissible timestepping strategies depending only on the drift response can be used to ensure that both the explicit and semi-implicit adaptive Euler-Maruyama schemes are strongly convergent to solutions of (1) with order (1 − ε)/2, in the sense that for any ε ∈ (0,1), there exists Cε > 0, independent of \(h_{\max \limits }\) such that

where \(\widetilde Y_{N}\) is value of the numerical scheme at time T, and ∥⋅∥ is the l2 norm. The reduction in the order of strong convergence in our main result (when compared to that in [14]) is a direct consequence of the loss of global Lipschitz continuity in the diffusion coefficient. If we reimpose global Lipschitz continuity on the diffusion, we recover a strong convergence order of 1/2, and if we decompose the drift of (1) so that A = 0, we recover the main result of [14]: see Remark 6 for more details of this.

The nature of the monotone condition is such that a timestepping scheme which is admissible, and which can therefore successfully control the drift response, will also be sufficient to control the diffusion response. It is well documented that the structure of the drift function (both linear and nonlinear) under discretization may have local dynamics that render the stability of equilibria vulnerable to the effects of perturbation, either stochastic or numerical [1, 3, 7, 8, 11].

Our method handles stiffness leading to potential instability in the discretization in two distinct ways. Where there is a classic (deterministic) stiff linear system, we are able to treat this term implicitly without sacrificing numerical efficiency. Adaptive timestepping then treats nonlinear stability under stochastic perturbation. Thus, we deal with each source of potential instability separately, as would a stochastic IMEX-type method. The use of an implicit approach to deal with the linear part of the drift avoids any consideration of potential interactions between it and the diffusion or between it and the nonlinear part of the drift. Note that the decomposition of the drift into the form AX(t) + f(X(t)) is determined by the modeller, and when A = 0 the convergence analysis in this article applies equally to a fully explicit method if desired.

The literature already contains numerical schemes with fixed stepsizes that converge strongly to solutions of SDEs with coefficients that satisfy local Lipschitz and monotone conditions. Several of these extend the idea of taming as introduced in [12], which rescales the functional response of the drift coefficient in the scheme; they do so by allowing the entire stochastic Euler map to be rescaled by some combination of drift and diffusion responses. For example, see the balanced method introduced in [30] and the variant presented in [25], which are both strongly convergent in this setting. The projected Euler method of [2] handles runaway trajectories by projecting them back onto a ball of radius inversely proportional to the step size; hence, the authors control moments of the numerical solution. It was shown in [24] that a drift-implicit discretization could also ensure strong convergence in our setting. Finally we highlight [10], which treats SDEs and SPDEs with non-globally monotone coefficients.

In Section 5, we compare the numerical performance of a selection of these methods to that of the adaptive scheme presented in this article. We note this selection cannot be exhaustive and there are a growing number of variations; see for example [9, 22, 23, 26, 29, 31]. However, our examples illustrate some of the drawbacks of fixed-step explicit schemes (when linear stability is an issue) and where for fixed, relatively large h, the taming perturbation which imposes convergence may change the dynamics of the solution. Compared to the fixed-step explicit methods, our numerical results show that the semi-implicit adaptive method gives consistently reliable numerical convergence results. It is also more efficient than the drift-implicit scheme for SODEs, though this comparison is less clear for the SPDE example.

The structure of the article is as follows. In Section 2, we describe the monotone condition and polynomial bounds that must be satisfied by f and g, and provide the pth-moment bounds satisfied by the solutions of (1) within that framework. In Section 3, we introduce the semi-implicit Euler-Maruyama method that, applied stepwise over a random mesh and combined with an appropriate backstop method, is the focus of the article. A mathematical definition for meshes produced by admissible timestepping strategies is provided, and conditional moment bounds for the SDE solution associated with these meshes are derived. In Section 4, we present our main convergence result and state several technical lemmas, with proofs provided in Section 6. In Section 5, we carry out a comparative numerical investigation of strongly convergent schemes from the selection discussed above.

2 Setting

Throughout the paper, \(\mathbb {N}\) denotes the set {0,1,2,…}, ∥⋅∥ denotes the l2 norm of a d-dimensional vector, ∥⋅∥F the Frobenius norm of a d × m-dimensional matrix, and for any \(x\in \mathbb {R}^{d}\) and i = 1,…,m, gi(x) denotes the ith column of the diffusion coefficient matrix g(x). For \(a,b\in \mathbb {R}\) let a ∨ b denote \(\max \limits \{a,b\}\). For any \(A\in \mathbb {R}^{d\times d}\), we let \(A^{1/2}\in \mathbb {C}^{d\times d}\) denote the matrix such that (A1/2)2 = A. Let \((\mathcal {F}_{t})_{t\geq 0}\) be the natural filtration of W. To ensure the existence of a unique strong solution for (1) (in the sense of [21, Definition 2.2.1]) over the interval [0,T], it suffices to place local Lipschitz and monotone conditions on f and g:

Assumption 1

For each R > 1 there exists LR > 0 such that

for \(x,y\in \mathbb {R}^{d}\) with ∥x∥∨∥y∥≤ R, and there exists c ≥ 0 such that for some p ≥ 0

We also require a set of polynomial bounds on the derivatives of f and g, and hence on f and g themselves. The minimum value of p in (2) required to prove our main strong convergence result depends on the order of these bounds.

Assumption 2

Suppose \(f:\mathbb {R}^{d}\to \mathbb {R}^{d}\) and \(g:\mathbb {R}^{d}\to \mathbb {R}^{d\times m}\) are continuously differentiable with derivatives bounded as follows: for some cj,γ0,γ1 ≥ 0; j = 1,…,4, we have

where \(Df(x)\in \mathbb {R}^{d\times d}\) is the matrix of partial derivatives of f, and \(Dg_{i}(x)\in \mathbb {R}^{d\times d}\) is the matrix of partial derivatives of the ith column of g, and

We require that some of the moments of the solutions of (1) are bounded over the interval [0,T]. Furthermore, (2) in Assumption 1 implies (see, for example, Tretyakov & Zhang [30]) that there exists \(c^{\prime }\geq 0\) such that

This is a special case of Khasminskii’s condition using the Lyapunov-type function V (x) = 1 + ∥x∥2, and it guarantees the existence of a unique strong solution of (1) over [0,T] for any \(T<\infty \) (see [21, Theorem 2.3.5]), while also ensuring pth-moment bounds as follows:

Lemma 1

Let (X(t))t∈[0,T] be the unique solution of (1). Suppose that (5) holds for some p ≥ 2 and (4) in Assumption 2 holds, then there exists \(M_{p,T}<\infty \) such that

Proof

The proof of (6) follows from [23, Lemma 4.2], since the bound on g provided by (4) implies Eq. (4.2) in that article, which we reproduce here as

with r = 2γ1 + 2. □

To ensure sufficiently many bounded moments of the form (6) for our analysis to work, we now impose a lower bound on the value of p in (2) that depends on the order of the polynomial bounds on f and g. This bound is associated with a tolerance parameter ε which then appears in the the rate of strong convergence in Theorem 6.

Assumption 3

Suppose that (2) in Assumption 1 holds with

where γ0 and γ1 are as required in Assumption 2, and \(\mathbb {N}\setminus \{0\}\ni q>1-\log _{2}\varepsilon \), where ε ∈ (0,1) is a fixed tolerance parameter.

Finally, note that the analysis in this article is also valid if the initial vector is random, \(\mathcal {F}_{0}\)-measurable, and \(\mathbb {E}\|X(0)\|^{p}<\infty \).

3 An adaptive semi-implicit Euler scheme with backstop

The adaptive timestepping scheme under investigation in this article is based upon the semi-implicit Euler-Maruyama scheme over a random mesh \(\{t_{n}\}_{n\in \mathbb {N}}\) on the interval [0,T] given by

where △Wn+ 1 := W(tn+ 1) − W(tn), and \(\{h_{n}\}_{n\in \mathbb {N}}\) is a sequence of positive random timesteps and \(\{t_{n}:={\sum }_{i=1}^{n}h_{i-1}\}_{n\in \mathbb {N}\setminus \{0\}}\) with t0 = 0. For the setting described in Section 2, we show that, in order to ensure strong convergence with order (1 − ε)/2 of the method (8) for any ε ∈ (0,1), it is sufficient to construct the stepsize sequence \(\{h_{n}\}_{n\in \mathbb {N}}\) in the same way as in [14], demonstrating the applicability of this strategy to a significantly broader class of SDEs. We review the construction now.

Definition 1

Suppose that each member of \(\{t_{n}:={\sum }_{i=1}^{n}h_{i-1}\}_{n\in \mathbb {N}\setminus \{0\}}\), with t0 = 0, is an \(\mathcal {F}_{t}\)-stopping time: i.e., \(\{t_{n}\leq t\}\in \mathcal {F}_{t}\) for all t ≥ 0, where \((\mathcal {F}_{t})_{t\geq 0}\) is the natural filtration of W. The filtration \((\mathcal {F}_{t})_{t\geq 0}\) can be extended (see [21]) to any \(\mathcal {F}_{t}\)-stopping time τ by

In particular, this allows us to condition on \(\mathcal {F}_{t_{n}}\) at any point on the random time-set \(\{t_{n}\}_{n\in \mathbb {N}}\).

Remark 1

Throughout the article, the index of a random sequence reflects its \(\mathcal {F}_{t_{n}}\)-measurability. For example, consider the timestep sequence \(\{h_{n}\}_{n\in \mathbb {N}}\): each hn is \(\mathcal {F}_{t_{n}}\)-measurable. The only exception to this is \(\{t_{n}\}_{n\in \mathbb {N}}\), since each tn is \(\mathcal {F}_{t_{n-1}}\)-measurable.

Assumption 4

Suppose that each hn is \(\mathcal {F}_{t_{n}}\)-measurable, and that there are constant minimum and maximum stepsizes \(h_{\min \limits }\) and \(h_{\max \limits }\) imposed in a fixed ratio ρ so that

Definition 2

For each t ∈ [0,T], define the random integer N(t) such that

Set N := N(T) and tN := T, so that T is always the last point on the mesh.

We note that N(t) is a.e. the index of the right endpoint of the step that contains t, i.e. \(t\in [t_{N^{(t)}-1},t_{N^{(t)}}]\) a.s. Both \(t_{N^{(t)}}\) and \(t_{N^{(t)}-1}\) are \(\mathcal {F}_{t}\)-stopping times, and N(t) is supported on the finite set \(\{N^{(t)}_{\min \limits },\ldots ,N^{(t)}_{\max \limits }\}\), where

Remark 2

In (8), note that each △Wn+ 1 = W(tn+ 1) − W(tn) is taken over a random step of length hn = hn(Yn) and which depends on {W(s),s ∈ [0,tn]} through Yn. Therefore, △Wn+ 1 is a function of values of the Wiener process up to time tn, is not independent of \(\mathcal {F}_{t_{n}}\), and there is no reason to expect that \(\triangle W_{n+1}\sim \mathcal {N}(0,h_{n} I_{d\times d})\), where Id×d is the identity matrix. However, since tn+ 1 is a bounded \(\mathcal {F}_{t_{n}}\)-stopping time then, by Doob’s optional sampling theorem (see, for example, [27]),

In our main analysis, we use the following lemma on the boundedness of the moments of solutions of (1) conditioned at points on our adaptive mesh. The proof is a modification of that of [21, Theorem 2.4.1].

Lemma 2

Let (X(t))t∈[0,T] be a unique solution of (1), and suppose that (5) holds for some p ≥ 2. Then, there exist constants ν1 and ν2 such that

We are now in a position to define the scheme which is the subject of this article, and which combines a semi-implicit Euler scheme over an adaptive mesh, generated according to an admissible timestepping strategy, with a backstop method.

Definition 3

Define the map \(\theta :\mathbb {R}^{d}\times \mathbb {R}^{d}\times \mathbb {R}^{m}\times \mathbb {R}^{+}\to \mathbb {R}^{d}\) such that

so that, if \(\{Y_{n}\}_{n\in \mathbb {N}}\) is defined by the semi-implicit scheme (8), then

Then, we define a semi-implicit Euler scheme with backstop as the sequence \(\{\widetilde Y_{n}\}_{n\in \mathbb {N}}\) by

where \(\{h_{n}\}_{n\in \mathbb {N}}\) satisfies the conditions of Assumption 4. The map \(\varphi :\mathbb {R}^{d}\times \mathbb {R}^{d}\times \mathbb {R}^{m}\times \mathbb {R}^{+}\to \mathbb {R}^{d}\) characterizes the form a user-selected backstop method. We require that

for some positive constants C1 and C2, and ε ∈ (0,1) the fixed parameter from Assumption 3.

Remark 3

Note that φ will satisfy (13) if the backstop method is subject to a mean-square consistency requirement that bounds the propagation of discretization error over a single step. In practice, rather than checking (13) directly, we use as our backstop a method that is known to be strongly convergent of order 1/2 in this setting: for the numerical experiments in Section 5, we use the balanced method introduced by Tretyakov and Zhang [30], which satisfies a similar local accuracy bound (see [30, Eq. (2.9)]) and corresponds to the map

Examples of \(h_{\min \limits }\), \(h_{\max \limits }\), and ρ for the scheme (12) with backstop characterized by (14) are given in Section 5.

Finally, we define the admissible timestepping strategy (see also [14]).

Definition 4

Let \(\{\widetilde Y_{n}\}_{n\in \mathbb {N}}\) be a solution of (12) where f and g satisfy the conditions of Assumptions 1, 2, and 3. We say that \(\{h_{n}\}_{n\in \mathbb {N}}\) is an admissible timestepping strategy for (12) if Assumption 4 is satisfied and there exist real non-negative constants \(R_{1}, R_{2}<\infty \), independent of \(h_{\max \limits }\), such that whenever \(h_{\min \limits }<h_{n}\leq h_{\max \limits }\),

For example (see [14]), the timestepping rule given by

is admissible for (12). Choosing the larger of \(1/\|f(\widetilde Y_{n})\|\) and \(\|\widetilde Y_{n}\|/\|f(\widetilde Y_{n})\|\) helps maximize the stepsize while maintaining its admissibility. The backstop is needed since it may not always be possible to control \(\widetilde Y_{n}\) via timestep so that (15) holds. See Section 7 for a more detailed comment.

4 Strong convergence of the adaptive scheme

4.1 Preliminary lemmas

These lemmas provide a regularity bound in time and an estimate on remainder terms from Taylor expansions of f and g. Proofs are given in Section 6.

Lemma 3

Let (X(t))t∈[0,T] be a solution of (1) with coefficients f and g satisfying the conditions of Assumptions 1, 2, and 3, and suppose that the sequence of random times \(\{t_{n}\}_{n\in \mathbb {N}}\) is as in Definition 1 and satisfies the conditions of Assumption 4. Then for all \(n\in \mathbb {N}\) and p ≥ 2, there exists an a.s. finite and \(\mathcal {F}_{t_{n}}\)-measurable random variable \(\bar {L}_{p,n}\) so that

Now consider the Taylor expansions of f and gi, i = 1,…,m:

where the remainders Rf and \(R_{g_{i}}\) are given in integral form by

and z can be taken to read either f or gi. Furthermore, let

We now give \(\mathcal {F}_{t_{n}}\)-conditional mean-square bounds on the integrals of these remainder terms.

Lemma 4

Let (X(t))t∈[0,T] be a solution of (1) with coefficients f and g satisfying the conditions of Assumptions 1, 2, and 3. Let \(\{t_{n}\}_{n\in \mathbb {N}}\) be as in Definition 1, satisfying the conditions of Assumption 4.

Then for any ε ∈ (0,1) there is an a.s. finite and \(\mathcal {F}_{t_{n}}\)-measurable random variable \(\bar {{{{\varLambda }}}}_{\varepsilon ,n}>0\), and a constant \({{{\varLambda }}}_{\varepsilon }<\infty \), the latter independent of \(\{h_{n}\}_{n\in \mathbb {N}}\) and \(h_{\max \limits }\), such that

Remark 4

The notational convention used in Part (iv) of Lemma 4 is extended throughout the paper to \(\mathcal {F}_{t_{n}}\)-adapted sequences for which there exists a finite uniform upper bound on the expectation of each term.

4.2 Main results

In this section, we provide a lemma giving a bound on the one-step error for the combined scheme, followed by our main theorem which uses a Gronwall inequality to produce an order of strong convergence.

Lemma 5

Let (X(t))t∈[0,T] be a solution of (1) with drift and diffusion coefficients f and g satisfying the conditions of Assumptions 1, 2, and 3. Let \(\{\widetilde Y_{n}\}_{n\in \mathbb {N}}\) be a solution of (12) with initial value \(\widetilde Y_{0}=X_{0}\) and admissible timestepping strategy \(\{h_{n}\}_{n\in \mathbb {N}}\) satisfying the conditions of Assumption 4 and Definition 4.

Define the error sequence \(\{E_{n}\}_{n\in \mathbb {N}}\) by \(E_{n}:=\widetilde Y_{n}-X(t_{n})\). Then, there exist a.s. finite and \(\mathcal {F}_{t_{n}}\)-measurable random variables \(\bar {{{\varLambda }}}_{\varepsilon ,n}, \bar {{{\varGamma }}}_{2,n}, \bar {{{\varGamma }}}_{3,n}^{(m)}\) with finite expectations independent of n, denoted Λε, Γ2, and \({{{\varGamma }}}_{3}^{(m)}\) respectively, such that

where ε ∈ (0,1) is the fixed parameter from Assumption 3 and constants Q,Γ1 are given by

where c,R2,C2 satisfy (2) in Assumption 1, (13) in Definition 3, and (15) in Definition 4 respectively.

Proof

For hn selected at time tn, for some \(n\in \mathbb {N}\), by an admissible timestepping strategy, there are two possible cases (denoted (I) and (II)), first, \(h_{\min \limits }<h_{n}\leq h_{\max \limits }\) and second, \(h_{n}= h_{\min \limits }\). We consider each in turn.

(I) In this case, we rely on the bound (15) provided by the admissibility of the timestepping scheme. When \(h_{\min \limits }<h_{n}\leq h_{\max \limits }\), \(\widetilde Y_{n+1}\) is derived from \(\widetilde Y_{n}\) using (8), and we have

Expand f and g as Taylor series around X(tn) over the interval of integration, and write

Therefore,

which is

Let Q be as defined in (18). Then, using that \(h_{\max \limits }\leq 1\) and the inequality 2〈x,y〉≤∥x∥2 + ∥y∥2, we find

We develop bounds on \(\mathbb {E}\left [ {A_{n+1}|\mathcal {F}_{t_{n}}} \right ]\), \(\mathbb {E}\left [ {B_{n}|\mathcal {F}_{t_{n}}} \right ]\), \(\mathbb {E}\left [ {C_{n+1}|\mathcal {F}_{t_{n}}} \right ]\) in turn. The terms after Cn+ 1 on the RHS of the inequality have zero conditional expectation, by (11) in Remark 2, and the fact that En and each \(\tilde R_{g_{i}}\) are conditionally independent with respect to \(\mathcal {F}_{t_{n}}\), with the latter an Itô integral with zero conditional expectation.

By (2) in Assumption 1,

By (15) in Definition 4, and (4) in Assumption 2, we have

Next, by Jensen’s inequality and part (i) of Lemma 4,

We also have from part (iii) of Lemma 4 \(\mathbb {E}\left [ {\langle E_{n},\tilde {R}^{A}_{n+1}\rangle |\mathcal {F}_{t_{n}}} \right ]\leq \frac {1}{2}h_{n}\|E_{n}\|^{2}+\frac {1}{2}\bar {{{\varLambda }}}_{\varepsilon ,n} h_{n}^{2-\varepsilon }\) a.s. Applying parts (i)–(iii) of Lemma 4 then gives

Therefore, we have

(II) Suppose that \(h_{n}= h_{\min \limits }\). Here \(\widetilde Y_{n+1}\) is generated from \(\widetilde Y_{n}\) via an application of the backstop method over a single step of length \(h_{\min \limits }\). This corresponds to a single application of the map φ and therefore the relation (13) is satisfied a.s.

To combine the two cases (I) and (II), define the a.s. finite and \(\mathcal {F}_{t_{n}}\)-measurable random variables

where C1 and C2 are as given in (13). Since \(Q,\bar {{{\varLambda }}}_{\varepsilon ,n}\geq 0\) (the latter in the a.s. sense), we have for any hn selected by an admissible adaptive timestepping strategy,

Note that since (a ∨ b) ≤ a + b when a,b ≥ 0, by (19) we may apply (6) to (20) to show that, under Assumption 3,

where \(M_{2+2\gamma _{1},T}\) and \(M_{2(\gamma _{0}+\gamma _{1})+2,T}\) are finite constants satisfying (6) for p = 2,2γ0 + 2 respectively. It can be similarly shown under Assumption 3 that there exist finite constants \({{{\varGamma }}}_{3}^{(m)},{{{\varLambda }}}_{\varepsilon }\) such that \(\mathbb {E}\left [ {\bar {{{\varGamma }}}_{3,n}^{(m)}} \right ]\leq {{{\varGamma }}}_{3}^{(m)}\) and \(\mathbb {E}\left [ {\bar {{{\varLambda }}}_{\varepsilon ,n}} \right ]\leq {{{\varLambda }}}_{\varepsilon }\).□

The bound (22) characterizes the propagation of error in mean-square over a single step of the combined semi-implicit Euler scheme with backstop (12), and holds regardless of whether or not the timestepping strategy requires an application of the semi-implicit scheme or the backstop scheme.

Assumption 5

Finally, \(h_{\max \limits }\) is chosen so that there exists a constant δ ∈ [0,1] that does not depend on \(h_{\max \limits }\), such that

where Q is defined in (18) and Γ1 is defined in (19). It follows that there exists \(\gamma <\infty \) such that

Although these conditions are required in the proof of Theorem 6, we have observed no practical implications in our numerical experiments.

Definition 5

Define an a.s. continuous process \((\mathcal {E}_c^2(t))_{t\in [0,T]}\) pathwise as the a.e. linear interpolant of ∥En∥2 and ∥En+ 1∥2 on each interval [tn,tn+ 1] for n = 0,…,N − 1:

The accumulation of error in mean-square for (12), and hence the order of strong convergence, can now be estimated.

Theorem 6

Let (X(t))t∈[0,T] be a solution of (1) with drift and diffusion coefficients f and g satisfying the conditions of Assumptions 1, 2, and 3. Let \(\{\widetilde Y_{n}\}_{n\in \mathbb {N}}\) be a solution of (12) in Definition 3, with initial value \(\widetilde Y_{0}=X_{0}\) and admissible timestepping strategy \(\{h_{n}\}_{n\in \mathbb {N}}\) satisfying the conditions of Definition 4 and Assumption 5. Then, if ε ∈ (0,1) is the fixed parameter from Assumption 3, there exists Cε,m,ρ,T > 0, independent of \(h_{\max \limits }\) such that

where \(\mathcal {E}_c^2(t)\) is as defined in Definition 5, and in particular

where N is as given in Definition 2.

Remark 5

By setting A = 0 in (1), we obtain strong convergence of identical order of a backstopped fully explicit Euler-Maruyama adaptive method.

Proof (Theorem 6)

Fix t ∈ [0,T], and let N(t) be as in Definition 2. Multiply both sides of (22) by the indicator variable \(\mathcal {I}_{\{{N^{(t)}}\geq n+1\}}\) to get

Since \(t_{N^{(t)}}\) is a \(\mathcal {F}_{t}\)-stopping time, the event \(\{N^{(t)}\leq n\}\in \mathcal {F}_{t_{n}}\) and therefore the indicator variable \(\mathcal {I}_{\{{N^{(t)}}\geq n+1\}}\) is \(\mathcal {F}_{t_{n}}\)-measurable. Thus, we have

Since {N(t) ≥ n + 1}⊂{N(t) ≥ n}, we have \(\mathcal {I}_{\{N^{(t)}\geq n+1\}}(\omega )\leq \mathcal {I}_{\{{N^{(t)}}\geq n\}}(\omega )\) for all ω ∈Ω. Take expectations on both sides, and since \({h_{n}}\leq h_{\max \limits }\) we have

Now, sum both sides of (26) over \(n=0,\ldots ,N^{(t)}_{\max \limits }-1\), where \(N^{(t)}_{\max \limits }\) is the deterministic index in (10), to get (using the bound t ≤ T)

Bringing the sum inside the expectation on the RHS of (27) yields

By a change in index in the second sum on the RHS of (28) (and since ∥E0∥2 = 0 a.e.), we can write

Subtracting from both sides the first term on the RHS of (29), and dividing through by \(1-h_{\max \limits }Q>\delta \) (which holds by (23) in Assumption 4), we get

where \({{{{\varGamma }}}_{\varepsilon ,m}}:=\frac {\rho T}{\delta }\left ({{{\varGamma }}}_{2}+{{{\varGamma }}}_{3}^{(m)}+126{{{\varLambda }}}_{\varepsilon }\right )\), using \(h_{\max \limits }^{\varepsilon }<1\) by Assumption 4.

It follows from (25) in Definition 5 that a.s.,

and therefore by integration

The a.s. continuity of \((\mathcal {E}_c^2(t))_{s\in [0,T]}\) implies the continuity and therefore boundedness over [0,T] of \(\mathbb {E}\left [ {\mathcal {E}_c^2(t)} \right ]\). Combining (30) and (31), and using that \(h_{\max \limits }=\rho h_{\min \limits }\), we get

Similarly

By (25) for all \(s\in [t_{N^{(t)}-1},t_{N^{(t)}}]\) a.e.,

Sum both sides of (32) and (33) and then use (34) to get

We can write, by (24),

Therefore, since \(t\in [t_{N^{(t)}-1},t_{N^{(t)}}]\) and by the lower bound in (34),

where γ is given by (24) in Assumption 5.

Since (35) holds for all t ∈ [0,T], an application of the integral form of Gronwall’s inequality (e.g., [21, Theorem 8.1]) gives, for each t ∈ [0,T],

The statement of the Theorem follows with

□

5 A comparative numerical review of some available schemes

Given the semi-linear SDE

with solution \(u:[0,T]\times {{{\varOmega }}} \to \mathbb {R}^{d}\), we compare our semi-implicit adaptive numerical method to a number of different fixed-step schemes (with time step h) that we outline below. Some numerical examples for an explicit adaptive scheme are given in [14]. A majority of recent developments concentrate on a perturbation of the flow (or solution) of order h1/2 or higher; however, the first method we present is the classic implicit approach. We do not consider an exhaustive list of taming-type schemes and there are other variants available; see for example [9, 22, 23, 26, 29, 31]. Our examples illustrate some of the drawbacks of explicit schemes, for example where linear stability is an issue.

-

1.

Drift implicit scheme [24] This is given for (36) by

$$Y_{n+1}=Y_{n} + h (A Y_{n+1}+f(Y_{n+1})) + g(Y_{n}) \triangle W_{n+1}.$$Although strong convergence has been proved (see [24]), at each step a nonlinear system of the form

$$ 0=Y_{n+1}- h (A Y_{n+1}+f(Y_{n+1})) + b $$(37)needs to be solved for Yn+ 1 for some vector b. Even for the deterministic case there is no guarantee the nonlinear solver will converge to the correct root (see [28, Chapter 4]). We observe in our numerical experiments that both a standard Newton method and the matlab nonlinear solver fsolve (or fzero in one-dimension) may fail to converge. In the event of a step where this occurs we use as a backstop an alternative explicit method, in this article taken to be the balanced method (see below). The drift implicit scheme with this backstop method is denoted by Drift Implicit in the figures of this Section. Finally, note that for several examples in this section the implicit solver may be made more efficient by exploiting a known closed-form solution for the nonlinear system (37). Such solutions are not in general available and so we do not make use of them in our comparative analysis here.

-

2.

Tamed [25] A tamed version which may be used when the solutions of (36) have a limited number of finite moments [31]

$$Y_{n+1}=Y_{n}+ \frac{h AY_{n} + f(Y_{n}) + {\sum}_{j=1}^{m} g_{j}(Y_{n})\triangle W} {1+ h^{\beta} \|AY_{n}+f(Y_{n})\| + {\sum}_{j=1}^{m} \|g_{j}(Y_{n})\|h^{\beta}}.$$Strong convergence of order 1/2 is achieved by setting β = 1/2. We denote this method Tamed.

-

3.

Balanced method [30] is given for (36) by

$$Y_{n+1}= Y_{n} + \frac{h (AY_{n} + f(Y_{n})) + {\sum}_{r=1}^{m} g_{r}(Y_{n}) \triangle W_{r,n+1}}{1+h\|AY_{n}+f(Y_{n})\|+{\sum}_{r=1}^{m} \|g_{r}(Y_{n})\triangle W_{r,n+1}\|}.$$This was proved to be strongly convergent with order 1/2 (including for additive noise) and is denoted in the figures of this Section as BM.

-

4.

Projected EM [2] uses the standard EM method when Yn is inside a ball of radius inversely proportional to the step step size. However, outside of the ball, the numerical solution is projected onto the ball. We have for \(Z:=\min \limits (1,Y_{n}/\sqrt {h}\|Y_{n}\|)\)

$$ Y_{n+1} = Z+h(AZ+f(Z) + \sum\limits_{r=1}^{m} g_{r}(Z){\Delta} W_{r,n+1}. $$This is denoted below as Projected EM.

We provide a comparative illustration of the combined effect of semi-implicitness and adaptivity using five examples ranging from geometric Brownian motion to a system of SDEs arising from the spatial discretization of an SPDE. Recall that our use of a semi-implicit method controls instabilities from a linear operator and the adaptive timestepping controls the discretization of the nonlinear structure. Stiffness is manifested in the structure of each of these equations in different ways: ranging from the linearity only (in geometric Brownian motion) to both in the linear operator and nonlinearities for a discretization of an SPDE.

To examine strong convergence in \(h_{\max \limits }\) for the SDE examples below, we solve with M = 1000 samples to estimate the root mean square error (RMSE) at a final time T = Nh = 1, \(\sqrt {\mathbb {E}\left [ {\|X(T)-X_{N}\|^{2}} \right ]}\) and we estimate the standard deviation from 20 groups of 50 samples included on the error plots. Reference solutions are computed with 106 uniform steps on [0,T]. For efficiency, we compare the RMSE against the average computing time over the 1000 samples (denoted cputime). Unless otherwise stated, we take ρ = 10 throughout.

5.1 Geometric Brownian motion

The classic example to illustrate linear mean square stability is geometric Brownian motion

If r + σ2/2 < 0 it is straightforward to see that \(\mathbb {E}\left [ {(u(t)^{2}} \right ]\to 0\) as \(t\to \infty \) and that the (fixed step) explicit Euler method is only stable if 0 < h < − 2(r + σ2/2)/r2. The drift and diffusion are both linear functions, so there is no need for either taming or adaptivity to control growth from a nonlinear term; indeed, in this example, the semi-implicit adaptive and fully drift implicit schemes co-coincide if A = r and f(u) = 0.

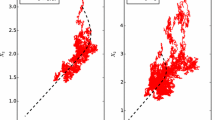

However, it is instructive to compare the explicit schemes to the implicit schemes (Adaptive IEM and Drift Implicit). We take r = − 8 and σ = 3 so that the explicit Euler method is unstable for h = 0.25 and h = 0.5. In Fig. 1, we plot the error squared, |u(tn) − Yn|2, of two sample paths one with \(h_{\max \limits }=0.25\) (a) and \(h_{\max \limits }=0.5\) (b). Although the tamed and projected schemes control growth from the linear instability, we observe that this control can come at a price of bounded oscillations.

Error |u(tn) − Yn|2 plotted against tn ∈ [0,10] in two sample paths for geometric Brownian motion (38) with a \(h_{\max \limits }=0.25\) and b \(h_{\max \limits }=0.5\)

5.2 1D stochastic Ginzburg-Landau

The 1D stochastic Ginzburg-Landau SDE is a classic example with a cubic nonlinearity in the drift and linear diffusion

We take here parameter values as in [22, Example 4.7], a = 0.1, b = 1 and c = 0.2, x(0) = 2, and solve to T = 1. We see in Fig. 2 that all the methods demonstrate convergence and that Adaptive IEM and Projected EM are similar in convergence and efficiency. Neither the adaptive nor drift-implicit schemes used the backstop method.

Convergence and efficiency of methods applied to the stochastic Ginzburg-Landau (39). We compare RMSE at T = 1 against \(h_{\max \limits }\) in (a) and efficiency (RMSE vs cputime) in (b)

5.3 Stochastic volatility system

We consider an extension of the 3/2-volatility model to two dimensions as in [26]

with λ = 2.5, μ = 1, X(0) = a[2,2]T, a = 1,10,100, and

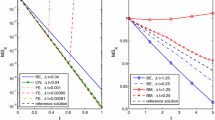

We see in Fig. 3 that all the methods demonstrate convergence but that BM and Tamed have a larger error constant and the adaptive method Adaptive IEM is the most efficient. The initial data taken was X(0) = [2,2]T and the backstop method was not used for either drift implicit or adaptive methods (as for (39)). In Fig. 4, we examine the time steps hn for a single noise path with the same value of \(h_{\max \limits }=0.01\) but with ρ = 10 and ρ = 100 corresponding to \(h_{\min \limits }=10^{-3}\) and \(h_{\min \limits }=10^{-4}\). In (a), we have initial data X(0) = [20,20]T and in (b) X(0) = [200,200]T. In (a), we observe that with ρ = 100 the backstop method is not used at all (but it is required for ρ = 10) whereas in (b), to deal with the larger initial data, the backstop is used in both cases. As time progresses, the timestep taken increases until it reaches \(h_{\max \limits }\).

Convergence of methods applied to the stochastic volatility system (40). We compare RMSE at T = 1 against \(h_{\max \limits }\) in (a) and efficiency (RMSE vs cputime) in (b)

These observations suggest that practitioners who apply a standard explicit or semi-implicit Euler-Maruyama scheme over a uniform mesh with a step size sufficiently small (e.g., close to \(h_{\min \limits }\) with large ρ) may rarely encounter the spurious coefficient responses that underlie the lack of strong convergence for the scheme.

5.4 Finite difference approximation of an SPDE

Consider the SPDE

with t ≥ 0, x ∈ [0,1] and zero Dirichlet boundary conditions. We take initial data \(u_{0}(x)=2\sin \limits (\pi x)\), σ = 0.2, η = 11, λ = 2 and trace class noise \(\widehat W\)

for some \(m\in \mathbb {N}\setminus \{0\}\) and where Wj(t) are mutually independent standard Brownian motions.

We introduce a grid of d + 2 uniformly spaced points xk = kΔx on [0,1] for k = 1,…,d + 2. Then, the finite difference approximation in space leads to a system of d SDEs:

where we denote u := (u1,u2,…,ud)T, uk(t) ≈ u(xk,t) and the noise process is \({W}:=(\widehat {W}(x_{1},t),\widehat {W}(x_{2},t),\ldots ,\widehat {W}(x_{d},t))^{T}\). The tri-diagonal matrix A is the standard finite difference approximation to the Laplacian with zero Dirichlet boundary conditions given by

For further details on the finite difference approximation of SPDEs, see [20]. To be able to compare different system sizes d, we scaled the Euclidean norm in the standard way (see for example [20]) to approximate the function space norm L2([0,1]). This system of SDEs displays linear stiffness (similar to the geometric Brownian motion) and nonlinear stiffness arising from the drift and diffusion coefficients. The parameter 𝜖 then determines the degree of linear stiffness. To examine convergence to the SDE system (42), we take 𝜖 = 0.1, T = 1 for d = m = 10 (Fig. 5) and d = m = 100 (Fig. 6).

Convergence and efficiency for the methods applied to the finite difference approximation of the SPDE given by (42) with d = 10 a RMSE vs \(h_{\max \limits }\) (with reference line of slope 0.5) and b the efficiency (RMSE vs cputime)

Convergence and efficiency for the methods applied to the finite difference approximation of the SPDE given by (42) with d = 100 a RMSE vs \(h_{\max \limits }\) (with reference line of slope 0.5) and b the efficiency (RMSE vs cputime)

For the smaller system size (d = 10) in Fig. 5(a), we see all methods converging for \(h_{\max \limits }\) sufficiently small, although Projected EM initially diverges. We see in (b) that Adaptive IEM is more efficient than Drift Implicit and is similar to the other explicit schemes. When m = 100 Projected EM is no longer seen to converge on this range of h and so is not plotted in Fig. 6. In (a), we now see that the Drift Implicit is more accurate for a given \(h_{\max \limits }\) and from (b) that it is comparable in efficiency with Adaptive IEM.

6 Proofs of technical results

In this section, we frequently use the inequality ∥a + b∥p ≤ 2p(∥a∥p + ∥b∥p), where \(a,b\in \mathbb {R}^{d}\), and \(p\in \mathbb {R}^{+}\), which follows from ∥a + b∥p ≤ (∥a∥ + ∥b∥)p ≤ (2(∥a∥∨∥b∥))p = 2p(∥a∥p ∨∥b∥p) ≤ 2p(∥a∥p + ∥b∥p).

Proof (Lemma 3)

Fix \(n\in \mathbb {N}\) and suppose that tn < s ≤ tn+ 1. Then

By the triangle inequality and [21, Theorem 1.7.1] (with conditioning on \(\mathcal {F}_{t_{n}}\)),

Next, we apply (4), Lemma 2, and the fact that \(\|A\|_{F}^{p}<\infty \), to get a.s.

Therefore, since |s − tn|≤ 1, we can define an a.s. finite and \(\mathcal {F}_{t_{n}}\)-measurable random variable

so that (16) holds. □

Proof (Lemma 4)

Part (i): Set γ := γ0 ∨ γ1, where γ0,γ1 are as in Assumptions 2 and 3, and for q = 1,2,… define

which satisfies the relation

and, by Lemma 2, the a.s. finite bound,

the right-hand side of which we denote \((\bar {{\varUpsilon }}_{q,n})^{1/2}\), where \(\bar {{\varUpsilon }}_{q,n}\) is an a.s. finite and \(\mathcal {F}_{t_{n}}\)-measurable random variable. Let \(q\in \mathbb {N}\setminus \{0\}\) satisfy \(q>1-\log _{2}\varepsilon \). Then by (3) and q successive applications of the Cauchy-Schwarz inequality, and (16) with p = 2 in the statement of Lemma 3, we get

where \(\bar {{{\varLambda }}}_{\varepsilon ,n}:=2{c_{1}^{2}}\bar {{\varUpsilon }}_{q,n}^{1/(2^{q})}\bar L_{2,n}^{{\sum }_{i=2}^{q}1/(2^{i-1})}\) depends on ε through q.

Part (ii): By the conditional form of the Itô isometry, for i = 1,…,m,

and the proof follows as in Part (i), with a reduction of one in the order of hn.

Part (iii) holds as a special case of Part (i). Part (iv) follows by an application of the Cauchy-Schwarz inequality, followed by Jensen’s inequality for the functions \((\cdot )^{1/(2^{q-1})}\) and \((\cdot )^{{\sum }_{i=2}^{q}1/(2^{i-1})}\) (both of which are concave over \(\mathbb {R}^{+}\), by the second derivative test), to get

which is finite under the conditions of Assumption 3: p satisfies (7), and therefore by (6) the finiteness of \(\mathbb {E}\left [ {\bar {{\varUpsilon }}_{q,n}} \right ]\) is ensured by (44) and that of \(\mathbb {E}\left [ {\bar {L^{2}_{n}}} \right ]\) is ensured by (43). □

Remark 6

By making q successive applications of the Cauchy-Schwarz inequality in the proof of Lemma 4, we separate the expectation of dependent random factors in Rf and Rg in such a way that the highest possible order of hn is achieved in the estimate, given the available finite moment bounds. This is necessary to ensure a polynomial order of strong convergence in the statement of Theorem 6. If the diffusion coefficient g is globally Lipschitz continuous then the resulting uniform bound on each ∥Dgi(x)∥F, along with stronger moment bounds of the form (6), sidesteps that requirement. In this case, the statement of Lemma 4, and hence the statement of Theorem 6, would hold with ε = 0 (and order constant independent of q, and therefore ε), giving an order of strong convergence of 1/2 for the semi-implicit method with backstop (12), using an admissible timestepping strategy. If we then set A = 0 in (1), our method becomes explicit and we recover the main result of [14].

7 Conclusion

The discretization of SDEs with non-Lipschitz drift and diffusion coefficients is a challenging numerical problem. We have proved strong convergence for both adaptive semi-implicit and explicit Euler schemes, and presented numerical results that indicate the semi-implicit variant is well suited as a general purpose solver, being more robust than several competing explicit fixed-step methods and more efficient than the drift implicit method.

Both the drift implicit and the adaptive schemes make use of a backstop method which is triggered when the adaptive timestepping strategy attempts to select a timestep at the minimum stepsize \(h_{\min \limits }\). Our numerical experiments indicate that, for an appropriate choice of ρ, \(h_{\min \limits }\) may be achieved only rarely (if at all). It may be possible to characterize the probability of this occurrence and, if it can be bounded appropriately, a strong convergence result may be possible for a numerical method of the form (8) that does not rely on a backstop method (provided T is reached in a finite number of steps). A step in this direction may be found in [15].

SDEs where the drift coefficient is both positive and non-globally Lipschitz continuous are not covered by the analysis in this article, though adaptive meshes have been used to reproduce positivity of solutions with high probability and a.s. stability and instability of equilibria in [16] (informed by the approach of Liu & Mao [18]). We are unaware of any strong convergence results for such equations.

Finally, since our analysis relies upon the boundedness of ∥A∥F, and since the error constant in the strong convergence estimate increases without bound with the number of independent noise terms m, the results of the article do not automatically extend to SPDEs. This setting is now considered in [19].

References

Appleby, J., Kelly, C., Mao, X., Rodkina, A.: On the local dynamics of polynomial difference equations with fading stochastic perturbations. Dyn. Contin. Discrete Impuls. Syst. Ser. A Math. Anal. 17, 401–430 (2010)

Beyn, W.-J., Isaak, E., Kruse, R.: Stochastic c-stability and b-consistency of explicit and implicit euler-type schemes. J. Sci. Comput. 67(3), 955–987 (2016). 10.1007/s10915-015-0114-4

Buckwar, E., Kelly, C.: Non-normal drift structures and linear stability analysis of numerical methods for systems of stochastic differential equations. Comput. Math. Appl. 64, 2282–2293 (2012)

Burrage, P.M., Burrage, K.: A variable stepsize implementation for stochastic differential equations. SIAM J. Sci. Comput. 24(3), 848–864 (2002)

Gains, J.G., Lyons, T.J.: Variable step size control in the numerical solution of stochastic differential equations. SIAM J. Appl. Math. 57, 1455–1484 (1997)

Fang, W., Giles, M.: Adaptive Euler-Maruyama method for SDEs with non-globally Lipschitz drift. Ann. Appl. Probab. 30(2), 526–560 (2020)

Higham, D.J., Mao, X., Stuart, A.M.: Strong convergence of Euler-type methods for nonlinear stochastic differential equations. SIAM J. Numer. Anal. 40, 1041–1063 (2002)

Higham, D.J., Trefethen, L.N.: Stiffness of ODEs. BIT Numer. Math. 33(2), 285–303 (1993)

Hutzenthaler, M., Jentzen, A.: Numerical approximations of stochastic differential equations with non-globally Lipschitz continuous coefficients. Mem. Amer. Math. Soc. 236, v + 99 (2015)

Hutzenthaler, M., Jentzen, A.: On a perturbation theory and on strong convergence rates for stochastic ordinary and partial differential equations with non-globally monotone coefficients. arXiv:1401.0295

Hutzenthaler, M., Jentzen, A., Kloeden, P.E.: Strong and weak divergence in finite time of Euler’s method for stochastic differential equations with non-globally Lipschitz continuous coefficients. Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 467, 1563–1576 (2011)

Hutzenthaler, M., Jentzen, A., Kloeden, P.E.: Strong convergence of an explicit numerical method for SDEs with nonglobally Lipschitz continuous coefficients. Ann. Appl. Probab. 22, 1611–1641 (2012)

Ilie, S., Jackson, K.R., Enright, W.H.: Adaptive time-stepping for the strong numerical solution of stochastic differential equations. Numer. Algor. 68, 791–812 (2015)

Kelly, C., Lord, G.J.: Adaptive timestepping strategies for nonlinear stochastic systems. IMA J. Numer. Anal. 38(3), 1523–1549 (2018)

Kelly, C., Lord, G.J., Sun, F.: Strong convergence of an adaptive time-stepping Milstein method for SDEs with one-sided Lipschitz drift. arXiv:1909.00099

Kelly, C., Rodkina, A., Rapoo, A.: Adaptive timestepping for pathwise stability and positivity of strongly discretised nonlinear stochastic differential equations. J. Comput. Appl. Math. 334, 39–57 (2018)

Lewis, A.L.: Option Valuation under Stochastic Volatility: with Mathematica Code. Finance Pres (2000)

Liu, W., Mao, X.: Almost sure stability of the Euler-Maruyama method with random variable stepsize for stochastic differential equations. Numer. Algor. 74(2), 573–592 (2017)

Lord, G.J., Campbell, S.: Adaptive time-stepping for Stochastic Partial Differential Equations with non-Lipschitz drift. arXiv:1812.09036

Lord, G.J., Powell, C.E., Shardlow, T.: An introduction to computational stochastic PDEs, Cambridge Texts in Applied Mathematics. Cambridge University Press, New York (2014)

Mao, X.: Stochastic Differential Equations and Applications. 2, Horwood (2008)

Mao, X.: The truncated Euler-Maruyama method for stochastic differential equations. J. Comput. Appl. Math. 290, 370–384 (2015)

Mao, X.: Convergence rates of the truncated Euler-Maruyama method for stochastic differential equations. J. Comput. Appl. Math. 296, 362–375 (2016)

Mao, X., Szpruch, L.: Strong convergence and stability of implicit numerical methods for stochastic differential equations with non-globally Lipschitz continuous coefficients. J. Comput. Appl. Math. 238, 14–28 (2013)

Sabanis, S.: A note on tamed Euler approximations, Electron. Commun. Probab. 18, 10 (2013)

Sabanis, S.: Euler approximations with varying coefficients: the case of superlinearly growing diffusion coefficients, . Ann. Appl. Probab. 26, 2083–2105 (2016)

Shiryaev, A.N.: Probability, 2nd edn. Springer, Berlin (1996)

Stuart, A.M., Humphries, A.R.: Dynamical Systems and Numerical Analysis CUP (1996)

Szpruch, L., Zhang, X.: V-integrability, asymptotic stability and comparison theorem of explicit numerical schemes for SDEs. Math. Comput. To appear (2018)

Tretyakov, M.V., Zhang, Z.: A fundamental mean-square convergence theorem for SDEs with locally Lipschitz coefficients and its applications. SIAM J. Numer. Anal. 51, 3135–3162 (2013)

Zhang, Z., Ma, H.: Order-preserving strong schemes for SDEs with locally Lipschitz coefficients. Appl. Numer. Math. 112, 1–16 (2017)

Acknowledgements

The authors are grateful to Stuart Campbell of Heriot-Watt University, and Alexandra Rodkina of the University of the West Indies at Mona, for useful discussions in preparing this work. We also wish to acknowledge the careful reading and detailed feedback provided by anonymous referees, which significantly contributed to the present form of the manuscript.

Funding

Open Access funding provided by the IReL Consortium. Gabriel Lord was partially funded by EPSRC grant EP/K034154/1.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kelly, C., Lord, G.J. Adaptive Euler methods for stochastic systems with non-globally Lipschitz coefficients. Numer Algor 89, 721–747 (2022). https://doi.org/10.1007/s11075-021-01131-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-021-01131-8

Keywords

- Stochastic differential equations

- Adaptive timestepping

- Semi-implicit Euler method

- Non-globally Lipschitz coefficients

- Strong convergence