Abstract

Key message

We found high-accuracy classification (Fmeasure = 95%, in cross-validation) of Araucaria angustifolia (Bertol.) Kuntze, an endangered native species, and Hovenia dulcis Thunb., an aggressive invasive alien species, in WorldView-2 multispectral images. When machine learning algorithms were applied, spectral attributes related mainly to the near-infrared band were the most important for the models.

Context

Classifying tree species in tropical rainforests is difficult because of the high diversity of spectral responses among the existing species, and because efficient machine learning techniques and suitable orbital image resolutions must be found.

Aims

To explore the spectral and textural responses of an endangered species (A. angustifolia) and an invasive species (H. dulcis) in WorldView-2 multispectral images, and to test how well machine learning techniques can recognize them.

Methods

We used a WorldView-2 (2016) image with 0.5-m spatial resolution. We manually clipped the canopy areas of the two species in this image using two compositions: a true color composition (R=660 nm, G=545 nm, B=480 nm) and a near-infrared composition (NIR-2=950 nm, G=545 nm, B=480 nm). Next, we extracted spectral and textural descriptors (pyramid histogram of oriented gradients, PHOG, and Edge Filter) and selected the most representative features of the dataset. Finally, we used artificial neural networks (ANN) and random forest (RF) to classify the tree species.

Results

The species were classified with high accuracy (Fmeasure = 95%, in cross-validation), mainly with spectral attributes from the near-infrared composition. RF outperformed ANN in classification rates and also proved more stable and faster to train and test.

Conclusion

The WorldView-2 multispectral sensor showed potential to provide sufficient information for classifying the two species, demonstrating its usefulness in this phytophysiognomy, where hyperspectral sensors are generally used for this type of classification.


1 Introduction

The diversity of tree species is essential for maintaining terrestrial ecosystems; therefore, surveying diversity is increasingly important in conservation and sustainable forest management (Wulder et al. 2004; McDermid et al. 2009). The Atlantic Rainforest is a tropical forest biome covering the southeastern, southern, and coastal regions of Brazil, eastern Paraguay, and part of northern Argentina. It is one of the richest forests on the planet in terms of plant biodiversity. This diversity is related to the high degree of endemism in some regions, with very heterogeneous plant phytophysiognomies. Variations in species richness are linked to factors such as latitude, altitude, precipitation, and soil (Colombo and Joly 2010). Its original vegetation cover has been reduced by anthropogenic disturbances, mainly agricultural expansion, and the remaining cover was estimated at 16% in 2009 (Ribeiro et al. 2009) and 28% in 2018 (Rezende et al. 2018).

Mixed Ombrophylous Forest (MOF) is part of the Atlantic Rainforest and is considered one of the most threatened phytophysiognomies, having lost 75.6% of its original cover (De Gasper et al. 2013). It is also known as araucaria forest because of the presence of A. angustifolia, whose peculiar morphology, with high crowns, gives the forest a unique stratification with two well-defined vertical strata. This species is of paramount importance to the ecosystem in which it occurs in ecological, economic, historical, social, and cultural terms. However, it has been intensively exploited since the nineteenth century for its high economic value for both timber and seed trade (Andersson 2005). Its range has been reduced to a small fraction of its original extent, and the International Union for Conservation of Nature and Natural Resources (IUCN) has classified araucaria as Critically Endangered (Thomas 2013). Recent projections indicate that the species could become completely extinct by 2070 due to overexploitation and climate change if conservation measures are not improved (Wilson et al. 2019).

Another problem faced in MOF is the bioinvasion of tree species, particularly by H. dulcis, a species introduced in South America for ornamental and forestry purposes such as wood production (Zenni and Ziller 2011). This species established itself and spread easily in the region because the environmental conditions are similar to those of its natural habitat. It is dispersed mainly by medium-sized mammals such as armadillos (Carvalho 1994), skunks (Cáceres and Monteiro-Filho 2001), and bats (Zortea 1993). Another determining feature is its intense production of seeds with a high germination rate, especially where litter deposition is reduced and there are signs of anthropic disturbance in the forest (Dechoum et al. 2015). Owing to several favorable factors, H. dulcis has strongly invaded the MOF remnants in the central-southern region of Paraná (Figueiredo et al. 2013; Nauiack 2015).

One of the main challenges for forest conservation is obtaining large-scale information to monitor biodiversity (Sothe et al. 2020), which also requires knowing the composition of tree species and their spatial distribution patterns (Nagendra 2001). Access to this information has become more feasible as the spatial, spectral, temporal, and radiometric resolutions of sensors on board satellites or Remotely Piloted Aircraft (RPAs) have increased (Kwok 2018). Another contributing factor was the emergence of machine learning techniques, which can handle complex computational problems and are increasingly applied to practical ones. Examples include artificial neural networks (ANN), one of the most widespread classification algorithms (Samborska et al. 2014; Simioni et al. 2020), and random forest (RF), widely used to classify remote sensing images (Belgiu and Drăguţ 2016).

Even with all this technological development, classification methods are still being improved. In classifications of boreal forests and European tundra, Immitzer et al. (2012) obtained excellent results using RF with WorldView-2 images, showing the potential to identify 20 forest species with 70% accuracy. However, reproducing a forest inventory with this same methodology in a tropical rainforest, where stratification, density of individuals, and species diversity are high, is a challenge for currently available resources. Ferreira et al. (2016a, b, 2019) classified 8 species with a sensor of the same series (WorldView-3), but in semi-deciduous seasonal forests. Sothe et al. (2020) recognized 16 species, although using hyperspectral sensors on board an RPA with very high spatial resolution.

Given the importance of identifying forest species in satellite images for forest management programs, this study aimed to compare different classification models for two forest species with a marked presence in MOF: H. dulcis, an invasive alien species, and A. angustifolia, a critically endangered native species. To do so, we compared spectral and textural descriptors (pyramid histogram of oriented gradients, PHOG, and Edge Filter), optimized by data mining (genetic algorithm, GA) and submitted to two classifiers: random forest (RF) and artificial neural network (ANN). In this way, we sought to explore the spectral and textural responses of these species in WorldView-2 multispectral images. This is the first detailed comparative analysis of these descriptors applied to these species, especially H. dulcis.

2 Materials and methods

We used a properly preprocessed 2016 WorldView-2 image from which we selected two spectral compositions. We then collected samples in pre-established areas (manual cropping of the crowns) of the two tree species (A. angustifolia and H. dulcis). Next, we compared the selected compositions using spectral and textural attributes (PHOG and Edge Filter). We applied data mining to select the best features (except for Edge Filter). Finally, we applied the two classifiers (ANN and RF), testing each dataset separately in each classification scheme and generating 12 models. Both species were classified together in each model. This process is shown in the flowchart in Fig. 1.

2.1 Study area and species

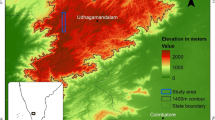

A large “continuum” of native MOF can be found on the Second Plateau of Paraná state, Brazil, in the central-western region of the state. This area is protected by three National Conservation Units (NCUs), which cover about 20 km² and are used for research, sustainable management, and species preservation. The area plays an important role in Paraná’s water resources, as it lies at the divide between the state’s three large basins (Ivaí, Tibagi, and Iguaçu). According to the Water and Land Institute of Paraná (IAT), these three NCUs are areas of relevant ecological interest and are designated as a strategic area for environmental conservation (IAT 2007).

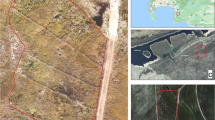

The present study was carried out near these conservation units, in the Imbituvão watershed, which belongs to the Tibagi basin. The study area comprises rural properties included in the “Imbituvão” project, carried out by the Department of Forestry at the Midwestern State University. This region has several MOF fragments with a high degree of H. dulcis infestation and the presence of A. angustifolia. It also has a heterogeneous and fragmented landscape, possibly due to agricultural land use and occupation. Its geological structure consists predominantly of sandstone with texture varying from fine to coarse (Salamuni et al. 1969). The predominant soil types are red-yellow dystrophic ultisol and nitisol with aluminum oxides (Mazza et al. 2005). The area has undulating, rugged topography with an average altitude of 800 m. The climate is temperate subtropical, with mild summers, winters with severe and frequent frosts, and no dry season. Precipitation is close to 200 mm month⁻¹, and the mean annual temperature is 18 °C (Maack 2017). We selected four areas (A1, A2, A3, and A4) of 206,000 m², 167,700 m², 220,000 m², and 336,000 m², respectively, for sample collection (Fig. 2).

Study location and the four sampling areas. On the left is the geographical location of the project area in the Imbituvão sub-basin. On the right are the four rural properties (A1, A2, A3, and A4), with the spatial location of the sample units (crowns of A. angustifolia and H. dulcis). The image is WV2-2016, true color composition (R=660 nm, G=545 nm, B=480 nm)

A. angustifolia is a dominant tree species of the MOF. It is an evergreen gymnosperm conifer with an unmistakable appearance. Its trunk, usually single and cylindrical, can reach up to 50 m in height and 2.5 m in diameter at 1.3 m (diameter at breast height, DBH). Its crown is symmetrical, circular, and conical when young, but in adults it becomes concave, with branches averaging 4 m in length and reaching up to 9 m. Its leaves are dark green, simple, alternate, spirally arranged, and end in a sharp tip, reaching 6 cm in length and 1 cm in width (Ruiz 2017). The species also occurs in isolated forests and fields and is easy to identify visually in very high-resolution images (e.g., from RPAs).

H. dulcis is a deciduous tree species native to East Asia (China, Japan, and Korea). In Brazil it can reach up to 25 m in height, with trunks up to 50 cm DBH and crowns up to 8 m in diameter (Liu et al. 2015). Its reproductive phases and phenology are very distinctive: at regrowth its leaves are light green, whereas most native species are evergreen with dark green leaves. This makes it easy to recognize visually in high-resolution images.

2.2 WorldView-2 satellite

WorldView-2 belongs to a new generation of imaging satellites. It is a commercial satellite with passive sensors owned by DigitalGlobe®, in a sun-synchronous orbit at an altitude of approximately 770 km. It has eight multispectral bands: (1) coastal blue (CB: 400–450 nm), (2) blue (B: 450–510 nm), (3) green (G: 510–580 nm), (4) yellow (Y: 585–625 nm), (5) red (R: 630–690 nm), (6) red edge (RE: 705–745 nm), (7) near-infrared 1 (NIR-1: 770–895 nm), and (8) near-infrared 2 (NIR-2: 860–1040 nm), with a spatial resolution of 1.84 m. It also has a panchromatic band with 0.46 m spatial resolution and a spectral range of 450–800 nm. Its swath width (ground coverage of a captured image) is 16.4 km, and its radiometric resolution is 11 bits per pixel. Its revisit time is 3.7 days at maximum spatial resolution and 1.1 days when the spatial resolution is halved; this shorter revisit relies on off-nadir pointing, which also enables the satellite to acquire stereoscopic images (DigitalGlobe 2010). The WorldView-2 mission was launched in 2009 and is currently operational.
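The composition wavelengths used throughout this work (R=660 nm, G=545 nm, B=480 nm, NIR-2=950 nm) are the centers of the band intervals listed above. The short sketch below encodes those intervals in a dictionary and computes the band centers; the names `WV2_BANDS`, `band_center`, and `composition` are illustrative only and are not part of any WorldView-2 toolkit.

```python
# Spectral intervals (nm) of the eight WorldView-2 multispectral bands, as listed in the text.
WV2_BANDS = {
    "CB": (400, 450), "B": (450, 510), "G": (510, 580), "Y": (585, 625),
    "R": (630, 690), "RE": (705, 745), "NIR-1": (770, 895), "NIR-2": (860, 1040),
}


def band_center(name: str) -> float:
    """Midpoint of a band's spectral interval, in nanometres."""
    lower, upper = WV2_BANDS[name]
    return (lower + upper) / 2


def composition(*names: str) -> list[float]:
    """Center wavelengths of the bands mapped to the R, G, and B display channels."""
    return [band_center(n) for n in names]


print("RGB:", composition("R", "G", "B"))      # [660.0, 545.0, 480.0], true color
print("NIR:", composition("NIR-2", "G", "B"))  # [950.0, 545.0, 480.0], red replaced by NIR-2
```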

2.3 Image acquisition and pre-processing

We acquired a WorldView-2 image captured on 30 April 2016 at 1:42 PM (WV2-2016) with less than 3% cloud cover; the image covered about 85% of the Imbituvão River sub-basin. The image was orthorectified (WGS 84, UTM zone 22S) with a digital elevation model (DEM) at a scale equivalent to the spatial resolution of the image. It was then atmospherically corrected, and its radiance values were converted to reflectance with the Fast Line-of-sight Atmospheric Analysis of Spectral Hypercubes (FLAASH) algorithm (Perkins 2012). After atmospheric correction, the multispectral bands were fused with the panchromatic band by the principal components spectral sharpening (PC spectral sharpening) algorithm (Welch and Ehlers 1987), with nearest-neighbor resampling to a spatial resolution of 0.5 m. Sample collection, attribute selection, feature extraction, data mining, and classification were performed after this pre-processing.
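Principal components (PC) spectral sharpening can be sketched as follows: the multispectral bands are resampled to the panchromatic grid by nearest neighbor, rotated into principal components, the first component is replaced by the panchromatic band (rescaled to that component's mean and standard deviation), and the rotation is inverted. This is a generic NumPy sketch assuming co-registered inputs and an integer resolution ratio (4:1 between 1.84 m and 0.46 m); it is not the implementation in the software used by the authors, and the function names are hypothetical.

```python
import numpy as np


def upsample_nearest(band: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbor resampling: repeat each pixel factor x factor times."""
    return np.repeat(np.repeat(band, factor, axis=0), factor, axis=1)


def pc_pansharpen(ms: np.ndarray, pan: np.ndarray, factor: int) -> np.ndarray:
    """Sketch of PC spectral sharpening.

    ms:  (bands, h, w) multispectral cube at coarse resolution.
    pan: (h * factor, w * factor) panchromatic band at fine resolution.
    """
    up = np.stack([upsample_nearest(b.astype(float), factor) for b in ms])
    nb, rows, cols = up.shape
    pixels = up.reshape(nb, -1).T                  # one row per pixel, one column per band
    mean = pixels.mean(axis=0)
    centered = pixels - mean

    # Eigen-decomposition of the band covariance matrix, sorted by decreasing variance.
    _, eigvec = np.linalg.eigh(np.cov(centered, rowvar=False))
    eigvec = eigvec[:, ::-1].copy()
    if eigvec[:, 0].sum() < 0:                     # orient PC1 to correlate positively with brightness
        eigvec[:, 0] *= -1
    pcs = centered @ eigvec

    # Replace PC1 with the panchromatic band, matched to PC1's mean and standard deviation.
    p = pan.ravel().astype(float)
    pc1 = pcs[:, 0]
    pcs[:, 0] = (p - p.mean()) / (p.std() + 1e-12) * pc1.std() + pc1.mean()

    # Inverse rotation (eigvec is orthonormal) and restoration of the band means.
    fused = pcs @ eigvec.T + mean
    return fused.T.reshape(nb, rows, cols)
```

Substituting only the first component is what keeps most of the spectral information of the original bands while injecting the spatial detail of the panchromatic band.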

2.4 Sample collection and spectral attributes

Before choosing the area and collecting the samples, we performed a commonly used correlation analysis between bands. Band correlation is computed from the reflectance of each pixel in each band, and the resulting correlation matrix has dimensions nb × nb, where nb is the number of bands of the input data (here, nb = 8). This analysis indicates the similarity between bands; however, the choice of composition depends on the image application (Inglada 2002). For this work, low similarity values were sought so that the composition would carry more information. The most dissimilar composition for detecting H. dulcis and A. angustifolia was NIR (NIR-2=950 nm, G=545 nm, B=480 nm). After the composition was chosen, the image was converted from 11 bits to 8 bits by interpolation, reducing its radiometric depth (from 2048 to 256 gray levels) and thus the data volume. The chosen composition was compared with the true color RGB composition (R=660 nm, G=545 nm, B=480 nm), as shown in Fig. 3A. From this point on, NIR refers to the composition in which the red band is replaced by near-infrared, and RGB to the natural color composition.
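The band-correlation step and the radiometric conversion can be sketched as below. The ranking criterion for candidate compositions (lowest mean absolute pairwise correlation among three bands) and the linear 11-to-8-bit rescaling are assumptions made for illustration, since the text specifies neither formula; the function names are hypothetical.

```python
import itertools

import numpy as np


def band_correlation(cube: np.ndarray) -> np.ndarray:
    """Pearson correlation between the bands of a (nb, h, w) cube -> (nb, nb) matrix."""
    return np.corrcoef(cube.reshape(cube.shape[0], -1))


def least_correlated_triplet(corr: np.ndarray) -> tuple:
    """Three-band combination with the lowest mean |r| between its band pairs."""
    best, best_score = None, np.inf
    for trio in itertools.combinations(range(corr.shape[0]), 3):
        score = np.mean([abs(corr[i, j]) for i, j in itertools.combinations(trio, 2)])
        if score < best_score:
            best, best_score = trio, score
    return best, best_score


def to_8bit(band11: np.ndarray) -> np.ndarray:
    """Linearly rescale 11-bit digital numbers (0-2047) to 8 bits (0-255)."""
    return np.round(np.clip(band11, 0, 2047) * 255.0 / 2047.0).astype(np.uint8)
```

For the WorldView-2 image, `band_correlation` would return the 8 × 8 matrix described above, and `least_correlated_triplet` would search its 56 three-band combinations.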

Spectral-band correlation, radiometric conversion, and sample collection by clipping the images of the crowns of A. angustifolia and H. dulcis. A Correlation between the 8 spectral bands of the WorldView-2 sensor; composition choice: near-infrared (NIR-2=950 nm, G=545 nm, B=480 nm) and true color composition (R=660 nm, G=545 nm, B=480 nm); and radiometric conversion. B Collection of samples by clipping the crowns of A. angustifolia and H. dulcis into 20 × 20 px windows in both compositions, with simultaneous confirmation on the RPA images

A window of 20 × 20 pixels was used to crop the treetops in the images (sampling). This size was chosen to cover as much of the crown area of both species as possible while avoiding pixels that do not belong to the crowns, such as border pixels at the edges of the tree. With an aim similar to that of the present study, Peerbhay et al. (2016) sought to detect the invasive species Solanum mauritianum (native to the Brazilian Atlantic Forest) in South Africa, also in WorldView-2 images and with RF as the classifier. They obtained optimal clipping dimensions between 15 and 17 m², an average of 16 × 16 pixels in the fused image. This supports the choice of a somewhat larger 20 × 20 px window for larger species such as H. dulcis and A. angustifolia.
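For reference, the ground footprint of a square clipping window follows directly from the 0.5 m pixel size of the fused image. The helper below is a hypothetical illustration that compares the 16 × 16 px average reported by Peerbhay et al. (2016) with the 20 × 20 px window used here.

```python
def window_footprint(pixels: int, pixel_size_m: float) -> tuple[float, float]:
    """Side length (m) and area (m^2) covered by a square window of pixels x pixels."""
    side = pixels * pixel_size_m
    return side, side ** 2


for n in (16, 20):
    side, area = window_footprint(n, 0.5)
    print(f"{n} x {n} px at 0.5 m -> {side:.1f} m x {side:.1f} m = {area:.0f} m^2")
```

A 20 × 20 px window therefore spans 10 m × 10 m on the ground, of the same order as the crown dimensions given for both species in Section 2.1.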

Samples were collected so that the sampled individuals presented the same crown shape pattern and were distributed homogeneously across the image, avoiding overlapping crowns, trees at edges, and isolated trees (Fig. 1). Representative individuals (healthy trees with similar crown sizes and shapes) were also deliberately chosen as a collection criterion. A total of 45 clippings were made for each species (A. angustifolia and H. dulcis) in each composition (RGB and NIR), i.e., 90 clippings per composition (Crisigiovanni et al. 2021). The entire clipping procedure was performed manually in GIMP 2.8.22, with simultaneous visual validation against RPA images with a spatial resolution of 0.06 m (eBee, Sensefly®). The RPA images were not acquired in the same year and month as the satellite image; they were captured in August 2018, when H. dulcis has a very distinct leaf color (Fig. 3B).

For each composition, the 90 clippings of the two species were divided into two subsets. Two thirds (60 samples) were used to build the classification models (MOD), and 10-fold cross-validation (CV; 90% of the samples for training and 10% for testing in each fold) was applied to this set. The remaining third (30 samples) was kept out of training for external validation (EV) of the models. Both the collection and the division were balanced between species to avoid class-imbalance problems (More and Rana 2017). Clippings were assigned to model construction and validation at random, for both the RGB and NIR compositions. Each clipping contained 400 pixels, and each pixel could take one of 256 digital numbers (DN) in each band. Each composition had three bands, i.e., three spectral attributes per image. The spectral and textural features are derived from the distribution and spatial organization of these digital values in each band. The reflectance histograms of each image were extracted with Chemostat software® (Helfer et al. 2015).
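The balanced two-thirds/one-third split and the per-band histograms can be sketched as below. The 256-bin histogram (one bin per 8-bit digital number) and the random seed are assumptions; the authors extracted the histograms with Chemostat, whose settings are not given, and the function names are hypothetical.

```python
import numpy as np


def band_histograms(clip: np.ndarray) -> np.ndarray:
    """(rows, cols, bands) uint8 clipping -> concatenated 256-bin histogram per band."""
    return np.concatenate(
        [np.bincount(clip[..., b].ravel(), minlength=256) for b in range(clip.shape[-1])]
    )


def balanced_split(labels, frac_model=2 / 3, seed=0):
    """Split indices class by class so that MOD and EV keep the same class balance."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    model_idx, ev_idx = [], []
    for c in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == c))
        n_model = int(round(frac_model * len(idx)))
        model_idx.extend(idx[:n_model])
        ev_idx.extend(idx[n_model:])
    return np.sort(model_idx), np.sort(ev_idx)


# 45 clippings per species in one composition (0 = A. angustifolia, 1 = H. dulcis).
labels = [0] * 45 + [1] * 45
mod, ev = balanced_split(labels)
print(len(mod), len(ev))  # 60 30
```

Each 20 × 20 × 3 clipping thus yields 3 × 256 = 768 histogram values, each band's histogram summing to the 400 pixels of the clipping.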

2.5 Textural attributes

2.5.1 Pyramid histogram of oriented gradients

An image pyramid is a technique used to reduce the dimensionality of raster images: the image dimensions are halved at each level of the pyramid (e.g., 20 × 20 px, 10 × 10 px, 5 × 5 px). To achieve this, a weighted average of the nearest neighbors is computed, so that every four pixels of the image become one pixel (Fig. 4A). The histogram of oriented gradients (HOG), a widely used feature descriptor in computer vision and image processing for object detection (Korom et al. 2014), is then applied to the reduced images.
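The pyramid reduction described above can be sketched as repeated averaging of non-overlapping 2 × 2 blocks. Equal weights for the four neighbors are a simplifying assumption, since the weighting scheme is not specified; the function name is hypothetical.

```python
import numpy as np


def image_pyramid(img: np.ndarray, levels: int = 3) -> list:
    """Return [img, img/2, img/4, ...]; each level averages non-overlapping 2 x 2 blocks."""
    out = [img.astype(float)]
    for _ in range(levels - 1):
        a = out[-1]
        rows, cols = a.shape[0] // 2 * 2, a.shape[1] // 2 * 2  # drop an odd last row/column
        a = a[:rows, :cols]
        out.append(a.reshape(rows // 2, 2, cols // 2, 2).mean(axis=(1, 3)))
    return out


clip = np.arange(400, dtype=float).reshape(20, 20)
print([level.shape for level in image_pyramid(clip)])  # [(20, 20), (10, 10), (5, 5)]
```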

Process of obtaining textural attributes with the PHOG and Edge Filter descriptors. A PHOG: reduction of the dimensionality of the clipped crown image, followed by application of the histogram of oriented gradients by scanning the image with windows. B Application of the Edge Filter to detect discontinuities in pixel values. Both images of A. angustifolia in A and B come from WV2-2016, true color composition (R=660 nm, G=545 nm, B=480 nm)

The HOG method scans the image horizontally and vertically with overlapping windows of defined dimensions. This scan encodes information about the orientation of the intensity gradients across the whole clipped image. The encoding uses vectors whose direction ranges from 0 to 360° (0°, 45°, 90°, 135°, ..., 360°) and whose intensity is based on the digital values of each pixel (Fig. 4A). HOG is similar to edge orientation histograms (or Edge Filters), although it uses local contrast normalization for greater accuracy. Since its introduction, HOG has been applied in different fields, including the detection of forest species in satellite imagery (Dalal and Triggs 2005; Rybski et al. 2010; Hu and Collomosse 2013; Torrione et al. 2014; Jipeng et al. 2020). In this work, PHOG and Edge Filter (Sections 2.5.1 and 2.5.2) were applied to all samples (cutouts) of both compositions (NIR and RGB) and for both A. angustifolia and H. dulcis.
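A simplified HOG and its pyramid version (PHOG) can be sketched as follows: gradients are computed by finite differences, orientations in 0 to 360° are binned into eight 45° sectors weighted by gradient magnitude, and each cell histogram is L2-normalized. The 5 × 5 px cell size, the per-cell normalization (full HOG normalizes over overlapping blocks), and the three-level pyramid are illustrative assumptions, not the settings of the Weka filter used in this work.

```python
import numpy as np


def hog(img: np.ndarray, cell: int = 5, bins: int = 8) -> np.ndarray:
    """Magnitude-weighted orientation histograms (0-360 degrees) over non-overlapping cells."""
    gy, gx = np.gradient(img.astype(float))          # derivatives along rows (y) and columns (x)
    mag = np.hypot(gx, gy)
    ang = np.degrees(np.arctan2(gy, gx)) % 360.0
    idx = np.minimum((ang // (360.0 / bins)).astype(int), bins - 1)
    feats = []
    for r in range(0, img.shape[0] - cell + 1, cell):
        for c in range(0, img.shape[1] - cell + 1, cell):
            hist = np.bincount(
                idx[r:r + cell, c:c + cell].ravel(),
                weights=mag[r:r + cell, c:c + cell].ravel(),
                minlength=bins,
            )
            feats.append(hist / (np.linalg.norm(hist) + 1e-6))  # local contrast normalization
    return np.concatenate(feats)


def phog(img: np.ndarray, levels: int = 3, cell: int = 5) -> np.ndarray:
    """Concatenate HOG features from successively halved (2 x 2 averaged) images."""
    feats, a = [], img.astype(float)
    for _ in range(levels):
        feats.append(hog(a, cell))
        rows, cols = a.shape[0] // 2 * 2, a.shape[1] // 2 * 2
        a = a[:rows, :cols].reshape(rows // 2, 2, cols // 2, 2).mean(axis=(1, 3))
    return np.concatenate(feats)
```

For a 20 × 20 px clipping this yields 16 + 4 + 1 cells of 8 bins each, i.e., 168 values per band under these assumptions.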

2.5.2 Edge Filter

Edges can be defined as points in a digital image where the brightness changes sharply, forming discontinuities. These pixels, where the reflectance of the image changes drastically, are usually arranged in sets of line or curve segments. Edge detection includes a variety of mathematical methods that aim to identify these discontinuities caused by brightness changes. Like HOG, this method scans the entire image vertically and horizontally with overlapping windows of defined dimensions. The scan identifies edges and organizes them according to their distribution and location (Fig. 4B). This technique has been widely used in computer vision for unsupervised object detection (Başa 2015) and for image segmentation, mainly of satellite images, in object-oriented classification (Kang et al. 2013). Both textural descriptors (HOG and Edge Filter) were computed with the ImageFilter package implemented in Weka 3.9.3 (Waikato Environment for Knowledge Analysis) (Frank et al. 2016).
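An edge-histogram descriptor in the spirit of the MPEG-7 edge histogram can be sketched as below: the clipping is divided into a 4 × 4 grid of sub-images, each 2 × 2 pixel block is tested against five edge filters (vertical, horizontal, 45°, 135°, and non-directional), and the dominant edge type is counted when its strength exceeds a threshold. This layout gives 16 × 5 = 80 values. The filter coefficients, the threshold of 11, and the use of single 2 × 2 pixel blocks are assumptions for illustration and may differ from the Weka ImageFilter implementation used in this work.

```python
import numpy as np

S = np.sqrt(2.0)
# 2 x 2 edge filters: vertical, horizontal, 45-degree, 135-degree, non-directional.
EDGE_FILTERS = np.array([
    [[1.0, -1.0], [1.0, -1.0]],
    [[1.0, 1.0], [-1.0, -1.0]],
    [[S, 0.0], [0.0, -S]],
    [[0.0, S], [-S, 0.0]],
    [[2.0, -2.0], [-2.0, 2.0]],
])


def edge_histogram(img: np.ndarray, grid: int = 4, threshold: float = 11.0) -> np.ndarray:
    """grid x grid sub-images x 5 edge types -> relative frequency of dominant edge types."""
    img = img.astype(float)
    sh, sw = img.shape[0] // grid, img.shape[1] // grid
    rows, cols = range(0, sh - 1, 2), range(0, sw - 1, 2)  # non-overlapping 2 x 2 blocks
    feats = np.zeros((grid, grid, len(EDGE_FILTERS)))
    for i in range(grid):
        for j in range(grid):
            sub = img[i * sh:(i + 1) * sh, j * sw:(j + 1) * sw]
            for r in rows:
                for c in cols:
                    strength = np.abs((EDGE_FILTERS * sub[r:r + 2, c:c + 2]).sum(axis=(1, 2)))
                    k = strength.argmax()
                    if strength[k] > threshold:           # weak responses count as "no edge"
                        feats[i, j, k] += 1
            feats[i, j] /= max(len(rows) * len(cols), 1)
    return feats.ravel()
```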

2.6 Data mining (genetic algorithm)

Before classification, a data mining technique was used to select the best features from the spectral data (reflectance histograms) and the textural data (oriented gradient histograms extracted by PHOG). The data extracted by Edge Filter did not go through the mining process because this descriptor produced few features. The genetic algorithm (GA) was used for this mining; it is a metaheuristic inspired by the adaptive process of natural selection and uses operators such as crossover and mutation to select the best features in a dataset (Huang and Wang 2006). GA belongs to the larger class of evolutionary algorithms (EA) and is commonly used to generate high-quality solutions to optimization problems in operations research, but it has also been widely applied to object detection in remote sensing images (Bhanu et al. 1995; Celik 2010; Hashemi et al. 2010; Xu et al. 2020).

To select the best spectral and textural features of the images, the GA was configured with the following hyperparameters: a crossover probability of 60% and a mutation probability of 3.3%, with a population of 20 individuals evolved over 20 generations. The algorithm was applied to the 60 samples set aside for building the classification models. Figure 5 details how the attributes of the clippings of each image are input to the GA.
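A genetic-algorithm feature selection with the reported settings (crossover probability 0.6, mutation probability 0.033, 20 individuals, 20 generations; these values appear to coincide with the defaults of Weka's GeneticSearch) can be sketched as below, with each individual encoded as a binary mask over the features. The fitness function, a sum of per-feature Fisher scores minus a per-feature penalty, is a hypothetical stand-in: the text does not state which subset evaluator was paired with the GA. Tournament selection, single-point crossover, and elitism are likewise assumptions of this sketch.

```python
import numpy as np


def fisher_scores(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Two-class Fisher score per feature: (mu1 - mu0)^2 / (var1 + var0)."""
    a, b = X[y == 0], X[y == 1]
    return (a.mean(0) - b.mean(0)) ** 2 / (a.var(0) + b.var(0) + 1e-12)


def ga_select(X, y, pop_size=20, generations=20, p_cross=0.6, p_mut=0.033,
              penalty=0.1, seed=0):
    """Return the best binary feature mask found and the best-so-far fitness per generation."""
    rng = np.random.default_rng(seed)
    scores = fisher_scores(X, y)
    n = X.shape[1]

    def fitness(mask):
        # Hypothetical merit: reward discriminative features, penalize subset size.
        return scores[mask].sum() - penalty * mask.sum() if mask.any() else -np.inf

    pop = rng.random((pop_size, n)) < 0.5
    fit = np.array([fitness(m) for m in pop])
    best, best_fit = pop[fit.argmax()].copy(), fit.max()
    history = [best_fit]
    for _ in range(generations):
        # Binary tournament selection of parents.
        pairs = rng.integers(0, pop_size, (pop_size, 2))
        winners = np.where(fit[pairs[:, 0]] >= fit[pairs[:, 1]], pairs[:, 0], pairs[:, 1])
        parents = pop[winners]
        children = parents.copy()
        for i in range(0, pop_size - 1, 2):
            if rng.random() < p_cross:                   # single-point crossover
                cut = rng.integers(1, n)
                children[i, cut:] = parents[i + 1, cut:]
                children[i + 1, cut:] = parents[i, cut:]
        children ^= rng.random(children.shape) < p_mut   # bit-flip mutation
        children[0] = best                               # elitism: keep the best mask so far
        pop = children
        fit = np.array([fitness(m) for m in pop])
        if fit.max() > best_fit:
            best, best_fit = pop[fit.argmax()].copy(), fit.max()
        history.append(best_fit)
    return best, history
```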

2.7 Classification algorithms: ANN and RF

After the GA selected the best spectral and textural features for both compositions, two classification algorithms were applied: (1) ANN and (2) RF. Both were evaluated by 10-fold cross-validation (10/90). Classification was also evaluated by the Fmeasure, which combines Precision and Recall (Section 2.8). The machine learning algorithms were run in Weka 3.9.3 (Frank et al. 2016).
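The 10/90 k-fold cross-validation can be sketched as a stratified split of the 60 model-building samples into 10 folds, each fold serving once as the 10% test set while the remaining 90% trains the model. Stratification by species and the fixed seed are assumptions of this sketch; the helper names are hypothetical.

```python
import random


def stratified_kfold(labels, k=10, seed=0):
    """Assign sample indices to k folds, dealing each class out round-robin."""
    rng = random.Random(seed)
    by_class = {}
    for i, label in enumerate(labels):
        by_class.setdefault(label, []).append(i)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for j, i in enumerate(indices):
            folds[j % k].append(i)
    return folds


def cv_splits(labels, k=10, seed=0):
    """Yield (train, test) index lists; each fold is the test set once (10/90 for k = 10)."""
    everything = set(range(len(labels)))
    for test in stratified_kfold(labels, k, seed):
        yield sorted(everything - set(test)), sorted(test)


labels = [0] * 30 + [1] * 30  # 60 model-building samples, balanced between species
sizes = [(len(train), len(test)) for train, test in cv_splits(labels)]
print(sizes[0])  # (54, 6)
```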

2.7.1 Artificial neural network

The ANN is a bioinspired algorithm based on the functioning of layers of biological neurons (Tang et al. 2016). We used a multilayer perceptron (MLP) architecture, consisting of a layer of input neurons, a hidden layer, and an output layer. The signals received by the input neurons represent the data entering the algorithm, in this case the spectral and textural attributes. ANN training proceeds in iterations called epochs; between epochs, the weights assigned to the connections between neurons may be adjusted, changing the paths through the network used for classification. The size of these adjustments is controlled by the learning rate, which was set to 0.3, and the training time was varied from 0 to 500 epochs (Ramchoun et al. 2016).
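A single-hidden-layer perceptron trained by backpropagation with the learning rate reported here (0.3) can be sketched in NumPy as below. The number of hidden neurons, sigmoid activations, cross-entropy loss, full-batch gradient descent without momentum, and the synthetic data are assumptions for illustration; Weka's MultilayerPerceptron differs in details (for example, it also applies a momentum term).

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def train_mlp(X, y, n_hidden=4, lr=0.3, epochs=500, seed=0):
    """Full-batch backpropagation for an input-hidden-output network (binary output)."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W1, b1 = rng.normal(0.0, 0.5, (d, n_hidden)), np.zeros(n_hidden)
    W2, b2 = rng.normal(0.0, 0.5, (n_hidden, 1)), np.zeros(1)
    t = np.asarray(y, dtype=float).reshape(-1, 1)
    for _ in range(epochs):                      # one weight update per epoch
        h = sigmoid(X @ W1 + b1)                 # hidden-layer activations
        p = sigmoid(h @ W2 + b2)                 # output: probability of class 1
        d_out = (p - t) / n                      # gradient of mean cross-entropy w.r.t. output logit
        d_hid = (d_out @ W2.T) * h * (1.0 - h)   # error back-propagated to the hidden layer
        W2 -= lr * (h.T @ d_out)
        b2 -= lr * d_out.sum(axis=0)
        W1 -= lr * (X.T @ d_hid)
        b1 -= lr * d_hid.sum(axis=0)
    return W1, b1, W2, b2


def predict_mlp(params, X):
    W1, b1, W2, b2 = params
    return (sigmoid(sigmoid(X @ W1 + b1) @ W2 + b2).ravel() >= 0.5).astype(int)


# Synthetic, well-separated two-class data (hypothetical stand-in for the attributes).
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-2, 1, (30, 4)), rng.normal(2, 1, (30, 4))])
y = np.repeat([0, 1], 30)
params = train_mlp(X, y)
print("training accuracy:", (predict_mlp(params, X) == y).mean())
```

Because `lr` scales every weight update, it is the learning rate described above; `epochs` plays the role of the training time.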

2.7.2 Random forest

RF is an ensemble algorithm based on decision trees. It draws bootstrap samples from the original dataset and grows a decision tree on each; when splitting a node, instead of searching for the most important feature among all features, it looks for the best feature among a random subset of features (Breiman 2001). Here, the hyperparameters were 100 decision trees built in 100 iterations (Guan et al. 2013).
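The core of a random forest (bootstrap resampling plus a random subset of features evaluated at each split, followed by majority voting) can be sketched in NumPy with 100 trees, as in the configuration above. Gini impurity, the square-root rule for the feature-subset size, a depth limit of 8, and the synthetic data are assumptions; this is a teaching sketch, not Weka's RandomForest.

```python
import numpy as np


def gini(y: np.ndarray) -> float:
    p = np.bincount(y) / len(y)
    return 1.0 - np.sum(p ** 2)


def best_split(X, y, features):
    """Best (feature, threshold) among the candidate features, by weighted Gini impurity."""
    best_f, best_t, best_imp = None, None, gini(y)
    for f in features:
        for t in np.unique(X[:, f])[:-1]:               # thresholds between observed values
            left = X[:, f] <= t
            imp = (left.sum() * gini(y[left]) + (~left).sum() * gini(y[~left])) / len(y)
            if imp < best_imp:
                best_f, best_t, best_imp = f, t, imp
    return best_f, best_t


def grow_tree(X, y, rng, n_features, depth=0, max_depth=8):
    if depth == max_depth or np.all(y == y[0]):
        return int(np.bincount(y).argmax())             # leaf: majority class
    candidates = rng.choice(X.shape[1], size=n_features, replace=False)
    f, t = best_split(X, y, candidates)
    if f is None:                                       # no split improves impurity
        return int(np.bincount(y).argmax())
    left = X[:, f] <= t
    return (f, t,
            grow_tree(X[left], y[left], rng, n_features, depth + 1, max_depth),
            grow_tree(X[~left], y[~left], rng, n_features, depth + 1, max_depth))


def predict_tree(node, x):
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


def random_forest(X, y, n_trees=100, seed=0):
    rng = np.random.default_rng(seed)
    n_features = max(1, int(np.sqrt(X.shape[1])))
    trees = []
    for _ in range(n_trees):
        boot = rng.integers(0, len(y), len(y))          # bootstrap sample (with replacement)
        trees.append(grow_tree(X[boot], y[boot], rng, n_features))
    return trees


def predict_forest(trees, X):
    votes = np.array([[predict_tree(t, x) for t in trees] for x in X])
    return np.array([np.bincount(v).argmax() for v in votes])  # majority vote


rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-2, 1, (30, 4)), rng.normal(2, 1, (30, 4))])
y = np.repeat([0, 1], 30)
forest = random_forest(X, y)
print("training accuracy:", (predict_forest(forest, X) == y).mean())
```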

2.8 Accuracy analysis

To verify the classification capability of the ANN and RF algorithms, we used the percentage of correct answers for both species relative to the total number of cutouts classified by the model. We also used Precision (Eq. 1), Recall (Eq. 2), and Fmeasure (Eq. 3) to assess omission and commission errors; these metrics have been used extensively to analyze the accuracy of classifier algorithms (Hand and Christen 2018). They are given by:

$$\text{Precision} = \frac{TP}{TP + FP} \tag{1}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{2}$$

$$\text{Fmeasure} = \frac{\text{Precision} + \text{Recall}}{2} \tag{3}$$

where TP is the number of correctly classified samples of each species (A. angustifolia or H. dulcis); FN is the number of false-negative detections, i.e., samples of A. angustifolia or H. dulcis that were not correctly classified (omission errors); and FP is the number of false-positive detections, i.e., samples of A. angustifolia classified as H. dulcis and vice versa (commission errors). Fmeasure corresponds to the mean of Precision and Recall (in this case, a simple mean was used because the data are balanced).
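The metrics defined in this section can be computed from the confusion counts as below. Note that the conventional F-measure (F1) is the harmonic mean, 2 × Precision × Recall / (Precision + Recall), which equals the arithmetic mean only when Precision = Recall; the sketch returns both so they can be compared. For example, for the H. dulcis PHOG/RGB cross-validation values reported in Section 3.2 (Precision 23.3%, Recall 100%), the arithmetic mean is about 61.7% and the harmonic mean about 37.8%. The function names are hypothetical.

```python
def precision_recall_f(tp: int, fp: int, fn: int) -> dict:
    """Precision, Recall, and two ways of combining them."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f_arithmetic": (precision + recall) / 2,          # simple mean, as described in the text
        "f_harmonic": (2 * precision * recall / (precision + recall)
                       if precision + recall else 0.0),   # conventional F1 score
    }


def combine(precision: float, recall: float) -> tuple[float, float]:
    """Arithmetic and harmonic means of given Precision and Recall values."""
    return (precision + recall) / 2, 2 * precision * recall / (precision + recall)


# H. dulcis, PHOG, RGB composition, cross-validation (values reported in Section 3.2).
arith, harm = combine(0.233, 1.0)
print(f"arithmetic: {arith:.3f}, harmonic: {harm:.3f}")
```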

3 Results

3.1 Spectral and textural feature selection

The spectral features were extracted from the reflectance histograms of the image clippings. Figure 6 shows that the reflectance patterns in each of the RGB and NIR bands are very distinct between H. dulcis (B) and A. angustifolia (A). The shade of green reflected by A. angustifolia is darker than that of H. dulcis. In terms of gray levels, the reflectance range of A. angustifolia is about 50 gray levels darker than that of H. dulcis. H. dulcis also shows a remarkably greater dispersion of reflectance values in the infrared range.

Reflectance distribution histograms of the selected bands for each species A. angustifolia and H. dulcis. A Histogram of distribution of reflectance values for the four bands selected in the work for A. angustifolia. B Histogram for H. dulcis. Reflectance percentage values range from 0 to 100% and gray levels in 8 bits from 0 (white) to 255 (black). The axes were limited to the maximum values for easy visualization

Feature extraction by the textural descriptors was also based on the reflectance values in Fig. 6. However, textural descriptors take into account the position of each pixel to find discontinuities or sudden changes in reflectance patterns. The number of features extracted by PHOG depends on the image size and on the number of pyramid levels used to reduce the image’s dimensionality. For the different compositions and species, PHOG yielded 680 features in total, approximately 225 features per spectral band. The Edge Filter results were different, since this descriptor extracts a different number of features per species. For the RGB and NIR compositions, the Edge Filter yielded 80 features, approximately 25 features per spectral band.

The genetic search algorithm, with hyperparameters proposed in the literature, reduced the amount of input data for the classifier algorithms by about 60%. Using GA yielded improvements of up to 10% compared with running the RF and ANN classifiers without GA. This reduction was observed both for the reflectance histograms and for the features extracted by the PHOG textural descriptor.

3.2 ANN and RF classifiers

Both ANN and RF classifiers performed well, with high and similar classification rates (Fig. 7A). However, RF was more accurate for both spectral and textural attributes. RF showed an average increase of 2.5%, corresponding to two additional images correctly classified in cross-validation (CV). In external validation (EV), RF increased correct classifications by 6.4%. When all features were used (MOD), the increase was only 0.3%, i.e., practically null (Table 1).

Classifications percentage by ANN and RF algorithm without distinction of spectral and textural attributes. A Average classifications by ANN and RF (regardless of composition). B Average classifications by ANN and RF, taking into account the composition RGB and NIR. CV—cross-validation; EV—external validation; and MOD—classification using all features

When the two compositions (RGB and NIR) are compared across both spectral and textural descriptors, classification was better for the NIR composition than for the RGB composition (Fig. 7B). For ANN, NIR increased classification by 11.6% in CV, 2.3% in EV, and 11.1% in MOD. For RF, the contrast between the compositions was even greater, with increases of 12.2% in CV, 8.9% in EV, and 10.6% in MOD (Table 1).

Looking at the percentage of correctly classified images per species for both algorithms, neither ANN nor RF had major difficulties in classifying the species with spectral descriptors (Fig. 8). For RGB images, H. dulcis had a higher classification rate than A. angustifolia, with a difference of 2.3% in CV. This advantage persisted, although more subtly, in MOD, with a difference of 0.8%. In contrast, A. angustifolia stood out in EV, with a classification rate 6.7% higher than that of H. dulcis (Table 1).

However, the scenario was completely different for the texture descriptors (PHOG and Edge Filter). In this case, A. angustifolia had higher classification rates than H. dulcis in all results, showing that the texture descriptors were less efficient for recognizing H. dulcis than A. angustifolia (Fig. 8). Compared with A. angustifolia, H. dulcis classification rates were 25.4% lower in CV (equivalent here to 7 misclassified individuals), 20% lower in EV (6 individuals), and 15.8% lower in MOD (4 individuals) (Table 1).

Differences between species were more pronounced when the images were classified with the PHOG and Edge Filter texture descriptors (Fig. 9 and Table 1). Texture-based classification of H. dulcis was poor, mainly because of the PHOG features. The scenario was reversed for A. angustifolia, which showed only subtle variations in classification. For A. angustifolia, PHOG descriptors performed best with both RF and ANN, with cross-validation (CV) accuracy above 99%, well above that for H. dulcis.

Because PHOG did not yield high classification rates for H. dulcis, we examined this species in more detail through the PHOG accuracy metrics for the RGB composition (Table 1). CV: Precision 23.3%, Recall 100.0%, Fmeasure 61.7%; EV: Precision 13.3%, Recall 66.7%, Fmeasure 40.0%; MOD: Precision 36.7%, Recall 100.0%, Fmeasure 68.3% (these values were the same for RF and ANN). The main error is associated with the omission of H. dulcis (images not correctly classified), whereas commission errors (A. angustifolia assigned to the H. dulcis class) were not marked. This indicates that the difficulty in classifying H. dulcis was linked to the features extracted by PHOG for the RGB composition.

The computational time spent constructing and training the models was also analyzed. The computational effort required to run the RF algorithm was about 10 times lower than that of the ANN algorithm (data not shown). When the recognition rate of the ANN classifier was analyzed against the number of training epochs, the network stabilized within 500 epochs for both the RGB and NIR compositions. The learning of the neural network was plotted as its percentage of correct classification (cross-validation) as a function of epochs (Fig. 10A and 10B). In addition, the curve required more epochs to reach asymptotic behavior when textural attributes were used than when spectral attributes from the RGB channels were used. The neural network was already trained at 100 epochs, with no loss of classification quality and at a much lower computational cost than with 500 epochs. The inflection points of the curves, i.e., the points giving the highest classification in the shortest possible time (obtained from the first derivative of the function), occur within the first 10 iterations.

Fig. 10 ANN learning curves as a function of epochs for each composition and descriptor. A Percentage of classification as a function of the number of epochs for the spectral attributes (RGB and NIR). B Percentage of classification as a function of the number of epochs for the textural attributes (PHOG and Edge Filter)
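The first-derivative criterion for choosing the number of epochs can be sketched numerically. The example estimates the slope of a learning curve with `np.gradient` and reports the first epoch at which the gain per epoch falls below a threshold. The saturating curve, the 0.5 percentage-point threshold, and the function name `plateau_epoch` are hypothetical assumptions. They are not the study's curves or procedure.

```python
import numpy as np

def plateau_epoch(epochs, accuracy, min_gain=0.5):
    """Return the first epoch at which the accuracy gain per epoch
    (first derivative of the learning curve) falls below min_gain."""
    slope = np.gradient(accuracy, epochs)  # percentage points per epoch
    below = np.flatnonzero(slope < min_gain)
    return int(epochs[below[0]]) if below.size else None

# Hypothetical saturating learning curve (not the study's data)
epochs = np.arange(1, 501)
accuracy = 95.0 - 45.0 * np.exp(-epochs / 3.0)
print(plateau_epoch(epochs, accuracy))  # 11
```

On such a curve, almost all of the gain occurs in the first few epochs. This is why stopping far earlier than the last plotted epoch costs little accuracy.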

4 Discussion

4.1 MOF species composition and its detection by remote sensors

MOF is one of the most diverse phytophysiognomies in the Atlantic Rainforest. In addition to a diversified horizontal composition, it has high spatial variability and a strongly stratified vertical structure (Pelissari et al. 2018). About 106 tree species, 75 genera, and 39 botanical families were identified in forest inventories carried out in the region of the present work (Imbituvão sub-basin). Most of these species are evergreen angiosperms with great variability in leaf structure. The Myrtaceae family deserves mention, with crowns mostly in the codominant stratum of the forest. Species of the Lauraceae and Fabaceae families make up the upper or dominant strata, together with those of the Sapindaceae, Asteraceae, and Araucariaceae (represented by A. angustifolia) families. These families comprise 62.6% of all individuals sampled (Figueiredo et al. 2013). Similar patterns were found in other studies carried out in the MOF in southern Brazil (Ferreira et al. 2016b; de Oliveira et al. 2018; Pelissari et al. 2018).

The diversity of forest spectral responses is also high because of the high species diversity, spatial variability, and stratification of the MOF and its subtropical forest characteristics, which makes canopies harder to classify with remote sensors. Previous studies identified 12 species with classic machine learning techniques (support vector machine, SVM) using hyperspectral RPA data and point clouds (Sothe et al. 2019), and up to 15 species with deep learning techniques such as convolutional neural networks (CNN) (Sothe et al. 2020). In the seasonal semi-deciduous forest (a phytophysiognomy of the Atlantic Rainforest with lower diversity and without A. angustifolia), hyperspectral RPA sensors combined with RF as classifier made it possible to classify 8 species (Miyoshi et al. 2020). However, these numbers correspond to less than 15% of the species found in a conventional forest inventory of Atlantic forests.

4.2 WorldView-2 for tree species detection

The WorldView-2 multispectral satellite is widely used for tree crown detection (Immitzer et al. 2012; Korom et al. 2014; Peerbhay et al. 2016). However, compared with hyperspectral sensors, its spectral bands are still broad, mainly in the infrared. This makes it difficult to detect species in a highly diverse ecosystem such as the MOF, because the spectral signatures of different species overlap and the spectral regions where they differ are not captured by the sensor's bands. The scenario is different for European forests. For example, RF applied to WorldView-2 images achieved an identification potential of 20 species in eastern Austria (Immitzer et al. 2012).

The latest mission of the WorldView series (WorldView-3) carries one of the most advanced very high-resolution (VHR) sensors. It provides spectral data in 16 bands, covering the visible to near-infrared (VNIR, 400–1040 nm) and the shortwave infrared (SWIR, 1210–2365 nm). The combination of these spectral bands facilitates the recognition of forest species in highly diverse environments. Ferreira et al. (2016a) identified 8 species in the semi-deciduous forest by combining WV-3 images with textural attributes, and similar results were obtained by combining WV-3 images with hyperspectral data (Ferreira et al. 2019).

4.3 Morphological and phenological species characteristics

H. dulcis has well-defined phenological and reproductive cycles. In southern Brazil, this species blooms from August to February and bears ripe fruits from March to October. It also has a season of total leaf loss that runs from April/May to August, and regrowth begins at the end of August (Carvalho 1994). These phenological characteristics can easily be observed visually in high-resolution satellite images, except from February to April, when its foliage has a shade of green similar to that of other broadleaf MOF species. A. angustifolia shows no marked changes during the year. However, it can also be detected in images because it is one of the tallest species of the Atlantic Rainforest and because of its characteristic needle-like foliage.

These phenological differences between the two species were taken into account when comparing the spectral and textural descriptors. We expected a priori that the spectral attributes would be less significant because of the image acquisition date (04/30/2016), which corresponds to the beginning of the flowering of H. dulcis. At this stage, the species still has a shade of green similar to that of other broadleaf species. Nevertheless, the spectral responses proved more efficient for distinguishing the species, particularly for classifying H. dulcis. This result indicates that even when H. dulcis does not stand out visually, its reflectance contrasts strongly with that of A. angustifolia. This differentiation was even clearer when the attributes were analyzed in the composition containing the infrared band (NIR-2).

The importance of the infrared component for vegetation studies is well known. In particular, the 500–900 nm region, spanning the visible and near-infrared, was considered essential for differentiating species in Atlantic Rainforests (Ferreira et al. 2019; Miyoshi et al. 2020). On the other hand, the textural descriptors were more efficient (for both classifiers) in detecting A. angustifolia, in line with what was expected a priori. This is mainly due to the distinctive shape of its crowns, which present numerous edge discontinuities caused by changes in the shading formed by the shape of the branches (Sothe et al. 2020).

4.4 ANN and RF classifier performance

In summary, the RF and ANN classification algorithms achieved high rates of correct classification for both species, at a low computational processing cost. However, RF achieved the highest recognition values, whereas the ANN obtained lower classification rates and required a higher computational cost for network training. Some studies report greater stability and predictive power for ANN than for RF when fitting regression models for optimization purposes (Ahmad et al. 2017). Nonetheless, several studies have indicated that RF achieves better classification results on data from multispectral satellite images and requires fewer hyperparameters to be tuned when fitting its models (Chan et al. 2012; Shao et al. 2015).

This work illustrated some characteristics that support the efficient application of RF to multispectral images for classifying different tree species. The correct classification rates of both species clearly increased when the NIR composition was used. This indicates the value of the additional spectral information provided by high-quality sensors such as WorldView-2 (Ferreira et al. 2016a, 2019).

Another characteristic of RF is its greater stability with respect to the number and size of the training samples compared with other classifiers. This stability comes from the large number of decision trees it builds: each tree is trained on a random subset of the training samples, and a random subset of variables is used to split each node. Published comparisons between RF and other ensemble classifiers such as AdaBoost reported that both produced similar classification results, although RF trained faster and was more stable in classification (Chan and Paelinckx 2008; Miao et al. 2012).
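The two sources of randomness described above (bootstrap samples per tree and random feature subsets per split) correspond directly to parameters of scikit-learn's `RandomForestClassifier`. The sketch below uses that library as an illustrative stand-in. It is not the software or configuration used in this study. The synthetic two-class data and all parameter values (300 trees, square-root feature subsets, 5 folds) are assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

# Hypothetical stand-in for a table of crown features (two classes)
X, y = make_classification(n_samples=120, n_features=20, n_informative=5,
                           n_redundant=0, class_sep=2.0, random_state=0)

rf = RandomForestClassifier(
    n_estimators=300,     # many trees, each grown on different random data
    bootstrap=True,       # each tree is trained on a bootstrap sample of the rows
    max_features="sqrt",  # random subset of features tried at every node split
    oob_score=True,       # rows left out of each bootstrap give a built-in estimate
    random_state=0,
)
scores = cross_val_score(rf, X, y, cv=5, scoring="f1_macro")
rf.fit(X, y)
print(f"5-fold F-measure: {scores.mean():.3f}  OOB accuracy: {rf.oob_score_:.3f}")
```

Because individual trees are decorrelated by these two mechanisms, averaging their votes reduces variance. Apart from the number of trees and the feature-subset size, there is little else that needs tuning.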

5 Conclusion

This study demonstrated that the WorldView-2 multispectral sensor has the potential to provide sufficient information for recognizing H. dulcis and A. angustifolia in the MOF. The RF and ANN machine learning algorithms were effective in classifying these species, reaching close to 95% accuracy. This result was made possible by the sample design, which used scale factors for sample collection, and by the use of spectral and textural descriptors (PHOG and Edge Filter). Spectral attributes, mainly those related to the infrared band (NIR-2), were more efficient for classification than textural attributes. The texture-based classification of H. dulcis was not satisfactory, but the attributes extracted by the Edge Filter were efficient for classifying A. angustifolia. Finally, data mining with the genetic algorithm showed that selecting the best features reduced the input data size and increased the performance of the RF and ANN classifiers.
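The genetic algorithm (GA) feature selection mentioned above can be illustrated with a generic wrapper. Each individual is a binary mask over the features, and its fitness is the cross-validated accuracy of a classifier trained on the selected features, minus a small penalty on mask size. The GA configuration used in this work is not described in this section. Everything below is an assumption made for illustration: the Gaussian naive Bayes fitness model, the population size, the mutation rate, the penalty, the synthetic data, and the function names.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB

def fitness(mask, X, y, penalty=0.01):
    """Cross-validated accuracy on the selected features minus a small size penalty."""
    if not mask.any():
        return 0.0
    accuracy = cross_val_score(GaussianNB(), X[:, mask], y, cv=3).mean()
    return accuracy - penalty * mask.sum() / mask.size

def ga_select(X, y, pop_size=20, generations=15, p_mut=0.05, seed=0):
    """Binary-mask genetic algorithm: tournament selection, one-point crossover,
    bit-flip mutation and elitism."""
    rng = np.random.default_rng(seed)
    n = X.shape[1]
    pop = rng.random((pop_size, n)) < 0.5
    pop[0] = True  # include the full feature set in the first generation
    scores = np.array([fitness(m, X, y) for m in pop])

    def tournament():
        i, j = rng.choice(pop_size, size=2, replace=False)
        return pop[i] if scores[i] >= scores[j] else pop[j]

    for _ in range(generations):
        elite = pop[np.argmax(scores)].copy()  # best mask survives unchanged
        children = [elite]
        while len(children) < pop_size:
            a, b = tournament(), tournament()
            cut = rng.integers(1, n)  # one-point crossover position
            child = np.concatenate([a[:cut], b[cut:]])
            child ^= rng.random(n) < p_mut  # bit-flip mutation
            children.append(child)
        pop = np.array(children)
        scores = np.array([fitness(m, X, y) for m in pop])

    best = int(np.argmax(scores))
    return pop[best], scores[best]

# Hypothetical feature table (not the study's data)
X, y = make_classification(n_samples=150, n_features=15, n_informative=4,
                           n_redundant=0, random_state=1)
mask, score = ga_select(X, y)
print("selected features:", np.flatnonzero(mask), "fitness:", round(score, 3))
```

Elitism guarantees that the best fitness never decreases between generations. The size penalty favors smaller masks when accuracy is similar, which matches the goal of reducing input size.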

Additional studies covering different vegetation types, phytogeographic contexts, and periods of the year (spatiotemporal analyses) are needed to confirm the potential of WorldView-2 data for classifying these species. We suggest using the full radiometric resolution of the images (11 bits), which was reduced in this work to lower the data dimensionality. We also suggest using deep learning algorithms (e.g., CNN) to automate the extraction of spectral, textural, and spatial attributes. In addition, other MOF species should be evaluated to increase the diversity of spectral responses and improve remote forest inventories.
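To show how much radiometric detail is lost when 11-bit data are reduced, the sketch below assumes a simple linear rescale to 8 bits, implemented as a 3-bit right shift. The conversion method actually used in this work is not described here, and percentile or min–max stretches are also common. The function name `to_8bit` is hypothetical.

```python
import numpy as np

def to_8bit(dn11: np.ndarray) -> np.ndarray:
    """Linearly rescale 11-bit digital numbers (0-2047) to 8 bits (0-255)."""
    dn11 = np.clip(dn11, 0, 2047).astype(np.uint16)
    return (dn11 >> 3).astype(np.uint8)  # drop the 3 least significant bits

dn = np.arange(2048, dtype=np.uint16)    # every possible 11-bit value
dn8 = to_8bit(dn)
print(len(np.unique(dn)), len(np.unique(dn8)))  # 2048 256
```

Under this assumption, every 8 adjacent 11-bit values collapse into a single 8-bit level. Subtle reflectance differences between crowns can therefore disappear, which is why keeping the full 11 bits is suggested.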

We conclude that the sample design, the use of WorldView-2 images, the choice of descriptors, and the GA, RF, and ANN algorithms were efficient for classifying the species addressed herein. This work contributes to improving methods for recognizing and identifying tree species with high-resolution images and machine learning. It also provides information that supports the conservation and management of the MOF, since A. angustifolia is the characteristic and dominant species of this forest type, whereas H. dulcis is an aggressive invasive alien species of concern.

Abbreviations

- MOF: Mixed Ombrophilous Forest
- NCU: National Conservation Units
- IAT: Instituto Água e Terra (Brazilian environmental agency)
- DBH: Diameter at breast height
- IUCN: International Union for Conservation of Nature and Natural Resources
- DEM: Digital elevation model
- WGS: World Geodetic System
- UTM: Universal Transverse Mercator
- WV2: WorldView-2 (satellite)
- RPA: Remotely Piloted Aircraft
- ANN: Artificial neural network
- MLP: Multilayer perceptron (neural network architecture)
- RF: Random forest
- PHOG: Pyramid histogram of oriented gradients
- GA: Genetic algorithm
- R: Red
- G: Green
- B: Blue
- NIR: Near-infrared
- CV: Cross-validation
- EV: External validation
- MOD: Model using the supplied test set (all instances)

References

Ahmad MW, Mourshed M, Rezgui Y (2017) Trees vs neurons: comparison between random forest and ANN for high-resolution prediction of building energy consumption. Energy Build 147:77–89. https://doi.org/10.1016/j.enbuild.2017.04.038

Andersson FA (2005) Coniferous forests. Elsevier, Uppsala, Sweden, pp 296–297

Başa B (2015) Implementation of HOG edge detection algorithm on FPGA's. Procedia Soc Behav Sci 174:1567–1575. https://doi.org/10.1016/j.sbspro.2015.01.806

Belgiu M, Drăguţ L (2016) Random forest in remote sensing: a review of applications and future directions. ISPRS J Photogramm Remote Sens 114:24–31. https://doi.org/10.1016/j.isprsjprs.2016.01.011

Bhanu B, Lee S, Ming J (1995) Adaptive image segmentation using a genetic algorithm. IEEE Trans Syst Man Cybern 25:1543–1567. https://doi.org/10.1109/21.478442

Breiman L (2001) Random forests. Mach Learn 45:5–32. https://doi.org/10.1023/a:1010933404324

Cáceres NC, Monteiro-Filho ELA (2001) Food habits, home range and activity of Didelphis aurita (Mammalia, Marsupialia) in a forest fragment of Southern Brazil. Stud Neotropical Fauna Environ 36:85–92. https://doi.org/10.1076/snfe.36.2.85.2138

Carvalho PER (1994) Ecologia, silvicultura e usos da uva-do-Japão (Hovenia dulcis Thunberg). Embrapa Florestas-Circular Técnica 23:24

Celik T (2010) Change detection in satellite images using a genetic algorithm approach. IEEE Geosci Remote Sens Lett 7:386–390. https://doi.org/10.1109/LGRS.2009.2037024

Chan JCW, Paelinckx D (2008) Evaluation of random forest and Adaboost tree-based ensemble classification and spectral band selection for ecotope mapping using airborne hyperspectral imagery. Remote Sens Environ 112:2999–3011. https://doi.org/10.1016/j.rse.2008.02.011

Chan JCW, Beckers P, Spanhove T, Vanden BJ (2012) An evaluation of ensemble classifiers for mapping Natura 2000 heathland in Belgium using spaceborne angular hyperspectral (CHRIS/Proba) imagery. Int J Appl Earth Obs Geoinf 18:13–22. https://doi.org/10.1016/j.jag.2012.01.002

Colombo AF, Joly CA (2010) Brazilian Atlantic Forest lato sensu: the most ancient Brazilian forest, and a biodiversity hotspot, is highly threatened by climate change. Braz J Biol 70:697–708. https://doi.org/10.1590/s1519-69842010000400002

Crisigiovanni EL, Figueiredo Filho A, de Lima VA, Pesck VA (2021) Database for classification of Araucaria angustifolia (Bertol.) Kuntze and Hovenia dulcis Thunb. based on WoldView2 image clippings. V1, Zenodo repository, [dataset]. https://doi.org/10.5281/ZENODO.4545112

Dalal N, Triggs B (2005) Histograms of oriented gradients for human detection. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'05), pp 886–893

De Gasper AL, Sevegnani L, Vibrans AC et al (2013) Inventário florístico florestal de Santa Catarina: espécies da Floresta Ombrófila Mista. Rodriguésia 64:201–210

de Oliveira TWG, Schmitz R, Camaño JDZ et al (2018) Behavior of the diametric distribution of ecological groups in a Mixed Ombrophilous Forest fragment. Floresta 48:413–424. https://doi.org/10.5380/rf.v48i3.56466

Dechoum M d S, Rejmánek M, Castellani TT, Zalba SM (2015) Limited seed dispersal may explain differences in forest colonization by the Japanese raisin tree (Hovenia dulcis Thunb.), an invasive alien tree in southern Brazil. Trop Conserv Sci 8:610–622. https://doi.org/10.1177/194008291500800303

DigitalGlobe (2010) The benefits of the eight spectral bands

Ferreira MP, Zortea M, Zanotta DC, Shimabukuro YE, de Souza Filho CR (2016a) Mapping tree species in tropical seasonal semi-deciduous forests with hyperspectral and multispectral data. Remote Sens Environ 179:66–78. https://doi.org/10.1016/j.rse.2016.03.021

Ferreira T d S, Marcon AK, Salami B et al (2016b) Composição florístico-estrutural ao longo de um gradiente de borda em fragmento de floresta ombrófila mista alto-montana em Santa Catarina. Cienc Florest 26:123–134. https://doi.org/10.5902/1980509821097

Ferreira MP, Wagner FH, Aragão LEOC, Shimabukuro YE, de Souza Filho CR (2019) Tree species classification in tropical forests using visible to shortwave infrared WorldView-3 images and texture analysis. ISPRS J Photogramm Remote Sens 149:119–131. https://doi.org/10.1016/j.isprsjprs.2019.01.019

Figueiredo FA, Nauiack CHB, Roik M, Gomes GS (2013) Inventário das florestas nativas em pequenas propriedades rurais na bacia do Imbituvão. Centro-Sul do Paraná 1:115

Frank E, Hall MA, Witten IH (2016) The WEKA workbench. Online Appendix. In: Data mining: practical machine learning tools and techniques, 4th edn

Guan H, Li J, Chapman M, Deng F, Ji Z, Yang X (2013) Integration of orthoimagery and lidar data for object-based urban thematic mapping using random forests. Int J Remote Sens 34:5166–5186. https://doi.org/10.1080/01431161.2013.788261

Hand D, Christen P (2018) A note on using the F-measure for evaluating record linkage algorithms. Stat Comput 28:539–547. https://doi.org/10.1007/s11222-017-9746-6

Hashemi S, Kiani S, Noroozi N, Moghaddam ME (2010) An image contrast enhancement method based on genetic algorithm. Pattern Recogn Lett 31:1816–1824. https://doi.org/10.1016/j.patrec.2009.12.006

Helfer GA, Bock F, Marder L et al (2015) Chemostat, um software gratuito para análise exploratória de dados multivariados. Quim Nova 38:575–579. https://doi.org/10.5935/0100-4042.20150063

Hu R, Collomosse J (2013) A performance evaluation of gradient field HOG descriptor for sketch based image retrieval. Comput Vis Image Underst 117:790–806. https://doi.org/10.1016/j.cviu.2013.02.005

Huang CL, Wang CJ (2006) A GA-based feature selection and parameters optimization for support vector machines. Expert Syst Appl 31:231–240. https://doi.org/10.1016/j.eswa.2005.09.024

IAT (2007) Refinamento das Áreas Prioritárias para a Conservação da Biodiversidade no Paraná. Curitiba

Immitzer M, Atzberger C, Koukal T (2012) Tree species classification with random forest using very high spatial resolution 8-band WorldView-2 satellite data. Remote Sens 4:2661–2693. https://doi.org/10.3390/rs4092661

Inglada J (2002) Similarity measures for multisensor remote sensing images. Int Geosci Remote Sens Symp 1:104–106. https://doi.org/10.1109/igarss.2002.1024955

Jipeng T, Manasa S, Manjunath TC (2020) An optimized method using CNN, RF, cuckoo search and HOG for early detection of eye disease in humans. Eur J Electr Eng Comput Sci 4:2–5. https://doi.org/10.24018/ejece.2020.4.2.202

Kang X, Li S, Benediktsson JA (2013) Spectral–spatial hyperspectral image classification with edge-preserving filtering. IEEE Trans Geosci Remote Sens 1–12. https://doi.org/10.1109/TGRS.2013.2264508

Korom A, Phua MH, Hirata Y, Matsuura T (2014) Extracting oil palm crown from WorldView-2 satellite image. IOP Conf Ser Earth Environ Sci 18. https://doi.org/10.1088/1755-1315/18/1/012044

Kwok R (2018) Ecology’s remote-sensing revolution. Nature 556:137–138

Liu T, Hua S, Wang Z (2015) Dietary Chinese herbs. Diet Chinese Herbs:417–423. https://doi.org/10.1007/978-3-211-99448-1

Maack R (2017) Geografia física do estado do Paraná. Editora UEPG, Ponta Grossa

Mazza CA da S, Santos JE dos, Mazza MCM et al (2005) Caracterização Ambiental dos Componentes Estruturais da Paisagem do Município de Irati, Paraná. Empresa Brasileira de Pesquisa Agropecuária, Embrapa Florestas, Colombo

McDermid GJ, Hall RJ, Sanchez-Azofeifa GA et al (2009) Remote sensing and forest inventory for wildlife habitat assessment. For Ecol Manag 257:2262–2269. https://doi.org/10.1016/j.foreco.2009.03.005

Miao X, Heaton JS, Zheng S, Charlet DA, Liu H (2012) Applying tree-based ensemble algorithms to the classification of ecological zones using multi-temporal multi-source remote-sensing data. Int J Remote Sens 33:1823–1849. https://doi.org/10.1080/01431161.2011.602651

Miyoshi GT, Imai NN, Tommaselli AMG et al (2020) Evaluation of hyperspectral multitemporal information to improve tree species identification in the highly diverse atlantic forest. Remote Sens 12:1–21. https://doi.org/10.3390/rs12020244

More AS, Rana DP (2017) Review of random forest classification techniques to resolve data imbalance. Proc 1st Int Conf Intell Syst Inf Manag ICISIM 72–78. https://doi.org/10.1109/ICISIM.2017.8122151

Nagendra H (2001) Using remote sensing to assess biodiversity. Int J Remote Sens 22:2377–2400. https://doi.org/10.1080/01431160117096

Nauiack CH (2015) Regimes de manejo para Hovenia dulcis Thunb. em Floresta Ombrófila Mista como alternativa de controle e geração de rendas. Midwestern State University

Peerbhay K, Mutanga O, Lottering R, Ismail R (2016) Mapping Solanum mauritianum plant invasions using WorldView-2 imagery and unsupervised random forests. Remote Sens Environ 182:39–48. https://doi.org/10.1016/j.rse.2016.04.025

Pelissari AL, Filho AF, Ebling AA et al (2018) Spatial variability of tree species diversity in a mixed tropical forest in Southern Brazil. An Acad Bras Cienc 90:2491–2500. https://doi.org/10.1590/0001-3765201820170826

Perkins T (2012) Speed and accuracy improvements in FLAASH atmospheric correction of hyperspectral imagery. Opt Eng 51:111707. https://doi.org/10.1117/1.oe.51.11.111707

Ramchoun H, Amine M, Idrissi J, Ghanou Y, Ettaouil M (2016) Multilayer perceptron: architecture optimization and training. Int J Interact Multimed Artif Intell 4:26. https://doi.org/10.9781/ijimai.2016.415

Rezende CL, Scarano FR, Assad ED, Joly CA, Metzger JP, Strassburg BBN, Tabarelli M, Fonseca GA, Mittermeier RA (2018) From hotspot to hopespot: an opportunity for the Brazilian Atlantic Forest. Perspect Ecol Conserv 16:208–214. https://doi.org/10.1016/j.pecon.2018.10.002

Ribeiro MC, Metzger JP, Martensen AC, Ponzoni FJ, Hirota MM (2009) The Brazilian Atlantic Forest: how much is left, and how is the remaining forest distributed? Implications for conservation. Biol Conserv 142:1141–1153. https://doi.org/10.1016/j.biocon.2009.02.021

Ruiz ECZ (2017) Biomassa e flora epifítica de copas de Araucaria angustifolia. Midwestern State University

Rybski PE, Huber D, Morris DD, Hoffman R (2010) Visual classification of coarse vehicle orientation using histogram of oriented gradients features. In: IEEE Intelligent Vehicles Symposium, pp 1–6

Salamuni R, Hertel R, Silva J (1969) História do Paraná. Grafipar, Curitiba

Samborska IA, Alexandrov V, Sieczko L, Kornatowska B (2014) Artificial neural networks and their application in biological and agricultural research. Signpost Open Access Journal of NanoPhotoBioSciences 2:14–30

Shao Y, Campbell JB, Taff GN, Zheng B (2015) An analysis of cropland mask choice and ancillary data for annual corn yield forecasting using MODIS data. Int J Appl Earth Obs Geoinf 38:78–87. https://doi.org/10.1016/j.jag.2014.12.017

Simioni JPD, Guasselli LA, de Oliveira GG, Ruiz LFC, de Oliveira G (2020) A comparison of data mining techniques and multi-sensor analysis for inland marshes delineation. Wetl Ecol Manag 2:577–594. https://doi.org/10.1007/s11273-020-09731-2

Sothe C, Dalponte M, de Almeida CM et al (2019) Tree species classification in a highly diverse subtropical forest integrating UAV-based photogrammetric point cloud and hyperspectral data. Remote Sens 11. https://doi.org/10.3390/rs11111338

Sothe C, De Almeida CM, Schimalski MB et al (2020) Comparative performance of convolutional neural network, weighted and conventional support vector machine and random forest for classifying tree species using hyperspectral and photogrammetric data. GIScience Remote Sens 57:369–394. https://doi.org/10.1080/15481603.2020.1712102

Tang J, Deng C, Huang GB (2016) Extreme learning machine for multilayer perceptron. IEEE Trans Neural Netw Learn Syst 27:809–821. https://doi.org/10.1109/tnnls.2015.2424995

Thomas P (2013) Araucaria angustifolia. IUCN Red List Threat Species. https://doi.org/10.2305/IUCN.UK.2013-1.RLTS.T32975A2829141.en

Torrione PA, Morton KD, Sakaguchi R, Collins LM (2014) Histograms of oriented gradients for landmine detection in ground-penetrating radar data. IEEE Trans Geosci Remote Sens 52:1539–1550. https://doi.org/10.1109/TGRS.2013.2252016

Welch R, Ehlers M (1987) Merging multiresolution SPOT HRV and Landsat TM data. Photogramm Eng Remote Sens 53:301–303

Wilson OJ, Walters RJ, Mayle FE, Lingner DV, Vibrans AC (2019) Cold spot microrefugia hold the key to survival for Brazil’s critically endangered Araucaria tree. Glob Chang Biol 25:4339–4351. https://doi.org/10.1111/gcb.14755

Wulder MA, Hall RJ, Coops NC, Franklin SE (2004) High spatial resolution remotely sensed data for ecosystem characterization. Bioscience 54:511. https://doi.org/10.1641/0006-3568

Xu K, Chen E, Li Z et al (2020) An automatic optimization method of forest type classification using PolSAR image based on genetic algorithm. 2019 6th Asia-Pacific Conf Synth Aperture Radar 1–5. https://doi.org/10.1109/apsar46974.2019.9048333

Zenni RD, Ziller RS (2011) Visão geral das plantas exóticas invasoras no Brasil. Rev Bras Bot 34:431–446. https://doi.org/10.1590/S0100-84042011000300016

Zortea M (1993) Folivory in Platyrrhinus (Vampyrops) lineatus. Bat Res News 34:59–60

Acknowledgements

The authors would like to thank the Imbituvão project, funded by the National Council for Scientific and Technological Development of Brazil (CNPq), and the technical cooperation between the State Secretariat of Science, Technology and Higher Education (SETI) and the Ministry for Science, Research and Arts of Baden-Württemberg for providing the WorldView-2 image. We are also grateful to the Coordination for the Improvement of Higher Education Personnel of Brazil (CAPES) for financial support for this work.

Availability of data and material

The datasets used in the current work are available from the corresponding author on request and in the Zenodo repository at https://doi.org/10.5281/ZENODO.4545112.

Funding

This study was funded by the National Council for Scientific and Technological Development of Brazil (CNPq) and Coordination for the Improvement of Higher Education Personnel of Brazil (CAPES).

Ethics declarations

Ethics approval and consent to participate

The work does not involve any ethical risks, as no botanical material was destroyed.

Consent for publication

Not applicable

Competing interests

The authors declare no competing interests.

Additional information

Handling Editor: Barry A. Gardiner

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contribution of the co-authors

Crisigiovanni E. L. designed the methods, performed the experiments, processed the data, analyzed the results, and wrote most of the manuscript. Figueiredo Filho A. conceived the article, provided the materials (satellite image), and formulated the research framework. Pesck V. A. contributed to the geoprocessing and remote sensing analysis and cooperated in designing the methodology. De Lima V. A. cooperated in designing the methodology and performed the machine learning analyses and data processing. All authors participated in writing, proofreading, and editing the manuscript from the first version onward, and all authors have read and approved the final manuscript.

About this article

Cite this article

Crisigiovanni, E.L., Filho, A.F., Pesck, V.A. et al. Potential of machine learning and WorldView-2 images for recognizing endangered and invasive species in the Atlantic Rainforest. Annals of Forest Science 78, 54 (2021). https://doi.org/10.1007/s13595-021-01070-3

DOI: https://doi.org/10.1007/s13595-021-01070-3