Abstract

Mining patterns of temporal sequence data is an important problem across many disciplines. With appropriate preprocessing, a structured temporal sequence can be organized into a probability measure or a time series representation, either of which can reveal distinctive characteristics of its temporal pattern. In this paper, we propose a nested two-stage clustering method that integrates optimal transport (OT) and dynamic time warping (DTW) distances to learn distributional and dynamic shape-based dissimilarities at the respective stages. The proposed clustering algorithm preserves both the distribution and shape patterns present in the data, which are critical for datasets composed of structured temporal sequences. We test the effectiveness of the method against existing agglomerative and K-shape-based clustering algorithms on Monte Carlo simulated synthetic datasets and compare performance through various cluster validation metrics. Furthermore, we apply the method to real-world datasets from three domains: temporal dietary records, online retail sales, and smart meter energy profiles. The expressiveness of the cluster and subcluster centroid patterns demonstrates the promise of our method for mining structured temporal sequence data.
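The nesting can be illustrated with a small sketch. It is not the authors' implementation (their code is in the OT-DTW repository listed in the Notes). It assumes one-dimensional, equal-length sequences, uses the exact 1-Wasserstein distance between empirical value distributions as the optimal transport stage, plain unconstrained DTW as the shape stage, and average-linkage agglomerative clustering at both stages. The paper may use a different OT distance, linkage, or clustering routine; `k_outer`, `k_inner`, and all function names are hypothetical.

```python
import math
from itertools import combinations


def wasserstein_1d(x, y):
    """Exact 1-Wasserstein distance between two equal-size 1-D empirical samples.

    With uniform weights and equal sizes, the optimal coupling pairs sorted values.
    """
    if len(x) != len(y):
        raise ValueError("this sketch assumes equal sample sizes")
    return sum(abs(a - b) for a, b in zip(sorted(x), sorted(y))) / len(x)


def dtw(p, q):
    """Classic DTW with absolute-difference local cost and no warping window."""
    n, m = len(p), len(q)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(p[i - 1] - q[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


def agglomerate(items, dist, k):
    """Average-linkage agglomerative clustering into k groups (lists of item indices)."""
    clusters = [[i] for i in range(len(items))]
    d = {(i, j): dist(items[i], items[j]) for i, j in combinations(range(len(items)), 2)}

    def linkage(a, b):
        return sum(d[min(i, j), max(i, j)] for i in a for j in b) / (len(a) * len(b))

    while len(clusters) > k:
        # merge the closest pair; a < b, so deleting b leaves index a valid
        a, b = min(combinations(range(len(clusters)), 2),
                   key=lambda ab: linkage(clusters[ab[0]], clusters[ab[1]]))
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return clusters


def nested_two_stage(series, k_outer, k_inner):
    """Stage 1 groups by value distribution (W1); stage 2 splits each group by shape (DTW)."""
    result = []
    for outer in agglomerate(series, wasserstein_1d, k_outer):
        members = [series[i] for i in outer]
        for inner in agglomerate(members, dtw, min(k_inner, len(members))):
            result.append([outer[i] for i in inner])  # map back to original indices
    return result


if __name__ == "__main__":
    series = [
        [0, 0, 1, 1, 0, 0], [0, 1, 1, 0, 0, 0], [0, 0, 0, 0, 1, 1],  # low-valued profiles
        [5, 5, 6, 6, 5, 5], [5, 6, 6, 5, 5, 5], [5, 5, 5, 5, 6, 6],  # high-valued profiles
    ]
    print(nested_two_stage(series, k_outer=2, k_inner=2))  # → [[0, 1], [2], [3, 4], [5]]
```

Because the first stage sees only value distributions, the three low-valued profiles fall together regardless of when their peak occurs; the DTW stage then separates the late-peaking profile from the two whose peaks differ only by a one-step shift.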

Notes

All source code is publicly available at https://github.com/AML-wustl/OT-DTW.

The data are publicly available at https://data.london.gov.uk/dataset/smartmeter-energy-use-data-in-london-households.

References

Abonyi J, Feil B (2007) Cluster analysis for data mining and system identification. Springer, Berlin

Agueh M, Carlier G (2011) Barycenters in the Wasserstein space. SIAM J Math Anal 43(2):904–924

Arthur D, Vassilvitskii S (2007) k-means++: the advantages of careful seeding. In: Proceedings of the eighteenth annual ACM-SIAM symposium on Discrete algorithms. Society for Industrial and Applied Mathematics, pp 1027–1035

Bagnall AJ, Janacek GJ (2004) Clustering time series from ARMA models with clipped data. In: Proceedings of the tenth ACM SIGKDD international conference on Knowledge discovery and data mining, ACM, pp 49–58

Bietti A, Bach F, Cont A (2015) An online EM algorithm in hidden (semi-)Markov models for audio segmentation and clustering. In: 2015 IEEE international conference on acoustics, speech and signal processing (ICASSP), pp 1881–1885. https://doi.org/10.1109/ICASSP.2015.7178297

Cominetti R, San Martín J (1994) Asymptotic analysis of the exponential penalty trajectory in linear programming. Math Program 67(1–3):169–187

Csiszár I (1967) Information-type measures of difference of probability distributions and indirect observation. Studia Scientiarum Mathematicarum Hungarica 2:229–318

Cuturi M (2013) Sinkhorn distances: lightspeed computation of optimal transport. In: Advances in neural information processing systems, pp 2292–2300

Dheeru D, Karra Taniskidou E (2017) UCI machine learning repository. http://archive.ics.uci.edu/ml

Ester M, Kriegel HP, Sander J, Xu X (1996) A density-based algorithm for discovering clusters in large spatial databases with noise. KDD 96:226–231

Fowlkes EB, Mallows CL (1983) A method for comparing two hierarchical clusterings. J Am Stat Assoc 78(383):553–569

Fred ALN, Jain AK (2003) Robust data clustering

Garreau D, Lajugie R, Arlot S, Bach F (2014) Metric learning for temporal sequence alignment. In: Advances in neural information processing systems, pp 1817–1825

Gibbs AL, Su FE (2002) On choosing and bounding probability metrics. Int Stat Rev 70(3):419–435

Hensman J, Rattray M, Lawrence ND (2015) Fast nonparametric clustering of structured time-series. IEEE Trans Pattern Anal Mach Intell 37(2):383–393. https://doi.org/10.1109/TPAMI.2014.2318711

Hubert L, Arabie P (1985) Comparing partitions. J Classif 2(1):193–218

Jaccard P (1912) The distribution of the flora in the alpine zone. New Phytol 11(2):37–50

Jain AK (2010) Data clustering: 50 years beyond k-means. Pattern Recogn Lett 31(8):651–666

Jinklub K, Geng J (2018) Hierarchical-grid clustering based on data field in time-series and the influence of the first-order partial derivative potential value for the ARIMA model. In: Gan G, Li B, Li X, Wang S (eds) Advanced data mining and applications. Springer, Cham, pp 31–41

Keogh EJ, Pazzani MJ (2000) Scaling up dynamic time warping for datamining applications. In: Proceedings of the sixth ACM SIGKDD international conference on Knowledge discovery and data mining, ACM, pp 285–289

Khanna N, Eicher-Miller HA, Boushey CJ, Gelfand SB, Delp EJ (2011) Temporal dietary patterns using kernel k-means clustering. In: IEEE international symposium on multimedia (ISM), IEEE, pp 375–380

Khanna N, Eicher-Miller HA, Verma HK, Boushey CJ, Gelfand SB, Delp EJ (2017) Modified dynamic time warping (MDTW) for estimating temporal dietary patterns. In: 2017 IEEE global conference on signal and information processing (GlobalSIP), IEEE, pp 948–952

Kiss IZ, Zhai Y, Hudson JL (2005) Predicting mutual entrainment of oscillators with experiment-based phase models. Phys Rev Lett 94(24)

McDowell IC, Manandhar D, Vockley CM, Schmid AK, Reddy TE, Engelhardt BE (2018) Clustering gene expression time series data using an infinite Gaussian process mixture model. PLoS Comput Biol 14(1):1–27. https://doi.org/10.1371/journal.pcbi.1005896

Meilă M (2007) Comparing clusterings–an information based distance. J Multivar Anal 98(5):873–895

Mirkin B (1996) Mathematical classification and clustering. Springer, New York

National Cancer Institute (2017) Interactive diet and activity tracking in AARP (IDATA). https://biometry.nci.nih.gov/cdas/idata/. Accessed Feb 2017

Paparrizos J, Gravano L (2016) k-Shape: efficient and accurate clustering of time series. SIGMOD Rec 45(1):69–76. https://doi.org/10.1145/2949741.2949758

Park Y (2018) Comparison of self-reported dietary intakes from the automated self-administered 24-h recall, 4-d food records, and food-frequency questionnaires against recovery biomarkers. Am J Clin Nutr 107(1):80–93

Petitjean F, Ketterlin A, Gançarski P (2011) A global averaging method for dynamic time warping, with applications to clustering. Pattern Recogn 44(3):678–693

Rakthanmanon T, Campana B, Mueen A, Batista G, Westover B, Zhu Q, Zakaria J, Keogh E (2013) Addressing big data time series: mining trillions of time series subsequences under dynamic time warping. ACM Trans Knowl Discov Data (TKDD) 7(3):10

Rokach L, Maimon O (2005) Clustering methods. Springer, Boston, pp 321–352. https://doi.org/10.1007/0-387-25465-X_15

Rubner Y, Tomasi C, Guibas LJ (2000) The earth mover’s distance as a metric for image retrieval. Int J Comput Vis 40(2):99–121

Sakoe H, Chiba S (1978) Dynamic programming algorithm optimization for spoken word recognition. IEEE Trans Acoust Speech Signal Process 26(1):43–49

Verde R, Irpino A (2007) Dynamic clustering of histogram data: using the right metric. In: Selected contributions in data analysis and classification. Springer, pp 123–134

Villani C (2016) Optimal transport: old and new. Springer, Berlin

Wang X, Smith K, Hyndman R (2006) Characteristic-based clustering for time series data. Data Min Knowl Disc 13(3):335–364

Ward JH Jr (1963) Hierarchical grouping to optimize an objective function. J Am Stat Assoc 58(301):236–244

Zhao Y, Karypis G, Fayyad U (2005) Hierarchical clustering algorithms for document datasets. Data Min Knowl Disc 10(2):141–168

Additional information

This work was supported in part by the National Science Foundation under the Awards ECCS-1509342, CMMI-1763070, and CMMI-1933976, and by the NIH Grant R01CA226937A1.

Appendices

Appendix

Remark 1

The Wasserstein barycenter \({\overline{\varPhi }}_k\) of \(n_k\) continuous distributions \(\{\varPhi _1,\ldots ,\varPhi _{n_k}\}\) of cluster k under the objective of Definition (13) satisfies
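For one-dimensional distributions, a well-known characterization of the equal-weight W2 barycenter is that its quantile function is the pointwise average of the input quantile functions; whether this is the exact form of Remark 1 cannot be confirmed from this section. For empirical measures with the same number of equally weighted samples, the characterization reduces to averaging sorted samples. The sketch below assumes equal sample sizes, and its function names are hypothetical.

```python
def w2_squared_1d(x, y):
    """Squared 2-Wasserstein distance between two equal-size 1-D empirical samples.

    With uniform weights and equal sizes, the optimal coupling pairs sorted values.
    """
    if len(x) != len(y):
        raise ValueError("this sketch assumes equal sample sizes")
    return sum((a - b) ** 2 for a, b in zip(sorted(x), sorted(y))) / len(x)


def barycenter_1d(samples):
    """Equal-weight W2 barycenter of 1-D empirical measures: average the sorted
    samples, i.e. average the empirical quantile functions point by point."""
    n = len(samples[0])
    if any(len(s) != n for s in samples):
        raise ValueError("this sketch assumes equal sample sizes")
    return [sum(col) / len(samples) for col in zip(*(sorted(s) for s in samples))]


def barycenter_objective(candidate, samples):
    """Sum of squared W2 distances from a candidate measure to every input measure."""
    return sum(w2_squared_1d(candidate, s) for s in samples)


if __name__ == "__main__":
    cluster = [[2.0, 0.0, 1.0], [4.0, 6.0, 5.0]]  # hypothetical samples of two distributions
    bary = barycenter_1d(cluster)
    print(bary)                                    # → [2.0, 3.0, 4.0]
    print(barycenter_objective(bary, cluster))     # → 8.0
    print(barycenter_objective([1.0, 3.0, 5.0], cluster))  # a worse candidate, larger value
```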

Remark 2

(Wasserstein Barycenter, [2]) A Wasserstein barycenter of N measures \(\{\nu _i: i=1,\ldots ,N\}\) in \({\mathbb {P}} \subset P(\varOmega )\) is a minimizer of f over \({\mathbb {P}}\), where
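Remark 2 defines the barycenter abstractly. For histograms on a shared grid, one widely used computational route, shown here as a swapped-in technique rather than the paper's procedure, is to add an entropic penalty to the transport problem in the spirit of Cuturi (2013) and compute the regularized barycenter by iterative Bregman projections. The sketch assumes a 1-D grid, squared Euclidean ground cost, and a hypothetical regularization strength `eps`; the result is a smoothed approximation of the unregularized W2 barycenter that tightens as `eps` shrinks, at the price of slower convergence and possible underflow in the kernel.

```python
import math


def entropic_barycenter(measures, grid, weights=None, eps=1.0, n_iter=200):
    """Entropy-regularized W2 barycenter of histograms on a shared 1-D grid,
    computed by iterative Bregman projections (Sinkhorn-style scalings)."""
    n, S = len(grid), len(measures)
    if weights is None:
        weights = [1.0 / S] * S  # equal weights lambda_s, summing to 1
    # Gibbs kernel for the squared Euclidean ground cost; symmetric, so K^T = K
    K = [[math.exp(-((xi - xj) ** 2) / eps) for xj in grid] for xi in grid]

    def apply_k(vec):
        return [sum(K[i][j] * vec[j] for j in range(n)) for i in range(n)]

    v = [[1.0] * n for _ in range(S)]
    bary = [1.0 / n] * n
    for _ in range(n_iter):
        # project onto each input-marginal constraint: u_s = a_s / (K v_s)
        ktu = []
        for s in range(S):
            u_s = [a / kv for a, kv in zip(measures[s], apply_k(v[s]))]
            ktu.append(apply_k(u_s))
        # shared marginal: weighted geometric mean of K^T u_s
        bary = [math.prod(ktu[s][i] ** weights[s] for s in range(S)) for i in range(n)]
        # rescale v_s so every transport plan has the common barycenter marginal
        v = [[b / k for b, k in zip(bary, ktu[s])] for s in range(S)]
    return bary


if __name__ == "__main__":
    grid = [float(x) for x in range(11)]
    left = [1.0] + [0.0] * 10   # all mass at 0
    right = [0.0] * 10 + [1.0]  # all mass at 10
    bary = entropic_barycenter([left, right], grid, eps=4.0)
    print(max(range(len(grid)), key=bary.__getitem__))  # → 5
```

For two point masses at 0 and 10 with equal weights, the regularized barycenter is a discretized bump centered at 5, whereas the exact W2 barycenter is a point mass at 5; the spread comes entirely from `eps`.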

Remark 3

(DTW Barycenter) A DTW barycenter of N time series \(P=\{{\mathbf {p}}_1, \ldots ,{\mathbf {p}}_N\}\) in a space \({\mathbb {E}}\) induced by the DTW distance is a minimizer of the sum of squared distances to the set P, where
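A standard heuristic for approximating such a minimizer is DTW Barycenter Averaging (DBA) of Petitjean et al. (2011), which appears in the reference list; the paper's exact barycenter procedure is not shown in this section. The sketch assumes 1-D series, a squared local cost (so the optimal path cost plays the role of the squared DTW distance under this convention), initialization from a member series, and a fixed number of iterations. DBA never increases the objective, but it is not guaranteed to reach a global minimizer.

```python
import math


def dtw_with_path(c, s):
    """DTW under squared local cost; returns (total path cost, optimal warping path)."""
    n, m = len(c), len(s)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            D[i][j] = (c[i - 1] - s[j - 1]) ** 2 + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    # backtrack from the end cell to (1, 1), stepping to the cheapest predecessor
    i, j, path = n, m, [(n - 1, m - 1)]
    while (i, j) != (1, 1):
        _, i, j = min((D[i - 1][j - 1], i - 1, j - 1), (D[i - 1][j], i - 1, j), (D[i][j - 1], i, j - 1))
        path.append((i - 1, j - 1))
    return D[n][m], path[::-1]


def dba(series, init, n_iter=10):
    """DBA: align every series to the current average, then replace each average
    point by the mean of all series values aligned to it."""
    avg = [float(x) for x in init]
    for _ in range(n_iter):
        aligned = [[] for _ in avg]
        for s in series:
            _, path = dtw_with_path(avg, s)
            for i, j in path:
                aligned[i].append(s[j])
        avg = [sum(vals) / len(vals) for vals in aligned]  # every i appears on a path
    return avg


def sum_sq_dtw(avg, series):
    """Objective under this sketch's convention: sum of squared-cost DTW path costs."""
    return sum(dtw_with_path(avg, s)[0] for s in series)


if __name__ == "__main__":
    series = [[0, 0, 1, 2, 1, 0], [0, 1, 2, 1, 0, 0], [0, 0, 0, 1, 2, 1]]  # hypothetical
    avg = dba(series, init=series[0], n_iter=5)
    print(sum_sq_dtw(series[0], series), sum_sq_dtw(avg, series))  # second is not larger
```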

A. Results

Following the definitions of the Davies-Bouldin (DB) and Calinski-Harabasz (CH) indices, we look for a local minimum of the DB index and a local maximum of the CH index. In Fig. 18b, the CH index strictly decreases as K increases and shows no clear kink toward a plateau, so it provides little information for choosing K. In Fig. 18a, the relatively small DB index and the clearer separation of cluster centroids lead us to set \(K=4\) in the current experiment (the temporal dietary dataset). In Fig. 19b, the CH index also strictly decreases with increasing K and is similarly uninformative about the choice of K. In Fig. 19a, however, the DB index attains a local minimum at \(K=6\), which makes it a good candidate for the number of clusters (Figs. 20, 21, 22, 23).
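The selection rule above can be sketched with textbook definitions of both indices. This illustration rests on assumptions: it computes the indices on Euclidean feature vectors with arithmetic-mean centroids, which may differ from how the paper evaluates its OT-DTW clusters, and the DB scores in the usage example are hypothetical rather than values read from Fig. 18 or Fig. 19. scikit-learn's `davies_bouldin_score` and `calinski_harabasz_score` provide library implementations of the same indices.

```python
import math


def _centroid(points):
    return [sum(coord) / len(points) for coord in zip(*points)]


def _sqdist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def _groups(points, labels):
    groups = {}
    for p, lab in zip(points, labels):
        groups.setdefault(lab, []).append(p)
    return list(groups.values())


def davies_bouldin(points, labels):
    """Lower is better: mean over clusters of the worst (s_i + s_j) / d(c_i, c_j)."""
    groups = _groups(points, labels)
    cents = [_centroid(g) for g in groups]
    # s_i: average distance of cluster members to their centroid
    scatter = [sum(math.sqrt(_sqdist(p, c)) for p in g) / len(g) for g, c in zip(groups, cents)]
    worst = [max((scatter[i] + scatter[j]) / math.sqrt(_sqdist(cents[i], cents[j]))
                 for j in range(len(groups)) if j != i)
             for i in range(len(groups))]
    return sum(worst) / len(worst)


def calinski_harabasz(points, labels):
    """Higher is better: (between dispersion / (k - 1)) / (within dispersion / (n - k))."""
    groups = _groups(points, labels)
    n, k = len(points), len(groups)
    overall = _centroid(points)
    cents = [_centroid(g) for g in groups]
    between = sum(len(g) * _sqdist(c, overall) for g, c in zip(groups, cents))
    within = sum(_sqdist(p, c) for g, c in zip(groups, cents) for p in g)
    return (between / (k - 1)) / (within / (n - k))


def local_minima(scores):
    """Interior K values whose score is lower than both neighbours (e.g. a DB curve)."""
    ks = sorted(scores)
    return [k for prev, k, nxt in zip(ks, ks[1:], ks[2:])
            if scores[k] < scores[prev] and scores[k] < scores[nxt]]


if __name__ == "__main__":
    pts = [[0.0, 0.0], [0.0, 1.0], [10.0, 0.0], [10.0, 1.0]]
    labs = [0, 0, 1, 1]
    print(davies_bouldin(pts, labs), calinski_harabasz(pts, labs))
    db_by_k = {2: 0.9, 3: 0.7, 4: 0.8, 5: 0.6, 6: 0.65}  # hypothetical DB curve
    print(local_minima(db_by_k))  # → [3, 5]
```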

B. Applications

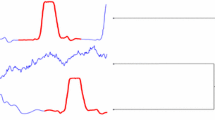

Apart from temporal pattern discovery in the applications discussed in Sect. 6, the proposed clustering algorithm appears to possess properties that could extend its use to synchronization detection in oscillator networks [23]. The synchronization detection problem is defined as follows. In an oscillator network, each oscillator is a node, and the couplings between oscillators are the edges. Each oscillator’s dynamics consist of two parts: its own intrinsic dynamics and the coupling functions from the other oscillators. The network starts from an arbitrary initial condition and evolves over time according to the oscillator dynamical equations. Given the time series measured at the output of each oscillator, we aim to determine which oscillators (nodes) are phase synchronized. Traditionally, this problem requires preprocessing the data by peak finding or a Hilbert transform (to extract phase information from the measurements), followed by clustering according to the oscillator phase model [23]. Our method skips the expensive phase-extraction step and works directly with the recordings.

For example, Fig. 24 illustrates a synthetic oscillator network with 15 oscillators together with the clustering results of our OT–DTW method. The colored nodes in the network plot on the left show the synchronization clusters obtained from phase-difference calculations. On the right are the outputs of our two-stage clustering: except for oscillator 14, our clusters closely match the phase-based synchronization clusters (our results additionally separate oscillators 7, 12, and 13 from oscillators 2, 3, 6, and 8). This leads us to conjecture that the distributional and dynamic shape differences in the time domain are intrinsically correlated with phase synchronization, a direction we plan to pursue in a future study.
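The agreement in Fig. 24 is described visually. One standard way to put a number on the agreement between two partitions, such as the phase-based synchronization clusters and the OT-DTW clusters, is the adjusted Rand index of Hubert and Arabie (1985), which appears in the reference list; whether the authors applied it to this example is not stated here. The label vectors in the usage example are hypothetical and are not the Fig. 24 clusters.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_a, labels_b):
    """Adjusted Rand index between two partitions of the same items (1.0 = identical)."""
    if len(labels_a) != len(labels_b):
        raise ValueError("partitions must label the same items")
    n = len(labels_a)
    # pair counts from the contingency table and its row/column margins
    pairs_joint = sum(comb(c, 2) for c in Counter(zip(labels_a, labels_b)).values())
    pairs_a = sum(comb(c, 2) for c in Counter(labels_a).values())
    pairs_b = sum(comb(c, 2) for c in Counter(labels_b).values())
    expected = pairs_a * pairs_b / comb(n, 2)
    max_index = (pairs_a + pairs_b) / 2
    if max_index == expected:  # degenerate case, e.g. both partitions all singletons
        return 1.0
    return (pairs_joint - expected) / (max_index - expected)


if __name__ == "__main__":
    phase_based = [0, 0, 0, 1, 1, 1]  # hypothetical synchronization clusters
    ot_dtw = [0, 0, 1, 1, 2, 2]       # hypothetical clustering output
    print(adjusted_rand_index(phase_based, ot_dtw))          # about 0.242
    print(adjusted_rand_index([0, 0, 1, 1], [1, 1, 0, 0]))   # → 1.0 (labels permuted)
```

The index is invariant to label permutations and corrects the raw Rand index for chance agreement, which matters when the two methods return different numbers of clusters, as in the extra split described above.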

About this article

Cite this article

Wang, L., Narayanan, V., Yu, YC. et al. A Nested Two-Stage Clustering Method for Structured Temporal Sequence Data. Knowl Inf Syst 63, 1627–1662 (2021). https://doi.org/10.1007/s10115-021-01578-0

DOI: https://doi.org/10.1007/s10115-021-01578-0