Abstract

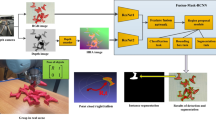

We propose a novel grasp detection algorithm for grasping objects in dense clutter. The algorithm obtains grasp candidates through instance segmentation and view-based experience transfer, and then applies collision avoidance and stability analysis to determine the optimal grasp for the robot. The view-based experience transfer strategy first finds the object view and then transfers the grasp experience onto the cluttered scene. This strategy has two advantages over existing learning-based methods for finding grasp candidates: (1) it can effectively exclude the influence of image noise or occlusion and precisely detect grasps that are well aligned with each target object; and (2) it can efficiently find optimal grasps on each target object and offers the flexibility to adjust and redefine the grasp experience based on the type of target object. We evaluated our approach on open-source datasets and in a real-world robot experiment involving a six-axis robot arm with a two-jaw parallel gripper and a Kinect V2 RGB-D camera. The experimental results show that the proposed approach generalizes to objects with complex shapes and can grasp in dense clutter scenarios in which different types of objects are placed in a bin. A video demonstrating our grasping pipeline is available at https://youtu.be/gQ3SO6vtTpA.
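The two stages summarized above (transferring stored grasps from an object view into the scene, then filtering by collision and ranking by stability) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the paper: the `Grasp` fields, the 2D similarity transform standing in for the view alignment, the jaw-tip occupancy test standing in for collision avoidance, and the distance-to-centroid score standing in for stability analysis.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Grasp:
    """Planar two-jaw grasp (hypothetical representation, in pixels)."""
    x: float      # grasp center, column
    y: float      # grasp center, row
    angle: float  # jaw closing direction, radians
    width: float  # jaw opening


def transfer_grasp(g, tx, ty, theta, scale=1.0):
    """Map a grasp stored for a template view into the scene image.

    (tx, ty, theta, scale) is an assumed 2D similarity transform that
    aligns the template view with the segmented object in the scene.
    """
    c, s = math.cos(theta), math.sin(theta)
    return Grasp(
        x=scale * (c * g.x - s * g.y) + tx,
        y=scale * (s * g.x + c * g.y) + ty,
        angle=g.angle + theta,
        width=scale * g.width,
    )


def jaw_tips(g):
    """Return the two points where the gripper jaws would land."""
    dx = 0.5 * g.width * math.cos(g.angle)
    dy = 0.5 * g.width * math.sin(g.angle)
    return (g.x - dx, g.y - dy), (g.x + dx, g.y + dy)


def collides(g, occupied):
    """True if either jaw tip lands on a pixel owned by another object."""
    return any((round(px), round(py)) in occupied for px, py in jaw_tips(g))


def select_grasp(candidates, occupied, centroid):
    """Pick the collision-free grasp closest to the object centroid.

    Distance to the centroid is only a crude stand-in for a stability score.
    Returns None when every candidate collides.
    """
    free = [g for g in candidates if not collides(g, occupied)]
    if not free:
        return None
    return min(free, key=lambda g: math.hypot(g.x - centroid[0], g.y - centroid[1]))


# Usage: two stored grasps are transferred into the scene, then ranked.
template_grasps = [Grasp(0, 0, 0.0, 20), Grasp(10, 0, math.pi / 2, 20)]
scene_grasps = [transfer_grasp(g, 100, 50, math.pi / 2) for g in template_grasps]
neighbour_pixels = {(100, 40)}  # pixels of a neighbouring object (made up)
best = select_grasp(scene_grasps, neighbour_pixels, centroid=(100, 50))
```

Keeping the stored grasps in template coordinates means they can be edited or redefined per object type without touching the scene-side logic, which is one way to read the flexibility claimed in the abstract.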

Cite this article

Wang, JW., Li, CL., Chen, JL. et al. Robot grasping in dense clutter via view-based experience transfer. Int J Intell Robot Appl 6, 23–37 (2022). https://doi.org/10.1007/s41315-021-00179-y

DOI: https://doi.org/10.1007/s41315-021-00179-y