Abstract

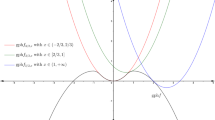

We study robustness properties of some iterative gradient-based methods for strongly convex functions, as well as for the larger class of functions with sector-bounded gradients, under a relative error model. Proofs of the corresponding convergence rates are based on frequency-domain criteria for the stability of nonlinear systems. Applications are given to inexact versions of gradient descent and the Triple Momentum Method. To further emphasize the usefulness of frequency-domain methods, we derive improved analytic bounds for the convergence rate of Nesterov’s accelerated method (in the exact setting) on strongly convex functions.

Similar content being viewed by others

Notes

For the reader familiar with the control-theoretic absolute stability problem, the convergence result quoted above shows that gradient descent satisfies a version of the Aizerman conjecture.

In the language of the absolute stability problem, inexact gradient descent satisfies a version of the Kalman conjecture.

The reader should ignore the statement about the finiteness of \(V_\mathrm {f}\), which is famously false [63].

[52] explicitly states that A should not have spectrum with \(|z| = \rho \), but this is unnecessary. Such an assumption can always be removed when (A, B) is controllable by shifting the eigenvalues off of \(|z| = \rho \) via linear feedback: an equivalent characterization of controllability is that for any monic polynomial there is a matrix N such that the characteristic polynomial of \(A + BN\) is the given polynomial [50, §34, Theorem 1]. The corresponding linear change of variables \((x, u) \mapsto (x, u + Nx)\) does not affect the validity of (FDI) (it corresponds to a congruency transformation of the Popov function by a rational matrix function), and leaves invariant any solution of (LMI).

In general, this is not true of every solution to (LMI).

In reference to the remarks preceding Lemma 2.7, note that \(\Pi ({{\bar{z}}},z) \equiv 0\) when \(|z| = \rho _\mathrm {GD}\) and \(\alpha = 2/(m+L)\).

References

Ahmad, N.S., Carrasco, J., Heath, W.P.: A less conservative lmi condition for stability of discrete-time systems with slope-restricted nonlinearities. IEEE Trans. Autom. Control 60(6), 1692–1697 (2014)

Arora, S., Ge, R., Ma, T., Moitra, A.: Simple, efficient, and neural algorithms for sparse coding. In: Conference on Learning Theory, pp. 113–149. PMLR (2015)

Bertsekas, D.P.: Nonlinear Programming. Athena Scientific Belmont, Massachusets (1999)

Boczar, R., Lessard, L., Recht, B.: Exponential convergence bounds using integral quadratic constraints. In: 2015 54th IEEE Conference on Decision and Control (CDC), pp. 7516–7521. IEEE (2015)

Badithela, A., Seiler, P.: Analysis of the heavy-ball algorithm using integral quadratic constraints. In: 2019 American Control Conference (ACC), pp. 4081–4085. IEEE (2019)

Chen, Y., Candes, E.: Solving random quadratic systems of equations is nearly as easy as solving linear systems. In: Advances in Neural Information Processing Systems, pp. 739–747 (2015)

Cohen, M.B., Diakonikolas, J., Orecchia, L.: On acceleration with noise-corrupted gradients. arXiv:1805.12591 (2018)

Cyrus, S., Hu, B., Van Scoy, B., Lessard, L.: A robust accelerated optimization algorithm for strongly convex functions. In: 2018 Annual American Control Conference (ACC), pp. 1376–1381. IEEE (2018)

Candes, E.J., Li, X., Soltanolkotabi, M.: Phase retrieval via Wirtinger flow: theory and algorithms. IEEE Trans. Inf. Theory 61(4), 1985–2007 (2015)

d’Aspremont, A.: Smooth optimization with approximate gradient. SIAM J. Optim. 19(3), 1171–1183 (2008)

Drummond, R., Duncan, S.: Accelerated gradient methods with memory. arXiv:1805.09077 (2018)

Devolder, O., Glineur, F., Nesterov, Y.: First-order methods with inexact oracle: the strongly convex case. CORE discussion papers, 2013016 (2013)

Devolder, O., Glineur, F., Nesterov, Y.: Intermediate gradient methods for smooth convex problems with inexact oracle. Technical report (2013)

Devolder, O., Glineur, F., Nesterov, Y.: First-order methods of smooth convex optimization with inexact oracle. Math. Program. 146(1–2), 37–75 (2014)

de Klerk, E., Glineur, F., Taylor, A.: Worst-case convergence analysis of gradient and Newton methods through semidefinite programming performance estimation. arXiv:1709.05191 (2017)

de Klerk, E., Glineur, F., Taylor, A.B.: On the worst-case complexity of the gradient method with exact line search for smooth strongly convex functions. Optim. Lett. 11(7), 1185–1199 (2017)

Drori, Y., Taylor, A.B.: Efficient first-order methods for convex minimization: a constructive approach. Math. Program. 184, 183–220 (2020). https://doi.org/10.1007/s10107-019-01410-2

Drori, Y., Teboulle, M.: Performance of first-order methods for smooth convex minimization: a novel approach. Math. Program. 145(1–2), 451–482 (2014)

França, G., Bento, J.: An explicit rate bound for over-relaxed ADMM. In: 2016 IEEE International Symposium on Information Theory (ISIT), pp. 2104–2108. IEEE (2016)

Fazlyab, M., Ribeiro, A., Morari, M., Preciado, V.M.: Analysis of optimization algorithms via integral quadratic constraints: nonstrongly convex problems. SIAM J. Optim. 28(3), 2654–2689 (2018)

Fetzer, M., Scherer, C.W.: Absolute stability analysis of discrete time feedback interconnections. IFAC-PapersOnLine 50(1), 8447–8453 (2017)

Friedlander, M.P., Schmidt, M.: Hybrid deterministic-stochastic methods for data fitting. SIAM J. Sci. Comput. 34(3), A1380–A1405 (2012)

Gannot, O.: Frequency criteria for exponential stability. arXiv:1910.10855 (2019)

Gramlich, D., Ebenbauer, C., Scherer, C.W.: Convex synthesis of accelerated gradient algorithms for optimization and saddle point problems using Lyapunov functions. arXiv:2006.09946 (2020)

Hu, B., Lessard, L.: Control interpretations for first-order optimization methods. In: 2017 American Control Conference (ACC), pp. 3114–3119. IEEE (2017)

Hu, B., Lessard, L.: Dissipativity theory for nesterov’s accelerated method. In: Proceedings of the 34th International Conference on Machine Learning-Volume 70, pp. 1549–1557 (2017)

Hassan-Moghaddam, S., Jovanović, M.R: Proximal gradient flow and Douglas–Rachford splitting dynamics: global exponential stability via integral quadratic constraints. arXiv:1908.09043 (2019)

Hu, B., Seiler, P.: Exponential decay rate conditions for uncertain linear systems using integral quadratic constraints. IEEE Trans. Autom. Control 61(11), 3631–3637 (2016)

Hu, B., Syed, U.A.: Characterizing the exact behaviors of temporal difference learning algorithms using Markov jump linear system theory. arXiv:1906.06781 (2019)

Hu, B., Seiler, P., Lessard, L.: Analysis of approximate stochastic gradient using quadratic constraints and sequential semidefinite programs. arXiv:1711.00987 (2017)

Hu, B., Seiler, P., Lessard, L.: Analysis of biased stochastic gradient descent using sequential semidefinite programs. Math. Program., 1–26 (2020)

Hu, B., Wright, S., Lessard, L.: Dissipativity theory for accelerating stochastic variance reduction: a unified analysis of SVRG and Katyusha using semidefinite programs. arXiv:1806.03677 (2018)

Jury, E.I., Lee, B.W.: A stability theory for multinonlinear control systems. Third IFAC World Congr. 28, A1–A11 (1966)

Jury, E.L., Lee, B.: On the stability of a certain class of nonlinear sampled-data systems. IEEE Trans. Autom. Control 9(1), 51–61 (1964)

Kalman, R.E.: Lectures on controllability and observability. In: Controllability and Observability, pp. 1–149. Springer (2010)

Kim, D., Fessler, J.A.: Optimized first-order methods for smooth convex minimization. Math. Program. 159(1–2), 81–107 (2016)

Kim, D., Fessler, J.A.: On the convergence analysis of the optimized gradient method. J. Optim. Theory Appl. 172(1), 187–205 (2017)

Kleinberg, R., Li, Y., Yuan, Y.: An alternative view: When does SGD escape local minima? arXiv:1802.06175 (2018)

Kalman, R.E., Szegoö, G.: Sur la stabilité absolue d’un système d’équations aux diffèrences finies. C R Acad Sci Paris

Lessard, L., Recht, B., Packard, A.: Analysis and design of optimization algorithms via integral quadratic constraints. SIAM J. Optim. 26(1), 57–95 (2016)

Lessard, L., Seiler, P.: Direct synthesis of iterative algorithms with bounds on achievable worst-case convergence rate. arXiv:1904.09046 (2019)

Lurie, A.I.: Some Nonlinear Problems in the Theory of Automatic Control. HM Stationary Office, London (1957)

Li, Y., Yuan, Y.: Convergence analysis of two-layer neural networks with ReLU activation. arXiv:1705.09886 (2017)

Michalowsky, S.: Design of Distributed and Robust Optimization Algorithms. Logos Verlag Berlin GmbH, A Systems Theoretic Approach (2020)

Megretski, A., Rantzer, A.: System analysis via integral quadratic constraints. IEEE Trans. Autom. Control 42(6), 819–830 (1997)

Michalowsky, S., Scherer, C., Ebenbauer, C.: Robust and structure exploiting optimization algorithms: an integral quadratic constraint approach. arXiv:1905.00279 (2019)

Nesterov, Y.: Lectures on Convex Optimization, vol. 137. Springer, Berlin (2018)

Nishihara, R., Lessard, L., Recht, B., Packard, A., Jordan, M.I.: A general analysis of the convergence of ADMM. arXiv:1502.02009 (2015)

O’Shea, R., Younis, M.: A frequency-time domain stability criterion for sampled-data systems. IEEE Trans. Autom. Control 12(6), 719–724 (1967)

Popov, V.M., Georgescu, R.: Hyperstability of Control Systems. Springer, Berlin (1973)

Polyak, B.T.: Introduction to Optimization, vol. 1. Publications Division Inc., New York (1987)

Rantzer, A.: On the kalman–Yakubovich–Popov lemma. Syst. Control Lett. 28(1), 7–10 (1996)

Ryu, E.K., Taylor, A.B., Bergeling, C., Giselsson, P.: Operator splitting performance estimation: tight contraction factors and optimal parameter selection. arXiv:1812.00146 (2018)

Safavi, S., Joshi, B., França, G., Bento, J.: An explicit convergence rate for nesterov’s method from SDP. In: 2018 IEEE International Symposium on Information Theory (ISIT), pp. 1560–1564. IEEE (2018)

Sun, R., Luo, Z.-Q.: Guaranteed matrix completion via non-convex factorization. IEEE Trans. Inf. Theory 62(11), 6535–6579 (2016)

Taylor, A., Bach, F.: Stochastic first-order methods: non-asymptotic and computer-aided analyses via potential functions. arXiv:1902.00947 (2019)

Taylor, A.B., Hendrickx, J.M., Glineur, F.: Exact worst-case performance of first-order methods for composite convex optimization. SIAM J. Optim. 27(3), 1283–1313 (2017)

Taylor, A.B., Hendrickx, J.M., Glineur, F.: Smooth strongly convex interpolation and exact worst-case performance of first-order methods. Math. Program. 161(1–2), 307–345 (2017)

Taylor, A.B., Hendrickx, J.M., Glineur, F.: Exact worst-case convergence rates of the proximal gradient method for composite convex minimization. J. Optim. Theory Appl. 178(2), 455–476 (2018)

Taylor, A., Van Scoy, B., Lessard, L.: Lyapunov functions for first-order methods: tight automated convergence guarantees. arXiv:1803.06073 (2018)

Van Scoy, B., Freeman, R.A., Lynch, K.M.: The fastest known globally convergent first-order method for minimizing strongly convex functions. IEEE Cont. Syst. Lett. 2(1), 49–54 (2017)

Willems, J.: Least squares stationary optimal control and the algebraic Riccati equation. IEEE Trans. Autom. Control 16(6), 621–634 (1971)

Willems, J.: On the existence of a nonpositive solution to the Riccati equation. IEEE Trans. Autom. Control 19(5), 592–593 (1974)

Willems, J.C.: Dissipative dynamical systems part I: general theory. Arch. Ration. Mech. Anal. 45(5), 321–351 (1972)

Willems, J.C.: Dissipative dynamical systems part II: linear systems with quadratic supply rates. Arch. Ration. Mech. Anal. 45(5), 352–393 (1972)

Willems, J.C., Polderman, J.W.: Introduction to Mathematical Systems Theory: A Behavioral Approach, vol. 26. Springer, Berlin (1997)

Xiong, H., Chi, Y., Bin, H., Zhang, W.: Analytical convergence regions of accelerated gradient descent in nonconvex optimization under regularity condition. Automatica 113, 108715 (2020)

Acknowledgements

The author is indebted to both anonymous referees for their close readings and numerous suggestions on how to improve the paper. Special thanks are due to the first referee, who pointed out that a proof of Proposition 1.5 can already be inferred from [dKGT1].

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Gannot, O. A frequency-domain analysis of inexact gradient methods. Math. Program. 194, 975–1016 (2022). https://doi.org/10.1007/s10107-021-01665-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-021-01665-8