Abstract

Similarity measures (SMs) are a necessary tool in cognitive decision-making processes. A single-valued neutrosophic set (SVNS) is a particular instance of a neutrosophic set (NS) that can handle uncertainty, imprecision, and vagueness with a better degree of accuracy. The present article proposes two new weighted vector SMs for SVNSs. They are obtained by taking the convex combination of the Jaccard and Dice vector SMs, and of the Jaccard and cosine vector SMs, respectively. The proposed measures are validated by solving a few multi-attribute decision-making (MADM) problems in a neutrosophic environment. To help prevent the spread of COVID-19, we also show how the newly constructed measures can be used to select a proper antivirus face mask. The best alternative is the one with the highest SM value between that alternative and an ideal alternative. A detailed comparative analysis shows the effectiveness of the proposed measures relative to those already established in the literature. Finally, illustrative examples demonstrate the reliability, feasibility, and applicability of the proposed decision-making method. The results agree fairly well on the best alternative, which indicates that the proposed measures are effective. The presented SMs are also expected to be applicable to pattern recognition, image clustering, medical diagnosis, complex decision-making problems, and related fields. In addition, the newly constructed measures could be applied to group decision-making problems in which human cognition-based thought processes play a major role.


Introduction

Human beings are constantly making decisions because of the intrinsic nature of the mind. They are natural decision makers, since every action ultimately involves a decision, however insignificant it might be. Several cognitive factors have a large psychological impact on the decision-making process, such as a person's level of expertise, behavioral style, and credibility as a decision maker. According to psychologists, the decision-making process can be understood by considering individual judgments and by accounting for both the rational and the irrational aspects of behavior. To represent decision makers' interests properly, we must therefore consider these cognitive aspects. Decisions that account for cognitive aspects are comparatively better, because they reflect the decision maker's preferences more closely. Human cognition-based methods help decision makers express their preferences regarding a given scenario. They also help reveal people's intentions and underlying thought processes.

Disciplines such as operations research, economics, and management science have produced convincing results since MADM was introduced to researchers in these domains. However, decision makers involved in MADM problems are often unable to justify the decision parameters properly. The reasons include a lack of publicly available information, limited information-processing capabilities, the complexity of the scenario, and a shortage of time. This results in an incorrect preference ordering of the alternatives. There is a wide literature on MADM problems in which the attribute values take the form of crisp numbers [3], fuzzy numbers [4], interval-valued fuzzy numbers [5], interval-valued intuitionistic fuzzy numbers [6], and so on.

Neutrosophic sets (NSs) were first developed by Smarandache [1, 2] to deal with imprecise or unclear information. These sets are characterized by three independent functions, namely the truth, indeterminacy, and falsity membership functions. Fuzzy sets and intuitionistic fuzzy sets can deal only with partial or incomplete information, whereas NSs can also handle inconsistent information to a reasonable extent. In many MADM problems, decision makers express the ratings of alternatives with NSs, and this application has recently attracted considerable attention from researchers [7,8,9]. Single-valued neutrosophic sets (SVNSs) were first proposed by Wang et al. [10], who discussed some of their preliminary ideas and the arithmetic operations between SVNSs. Wang et al. further realized that interval numbers represent the truth, indeterminacy, and falsity degrees of a statement better than classical crisp numbers do. Hence, Wang et al. [11] proposed interval neutrosophic sets (INSs). Thereafter, various methods were developed for MADM problems involving SVNSs and INSs, such as the TOPSIS method [12, 13], weighted aggregation operators [14,15,16,17,18], subsethood measures [19], inclusion measures [20], and outranking methods [21, 22]. The similarity measure (SM) [23,24,25,26,27] has also become an efficient and significant tool for MADM problems. The alternative with the highest weighted SM value with respect to the positive ideal alternative is the best one. Broumi and Smarandache [28] defined the Hausdorff distance measure between two NSs. Later, Majumdar and Samanta [29] used membership degrees, a matching function, and a distance measure to define certain SMs between two SVNSs. Ye [30] then improved the correlation coefficient for SVNSs.
Ye [31] also introduced vector SMs for SVNSs and INSs, in which each SVNS element is treated as a three-dimensional vector. Ye [32] further used the vector concept to improve the cosine SM so that it could be applied to medical decision-making problems. Broumi and Smarandache [33] tackled pattern recognition problems by extending the cosine SM for SVNSs to INSs. Subsequently, Pramanik et al. [34] proposed a hybrid vector SM for SVNSs and INSs.

In the past five years, SVNSs have found widespread applications in cognitive decision-making. For instance, Chai et al. [35] enriched the literature on SVNSs by proposing several novel similarity measures. Saqlain et al. [36] proposed the tangent similarity measure for single- and multi-valued hypersoft sets in a neutrosophic setting. Likewise, Qin and Wang [37] proposed entropy measures for SVNSs with applications to MADM problems. Basset et al. [38] formulated a cosine similarity measure for bipolar neutrosophic sets and used it in the treatment of bipolar disorder. Tan and Zhang [39] applied refined SVNSs in a decision-making procedure for assessing the havoc caused by typhoon disasters. Ye [40] carried out fault analysis of a steam turbine using cotangent function-based SMs for SVNSs, with the aim of maximizing its efficiency. Ye [41] also discussed bidirectional projection measures of SVNSs for applications in mechanical design. Mondal et al. [42] demonstrated a MADM strategy based on hyperbolic sine similarity measures for SVNSs. Thereafter, Wu et al. [43] proposed entropy and cross-entropy measures for SVNSs. Ye and Fu [44] showed the usefulness of a tangent function-based SM for the treatment of multi-period medical conditions. In the same year, Ye [45] demonstrated how a dimension root SM can be applied to fault diagnosis in hydraulic turbines. Garg and Nancy [46] proposed novel biparametric distance measures for SVNSs. Broumi et al. [47] applied SVNSs to graphs and proposed single-valued neutrosophic graphs, a significant and enticing theory. Hypergraphs, a generalization of simple graphs, have also found many remarkable applications in the literature.
In a simple graph, an edge connects exactly two vertices, whereas a hyperedge in a hypergraph can connect any set of vertices. In this context, Yu et al. [48] applied a hypergraph-based learning method to model the pairwise coherency between images, an approach also referred to as transductive image classification. Yu et al. [49] also used multimodal hypergraphs to propose a sparse coding technique for click-data prediction and image re-ranking, which reduces the margin of error in text-based image search. Yu et al. [50] showed that hypergraphs tend to improve visual efficacy, and they also proposed a novel ranking model that takes both click features and visual features into account. Later, Hong et al. [51] introduced an effective and novel framework called Multi-task Manifold Deep Learning (M2DL) for estimating face poses from multimodal information.

Psychological aspects therefore play a significant role in decision making. Social cognition is the process through which we “perceive”, “interpret”, and “generate” responses to the thoughts of people engaged in social interactions. Social functioning outcomes are strongly linked to social cognition. When such aspects are ignored, the quality of decisions degrades, whereas people are more satisfied with decisions that take these factors into account. A person needs to understand the process of social interaction before handling social cognition tasks. For example, suppose a family wants to celebrate the birthday of one of its members and is looking for the best restaurant in the city. Here, the intentions of the family members come into play, and not only their preferences over the alternatives and the corresponding criteria. The person whose birthday is being celebrated is the one who most needs to be content with the decision. Suppose the other members choose a restaurant to their own liking and this eventually makes the person whose birthday is being celebrated unhappy. In that case, the decision will be considered a bad one.

However, people face certain cognitive limitations at the time of making decisions, such as first impressions and the need for emotional satisfaction. These limitations prevent them from making rational decisions in MCDM scenarios. For instance, suppose a real estate dealer is interested in buying one of four houses presented by an estate agency. If the dealer enters one of these houses and suddenly feels uncomfortable, that feeling will stop the dealer from buying it, no matter how good the price is. Consider another scenario, in which a person wants to buy a second-hand truck. The truck may be in excellent working condition and the dealer may offer a good deal. Even so, the buyer will not purchase it if he somehow feels that the dealer is tricking him. Here, the person's first impression prevents him from making the purchase. There are many similar examples. The literature shows that MCDM techniques incorporating cognitive aspects are nearly nonexistent. Nevertheless, a few researchers have tried to link social cognition to decision-making problems (for details, please refer to Bisdorff [52], Carneiro et al. [53], Homenda et al. [54], and Ma et al. [55]).

Motivation of Our Work

Uncertain or imprecise information is an indispensable factor in most real-world application problems. NSs handle inconsistent information and indeterminate decision data better than fuzzy sets and intuitionistic fuzzy sets, owing to their independent truth, indeterminacy, and falsity membership functions. Cognitive decision-making problems inherently involve vagueness, error, contradiction, and redundant data. NSs can handle such imprecision or vagueness, so they should fit multi-attribute decision-making and multi-attribute group decision-making problems well. SVNSs are a particular subclass of NSs, and we use them to develop a MADM method. INSs, another subclass of NSs, are outside the scope of this study. Vector SMs are commonly applied in decision-making problems [57,58,59]. Motivated by the works of Ye [56] and Pramanik et al. [34], we propose two convex vector SMs. They are obtained by taking the convex combination of the Jaccard and Dice SMs, and of the Jaccard and cosine SMs, respectively. Our measures for SVNSs extend the concept of the variation coefficient similarity method [56] to the neutrosophic setting. We discuss some basic properties of the newly constructed measures, which show that they are validly formulated. We apply the proposed measures to a few practical MADM scenarios. The final outcomes affirm the accuracy and good fit of the proposed measures.

Structure of the Paper

The rest of the paper is organized as follows. In “Preliminaries and Existing Methods”, we briefly review the concepts related to neutrosophic sets and SVNSs, as well as certain existing weighted vector SMs for SVNSs. In “Proposed Method”, we present our definitions of weighted convex vector similarity measures. The subsequent section, “MADM based on Proposed Similarity Measure for SVNSs”, first elaborates the procedure for solving a MADM problem with the proposed method. Then, to validate the applicability of the proposed measures, we illustrate a few practical MADM scenarios. These include maximizing the profit of an investment company and selecting proper face masks to prevent the spread of the COVID-19 pandemic. They are supported by a detailed comparative analysis showing the feasibility of the proposed measures compared with existing methods in the literature. Finally, “Conclusion” concludes the article.

Preliminaries and Existing Methods

In this section, we briefly review some concepts of NSs and SVNSs and their basic arithmetic operations, which are needed in the subsequent sections.

Definition 1

Let \(U\) be a space of objects (points), and denote a generic element in \(U\) by \(x\). A neutrosophic set \(P\) in \(U\) is characterized by three independent functions: a truth membership function \(T_{P} \left( x \right)\), an indeterminacy membership function \(I_{P} \left( x \right)\), and a falsity membership function \(F_{P} \left( x \right)\). These functions are real standard or non-standard subsets of the interval \(\left] {{}^{ - }0,1^{ + } } \right[\), and they satisfy the following condition:

\[ {}^{ - }0 \le \sup T_{P} \left( x \right) + \sup I_{P} \left( x \right) + \sup F_{P} \left( x \right) \le 3^{ + } \tag{1} \]

Notably, the neutrosophic sets introduced by Smarandache [1] were formulated mainly from a philosophical point of view and had very few applications in science and engineering. As a more practical successor, Wang et al. [10] introduced a subclass of neutrosophic sets called single-valued neutrosophic sets (SVNSs).

Definition 2

(Single-Valued Neutrosophic Sets) [10]

Let \(U\) be a space of objects (points), and denote a generic element in \(U\) by \(x\). A single-valued neutrosophic set \(P\) in \(U\) is characterized by three independent functions: a truth membership function \(T_{P} \left( x \right)\), an indeterminacy membership function \(I_{P} \left( x \right)\), and a falsity membership function \(F_{P} \left( x \right)\). We denote the SVNS \(P\) as

\(P = \left\{ {\left\langle {x,T_{P} \left( x \right),I_{P} \left( x \right),F_{P} \left( x \right)} \right\rangle |x \in U} \right\}\), where \(T_{P} \left( x \right),I_{P} \left( x \right),F_{P} \left( x \right) \in \left[ {0,1} \right]\) for every \(x \in U\).

In addition, the sum of \(T_{P} \left( x \right)\), \(I_{P} \left( x \right)\), and \(F_{P} \left( x \right)\) satisfies the inequality

\[ 0 \le T_{P} \left( x \right) + I_{P} \left( x \right) + F_{P} \left( x \right) \le 3 \tag{2} \]

For the sake of simplicity, we write \(P = \left\langle {T_{P} \left( x \right),I_{P} \left( x \right),F_{P} \left( x \right)} \right\rangle\) for an SVNS in \(U\).

Definition 3

(Arithmetic operations between SVNSs) [10, 16]

For any two SVNSs \(P = \left\langle {T_{P} \left( x \right),I_{P} \left( x \right),F_{P} \left( x \right)} \right\rangle\) and \(Q = \left\langle {T_{Q} \left( x \right),I_{Q} \left( x \right),F_{Q} \left( x \right)} \right\rangle\) considered in a finite universe \(U\), the arithmetic operations between them were proposed in previous studies [10, 16] as follows:

1. Complement: The complement of an SVNS \(P\) is denoted by \(P^{C}\) and defined as \(P^{C} = \left\langle {F_{P} \left( x \right),\,\,1 - I_{P} \left( x \right),\,\,T_{P} \left( x \right)} \right\rangle\). The first component is the falsity membership degree of \(P\), the second component is 1 minus the indeterminacy degree of \(P\), and the third component is the truth membership degree of \(P\).

2. Inclusion: An SVNS \(P\) is a subset of another SVNS \(Q\), that is, \(P \subseteq Q\), if and only if \(T_{P} \left( x \right) \le T_{Q} \left( x \right),\,\,I_{P} \left( x \right) \ge I_{Q} \left( x \right),\,\,F_{P} \left( x \right) \ge F_{Q} \left( x \right);\,\,\forall x \in U\).

3. Equality: Two SVNSs \(P\) and \(Q\) are equal if each is a subset of the other, that is, \(P \subseteq Q\) and \(P \supseteq Q\). Equivalently, \(P = Q\) if and only if \(T_{P} \left( x \right) = T_{Q} \left( x \right),\,\,I_{P} \left( x \right) = I_{Q} \left( x \right),\,\,F_{P} \left( x \right) = F_{Q} \left( x \right);\,\,\forall x \in U\).

4. Addition: The addition of two SVNSs \(P\) and \(Q\) is defined by \(P \oplus Q = \left\{ {\left\langle {x,\,\,T_{P} \left( x \right) + T_{Q} \left( x \right) - T_{P} \left( x \right)T_{Q} \left( x \right),\,\,I_{P} \left( x \right)I_{Q} \left( x \right),\,\,F_{P} \left( x \right)F_{Q} \left( x \right)} \right\rangle |x \in U} \right\}\), which differs from the traditional additive rule. The first component is the algebraic sum of the truth degrees minus their product. The second component is the product of the indeterminacy degrees of the two sets, and the third component is the product of their falsity degrees.

5. Multiplication: The multiplication of two SVNSs \(P\) and \(Q\) is defined by \(P \otimes Q = \left\{ {\left\langle {x,\,\,T_{P} \left( x \right)T_{Q} \left( x \right),\,\,I_{P} \left( x \right) + I_{Q} \left( x \right) - I_{P} \left( x \right)I_{Q} \left( x \right),\,\,F_{P} \left( x \right) + F_{Q} \left( x \right) - F_{P} \left( x \right)F_{Q} \left( x \right)} \right\rangle |x \in U} \right\}\). The components swap their arithmetic relative to the addition case. The first component is the product of the truth degrees. The second component is the algebraic sum of the indeterminacy degrees minus their product, and the third component is the algebraic sum of the falsity degrees minus their product.

6. Union: The union of two SVNSs \(P\) and \(Q\) is defined by \(P \cup Q = \left\{ {\left\langle {x,\,\,T_{P} \left( x \right) \vee T_{Q} \left( x \right),\,\,I_{P} \left( x \right) \wedge I_{Q} \left( x \right),\,\,F_{P} \left( x \right) \wedge F_{Q} \left( x \right)} \right\rangle |x \in U} \right\}\). The first component of the resulting SVNS is the maximum of the truth degrees. The second component is the minimum of the indeterminacy degrees, and the third component is the minimum of the falsity degrees.

7. Intersection: The intersection of two SVNSs \(P\) and \(Q\) is defined by \(P \cap Q = \left\{ {\left\langle {x,\,\,T_{P} \left( x \right) \wedge T_{Q} \left( x \right),\,\,I_{P} \left( x \right) \vee I_{Q} \left( x \right),\,\,F_{P} \left( x \right) \vee F_{Q} \left( x \right)} \right\rangle |x \in U} \right\}\). The intersection reverses the union operation: each maximum in the union becomes a minimum here, and vice versa.
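The seven operations act element by element, so they are easy to check numerically. Below is a minimal Python sketch that applies them to one SVNS element \(\left\langle {T,I,F} \right\rangle\) at a fixed \(x \in U\); applying them to a whole SVNS means repeating them for every \(x\). The class name `SVNElement`, its method names, and the sample values are hypothetical illustrations rather than part of the cited works. The union and intersection follow items 6 and 7 exactly as stated above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SVNElement:
    """Hypothetical helper: one element <T, I, F> of an SVNS at a fixed x in U."""
    t: float  # truth-membership degree T(x)
    i: float  # indeterminacy-membership degree I(x)
    f: float  # falsity-membership degree F(x)

    def __post_init__(self) -> None:
        # Each degree must lie in [0, 1], so 0 <= T + I + F <= 3 holds automatically.
        for degree in (self.t, self.i, self.f):
            if not 0.0 <= degree <= 1.0:
                raise ValueError(f"degree {degree} is outside [0, 1]")

    def complement(self) -> "SVNElement":
        # P^C = <F, 1 - I, T>
        return SVNElement(self.f, 1.0 - self.i, self.t)

    def is_subset_of(self, other: "SVNElement") -> bool:
        # P is included in Q iff T_P <= T_Q, I_P >= I_Q and F_P >= F_Q
        return self.t <= other.t and self.i >= other.i and self.f >= other.f

    def add(self, other: "SVNElement") -> "SVNElement":
        # P (+) Q = <T_P + T_Q - T_P T_Q, I_P I_Q, F_P F_Q>
        return SVNElement(self.t + other.t - self.t * other.t,
                          self.i * other.i,
                          self.f * other.f)

    def multiply(self, other: "SVNElement") -> "SVNElement":
        # P (x) Q = <T_P T_Q, I_P + I_Q - I_P I_Q, F_P + F_Q - F_P F_Q>
        return SVNElement(self.t * other.t,
                          self.i + other.i - self.i * other.i,
                          self.f + other.f - self.f * other.f)

    def union(self, other: "SVNElement") -> "SVNElement":
        # max of truth, min of indeterminacy, min of falsity (item 6)
        return SVNElement(max(self.t, other.t), min(self.i, other.i), min(self.f, other.f))

    def intersection(self, other: "SVNElement") -> "SVNElement":
        # min of truth, max of indeterminacy, max of falsity (item 7)
        return SVNElement(min(self.t, other.t), max(self.i, other.i), max(self.f, other.f))


# Two hypothetical elements, used only for illustration.
p = SVNElement(0.5, 0.2, 0.3)
q = SVNElement(0.4, 0.5, 0.1)
p_plus_q = p.add(q)        # approximately <0.7, 0.1, 0.03>
p_times_q = p.multiply(q)  # approximately <0.2, 0.6, 0.37>
```

The constructor rejects degrees outside \([0,1]\), so every object the sketch creates is a valid SVNS element.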

Existing Non-weighted Vector Similarity Measures

SMs greatly improve the efficiency of decision-making processes. Researchers have formulated several useful SMs based on distances and vectors. Below, we recall the definitions of the Jaccard [57], Dice [58], and cosine [59] similarity measures. These SMs are structurally simple and easy to compute, so decision makers can compare similarity values with ease.

Let \(M = \left( {m_{1} ,m_{2} ,...,m_{s} } \right)\) and \(N = \left( {n_{1} ,n_{2} ,...,n_{s} } \right)\) be two \(s\)-dimensional vectors having non-negative co-ordinates. Then,

Definition 4

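Between any two vectors \(M = \left( {m_{1} ,m_{2} ,...,m_{s} } \right)\) and \(N = \left( {n_{1} ,n_{2} ,...,n_{s} } \right)\), the Jaccard similarity measure [57] is defined as

\[ J_{SM} \left( {M,N} \right) = \frac{M.N}{\left\| M \right\|^{2} + \left\| N \right\|^{2} - M.N} \tag{3} \]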

where \(\left\| M \right\| = \sqrt {\sum\limits_{i = 1}^{s} {m_{i}^{2} } }\) and \(\left\| N \right\| = \sqrt {\sum\limits_{i = 1}^{s} {n_{i}^{2} } }\) are called the Euclidean norms of \(M\) and \(N\), and the inner product of vectors \(M\) and \(N\) is given by \(M.N = \sum\limits_{i = 1}^{s} {m_{i} n_{i} }\).

The above-mentioned similarity measure satisfies the following properties:

J1. \(0 \le J_{SM} \left( {M,N} \right) \le 1\)

J2. \(J_{SM} \left( {M,N} \right) = J_{SM} \left( {N,M} \right)\)

J3. \(J_{SM} \left( {M,N} \right) = 1\) for \(M = N\), i.e., \(m_{i} = n_{i} \,\left( {i = 1,2,...,s} \right)\) for every \(m_{i} \in M\) and \(n_{i} \in N\)

Definition 5

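Between two vectors \(M = \left( {m_{1} ,m_{2} ,...,m_{s} } \right)\) and \(N = \left( {n_{1} ,n_{2} ,...,n_{s} } \right)\), the Dice similarity measure [58] is defined as

\[ D_{SM} \left( {M,N} \right) = \frac{2\,M.N}{\left\| M \right\|^{2} + \left\| N \right\|^{2}} \tag{4} \]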

It satisfies the following properties:

D1. \(0 \le D_{SM} \left( {M,N} \right) \le 1\)

D2. \(D_{SM} \left( {M,N} \right) = D_{SM} \left( {N,M} \right)\)

D3. \(D_{SM} \left( {M,N} \right) = 1\) for \(M = N\), i.e., \(m_{i} = n_{i} \,\left( {i = 1,2,...,s} \right)\) for every \(m_{i} \in M\) and \(n_{i} \in N\)

Definition 6

Between two vectors \(M = \left( {m_{1} ,m_{2} ,...,m_{s} } \right)\) and \(N = \left( {n_{1} ,n_{2} ,...,n_{s} } \right)\), the cosine similarity measure [59] is defined as

\[ C_{SM} \left( {M,N} \right) = \frac{M.N}{\left\| M \right\|\,\left\| N \right\|} \tag{5} \]

It satisfies the following properties:

C1. \(0 \le C_{SM} \left( {M,N} \right) \le 1\)

C2. \(C_{SM} \left( {M,N} \right) = C_{SM} \left( {N,M} \right)\)

C3. \(C_{SM} \left( {M,N} \right) = 1\) for \(M = N\), i.e., \(m_{i} = n_{i} \,\left( {i = 1,2,...,s} \right)\) for every \(m_{i} \in M\) and \(n_{i} \in N\)

Remark 1

All of these similarity measures take values in the unit interval \([0,1]\). The Jaccard and Dice SMs are undefined when both vectors are zero, i.e., when \(m_{i} = 0\) and \(n_{i} = 0\) for all \(i = 1,2,...,s\). The cosine SM is undefined when either vector is zero, i.e., when \(m_{i} = 0\) for all \(i\) or \(n_{i} = 0\) for all \(i\).
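The three vector SMs of Definitions 4 to 6 can be computed directly from the inner product and the Euclidean norms. The Python sketch below assumes plain sequences of non-negative floats. The function names are hypothetical, and raising `ZeroDivisionError` in the undefined cases of Remark 1 is a design choice of this sketch, not something the cited definitions prescribe.

```python
import math
from typing import Sequence


def _inner(m: Sequence[float], n: Sequence[float]) -> float:
    """Inner product M.N = sum of m_i * n_i."""
    if len(m) != len(n):
        raise ValueError("vectors must have the same dimension s")
    return sum(a * b for a, b in zip(m, n))


def jaccard_sm(m: Sequence[float], n: Sequence[float]) -> float:
    """Definition 4: M.N / (||M||^2 + ||N||^2 - M.N)."""
    mn = _inner(m, n)
    denominator = _inner(m, m) + _inner(n, n) - mn
    if denominator == 0:  # happens only when both vectors are zero (Remark 1)
        raise ZeroDivisionError("Jaccard SM is undefined for two zero vectors")
    return mn / denominator


def dice_sm(m: Sequence[float], n: Sequence[float]) -> float:
    """Definition 5: 2 M.N / (||M||^2 + ||N||^2)."""
    denominator = _inner(m, m) + _inner(n, n)
    if denominator == 0:  # both vectors are zero (Remark 1)
        raise ZeroDivisionError("Dice SM is undefined for two zero vectors")
    return 2 * _inner(m, n) / denominator


def cosine_sm(m: Sequence[float], n: Sequence[float]) -> float:
    """Definition 6: M.N / (||M|| ||N||)."""
    norms = math.sqrt(_inner(m, m)) * math.sqrt(_inner(n, n))
    if norms == 0:  # either vector is zero (Remark 1)
        raise ZeroDivisionError("cosine SM is undefined when either vector is zero")
    return _inner(m, n) / norms


M = [1.0, 1.0, 0.0]
N = [1.0, 0.0, 0.0]
print(jaccard_sm(M, N), dice_sm(M, N), cosine_sm(M, N))  # 0.5, then about 0.667 and 0.707
```

For this pair the values increase from Jaccard to Dice to cosine. That ordering holds for any non-negative vectors, because \(2\,M.N \le 2\left\| M \right\|\left\| N \right\| \le \left\| M \right\|^{2} + \left\| N \right\|^{2}\).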

Definition 7

Between two vectors \(M = \left( {m_{1} ,m_{2} ,...,m_{s} } \right)\) and \(N = \left( {n_{1} ,n_{2} ,...,n_{s} } \right)\), the variation coefficient similarity measure [56] is defined as

It satisfies the following properties:

V1. \(0 \le V_{CF} \left( {M,N} \right) \le 1\)

V2. \(V_{CF} \left( {M,N} \right) = V_{CF} \left( {N,M} \right)\)

V3. \(V_{CF} \left( {M,N} \right) = 1\) for \(M = N\), i.e., \(m_{i} = n_{i} \,\left( {i = 1,2,...,s} \right)\) for every \(m_{i} \in M\) and \(n_{i} \in N\)

Some Weighted Vector Similarity Measures of SVNSs

In multiple-criteria decision-making methods, criteria weights strongly influence both the outcome of a decision process and the ranking of the alternatives. This is because they capture the relative importance of each criterion for the chosen set of alternatives. A process that ignores the criteria weights and treats all criteria as equally important therefore loses much of its logical basis. Decision makers thus try, to the best of their knowledge, to assign a weight to each criterion involved in a decision process. Below, we list three existing definitions of weighted vector SMs.

Consider two SVNSs \(P\) and \(Q\), each of whose elements can be viewed as a vector in three-dimensional space, defined by

\(P = \left\{ {\left\langle {T_{P} \left( {x_{i} } \right),I_{P} \left( {x_{i} } \right),F_{P} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\) and \(Q = \left\{ {\left\langle {T_{Q} \left( {x_{i} } \right),I_{Q} \left( {x_{i} } \right),F_{Q} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\).

Then, we can define weighted vector similarity as follows:

Definition 8

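Let \(U\) be a universe of discourse defined by \(U = \left\{ {x_{1} ,x_{2} ,...,x_{r} } \right\}\), and let \(P = \left\{ {\left\langle {T_{P} \left( {x_{i} } \right),I_{P} \left( {x_{i} } \right),F_{P} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\) and \(Q = \left\{ {\left\langle {T_{Q} \left( {x_{i} } \right),I_{Q} \left( {x_{i} } \right),F_{Q} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\) be two SVNSs.

Let \(w_{i} \in [0,1]\) be the weight of each element \(x_{i} \left( {i = 1,2,...,r} \right)\), such that \(\sum\limits_{i = 1}^{r} {w_{i} } = 1\).

Then, the weighted Jaccard similarity measure [30] between \(P\) and \(Q\) is defined as

\[ J_{WSM}(P,Q) = \sum_{i=1}^{r} w_{i} \frac{T_{P}(x_{i})T_{Q}(x_{i}) + I_{P}(x_{i})I_{Q}(x_{i}) + F_{P}(x_{i})F_{Q}(x_{i})}{\left(T_{P}^{2}(x_{i}) + I_{P}^{2}(x_{i}) + F_{P}^{2}(x_{i})\right) + \left(T_{Q}^{2}(x_{i}) + I_{Q}^{2}(x_{i}) + F_{Q}^{2}(x_{i})\right) - \left(T_{P}(x_{i})T_{Q}(x_{i}) + I_{P}(x_{i})I_{Q}(x_{i}) + F_{P}(x_{i})F_{Q}(x_{i})\right)} \tag{7} \]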

Definition 9

Let \(U\) be a universe of discourse defined by \(U = \left\{ {x_{1} ,x_{2} ,...,x_{r} } \right\}\), and let \(P = \left\{ {\left\langle {T_{P} \left( {x_{i} } \right),I_{P} \left( {x_{i} } \right),F_{P} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\) and \(Q = \left\{ {\left\langle {T_{Q} \left( {x_{i} } \right),I_{Q} \left( {x_{i} } \right),F_{Q} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\) be two SVNSs.

Let \(w_{i} \in [0,1]\) be the weight of each element \(x_{i} \left( {i = 1,2,...,r} \right)\), such that \(\sum\limits_{i = 1}^{r} {w_{i} } = 1\).

Then, the weighted Dice similarity measure [30] between \(P\) and \(Q\) is defined as

\[ D_{WSM}(P,Q) = \sum_{i=1}^{r} w_{i} \frac{2\left(T_{P}(x_{i})T_{Q}(x_{i}) + I_{P}(x_{i})I_{Q}(x_{i}) + F_{P}(x_{i})F_{Q}(x_{i})\right)}{\left(T_{P}^{2}(x_{i}) + I_{P}^{2}(x_{i}) + F_{P}^{2}(x_{i})\right) + \left(T_{Q}^{2}(x_{i}) + I_{Q}^{2}(x_{i}) + F_{Q}^{2}(x_{i})\right)} \tag{8} \]

Definition 10

Let \(U\) be a universe of discourse defined by \(U = \left\{ {x_{1} ,x_{2} ,...,x_{r} } \right\}\), and let \(P = \left\{ {\left\langle {T_{P} \left( {x_{i} } \right),I_{P} \left( {x_{i} } \right),F_{P} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\) and \(Q = \left\{ {\left\langle {T_{Q} \left( {x_{i} } \right),I_{Q} \left( {x_{i} } \right),F_{Q} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\) be two SVNSs.

Let \(w_{i} \in [0,1]\) be the weight of each element \(x_{i} \left( {i = 1,2,...,r} \right)\), such that \(\sum\limits_{i = 1}^{r} {w_{i} } = 1\).

Then, the weighted cosine similarity measure [30] between \(P\) and \(Q\) is defined as

\[ C_{WSM}(P,Q) = \sum_{i=1}^{r} w_{i} \frac{T_{P}(x_{i})T_{Q}(x_{i}) + I_{P}(x_{i})I_{Q}(x_{i}) + F_{P}(x_{i})F_{Q}(x_{i})}{\sqrt{T_{P}^{2}(x_{i}) + I_{P}^{2}(x_{i}) + F_{P}^{2}(x_{i})}\,\sqrt{T_{Q}^{2}(x_{i}) + I_{Q}^{2}(x_{i}) + F_{Q}^{2}(x_{i})}} \tag{9} \]

Remark 2

The measures in Eqs. (7), (8), and (9) satisfy the following properties:

P1. \(0 \le J_{WSM} \left( {P,Q} \right) \le 1;\,\,0 \le D_{WSM} \left( {P,Q} \right) \le 1;\,\,0 \le C_{WSM} \left( {P,Q} \right) \le 1\)

P2. \(J_{WSM} \left( {P,Q} \right) = J_{WSM} \left( {Q,P} \right);\,\,D_{WSM} \left( {P,Q} \right) = D_{WSM} \left( {Q,P} \right);\,\,C_{WSM} \left( {P,Q} \right) = C_{WSM} \left( {Q,P} \right)\)

P3. \(J_{WSM} \left( {P,Q} \right) = 1;\,\,D_{WSM} \left( {P,Q} \right) = 1;\,\,C_{WSM} \left( {P,Q} \right) = 1\) if \(P = Q\), that is, if \(T_{P} \left( {x_{i} } \right) = T_{Q} \left( {x_{i} } \right),\,\,I_{P} \left( {x_{i} } \right) = I_{Q} \left( {x_{i} } \right),\,\,F_{P} \left( {x_{i} } \right) = F_{Q} \left( {x_{i} } \right)\) for every \(x_{i} \in U\,\left( {i = 1,2,...,r} \right)\). When every \(w_{i} > 0\), the converse also holds for \(J_{WSM}\) and \(D_{WSM}\). It does not hold for \(C_{WSM}\), which equals 1 whenever the triples of \(P\) and \(Q\) are proportional at every \(x_{i}\).

Remark 3

For the SVNSs \(P = \left\{ {\left\langle {T_{P} \left( {x_{i} } \right),I_{P} \left( {x_{i} } \right),F_{P} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\) and \(Q = \left\{ {\left\langle {T_{Q} \left( {x_{i} } \right),I_{Q} \left( {x_{i} } \right),F_{Q} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\), the measures \(J_{WSM} \left( {P,Q} \right)\) and \(D_{WSM} \left( {P,Q} \right)\) are undefined when \(P = \left\langle {0,0,0} \right\rangle\) and \(Q = \left\langle {0,0,0} \right\rangle\). That is, they are undefined when \(T_{P} = I_{P} = F_{P} = 0\) and \(T_{Q} = I_{Q} = F_{Q} = 0\) at some \(x_{i}\) \(\left( {i = 1,2,...,r} \right)\). On the other hand, \(C_{WSM} \left( {P,Q} \right)\) is undefined when \(P = \left\langle {0,0,0} \right\rangle\) or \(Q = \left\langle {0,0,0} \right\rangle\). That is, it is undefined when \(T_{P} = I_{P} = F_{P} = 0\) or \(T_{Q} = I_{Q} = F_{Q} = 0\) at some \(x_{i}\) \(\left( {i = 1,2,...,r} \right)\).
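As a minimal sketch of Definitions 8 to 10, the Python code below stores an SVNS over \(U = \left\{ {x_{1} ,...,x_{r} } \right\}\) as a list of \((T, I, F)\) triples, one per element \(x_{i}\). Each triple is treated as a three-dimensional vector. The helper names, the list-of-triples representation, and the sample data are hypothetical. In the undefined cases of Remark 3, the division by zero simply raises `ZeroDivisionError`.

```python
import math
from typing import Callable, Sequence, Tuple

Triple = Tuple[float, float, float]  # <T(x_i), I(x_i), F(x_i)>


def _dot(a: Triple, b: Triple) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _weighted(term: Callable[[Triple, Triple], float],
              p: Sequence[Triple], q: Sequence[Triple], w: Sequence[float]) -> float:
    """Sum of w_i * term(P(x_i), Q(x_i)) after checking the weight conditions."""
    if not (len(p) == len(q) == len(w)):
        raise ValueError("P, Q and w must all have length r")
    if any(wi < 0 for wi in w) or not math.isclose(sum(w), 1.0):
        raise ValueError("weights must be non-negative and sum to 1")
    return sum(wi * term(a, b) for a, b, wi in zip(p, q, w))


def weighted_jaccard(p: Sequence[Triple], q: Sequence[Triple], w: Sequence[float]) -> float:
    def term(a: Triple, b: Triple) -> float:
        ab = _dot(a, b)
        return ab / (_dot(a, a) + _dot(b, b) - ab)
    return _weighted(term, p, q, w)


def weighted_dice(p: Sequence[Triple], q: Sequence[Triple], w: Sequence[float]) -> float:
    return _weighted(lambda a, b: 2 * _dot(a, b) / (_dot(a, a) + _dot(b, b)), p, q, w)


def weighted_cosine(p: Sequence[Triple], q: Sequence[Triple], w: Sequence[float]) -> float:
    return _weighted(
        lambda a, b: _dot(a, b) / (math.sqrt(_dot(a, a)) * math.sqrt(_dot(b, b))), p, q, w)


# Hypothetical SVNSs over U = {x_1, x_2} with weights w = (0.6, 0.4).
P = [(0.7, 0.2, 0.1), (0.4, 0.3, 0.5)]
Q = [(0.6, 0.1, 0.3), (0.5, 0.2, 0.4)]
w = [0.6, 0.4]
scores = (weighted_jaccard(P, Q, w), weighted_dice(P, Q, w), weighted_cosine(P, Q, w))
```

Each weighted measure is a weighted average of per-element values in \([0,1]\), which is why properties P1 and P2 of Remark 2 carry over directly from the unweighted case.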

Proposed Method

SVNSs can raise the utility of cognitive decision-making processes. Building on this potential, we propose two weighted convex vector SMs (WCVSMs) that depend on a coefficient parameter. Each measure is a convex combination of two existing vector SMs. Each vector SM has been shown empirically to produce feasible and rational outcomes on its own, so a convex combination of them is a natural candidate measure. The aim is to provide efficient similarity measures that produce convincing outcomes by giving a global evaluation of each alternative with respect to every criterion. The two proposed measures are defined below.

Definition 11

Let \(U\) be a universe of discourse defined by \(U = \left\{ {x_{1} ,x_{2} ,...,x_{r} } \right\}\), and let \(P = \left\{ {\left\langle {T_{P} \left( {x_{i} } \right),I_{P} \left( {x_{i} } \right),F_{P} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\) and \(Q = \left\{ {\left\langle {T_{Q} \left( {x_{i} } \right),I_{Q} \left( {x_{i} } \right),F_{Q} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\) be two SVNSs.

Also, let \(w_{i} \in [0,1]\) be the weight of every element \(x_{i} \left( {i = 1,2,...,r} \right)\), such that \(\sum\limits_{i = 1}^{r} {w_{i} } = 1\).

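Then, for a parameter \(\lambda \in [0,1]\), the two weighted convex vector similarity measures between SVNSs are proposed as follows:

\[ S_{W}^{JD} \left( {P,Q} \right) = \lambda J_{WSM} \left( {P,Q} \right) + \left( {1 - \lambda } \right)D_{WSM} \left( {P,Q} \right) \tag{10} \]

and

\[ S_{W}^{JC} \left( {P,Q} \right) = \lambda J_{WSM} \left( {P,Q} \right) + \left( {1 - \lambda } \right)C_{WSM} \left( {P,Q} \right) \tag{11} \]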

The proposed measures satisfy the following proposition.

Proposition 1

The two proposed weighted convex vector similarity measures (WCVSMs) of SVNSs \(P\) and \(Q\) satisfy the following properties:

P1. \(0 \le S_{W}^{JD} \left( {P,Q} \right) \le 1;\,\,\,0 \le S_{W}^{JC} \left( {P,Q} \right) \le 1\)

P2. \(S_{W}^{JD} \left( {P,Q} \right) = S_{W}^{JD} \left( {Q,P} \right);\,\,\,S_{W}^{JC} \left( {P,Q} \right) = S_{W}^{JC} \left( {Q,P} \right)\)

P3. \(S_{W}^{JD} \left( {P,Q} \right) = 1;\,\,\,S_{W}^{JC} \left( {P,Q} \right) = 1\) when \(P = Q\), i.e., when \(T_{P} \left( {x_{i} } \right) = T_{Q} \left( {x_{i} } \right),\,\,I_{P} \left( {x_{i} } \right) = I_{Q} \left( {x_{i} } \right),\,\,F_{P} \left( {x_{i} } \right) = F_{Q} \left( {x_{i} } \right)\) for every \(x_{i} \in U\,\left( {i = 1,2,...,r} \right)\)

Proof:

(P1). From Eqs. (7) and (8), the weighted Jaccard and Dice similarity measures of SVNSs satisfy \(0 \le J_{WSM} \left( {P,Q} \right) \le 1\) and \(0 \le D_{WSM} \left( {P,Q} \right) \le 1\). By Eq. (10), \(S_{W}^{JD} \left( {P,Q} \right)\) is a convex combination of these two values. For every \(\lambda \in [0,1]\), its coefficients are non-negative and sum to 1. A convex combination always lies between the smaller and the larger of the combined values, so

\[ 0 \le \min \left\{ {J_{WSM} \left( {P,Q} \right),D_{WSM} \left( {P,Q} \right)} \right\} \le S_{W}^{JD} \left( {P,Q} \right) \le \max \left\{ {J_{WSM} \left( {P,Q} \right),D_{WSM} \left( {P,Q} \right)} \right\} \le 1 \]

Thus, the first property is satisfied. Similarly, Eqs. (7), (9), and (11) give \(0 \le S_{W}^{JC} \left( {P,Q} \right) \le 1\).

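(P2). From Eq. (10), since the weighted Jaccard and Dice measures are symmetric in their arguments, i.e., \(J_{WSM} \left( {P,Q} \right) = J_{WSM} \left( {Q,P} \right)\) and \(D_{WSM} \left( {P,Q} \right) = D_{WSM} \left( {Q,P} \right)\), we obtain \(S_{W}^{JD} \left( {P,Q} \right) = S_{W}^{JD} \left( {Q,P} \right)\).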

Similar results are also obtained from Eq. (11), \(S_{W}^{JC} \left( {P,Q} \right) = S_{W}^{JC} \left( {Q,P} \right)\), which proves the second property.

(P3). If \(T_{P} \left( {x_{i} } \right) = T_{Q} \left( {x_{i} } \right),\,I_{P} \left( {x_{i} } \right) = I_{Q} \left( {x_{i} } \right)\) and \(F_{P} \left( {x_{i} } \right) = F_{Q} \left( {x_{i} } \right)\) for \(i = 1,2,...,r\), then \(J_{WSM} \left( {P,Q} \right) = 1,\,\,D_{WSM} \left( {P,Q} \right) = 1\) and \(C_{WSM} \left( {P,Q} \right) = 1\). Therefore, from Eqs. (10) and (11), \(S_{W}^{JD} \left( {P,Q} \right) = 1\) and \(S_{W}^{JC} \left( {P,Q} \right) = 1\). This concludes the proof.

Remark 4

For \(P = \left\{ {\left\langle {T_{P} \left( {x_{i} } \right),I_{P} \left( {x_{i} } \right),F_{P} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\) and \(Q = \left\{ {\left\langle {T_{Q} \left( {x_{i} } \right),I_{Q} \left( {x_{i} } \right),F_{Q} \left( {x_{i} } \right)} \right\rangle |x_{i} \in U} \right\}\), the convex similarity measure value is assumed to be zero when \(P = \left\langle {0,0,0} \right\rangle\) and \(Q = \left\langle {0,0,0} \right\rangle\), since the vector similarity measures then take the indeterminate form 0/0.
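To make the two measures concrete, the Python sketch below computes them under stated assumptions. We assume that the weighted Jaccard, Dice, and cosine measures take Ye's element-wise vector forms [31] and that the convex combinations are \(S_{W}^{JD} = \lambda J_{WSM} + (1 - \lambda) D_{WSM}\) and \(S_{W}^{JC} = \lambda J_{WSM} + (1 - \lambda) C_{WSM}\). Following Remark 4, any 0/0 term is set to zero. All function names are hypothetical, and this is an illustration rather than the authors' implementation.

```python
from math import sqrt
from typing import Sequence, Tuple

# A single-valued neutrosophic element <T, I, F>, each component in [0, 1].
SVNE = Tuple[float, float, float]


def _dot(a: SVNE, b: SVNE) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _ratio(num: float, den: float) -> float:
    # Remark 4 convention: a 0/0 term (all-zero elements) contributes zero.
    return num / den if den > 0.0 else 0.0


def _check(P: Sequence[SVNE], Q: Sequence[SVNE], w: Sequence[float]) -> None:
    if not len(P) == len(Q) == len(w):
        raise ValueError("P, Q and w must all have length r")
    if any(wi < 0.0 for wi in w) or abs(sum(w) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")


def jaccard_w(P: Sequence[SVNE], Q: Sequence[SVNE], w: Sequence[float]) -> float:
    """Weighted Jaccard vector SM (assumed Ye-type element-wise form)."""
    _check(P, Q, w)
    return sum(wi * _ratio(_dot(p, q), _dot(p, p) + _dot(q, q) - _dot(p, q))
               for p, q, wi in zip(P, Q, w))


def dice_w(P: Sequence[SVNE], Q: Sequence[SVNE], w: Sequence[float]) -> float:
    """Weighted Dice vector SM (assumed Ye-type element-wise form)."""
    _check(P, Q, w)
    return sum(wi * _ratio(2.0 * _dot(p, q), _dot(p, p) + _dot(q, q))
               for p, q, wi in zip(P, Q, w))


def cosine_w(P: Sequence[SVNE], Q: Sequence[SVNE], w: Sequence[float]) -> float:
    """Weighted cosine vector SM (assumed Ye-type element-wise form)."""
    _check(P, Q, w)
    return sum(wi * _ratio(_dot(p, q), sqrt(_dot(p, p)) * sqrt(_dot(q, q)))
               for p, q, wi in zip(P, Q, w))


def _check_lambda(lam: float) -> None:
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lambda must lie in [0, 1]")


def s_jd(P: Sequence[SVNE], Q: Sequence[SVNE], w: Sequence[float], lam: float) -> float:
    """Assumed convex form: lam * Jaccard + (1 - lam) * Dice."""
    _check_lambda(lam)
    return lam * jaccard_w(P, Q, w) + (1.0 - lam) * dice_w(P, Q, w)


def s_jc(P: Sequence[SVNE], Q: Sequence[SVNE], w: Sequence[float], lam: float) -> float:
    """Assumed convex form: lam * Jaccard + (1 - lam) * cosine."""
    _check_lambda(lam)
    return lam * jaccard_w(P, Q, w) + (1.0 - lam) * cosine_w(P, Q, w)
```

Take `P = [(0.5, 0.2, 0.3), (0.7, 0.1, 0.2)]` and `w = [0.6, 0.4]` as an example. Here `s_jd(P, P, w, 0.4)` equals 1 up to floating-point rounding, as property P3 requires. Swapping the two SVNS arguments leaves both measures unchanged, as property P2 requires.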

MADM Based on Proposed Similarity Measure for SVNSs

We consider a multi-attribute decision-making problem with \(x\) alternatives and \(y\) attributes, where the attribute values are represented by SVNSs. Let \(A = \left\{ {A_{1} ,A_{2} ,...,A_{x} } \right\}\) be a finite set of alternatives and \(C = \left\{ {C_{1} ,C_{2} ,...,C_{y} } \right\}\) be a finite set of attributes. Also, let \(w = \left( {w_{1} ,w_{2} ,...,w_{y} } \right)^{T}\) be the weight vector of the attributes \(C_{j} \left( {j = 1,2,...,y} \right)\), such that \(\sum\limits_{j = 1}^{y} {w_{j} } = 1\) and \(w_{j} \ge 0\). We denote the decision matrix by \(D = \left( {s_{ij} } \right)_{x \times y}\), where the preference value of each alternative \(A_{i} \left( {i = 1,2,...,x} \right)\) with respect to each attribute \(C_{j} \left( {j = 1,2,...,y} \right)\) is a single-valued neutrosophic element of the form \(s_{ij} = \left\langle {T_{ij} ,I_{ij} ,F_{ij} } \right\rangle\). Here, \(T_{ij}\) denotes the membership degree, \(I_{ij}\) the indeterminacy degree, and \(F_{ij}\) the non-membership degree of the alternative \(A_{i}\) with respect to the attribute \(C_{j}\). Thus, for \(i = 1,2,...,x;\,\,\,j = 1,2,...,y\), we have \(T_{ij} \in [0,1],\,\,I_{ij} \in [0,1],\,\,F_{ij} \in [0,1]\) and \(0 \le T_{ij} + I_{ij} + F_{ij} \le 3\).

Each alternative \(A_{i} \left( {i = 1,2,...,x} \right)\) then takes single-valued neutrosophic values and is represented as \(A_{i} = \left( {s_{i1} ,s_{i2} ,...,s_{iy} } \right)\), for \(i = 1,2,...,x\).
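Before computing any similarity values, we may want to check that a decision matrix and its weight vector satisfy the conditions stated above. The minimal Python sketch below does this. The function name and the tolerance `tol` are hypothetical. Once each of \(T_{ij}\), \(I_{ij}\), \(F_{ij}\) lies in \([0,1]\), the condition \(0 \le T_{ij} + I_{ij} + F_{ij} \le 3\) holds automatically, so the sketch only checks the component ranges.

```python
from typing import Sequence, Tuple

SVNE = Tuple[float, float, float]  # <T_ij, I_ij, F_ij>


def validate_decision_matrix(D: Sequence[Sequence[SVNE]], w: Sequence[float],
                             tol: float = 1e-9) -> None:
    """Raise ValueError if D = (s_ij), of size x by y, or w violates the MADM setup."""
    if not D:
        raise ValueError("the decision matrix has no alternatives")
    y = len(w)
    if any(wj < 0.0 for wj in w) or abs(sum(w) - 1.0) > tol:
        raise ValueError("weights must satisfy w_j >= 0 and sum to 1")
    for i, row in enumerate(D, start=1):
        if len(row) != y:
            raise ValueError(f"A_{i} has {len(row)} values, expected {y}")
        for j, (T, I, F) in enumerate(row, start=1):
            # Each degree must lie in [0, 1]; 0 <= T + I + F <= 3 then follows.
            if not all(0.0 <= v <= 1.0 for v in (T, I, F)):
                raise ValueError(f"s_{i}{j} = {(T, I, F)} has a degree outside [0, 1]")
```

The weight check accepts, for instance, the vector (0.40, 0.25, 0.35) used in the investment example below, because its entries sum to 1.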

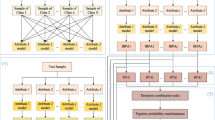

The best alternative is selected from the set of alternatives through the following steps.

Step 1

Determination of the ideal solution

Using an ideal alternative (solution) is a common practice in MADM. A perfectly ideal solution does not exist in the real world and is therefore an abstract idea. Nevertheless, to construct a useful theoretical framework and carry out the mathematical calculations, we incorporate the concept of an ideal solution. It allows the alternatives under study to be ranked by their degree of similarity (closeness) to, or dissimilarity (farness) from, the ideal solution. Thus, we need to determine the SVNS-based ideal solution.

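Let \(Y\) denote the entire collection of attributes, which consists of two types, namely benefit (profit)-type attributes (\(B\)) and cost-type attributes (\(L\)). Then the ideal solution (IS) \(A^{*} = \left( {s_{1}^{*} ,s_{2}^{*} ,...,s_{y}^{*} } \right)\) of the decision matrix \(D = \left( {s_{ij} } \right)_{x \times y}\) is defined as

\(s_{j}^{*} = \left\langle {T_{j}^{*} ,I_{j}^{*} ,F_{j}^{*} } \right\rangle = \left\langle {\mathop {\max }\limits_{i} \left\{ {T_{ij} } \right\},\mathop {\min }\limits_{i} \left\{ {I_{ij} } \right\},\mathop {\min }\limits_{i} \left\{ {F_{ij} } \right\}} \right\rangle\) for \(C_{j} \in B\),

and

\(s_{j}^{*} = \left\langle {T_{j}^{*} ,I_{j}^{*} ,F_{j}^{*} } \right\rangle = \left\langle {\mathop {\min }\limits_{i} \left\{ {T_{ij} } \right\},\mathop {\max }\limits_{i} \left\{ {I_{ij} } \right\},\mathop {\max }\limits_{i} \left\{ {F_{ij} } \right\}} \right\rangle\) for \(C_{j} \in L\),

where \(i = 1,2,...,x\) and \(j = 1,2,...,y\).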
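The ideal solution is computed column by column. For a benefit-type attribute it takes \(\left\langle {\max T,\min I,\min F} \right\rangle\) over the alternatives, and for a cost-type attribute it takes \(\left\langle {\min T,\max I,\max F} \right\rangle\). This is the same rule the article applies in the mask-selection example. The minimal Python sketch below stores the decision matrix as a list of rows of \(\left\langle {T,I,F} \right\rangle\) triples and marks each attribute as benefit-type (`True`) or cost-type (`False`). The function name and the 2 × 2 matrix are hypothetical.

```python
from typing import List, Sequence, Tuple

SVNE = Tuple[float, float, float]  # <T, I, F>


def ideal_solution(D: Sequence[Sequence[SVNE]], benefit: Sequence[bool]) -> List[SVNE]:
    """Column-wise ideal solution of an SVN decision matrix.

    D[i][j] is s_ij for alternative A_(i+1) and attribute C_(j+1);
    benefit[j] is True for a benefit-type attribute, False for a cost-type one.
    """
    if not D or any(len(row) != len(benefit) for row in D):
        raise ValueError("every alternative needs one value per attribute")
    ideal: List[SVNE] = []
    for j, is_benefit in enumerate(benefit):
        T, I, F = zip(*(row[j] for row in D))  # split column j into T, I, F
        if is_benefit:
            ideal.append((max(T), min(I), min(F)))
        else:
            ideal.append((min(T), max(I), max(F)))
    return ideal


if __name__ == "__main__":
    # Hypothetical 2 x 2 matrix (not the article's data): C1 benefit-type, C2 cost-type.
    D = [[(0.4, 0.2, 0.3), (0.5, 0.1, 0.4)],
         [(0.6, 0.1, 0.2), (0.3, 0.3, 0.2)]]
    print(ideal_solution(D, [True, False]))  # [(0.6, 0.1, 0.2), (0.3, 0.3, 0.4)]
```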

Step 2

We evaluate the WCVSM between the ideal alternative and each alternative.

From Eqs. (10) and (11), the WCVSMs between the ideal alternative \(A^{*}\) and the alternative \(A_{i}\) for \(i = 1,2,...,x;\,\,\lambda \in [0,1]\) are given by

where the ideal solution \(A^{*}\) takes respective forms according to the nature of the attribute as depicted in Eqs. (14) and (15).

Step 3

We rank the alternatives.

The alternatives are ranked according to the WCVSM values obtained in Step 2. Arranging these values in decreasing order gives the required ranking (preference order) of the alternatives.
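The three steps can be sketched end to end in Python for \(S_{W}^{JD}\). This is a minimal sketch with several assumptions. First, \(S_{W}^{JD}\) is taken as \(\lambda\) times the weighted Jaccard measure plus \(1 - \lambda\) times the weighted Dice measure, with Ye-type element-wise terms. Second, 0/0 terms count as zero, as in Remark 4. Third, the ideal solution uses the max/min rule for benefit- and cost-type attributes. The function names and the 3 × 2 matrix are hypothetical and are not the article's data.

```python
from typing import List, Sequence, Tuple

SVNE = Tuple[float, float, float]  # <T, I, F>


def _dot(a: SVNE, b: SVNE) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def s_jd(P: Sequence[SVNE], Q: Sequence[SVNE], w: Sequence[float], lam: float) -> float:
    """Assumed WCVSM form: lam * weighted Jaccard + (1 - lam) * weighted Dice.

    Element-wise terms follow Ye-type vector SMs; 0/0 terms count as 0 (Remark 4).
    """
    total = 0.0
    for p, q, wj in zip(P, Q, w):
        pq, pp, qq = _dot(p, q), _dot(p, p), _dot(q, q)
        jac = pq / (pp + qq - pq) if pp + qq - pq > 0 else 0.0
        dice = 2.0 * pq / (pp + qq) if pp + qq > 0 else 0.0
        total += wj * (lam * jac + (1.0 - lam) * dice)
    return total


def ideal_solution(D: Sequence[Sequence[SVNE]], benefit: Sequence[bool]) -> List[SVNE]:
    """Step 1: <max T, min I, min F> for benefit-type, <min T, max I, max F> for cost-type."""
    ideal: List[SVNE] = []
    for j, is_benefit in enumerate(benefit):
        T, I, F = zip(*(row[j] for row in D))
        ideal.append((max(T), min(I), min(F)) if is_benefit else (min(T), max(I), max(F)))
    return ideal


def rank_alternatives(D: Sequence[Sequence[SVNE]], w: Sequence[float],
                      benefit: Sequence[bool], lam: float) -> List[Tuple[str, float]]:
    """Steps 1-3: build A*, score every A_i against it, sort scores in decreasing order."""
    a_star = ideal_solution(D, benefit)
    scores = [(f"A{i}", s_jd(a_star, row, w, lam)) for i, row in enumerate(D, start=1)]
    # sorted() is stable, so tied alternatives keep their original order.
    return sorted(scores, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical 3 x 2 matrix, NOT the article's Table 1: C1 benefit-type, C2 cost-type.
    D = [
        [(0.7, 0.1, 0.1), (0.2, 0.3, 0.6)],
        [(0.5, 0.2, 0.3), (0.4, 0.2, 0.4)],
        [(0.4, 0.3, 0.4), (0.6, 0.1, 0.2)],
    ]
    for lam in (0.1, 0.4, 0.8):
        print(lam, rank_alternatives(D, [0.6, 0.4], [True, False], lam))
```

In this toy matrix, A1 coincides with the ideal solution. It therefore ranks first with a score of 1 (up to rounding) for every \(\lambda\), which matches property P3.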

Optimum Profit Determination by an Investment Company

We consider a multi-attribute decision-making problem (adapted from Ye [7]) in which an investment company wants to find the most suitable of four alternatives: (1) \(A_{1}\), a computer company; (2) \(A_{2}\), an arms company; (3) \(A_{3}\), a car company; and (4) \(A_{4}\), a food company. The investment company evaluates the alternatives against three criteria: (1) \(C_{1}\), environmental impact analysis; (2) \(C_{2}\), growth analysis; and (3) \(C_{3}\), risk factor analysis. The decision maker assesses the four alternatives with respect to these attributes using SVNS values. The SVNS-based decision matrix \(D = \left( {d_{ij} } \right)_{4 \times 3}\) is presented in Table 1.

The weight vector is given by \(W = \left\{ {w_{1} ,w_{2} ,w_{3} } \right\}^{T} = \left\{ {0.40,0.25,0.35} \right\}^{T}\), such that \(\sum\limits_{j = 1}^{3} {w_{j} } = 1\).

Step 1

Identification of the attribute-type.

Here, the attributes \(C_{2}\) and \(C_{3}\) are benefit-type attributes, while \(C_{1}\) is a cost-type attribute.

Step 2

Determination of the ideal solution (IS).

With the help of Eqs. (14) and (15), the ideal solution for the given decision matrix \(D = \left( {d_{ij} } \right)_{4 \times 3}\) can be determined as

Step 3

Evaluation of the weighted convex vector similarity measures.

We evaluate the weighted convex vector similarity measures using Eqs. (12–14), and the outcomes obtained for various values of \(\lambda\) are presented in Tables 2 and 3.

Step 4

Ranking of the alternatives.

Based on the outcomes obtained for different values of \(\lambda\) (Tables 2 and 3), \(A_{2}\) is the most suitable alternative.

Comparative Analysis

Here, we compare the outputs of our proposed convex vector similarity measures with those of some existing similarity measures on the MADM problem illustrated above. The comparison results, along with the evaluated similarity values, are given in Table 4. Table 4 shows that our choice of the best alternative agrees with that of Ye's vector similarity measure method [31], Ye's improved cosine similarity measure [32], and the hybrid vector similarity measure of Pramanik et al. [34] for SVNSs. Moreover, the ranking order obtained with our proposed measures coincides exactly with that of Ye's SMs [31, 32], whereas under Pramanik et al.'s method the alternatives \({A}_{3}\) and \({A}_{4}\) interchange their places.

Furthermore, the ranking orders obtained by the subsethood measure method [19] and the improved correlation coefficient [30] are given in Table 5. According to [19] and [30], \({A}_{2}\) is the second-best choice among the alternatives, whereas it is the best choice according to the previous studies [31, 32, 34] and our proposed measures. Since our measures agree with the majority of the compared methods on the best alternative, this comparison supports their validity and feasibility.
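One simple way to quantify the agreement between two ranking orders is to count the pairs of alternatives that the two methods order differently. This count is the Kendall tau distance. It is our choice of metric and is not used in the article. An interchange of two adjacent alternatives gives a distance of 1, and identical orders give 0. The minimal Python sketch below implements it. The function names and the rankings in the example are hypothetical.

```python
from itertools import combinations
from typing import Sequence


def kendall_distance(r1: Sequence[str], r2: Sequence[str]) -> int:
    """Number of pairs of alternatives that the two rankings (best first) order differently."""
    if sorted(r1) != sorted(r2) or len(set(r1)) != len(r1):
        raise ValueError("rankings must be permutations of the same alternatives")
    pos2 = {alt: k for k, alt in enumerate(r2)}
    # combinations() preserves r1's order, so a is ranked above b in r1 for every pair.
    return sum(1 for a, b in combinations(r1, 2) if pos2[a] > pos2[b])


def same_best(r1: Sequence[str], r2: Sequence[str]) -> bool:
    """True if both rankings select the same best alternative."""
    return r1[0] == r2[0]


if __name__ == "__main__":
    # Hypothetical rankings, for illustration only.
    ours = ["A2", "A4", "A3", "A1"]
    other = ["A2", "A3", "A4", "A1"]
    print(same_best(ours, other), kendall_distance(ours, other))  # True 1
```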

Appropriate Mask Selection to Prevent COVID-19 Outbreak

The demand for face masks has risen to unprecedented levels because of the havoc caused by the COVID-19 pandemic. The types of masks commonly available on the market are disposable medical masks (\(M_{1}\)), normal non-medical masks (\(M_{2}\)), surgical masks (\(M_{3}\)), gas masks (\(M_{4}\)), thick-layered medical protective masks (\(M_{5}\)), and N95 masks or particulate respirators (\(M_{6}\)). People interested in buying an appropriate mask consider the following four attributes: high filtration capability (\(A_{1}\)), reusability (\(A_{2}\)), material texture or quality (\(A_{3}\)), and rate of leakage (\(A_{4}\)). The attribute values are determined from the evaluation index provided by people for each type of mask and are represented by SVNSs, as shown in Table 6.

Further, weights must be assigned to the attributes because different people have different respiratory conditions. For instance, a person with serious respiratory complications will naturally place more weight on the filtration capability of the mask, to minimize the chance of COVID-19 transmission.

Thus, instead of assigning equal weights to the attributes \(A_{1} ,A_{2} ,A_{3}\) and \(A_{4}\), we take the weight vector to be \(W = \left\{ {0.6,0.1,0.1,0.2} \right\}\). The procedure is carried out step by step, as follows.

Step 1

Identification of the attribute-type.

Here, attributes \(A_{1} ,\;A_{2}\), and \(A_{3}\) are of benefit-type, while \(A_{4}\) is a cost-type attribute.

Step 2

Determination of the ideal solution (IS).

The ideal solution \(M^{*} = \left( {M_{1}^{*} ,M_{2}^{*} ,M_{3}^{*} ,M_{4}^{*} } \right)\) is constructed using the formulae given below,

\(M_{j}^{*} = \left\langle {T_{j}^{*} ,I_{j}^{*} ,F_{j}^{*} } \right\rangle = \left\langle {\mathop {\max }\limits_{i} \left\{ {T_{ij} } \right\},\mathop {\min }\limits_{i} \left\{ {I_{ij} } \right\},\mathop {\min }\limits_{i} \left\{ {F_{ij} } \right\}} \right\rangle\) for a benefit-type attribute, and

\(M_{j}^{*} = \left\langle {T_{j}^{*} ,I_{j}^{*} ,F_{j}^{*} } \right\rangle = \left\langle {\mathop {\min }\limits_{i} \left\{ {T_{ij} } \right\},\mathop {\max }\limits_{i} \left\{ {I_{ij} } \right\},\mathop {\max }\limits_{i} \left\{ {F_{ij} } \right\}} \right\rangle\) for a cost-type attribute,

where \(i = 1,2,...,6\) and \(j = 1,2,3,4\). Therefore, with the help of the above two equations, the ideal solution for the given decision matrix \(R = \left( {r_{ij} } \right)_{6 \times 4}\) is evaluated as,

Step 3

Determining the weighted convex vector similarity measures.

Multiplying each attribute by its respective weight, we obtain the weighted vector similarity measure values as follows.

By our first proposed measure \(S_{W}^{JD} \left( {M^{*} ,M_{i} } \right)\),

For \(\lambda =0.1\),

For \(\lambda =0.4\),

For \(\lambda =0.8\),

By our second proposed measure \(S_{W}^{JC} \left( {M^{*} ,M_{i} } \right)\),

For \(\lambda =0.1\),

For \(\lambda =0.4\),

For \(\lambda =0.8\),

Moreover, the similarity measure results obtained under various existing measures are also presented in Table 7.

Step 4

Ranking of the masks.

Since \(M_{6}\) (the N95 mask) has the highest similarity to the ideal solution (mask), it is the most appropriate mask, i.e., the best purchase for minimizing the transmission of COVID-19.

Table 7 shows that our choice of the most suitable mask coincides with the outcomes obtained with the other similarity measures, which supports the credibility and validity of our newly proposed similarity measures.

Computational-time Analysis

Experimental evidence indicates that, owing to their simple and easy-to-compute structure, vector SMs are very effective: their calculations take substantially less time, which gives decision-makers the added advantage of time.

Since our newly constructed measures are built from the Jaccard, Dice, and cosine vector SMs, their computation time is also small. The only difference is that each of our measures combines two vector SMs, so the calculation takes about twice as long as a single vector SM. Even so, the time spent is very small, and software such as MATLAB returns the results almost instantly. This small additional cost is offset by the accuracy and efficiency of the results obtained.
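One way to keep this extra cost small is to share work between the measures. All three vector SMs are built from the same three inner products per element, so they can be evaluated together in a single \(O(r)\) pass. If, as we assume, the convex combinations have the form \(\lambda J + (1 - \lambda)D\) and \(\lambda J + (1 - \lambda)C\), they are linear in \(\lambda\). A sweep over several \(\lambda\) values, such as 0.1, 0.4, and 0.8 in the mask example, then needs the three measures only once. The Python sketch below assumes Ye-type element-wise forms and sets 0/0 terms to zero, as in Remark 4. The function names are hypothetical.

```python
from math import sqrt
from typing import Dict, Iterable, Sequence, Tuple

SVNE = Tuple[float, float, float]  # <T, I, F>


def weighted_vector_sms(P: Sequence[SVNE], Q: Sequence[SVNE],
                        w: Sequence[float]) -> Tuple[float, float, float]:
    """Weighted (Jaccard, Dice, cosine) vector SMs computed in a single O(r) pass.

    Element-wise terms assume Ye-type vector SMs; 0/0 terms contribute 0 (Remark 4).
    """
    jac = dice = cos = 0.0
    for p, q, wi in zip(P, Q, w):
        # The three inner products shared by all three measures.
        pq = p[0] * q[0] + p[1] * q[1] + p[2] * q[2]
        pp = p[0] * p[0] + p[1] * p[1] + p[2] * p[2]
        qq = q[0] * q[0] + q[1] * q[1] + q[2] * q[2]
        if pp + qq - pq > 0:
            jac += wi * pq / (pp + qq - pq)
        if pp + qq > 0:
            dice += wi * 2.0 * pq / (pp + qq)
        if pp > 0 and qq > 0:
            cos += wi * pq / (sqrt(pp) * sqrt(qq))
    return jac, dice, cos


def lambda_sweep(P: Sequence[SVNE], Q: Sequence[SVNE], w: Sequence[float],
                 lambdas: Iterable[float]) -> Dict[float, Tuple[float, float]]:
    """(S_JD, S_JC) for each lambda, assuming S = lam * J + (1 - lam) * (D or C)."""
    jac, dice, cos = weighted_vector_sms(P, Q, w)  # computed once, reused below
    return {lam: (lam * jac + (1.0 - lam) * dice, lam * jac + (1.0 - lam) * cos)
            for lam in lambdas}
```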

The main advantage of our proposed measures over existing methods in the literature is that they not only accommodate the SVN environment but also automatically capture the indeterminate information supplied by decision-makers.

Importance of the Study

NSs were developed from a philosophical point of view as a generalization of many sets, such as the classical set, fuzzy set, intuitionistic fuzzy set, interval-valued fuzzy set, interval-valued intuitionistic fuzzy set, tautological set, paradoxist set, dialetheist set, and paraconsistent set. However, since NSs have found scarce application in real scientific and engineering problems, a particular subclass of NSs, known as SVNSs, was developed. SVNSs can imitate the ambiguous nature of the subjective judgments provided by decision-makers and are suitable for dealing with the uncertain, imprecise, and indeterminate information that is prevalent in multiple-criteria decision analysis. Thus, SVNSs provide a significant and powerful mathematical framework and have recently become a research hotspot for researchers all over the globe. In our study, we investigate and propose similarity measures for SVNSs, since the concept of similarity has a strong influence on MADM problems. Note that similarity between elements can be viewed from different perspectives, such as closeness, proximity, resemblance, distance, and dissimilarity. Moreover, in decision-making problems, human decision-makers scrutinize several criteria before making a final decision, so the relative importance (weights) of the criteria must be taken into account. Most often, the weights are chosen so that they sum to one. When comparing two objects, we are usually interested in knowing whether they are identical, approximately identical, or identical only to some degree. This motivates us to investigate some desirable properties of the similarity measures for SVNSs under consideration.

Conclusion

Decision making in humans can be described as a cognitive process that depends mainly on the data given as input and on people's cognitive capabilities, that is, their ability to process the available information further. Unlike machines, which process information in binary form, humans do not think in binary terms; rather, people's opinions can be expressed or measured on a specific evaluation scale. The fluctuations or deviations observed in decision-making problems are mostly due to behavioral biases, which are deviations from rational standards while processing arguments. A few other factors, such as time, overestimation of negative comments, and differences in how people perceive positive and negative information, also contribute to irrational decisions. People often make such non-rational decisions while trying to avoid losses. Therefore, proper knowledge of psychology is required to understand how people choose between different courses of action. Hence, a significant aim of cognitive psychology is to explain the mental processes that define human behavior.

In the same vein, similarity is one of the prime concepts in human cognition. Similarity measures play a crucial role in decision making and have diverse applications in fields such as machine learning, taxonomy, case-based reasoning, recognition, ecology, physical anthropology, automatic classification, psychology, citation analysis, and information retrieval. In this regard, vector SMs are efficient and significant tools for measuring the similarity between two objects, and the Jaccard, Dice, and cosine SMs are the most widely used. However, each element of the universe has an inherent weight associated with it, so to express the relative importance of the elements, we opt for weighted vector SMs over non-weighted ones. Hence, in this article, we have proposed two weighted convex vector SMs for SVNSs. SVNSs, a subclass of NSs, are considered here because of their efficiency in handling imprecise, incomplete, and inconsistent information; they give decision-makers the additional ability to capture the indeterminate information that exists in almost all real-world phenomena. An MADM method based on the proposed weighted vector SMs is then discussed in detail, and numerical illustrations are provided to validate the approach and demonstrate its feasibility. Moreover, in light of the COVID-19 pandemic that the whole world is facing, we have applied the proposed measures to the selection of appropriate antivirus masks by formulating it as an MADM problem. Our newly constructed measures produce intuitive results, and their outcomes are in logical agreement with those of existing measures, which supports their validity.

In future research, we plan to explore applications of our proposed measures to other related decision-making problems in pattern recognition, medical diagnosis, clustering analysis, data mining, and supply management. In addition, other kinds of fuzzy sets, such as hesitant fuzzy sets and picture fuzzy sets, could be explored to see how our measures apply in their respective settings. Although MCDM methods often share similarities in their configuration and usage, decision making in today's dynamic world is much more demanding when carried out in groups. Therefore, we will extend our newly constructed measures to group decision-making problems. Including cognitive aspects in group decision making has great advantages, yielding decisions with a higher satisfaction degree and higher quality. Some of these advantages include sharing the physical and mental workload, training inexperienced members of the group, and improving decision quality. Moreover, since the MCDM framework allows it, we also plan to tackle consensus issues in group decision-making problems. Such a framework helps decision makers reach a consensus by sharing information through a solid framework for social cognition problems.

Data Availability

No data were used to support the findings of the study performed.

References

Smarandache F. A unifying field in logics. Neutrosophy: neutrosophic probability, set and logic. American Research Press, Rehoboth. 1998.

Smarandache F. Neutrosophic set—a generalization of the intuitionistic fuzzy set. International Journal of Pure and Applied Mathematics. 2005;24:287–97.

Hwang CL, Yoon K. Multiple attribute decision making: methods and applications. Springer, New York. 1981.

Chen CT. Extensions of the TOPSIS for group decision making under fuzzy environment. Fuzzy Sets Syst. 2000;114:1–9.

Ashtiani B, Haghighirad F, Makui A, Montazer GA. Extension of fuzzy TOPSIS method based on interval-valued fuzzy sets. Appl Soft Comput. 2009;9:457–61.

Lakshmana GNV, Muralikrishan S, Sivaraman G. Multi-criteria decision-making method based on interval valued intuitionistic fuzzy sets. Expert Syst Appl. 2011;38:1464–7.

Ye J. Multicriteria decision-making method using the correlation coefficient under single-valued neutrosophic environment. Int J Gen Syst. 2013;42(4):386–94.

Ye J. Single valued neutrosophic cross entropy for multicriteria decision making problems. Appl Math Model. 2013;38:1170–5.

Kharal A. A neutrosophic multi-criteria decision-making method. New Math Nat Comput. 2014;10(2):143–62.

Wang H, Smarandache F, Zhang YQ, Sunderraman R. Single valued neutrosophic sets. Multispace Multistructure. 2010;4:410–3.

Wang H, Smarandache F, Zhang YQ, Sunderraman R. Interval neutrosophic sets and logic: theory and applications in computing. Hexis, Phoenix. 2005.

Chi P, Liu P. An extended TOPSIS method for the multi-attribute decision making problems on interval neutrosophic set. Neutrosophic Sets Syst. 2013;1:63–70.

Biswas P, Pramanik S, Giri BC. TOPSIS method for multi-attribute group decision-making under single-valued neutrosophic environment. Neural Comput Appl. 2015. https://doi.org/10.1007/s00521-015-1891-2

Peng J, Wang J, Wang J, Zhang H, Chen X. Multi-valued neutrosophic sets and power aggregation operators with their applications in multi-criteria group decision-making problems. Int J Comput Intell Syst. 2015;8(2):345–63.

Liu P, Chu Y, Li Y, Chen Y. Some generalized neutrosophic number Hamacher aggregation operators and their application to group decision making. Int J Fuzzy Syst. 2014;16(2):242–55.

Liu P, Wang Y. Multiple attribute decision-making method based on single-valued neutrosophic normalized weighted Bonferroni mean. Neural Comput Appl. 2014. https://doi.org/10.1007/s00521-014-1688-8

Peng J, Wang J, Wang J, Zhang H, Chen X. Simplified neutrosophic sets and their applications in multi-criteria group decision-making problems. Int J Syst Sci. 2015. https://doi.org/10.1080/00207721.2014.994050

Ye J. A multicriteria decision-making method using aggregation operators for simplified neutrosophic sets. J Intell Fuzzy Syst. 2014;26(5):2459–66.

Sahin R, Kucuk A. Subsethood measure for single valued neutrosophic sets. J Intell Fuzzy Syst. 2014. https://doi.org/10.3233/IFS-141304

Sahin R, Karabacak M. A multi attribute decision-making method based on inclusion measure for interval neutrosophic sets. Int J Eng Appl Sci. 2014;2(2):13–5.

Peng J, Wang J, Zhang H, Chen X. An outranking approach for multi-criteria decision-making problems with simplified neutrosophic sets. Appl Soft Comput. 2014;25:336–46.

Zhang H, Wang J, Chen X. An outranking approach for multi-criteria decision-making problems with interval-valued neutrosophic sets. Neural Comput Appl. 2015. https://doi.org/10.1007/s00521-015-1882-3

Luukka P. Fuzzy similarity in multi-criteria decision-making problem applied to supplier evaluation and selection in supply chain management. Advn Art Intell. 2013. https://doi.org/10.1155/2011/353509

Li Y, Olson DV, Qin Z. Similarity measures between intuitionistic fuzzy (vague) sets: a comparative analysis. Pattern Recogn Lett. 2007;28:278–85.

Chiclana F, Tapia Garcia JM, del Moral MJ, Herrera-Viedma E. A statistical comparative study of different similarity measures on consensus in group decision making. Inf Sci. 2013;221:110–23.

Guha D, Chakraborty D. A new similarity measure of intuitionistic fuzzy sets and its application to estimate the priority weights from intuitionistic preference relations. Notes on Intuitionistic Fuzzy Sets. 2012;18(1):37–47.

Xu Z. Some similarity measures of intuitionistic fuzzy sets and their applications to multi attribute decision making. Fuzzy Optim Decis Making. 2007;6:109–21.

Broumi S, Smarandache F. Several similarity measures of neutrosophic sets. Neutrosophic Sets Syst. 2013;1:54–62.

Majumdar P, Samanta SK. On similarity and entropy of neutrosophic sets. J Intell Fuzzy Syst. 2014;26:1245–52.

Ye J. Improved correlation coefficients of single valued neutrosophic sets and interval neutrosophic sets for multiple attribute decision making. J Intell Fuzzy Syst. 2014;27:2453–62.

Ye J. Vector similarity measures of simplified neutrosophic sets and their application in multicriteria decision making. Int J Fuzzy Syst. 2014;16(2):204–11.

Ye J. Improved cosine similarity measures of simplified neutrosophic sets for medical diagnosis. Artif Intell Med. 2015;63(3):171–9.

Broumi S, Smarandache F. Cosine similarity measures of interval valued neutrosophic sets. Neutrosophic Sets Syst. 2014;5:15–20.

Pramanik S, Biswas P, Giri BC. Hybrid vector similarity measures and their applications to multi-attribute decision making under neutrosophic environment. Neural Comput Appl. 2015. https://doi.org/10.1007/s00521-015-2125-3

Chai JS, Selvachandran G, Smarandache F, Gerogiannis VC, Son LH, Bui QT, Vo B. New similarity measures for single-valued neutrosophic sets with applications in pattern recognition and medical diagnosis problems. Complex Intell Syst. 2020. https://doi.org/10.1007/s40747-020-00220-w

Saqlain M, Jafar N, Moin S, Saeed M, Broumi S. Single and multi-valued neutrosophic hypersoft set and tangent similarity measure of single-valued neutrosophic hypersoft sets. Neutrosophic Sets and Systems. 2020;32:317–29.

Qin K, Wang X. New similarity and entropy measures of single-valued neutrosophic sets with applications in multi-attribute decision-making. Soft Comput. 2020;24:16165–76. https://doi.org/10.1007/s00500-020-04930-8

Basset MA, Mohamed M, Elhosemy M, Son LH, Chiclana F, Zaied AENH. Cosine similarity measures of bipolar neutrosophic set for diagnosis of bipolar disorder diseases. Artif Intell Med. 2019. https://doi.org/10.1016/j.artmed.2019.101735

Tan RP, Zhang WD. Decision-making method based on new entropy and refined single-valued neutrosophic sets and its application in typhoon disaster assessment. Appl Intell. 2021;51:283–307. https://doi.org/10.1007/s10489-020-01706-3

Ye J. Single-valued neutrosophic similarity measures based on cotangent function and their application in the fault diagnosis of steam turbine. Soft Comput. 2017;21:817–25. https://doi.org/10.1007/s00500-015-1818-y

Ye J. Projection and bidirectional projection measures of single-valued neutrosophic sets and their decision-making method for mechanical design schemes. J Exp Theor Artif Intell. 2016;29(4):731–40. https://doi.org/10.1080/0952813X.2016.1259263

Mondal K, Pramanik S, Giri BC. Single valued neutrosophic hyperbolic sine similarity measure based MADM strategy. Neutrosophic Sets Syst. 2018;20:3–11.

Wu H, Yuan Y, Wei L, Pei L. On entropy, similarity measure and cross-entropy of single-valued neutrosophic sets and their application in multi-attribute decision-making. Soft Comput. 2018;22:7367–76. https://doi.org/10.1007/s00500-018-3073-5

Ye J, Fu J. Multi-period medical diagnosis method using a single-valued neutrosophic similarity measure based on tangent function. Comput Methods Prog Biomed. 2016;123:142–9. https://doi.org/10.1016/j.cmpb.2015.10.002

Ye J. Fault diagnosis of hydraulic turbine using the dimension root similarity measure of single-valued neutrosophic sets. Intell Auto Soft Comput. 2016;24(1):1–8. https://doi.org/10.1080/10798587.2016.1261955

Garg H. Some new biparametric distance measures on single-valued neutrosophic sets with applications to pattern recognition and medical diagnosis. Information. 2017;8(4):162–82.

Broumi S, Talea M, Bakali A, Smarandache F. Single-valued neutrosophic graphs. J New Theory. 2016;10:86–101.

Yu J, Tao D, Wang M. Adaptive hypergraph learning and its application in image classification. IEEE Trans Image Process. 2012;21(7). https://doi.org/10.1109/TIP.2012.2190083

Yu J, Rui Y, Tao D. Click prediction for web image reranking using multimodal sparse coding. IEEE Trans Image Process. 2014;23(5). https://doi.org/10.1109/TIP.2014.23113377

Yu J, Tao D, Wang M, Rui Y. Learning to rank using user clicks and visual features for image retrieval. IEEE Trans Cybern. 2015;45(4). https://doi.org/10.1109/TCYB.2014.2336697

Hong C, Yu J, Zhang J, Jin X, Lee KH. Multi-modal face pose estimation with multi-task manifold deep learning. IEEE Trans Industr Inf. 2018. https://doi.org/10.1109/TII.2018.2884211

Bisdorff R. Cognitive support methods for multi-criteria decision making. Eur J Oper Res. 1999;119:379–87.

Carneiro J, Conceição L, Martinho D, Marreiros G, Novais P. Including cognitive aspects in multiple criteria decision analysis. Ann Oper Res. 2016. https://doi.org/10.1007/s10479-016-2391-1

Homenda W, Jastrzebska A, Pedrycz W. Multicriteria decision making inspired by cognitive processes. Appl Math Comput. 2016;290:392–411.

Ma W, Luo X, Jiang Y. Multicriteria decision making with cognitive limitations: a DS/AHP-based approach. Int J Intell Syst. 2017. https://doi.org/10.1002/int.21872

Xu X, Zhang L, Wan Q. A variation coefficient similarity measure and its application in emergency group decision making. Sys Eng Proc. 2012;5:119–24.

Jaccard P. Distribution de la flore alpine dans le bassin des Dranses et dans quelques régions voisines. Bull de la Soc Vaudoise des Sci Nat. 1901;37(140):241–72.

Dice LR. Measures of amount of ecologic association between species. Ecology. 1945;26:297–302.

Salton G, McGill MJ. Introduction to modern information retrieval. McGraw-Hill, Auckland. 1983.

Acknowledgements

The authors would like to thank the anonymous reviewers for their valuable inputs and insightful comments which helped to improve the paper significantly.


Ethics declarations

Ethical Approval

This article does not contain any studies with animals performed by any of the authors.

Conflict of Interest

The authors declare no competing interests.


About this article

Cite this article

Borah, G., Dutta, P. Multi-attribute Cognitive Decision Making via Convex Combination of Weighted Vector Similarity Measures for Single-Valued Neutrosophic Sets. Cogn Comput 13, 1019–1033 (2021). https://doi.org/10.1007/s12559-021-09883-0
