Abstract

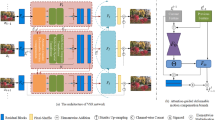

By selectively enhancing the features extracted from convolution networks, the attention mechanism has shown its effectiveness for low-level visual tasks, especially for image super-resolution (SR). However, due to the spatiotemporal continuity of video sequences, simply applying image attention to a video does not seem to obtain good SR results. At present, there is still a lack of suitable attention structure to achieve efficient video SR. In this work, building upon the dual attention, i.e., position attention and channel attention, we proposed deep dual attention, underpinned by self-attention alignment (DASAA), for video SR. Specifically, we start by constructing a dual attention module (DAM) to strengthen the acquired spatiotemporal features and adopt a self-attention structure with the morphological mask to achieve attention alignment. Then, on top of the attention features, we utilize the up-sampling operation to reconstruct the super-resolved video images and introduce the LSTM (long short-time memory) network to guarantee the coherent consistency of the generated video frames both temporally and spatially. Experimental results and comparisons on the actual Youku-VESR dataset and the typical benchmark dataset-Vimeo-90 k demonstrate that our proposed approach achieves the best video SR effect while taking the least amount of computation. Specifically, in the Youku-VESR dataset, our proposed approach achieves a test PSNR of 35.290db and a SSIM of 0.939, respectively. In the Vimeo-90 k dataset, the PSNR/SSIM indexes of our approach are 32.878db and 0.774. Moreover, the FLOPS (float-point operations per second) of our approach is as low as 6.39G. The proposed DASAA method surpasses all video SR algorithms in the comparison. It is also revealed that there is no linear relationship between positional attention and channel attention. It suggests that our DASAA with LSTM coherent consistency architecture may have great potential for many low-level vision video applications.

Similar content being viewed by others

References

Wang X, Chan KC, Yu K, Dong C, Change Loy C. Edvr: Video restoration with enhanced deformable convolutional networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. 2019. pp. 0–0.

Wang L, Wang Y, Liang Z, Lin Z, Yang J, An W, Guo Y. Learning parallax attention for stereo image super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. pp. 12250–12259.

Sajjadi MS, Vemulapalli R, Brown M. Frame-recurrent video super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018. pp. 6626–6634.

Haris M, Shakhnarovich G, Ukita N. Recurrent back-projection network for video super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. pp. 3897–3906.

Huang Y, Wang W, Wang L. Bidirectional recurrent convolutional networks for multi-frame super-resolution. In: Advances in Neural Information Processing Systems. 2015. pp. 235–243.

Wang TC, Liu MY, Zhu JY, Liu G, Tao A, Kautz J, Catanzaro B. Video-to-video synthesis. arXiv preprint arXiv: 1808.06601. 2018.

Caballero J, Ledig C, Aitken A, Acosta A, Totz J, Wang Z, Shi W. Real-time video super-resolution with spatio-temporal networks and motion compensation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017. pp. 4778–4787.

Tai Y, Yang J, Liu X. Image super-resolution via deep recursive residual network. In: Proceedings of the IEEE conference on computer vision and pattern recognition. 2017. pp. 3147–3155.

Zhang Y, Tian Y, Kong Y, Zhong B, Fu Y. Residual dense network for image super-resolution. In: Proceedings of the IEEE conference on computer vision and pattern recognition. 2018. pp. 2472–2481.

Zhang Y, Li K, Li K, Wang L, Zhong B, Fu Y. Image super-resolution using very deep residual channel attention networks. In: Proceedings of the European Conference on Computer Vision (ECCV). 2018. pp. 286–301.

Fu J, Liu J, Tian H, Li Y, Bao Y, Fang Z, Lu H. Dual attention network for scene segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. pp. 3146–3154.

Kim J, Kwon Lee J, Mu Lee K. Accurate image super-resolution using very deep convolutional networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition. 2016. pp. 1646–1654.

Kappeler A, Yoo S, Dai Q, Katsaggelos AK. Video super-resolution with convolutional neural networks. IEEE Transactions on Computational Imaging. 2016;2(2):109–22.

Gers FA, Schmidhuber J, Cummins F. Learning to forget: Continual prediction with LSTM. 1999.

Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput. 1997;9(8):1735–80.

Feng Y, Ma L, Liu W, Luo J. Spatio-temporal video re-localization by warp lstm. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. pp. 1288-1297.

Dong C, Loy CC, He K, Tang X. Image super-resolution using deep convolutional networks. IEEE Trans Pattern Anal Mach Intell. 2015;38(2):295–307.

He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition. 2016. pp. 770–778.

Lim B, Son S, Kim H, Nah S, Mu Lee K. Enhanced deep residual networks for single image super-resolution. In: Proceedings of the IEEE conference on computer vision and pattern recognition workshops. 2017. pp. 136–144.

Liu H, Fu Z, Han J, Shao L, Hou S, Chu Y. Single image super-resolution using multi-scale deep encoder–decoder with phase congruency edge map guidance. Inf Sci. 2019;473:44–58.

Yan B, Lin C, Tan W. Frame and feature context video super-resolution. In: The Thirty-Third AAAI Conference on Artificial Intelligence. 2019. pp.5597–5604.

Zhang K, Mu G, Yuan Y, Gao X, Tao D. Video super-resolution with 3D adaptive normalized convolution. Neurocomputing. 2012;94:140–51.

Li S, He F, Du B, Zhang L, Xu Y, Tao D. Fast spatio-temporal residual network for video super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019. pp. 10522–10531.

Tian Y, Zhang Y, Fu Y, Xu C. TDAN: Temporally-deformable alignment network for video super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2020. pp. 3360–3369.

Dai J, Qi H, Xiong Y, Li Y, Zhang G, Hu H, Wei Y. Deformable convolutional networks. In: Proceedings of the IEEE international conference on computer vision. 2017. pp. 764–773.

Wang X, Girshick R, Gupta A, He K. Non-local neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition. 2018. pp. 7794–7803.

Zhang H, Goodfellow I, Metaxas D, Odena A. Self-attention generative adversarial networks. arXiv preprint arXiv: 1805.08318 (2018).

Xue T, Chen B, Wu J, Wei D, Freeman WT. Video enhancement with task-oriented flow. Int J Comput Vision. 2019;127(8):1106–25.

Sakkos D, Liu H, Han J, Shao L. End-to-end video background subtraction with 3d convolutional neural networks. Multimedia Tools and Applications. 2018;77(17):3023–23041.

Jo Y, Oh SW, Kang J, Kim SJ. Deep video super-resolution network using dynamic up-sampling filters without explicit motion compensation. In: Proceedings of the IEEE conference on computer vision and pattern recognition. 2018. pp. 3224–3232.

Funding

This work was funded in part by the National Natural Science Foundation of China under (Grant No. 61971004), the Natural Science Foundation of Anhui Province (Grant No. 2008085MF190), and the Key Project of Natural Science of Anhui Provincial Department of Education (Grant No. KJ2019A0083).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Conflict of Interest

Yuezhong Chu declares that he has no conflict of interest. Yunan Qiao declares that she has no conflict of interest. Heng Liu declares that he has no conflict of interest. Jungong Han declares that he has no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Chu, Y., Qiao, Y., Liu, H. et al. Dual Attention with the Self-Attention Alignment for Efficient Video Super-resolution. Cogn Comput 14, 1140–1151 (2022). https://doi.org/10.1007/s12559-021-09874-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12559-021-09874-1