Abstract

The Video Activity Coder is a free, open-source computer program that can assist in coding behaviors in videorecorded datasets. Several programs already exist with this purpose; however, some of these are quite expensive, whereas some open-source, free alternatives have limitations. The Video Activity Coder allows a user to view recorded observations with the ability to play, pause, rewind, and fast-forward video clips easily. Users can mark the beginnings and endings of behavioral codes by using an onscreen keypad. For example, one could note every instance of a child manipulating toys by pressing a key or note the beginning and end of when a parent assists a child. In addition, the program offers descriptive statistics and output that captures second-by-second streams of codes that can be analyzed with other programs.

Introduction

Researchers have relied on numerous methods for coding human and non-human animal behaviors, often videorecording observations prior to coding behaviors. These methods can be carried out with other recording tools (e.g., paper and pencil) but are performed much more easily with the help of a digital video camera and a computer. One set of techniques involves interval coding (Bakeman & Quera, 1995; Hintze et al., 2002), during which a researcher divides an observational record into equal segments (i.e., intervals) of time. If the researcher notes whether a phenomenon of interest happened at least once during an interval, this is considered a partial-interval technique. If the researcher notes whether a phenomenon occurred at a specific point in the interval, this is considered a momentary technique. A “whole-interval” approach requires noting whether a phenomenon lasted the entire duration of an interval. Bakeman and Quera (1995) note that interval coding can be limiting because a lot of information is lost; its main advantage is that it makes live coding by hand easy.
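The three interval techniques can be compared with a short Python sketch. It is only an illustration of the definitions above: the (onset, offset) representation of a behavior, the function names, and the choice of each interval's end as the momentary sampling point are assumptions made for this example, not features of any program discussed here.

```python
from typing import List, Tuple

# Hypothetical representation: one bout of a behavior as (onset, offset) in seconds.
Bout = Tuple[float, float]


def overlap(bout: Bout, start: float, end: float) -> float:
    """Return how many seconds of the bout fall inside the interval [start, end)."""
    return max(0.0, min(bout[1], end) - max(bout[0], start))


def interval_codes(bouts: List[Bout], total: float, width: float, method: str) -> List[int]:
    """Score each equal-width interval as 1 (behavior scored) or 0 (not scored)."""
    codes = []
    for i in range(int(total // width)):
        start, end = i * width, (i + 1) * width
        if method == "partial":
            # Scored if the behavior happened at any time during the interval.
            hit = any(overlap(b, start, end) > 0 for b in bouts)
        elif method == "whole":
            # Scored only if a single bout spans the entire interval.
            hit = any(b[0] <= start and b[1] >= end for b in bouts)
        elif method == "momentary":
            # Scored if the behavior is under way at the sampling point
            # (assumed here to be the end of the interval).
            hit = any(b[0] <= end <= b[1] for b in bouts)
        else:
            raise ValueError(f"unknown method: {method}")
        codes.append(int(hit))
    return codes


bouts = [(0.0, 13.0), (18.0, 26.0)]
for method in ("partial", "whole", "momentary"):
    print(method, interval_codes(bouts, total=30.0, width=10.0, method=method))
```

With these two bouts and 10-s intervals, the three techniques return different records for the same behavior, which illustrates the information loss that Bakeman and Quera (1995) describe.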

Other methods are better suited for coding video recorded observations. In ethology, researchers make distinctions between states (e.g., a person manipulating an object), which can last for a relatively long time, and events (e.g., a person picking up an object), which are fleeting (Altmann, 1974). These can be coded by hand during live observations or from recordings with computer software. One example of coding for states (using Altmann’s definition) comes from Morrison and Reiss (2018), who studied visual self-recognition in young dolphins in captivity. The researchers, in a test of non-human animal self-recognition, videorecorded two dolphins and tracked the amount of time the dolphins engaged in self-focused behavior while looking in a mirror. Hutchins et al. (2013) provide another illustration through their investigation of the coordination among states of visual attention, engagement of equipment in planes and flight simulators, and conversations between pilots and air traffic controllers. An example of coding for events (again, using Altmann’s terminology) is noting how many times infants hold or remove parts of toys with their left hand, right hand, or both hands simultaneously (Potier et al., 2013). Tallying frequencies of pigs’ tail and ear movements while they play with toys (Marcet Rius et al., 2018) provides another illustration.

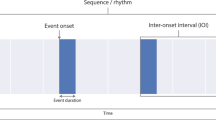

The terminology regarding behavioral coding is quite different in the psychology literature. Bakeman and Quera (1995, 2011) and others (e.g., Hintze et al., 2002) use the term “event coding” in a more general manner as a contrast to interval coding. Event coding involves creating codes that preserve onset and offset times or that only preserve the order of behaviors. When using event coding, one can note how often events happen, how long they happen on average, and how long it takes for one event to start after another has ended (e.g., when a prompt has ended and when a response starts) (Hintze et al., 2002). Note that, for the most part, Bakeman and Quera’s events are the same as Altmann’s states. However, Bakeman and Quera (1995) use the term timed events to “…represent momentary or frequency behaviors” (p. 7), which corresponds to Altmann’s more limited use of event. Thus, it is important to keep in mind that the same word can mean very different things depending on the author.
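As a rough illustration of the three summaries mentioned above (how often events happen, how long they last on average, and how long it takes for one event to start after another has ended), the following Python sketch works from a list of timed events. The tuple layout, the function names, and the example labels "prompt" and "response" are hypothetical and chosen only for this example.

```python
from statistics import mean
from typing import List, Optional, Tuple

# Hypothetical record of one coded event: (code label, onset, offset) in seconds.
Event = Tuple[str, float, float]


def frequency(events: List[Event], code: str) -> int:
    """Count how many times a code occurs."""
    return sum(1 for label, _, _ in events if label == code)


def mean_duration(events: List[Event], code: str) -> Optional[float]:
    """Average duration of a code, or None if the code never occurs."""
    durations = [off - on for label, on, off in events if label == code]
    return mean(durations) if durations else None


def latencies(events: List[Event], first: str, second: str) -> List[float]:
    """Time from each offset of `first` to the next onset of `second`."""
    second_onsets = sorted(on for label, on, _ in events if label == second)
    result = []
    for label, _, off in events:
        if label != first:
            continue
        later = [on for on in second_onsets if on >= off]
        if later:  # skip instances of `first` that are never followed by `second`
            result.append(later[0] - off)
    return result


events = [
    ("prompt", 0.0, 2.0),
    ("response", 3.5, 6.0),
    ("prompt", 10.0, 11.0),
    ("response", 11.5, 14.0),
]
print(frequency(events, "prompt"))
print(mean_duration(events, "response"))
print(latencies(events, "prompt", "response"))
```

Because onset and offset times are preserved, all three summaries come from the same record, which is not possible with interval codes alone.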

Several software programs, some paid and some free, allow researchers to code events and states from video-recorded observations. Table 1 shows a comparison between the Video Activity Coder and several other video coding programs. Please note that the list of programs and the list of features contained in the table are not exhaustive. There are different versions of the paid programs which vary in terms of the features they offer. Free, open-source options include BORIS (Friard & Gamba, 2016), CowLog (Pastell, 2016), Datavyu (Datavyu Team, 2014), ELAN (2020), and Simple Video Coder (Barto et al., 2017). Paid options include INTERACT (Mangold, 2020), the Observer XT (Zimmerman et al., 2009), and Transana (2020).

Most of the programs described above share the same major features. Several of these, including the Video Activity Coder, can operate on Windows, Mac OS, and Linux-based computers, whereas others can only be used on computers with Windows (see Table 1). All of the programs feature (a) a window that shows videos (or multiple videos in some cases), (b) video controls, (c) the ability to record behavioral codes with a keypad and/or keystrokes, (d) a timeline or table that shows codes with onset/offset times, and (e) some ability to export data. The Video Activity Coder falls in between some programs like Datavyu and the Simple Video Coder, which do not produce descriptive statistics, and other programs (e.g., BORIS and Observer XT), which offer a wide array of statistics, including basic descriptive statistics, sequential analyses, and latency analysis. Some of them can also produce timeline charts to display onsets and offsets of codes over time (e.g., BORIS and INTERACT) (see Table 1).

These programs vary in terms of ease of use and the speed at which a user can learn to operate them. Simple Video Coder and BORIS, for example, are relatively straightforward and do not require much training in order to learn their basic features. Some, especially the paid programs, take longer to learn, partly because of the number of features they include. The Video Activity Coder is designed to be easy to use, shares many of the basic features of other video coding software, and includes features that some of the other programs, particularly the other free, open-source ones, are missing. Users who need statistical analyses beyond the descriptive statistics provided by the Video Activity Coder may export various types of summary files that can be used by other software packages.

Using the Video Activity Coder

Downloading and installation

The Video Activity Coder was created in Java 8 and JavaFX 8, so researchers can use the program on a variety of operating systems, including Microsoft Windows, Linux, and Apple Mac OS. All of the files associated with the Video Activity Coder are located at https://sourceforge.net/projects/video-activity-coder/. Users can install the Windows version with the installable file; Linux and Mac users can run the program from the .jar file as long as they have copies of a Java runtime environment installed on their computers. The program is open-source and licensed under GNU GPL version 3, so users are welcome to view and adapt the source code. I ask that users cite this paper if they use the Video Activity Coder.

The main screen of the Video Activity Coder has four components. These are (a) drop-down menus, (b) the video window (with controls), (c) the keypad and (d) the activity log table. Figure 1 provides an example of how the main screen looks on a Windows-based computer, and each component is described in turn below.

Using the drop-down menus

Drop-down menus for project file management, for customizing the labels for codes, for data analysis/processing, and information about the program are at the top left of the main screen. Right above this, the user can see the name of the current project file. Selecting “New Project” allows the user to start a new project. New projects are initiated by choosing a video to code. Selecting “Load Project” allows the user to load a previously saved project. When the user selects “Save Project”, she can either choose a new filename for the project or save under the current filename (if the project has already been saved). Video Activity Coder project files have a “.prj” extension. To the right of the project files options is a drop-down menu labeled “Customize Codes”, which allows the user to change the default names of any of the 30 possible codes. The customized names will appear on the keypad and on the Behavioral Codes table (which will be described below). Code names can be as long as the user wishes, although names over 15 characters (including spaces) will be cut off on the keypad.

Next, there are menu options related to different forms of output. Selecting “Export to CSV File” will produce a comma-delimited file that includes information from the Activity Log table, whereas “Export to Tab-delimited File” will produce a tab-delimited version of the same file. Each row in the file contains a code name, onset time, offset time, and any notes that the user may have entered for that instance. This information can be adapted to use with GSEQ (Bakeman & Quera, 2011) or other software if one is interested in sequential analyses. At the bottom of the spreadsheet, users will see the project filename and the time and date when the file was created.

Selecting “Descriptive Statistics” opens a new window with the total duration and number of occurrences of each code. Number of occurrences will be of interest when using event coding, whereas total duration (and instances, too) will be of interest when using state coding. The window also displays means, standard deviations, and 95% confidence intervals for the durations of codes. Confidence intervals are computed with \( \overline{X}\pm 1.96\ast \frac{s}{\sqrt{n}} \), with \( \overline{X} \) as the sample mean, s as the sample standard deviation, and n as the sample size. Because Student’s t values are not included in these calculations, the interval limits may be inaccurate with small sample sizes (see Hazra, 2017). The program computes descriptive statistics for all 30 codes, so statistics for unused codes will show up as zeros and “NaN” in the window.
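The confidence interval formula can be checked by hand with a few lines of Python. This is a minimal sketch of the formula as stated above, not the program's own Java code; the function name and the returned dictionary are hypothetical, and the sample standard deviation is assumed to use the n - 1 denominator.

```python
import math
from statistics import mean, stdev
from typing import Dict, List, Optional


def duration_summary(durations: List[float], z: float = 1.96) -> Optional[Dict[str, object]]:
    """Summarize the durations (in seconds) of one code.

    Returns None for an unused code. With a single instance, the standard
    deviation and interval limits are undefined and are reported as NaN.
    """
    n = len(durations)
    if n == 0:
        return None
    m = mean(durations)
    if n < 2:
        nan = float("nan")
        return {"n": n, "total": sum(durations), "mean": m, "sd": nan, "ci": (nan, nan)}
    s = stdev(durations)             # sample SD (n - 1 denominator, an assumption)
    half = z * s / math.sqrt(n)      # half-width: 1.96 * s / sqrt(n)
    return {"n": n, "total": sum(durations), "mean": m, "sd": s, "ci": (m - half, m + half)}


print(duration_summary([4.0, 6.0, 8.0, 10.0]))
```

For the four durations in this example, the normal-based interval is roughly 4.47 to 9.53. A t-based interval for n = 4 would use a critical value of about 3.18 instead of 1.96 and would therefore be noticeably wider, which is the small-sample caveat raised above.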

The next two options, “Save Code Stream as CSV File” and “Save Code Stream as Tab-delimited File”, produce comma-delimited and tab-delimited files that contain information on whether each code was present or not during each decisecond of the recording. The resulting file will have 31 columns, with the first representing the deciseconds in the recording and the other 30 representing the codes. The project filename, along with the time and date the user created the file, is at the bottom of the file. Each row contains information on a decisecond (starting with zero) and whether each coded behavior was present (with a 1) or absent (with a 0) during that decisecond. This information may be useful when computing inter-rater reliability between coders, binary time series analyses (Jentsch & Reichmann, 2019), cross-recurrence quantification analysis (Coco & Dale, 2014; Wallot & Leonardi, 2018), conditional probabilities (Bakeman & Quera, 2011), and the like. Lastly, a user can request a chart that shows code stream information with the “Code Stream Chart” option.
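To make the layout of a code stream concrete, the following Python sketch builds one from onset and offset times. It is an illustration under stated assumptions rather than a description of how the program constructs its files: times are given as whole deciseconds, the offset is treated as exclusive, and the function name and two example labels are hypothetical.

```python
from typing import List, Tuple

# Hypothetical record of one coded instance: (code label, onset, offset),
# with both times expressed as whole deciseconds from the start of the video.
Instance = Tuple[str, int, int]


def build_code_stream(instances: List[Instance], labels: List[str], total_ds: int) -> List[List[int]]:
    """Return one row per decisecond: [decisecond, flag for labels[0], flag for labels[1], ...]."""
    column = {label: i + 1 for i, label in enumerate(labels)}
    rows = [[t] + [0] * len(labels) for t in range(total_ds)]
    for label, onset, offset in instances:
        # Mark the code as present from its onset up to, but not including,
        # its offset (treating the offset as exclusive is an assumption).
        for t in range(max(0, onset), min(offset, total_ds)):
            rows[t][column[label]] = 1
    return rows


labels = ["Pacing", "Tackling"]
instances = [("Pacing", 0, 3), ("Tackling", 2, 5)]
for row in build_code_stream(instances, labels, total_ds=6):
    print(row)
```

With all 30 codes supplied as labels, each row would have the 31 columns described above. Overlapping codes simply produce more than one 1 in the same row.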

The video window and video controls

The video window, on the left side of the screen, displays the video file that the user is coding. The Video Activity Coder can use video files in either MP4 or FLV format. Video controls and the coding keypad are located below the video window. Controls include (a) buttons to play, pause, and reload a video, and (b) sliders to change what the user is viewing (“Viewing Progress”), playback speed, and volume. The “Viewing Progress” bar moves while the video is playing, and double-clicking on different parts of the bar changes the point at which the video plays.

Coding keypad

To the right of the video controls and above the Activity Log is a keypad with 30 buttons that allows the user to mark the onset and offset times for actions or events of interest. Pressing a key once will display the action/event label and onset in the Behavioral Codes Table (see next paragraph). Pressing the same button again will display the end time of the action/event in the table. The default labels are “First” through “Thirtieth” which the user can change using the “Customize Codes” drop-down menu. A user can code momentary actions, or events in Altmann’s (1974) terminology, by pressing a key then pressing it again 1 s later.

The activity log table

The activity log table contains columns that display code labels, onset and offset times, and user notes. Each row represents a unique instance of a code. Once the user presses a key on the keypad, the code label and onset time in HH:MM:SS.S format will appear in the table. Pressing the same key again will make the offset time (also in HH:MM:SS.S format) appear in the table. Once a row is complete, double-clicking on it will cue the video to the onset of that instance. The user can click on a row and then click on “Add a Note” (at the bottom of the table) to add a brief note or description for that instance of a code. By highlighting a row, one can also remove that instance of a code using “Delete This Entry.” If instances of codes end up out of chronological order (e.g., by coding in several passes), pressing the “Sort by Start Time” button will sort rows by onset time. The remaining buttons allow the user to adjust the onset or offset time in a selected row; times can be moved up or down by a decisecond with each click of a button.
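Researchers who post-process exported rows may want to reproduce the table's time handling outside the program. The Python sketch below converts HH:MM:SS.S stamps to deciseconds, sorts rows by onset, and shifts a time by one decisecond. All function names and the row layout are hypothetical, and the sketch assumes exactly one digit after the decimal point.

```python
from typing import List, Tuple

# Hypothetical row layout: (code label, onset stamp, offset stamp, note).
Row = Tuple[str, str, str, str]


def to_deciseconds(stamp: str) -> int:
    """Convert an HH:MM:SS.S stamp to a whole number of deciseconds."""
    hours, minutes, seconds = stamp.split(":")
    whole, _, tenth = seconds.partition(".")
    return ((int(hours) * 60 + int(minutes)) * 60 + int(whole)) * 10 + int(tenth or 0)


def from_deciseconds(ds: int) -> str:
    """Convert a whole number of deciseconds back to HH:MM:SS.S."""
    total_seconds, tenth = divmod(ds, 10)
    total_minutes, seconds = divmod(total_seconds, 60)
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}.{tenth}"


def sort_by_onset(rows: List[Row]) -> List[Row]:
    """Order rows chronologically by onset time."""
    return sorted(rows, key=lambda row: to_deciseconds(row[1]))


def nudge(stamp: str, steps: int) -> str:
    """Move a stamp up or down by `steps` deciseconds, never below zero."""
    return from_deciseconds(max(0, to_deciseconds(stamp) + steps))


rows = [
    ("Tackling", "00:01:02.5", "00:01:04.0", ""),
    ("Pacing", "00:00:10.0", "00:00:59.9", "near the fence"),
]
print(sort_by_onset(rows))
print(nudge("00:00:59.9", 1))
```

Working in whole deciseconds avoids floating-point rounding when stamps are compared or adjusted.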

A workflow example

The following is a description of how I created a project file and conducted basic data analyses in order to demonstrate how to use the Video Activity Coder. After starting the Video Activity Coder, I started a new project under “Project Files”. Then I loaded an MP4 file showing juvenile snow leopards playing at a zoo exhibit. Before starting the coding process, I customized some codes via the drop-down menu shown in Fig. 2. Note that this process automatically changes the labels in the keypad under the video player. Next, I coded the video clip using the keypad and added a note by highlighting the second row and clicking the “Add a note” button at the bottom of the Activity Log table.

Selecting “Descriptive Statistics” under the Data menu produced the pop-up window displayed in Fig. 3. Standard deviations and 95% confidence intervals were not computed in this case because each code had only one instance. I also prompted the Video Activity Coder to produce a code stream file, which is also done under the Data menu. Figure 4 displays a segment of this file. This allows me to conduct other analyses, like computing the conditional probability of observing a leopard tackling another leopard given that any leopard in the area was pacing. That probability happened to be \( p_{\text{condit}} = .29 \) and was calculated after creating a crosstabulation table in R (R Core Team, 2020) from the code stream file. Figure 5 shows a portion of the code stream chart that corresponds to the code stream shown in Fig. 4. Despite the simplicity of this workflow example, it should demonstrate the ease of getting started with coding with the Video Activity Coder and creating files that can be analyzed by a variety of statistical software packages.
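The conditional probability described above was computed in R; the following Python sketch shows the same kind of calculation for readers who prefer another language. The two columns are made-up data, not the snow leopard recording, and the function names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple


def crosstab(target: List[int], given: List[int]) -> Dict[Tuple[int, int], int]:
    """Count how often each (given, target) pair of 0/1 values occurs."""
    if len(target) != len(given):
        raise ValueError("columns must have the same length")
    return dict(Counter(zip(given, target)))


def conditional_probability(target: List[int], given: List[int]) -> Optional[float]:
    """Estimate P(target = 1 | given = 1) from two code stream columns.

    Returns None when the conditioning code never occurs.
    """
    table = crosstab(target, given)
    given_total = table.get((1, 0), 0) + table.get((1, 1), 0)
    if given_total == 0:
        return None
    return table.get((1, 1), 0) / given_total


# Made-up columns from a code stream: 1 = code present in that time unit.
pacing = [1, 1, 1, 1, 0, 0, 0, 0]
tackling = [0, 1, 0, 0, 1, 0, 0, 0]
print(crosstab(tackling, pacing))
print(conditional_probability(tackling, pacing))
```

Here tackling co-occurs with pacing in one of the four time units in which pacing is present, so the estimate is .25.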

Conclusions

The Video Activity Coder provides a free, easy-to-use, computerized tool for coding behaviors from video recordings on a variety of operating systems. Users can easily code up to 30 unique states (i.e., behaviors that last several seconds or minutes) or events (i.e., momentary behaviors). If one records events, one must make sure events’ durations are at least 1 s long or else errors will occur in how behavioral streams for export are constructed. Users can save project files and export comma-delimited or tab-delimited versions of the behavioral codes table and of second-by-second streams of codes. The procedures for accomplishing these tasks are relatively straightforward and should take little time for a user to learn. This ease of learning and ease of use make the Video Activity Coder ideal for simple behavioral coding and for quickly training additional coders to help with coding tasks.

There are some limitations to the program to keep in mind. For example, the Video Activity Coder may not be ideal for extensive transcription or note-taking beyond what can be added in the Notes column of the behavioral codes table. Notes can be long, but only a small portion of their contents will be visible in the table. (Downloading the summary table will show notes in their entirety.) Therefore, other programs, like Transana, could be more appropriate for coding conversations. Another limitation is that the software cannot display multiple videos at once, as some programs can. In addition, the Video Activity Coder is useful for continuous recording of states or events, less so for interval coding. A user could use a timer and press keypad buttons on and off to record events that fall within intervals of interest; however, best practices recommend continuous coding over interval coding when using videorecorded data (see Bakeman & Quera, 1995, cited in the Introduction). Lastly, users can conduct only limited analyses within the program, so one would need to export comma-delimited or tab-delimited files to other programs to run many types of statistical analyses. Despite these constraints, the Video Activity Coder offers the basic tools for behavioral coding.

Open practices statement

The files associated with this article are publicly available at https://sourceforge.net/projects/video-activity-coder/.

References

Altmann, J. (1974). Observational study of behavior: Sampling methods. Behaviour, 49, 227–267. https://doi.org/10.1163/156853974X00534

Bakeman, R., & Quera, V. (1995). Analyzing interaction: Sequential analysis with SDIS and GSEQ. Cambridge University Press.

Bakeman, R., & Quera, V. (2011). Sequential analysis and observational methods for the behavioral sciences. Cambridge University Press.

Barto, D., Bird, C. W., Hamilton, D. A., & Fink, B. C. (2017). The Simple Video Coder: A free tool for efficiently coding social video data. Behavior Research Methods, 49, 1563–1568. https://doi.org/10.3758/s13428-016-0787-0

Coco, M. I., & Dale, R. (2014). Cross-recurrence quantification analysis of categorical and continuous time series: An R package. Frontiers in Psychology, 5:510. https://doi.org/10.3389/fpsyg.2014.00510

Datavyu Team (2014). Datavyu: A video coding tool. Databrary Project, New York University. URL http://datavyu.org

ELAN (Version 6.0) [Computer software]. (2020). Nijmegen: Max Planck Institute for Psycholinguistics, The Language Archive. Retrieved from https://archive.mpi.nl/tla/elan

Friard, O., & Gamba, M. (2016). BORIS: A free, versatile open-source event-logging software for video/audio coding and live observations. Methods in Ecology and Evolution, 7, 1325–1330. https://doi.org/10.1111/2041-210X.12584

Hazra, A. (2017). Using the confidence interval confidently. Journal of Thoracic Disease, 9(10), 4125–4130. https://doi.org/10.21037/jtd.2017.09.14

Hintze, J. M., Volpe, R. J., & Shapiro, E. S. (2002). Best practices in the systematic direct observation of student behavior. In A. Thomas & J. Grimes (Eds.), Best practices in school psychology IV (pp. 993–1006). National Association of School Psychologists.

Hutchins, E., Weibel, N., Emmenegger, C., Fouse, A., & Holder, B. (2013). An integrative approach to understanding flight crew activity. Journal of Cognitive Engineering and Decision Making, 7, 353–376. https://doi.org/10.1177/1555343413495547

Jentsch, C., & Reichmann, L. (2019). Generalized binary time series models. Econometrics, 7, 47–72. https://doi.org/10.3390/econometrics7040047

Mangold (2020). INTERACT User Guide. Mangold International GmbH (Ed.). Retrieved from www.mangold-international.com

Marcet Rius, M., Pageat, P., Bienboire-Frosini, C., Teruel, E., Monneret, P., Leclercq, J., Lafont-Lecuelle, C., & Cozzi, A. (2018). Tail and ear movements as possible indicators of emotions in pigs. Applied Animal Behaviour Science, 205, 14–18. https://doi.org/10.1016/j.applanim.2018.05.012

Morrison, R., & Reiss, D. (2018). Precocious development of self-awareness in dolphins. PLoS ONE, 13(1):e0189813. https://doi.org/10.1371/journal.pone.0189813

Pastell, M. (2016). CowLog – Cross-platform application for coding behaviors from video. Journal of Open Research Software, 4, e15. https://doi.org/10.5334/jors.113

Potier, C., Meguerditchian, A., & Fagard, J. (2013). Handedness for bimanual coordinated actions in infants as a function of grip morphology. Laterality, 18, 576–593. https://doi.org/10.1080/1357650X.2012.732077

R Core Team. (2020). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. URL: https://www.R-project.org/.

Transana 3.32 [Computer software]. (2020). Madison, WI: Spurgeon Woods LLC. Available: https://www.transana.com

Wallot, S., & Leonardi, G. (2018). Analyzing multivariate dynamics using cross-recurrence quantification analysis (CRQA), diagonal-cross-recurrence profiles (DCRP), and multidimensional recurrence quantification analysis (MdRQA) – A tutorial in R. Frontiers in Psychology, 9:2232. https://doi.org/10.3389/fpsyg.2018.02232

Zimmerman, P. H., Bolhuis, J. E., Willemsen, A., Meyer, E. S., & Noldus, L. P. J. J. (2009). The Observer XT: A tool for the integration and synchronization of multimodal signals. Behavior Research Methods, 41(3), 731–735. https://doi.org/10.3758/BRM.41.3.731

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Braswell, G.S. An introduction to the Video Activity Coder: Free software for coding videorecorded behaviors. Behav Res 53, 2596–2603 (2021). https://doi.org/10.3758/s13428-021-01608-3

DOI: https://doi.org/10.3758/s13428-021-01608-3