Abstract

Various machine learning techniques exist for regression on temporal data subject to concept drift. However, in many nonstationary environments these techniques may fail to detect or track the changes. This study develops a genetic programming-based predictive model for temporal data with a numerical target that tracks changes in a dataset caused by concept drift. When an environmental change is evident, the proposed algorithm reacts by clustering the data and then inducing nonlinear models that describe the resulting clusters. These nonlinear models become the terminal nodes of genetic programming model trees. Experiments on seven nonstationary datasets suggest that the proposed model achieves high adaptation rates and accuracy under several types of concept drift. Future work will consider strengthening adaptation to concept drift and implementing genetic programming on GPUs to enable fast learning on high-speed temporal data.
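The abstract describes the reaction step only at a high level. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation. It assumes a single input feature, plain k-means for the clustering, a quadratic least-squares fit as the "nonlinear model" for each cluster, and a hypothetical error-ratio trigger (`drift_detected`) for deciding that a change is evident. All function and class names are hypothetical. It also builds one model tree directly from the clusters instead of evolving a population of trees with genetic programming; in the proposed method, GP would search over model trees whose terminal nodes are such cluster models.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x, y): a single input feature and a numeric target


class Node:
    """Model-tree node: a terminal wrapping a fitted model, or a threshold split on x."""

    def __init__(self, model: Optional[Callable[[float], float]] = None,
                 threshold: Optional[float] = None,
                 left: Optional["Node"] = None, right: Optional["Node"] = None):
        self.model, self.threshold, self.left, self.right = model, threshold, left, right

    def predict(self, x: float) -> float:
        if self.model is not None:  # terminal node: evaluate the cluster's model
            return self.model(x)
        branch = self.left if x <= self.threshold else self.right
        return branch.predict(x)


def kmeans_1d(xs: Sequence[float], k: int, iters: int = 20, seed: int = 0) -> List[int]:
    """Plain Lloyd's k-means on one feature; returns a cluster label per point."""
    rng = random.Random(seed)
    centers = rng.sample(list(xs), k)
    labels = [0] * len(xs)
    for _ in range(iters):
        labels = [min(range(k), key=lambda c: (x - centers[c]) ** 2) for x in xs]
        for c in range(k):
            members = [x for x, lab in zip(xs, labels) if lab == c]
            if members:
                centers[c] = sum(members) / len(members)
    return labels


def fit_quadratic(points: Sequence[Point]) -> Callable[[float], float]:
    """Least-squares fit of y = a + b*u + c*u**2 with u = x - mean(x), via normal equations."""
    mu = sum(x for x, _ in points) / len(points)
    m = [[0.0] * 4 for _ in range(3)]  # augmented matrix [A^T A | A^T y]
    for x, y in points:
        u = x - mu  # centering keeps the 3x3 system well conditioned
        row = (1.0, u, u * u)
        for i in range(3):
            for j in range(3):
                m[i][j] += row[i] * row[j]
            m[i][3] += row[i] * y
    for i in range(3):
        m[i][i] += 1e-9  # tiny ridge term keeps the matrix nonsingular for small clusters
    for col in range(3):  # Gauss-Jordan elimination with partial pivoting
        piv = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(3):
            if r != col:
                f = m[r][col] / m[col][col]
                for j in range(col, 4):
                    m[r][j] -= f * m[col][j]
    a, b, c = (m[i][3] / m[i][i] for i in range(3))
    return lambda x: a + b * (x - mu) + c * (x - mu) ** 2


def build_model_tree(points: Sequence[Point], k: int = 2, seed: int = 0) -> Node:
    """Cluster the window, fit one nonlinear model per cluster, and use the
    models as terminal nodes of a model tree (built directly, not evolved)."""
    labels = kmeans_1d([x for x, _ in points], k, seed=seed)
    groups = [[p for p, lab in zip(points, labels) if lab == c] for c in range(k)]
    # In 1-D, nearest-center clusters are intervals, so ordering by smallest x is well defined
    groups = sorted((g for g in groups if g), key=lambda g: min(x for x, _ in g))
    leaves = [Node(model=fit_quadratic(g)) for g in groups]
    tree = leaves[-1]
    for i in range(len(groups) - 2, -1, -1):  # chain splits from right to left
        boundary = (max(x for x, _ in groups[i]) + min(x for x, _ in groups[i + 1])) / 2
        tree = Node(threshold=boundary, left=leaves[i], right=tree)
    return tree


def mse(tree: Node, points: Sequence[Point]) -> float:
    return sum((tree.predict(x) - y) ** 2 for x, y in points) / len(points)


def drift_detected(tree: Node, old: Sequence[Point], new: Sequence[Point],
                   factor: float = 2.0) -> bool:
    """Hypothetical trigger: flag drift when error on the new window exceeds factor x the old error."""
    return mse(tree, new) > factor * mse(tree, old) + 1e-12


if __name__ == "__main__":
    old = [(i / 10, (i / 10) ** 2) for i in range(10)] + \
          [(5 + i / 10, 10 - (5 + i / 10)) for i in range(10)]
    tree = build_model_tree(old)
    new = old[:10] + [(x, 2 * x) for x, _ in old[10:]]  # second regime: y = 10 - x becomes y = 2x
    if drift_detected(tree, old, new):
        tree = build_model_tree(new)  # rebuild the terminals from the drifted window
    print(round(tree.predict(5.5), 3))
```

Centering x before the quadratic fit keeps the normal equations well conditioned even for narrow clusters. A practical version would likely handle multivariate inputs and delegate clustering and fitting to a numerical library.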


References

A. Tsymbal, The problem of concept drift: definitions and related work. Comput. Sci. Dep, Trinity Coll Dublin 106(2), 58 (2004)

T. Mitsa, Temporal Data Mining, Chapman & Hall/CRC Data Mining and Knowledge Discovery Series (2010)

J. Brownlee, A gentle introduction to concept drift in machine learning. Mach. Learn. Mastery (2018)

L. Khan, W. Fan, In international conference on database systems for advanced applications, in Tutorial: Data Stream Mining and its Applications (Springer, Berlin, Heidelberg, 2012), pp. 328–329

E. Lughofer, On-line active learning: a new paradigm to improve practical useability of datastream modeling methods. Inf. Sci. 415, 356–376 (2017)

Z. Zhang, J. Zhou, Transfer estimation of evolving class priors in data stream classification. Pattern Recogn. 43(9), 3151–3161 (2010)

J. Gama, I. Žliobaite, A. Bifet, M. Pechenizkiy, A. Bouchachia, A survey on concept drift adaptation. ACM Comput. Surv. 46(4), 44:1-44:37 (2014)

R. Elwell, R. Polikar, Incremental learning of concept drift in nonstationary environments. IEEE Trans. Neural Netw. 22(10), 1517–1531 (2011)

C. Alippi, G. Boracchi, M. Roveri, Just in time classifiers: managing the slow drift case, in Proc. Int. Joint Conf. Neural Networks (2009), pp. 114–120

L. Torrey, J. Shavlik, Transfer Learning, in Handbook of Research on Machine Learning Applications. ed. by J.M.R.M.M.M.A.A.S.E. Soria (IGI Global, 2009)

J.C. Schlimmer, R.H. Granger, Incremental learning from noisy data. Mach. Learn. 1(3), 317–354 (1986)

G. Ditzler, M. Roveri, C. Alippi, Learning in nonstationary environments: a survey. IEEE Comput. Intell. Mag. 10(4), 12–25 (2015)

S. Delany, P. Cunningham, A. Tsymbal, L. Coyle, A case-based technique for tracking concept drift in spam filtering. Knowl. Based Syst 18(4–5), 187–195 (2005)

C. Alippi, Intelligence for Embedded Systems (Springer, Berlin, 2014).

J. Sarnelle, A. Sanchez, R. Capo, J. Haas, R. Polikar, Quantifying the limited and gradual concept drift assumption, in 2015 International Joint Conference on Neural Networks (IJCNN) (IEEE, 2015), pp. 1–8

L.I. Kuncheva, Classifier ensembles for changing environments, in Proc. 5th Int Workshop of Multiple Classifier Systems (2004), pp. 1–15

G. Brown, J.L. Wyatt, P. Tino, Managing diversity in regression ensembles. J. Mach. Learn. Res. 6, 1621–1650 (2005)

M. Basseville, I.V. Nikiforov, Detection of Abrupt Changes: Theory and Application, vol. 104 (Prentice-Hall, Englewood Cliffs, 1993).

A. Tsymbal, M. Pechenizkiy, P. Cunningham, S. Puuronen, Dynamic integration of classifiers for handling concept drift. Inform. Fusion 9(1), 56–68 (2008)

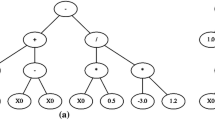

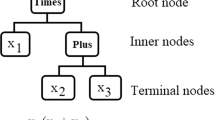

J.R. Koza, Genetic programming: a paradigm for genetically breeding populations of computer programs to solve problems. Stanford University Computer Science Department Technical Report STAN-CS-90-1314 (1990)

S. Massimo, A. Tettamanzi, Genetic programming for financial time series prediction, in Genetic Programming (Springer, 2001), pp. 361–370

M. Kľúčik, J. Juriova, M. Kľúčik, Time series modeling with genetic programming relative to ARIMA models, in Conferences on New Techniques and Technologies for Statistics (2009), pp. 17–27

P.G. Espejo, S. Ventura, F. Herrera, A Survey on the Application of Genetic Programming to Classification. IEEE Trans. Syst., Man, Cybern., Part C, Appl. Rev. 40(2), 121–144 (2010)

K. Nag, N. Pal, A Multiobjective genetic programming-based ensemble for simultaneous feature selection and classification. IEEE Trans. Cybern. 46(2), 499–510 (2016)

L. Vanneschi, G. Cuccu, A study of genetic programming variable population size for dynamic optimization problems, in IJCCI (2009), pp. 119–126

Z. Yin, A. Brabazon, C. O’Sullivan, M. O’Neill, Genetic programming for dynamic environments, in 2nd international symposium advances in artificial intelligence and applications, vol. 2, pp. 437–446

M. Rieket, K. M. Malan, and A. P. Engelbrecht, Adaptive genetic programming for dynamic classification problems, in 2009 IEEE congress on evolutionary computation (2009), pp. 674–681

N. Wagner, Z. Michalewicz, M. Khouja, R. McGregor, Time series forecasting for dynamic environments: the DyFor genetic program model. IEEE Trans. Evol. Comput. 11(4), 433–452 (2007)

S. Kelly, J. Newsted, W. Banzhaf, C. Gondro, A modular memory framework for time series prediction, in Proceedings of the 2020 Genetic and Evolutionary Computation Conference (2020), pp. 949–957

A.J. Turner, J.F. Miller, Recurrent cartesian genetic programming of artificial neural networks. Genet. Progr. Evol. Mach. 18(2), 185–212 (2017)

N.R. Draper, H. Smith, Applied Regression Analysis, vol. 326 (Wiley, New York, 1998).

T. Hastie, R. Tibshirani, J. Friedman, The Elements of Statistical Learning: Data Mining, Inference, and Prediction, Springer Series in Statistics, 2nd edn. (Springer, 2009).

J.B. Fraleigh, R.A. Beauregard, Linear Algebra, 3rd edn. (Addison-Wesley Publishing Company, Upper Saddle River, 1995).

S.M. Stigler, Gauss and the invention of least squares. Ann. Stat. 9(3), 465–474 (1981)

A. Kordon, Future trends in soft computing industrial applications, in Proceedings of the 2006 IEEE Congress on Evolutionary Computation (2006), pp. 7854–7861

E. Alfaro-Cid, A.I. Esparcia-Alcázar, P. Moya, B. Femenia-Ferrer, K. Sharman, J.J. Merelo, Modeling pheromone dispensers using genetic programming, in Lecture Notes in Computer Science, vol 5484 (Springer, Berlin/Heidelberg, 2008), pp. 635–644

D.P. Searson, D.E. Leahy, M.J. Willis, GPTIPS: an open-source genetic programming toolbox for multigene symbolic regression, in Proceedings of the International Multiconference of Engineers and Computer Scientists, vol 1 (Citeseer, 2010), pp. 77–80

N.Q. Uy, N.X. Hoai, M. O’Neill, R.I. McKay, E. Galván-López, Semantically-based crossover in genetic programming: application to real-valued symbolic regression. Genet. Progr. Evol. Mach. 12(2), 91–119 (2011)

K. Georgieva, A.P. Engelbrecht, dynamic differential evolution algorithm for clustering temporal data. Large Scale Sci. Comput., Lect. Notes Comput. Sci. 8353, 240–247 (2014)

C. Kuranga, Genetic programming approach for nonstationary data analytics. Ph.D Thesis, University of Pretoria, Pretoria, South Africa (2020)

R. Poli, W.B. Langdon, N.F. McPhee, A field guide to genetic programming. Lulu Enterprise, UK Ltd, http://lulu.com (2008)

L. Vanneschi, R. Poli, Genetic programming: introduction, application, theory and open issues, in Handbook of Natural Computing: Theory, Experiments and Applications. ed. by T.B.A.J.K. Grzegorz Rosenberg (Springer, Berlin, 2010)

W. Banzhaf, P. Nordin, R.E. Keller, F.D. Francone, Genetic Programming: An Introduction, vol. 1 (Morgan Kaufmann, San Francisco, 1998).

A. Canoa, B. Krawczyk, Evolving rule-based classifiers with genetic programming on GPUs for drifting data streams. Pattern Recogn. 87, 248–268 (2019)

A. Soundarrajan, S. Sumathi, G. Sivamurugan, Voltage and frequency control in power generating system using hybrid evolutionary algorithms. J. Vib. Control 18(2), 214–227 (2012)

MATLAB, version 8.5.0 (R2015a) (The MathWorks Inc., Natick, MA, 2015)

R.W. Morrison, Performance measure in dynamic environments, in GECCO Workshop on Evolutionary Algorithms for Dynamic Optimization Problems, No. 5–8 (2003)

R.W. Morrison, K.A. De Jong, A test problem generator for non-stationary environments, in Proc. of the 1999 Congr. on Evol. Comput. (1999), pp. 2047–2053

J. Branke, Memory enhanced evolutionary algorithms for changing optimization problems, in Proc. of the 1999 Congr. on Evol. Comput. (1999), pp. 1875–1882

Y. Jin, B. Sendhoff, Constructing dynamic optimization test problems using the multiobjective optimization concept. EvoWorkshop 2004 LNCS 3005, 526–536 (2004)

C. Li, M. Yang, L. Kang, A new approach to solving dynamic TSP, in Proc of the 6th Int. Conf. on Simulated Evolution and Learning (2006), pp. 236–243

C. Li, S. Yang, A generalized approach to construct benchmark problems for dynamic optimization, in Proc. of the 7th Int. Conf. on Simulated Evolution and Learning (Springer, Berlin, Heidelberg, 2008), pp. 391–400.

L. Zhang, J. Lin, R. Karim, Sliding window-based fault detection from high-dimensional data streams. IEEE Trans. Syst., Man, Cybern.: Syst. 47(2), 289–303 (2017)

A.S. Rakitianskaia, A.P. Engelbrecht, Training Feedforward Neural Network with Dynamic Particle Swarm Optimisation (Computer Science Department, University of Pretoria, 2011).

L. Bennett, L. Swartzendruber, H. Brown, Superconductivity Magnetization Modeling (National Institute of Standards and Technology (NIST), US Department of Commerce, USA, 1994).

V. Cherkassky, D. Gehring, F. Mulier, Comparison of adaptive methods for function estimation from samples. IEEE Trans. Neural Netw. 7(4), 969–984 (1996)

M. Harries, Splice-2 comparative evaluation: electricity pricing. Technical Report UNSW-CSE-TR-9905, Artificial Intelligence Group, School of Computer Science and Engineering, The University of New South Wales, Sydney 2052, Australia (1999)

R.J. Shiller, Stock Market Data Used in Irrational Exuberance (Princeton University Press, 2005).

J. Kitchen, R. Monaco, Real-time forecasting in practice. Bus. Econ.: J. Natl. Assoc. Bus. Econ. 38, 10–19 (2003)

M. López-Ibáñez, J. Dubois-Lacoste, L. Pérez-Cáceres, T. Stützle, M. Birattari, The irace package: Iterated racing for automatic algorithm configuration. Oper. Res. Perspect. 3, 43–58 (2016)

X. Qiu, P.N. Suganthan, G.A. Amaratunga, Ensemble incremental learning random vector functional link network for short-term electric load forecasting. Knowl.-Based Syst. 145, 182–196 (2018)

J. Che, J. Wang, Short-term electricity prices forecasting based on support vector regression and auto-regressive integrated moving average modeling. Energy Convers. Manage. 51(10), 1911–1917 (2010)

Acknowledgements

The authors would like to thank the reviewers for their valuable comments, which greatly improved the structure of this work.


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Area Editor: Una-May O'Reilly.


About this article

Cite this article

Kuranga, C., Pillay, N. Genetic programming-based regression for temporal data. Genet Program Evolvable Mach 22, 297–324 (2021). https://doi.org/10.1007/s10710-021-09404-w


DOI: https://doi.org/10.1007/s10710-021-09404-w