Abstract

We study the existence, optimality, and construction of non-randomised stopping times that solve the Skorokhod embedding problem (SEP) for Markov processes which satisfy a duality assumption. These stopping times are hitting times of space-time subsets, so-called Root barriers. Our main result is, besides the existence and optimality, a potential-theoretic characterisation of this Root barrier as a free boundary. If the generator of the Markov process is sufficiently regular, this reduces to an obstacle PDE that has the Root barrier as free boundary and thereby generalises previous results from one-dimensional diffusions to Markov processes. However, our characterisation always applies and allows one, at least in principle, to compute the Root barrier by dynamic programming, even when the well-posedness of the informally associated obstacle PDE is not clear. Finally, we demonstrate the flexibility of our method by replacing time by an additive functional in Root’s construction. Already for multi-dimensional Brownian motion this leads to a new class of constructive solutions of (SEP).

1 Introduction

We study the Skorokhod embedding problem for Markov processes \(X=(X_t)_{t\ge 0}\) evolving in a locally compact space E. That is, given measures \(\mu \) and \(\nu \) on E, the task is to find a stopping time T such that

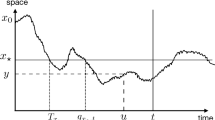

Throughout this article we are interested in non-randomised stopping times, that is, T is a stopping time in the filtration generated by X. When X is a one-dimensional Brownian motion, this problem has received much attention, partly due to its importance in mathematical finance [42]. In this case, there exists a wealth of different stopping times that solve SEP\((X, \mu , \nu )\), see [51] for an overview. One of the most intuitive solutions is due to Root [57]: for a one-dimensional Brownian motion and \(\mu \), \(\nu \) in convex order, there exists a space-time subset – the so-called Root barrier – such that its hitting time by \((t,X_t)\) solves SEP\((X, \mu , \nu )\). More recently, connections with obstacle PDEs [23, 28, 33, 34, 38], optimal transport [3, 5, 6, 7, 20, 37, 39, 40], and optimal stopping [23, 25], as well as extensions to the multi-marginal case [4, 22, 56], have been developed.

However, already for multi-dimensional Brownian motion much less is known about solutions to SEP\((X, \mu , \nu )\), see for example work of Falkner [30] that highlights some of the difficulties that arise in the multi-dimensional Brownian case. For general Markov processes the literature gets even sparser: Rost [58, 59] developed a potential theoretic approach to previous work of Root, but in general this shows only the existence of a randomised stopping time for SEP\((X, \mu , \nu )\) when \(\mu \) and \(\nu \) are in balayage order. Subsequent works of Chacon, Falkner, and Fitzsimmons, [18, 30, 32], expand on these results and provide sufficient conditions for the existence of a non-randomised stopping time; however, in none of these works the question of how to compute these stopping times \(T(\omega )\) for a given sample trajectory \(X(\omega )\) is addressed. Another approach is the application of optimal transport to SEP\((X, \mu , \nu )\) as initiated by Beiglböck, Cox, Huesmann [3]. This covers Feller processes but verifying the assumptions can be non-trivial. More importantly, the optimal transport approach currently only addresses the existence and optimality of a stopping time but not its computation. Besides these two approaches – (Rost’s) potential theoretic approach and the optimal transport approach – we are not aware of a general methodology that produces solutions to SEP\((X, \mu , \nu )\) for Markov processes.

Contribution. We focus on the large class of right-continuous transient standard Markov processes satisfying a duality assumption and absolute continuity of the semigroup. Our main result is Theorem 3.6, which extends Rost’s results and shows existence of a non-randomised Root stopping time and, more importantly, represents the Root barrier as a free boundary via the semigroup of the dual space-time process. This allows one to apply classical dynamic programming to calculate the Root barrier for a large class of Markov processes. Theorem 3.6 also implies that if a PDE theory is available that ensures the well-posedness of the free boundary problem formulated as a PDE problem, then numerical methods for PDEs can be used to compute the barrier. However, in general this requires much stronger assumptions on the Markov process; for example, when the generator involves non-local terms, as is already the case for one-dimensional Lévy processes, the well-posedness of such PDEs is an active research area.

We present a series of examples of processes to which our result applies. The most important one is arguably multi-dimensional Brownian motion (or more generally, hypoelliptic diffusions), but we also discuss stable Lévy processes and Markov chains on a discrete state space. In all these cases our result allows one to compute the Root barrier, and we present several numerical experiments to illustrate this point.

Finally, we show that our approach is flexible enough to construct new classes of solutions to the Skorokhod embedding problem: instead of hitting times of the space-time process \((t,X_t)\), we discuss hitting times of \((A_t,X_t )\) where A is an additive functional of X of the form \(\int _0^\cdot a(X_s) \,\mathrm {d}s\). We expect that such an approach holds in much greater generality for other functionals and leave this for further research.

Outline. The structure of the article is as follows: Sect. 2 introduces notation and basic results from potential theory, Sect. 3 contains the statement of our main result and Sect. 4 contains its proof. Section 5 then applies this to concrete examples of Markov processes and computations of Root barriers. Section 6 discusses how these results can be used to construct new solutions of SEP\((X, \mu , \nu )\). In Appendix A and Appendix B we present fundamental results from classical potential theory used throughout this article and in Appendix C we discuss details around applying our result to Brownian motion in a Lie group.

2 Notations and assumptions

We briefly recall notations from potential theory, mostly following the presentation in Blumenthal and Getoor [12]. A detailed description can be found in “Appendix A”. Throughout, E is a locally compact metric space with countable base and \({\mathcal {E}}\) is the Borel-\(\sigma \)-algebra on E. In addition, we write \(\mathcal {E}^*\) for the \(\sigma \)-algebra of universally measurable sets and \(\mathcal {E}^n\) for the \(\sigma \)-algebra of nearly Borel sets, see Definition A.1.

Let \(\left( \Omega , {\mathcal {F}}, ({\mathcal {F}}_t)_{t \ge 0}, (X_t)_{t\ge 0}, ({\mathbb {P}}^x)_{x \in E}\right) \) denote a filtered probability space that carries a stochastic process X. To allow for killing we add an absorbing cemetery state \(\Delta \) to the state space, that is we define \(E_\Delta := E \cup \{\Delta \}\) and for all \(t\ge 0\) if \(X_t(\omega )=\Delta \), then \(X_s(\omega )=\Delta \) for all \(s>t\). Denote with \(\zeta := \inf \{t \ge 0:~ X_t =\Delta \}\) the lifetime of the process. Each \({\mathbb {P}}^x\) is then a probability measure on paths with \(X_0 = x\), \({\mathbb {P}}^x\)-a.s. for all \(x\in E_\Delta \). Furthermore, for \(t\ge 0\) let \(\theta _t\) be the natural shift operator of the process, i.e. \(\theta _t(X_s(\omega ))=X_{t+s}(\omega )\) for all \(s \ge 0\). Throughout, we assume that X is a standard process, see Definition A.2; in particular we assume that X has càdlàg paths and satisfies the strong Markov property. We write \(P= (P_t)_{t\ge 0}\) for the Markovian transition semigroup of X and \(U = \int _0^\infty P_t\,\mathrm {d}t\) for its potential and write as usual \(P_tf\), \(\mu P_t\), Uf and \(\mu U\) for the actions on Borel functions \(f:E\rightarrow {\mathbb {R}}\) and Borel measures \(\mu \) on E. For an \(({\mathcal {F}}_t)_{t\ge 0}\)-stopping time T, we write \(P_T(x,\,\mathrm {d}y)={\mathbb {P}}^x\left( X_T\in \,\mathrm {d}y; T <\zeta \right) \) and for first hitting times \(T_A =\inf \{t>0:\,X_t\in A\}\) of \(A\in \mathcal {E}\), we write \(P_A= P_{T_A}\). We write \(A^r\) for the regular points of a nearly Borel set A, see Table 3 in “Appendix A”.

A central role will be played by lifting X to a space-time process \({\overline{X}}\), that is \({\overline{X}}_t : = (\tau _t,X_t)\) with \(\tau _t=\tau _0+t\), with the space-time semigroup \(Q=(Q_t)_{t\ge 0}\) acting on Borel functions \(g:{\mathbb {R}}\times E\rightarrow {\mathbb {R}}\) and Borel measures \(\mu \) on E as follows:

where \(t\in {\mathbb {R}}\), \(x\in E\), \(A\in \mathcal {E}\) and \(I\subseteq {\mathbb {R}}\).

Duality. Throughout this paper we make the following assumption,

Assumption 2.1

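There exists a standard process \(\widehat{X}\) with semigroup \(\widehat{P}\) on the same probability space, and some \(\sigma \)-finite measure \(\xi \) on E such that for all \(t \ge 0\) and \(f,g \ge 0\) \(\mathcal {E}^*\)-measurable,

$$\begin{aligned} \int _E f(x)\, P_t g(x)\, \xi (\mathrm {d}x) = \int _E f\widehat{P}_t(x)\, g(x)\, \xi (\mathrm {d}x). \end{aligned}$$ (2.1)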

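Furthermore, the semigroups of X and \(\widehat{X}\) are absolutely continuous with respect to \(\xi \),

$$\begin{aligned} P_t(x,\cdot ) \ll \xi \quad \text{and} \quad \widehat{P}_t(\cdot ,x) \ll \xi \quad \text{for all } t>0,\ x \in E. \end{aligned}$$ (2.2)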

Remark 2.2

Relation (2.1) is referred to in the literature as weak duality. The processes X and \(\widehat{X}\) are said to be in strong duality with respect to \(\xi \) (as defined in [12, Ch. VI] or [19, Ch.13]), if, in addition to (2.1), the resolvent kernels are absolutely continuous with respect to \(\xi \). This is weaker than the absolute continuity of the semigroup, so that in particular, strong duality of X and \(\widehat{X}\) holds under Assumption 2.1.

We write \(\widehat{P}\) and \(\widehat{U}\) for the semigroup and potential kernel of \(\widehat{X}\), and we denote the actions of these operators on Borel functions f and measures \(\mu \) on the other side as for X, i.e. \(f\widehat{P}_t\), \(f\widehat{U}\) and \(\widehat{U} \mu \). Furthermore, we use the prefix “co” for the corresponding properties relating to \(\widehat{X}\), e.g. coexcessive, copolar, cothin, etc., and we write \(\widehat{T}_A = \inf \{t>0:\,\widehat{X}_t\in A\}\) and \({}^rA\) for the coregular points of a measurable set A.

By [36, 65], absolute continuity of the semigroups implies that the corresponding space-time processes \((\tau _t,X_t)\), and \((\widehat{\tau }_t, \widehat{X}_t)\), where \(\widehat{\tau }_t= \widehat{\tau }_0-t\), are in strong duality with respect to the measure \(\lambda \otimes \xi \), where \(\lambda \) is the Lebesgue measure on the real line. We denote by \(\widehat{Q}\) the semigroup corresponding to the space-time process \((\widehat{\tau }_t, \widehat{X}_t)\). For every \(s\ge 0\) and \((\mathcal {B}({\mathbb {R}})\times \mathcal {E})\)-\(\mathcal {B}({\mathbb {R}})\)-measurable function g,

In addition, there exists a Borel function \((t,x,y) \mapsto p_t(x,y)\) such that for all \(t>0\) and x, y in E, \(P_t(x,\,\mathrm {d}y) = p_t(x,y) \xi (\mathrm {d}y)\) and \(\widehat{P}_t(\mathrm {d}x,y) = p_t(x,y)\xi (\mathrm {d}x)\), and p satisfies the Kolmogorov–Chapman relation

$$\begin{aligned} p_{t+s}(x,y) = \int _E p_t(x,z)\, p_s(z,y)\, \xi (\mathrm {d}z) \quad \text{for all } s,t>0. \end{aligned}$$ (2.3)

The function \(u(x,y) := \int _0^\infty p_t(x,y)\,\mathrm {d}t\) is excessive in x (for each fixed y), coexcessive in y, and is a density for U and \(\widehat{U}\).

Note that the duality assumption implies by [12, Ch. IV, Prop. (1.11)] that a measure \(\mu \) is excessive if and only if it has a density which is coexcessive and finite \(\xi \)-almost everywhere. Hence, the density of the potential \(\mu U\) with respect to \(\xi \) is given by the (coexcessive) potential function \(\mu \widehat{U}\).

Remark 2.3

The functions which are (co-)excessive with respect to P, \(\widehat{P}\), Q and \(\widehat{Q}\) are actually Borel-measurable. Indeed, strong duality of the corresponding processes guarantees the existence of a so-called reference measure (for more details see [12, Ch. VI]). In this case Proposition (1.3) in [12, Ch. V] implies that excessive functions are Borel-measurable.

We repeatedly use the following classical result,

Proposition 2.4

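(Hunt’s switching formula, [12, VI.1.16]) Let X, \(\widehat{X}\) be standard processes in strong duality. Then for all Borel-measurable B, one has \(P_B u = u \widehat{P}_B\), i.e. for all \(x, y \in E\),

$$\begin{aligned} \int _E P_B(x,\mathrm {d}z)\, u(z,y) = \int _E u(x,z)\, \widehat{P}_B(\mathrm {d}z,y). \end{aligned}$$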

Remark 2.5

The dual process \(\widehat{X}\) can be thought of as X running backwards in time. In fact, strong duality implies that for non-negative bounded Borel functions f and g it holds

More generally and ignoring technicalities (see [19, Ch.13] for details), if we take \(\Omega \) to be the canonical probability space and let \(r_t: \Omega \rightarrow \Omega \) denote the right-continuous time reversal at time t, that is \(\omega ':=r_t(\omega )\) is given as \(\omega '(s):=\omega (t-s-)\), then for any F that is \({\mathcal {F}}_t\)-measurable

Informally, strong duality of X with another standard process requires that two conditions are met: (i) X admits an excessive reference measure, (ii) the right-continuous version of its time reversal is a standard process and in particular satisfies the strong Markov property. We refer to [19, Ch. 15] and [62] for a detailed discussion.

Remark 2.6

A practical approach to obtain Markov processes in duality is via Dirichlet forms. Given a Markov process with generator \(\mathcal {L}\), this consists in considering the bilinear form

extended to a suitable class of functions f, g. The theory of Dirichlet forms, see e.g. [49], then provides sufficient analytic criteria on \(\mathcal {D}\) so that it is associated to a pair of (standard) Markov processes in weak duality with respect to \(\xi \). It is also possible to obtain existence (and further properties) of transition densities for a Markov semigroup by considering functional inequalities (such as Nash inequality) satisfied by the associated Dirichlet form, see e.g. [17].

3 A free boundary characterisation

Definition 3.1

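(Root barrier) A subset R of \({\mathbb {R}}_+ \times E\) is called a Root barrier for X if R is nearly Borel-measurable with respect to the space-time process \({\overline{X}}\) and

$$\begin{aligned} (t,x) \in R \quad \Longrightarrow \quad (t+s,x) \in R \quad \text{for all } s \ge 0. \end{aligned}$$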

We call the first hitting time \(T_R = \inf \{t > 0 : (t, X_t)\in R\}\) the Root stopping time associated with R.
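As an illustration of the Root stopping time on sampled data, the following minimal Python sketch scans a time-discretised path and returns the first sampled time \(t>0\) at which \((t,X_t)\) lies in a barrier. The function name `root_hitting_time`, the barrier `in_barrier` and the sample path are hypothetical and chosen only for illustration; on a time grid the result merely approximates the continuous-time hitting time.

```python
def root_hitting_time(path, in_barrier):
    """Return the first sampled time t > 0 with (t, x) in the barrier, else None."""
    for t, x in path:
        # The infimum in the definition runs over t > 0, so time 0 is skipped.
        if t > 0 and in_barrier(t, x):
            return t
    return None


def in_barrier(t, x):
    # Hypothetical barrier with sections {x : |x| >= 3 - t}, which grow with t,
    # so that (t, x) in the barrier implies (t + s, x) in the barrier for s >= 0.
    return abs(x) >= 3 - t


# Hypothetical sample path given as (time, position) pairs.
path = [(0, 0), (1, 1), (2, 0), (3, 1), (4, 2)]
first_hit = root_hitting_time(path, in_barrier)
```

A path that starts inside the barrier is not stopped at time 0 by this function, which mirrors the strict inequality \(t>0\) in the definition of \(T_R\).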

Dealing with the regularity of R is a central theme of this article and it is useful to introduce “left-” and “right-”continuous modifications \(R^-\) and \(R^+\) of R.

Definition 3.2

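For a Root barrier R denote with

$$\begin{aligned} R_t := \{ x \in E :\ (t,x) \in R \} \end{aligned}$$

the section at time t. We define \(R^- \subset R \subset R^+\) as

$$\begin{aligned} R^- := \{ (t,x) :\ x \in R_{t-} \}, \quad R^+ := \{ (t,x) :\ x \in R_{t+} \}, \quad \text{where } R_{t-} := \bigcup _{s<t} R_s, \quad R_{t+} := \bigcap _{s>t} R_s. \end{aligned}$$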

Remark 3.3

An equivalent definition of the barrier is that the mapping \(t \mapsto R_t\) is non-decreasing. This also implies that \(R^-\) and \(R^+\) are barriers as well. As R is nearly Borel-measurable with respect to \(\overline{X}\) then so are the shifted barriers \(R^{s} := \{ (t-s,x) ,\;\; (t,x) \in R\}\) for any \(s \in {\mathbb {R}}\), as then

Definition 3.4

(Balayage order) Two probability measures \(\mu \) and \(\nu \) are in balayage order, if their potentials \(\mu U\) and \(\nu U\) satisfy

$$\begin{aligned} \mu U \ge \nu U. \end{aligned}$$ (3.1)

In this case we will write \(\mu \prec \nu \) and say that \(\mu \) is before \(\nu \).

Remark 3.5

Under Assumption 2.1, (3.1) is equivalent to

$$\begin{aligned} \mu \widehat{U} \ge \nu \widehat{U}. \end{aligned}$$ (3.2)

The inequality (3.2) holds everywhere if and only if it holds \(\xi \)-almost everywhere, since both sides are coexcessive functions.
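The pointwise comparison of potential functions can be checked by hand in a toy example. The sketch below is only an illustration under stated assumptions: it takes the unit-rate continuous-time simple random walk on \(\{1,\dots ,N-1\}\) killed at 0 and N, which is symmetric and hence self-dual with respect to counting measure, and uses the closed form \(u(x,y) = 2\min (x,y)(N-\max (x,y))/N\) of its potential density. The names `green`, `potential` and `in_balayage_order` and the measures `mu`, `nu` are hypothetical.

```python
from fractions import Fraction

N = 8  # toy state space {1, ..., N-1}; the walk is killed at 0 and N


def green(x, y):
    """Potential density u(x, y) of the killed walk (closed form for this toy chain)."""
    return Fraction(2 * min(x, y) * (N - max(x, y)), N)


def potential(measure):
    """Potential function y -> sum_x measure(x) u(x, y) of a measure given as a dict."""
    return {y: sum(w * green(x, y) for x, w in measure.items()) for y in range(1, N)}


def in_balayage_order(mu, nu):
    """Check that the potential function of mu dominates that of nu pointwise."""
    mu_pot, nu_pot = potential(mu), potential(nu)
    return all(mu_pot[y] >= nu_pot[y] for y in range(1, N))


mu = {4: Fraction(1)}                                            # Dirac mass at 4
nu = {2: Fraction(1, 4), 4: Fraction(1, 2), 6: Fraction(1, 4)}   # spread-out target
print(in_balayage_order(mu, nu))  # mu is before nu
print(in_balayage_order(nu, mu))  # the converse comparison fails at y = 4
```

Exact rational arithmetic is used so that the comparison is not affected by rounding.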

We now state our main result,

Theorem 3.6

Let X be a Markov process for which Assumption 2.1 holds. Let \(\mu , \nu \) be two measures such that \(\mu U\) and \(\nu U\) are \(\sigma \)-finite measures and such that \(\nu \) charges no semipolar set. If \(\mu \prec \nu \) then there exists a Root barrier R for X such that

$$\begin{aligned} \mu P_{T_R} = \nu . \end{aligned}$$

Moreover, if we set

then

(1) \(f^{\mu ,\nu }(t,x) = \mu P_{t \wedge T_R} \widehat{U}(x)\),

(2) \( T_R = \mathop {\text {arg min}}\limits _{S:~ \mu P_S = \nu } \mu P_{t\wedge S} U(B)\) for any Borel set B and \(t\ge 0\),

(3) in the above we may take

$$\begin{aligned} R = \left\{ (t,x)\in {\mathbb {R}}_+ \times E\;\; |\;\; f^{\mu ,\nu }(t,x) = \nu \widehat{U} (x) \right\} . \end{aligned}$$

Besides existence and optimality of a Root stopping time, the main interest of Theorem 3.6 is that item (3) provides a way to compute the Root barrier for a large class of Markov processes ranging from Lévy processes to hypo-elliptic diffusions, see the examples in Sect. 5. Concretely, it allows one to use classical optimal stopping and the dynamic programming algorithm to compute \(f^{\mu ,\nu }\) and hence R. We state this as a corollary:

Corollary 3.7

Using the same notation and assumptions as in Theorem 3.6 it holds that

(1) \(f^{\mu ,\nu }\) is the value function of the optimal stopping problem

$$\begin{aligned} f^{\mu ,\nu }(t,x) = \sup _{\tau } {\mathbb {E}}^x \left[ \mu \widehat{U}\left( \widehat{X}_\tau \right) \mathbb {1}_{\{\tau =t\}} + \nu \widehat{U}\left( \widehat{X}_\tau \right) \mathbb {1}_{\{\tau <t\}} \right] \quad \forall t \ge 0, x \in E, \end{aligned}$$ (3.4)

where the supremum is taken over stopping times \(\tau \) taking values in [0, t].

(2) If we define for \(n \ge 0\) the function \(f_n^{\mu ,\nu }\) on \(\{ k2^{-n},~ k \ge 0\} \times E\) by

$$\begin{aligned} f_n^{\mu ,\nu }(0,\cdot ) = \mu \widehat{U}, \;\;\;\;f_n^{\mu ,\nu }(2^{-n}(k+1),\cdot ) = \max \left\{ \left( f^{\mu ,\nu }_n(2^{-n}k,\cdot ) \right) \widehat{P}_{2^{-n}} ,\nu \widehat{U}\right\} , \end{aligned}$$

then for each \(t\ge 0\), \(x \in E\),

$$\begin{aligned}f^{\mu ,\nu }(t,x) = \lim _{n \rightarrow \infty } f^{\mu ,\nu }_n\left( 2^{-n} \lfloor 2^n t \rfloor ,x\right) .\end{aligned}$$
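The recursion in item (2) can be sketched on a toy example. The Python code below is a minimal illustration under several assumptions that are not taken from the text: the process is the unit-rate simple random walk on \(\{1,\dots ,N-1\}\) killed at 0 and N (symmetric, hence self-dual with respect to counting measure), its potential density is given by a closed form, and the exact operator \(\widehat{P}_{2^{-n}}\) is replaced by a first-order Euler step \((1-h)I + hP\) with \(h = 2^{-2}\). All names (`green`, `potential`, `dual_step`, `root_barrier`) are hypothetical. Exact rationals are used so that the contact set \(\{f = \nu \widehat{U}\}\) can be read off by exact equality.

```python
from fractions import Fraction

N = 8                      # toy state space {1, ..., N-1}; killed at 0 and N
STATES = range(1, N)
H = Fraction(1, 4)         # time step 2^{-n} with n = 2


def green(x, y):
    """Potential density u(x, y) of the killed walk (closed form for this toy chain)."""
    return Fraction(2 * min(x, y) * (N - max(x, y)), N)


def potential(measure):
    """Potential function y -> sum_x measure(x) u(x, y) of a measure given as a dict."""
    return {y: sum(w * green(x, y) for x, w in measure.items()) for y in STATES}


def dual_step(f):
    """Euler step (1 - H) f + H * P f, an assumed stand-in for the dual semigroup."""
    def value(x):
        return f.get(x, Fraction(0))   # functions vanish at the cemetery states
    return {x: (1 - H) * f[x] + H * (value(x - 1) + value(x + 1)) / 2 for x in STATES}


def root_barrier(mu, nu, steps):
    """Iterate f_{k+1} = max(step(f_k), nu-potential) and record the contact sets."""
    mu_pot, nu_pot = potential(mu), potential(nu)
    f = dict(mu_pot)
    values = [f]
    sections = [{x for x in STATES if f[x] == nu_pot[x]}]
    for _ in range(steps):
        g = dual_step(f)
        f = {x: max(g[x], nu_pot[x]) for x in STATES}
        values.append(f)
        sections.append({x for x in STATES if f[x] == nu_pot[x]})
    return values, sections


mu = {4: Fraction(1)}
nu = {2: Fraction(1, 4), 4: Fraction(1, 2), 6: Fraction(1, 4)}
values, sections = root_barrier(mu, nu, steps=40)
```

Here `sections[k]` plays the role of the barrier section at time \(kh\). In this example the sections are nested in k, the initial section consists of the states where the two potential functions already agree, and state 4 joins the contact set after finitely many steps. Whether the hitting time of the discretised barrier embeds \(\nu \) exactly is not claimed by this sketch.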

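Informally, \(f^{\mu ,\nu }\) is the solution of the obstacle problem

$$\begin{aligned} \min \left[ \left( \partial _t - \widehat{\mathcal {L}}\right) f^{\mu ,\nu },\; f^{\mu ,\nu } - \nu \widehat{U} \right] = 0, \quad f^{\mu ,\nu }(0,\cdot ) = \mu \widehat{U}, \end{aligned}$$ (3.5)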

where \(\widehat{\mathcal {L}}\) is the generator of the dual process \(\widehat{X}\). However, making this rigorous is in general subtle, since the obstacle introduces singularities. Several notions of generalised PDE solutions, ranging from variational inequalities to viscosity solutions, address this, often together with numerical schemes [1, 44, 45, 52]. This PDE approach to Root’s barrier has been carried out in [23, 34] for one-dimensional diffusions. However, already in the one-dimensional case, when the operator involves non-local terms as is the case for many Markov processes, the well-posedness of such obstacle PDEs is an active research area; see e.g. [2, 16]. In general, this PDE approach requires stronger assumptions than Assumption 2.1 for the well-posedness of (3.5); in stark contrast, Corollary 3.7 holds in the full generality of Theorem 3.6.

Remark 3.8

(Minimal residual expectation) Item (2) of Theorem 3.6 was named minimal residual expectation by Rost [60] with respect to \(\nu = \mu P_{T_R}\). It implies that

This is actually an equivalent formulation of the minimal residual expectation property as soon as \((\mu U-\nu U)(E)\) is finite as then this quantity is equal to \({\mathbb {E}}^\mu [S]\) for all solutions S of SEP\((X, \mu , \nu )\). Furthermore, Rost proved in [60] that any stopping time S which is of minimal residual expectation with respect to \(\mu P_{T_R}\) necessarily satisfies \(S=T_R\) \({\mathbb {P}}^\mu \)-a.s.
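The identity between \((\mu U-\nu U)(E)\) and the expected stopping time can be evaluated explicitly in a toy case. The following sketch is an illustration only and rests on assumptions not made in the text: it uses the unit-rate simple random walk on \(\{1,\dots ,N-1\}\) killed at 0 and N, with counting measure as \(\xi \) and the closed-form potential density \(2\min (x,y)(N-\max (x,y))/N\). The names `green`, `potential` and `expected_embedding_time` are hypothetical.

```python
from fractions import Fraction

N = 8  # toy state space {1, ..., N-1}; the walk is killed at 0 and N


def green(x, y):
    """Potential density u(x, y) of the killed walk (closed form for this toy chain)."""
    return Fraction(2 * min(x, y) * (N - max(x, y)), N)


def potential(measure):
    """Potential function y -> sum_x measure(x) u(x, y) of a measure given as a dict."""
    return {y: sum(w * green(x, y) for x, w in measure.items()) for y in range(1, N)}


def expected_embedding_time(mu, nu):
    """Total mass of mu U - nu U, summing the densities against counting measure."""
    mu_pot, nu_pot = potential(mu), potential(nu)
    return sum(mu_pot[y] - nu_pot[y] for y in range(1, N))


mu = {4: Fraction(1)}
nu = {2: Fraction(1, 4), 4: Fraction(1, 2), 6: Fraction(1, 4)}
mean_time = expected_embedding_time(mu, nu)
```

For these measures the potential functions sum to 16 and 14 respectively, so `mean_time` equals 2, the common mean of every stopping time that embeds `nu` from `mu` in this toy chain.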

Remark 3.9

(Recurrent Markov processes) That \(\mu U\) and \(\nu U\) are \(\sigma \)-finite is a kind of transience assumption, and is usual in this context [32, 60]. In the case of one-dimensional Brownian motion or diffusions it is not necessary, see [23, 34]. We expect that our result could be extended to the recurrent case (at least in some special cases), but this would require a certain amount of work, see e.g. [30] for results for two-dimensional Brownian motion.

Remark 3.10

(Assumptions of Theorem 3.6) From the counterexamples discussed in [30, 32], to obtain solutions to SEP\((X, \mu , \nu )\) as non-randomised stopping times, one needs to make:

(1) an assumption on the process in order to avoid “deterministic portions” in the trajectory. In our case, this is reflected in the assumption of absolute continuity (2.2). This assumption is rather strong but can often be checked in practice. In the case of diffusions, Hörmander’s celebrated criterion [43] gives a simple condition to ensure existence of transition densities with respect to Lebesgue measure. For jump-diffusions, there are also many results providing sufficient criteria for absolute continuity, see for instance [9, 53].

(2) an assumption on the “small” sets charged by initial and target measures (to avoid issues as in the case of multidimensional Brownian motion and Dirac masses). This is why we assume that \(\nu \) charges no semipolar sets. Without this assumption, it is not true that there exists a solution to SEP\((X, \mu , \nu )\) as hitting time of a barrier, or even as a non-randomised stopping time. In the case where all semipolar sets are polar, following [31], we can replace the assumption that \(\nu \) charges no (semi)polar set by the assumption that

$$\begin{aligned}&\text{there exists a (universally measurable) set } C \text{ s.t. } \nonumber \\&\quad \nu (Z) = \mu (Z \cap C) \,\, \text{ for all polar } Z. \end{aligned}$$ (3.6)

Indeed, there exists then a polar set \(M \subset C\), and a measure \(\gamma \) supported on M, \(\mu ', \nu '\) supported on \(M^c\) with \(\mu = \mu ' + \gamma \), \(\nu =\nu ' + \gamma \), and \(\nu '\) charges no polar sets (cf. [31, p.50]). Let \(R'\) be a barrier embedding \(\nu '\) into \(\mu '\) as given by Theorem 3.6 and set \(R:=R' \cup ({\mathbb {R}}_+ \times M)\); then \(T:= \inf \{t \ge 0, \; (t,X_t) \in R\}\) embeds \(\nu \) into \(\mu \). In [31] it is proven that, if semipolar sets are polar, (3.6) is a necessary condition for a non-randomised solution to SEP\((X, \mu , \nu )\) to exist (in the case where \(\mu U \ge \nu U\) but (3.6) does not hold, randomisation of the stopping time at time 0 is necessary).

4 Proof of Theorem 3.6

The proof of our main result, Theorem 3.6, is split into two parts:

- Existence. We first show that a Root barrier R exists such that \(\mu P_{T_R}=\nu \) and that items (1) and (2) of Theorem 3.6 hold. Here we rely on classic work of Rost [60], which shows that SEP\((X, \mu , \nu )\) has as solution a stopping time T that lies between the hitting times of two barriers which differ only by a space-time graph. We show that these hitting times are necessarily equal; a similar approach was already followed in [3, 18, 34] under different assumptions.

- Free boundary characterisation. We show item (3) of Theorem 3.6, that is, that one can take the contact set of the obstacle problem (3.5) as the Root barrier. From a conceptual point of view, this is similar to the case of one-dimensional diffusions as studied with PDE methods in [23, 34]. However, there the analysis is greatly simplified due to the existence of local times. Since local times are not available in our setting, the situation becomes more delicate and requires the analysis of negligible sets via potential theory.

4.1 Existence

We prepare the proof of existence and optimality with two lemmas. The first lemma shows right-continuity of the semigroup when applied to bounded Borel-measurable functions.

Lemma 4.1

Under Assumption 2.1, it holds for all Borel-measurable and bounded functions f, for all \(x\in E\) and \(t>0\)

$$\begin{aligned} \lim _{s \downarrow t} P_s f(x) = P_t f(x). \end{aligned}$$ (4.1)

Proof

First, note that if f is continuous then by a.s. right continuity of \(t\mapsto X_t\) it is clear that \(P_t f\) is right continuous as a function of t.

Let \(p_t^x = p_t(x,\cdot )\). Since \(\int _E p_t^x(y)\xi (\mathrm {d}y) = 1\), by de La Vallée Poussin’s theorem (see e.g. [26, Thm. II.22]) there exists a function G which is strictly convex and superlinear (i.e. \(\lim _{x \rightarrow +\infty } G(x)/x = +\infty \)) such that

Then for all \(h \ge 0\) one has

where we first used Kolmogorov-Chapman’s equality (2.3), then Jensen’s inequality and that \(\int p_h^z(y) \xi (\mathrm {d}z) = 1\) by duality. Since \(\xi \) is \(\sigma \)-finite, there exists a countable increasing family of open sets \((E_n)_{n\in {\mathbb {N}}}\) such that \(\bigcup _{n\in {\mathbb {N}}} E_n = E\) and \(\xi (E_n)<\infty \) for all \(n\in {\mathbb {N}}\).

Now fix \(n\in {\mathbb {N}}\). On \(E_n\) the integrability condition as in (4.2) is satisfied for all functions in the family \((p_s^x)_{s \ge t}\). By de La Vallée Poussin’s theorem this is equivalent to \((p_s^x)_{s \ge t}\) being uniformly integrable in \(L^1(E_n, \xi )\).

Then, by the Dunford-Pettis theorem (see e.g. [26, Thm. II.23]), uniform integrability of \((p_s^x)_{s\ge t}\) implies that it is weakly (relatively) compact in the finite measure space \(L^1(E_n, \xi )\). By a diagonal argument there exists a subsequence \(s_k \downarrow t\) and a measurable function q such that for all n, for all bounded and measurable f one has \(\int _{E_n} p^x_{s_k} f \,\mathrm {d}\xi \rightarrow \int _{E_n} q f \,\mathrm {d}\xi \) for \(k\rightarrow \infty \). If we take f as a continuous function supported in \(E_n\), by right-continuity of the sample paths, we obtain that \(q = p_t^x\). In addition, since \(E_n^c\) is closed, by a.s. right-continuity of X, one has that

Hence if f is measurable and bounded by 1,

Letting \(n \rightarrow \infty \), the right-hand side goes to 0 by dominated convergence. Hence \(p^x_{s_k}\) converges weakly in \(L^1(E,\xi )\) to \(p^x_t\). We can use the same line of argument for every subsequence of any sequence \(s_k\downarrow t\) to argue the convergence of a subsubsequence. Therefore for all \(x\in E\) we have that \(p^x_{s}\) converges weakly in \(L^1(E,\xi )\) to \(p^x_t\) for \(s\downarrow t\), which leads to the required statement. \(\square \)

The second Lemma revisits Chacon’s idea of “shaking the barrier”, see also [3, 18] for similar statements under slightly stronger assumptions.

Lemma 4.2

If the semigroup of a Markov process satisfies (4.1), then for all Root barriers R one has almost surely

$$\begin{aligned} T_{R^-} = T_R = T_{R^+}. \end{aligned}$$

Proof

Firstly, by replacing R with \(R^+\) if necessary, it is enough to show that \(T_R = T_{R^{-}}\) almost surely. Secondly, if we define

we have \(T_R = \inf _{\delta >0} T_{R(\delta )}\). Put together, this implies that it is sufficient to show that for all \(\delta >0\), \(T_{R(\delta )}=T_{R^-(\delta )}\) \({\mathbb {P}}^\mu \)-a.s. and below we assume that \(R = R(\delta )\) for a given \(\delta >0\).

For \(\varepsilon \in {\mathbb {R}}\) define

That is, \(R^\varepsilon \) is the barrier that arises by shifting R in time to the left if \(\varepsilon >0\) [resp. to the right if \(\varepsilon <0\)]. Now since \(R=R(\delta )\),

and for any \(0< \varepsilon < \delta \) we also have

Now set \(f(x) := {\mathbb {E}}^x \left[ \exp \left( -T_{R^{\delta }}\right) \right] \) and use the above identities to deduce that for every \(0< \varepsilon < \delta \) and every x,

From the right-continuity of the semigroup, Lemma 4.1, it follows that

But since \(T_{R^{-\varepsilon }}\ge T_R\) \({\mathbb {P}}^x\)-a.s. for all x and for all \(\varepsilon >0\), this already implies that

and we conclude that \(T_R = T_{R^-}\) since \(R^- = \bigcup _{\varepsilon >0} R^{-\varepsilon }.\) \(\square \)

For the proof of existence and optimality, we rely on the following result obtained by Rost:

Theorem 4.3

(Rost, Theorems 1 and 3 in [60]) If \(\mu \prec \nu \), then there exists a (possibly randomised) stopping time T which is of minimal residual expectation with respect to \(\nu \), i.e. \(\mu P_T=\nu \) and

In addition, the measure

is given by the Q-réduite of the measure

Furthermore, there exists a finely closed Root barrier R such that \({\mathbb {P}}^\mu \)-a.s.:

(1) \(T \le T_R:= \inf \left\{ t > 0, \; X_t \in R_{t}\right\} \),

(2) \(X_{T} \in R_{T+}\).

The key ingredients in the proof of Rost’s theorem are the filling scheme from [59], which allows one to obtain the existence of T satisfying the optimality property (4.5), and then a paths-swapping argument (see [42] for a heuristic description), which shows that T is almost the hitting time of a Root barrier (i.e. (1) and (2) above). However, this does not imply that T is the hitting time of a Root barrier.

In order to conclude item (1) from Theorem 3.6, we first see that Lemma B.3 yields

We will prove in Lemma 4.4 that \(f^{\mu ,\nu }\) is \(\widehat{Q}\)-excessive. Therefore, if we show that \(g(t,x):= \mu P_{t\wedge T}\widehat{U}(x)\) is \(\widehat{Q}\)-excessive then (4.6) holds everywhere. For this we need to show that g satisfies \(g \widehat{Q}_t \rightarrow g\) as \(t \rightarrow 0\). But this follows from the definition since

Secondly, from \(f^{\mu ,\nu } (t,x)= \mu P_{t \wedge T} \widehat{U}(x)\), it also follows from Theorem 1 in [60] that T is the unique stopping time minimising \(\mu P_{t\wedge T }\widehat{U}\) for all \(t\ge 0\) among all stopping times embedding \(\nu \) in \(\mu \).

Furthermore, \(X_{T} \in R_{T+}\) in (2) from Theorem 4.3 by Rost implies \(T \ge T_{R^+} :=\inf \left\{ t > 0, \; X_t \in R_{t+}\right\} \) on \(\{T>0\}\). If \(T=0\) we have \(X_0 \in R_{0+}\) and if \(X_0 \in R_{0+}^r\) then \(T_{R^+}=0\), so that combined we get

where we used that \(R_{0+} \setminus R_{0+}^r\) is semipolar and that by assumption \(\nu \) charges no semipolar sets. Hence combined with item (2) from Theorem 4.3, one has \(T_{R^+} \le T \le T_R\), and we can conclude the existence of a solution satisfying items (1) and (2) in Theorem 3.6 with Lemma 4.2.

4.2 Free boundary characterisation

Let \(T=T_R\) be the unique Root stopping time solving SEP\((X, \mu , \nu )\) from the previous section with the respective Root barrier R. We want to prove \(T={\widetilde{T}}\) with \({\widetilde{T}} := T_{{\widetilde{R}}}\), where \({\widetilde{R}}\) is defined as in Theorem 3.6.

The proof is split into two inequalities given in Proposition 4.5 and Proposition 4.7. First, we show some useful properties of the Root barrier \({{\widetilde{R}}}\):

Lemma 4.4

The function \(f^{\mu ,\nu }\) and the resulting Root barrier \({\widetilde{R}}\) satisfy the following properties:

(1) \(f^{\mu ,\nu }\) is \(\widehat{Q}\)-excessive and non-increasing in t,

(2) \({\widetilde{R}}\) is a Borel-measurable and \(\widehat{Q}\)-finely closed Root barrier.

Proof

For (1), note that any function \(f:{\mathbb {R}}\times E\rightarrow {\mathbb {R}}\) is \(\widehat{Q}\)-finely continuous if and only if the process \(t\mapsto f(s-t, \widehat{X}_t)\) is \({\mathbb {P}}^{\delta _{(s,x)}}\)-a.s. right continuous for all \((s, x)\in {\mathbb {R}}\times E\) (see e.g. [12, Theorem (4.8)]). The obstacle \(h(t,x) =\mu \widehat{U}(x) \mathbb {1}_{\{t \le 0\}} + \nu \widehat{U}(x) \mathbb {1}_{\{t >0\}}\) is built from the \(\widehat{P}\)-finely continuous functions \(\mu \widehat{U}\) and \(\nu \widehat{U}\), which make \(t\mapsto \mu \widehat{U}(\widehat{X}_t)\) (when \(t< s\)) and \(t\mapsto \nu \widehat{U}(\widehat{X}_t)\) (when \(t> s\)) \({\mathbb {P}}^{\delta _x}\)-a.s. right-continuous for all \(x\in E\). Furthermore, \(t\mapsto h(s-t,x)\) is right continuous at \(t=s\), which together makes h \(\widehat{Q}\)-finely continuous. By Proposition B.2 it then follows that \(f^{\mu ,\nu }\) is \(\widehat{Q}\)-excessive. Further, \(f^{\mu ,\nu }\) is non-increasing in t since h is non-increasing.

For (2), note that the \(\widehat{Q}\)-excessive function \(f^{\mu ,\nu }\) is Borel-measurable, see Remark 2.3, and the barrier \({\widetilde{R}}\) is a level set of the Borel-measurable function \((t,x) \mapsto f^{\mu ,\nu }(t,x) -\nu \widehat{U}(x)\), hence it is Borel-measurable. Therefore \({\widetilde{R}}\) is \(\widehat{Q}\)-finely closed since it is the set where the two finely-continuous functions \(f^{\mu ,\nu }\) and h coincide, and it is a barrier by time monotonicity of \(f^{\mu ,\nu }\).

\(\square \)

Proposition 4.5

\({\widetilde{T}} \le T\).

Proof

Since \({\widetilde{T}} = T_{{\widetilde{R}}} = T_{{\widetilde{R}}^+}\) by Lemma 4.2, we only need to prove \(T_{{\widetilde{R}}^+}\le T\). Since \(\mu U\) is \(\sigma \)-finite, \(\mathcal {N} = \{ \mu \widehat{U} = \infty \}\) is polar (cf. [12, (3.5)]). Let (s, y) be such that \(y \in {}^r R_{s}\) and \(y \notin \mathcal {N}\). One has

and since \(T\le s + T_{R_s}\circ \theta _s\) on \(\{s\le T \}\) as \(s\mapsto R_s\) is non-decreasing, we can apply the Markov property to obtain

By the switching identity (Proposition 2.4) and since \(y\in {}^rR_s\) we have

for all \(x \in E\) and hence

Thus, we can conclude that \((s,y) \in {\widetilde{R}}\).

Now for any \(\varepsilon >0\), if \(t<q < t+\varepsilon \), \(R_t \setminus {}^r R_{t+\varepsilon } \subset R_q \setminus {}^r R_q\). Since \(\bigcup _{q \in {\mathbb {Q}}} R_q \setminus {}^r R_q\) is semipolar, and since \(\nu \) charges no semipolar sets, it follows that a.s. \(X_T \in {}^r R_{T+\varepsilon } \setminus \mathcal {N}\). By the previous paragraph, this means that \(X_T \in \bigcap _{\varepsilon >0} {\widetilde{R}}_{T+\varepsilon } = {\widetilde{R}}_{T+}\). Hence \(T_{{\widetilde{R}}^+} \le T\). \(\square \)

Before we prove the reverse inequality, we need a preliminary lemma:

Lemma 4.6

Assume that for some measure \(\eta \) which charges no semipolar sets, some stopping time \(\tau \) and some nearly Borel-measurable set A one has \(\eta \widehat{U} = \eta P_\tau \widehat{U}\) on A. Then \(\tau \le T_A\), \({\mathbb {P}}^\eta \) almost surely.

Proof

We first write

where we have used in the first inequality that \(\eta P_\tau \widehat{U} \) is coexcessive and in the following equality, that the coexcessive functions \(\eta \widehat{U}\) and \(\eta P_\tau \widehat{U}\) coincide on A and therefore also on its cofine closure on which \(\widehat{P}_A\) is supported. The last equality follows by the switching identity.

Therefore it holds that \( \eta P_A U \le \eta P_\tau U \), i.e. the measures \(\eta P_A\) and \(\eta P_\tau \) are in balayage order. We then follow the proof of [60, Lemma p.8]. By [59], since \(\eta P_A \succ \eta P_\tau \), there exists a stopping time \(\tau '\) (possibly on an enlarged probability space) which is later than \(\tau \) such that the process arrives in the measure \(\eta P_A\) at time \(\tau '\), i.e. \(\tau ' \ge \tau \) \({\mathbb {P}}^\eta \)-a.s. and \(\eta P_{\tau '} = \eta P_A\). We can assume without loss of generality that A is finely closed and then this implies that \({\mathbb {P}}^\eta (X_{\tau '}\in A)=1\). In particular, if \(D_A := \inf \{t\ge 0, X_t \in A\}\), then we have \(\tau ' \ge D_A\) \({\mathbb {P}}^\eta \)-a.s. However, since \(\eta (A \setminus A^r) = 0\) it holds that \(T_A=D_A\) \({\mathbb {P}}^\eta \)-a.s., so that \(\tau ' \ge T_A\). Since \(\tau ' > T_A\) would be a contradiction to \(\eta P_{\tau '}U = \eta P_A U\), we conclude that \(T_A=\tau '\), and therefore \(T_A \ge \tau \) \({\mathbb {P}}^\eta \)-almost surely.\(\square \)

Proposition 4.7

\({\widetilde{T}} \ge T\).

Proof

We first show that for all \(t \in {\mathbb {Q}}_+:={\mathbb {Q}}\cap (0,+\infty )\), one has \(T \le t + T_{{\widetilde{R}}_t} \circ \theta _t\). For this we first prove

where for fixed \(t \in {\mathbb {Q}}_+\) the stopping time \(T^{t}:=\inf \{ s >0: X_s \in {R}_{t+s}\}\) is the hitting time of R shifted in time by t. This holds since for all Borel-measurable functions f it holds

Since \(T =t+T^{t}\circ \theta _t\) on \(\{ t < T\}\), it holds that \({\mathbb {E}}^{\mu } [ f(X_{T^{t}\circ \theta _t}) \mathbb {1}_{\{t< T\}}] = {\mathbb {E}}^{\mu } [ f(X_{T}) \mathbb {1}_{\{t < T\}}]\). Furthermore, we know that by definition of \(T^t\) we have \(P_{T^{t}} f = f\) on \({R}_t^r\), since \(t\mapsto R_t\) is non-decreasing. As \({\mathbb {P}}^{\mu }(X_T \in {R}_t \setminus {R}_t^r) = \nu ({R}_t \setminus {R}_t^r) =0\) since \(\nu \) does not charge semipolar sets, it holds that \({\mathbb {E}}^ x[P_{T^{t}} f(X_T) \mathbb {1}_{\{t \ge T\}}] = {\mathbb {E}}^x[f(X_T) \mathbb {1}_{\{t \ge T\}}]\). Together this implies \(\mu P_{t \wedge T} P_{T^{t}} f = \mu P_Tf\).

Secondly, note that \(\mu P_{t \wedge T} \ll \xi + \nu \) does not charge semipolar sets. Since \(\mu P_T \widehat{U} = \mu P_{t \wedge T} \widehat{U}\) on \({\widetilde{R}}_t\), we can choose \(\eta =\mu P_{t\wedge T}\), \(\tau = T^{t}\) and \(A = {\widetilde{R}}_t\) in Lemma 4.6 to obtain that \(T^t \le T_{{\widetilde{R}}_t}\), \({\mathbb {P}}^{ \mu P_{t \wedge T}}\)-a.s. We write

and this implies

Since

this implies that \(T_{{\widetilde{R}}^-} \ge T\) \({\mathbb {P}}^\mu \)-almost surely, which concludes the proof by Lemma 4.2. \(\square \)

5 Examples

In this section we apply Theorem 3.6 to concrete Markov processes. The examples are

- Continuous-time Markov chains. This is a toy example but we find it instructive since many abstract quantities from potential theory become very concrete and simple; e.g. the obstacle PDE reduces to a system of ordinary differential equations.

- Hypo-elliptic diffusions. This is a large and important class of processes. In the one-dimensional case we recover the setting of [23, 34] but for the multi-dimensional case the results are new to our knowledge. As concrete examples we give Skorokhod embeddings for two-dimensional Brownian motion and for Brownian motion in a Lie group.

- \(\alpha \)-stable Lévy processes. There is very little literature on the Skorokhod embedding problem for Lévy processes, see [27] for references. We apply our results to \(\alpha \)-stable Lévy processes which are of growing interest in financial modelling, see e.g. [63], as they are characterised uniquely as the class of Lévy processes possessing the self-similarity property. Due to the infinite jump activity such processes are hard to analyse, but potential-theoretic tools are classical in this context and much is known about their potentials, see [8, 11, 14, 47].

Two remarks are in order. Firstly, the question of how to characterise or even construct measures \(\mu ,\nu \) that are in balayage order \(\mu \prec \nu \) for a given Markov process seems to be difficult. In the case of one-dimensional Brownian motion this reduces to the convex order, which is usually easy to verify, but already for multi-dimensional Brownian motion it can be (numerically) difficult to check whether two given measures are in balayage order. Secondly, we reiterate the discussion after Corollary 3.7 that the PDE formulation usually requires stronger assumptions whereas the discrete dynamic programming algorithm, Corollary 3.7, applies to Theorem 3.6 in full generality. All our examples were computed using the dynamic programming equation stated as item (2) in Corollary 3.7.
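To illustrate why the one-dimensional Brownian case is easy to verify, the following minimal Python sketch checks the convex order for two finitely supported measures. It relies on the standard characterisation (not taken from this article) that, for measures with equal mass and equal mean, \(\mu \) is dominated by \(\nu \) in convex order if and only if \(\int |x-y|\,\mu (\mathrm {d}y) \le \int |x-y|\,\nu (\mathrm {d}y)\) for all x. The function names and the dictionary encoding of a measure are hypothetical choices made for this illustration.

```python
def abs_moment(measure, x):
    """Integral of |x - y| against a finitely supported measure {atom: weight}."""
    return sum(w * abs(x - y) for y, w in measure.items())


def in_convex_order(mu, nu, tol=1e-12):
    """Return True if mu is dominated by nu in convex order (finite supports only)."""
    mass_mu, mass_nu = sum(mu.values()), sum(nu.values())
    mean_mu = sum(y * w for y, w in mu.items())
    mean_nu = sum(y * w for y, w in nu.items())
    if abs(mass_mu - mass_nu) > tol or abs(mean_mu - mean_nu) > tol:
        return False
    # The difference of the two functions is piecewise linear with kinks only
    # at the atoms and vanishes outside their convex hull (equal mass and mean),
    # so it suffices to compare the two functions at the atoms.
    atoms = set(mu) | set(nu)
    return all(abs_moment(mu, x) <= abs_moment(nu, x) + tol for x in atoms)


# A Dirac mass at 0 is dominated by the symmetric two-point measure on {-1, 1}.
print(in_convex_order({0.0: 1.0}, {-1.0: 0.5, 1.0: 0.5}))
```

No comparably simple test is available for general Markov processes, which is the difficulty pointed out in the first remark above.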

5.1 Continuous-time Markov chains

Let \(Y=(Y_n)_{n\in {\mathbb {N}}}\) be a discrete-time Markov chain on a discrete state space \(E\subset {\mathbb {Z}}\) with transition matrix \(\Pi \) such that \(\Pi (x,y) = q(y-x)\) for all \(x,y\in E\) and some probability measure q. Imposing \(\exp (\lambda )\)-distributed waiting times at each state, we arrive at the continuous-time Markov chain \(X=(X_t)_{t\ge 0}\) with transition function

The process X is dual to the continuous-time Markov chain \(\widehat{X}\) with transition matrix \(\widehat{\Pi }= \Pi ^T\) and the same transition rate \(\lambda \) at each state with respect to the counting measure. The potentials are given with respect to the function

and the potential function of a measure \(\mu \) is given by

Example 5.1

(Asymmetric random walk on \({\mathbb {Z}}\)) Let Y be the asymmetric random walk on \({\mathbb {Z}}\), that is \(\Pi (x, x+1)= p\in (\frac{1}{2}, 1]\) and \(\Pi (x, x-1)= 1-p=:q\). By translation invariance and a standard result (see e.g. [54]) it then holds for the potential kernel of X

Now let \(\mu = \delta _0\) and \(\nu =\sum _{l=1}^N a_l\delta _{x_l}\) for some \(N\in {\mathbb {N}}\), \(a_l>0\), \(\sum _{l=1}^N a_l=1\) and \(0<x_1<\dots <x_N\). Then

Since \(p>q\), we have \(\nu \widehat{U}\le \mu \widehat{U}\) for all such \(\nu \). The generator of \(\widehat{X}\) is given by

and the obstacle problem (3.5) reduces to the following set of ODEs:

Then either classical methods for solving this set of coupled ODEs can be applied or we can directly apply the dynamic programming approach of Corollary 3.7 as follows: for \(\varepsilon >0\) small enough, we choose \(x_0\) such that \(\mu \widehat{U}(x_0)-\nu \widehat{U}(x_0)< \varepsilon \). We approximate the function \(f^{\mu , \nu }\) on the set \(\{x_0, x_0+1, \dots , x_N\}\) at discrete time points \(t_k=\frac{k}{2^n}\) for fixed n:

For example, we take \(\lambda =1\), \(p=\frac{2}{3}\) and \(\nu = \frac{1}{4}\delta _2 + \frac{3}{4}\delta _4\). Figure 1 shows the potentials \(\mu \widehat{U}\), \(\nu \widehat{U}\) and the resulting Root barrier.
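The dynamic programming step of Example 5.1 can be sketched in a few lines of Python. The sketch is illustrative only and is not the code used to produce Figure 1. It rests on three assumptions that are not stated in this section: (i) the recursion of Corollary 3.7 is read as \(f(t_{k+1},\cdot ) = \max \{\widehat{P}_{2^{-n}} f(t_k,\cdot ), \nu \widehat{U}\}\) with \(f(0,\cdot ) = \mu \widehat{U}\); (ii) the dual semigroup over one time step is replaced by the first-order Euler step \(I + 2^{-n}\widehat{\mathcal {L}}\), where the dual chain jumps down with probability p and up with probability q; (iii) the values at both ends of the truncated state space are frozen at \(\nu \widehat{U}\). The kernel \(u(x,y) = (q/p)^{\max (x-y,0)}/(\lambda (p-q))\) is the standard Green function of the walk for \(p>q\). All function names are hypothetical.

```python
def potential_kernel(x, y, lam, p):
    """u(x, y): expected time spent in y by X started in x (requires p > 1/2)."""
    q = 1.0 - p
    return (q / p) ** max(x - y, 0) / (lam * (p - q))


def potential(measure, y, lam, p):
    """(measure U-hat)(y) for a finitely supported measure {atom: weight}."""
    return sum(w * potential_kernel(x, y, lam, p) for x, w in measure.items())


def root_barrier_dp(mu, nu, lam, p, lo, hi, n, horizon):
    """Approximate f^{mu,nu} on {lo, ..., hi} at the times t_k = k / 2**n."""
    q, dt = 1.0 - p, 2.0 ** (-n)
    states = list(range(lo, hi + 1))
    mu_pot = [potential(mu, y, lam, p) for y in states]
    nu_pot = [potential(nu, y, lam, p) for y in states]
    f = [mu_pot[:]]  # f(0, .) = mu U-hat
    for _ in range(int(horizon / dt)):
        prev, new = f[-1], nu_pot[:]  # end points stay frozen at nu U-hat
        for i in range(1, len(states) - 1):
            # Euler step for the dual chain: down with prob. p, up with prob. q.
            moved = prev[i] + dt * lam * (p * prev[i - 1] + q * prev[i + 1] - prev[i])
            new[i] = max(moved, nu_pot[i])  # stay above the obstacle nu U-hat
        f.append(new)
    return states, mu_pot, nu_pot, f


def barrier_times(states, nu_pot, f, n, tol=1e-9):
    """First grid time at which f(t, x) touches nu U-hat(x); None if never."""
    times = {}
    for i, x in enumerate(states):
        hit = next((k for k, row in enumerate(f) if row[i] - nu_pot[i] <= tol), None)
        times[x] = None if hit is None else hit / 2.0 ** n
    return times


# Parameters of the example: lambda = 1, p = 2/3, nu = 1/4 delta_2 + 3/4 delta_4.
states, mu_pot, nu_pot, f = root_barrier_dp(
    {0: 1.0}, {2: 0.25, 4: 0.75}, lam=1.0, p=2.0 / 3.0, lo=-30, hi=5, n=6, horizon=10.0
)
print(barrier_times(states, nu_pot, f, n=6)[4])  # barrier time at the state x = 4
```

The Euler step is a positive operator as long as \(2^{-n}\lambda \le 1\), which keeps the approximation non-increasing in time and above \(\nu \widehat{U}\), mirroring the properties of \(f^{\mu ,\nu }\). A more accurate scheme would use the exact transition matrix of the dual chain over a step of length \(2^{-n}\).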

5.2 Hypo-elliptic diffusions

Let X be the diffusion in \({\mathbb {R}}^d\) obtained by solving an SDE formulated in the Stratonovich sense

where the \(V_i\), \(i=1,\ldots , N\), are vector fields on \({\mathbb {R}}^d\) which we assume to be smooth with all derivatives bounded, and B is a standard Brownian motion in \({\mathbb {R}}^N\). We further assume that X is killed at rate \(c(X) \,\mathrm {d}t\), where \(c\ge 0\) is a non-negative smooth function. X is then a standard Markov process on \({\mathbb {R}}^d\), with generator \(\mathcal {L}\) which acts on smooth functions via

where the \(b_i\) and \(a_{ij}\) are smooth functions which can be written explicitly in terms of the \(V_i\). The formal adjoint of \(\mathcal {L}\) with respect to Lebesgue measure is then given by

and we can choose smooth vector fields \(\widehat{V}_i\) such that \(\widehat{\mathcal {L}} f = (-\mathrm {div} (b) - c) f + \left( \widehat{V}_0 + \sum _i \widehat{V}_i^2\right) f\). Assuming that

we can then identify \(\widehat{\mathcal {L}}\) with the generator of the Markov process consisting of the Stratonovich SDE

killed at rate \((\mathrm {div} (b) + c)(\widehat{X}_t) \,\mathrm {d}t\).

In addition, assume that the vector fields satisfy the weak Hörmander conditions

then the classical Hörmander result [43] yields that the semigroups \(P_t\), \(\widehat{P}_t\) associated to X, \(\widehat{X}\) admit (smooth) densities with respect to Lebesgue measure. Therefore, \((P_t)\) and \((\widehat{P}_t)\) are in duality with respect to Lebesgue measure, as seen by

which yields that \(\left\langle P_{t} f, g \right\rangle = \left\langle f, \widehat{P}_t g \right\rangle \), first for f, g smooth with compact support and then for all \(f,g \ge 0\) Borel measurable by an approximation argument. In conclusion, we have obtained the following.

Proposition 5.2

Assume that (5.8) and (5.10)–(5.11) hold. Then the process X given by solving the SDE (5.7) satisfies Assumption 2.1, with \(\widehat{X}\) given by the solution to (5.9) and \(\xi \) given by the Lebesgue measure on \({\mathbb {R}}^d\).

Example 5.3

(Brownian motion in \({\mathbb {R}}^d\)) For \(d\le 2\), as Brownian motion is recurrent, for any positive Borel function f we have either \(Uf \equiv \infty \) or \(Uf\equiv 0\). Therefore we consider the Brownian motion killed when exiting the unit ball \(B_1(0)\), i.e. \(\zeta = \inf \{t>0:~||X_t|| >1\}\). For any probability measure \(\mu \) with density f supported on \(B_1(0)\), the potential \(\mu \widehat{U} = f\widehat{U}\) is the unique continuous solution of \(-\frac{1}{2}\Delta v = f\) on \(B_1(0)\) vanishing on \(\partial B_1(0)\), and is given explicitly as \( f\widehat{U}(x) = {\mathbb {E}}^x \left[ \int _0^\zeta f(\widehat{X}_t) \,\mathrm {d}t\right] = \int u(x, y) f( y)\,\mathrm {d}y \), where

In dimensions \(d\ge 3\), Brownian motion is transient, and the potential is the Newtonian potential on \({\mathbb {R}}^d\):

where \(c_d = \genfrac{}{}{}1{1}{2}\pi ^{-\nicefrac {d}{2}}\Gamma \big (\genfrac{}{}{}1{1}{2}(d-2)\big )\). For \(d=1\) the balayage order reduces to the convex order which is easy to verify. In higher dimensions it is in general non-trivial to find measures in balayage order.

Now we consider the two-dimensional Brownian motion starting in 0. As an example of a measure \(\nu \) which is not rotationally symmetric and can be embedded in two-dimensional Brownian motion, we take \(\nu \) to be the measure with the following density, which approximates the marginal of the diffusion Y generated by the operator \(\mathrm {e}^{-x_1-x_2}\widehat{\mathcal {L}}\), that is \(\nu (A)={\mathbb {P}}^0(Y_{0.1}\in A)\),

where C denotes a normalising constant, \(a=\cos (\genfrac{}{}{}1{\pi }{4})\), \(b= \sin (\genfrac{}{}{}1{\pi }{4})\) and \({{\widetilde{x}}} = 0.15\). The (empirical) density is represented in Fig. 2a on page 18. We take \(\nu \) of this form since Y can be obtained as a time change via an additive functional of X, which implies \(\delta _0 \prec \nu \) (we will show this explicitly in Sect. 6), and we see \(\nu \widehat{U}\) in Fig. 2b.

Example 5.4

(Lie-group valued Brownian motion) Let \((B^1,B^2)\) be a two-dimensional Brownian motion. Then \((B^1,B^2,\int B^1 \,\mathrm {d}B^2 - \int B^2 \,\mathrm {d}B^1)\) can be identified (after taking the Lie algebra exponential), as a Brownian motion in the free nilpotent Lie group of order 2; see “Appendix C” for details and extension to general free nilpotent groups. The generator of the process is the sub-Laplacian \(\Delta _G = \frac{1}{2} \left( X^2 + Y^2\right) \) on the Heisenberg group G; where in coordinates

As shown by [35, 48], the transition density equals

and by Brownian scaling \(p_t(b^1,b^2,a):= p_1(\frac{b^1}{\sqrt{t}},\frac{b^2}{\sqrt{t}},\frac{a}{t})\). In this case, it is already non-trivial to find measures \(\mu ,\nu \) in balayage order, \(\mu \prec \nu \), even if \(\mu \) is a Dirac at the origin. However, Proposition C.1 in the appendix shows that any measure \({\widetilde{\nu }}\) on \((0,\infty )\) can be lifted to a measure \(\nu \) on G such that \( \delta _0 \prec \nu \). This provides a rich class of probability measures in balayage order, and Theorem 3.6 allows us to apply dynamic programming to compute the Root barrier solving SEP\((X,\delta _0,\nu )\). However, this is computationally expensive since (5.14) is not available in closed form. In this case, the well-posedness of the obstacle PDE

can be shown by standard methods (such as viscosity solutions). Again this leads to non-trivial numerics (see footnote 3), even after using the radial symmetry of (5.14) to reduce the space dimension to 2, namely radius and area. Nevertheless, both approaches (dynamic programming and PDE) are applicable to compute barriers for group-valued Brownian motion, although much work remains to be done to turn this into a stable numerical tool and we leave this for future research.
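For readers who wish to experiment with the process of Example 5.4, the following Python sketch simulates a discretised path of \((B^1,B^2,\int B^1 \,\mathrm {d}B^2 - \int B^2 \,\mathrm {d}B^1)\). It is only a sketch under stated assumptions: the stochastic integrals are replaced by left-point sums on a uniform time grid, and the function names, the step count and the seed are hypothetical. It does not compute the transition density or a Root barrier.

```python
import math
import random


def levy_area_path(b1, b2):
    """Left-point sums of B^1 dB^2 - B^2 dB^1 along a discretised planar path."""
    area = [0.0]
    for k in range(len(b1) - 1):
        d1, d2 = b1[k + 1] - b1[k], b2[k + 1] - b2[k]
        area.append(area[-1] + b1[k] * d2 - b2[k] * d1)
    return area


def heisenberg_bm(n_steps, t_end, seed=0):
    """Simulate (B^1, B^2, area) on a uniform grid of n_steps steps over [0, t_end]."""
    rng = random.Random(seed)
    sd = math.sqrt(t_end / n_steps)  # standard deviation of each Gaussian increment
    b1, b2 = [0.0], [0.0]
    for _ in range(n_steps):
        b1.append(b1[-1] + rng.gauss(0.0, sd))
        b2.append(b2[-1] + rng.gauss(0.0, sd))
    return b1, b2, levy_area_path(b1, b2)


# Sanity check on a deterministic loop: the unit square traversed
# counter-clockwise gives twice its enclosed area.
square_1 = [0.0, 1.0, 1.0, 0.0, 0.0]
square_2 = [0.0, 0.0, 1.0, 1.0, 0.0]
print(levy_area_path(square_1, square_2)[-1])
```

On a discrete path the left-point and midpoint sums coincide, since the cross terms cancel, so the choice between the two conventions does not matter here. Scaling the planar path by a factor c multiplies the third component by \(c^2\), which is the discrete counterpart of the Brownian scaling stated above.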

5.3 Symmetric stable Lévy processes

A right-continuous stochastic process \((X_t)_{t\ge 0}\) is called an \(\alpha \)-stable Lévy process if it has independent, stationary increments which are distributed according to an \(\alpha \)-stable distribution. We consider the symmetric case without drift. In this case, the characteristic exponent is given by \(\psi (\theta ) = |\theta |^\alpha \), i.e. \({\mathbb {E}}[\mathrm {e}^{i\theta X_t}]= \mathrm {e}^{-t|\theta |^\alpha } =:g_t(\theta )\) and hence \(X_t\) satisfies the scaling property \(X_t \overset{d}{=} t^{\nicefrac {1}{\alpha }} X_1\). Classic results, e.g. [41], show that X has a transition density

which is absolutely continuous with respect to the Lebesgue measure. For further properties of symmetric stable processes, we refer to [11]. We are going to take \(\alpha \in (0,1)\), as in this case X is transient, as shown in [14]. Furthermore, one-dimensional Lévy processes X are dual to \(\widehat{X}:= -X\) with respect to the Lebesgue measure (see [8]). Since the jumps are distributed according to the symmetric stable distribution, the symmetric stable process X is self-dual. By [12] the potential \(Uf(x) = \int u(x,y)f(y)\,\mathrm {d}y\) equals

where in the one-dimensional case \(C_{1,\alpha }= \Gamma (\frac{1-\alpha }{2})\cdot \big [ 2^{\alpha }\sqrt{\pi }\Gamma (\frac{\alpha }{2})^{2}\big ]^{-1}\). In order to construct the Root stopping time, we construct the function \(f^{\mu ,\nu }\) as described in Theorem 3.6 as solution to the obstacle problem

where the generator of the process \(-(-\Delta )^{\nicefrac {\alpha }{2}}\) is given by the fractional Laplacian

with \({\text {P.V.}}\) denoting a principal value integral.
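The scaling property \(X_t \overset{d}{=} t^{\nicefrac {1}{\alpha }} X_1\) gives a simple way to simulate trajectories of X on a time grid. The Python sketch below is an illustration and not the simulation code behind the figures of this section. It draws increments with the Chambers-Mallows-Stuck representation of a symmetric \(\alpha \)-stable variable with characteristic function \(\mathrm {e}^{-|\theta |^\alpha }\), a standard method that is not discussed in this article. The function names, step size and seed are hypothetical.

```python
import math
import random


def symmetric_stable_from(v, w, alpha):
    """Chambers-Mallows-Stuck map for the symmetric case.

    v is an angle in (-pi/2, pi/2), w > 0 is an exponential variable.
    """
    if alpha == 1.0:
        return math.tan(v)  # Cauchy case
    return (
        math.sin(alpha * v) / math.cos(v) ** (1.0 / alpha)
        * (math.cos((1.0 - alpha) * v) / w) ** ((1.0 - alpha) / alpha)
    )


def stable_path(alpha, n_steps, dt, seed=0):
    """Simulate X on the grid {0, dt, ..., n_steps * dt}, started in 0."""
    rng = random.Random(seed)
    scale = dt ** (1.0 / alpha)  # X_dt has the same law as dt^(1/alpha) * X_1
    path = [0.0]
    for _ in range(n_steps):
        v = rng.uniform(-0.5 * math.pi, 0.5 * math.pi)
        w = rng.expovariate(1.0)
        path.append(path[-1] + scale * symmetric_stable_from(v, w, alpha))
    return path


path = stable_path(alpha=0.5, n_steps=1000, dt=0.01)
print(max(abs(b - a) for a, b in zip(path, path[1:])))  # largest jump on the grid
```

For \(\alpha =2\) the map reduces to \(2\sin (v)\sqrt{w}\), a centred Gaussian with variance 2, and for \(\alpha =1\) to \(\tan (v)\), a Cauchy variable. Both are consistent with the characteristic function \(\mathrm {e}^{-|\theta |^\alpha }\).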

Example 5.5

(Embedding for \(\alpha =0.5\), \(\mu \sim \mathrm {Uniform}([-1,1])\) and \(\nu \sim 0.75\cdot \mathrm {Beta}(2,2)\)) Let \(\mu \) be the Uniform distribution on \([-1,1]\), then

We want to construct a solution T for SEP\((X, \mu , \nu )\) where the density of \(\nu \) is given by \(\frac{\nu (\mathrm {d}x)}{\,\mathrm {d}x} = 0.75\cdot g_{a,b}\), where

is the density of a Beta(a, b) distribution on the interval \([-1,1]\). Realisations of the resulting embedding will then give us that on the event \(\{T<\infty \}\) where \({\mathbb {P}}(T<\infty ) =0.75\), the stopped values \(X_T\) are distributed according to the Beta(a, b) distribution. Studying general numerical methods for the fractional Laplacian is beyond the scope of this article, so we just discuss a quick method which is adapted to our case. We can rewrite (5.16) as

Define the set \(\mathcal {O}_{{\overline{T}}}:= [0, {\overline{T}}]\times [-K, K]\) for large \({\overline{T}}, K\in {\mathbb {R}}\) and \(h:= (\Delta t, \Delta x) = \big (\frac{{\overline{T}}}{N_{{\overline{T}}}}, \frac{2K}{N_x}\big )\), where \(N_x\), \(N_{{\overline{T}}}\in {\mathbb {N}}\) are chosen large enough. The space-time mesh grid is defined as

For the resulting minimal excessive majorant of \(\mu \widehat{U} \mathbb {1}_{\{t\le 0\}}+\nu \widehat{U} \mathbb {1}_{\{t> 0\}}\) we expect that \(f^{\mu ,\nu }\) never touches \(\nu \widehat{U}\) outside \([-1,1]\) as this is the support of \(\nu \). Indeed, a straightforward calculation shows that starting in \(\mu \widehat{U}\), for \(|y|\gg 1\) we have \((-\Delta )^{\nicefrac {\alpha }{2}}\mu \widehat{U}(y) = o(|y-1|)\), i.e. the repeated action of the fractional Laplacian on \(\mu \widehat{U}\) outside an interval \([-K, K]\) with large enough \(K\gg 1\) is negligible. For any \((t,x)\in \mathcal {G}_h\) we define the operator

where \((-\Delta )^{\nicefrac {\alpha }{2}}_h\) is the evaluation of the fractional Laplacian using a Gauß-Kronrod quadrature as described in [24] on \(\mathcal {G}_h\). Then the minimal excessive majorant \(f^{\mu ,\nu }\) for Theorem 3.6 can be computed on \(\mathcal {G}_h\) as follows:

In Fig. 3 on page 21, we can see a realisation of the embedding for SEP\((X, \mu , \nu )\) with \(\mu \) and \(\nu \) as given above. Since for small values of \(\alpha \) the trajectories of X may have large jumps, the simulations need to take into account that X may jump back into the barrier even after it has left the support of \(\nu \). Following the results from [13, 46], the probability of X not returning to \((-1,1)\) after reaching level x is

6 Towards generalised Root embeddings

The results of the previous sections rely on Root’s and Rost’s approach to lift X to a space-time process

\[{\overline{X}}_t := (t, X_t)\]

and find solutions of SEP\((X, \mu , \nu )\) that are given as hitting times of \({\overline{X}}\). A natural generalisation is to replace the time-component by another real-valued, increasing process A with \(A_0=0\), such that (A, X) is again Markov and carry out a similar construction. That is, to construct a set such that its first hitting time by the lifted process

solves SEP\((X, \mu , \nu )\). Again, one expects such a stopping time to be optimal in a minimal residual expectation sense, however, now formulated in terms of A.

Carrying out this program in full generality is beyond the scope of this article. Instead, we focus on the case when A is of the form \(A_t=\int _0^t a(X_s)\,\mathrm {d}s \) where a is strictly positive. Denote with \(\tau _s:=\inf \{t>0: A_t = s\}\) the first hitting time of \(s \ge 0\) by A and with \(Y_s:=X_{\tau _s}\) the time-changed process. Since for every (sufficiently nice) set \(R \subset [0,\infty ) \times E\)

this allows us to use the framework of the previous sections. Concretely, one needs to verify that the assumptions of Theorem 3.6 are met by Y. This already provides a new class of solutions for SEP\((X, \mu , \nu )\). It can be seen as an interpolation between the Root embedding (when \(a \equiv 1\)) and the classical Vallois embedding [64], since when applied to a Brownian motion, the classical Vallois embedding can be identified as the limiting case when a approaches a Dirac at 0.
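On a discretised trajectory, the additive functional A, the inverse \(\tau _s\) and the hitting time of the lifted process (A, X) can be computed as in the following Python sketch. It is an illustration under stated assumptions: the integral is replaced by left-point Riemann sums, \(\tau _s\) is obtained by linear interpolation, and the barrier is encoded by a hypothetical function r via \(R = \{(s,x): s \ge r(x)\}\), which uses only the fact that a barrier is closed under increasing the first coordinate. All function names are hypothetical.

```python
def additive_functional(times, xs, a):
    """A_t = int_0^t a(X_s) ds on the time grid, by left-point Riemann sums."""
    A = [0.0]
    for k in range(len(times) - 1):
        A.append(A[-1] + a(xs[k]) * (times[k + 1] - times[k]))
    return A


def inverse_time(times, A, s):
    """tau_s = inf{t: A_t = s}, by linear interpolation (a > 0 makes A increasing)."""
    if s > A[-1]:
        return None  # the level s is not reached on the simulated horizon
    for k in range(len(A) - 1):
        if A[k] <= s <= A[k + 1]:
            frac = (s - A[k]) / (A[k + 1] - A[k])
            return times[k] + frac * (times[k + 1] - times[k])
    return times[-1]


def first_hit(times, xs, A, r):
    """First grid time t with (A_t, X_t) in R = {(s, x): s >= r(x)}, or None."""
    return next((t for t, x, s in zip(times, xs, A) if s >= r(x)), None)


# Toy path on five grid points with a constant rate a = 2, so that tau_s = s / 2.
times = [0.0, 0.5, 1.0, 1.5, 2.0]
xs = [0, 1, 2, 1, 0]
A = additive_functional(times, xs, lambda x: 2.0)
print(inverse_time(times, A, 2.5))
print(first_hit(times, xs, A, lambda x: 3.0 if x == 1 else float("inf")))
```

With \(a \equiv 1\) the functional A coincides with time and `first_hit` reduces to the hitting time of a classical Root barrier by \((t, X_t)\).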

6.1 Generalised Root embeddings

Below we restrict ourselves to additive functionals of the form

\[A_t = \int _0^t a(X_s)\,\mathrm {d}s\]

with a Borel measurable a which is locally bounded and locally bounded away from 0, so that \(t\mapsto A_t\) is one-to-one and the measure \(m_A(\mathrm {d}x) = a(x) \xi (\mathrm {d}x)\) is \(\sigma \)-finite. This implies that A is an additive functional of X, i.e. A satisfies

(1) \(A_0=0\), \(t\mapsto A_t(\omega )\) is right continuous and non-decreasing, almost surely,

(2) \(A_t\) is \({\mathcal {F}}_t\)-measurable,

(3) \(A_{t+s} = A_t+ A_s\circ \theta _t\) almost surely for each \(t, s\ge 0\).

We can then define the time-changed process Y as follows

By [29, Theorem 10.11], Y is a standard process. Its potential is given by

and we can define the potential operator \(\widehat{U}^Af(y) = \int f(x)u(x,y) a(x)\xi (\mathrm {d}x)\) for any non-negative Borel-measurable function f which corresponds to the time-changed process \(\widehat{Y}_t = \widehat{X}_{\widehat{\tau }_t}\), where we analogously define \(\widehat{\tau }_t := \inf \{ u > 0, \;\;\widehat{A}_u = t\}\) with \(\widehat{A}_t = \int _0^t a(\widehat{X}_s) \,\mathrm {d}s\). In addition, strong duality holds,

Theorem 6.1

(Revuz, Thm. V.5 and Thm. 2 in VII.3 in [55]) The processes Y and \(\widehat{Y}\) are in strong duality with respect to the so-called Revuz measure \(m_A(\mathrm {d}x) = a(x) \xi (\mathrm {d}x)\).

Remark 6.2

From the duality with respect to the Revuz measure \(m_A\), it follows for any Borel measure \(\mu \) and \(y\in E\) that

Hence, \(\mu \widehat{U}^A = \mu \widehat{U}\), i.e. the potentials of the measures of the original and the time-changed process are equal. However, note that \(\mu U^A \not = \mu U\).

To apply our main result to the time-changed process we make the following assumption, which we will discuss later in this section.

Assumption 6.3

For all \(t>0\) and \(x\in E\), the transition functions of Y and \(\widehat{Y}\) are absolutely continuous with respect to \(m_A\), i.e. \(P^A_t(x,\cdot ) \ll m_A\) and \(\widehat{P}^A_t(\cdot ,y) \ll m_A\).

Combining the above duality results with our main Theorem 3.6 then gives us the following new solution of SEP\((X, \mu , \nu )\).

Theorem 6.4

Let X be a Markov process and A an additive functional for which Assumptions 2.1 and 6.3 hold. Let \(\mu \prec \nu \) be two measures with \(\sigma \)-finite potentials in balayage order, i.e. \(\mu \widehat{U}\ge \nu \widehat{U}\), and such that \(\nu \) charges no semipolar set. Then there exists a Root barrier \(R^A\) which embeds (A, X) such that its first hitting time \(T^A:= \inf \{ t >0: \;\; (A_t, X_t) \in R^A \}\) embeds \(\mu \) into \(\nu \),

Moreover, if we denote

where \(\widehat{Q}^A\) denotes the space-time semigroup associated with \(\widehat{Y}\), then

(1) \(f^{A,\mu ,\nu }(t,x) = \mu P_{\tau _{t}\wedge A_{T^A}}\widehat{U}(x)\),

(2) \(T^A = \mathop {\text {arg min}}\limits _{S:~ \mu P_S = \nu }~~ \mu P_{\tau _t\wedge A_S} U^A(B)\) for all Borel sets B and \(t\ge 0\),

(3) We may take \(R^A = \left\{ (s,x)\in {\mathbb {R}}_+ \times E\;\; |\;\; f^{A,\mu ,\nu }(s,x) = \nu \widehat{U} (x) \right\} \).

Proof

By Remark 6.2, \(\mu \widehat{U}\ge \nu \widehat{U}\) implies \(\mu \widehat{U}^A\ge \nu \widehat{U}^A\) for the time-changed process Y. We henceforth write \(N_B(\omega ) = \{t>0:~ X_t(\omega ) \in B\}\) for the visits of a nearly Borel set B during the lifetime of X. Then the set B is semipolar if and only if the set \(N_B\) is almost surely countable. Further we have

since the mapping \(s\mapsto \tau _s\) is continuous and strictly increasing because \(t\mapsto A_t\) is. Therefore, any set B which is semipolar for X is also semipolar for Y and \(\nu \) does not charge sets which are semipolar for Y.

Due to Assumption 6.3, the processes Y and \(\widehat{Y}\) and the measures \(\mu \) and \(\nu \) satisfy the assumptions of Theorem 3.6. Then \(f^{A,\mu ,\nu }\) and \(R^A\) defined as above are exactly the equivalent results from Theorem 3.6 for Y and the stopping time solving SEP\((Y,\mu , \nu )\) is given by

Then for \(f^{A, \mu , \nu }\) as in (6.1), we have \(f^{A, \mu , \nu } (t, x) = \mu P^A_{t\wedge T}\widehat{U}^A (x)= \mu P_{\tau _{t\wedge T}}\widehat{U}(x)\) is the density of the measure \(\mu P_{\tau _t\wedge A_S} U^A\) w.r.t. \(m_A\). If we define \(T^A = \tau _T\), then for any nearly Borel set \(B\in \mathcal {E}^n\) we obtain \({\mathbb {P}}^\mu (X_{T^A}\in B) = {\mathbb {P}}^\mu (Y_T\in B)= \nu (B)\) and it follows that for any solution T to SEP\((Y,\mu , \nu )\), we have that \(T^A= \tau _T\) is a solution for SEP\((X, \mu , \nu )\). The optimality of Property 2 then naturally follows.

Finally, since \(\inf \{s>0:~ (s, Y_s) \in R^A\} = \inf \{s >0:~ (A_{\tau _s}, X_{\tau _s}) \in R^A\}\), for \(T^A= \tau _T\), we know that

which completes the proof. \(\square \)

Remark 6.5

Assumption 6.3 does not always hold, even under Assumption 2.1. For instance, let \(X=(X^1,X^2)\) be the Markov process given by

where a is non-negative, bounded, smooth with (for instance) \(a'\) strictly positive, and B is a linear Brownian motion. Then by the weak Hörmander criterion, X admits transition probabilities with respect to Lebesgue measure and satisfies Assumption 2.1. However taking the time-change \(\tau _s\) corresponding to \(\,\mathrm {d}A_t = a(X_t^1) \,\mathrm {d}t\), the resulting process satisfies

which does not admit transition probabilities.

Remark 6.6

Let X be the diffusion with generator given in Hörmander form by

(with, for instance, the \(V_i\) having bounded derivatives of all orders), then (assuming a is also smooth, say) the generator of Y is given by

for some vector field \(V^A_0\). In particular, if the following strong Hörmander condition holds for X :

it also holds for the generator of Y, in which case Y admits transition probabilities with respect to Lebesgue measure. This condition is for instance satisfied when X is multi-dimensional Brownian motion (or more generally, Brownian motion on a Carnot group).

Remark 6.7

(Obstacle PDE) The generator of the time-changed process \(\widehat{Y}\) is given by \(\widehat{\mathcal {L}}^A f(x) = \frac{1}{a(x)} \widehat{\mathcal {L}}f(x)\), see [29]. Hence, we can again identify \(f^{A,\mu ,\nu }(t,x)\) as the solution to the obstacle problem

provided additional regularity assumptions are made that guarantee well-posedness of the above PDE. However, analogous to Corollary 3.7, dynamic programming applies without any additional assumptions on \(\widehat{\mathcal {L}}\) and a.

Remark 6.8

(Vallois’ embedding as limit of Root type embedding) Item (2) in Theorem 6.4 implies that

Taking X as the one-dimensional Brownian motion and \(a(x) =\delta _0(x)\) the Dirac at 0, the additive functional A becomes the local time of X at 0. Thus – at least informally since a is not bounded from below – Theorem 6.4 recovers the classical Vallois embedding, see e.g. [21, 64].

6.2 Examples

We now apply Theorem 6.4 to concrete Markov processes.

Example 6.9

(Brownian motion B and \(A_t= \int _0^t \exp (2B_s)\,\mathrm {d}s\)) Taking \(X_t = B_t\) as the one-dimensional Brownian motion, the additive functional \(\int _0^t \exp (2B_s)\,\mathrm {d}s\) has received much attention (see e.g. [50]) due to its applications in mathematical finance in the context of Asian options. Then \(B_{\tau _t} \overset{\mathrm {d}}{=}\log (Z_t)\), where Z is the Bessel process of index 0 for which the transition density is well known (see [50]). Figure 4 on page 25 shows the Root barriers for \(\mu = \delta _0\) and \(\nu =\mathrm {Uniform}[-1,1]\).

Example 6.10

(Symmetric stable Lévy process X and \(A_t=2t+ \int _0^t\mathrm {arctan}(4X_s)\,\mathrm {d}s\)) For smooth a with \(c_1\le a\le c_2\) for some \(c_1, c_2>0\), from [10, Theorem (2.5)], the time-changed process \(Y_t=X_{\tau _t}\) has absolutely continuous transition density with respect to the Lebesgue measure. Comparing the Root barriers for \(\mu = \mathrm {Uniform}[-1,1]\) and \(\nu = 0.75\cdot \mathrm {Beta}(2,2)\) for X and Y, we can see that the barrier in Fig. 5b on p. 26 is not symmetric, unlike the barrier for X in Fig. 3b. Due to the time change, the process Y runs faster past negative increments and more slowly through the positive parts which leads to hitting the barrier early on the negative parts and much later on the positive parts compared to X.

Notes

A \(\sigma \)-finite measure \(\xi \) is a reference measure for X if for all Borel B, (\(U(x,B) = 0\) for all x) \(\Leftrightarrow \) \(\xi (B)=0\).

The de la Vallée Poussin theorem is usually stated in the literature for finite measure spaces. That \(\xi \) is infinite is clearly not a problem here. If necessary, consider the finite measure \(f(y)\wedge 1 \xi (\mathrm {d}y)\); applying the theorem to that measure gives that for some superlinear G, \(\int G(f(y) \vee 1) f(y) \wedge 1 \xi (\mathrm {d}y)<+\infty \).

We would like to thank Oleg Reichmann and Christian Bayer for helpful conversations and numerical experiments.

A process X taking values in a Lie group G is called (left) Brownian motion in G if \(t\mapsto X_t\) is continuous, \(\left( X^{-1}_sX_{t+s}\right) _{t \ge 0}\) is independent of \((X_u)_{0\le u \le s}\), and \(\left( X^{-1}_sX_{t+s}\right) _{t \ge 0}\) and \((X_t)_{t\ge 0}\) are identical in law.

References

Barles, G., Souganidis, P.: Convergence of approximation schemes for fully nonlinear second order equations. Asympt. Anal. 4(3), 271–283 (1991)

Barrios, B., Figalli, A., Ros-Oton, X.: Free boundary regularity in the parabolic fractional obstacle problem. Commun. Pure Appl. Math. 71(10), 2129–2159 (2018)

Beiglböck, M., Cox, A.M.G., Huesmann, M.: Optimal transport and Skorokhod embedding. Inventiones mathematicae 208(2), 327–400 (2017)

Beiglböck, M., Cox, A.M.G., Huesmann, M.: The geometry of multi-marginal Skorokhod Embedding. arXiv preprint arXiv:1705.09505 (2017)

Beiglböck, M., Henry-Labordère, P., Touzi, N.: Monotone martingale transport plans and Skorokhod embedding. Stoch. Process. Appl. 127(9), 3005–3013 (2017)

Beiglböck, M., Nutz, M., Stebegg, F.: Fine Properties of the Optimal Skorokhod Embedding Problem. arXiv preprint arXiv:1903.03887 (2019)

Beiglböck, M., Nutz, M., Touzi, N.: Complete duality for martingale optimal transport on the line. Ann. Probab. 45(5), 3038–3074 (2017)

Bertoin, J.: Lévy Processes, vol. 121. Cambridge University Press (1998)

Bichteler, K., Gravereaux, J.-B., Jacod, J.: Malliavin Calculus for Processes with Jumps, vol. 2. Gordon and Breach, Singapore (1987)

Bichteler, K., Jacod, J.: Calcul de Malliavin pour les diffusions avec sauts: existence d’une densité dans le cas unidimensionnel. In: Séminaire de Probabilités XVII 1981/82, pp. 132–157. Springer (1983)

Blumenthal, R.M., Getoor, R.K.: Some theorems on stable processes. Trans. Am. Math. Soc. 95(2), 263–273 (1960)

Blumenthal, R.M., Getoor, R.K.: Markov Processes and Potential Theory. Courier Corporation (2007)

Blumenthal, R.M., Getoor, R.K., Ray, D.B.: On the distribution of first hits for the symmetric stable processes. Trans. Am. Math. Soc. 99(3), 540–554 (1961)

Bogdan, K., Byczkowski, T., Kulczycki, T., Ryznar, M., Song, R., Vondracek, Z.: Potential Analysis of Stable Processes and Its Extensions. Springer (2009)

Bonfiglioli, A., Lanconelli, E.: Subharmonic functions on Carnot groups. Mathematische Annalen 325(1), 97–122 (2003)

Caffarelli, L., Ros-Oton, X., Serra, J.: Obstacle problems for integro-differential operators: regularity of solutions and free boundaries. Inventiones mathematicae 208(3), 1155–1211 (2017)

Carlen, E.A., Kusuoka, S., Stroock, D.W.: Upper bounds for symmetric Markov transition functions. Annales de l’Institut Henri Poincaré, Probabilités et Statistiques 23(2, suppl.), 245–287 (1987)

Chacon, R.M.: Barrier Stopping Time and the Filling Scheme. PhD thesis, University of Washington (1986)

Chung, K.L., Walsh, J.B.: Markov Processes, Brownian Motion, and Time Symmetry, vol. 249. Springer (2006)

Cox, A.M.G., Peskir, G.: Embedding laws in diffusions by functions of time. Ann. Probab. 43(5), 2481–2510 (2015)

Cox, A.M.G., Hobson, D.G.: A unifying class of Skorokhod embeddings: connecting the Azéma-Yor and Vallois embeddings. Bernoulli 13, 114–130 (2007)

Cox, A.M.G., Obłój, J., Touzi, N.: The Root solution to the multi-marginal embedding problem: an optimal stopping and time-reversal approach. Probab. Theory Relat. Fields 173(1–2), 211–259 (2019)

Cox, A.M.G., Wang, J.: Root’s barrier: construction, optimality and applications to variance options. Ann. Appl. Probab. 23(3), 859–894 (2013)

Davis, P.J., Rabinowitz, P.: Methods of Numerical Integration. Courier Corporation (2007)

De Angelis, T.: From optimal stopping boundaries to Rost’s reversed barriers and the Skorokhod embedding. In: Annales de l’Institut Henri Poincaré, Probabilités et Statistiques, vol. 54, pp. 1098–1133. Institut Henri Poincaré (2018)

Dellacherie, C., Meyer, P.-A.: Probabilités et Potentiel. Hermann (1966)

Döring, L., Gonon, L., Prömel, D.J., Reichmann, O.: On Skorokhod embeddings and Poisson equations. Ann. Appl. Probab. 29(4), 2302–2337 (2019)

Dupire, B.: Arbitrage bounds for volatility derivatives as free boundary problem. Presentation at PDE and Mathematical Finance. KTH, Stockholm (2005)

Dynkin, E.B.: Markov Processes. Springer (1965)

Falkner, N.: The distribution of Brownian motion in \({\mathbb {R}}^n\) at a natural stopping time. Adv. Math. 40(2), 97–127 (1981)

Falkner, N.: Stopped distributions for Markov processes in duality. Zeitschrift für Wahrscheinlichkeitstheorie und Verwandte Gebiete 62(1), 43–51 (1983)

Falkner, N., Fitzsimmons, P.J.: Stopping distributions for right processes. Probab. Theory Relat. Fields 89(3), 301–318 (1991)

Gassiat, P., Mijatović, A., Oberhauser, H.: An integral equation for Root’s barrier and the generation of Brownian increments. Ann. Appl. Probab. 25(4), 2039–2065 (2015)

Gassiat, P., Oberhauser, H., dos Reis, G.: Root’s barrier, viscosity solutions of obstacle problems and reflected FBSDEs. Stoch. Process. Appl. 125(12), 4601–4631 (2015)

Gaveau, B.: Principe de moindre action, propagation de la chaleur et estimées sous elliptiques sur certains groupes nilpotents. Acta Mathematica 139, 95–153 (1977)

Getoor, R.K., Sharpe, M.J.: Excursions of dual processes. Adv. Math. 45(3), 259–309 (1982)

Ghoussoub, N., Kim, Y.-H., Palmer, A.Z.: A solution to the Monge transport problem for Brownian martingales. arXiv preprint arXiv:1903.00527 (2019)

Ghoussoub, N., Kim, Y.-H., Palmer, A.Z.: PDE methods for optimal Skorokhod embeddings. Calc. Var. Part. Differ. Equ. 58(3), 113 (2019)

Guo, G., Tan, X., Touzi, N.: On the monotonicity principle of optimal Skorokhod embedding problem. SIAM J. Control Optim. 54(5), 2478–2489 (2016)

Guo, G., Tan, X., Touzi, N.: Optimal Skorokhod embedding under finitely many marginal constraints. SIAM J. Control Optim. 54(4), 2174–2201 (2016)

Hartman, P., Wintner, A.: On the infinitesimal generators of integral convolutions. Am. J. Math. 64(1), 273–298 (1942)

Hobson, D.: The Skorokhod embedding problem and model-independent bounds for option prices. In: Paris–Princeton Lectures on Mathematical Finance 2010, pp. 267–318. Springer (2011)

Hörmander, L.: Hypoelliptic second order differential equations. Acta Math. 119(1), 147–171 (1967)

Jakobsen, E.R., Karlsen, K.H.: Continuous dependence estimates for viscosity solutions of integro-PDEs. J. Differ. Equ. 212(2), 278–318 (2005)

Kinderlehrer, D., Stampacchia, G.: An Introduction to Variational Inequalities and Their Applications, vol. 31. SIAM (1980)

Kyprianou, A.E., Pardo, J.C., Watson, A.R.: Hitting distributions of \(\alpha \)-stable processes via path censoring and self-similarity. Ann. Probab. 42(1), 398–430 (2014)

Kyprianou, A.E., Watson, A.R.: Potentials of stable processes. In: Séminaire de Probabilités XLVI, pp. 333–343. Springer (2014)

Lévy, P.: Wiener’s Random Function, and Other Laplacian Random Functions. In: Proceedings of the Second Berkeley Symposium on Mathematical Statistics and Probability, pp. 171–187. University of California Press, Berkeley, CA (1951)

Ma, Z.M., Röckner, M.: Introduction to the Theory of (nonsymmetric) Dirichlet Forms. Universitext. Springer, Berlin (1992)

Matsumoto, H., Yor, M.: Exponential functionals of Brownian motion, I: probability laws at fixed time. Probab. Surv. 2, 312–347 (2005)

Obłój, J.: The Skorokhod embedding problem and its offspring. Probab. Surv. 1, 321–392 (2004)

Petrosyan, A., Shahgholian, H., Uraltseva, N.N.: Regularity of Free Boundaries in Obstacle-Type Problems, vol. 136. American Mathematical Society (2012)

Picard, J.: On the existence of smooth densities for jump processes. Probab. Theory Relat. Fields 105(4), 481–511 (1996)

Révész, P.: Random Walk in Random and Non-random Environments. World Scientific (2005)

Revuz, D.: Mesures associées aux fonctionnelles additives de Markov. I. Trans. Am. Math. Soc. 148(2), 501–531 (1970)

Richard, A., Tan, X., Touzi, N.: On the Root solution to the Skorokhod embedding problem given full marginals. arXiv preprint arXiv:1810.10048 (2018)

Root, D.H.: The existence of certain stopping times on Brownian motion. Ann. Math. Stat. 40(2), 715–718 (1969)

Rost, H.: Die Stoppverteilungen eines Markoff-Prozesses mit lokalendlichem Potential. Manuscripta mathematica 3(4), 321–329 (1970)

Rost, H.: The stopping distributions of a Markov process. Inventiones mathematicae 14(1), 1–16 (1971)

Rost, H.: Skorokhod stopping times of minimal variance. In: Séminaire de Probabilités X Université de Strasbourg, pp. 194–208. Springer (1976)

Shiryaev, A.N.: Optimal Stopping Rules, vol. 8. Springer (2007)

Smythe, R.T., Walsh, J.B.: The existence of dual processes. Inventiones mathematicae 19(2), 113–148 (1973)

Tankov, P., Cont, R.: Financial Modelling with Jump Processes. Chapman and Hall/CRC (2003)

Vallois, P.: Quelques inégalités avec le temps local en zéro du mouvement brownien. Stoch. Process. Appl. 41(1), 117–155 (1992)

Wittmann, R.: Natural densities of Markov transition probabilities. Probab. Theory Relat. Fields 73(1), 1–10 (1986)

Acknowledgements

PG acknowledges the support of the ANR, via the ANR Project ANR-16-CE40-0020-01. HO is grateful for support from the EPSRC Grant “Datasig” [EP/S026347/1], the Oxford-Man Institute of Quantitative Finance, and the Alan Turing Institute. CZ was supported by the EPSRC Grant EP/N509711/1, via the Project No. 1941799.

Appendices

Appendix A: Basic definitions from potential theory

Below, we state some definitions taken from [12, Chapters 0 and 1]:

Definition A.1

(Universally measurable sets and nearly Borel sets) Given a Borel \(\sigma \)-algebra \(\mathcal {E}\), define the following \(\sigma \)-algebras on E:

(1) the \(\sigma \)-algebra of universally measurable sets \(\mathcal {E}^*= \bigcap _{\mu \text { finite}} \mathcal {E}^\mu \), given as the intersection of the completions \(\mathcal {E}^\mu \) of \(\mathcal {E}\) with respect to finite measures \(\mu \),

(2) the \(\sigma \)-algebra \(\mathcal {E}^n\) of nearly Borel sets. We call a set B nearly Borel (with respect to X) if for each finite measure \(\mu \) on E, there exist Borel sets \(B_1 \subset B \subset B_2\) such that \({\mathbb {P}}^\mu (\exists t \ge 0:~X_t \in B_2 \setminus B_1) = 0\).

Definition A.2

(Standard process) On a filtered probability space \(\left( \Omega , {\mathcal {F}}, ({\mathcal {F}}_t)_{t \ge 0}, ({\mathbb {P}}^x)_{x \in E}\right) \), the stochastic process \(X = (X_t)_{t\ge 0}\) with shift operator \(\theta _t\) is called a Markov process with augmented state space \((E_\Delta , \mathcal {E}_\Delta )\), if for all \(s,t\ge 0\), \(x\in E_\Delta \), and \(B\in \mathcal {E}_\Delta \)

(1) \(X_t\) is \({\mathcal {F}}_t\)-\(\mathcal {E}_\Delta \)-measurable,

(2) the map \(x\mapsto {\mathbb {P}}^x(X_t\in B)\) from \(E_\Delta \) to [0, 1] is \(\mathcal {E}_\Delta \)-measurable and \({\mathbb {P}}^\Delta (X_0 = \Delta )=1\),

(3) \(X_t\circ \theta _s = X_{t+s}\), and

(4) \({\mathbb {P}}^x(X_{t+s}\in B|{\mathcal {F}}_t) = {\mathbb {P}}^{X_t} (X_s\in B)\).

Furthermore, it is called a standard process, if additionally

(1) \(({\mathcal {F}}_t)_{t\ge 0}\) is right-continuous and \({\mathcal {F}}_t\) is complete with respect to the family of measures \(\{{\mathbb {P}}^x, ~ x\in E\}\),

(2) the sample paths \(t\mapsto X_t(\omega )\) are càdlàg a.s.,

(3) X satisfies the strong Markov property, i.e. \(X_T\) is \({\mathcal {F}}_T\)-\(\mathcal {E}^*_\Delta \)-measurable and for all bounded measurable functions f and \(({\mathcal {F}}_t)_{t\ge 0}\)-stopping times T we have \({\mathbb {E}}^x[f(X_{T+t})] = {\mathbb {E}}^x\big [{\mathbb {E}}^{X_T}[f(X_t)]\big ]\) for all \(x\in E\) and \(t>0\), and

(4) X is quasi-left-continuous on \([0,\zeta )\), i.e. for any increasing sequence \((T_n)_{n\in {\mathbb {N}}}\) of \(({\mathcal {F}}_t)_{t\ge 0}\)-stopping times such that \(T_n\uparrow T\) almost surely for a stopping time T, it holds that \(X_{T_n}\rightarrow X_T\) almost surely on \(\{T< \zeta \}\).

Semigroup and potential. In Table 2 we let \(x\in E\), \(A\in \mathcal {E}^n\), \(I\subseteq [0,\infty )\), \(f:E\rightarrow {\mathbb {R}}\) be a \(\mathcal {E}^*\)-measurable function (extended to \(E_\Delta \) by \(f(\Delta )=0\)), \(\mu \) be a Borel measure on E, and T be a stopping time.

The potential \(\mu U(A)\) of a measure \(\mu \) on a set A measures the expected time that X spends in A over its lifetime when started from the initial distribution \(\mu \); on the other hand, \(Uf(x)\) is the expected integral of f along the path of X over its entire lifetime when started in x. This explains the notation for the two different actions \(\mu U\) and Uf of the potential kernel: the measure \(\mu \) acts from the left because we start in \(\mu \), and the function f acts from the right because we end in f.
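The two actions of the potential kernel can be illustrated in a discrete-time, finite-state analogue. The sketch below is not the continuous-time setting of this appendix: it assumes a hypothetical sub-stochastic matrix `P` (a simple random walk on three states, killed on exit) and replaces the time integral by the series \(U = \sum _{n \ge 0} P^n\), truncated after `n_terms` steps. The function names are illustrative only.

```python
def potential_of_function(P, f, n_terms=200):
    """Approximate (U f)(x) = sum_{n>=0} (P^n f)(x): kernel acting on a function."""
    total = [0.0] * len(f)
    term = list(f)  # current term P^n f, starting with n = 0
    for _ in range(n_terms):
        total = [t + s for t, s in zip(total, term)]
        term = [sum(p * s for p, s in zip(row, term)) for row in P]
    return total


def potential_of_measure(P, mu, n_terms=200):
    """Approximate (mu U)(y) = sum_{n>=0} (mu P^n)(y): kernel acting on a measure."""
    size = len(mu)
    total = [0.0] * size
    term = list(mu)  # current term mu P^n, starting with n = 0
    for _ in range(n_terms):
        total = [t + s for t, s in zip(total, term)]
        term = [sum(term[x] * P[x][y] for x in range(size)) for y in range(size)]
    return total


# Hypothetical example: simple random walk on {1, 2, 3}, killed when it leaves.
P = [[0.0, 0.5, 0.0],
     [0.5, 0.0, 0.5],
     [0.0, 0.5, 0.0]]

f = [1.0, 1.0, 1.0]   # U f is then the expected lifetime from each state
mu = [0.0, 1.0, 0.0]  # start in the middle state

Uf = potential_of_function(P, f)   # approximately [3, 4, 3]
muU = potential_of_measure(P, mu)  # approximately [1, 2, 1]
```

In this toy example the pairing of \(\mu U\) with f and the pairing of \(\mu \) with Uf agree, which is the discrete counterpart of starting in \(\mu \) and ending in f.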

Definition A.3

(Excessive functions and measures) A non-negative \(\mathcal {E}^*\)-measurable function \(f:E\rightarrow {\mathbb {R}}_+ \cup \{+\infty \}\) is called excessive if \(P_t f(x)\le f(x)\) for all \(x\in E\) and \(t \ge 0\), and \(\lim _{t \downarrow 0}P_tf=f\) pointwise.

Analogously, a Borel measure \(\mu \) is called excessive if it is \(\sigma \)-finite and \(\mu P_t(A)\le \mu (A)\) for all \(A\in \mathcal {E}\) and \( t\ge 0\).
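As a rough illustration of Definition A.3, the inequalities \(P_t f \le f\) and \(\mu P_t \le \mu \) can be checked directly in a discrete-time, finite-state analogue. The sketch below assumes a single hypothetical one-step kernel `P` in place of the whole semigroup, so the continuity condition \(\lim _{t \downarrow 0}P_tf=f\) has no counterpart here; the function names and the tolerance `eps` are illustrative choices.

```python
def is_excessive_function(P, f, eps=1e-12):
    """Check (P f)(x) <= f(x) for every state x (discrete analogue of P_t f <= f)."""
    Pf = [sum(p * v for p, v in zip(row, f)) for row in P]
    return all(pf <= fx + eps for pf, fx in zip(Pf, f))


def is_excessive_measure(P, mu, eps=1e-12):
    """Check (mu P)(y) <= mu(y) for every state y (discrete analogue of mu P_t <= mu)."""
    size = len(mu)
    muP = [sum(mu[x] * P[x][y] for x in range(size)) for y in range(size)]
    return all(mp <= my + eps for mp, my in zip(muP, mu))


# Hypothetical example: simple random walk on three states, killed on exit.
P = [[0.0, 0.5, 0.0],
     [0.5, 0.0, 0.5],
     [0.0, 0.5, 0.0]]

constant = [1.0, 1.0, 1.0]  # P f = [0.5, 1, 0.5] <= f, so this passes
spike = [0.0, 1.0, 0.0]     # P f = [0.5, 0, 0.5] exceeds f at the outer states

print(is_excessive_function(P, constant))  # True
print(is_excessive_function(P, spike))     # False
print(is_excessive_measure(P, constant))   # True
```

Because the example kernel is symmetric, the counting measure passes the measure test for the same reason that the constant function passes the function test.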

Fine topology. In Table 3, the set A denotes a nearly Borel set.

Intuitively, the polar sets are those sets which are never visited at positive times by the process, while semipolar sets are those sets which are almost surely visited only countably many times by the process. Every polar set is semipolar, but the reverse implication is not true in general.

Appendix B: Properties of the réduite

Definition B.1

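(Réduite) Given a Markov semigroup \((P_t)\) associated to a standard process, and given \(h \ge 0\) Borel-measurable and finely lower semicontinuous, we define the réduite (or smallest excessive majorant) of h by

$$\begin{aligned} {\mathrm{Red}}_P(h) = \inf \left\{ g :~ g \ge h, ~ g \text { is excessive for } (P_t) \right\} . \end{aligned}$$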

Proposition B.2

(Shiryaev, Lemma 3 and Theorem 1 in [61, Chapter 3]) Let X be a standard process with semigroup \((P_t)\) and \(h\ge 0\) finely lower semicontinuous. Then:

(1) \({\mathrm{Red}}_P(h) \) is excessive.

(2) For all \(x \in E\), it holds that

$$\begin{aligned} {\mathrm{Red}}_P(h)(x) = \sup _{\tau } {\mathbb {E}}^x\left[ h(X_{\tau }) \mathbb {1}_{\{\tau <\zeta \}}\right] , \end{aligned}$$

where the supremum ranges over stopping times \(\tau \) taking values in \([0,\zeta )\).

(3) Define for \(\delta >0\) and \(g \ge 0\) Borel-measurable, \(R_\delta (g) = g \vee P_{\delta } g.\) Then it holds that

$$\begin{aligned} {\mathrm{Red}}_P(h) = \lim _{n \rightarrow \infty } \lim _{N \rightarrow \infty } R_{2^{-n}}^N(h). \end{aligned}$$
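The inner limit in item (3) can be sketched numerically for one fixed step size. The following is a minimal illustration under simplifying assumptions, not the construction used in this paper: the state space is finite, a hypothetical transition matrix `P` stands in for \(P_\delta \) at a single \(\delta \), and the outer limit \(n \rightarrow \infty \) is not represented. The names `apply_kernel` and `reduite_fixed_step` are illustrative.

```python
def apply_kernel(P, g):
    """Return P g, i.e. (P g)(x) = sum_y P[x][y] * g(y)."""
    return [sum(p * v for p, v in zip(row, g)) for row in P]


def reduite_fixed_step(P, h, tol=1e-12, max_iter=10_000):
    """Iterate R(g) = max(g, P g) starting from h until the update is below tol."""
    g = list(h)
    for _ in range(max_iter):
        Pg = apply_kernel(P, g)
        new_g = [max(a, b) for a, b in zip(g, Pg)]
        if max(abs(a - b) for a, b in zip(new_g, g)) < tol:
            return new_g
        g = new_g
    return g  # not converged within max_iter; returned as a best effort


# Hypothetical example: simple random walk on {0,...,4}, absorbed at 0 and 4.
P = [[1.0, 0.0, 0.0, 0.0, 0.0],
     [0.5, 0.0, 0.5, 0.0, 0.0],
     [0.0, 0.5, 0.0, 0.5, 0.0],
     [0.0, 0.0, 0.5, 0.0, 0.5],
     [0.0, 0.0, 0.0, 0.0, 1.0]]
h = [0.0, 0.0, 0.0, 1.0, 0.0]  # obstacle: reward 1 at state 3 only

g = reduite_fixed_step(P, h)   # approximately [0, 1/3, 2/3, 1, 0]
```

For this walk the limit is the smallest concave majorant of h that vanishes at the absorbing endpoints, and by item (2) its value at a state can also be read as the value of the optimal stopping problem with reward h started there.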

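Given a (positive) Borel measure \(\gamma \), we similarly define

$$\begin{aligned} {\mathrm{Red}}_P(\gamma ) = \inf \left\{ \eta :~ \eta \ge \gamma , ~ \eta \text { is an excessive measure for } (P_t) \right\} \end{aligned}$$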

(note that the infimum above is the infimum of a family of measures, namely the largest measure dominated by all measures in the family).

Lemma B.3

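Assume that X and \(\widehat{X}\) are standard processes in strong duality with respect to a reference measure \(\xi \). Let h be finely lower semi-continuous and \(\gamma (\mathrm {d}x) = h(x) \xi (\mathrm {d}x)\). Then

$$\begin{aligned} {\mathrm{Red}}_{\widehat{P}}(\gamma )(\mathrm {d}x) = {\mathrm{Red}}_P(h)(x) \, \xi (\mathrm {d}x). \end{aligned}$$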

Proof

It is easy to see that \({\mathrm{Red}}_{\widehat{P}}(\gamma )\) is a \(\widehat{P}\)-excessive measure, and it therefore admits a P-excessive density g. Since \({{\mathrm{Red}}}_{\widehat{P}}(\gamma ) \ge \gamma \), it holds that \(g \ge h\) \(\xi \)-a.e. We then actually have the inequality everywhere since

using the semicontinuity of h. Therefore \(g \ge {\mathrm{Red}}_P(h)\).