Abstract

Assistive robots operate in complex environments and in the presence of human beings, but the interaction between them can be affected by several factors that may lead to undesired outcomes: wrong sensor readings, unexpected environmental conditions, and algorithmic errors are just a few examples. When the safety of the user must be guaranteed, a feasible solution is to rely on a human-in-the-loop approach, e.g., to monitor whether the robot performs a wrong action during task execution or whether environmental conditions affect safety during the human-robot interaction, and to provide feedback accordingly. The present paper proposes a human-in-the-loop framework to enable safe autonomous navigation of an electric-powered, sensorized (smart) wheelchair. During wheelchair navigation towards a desired destination in an indoor scenario, possible problems (e.g., obstacles) along the trajectory elicit electroencephalography (EEG) potentials when noticed by the user. These potentials can be used as additional inputs to the navigation algorithm in order to modify the trajectory planning and preserve safety. The framework has been preliminarily tested using a wheelchair simulator implemented in the ROS and Gazebo environments: EEG signals from a benchmark dataset known in the literature were classified, passed to a custom simulation node, and made available to the navigation stack to perform obstacle avoidance.


1 Introduction

Human-robot cooperation and interaction have grown significantly in recent years to support people with reduced motor skills, from both the academic and the industrial points of view. In particular, real-time feedback from the human to the robot is an emerging requirement, with the main goal of ensuring human safety. In cooperative tasks, such feedback makes it possible to handle environmental factors that may negatively affect cooperative performance, and possibly to mitigate the effects of unexpected factors, as investigated in the literature (Iturrate et al. 2010, 2012; Ferracuti et al. 2013; Zhang et al. 2015; Salazar-Gomez et al. 2017; Foresi et al. 2019). Wrong sensor readings, unexpected environmental conditions or algorithmic errors are just some of the factors that can expose users to serious safety risks. For these reasons, it is fundamental that the human operator is included within the robot control loop, so that she/he can modify the robot’s decisions during human-robot interaction if needed (Iturrate et al. 2009). Several works, such as Behncke et al. (2018) and Mao et al. (2017), have investigated these kinds of applications by considering real-time feedback about the surrounding environment, as well as the robot control architecture and behavior, via electroencephalographic (EEG) signals. In this context, the predictive capability of event-related potentials (ERPs) and error-related potentials (ErrPs) for early detection of the driver’s intention in real assisted driving systems has been investigated in the literature. Specifically, several studies have collectively identified neurophysiological patterns of sensory perception and processing that characterize driver emergency braking before the action takes place (Haghani et al. 2020). In Haufe et al. (2011), eighteen healthy participants were asked to drive a virtual racing car.
The ERPs were elicited and the signatures preceding executed emergency braking were analyzed, revealing the capability to recognize the driver’s intention to brake before any action becomes observable. The results of this study indicate that, using EEG and electromyography (EMG), the driver’s intention to perform emergency braking can be detected 130 ms earlier than from the car pedal responses. In Haufe et al. (2014), in a real driving situation, the authors showed that the amplitudes of brain rhythms reveal patterns specific to emergency braking situations, indicating the possibility of performing fast detection of forced emergency braking based on EEG. In Lee et al. (2017), the proposed system was based on recurrent convolutional neural networks and tested on 14 participants, recognizing the braking intention 380 ms earlier than the brake pedal based on early ERP patterns. In Hernández et al. (2018), the authors also showed the feasibility of incorporating recognizable driver’s bioelectrical responses into advanced driver-assistance systems to carry out early detection of emergency braking situations, which could be useful to reduce car accidents. In Khaliliardali et al. (2019), the authors presented an EEG-based decoder of brain states preceding movements performed in response to traffic lights in two experiments, in a car simulator and in a real car. The experimental results confirmed the presence of anticipatory slow cortical potentials in response to traffic lights for accelerating and braking actions. The anticipatory capability of slow cortical potentials of specific actions, namely braking and accelerating, was also investigated in Khaliliardali et al. (2015). The authors showed that the centro-medial anticipatory potentials are observed as early as 320 ± 200 ms before the action. In Kim et al. (2014), the authors studied the brain electrical activity in diverse braking situations (soft, abrupt, and emergency) during simulated driving, and their results showed neural correlates, in particular movement-related potentials (MRP) and event-related desynchronization (ERD), that can be used to distinguish between different types of braking intentions. In Nguyen and Chung (2019), the authors developed a system to detect the braking intention of drivers in emergency situations using EEG signals and motion-sensing data from a custom-designed EEG headset during simulated driving. Experimental results indicated the possibility of detecting the emergency braking intention approximately 600 ms before the onset of the executed braking event, with high accuracy. Thus, the results demonstrated the feasibility of developing a brain-controlled vehicle for real-world applications.
Other approaches for detecting emergency braking intention for brain-controlled vehicles by interpreting the EEG signals of drivers were proposed in Teng et al. (2018), Teng and Bi (2017), Teng et al. (2015) and Gougeh et al. (2021). The experimental results shown in Teng and Bi (2017) and Teng et al. (2015) indicated that the system could issue a braking command 400 ms earlier than drivers, whereas in Gougeh et al. (2021) the system was able to classify three classes with high accuracy and commands could be predicted 500 ms earlier. In Vecchiato et al. (2019), it was established that the dorso-mesial premotor cortex is involved in the preparation of foot movement for braking and acceleration actions. The error (related) negativity (Ne/ERN) is an event-related potential in the electroencephalogram that correlates with error processing. Its appearance before terminal external error information suggests that the Ne/ERN is indicative of predictive processes in the evaluation of errors. In Joch et al. (2017), the authors showed a significant negative deflection in the average EEG curves of the error trials peaking at 250 ms before error feedback. They concluded that the Ne/ERN might indicate a predicted mismatch between a desired action outcome and the future outcome. Brain-computer interfaces (BCIs) can be successfully applied in this context (Alzahab et al. 2021), and Zhang et al. (2013, 2015) presented the first online BCI system tested in a real car to detect error-related potentials (ErrPs), as a first step in transferring error-related BCI technology from laboratory studies to real-world driving tasks. The studies presented an EEG-based BCI that decodes error-related brain activity, showing whether the driver agrees with the assistance provided by the vehicle. Such information can be used, e.g., to predict the driver’s intended turning direction before reaching road intersections.
Furthermore, the authors suggested that such error-related activity can be used not only to infer the driver’s immediate response to the assistance, but also to gradually adapt the driving assistance for future occasions.

In addition, the literature has investigated the role of ErrPs as a means to improve or correct the misbehavior introduced during the operation of a robot (Omedes et al. 2015). Error potentials have been shown to be elicited when the user’s expected outcome differs from the actual outcome (Falkenstein et al. 2000) and have already been used to correct the commands executed by a robot, to adapt classification, or as feedback to other robots. An interesting application is the use of error-related potentials in a semi-automatic wheelchair system, as proposed in Perrin et al. (2010). The authors performed an experiment in which the participants monitored the navigation of a robotic wheelchair in a realistic simulation as well as in a real environment. Reportedly, the ErrP was elicited when the semi-automatic wheelchair made a wrong move that prevented it from reaching a predefined target.

The aim of the present paper is to develop a human-in-the-loop framework for accurate autonomous navigation of an assistive mobile platform, while simultaneously accounting for unexpected and undetected errors by using EEG signals as feedback. In detail, a specific assistive mobile robot is investigated, adding the possibility of modifying its pre-planned navigation when it receives a message from the human operator. The robot is a smart wheelchair capable of performing semi-autonomous navigation, while human-robot communication is obtained via BCI: this device is especially useful for people who have very limited mobility and whose physical interaction with the wheelchair must be minimal. When the user notices the presence of an obstacle not detected by the sensors installed on the wheelchair, the EEG signals generated in her/his brain are recorded by the BCI system, as investigated in Ferracuti et al. (2020) and Ciabattoni et al. (2021). Consequently, an alert message is sent to the mobile robot in order to redefine the navigation task at the path planning level. The possibility for the user to participate in the human-robot cooperation task can be generalized to face all those environmental changes that the system may not be able to manage, as well as to correct possible erroneous robot decisions due to software and/or hardware problems. The size and shape of an object undetected by the sensor set, its distance from the wheelchair and the relative speed, as well as the EEG signal classification and communication speed, all play an important role.

The present paper proposes the architecture of the human-in-the-loop navigation, together with preliminary results. In detail, the proposed framework has been tested by using a simulator, implemented in ROS and Gazebo environments, which replicates a smart wheelchair model, together with sensors and navigation capabilities. A classifier was then designed and tested with EEG signals from a benchmark known in the literature, in order to provide information to the navigation stack. A simulation node was finally developed in order to collect the output of the classifier, and modify the trajectory tracking of the simulated wheelchair in response to the EEG signals classified.

The paper is organized as follows. The proposed approach is introduced in Sect. 2, focusing mainly on the robot trajectory planning and the EEG methods for human-robot interaction. The hardware of the system and the preliminary results of the proposed approach are discussed in Sect. 3. Conclusions and future improvements are given in Sect. 4.

2 Proposed approach

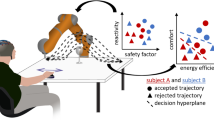

Assistive robots, employed to support the mobility of impaired users, are usually equipped with several sensors, used both for navigation and for the detection of possible obstacles on the way. However, in some cases these sensors cannot correctly detect objects (e.g., holes in the ground, stairs and small objects are often missed by laser rangefinders). The proposed idea is to include human observation within the robot control loop, by recording EEG signals to detect possible changes in the brain response as a result of a visual sensory event, and sending feedback to the robot. The proposed human-in-the-loop approach is sketched in Fig. 1. The ROS (Robot Operating System) ecosystem was used as a base to build the proposed solution, due to its flexibility and its wide range of tools and libraries for sensing, robot control and user interfaces. A description of the core modules of the proposed approach is given in the following subsections.

2.1 Robot navigation

The main goal of the navigation algorithm is to determine the global and local trajectories that the robot (the smart wheelchair in our case) follows to move to a desired point, defined as navigation goal, from the starting position, considering possible obstacles not included in the maps (Cavanini et al. 2017). The navigation task performed by the smart wheelchair is mainly composed of three different steps: localization, map building and path planning (Bonci et al. 2005; Siciliano and Khatib 2016), with the possibility to detect and handle the navigation sensor faults (Ippoliti et al. 2005). Each step is briefly described in the following, and was technically performed via ROS modules.

2.1.1 Localization

The estimation of the current position in the environment is based on the combination of an Unscented Kalman Filter (UKF) and Adaptive Monte Carlo Localization (AMCL). The UKF is a recursive filter that takes as input a set of noisy proprioceptive measurements (e.g., inertial and odometric measurements) and returns an estimate of the robot position by exploiting knowledge of the nonlinear model in combination with the Unscented Transformation (UT), namely a method for calculating the statistics of a random variable that undergoes a nonlinear transformation (Wan and Van Der Merwe 2000). The estimate of the robot position provided by the UKF is then fed to the AMCL, which exploits recursive Bayesian estimation and a particle filter to determine the actual robot pose within the map, updated by using exteroceptive measurements (e.g., from the laser rangefinder). When the smart wheelchair moves and perceives changes in the outside world, the algorithm uses the “Importance Sampling” method (Nordlund and Gustafsson 2001), a technique that makes it possible to determine the properties of a particular distribution starting from samples generated by a distribution different from the one of interest. Monte Carlo localization can be summarized in two phases. In the first phase, when the robot moves, the algorithm generates N new particles that approximate the position after the movement just made. Each particle, containing the expected position and orientation of the wheelchair, is randomly generated by choosing from the set of samples determined at the previous instant, where the update is determined by the system model via a weighting factor. In the second phase, the sensory readings are included in the weighting process to account for newly available information.
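The two phases can be illustrated with a minimal one-dimensional particle filter. This is only a sketch under simplifying assumptions, not the AMCL implementation used on the wheelchair: the corridor geometry, the single range reading to a wall, the noise levels and the names `mcl_step` and `gaussian_likelihood` are all hypothetical.

```python
import math
import random


def gaussian_likelihood(error, sigma):
    """Unnormalized Gaussian likelihood of a measurement error."""
    return math.exp(-0.5 * (error / sigma) ** 2)


def mcl_step(particles, control, measurement, wall_position,
             motion_sigma=0.05, sensor_sigma=0.1, rng=random):
    """One predict/weight/resample cycle of a 1-D particle filter.

    particles     -- hypothesised positions along a corridor (m)
    control       -- commanded displacement since the last step (m)
    measurement   -- range reading to a wall located at wall_position (m)
    """
    # Phase 1 (prediction): move every particle with the motion model plus noise.
    predicted = [p + control + rng.gauss(0.0, motion_sigma) for p in particles]

    # Phase 2 (update): weight each particle by how well it explains the reading.
    weights = [gaussian_likelihood(measurement - (wall_position - p), sensor_sigma)
               for p in predicted]
    total = sum(weights)
    if total == 0.0:
        # Degenerate case: keep the prediction rather than divide by zero.
        return predicted
    weights = [w / total for w in weights]

    # Importance resampling: draw N particles with probability given by the weights.
    return rng.choices(predicted, weights=weights, k=len(predicted))
```

Calling `mcl_step` repeatedly with the commanded displacement and the latest range reading concentrates the particles around the true position; the mean of the particle set can then serve as the position estimate.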

2.1.2 Mapping

The mapping step consists of building a representation of the environment where the wheelchair operates, which should contain enough information to let it accomplish the task of interest. More specifically, it is a preliminary step during which the map is built. Indeed, as previously seen, localization is performed via AMCL, which uses a particle filter to track the pose of a robot against a known map. An a priori map of the environment thus has to be provided to the smart wheelchair before semi-autonomous navigation can be performed. The adopted solution exploits the laser rangefinder, positioned on a fixed support at the base of the smart wheelchair, both to generate the map during the mapping step and to perform obstacle avoidance (as described later) during the path planning step. The map is acquired by manually driving the wheelchair within the environment via the joystick interface, while the laser acquires distance measurements for map reconstruction. The map obtained in this way is static and does not contain information on unexpected (e.g., moving) obstacles.

2.1.3 Path planning

Path planning involves the definition of the path to take in order to reach a desired goal location, given the wheelchair position within the map, while taking into account possible obstacles. The applied navigation algorithm is the Dynamic Window Approach (DWA) (Ogren and Leonard 2005). The main feature of DWA is that the control commands to the wheelchair are selected directly in the velocity space (linear and rotational). This space is limited by constraints that directly influence the behavior of the wheelchair: some of these constraints are imposed by the obstacles in the environment, while others come from the technical specifications of the wheelchair, such as its maximum speed and acceleration. Each admissible velocity is evaluated by a function that takes into account the distance between the corresponding trajectory and the nearest obstacle and returns a score; the best-scoring trajectory is then selected.
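The selection in velocity space can be sketched as follows. This is a simplified illustration, not the planner running on the wheelchair: the unicycle motion model, the limits, the score weights and the names `simulate` and `dwa_select` are assumptions made for the example.

```python
import math


def simulate(pose, v, w, horizon=2.0, dt=0.1):
    """Forward-simulate a unicycle model and return the visited (x, y, yaw) poses."""
    x, y, yaw = pose
    trajectory = []
    for _ in range(int(round(horizon / dt))):
        x += v * math.cos(yaw) * dt
        y += v * math.sin(yaw) * dt
        yaw += w * dt
        trajectory.append((x, y, yaw))
    return trajectory


def dwa_select(pose, current, goal, obstacles, v_max=0.5, w_max=1.0,
               a_max=0.5, alpha_max=2.0, dt=0.1, robot_radius=0.4, samples=11):
    """Pick the (v, w) pair with the best score inside the dynamic window."""
    v0, w0 = current
    # Dynamic window: velocities reachable within one control period,
    # clipped to the platform limits (hypothetical values).
    v_lo, v_hi = max(0.0, v0 - a_max * dt), min(v_max, v0 + a_max * dt)
    w_lo, w_hi = max(-w_max, w0 - alpha_max * dt), min(w_max, w0 + alpha_max * dt)

    best, best_score = (0.0, 0.0), -math.inf  # stop if nothing is admissible
    for i in range(samples):
        v = v_lo + (v_hi - v_lo) * i / (samples - 1)
        for j in range(samples):
            w = w_lo + (w_hi - w_lo) * j / (samples - 1)
            trajectory = simulate(pose, v, w)
            clearance = min((math.hypot(x - ox, y - oy)
                             for x, y, _ in trajectory for ox, oy in obstacles),
                            default=math.inf)
            if clearance <= robot_radius:
                continue  # trajectory collides: velocity pair not admissible
            x, y, _ = trajectory[-1]
            goal_term = -math.hypot(goal[0] - x, goal[1] - y)
            # Illustrative weighting of goal progress, clearance and speed.
            score = goal_term + 0.2 * min(clearance, 2.0) + 0.1 * v
            if score > best_score:
                best, best_score = (v, w), score
    return best
```

In this sketch an obstacle is a point `(x, y)` in the map, so a virtual obstacle added to the list is treated exactly like a sensed one; when every sampled trajectory collides, the function falls back to a stop command.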

2.2 EEG-based feedback

In the proposed human-in-the-loop approach, the wheelchair operator can interact with it when she/he observes a problem during the navigation task (e.g., the wheelchair is about to run into something unexpected, such as a hole or an obstacle not detected by the laser rangefinder). In detail, the system allows the operator to send a signal to the wheelchair in order to change its predefined path. The main problems to be solved are how to provide the EEG feedback to the wheelchair, and how to modify the predefined path.

2.2.1 BCI trigger

Brain-computer interfaces are able to translate the brain activity of the user into specific signals, which may be used for communicating or controlling external devices. Specific algorithms are intended to detect the user’s intentions from EEG signals and even predict the action itself. As already stated in Sect. 1, the predictive capability of ERPs and ErrPs for early detection of the driver’s intention in real assisted driving systems can be exploited to correct the erroneous actions of assisted vehicles: the presence of obstacles in the path originally chosen by the smart wheelchair triggers EEG potentials, which are recorded by the BCI system and sent as feedback to avoid the undetected obstacles. The ERP and ErrP waveforms typically arise in the first 600 ms after the event. The ErrP wave, for example, is detectable at approximately 500 ms after the user recognizes the error, and it is characterized by a large positive peak, preceded and followed by two negative peaks, as shown in Fig. 2.

Fig. 2 Example of typical ErrP wave shape (Chavarriaga et al. 2008)

In order to test the proposed framework for assisted vehicles, the ROS node for EEG signal acquisition and processing has been fed with EEG signals from the dataset described in Chavarriaga and Millan (2010), which uses a BCI protocol similar to that of Zhang et al. (2015), where the authors presented an EEG-based BCI system that decodes error-related brain activity, showing whether the driver agrees with the assistance provided by the vehicle. Such information can be used, e.g., to predict the driver’s intention and infer the driver’s immediate response to the assistance. Different studies in the literature have tried to define a suitable protocol to detect the ErrP signals generated when the subject recognizes an error during a task. In Spüler and Niethammer (2015), the authors used a game simulation to involve the participant: the task was to avoid collisions of the cursor with blocks dropping from the top of the screen. The ErrP signal was measured in the recordings when the subject recognized a collision. Similarly, the authors in Omedes et al. (2015) proposed an approach where the subject observes erroneous computer cursor actions during the execution of trajectories. The cursor started each trial moving either in the correct direction towards the goal or incorrectly towards one of the other targets. When the cursor started correctly, most of the time it continued to the correct goal, but sometimes it performed a sudden change in trajectory towards an incorrect target, and in these cases ErrP signals were recorded. Moreover, in Kumar et al. (2019), the focus was on robotic control, and the participants’ task was to passively observe the robot’s behavior. The robot used the ErrP signal as feedback to reach the desired goal. In this context, the protocol described in Chavarriaga and Millan (2010) has been considered to replicate the control of the wheelchair, with user training carried out in a simulator scenario.

2.2.2 Signal preprocessing and classification

In the following, the algorithm implemented in a ROS node for EEG signal analysis and classification is described. The raw data forwarded to the ROS node are temporally filtered, and a spatial filter is then applied to the filtered data to improve the detection of EEG potentials. Finally, the detection of the evoked potentials is performed by the BLDA (Bayesian Linear Discriminant Analysis) classifier. An off-line forward–backward bandpass filter between 1 and 10 Hz (second-order Butterworth) was applied to the raw data, and the statistical spatial filter proposed in Pires et al. (2011) was adopted. The spatial filter yields a two-channel projection of the EEG channels. Spatial filtering is a common feature extraction technique in EEG-based BCIs that simultaneously increases the signal-to-noise ratio (SNR) and reduces the dimension of the feature data. The authors in Pires et al. (2011) proposed a two-stage spatial filter. The first stage, called Fisher criterion beamformer, takes into consideration the difference between target and non-target spatio-temporal patterns; the spatial filter is thus expected to maximize the spatio-temporal differences, leading to an enhancement of specific subcomponents of the ErrPs. The second stage, called Max-SNR beamformer, maximizes the output SNR. The Fisher criterion is given by the Rayleigh quotient

where \({\mathbf {S}}_w\) is the spatial within-class matrix and \({\mathbf {S}}_b\) is the spatial between-class matrix. The optimum filter \({\mathbf {W}}_1\) is found by solving the generalized eigenvalue problem with matrices \({\mathbf {S}}_b\) and \({\mathbf {S}}_w\). Considering a spatio-temporal matrix \({\mathbf {D}}\) of dimension \([{N\times l}]\), representing an epoch of \(N\) channels with \(l\) time samples (\(l = 128\)), the first optimal spatial filter projection is obtained from

The matrices \({\mathbf {S}}_b\) and \({\mathbf {S}}_w\) are computed from

and

where \(i \in \{T, NT\}\), \(C_T\) and \(C_{NT}\) are respectively the Target (T) and Non-Target (NT) classes, and \(p_i\) is the class probability. The matrices \(\hat{{\mathbf {D}}}_i\) and \(\hat{{\mathbf {D}}}\) denote the average of the epochs in class \(C_i\) and the average of all epochs, respectively, and \({\mathbf {D}}_{i,k}\) is the \(k\)-th epoch of class \(C_i\). Denoting by \(K_i\) and \(K\) the number of epochs in class \(C_i\) and the total number of epochs, respectively, the matrices \(\hat{{\mathbf {D}}}_i\) and \(\hat{{\mathbf {D}}}\) are

and

In this paper, we considered a modification of the regularization term as proposed in Guo et al. (2006) in the first spatial filter (Fisher criterion beamformer) as follows

where \(\theta\) is the regularization parameter, which can be adjusted from training data to increase class discrimination. On the dataset reported in Chavarriaga and Millan (2010), the proposed solution showed better detection accuracy than the method proposed by Pires et al. (2011). The second optimal spatial filter projection is obtained from

The solution \({\mathbf {W}}_2\) is achieved by finding the generalized eigenvalue decomposition with the matrices \(\hat{{\mathbf {R}}}_T\) and \(\hat{{\mathbf {R}}}_{NT}\) where

and

where \({\mathbf {R}}_k={\mathbf {D}}_k{\mathbf {D}}'_k/\mathrm {tr}({\mathbf {D}}_k{\mathbf {D}}'_k)\) and the matrices \(\hat{{\mathbf {R}}}_T\) and \(\hat{{\mathbf {R}}}_{NT}\) are estimated from the average over the epochs within each class. The sizes of the target and non-target classes are highly unbalanced, and therefore a regularization of the covariance matrices can alleviate overfitting and improve class discrimination as follows

where \(\alpha \le 1\). The hyperparameters \(\alpha\) and \(\theta\) have been set by using a grid search strategy. The concatenation of the two projections \({\mathbf {v}}=[{\mathbf {y}}\,\, {\mathbf {z}}]\) represents the best virtual channels, which maximize both the Fisher and Max-SNR criteria in a suboptimal way, and these are used by the classifier.
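For reference, the quantities defined in this subsection can be collected in one place. The expressions below are a sketch that assumes the standard formulation of the two beamformers in Pires et al. (2011) and uses only the symbols introduced in the text, with \('\) denoting transposition and \(i \in \{T, NT\}\). The second projection \({\mathbf {z}}\) is assumed to act on the same epoch matrix \({\mathbf {D}}\) as the first, and the regularized forms involving \(\theta\) and \(\alpha\) are omitted, since they depend on the specific modification adopted.

\[
J({\mathbf {W}})=\frac{{\mathbf {W}}'{\mathbf {S}}_b{\mathbf {W}}}{{\mathbf {W}}'{\mathbf {S}}_w{\mathbf {W}}},\qquad {\mathbf {y}}={\mathbf {W}}_1'{\mathbf {D}}
\]

\[
{\mathbf {S}}_b=\sum _{i}p_i(\hat{{\mathbf {D}}}_i-\hat{{\mathbf {D}}})(\hat{{\mathbf {D}}}_i-\hat{{\mathbf {D}}})',\qquad {\mathbf {S}}_w=\sum _{i}\sum _{k\in C_i}({\mathbf {D}}_{i,k}-\hat{{\mathbf {D}}}_i)({\mathbf {D}}_{i,k}-\hat{{\mathbf {D}}}_i)'
\]

\[
\hat{{\mathbf {D}}}_i=\frac{1}{K_i}\sum _{k\in C_i}{\mathbf {D}}_{i,k},\qquad \hat{{\mathbf {D}}}=\frac{1}{K}\sum _{i}\sum _{k\in C_i}{\mathbf {D}}_{i,k}
\]

\[
\mathrm {SNR}({\mathbf {W}})=\frac{{\mathbf {W}}'\hat{{\mathbf {R}}}_T{\mathbf {W}}}{{\mathbf {W}}'\hat{{\mathbf {R}}}_{NT}{\mathbf {W}}},\qquad \hat{{\mathbf {R}}}_i=\frac{1}{K_i}\sum _{k\in C_i}{\mathbf {R}}_k,\qquad {\mathbf {z}}={\mathbf {W}}_2'{\mathbf {D}}
\]

In both stages the quotient is maximized by the eigenvector associated with the largest generalized eigenvalue of the corresponding matrix pair, which is why each stage reduces to a generalized eigenvalue problem.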

The detection of the evoked potentials is performed by the BLDA classifier, which was proposed in MacKay (1991). Among the proposed classifiers for BCIs, BLDA was chosen since it was efficient and fully automatic (i.e., no hyperparameters to adjust). BLDA aims to fit data \({\varvec{x}}\) using a linear function of the form:

where \(\varvec{\phi ({\varvec{x}})}\) is the feature vector, assuming that the target variable is equal to \(t=y({\varvec{x}},{\varvec{w}})+\epsilon\), where \(\epsilon\) is Gaussian noise. The objective of BLDA is to minimize the function:

where \(\gamma\) and \(\beta\) are automatically inferred from data by using a Bayesian framework.
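The linear model and the objective can be sketched as follows, assuming the usual Bayesian linear regression form of BLDA. Here \(t_k\) denotes the target value of the \(k\)-th training epoch and \(K\) the number of training epochs; \(\beta\) is taken to weight the data-fit term and \(\gamma\) the weight penalty, and this assignment of roles is an assumption rather than something stated in the text.

\[
y({\varvec{x}},{\varvec{w}})={\varvec{w}}^{T}\varvec{\phi }({\varvec{x}})
\]

\[
J({\varvec{w}})=\frac{\beta }{2}\sum _{k=1}^{K}\left( t_k-{\varvec{w}}^{T}\varvec{\phi }({\varvec{x}}_k)\right) ^2+\frac{\gamma }{2}{\varvec{w}}^{T}{\varvec{w}}
\]

For fixed \(\beta\) and \(\gamma\), minimizing \(J\) is a regularized least-squares problem with a closed-form solution; the Bayesian treatment adds the iterative estimation of the two weights from the training data.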

2.2.3 Feedback policy

When the feedback from the BCI is received, the path chosen during the path planning step must be modified in order to incorporate it in the control loop and possibly avoid the obstacle not detected by the navigation sensors. The feedback policy is described in the following.

Speed reduction: As soon as the feedback from the BCI is received, the wheelchair speed is reduced, in order to increase the time available for the human-in-the-loop correction to take place.

Virtual obstacle creation: The map is modified by creating a virtual obstacle within the map itself. The obstacle is a solid characterized by the following parameters: the position of its center within the map, its length and its width (the height of the obstacle is not considered, as the path planning algorithm operates in 2D). The center of the virtual obstacle should theoretically be placed at the center of the real obstacle detected by the user, while its area should be chosen such that the real obstacle is inscribed in the virtual one. In practice, the adopted heuristic consisted of placing a virtual cylinder with the same dimensions as the wheelchair along its trajectory (i.e., in the line of sight of the user) at a predefined distance.

Path planning iteration: As soon as the virtual obstacle is introduced into the map, the path planning step is repeated. Since the path planning step does not discriminate between real and virtual obstacles, it will modify the local trajectory planning in order to avoid the obstacle. The resulting trajectory is safe, as long as the area covered in the map by the virtual obstacle includes the one which should be avoided in reality (e.g., the real obstacle).
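The three steps can be sketched in a few lines. The speed reduction factor, the look-ahead distance, the obstacle radius and the names `VirtualObstacle` and `on_bci_trigger` are hypothetical values and identifiers chosen for illustration, not parameters reported for the actual system.

```python
import math
from dataclasses import dataclass


@dataclass
class VirtualObstacle:
    x: float       # centre in the map frame (m)
    y: float
    radius: float  # footprint radius (m); height is ignored in 2-D planning


def on_bci_trigger(pose, speed, slow_factor=0.5, lookahead=1.5, radius=0.6):
    """Apply the feedback policy to a trigger received at the given pose.

    pose  -- (x, y, yaw) of the wheelchair in the map frame
    speed -- current linear speed (m/s)
    Returns the reduced speed and the virtual obstacle to add to the map.
    """
    x, y, yaw = pose
    # Step 1: slow down to buy time for the correction.
    reduced_speed = speed * slow_factor
    # Step 2: place the obstacle on the line of sight, a fixed distance ahead.
    obstacle = VirtualObstacle(x + lookahead * math.cos(yaw),
                               y + lookahead * math.sin(yaw),
                               radius)
    # Step 3 (replanning) is left to the planner, which treats the virtual
    # obstacle like any other occupied region.
    return reduced_speed, obstacle
```

Keeping the policy free of planner details reflects the point made in the text: the planner does not need to know whether an obstacle is real or virtual.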

The flowchart of the feedback policy is described in Fig. 3.

Please note that the first step of the feedback policy is to reduce the wheelchair speed as soon as the classifier provides a viable output from the EEG signals: this operation requires, on average, a time of the order of \(10^{-3} \text {s}\), which adds to the time between the event and the generation of the corresponding EEG signal. The reduced speed is chosen as a trade-off between the time needed to travel to the obstacle and the time required by the navigation stack to modify the trajectory, the latter depending on the hardware mounted on the wheelchair. As such, the proposed framework can theoretically cope with close obstacles; the actual minimum distance depends on the available technology and would require an experimental trial to be determined.
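A back-of-the-envelope version of this trade-off is sketched below. It assumes the 600 ms ERP window mentioned in Sect. 2.2.1 as the worst-case latency and the \(10^{-3}\) s processing time quoted above; the speeds and obstacle distance in the usage note are hypothetical, and the time needed to decelerate is ignored.

```python
def distance_before_slowdown(speed, erp_latency=0.6, processing_time=1e-3):
    """Distance (m) travelled between the event and the speed reduction."""
    return speed * (erp_latency + processing_time)


def replanning_budget(obstacle_distance, speed, reduced_speed,
                      erp_latency=0.6, processing_time=1e-3):
    """Time (s) left for replanning once the wheelchair has slowed down."""
    remaining = obstacle_distance - distance_before_slowdown(
        speed, erp_latency, processing_time)
    return max(0.0, remaining) / reduced_speed
```

For example, at an assumed 0.5 m/s the wheelchair covers about 0.30 m before slowing down; with an obstacle 1.5 m away and a reduced speed of 0.25 m/s, roughly 4.8 s remain for the navigation stack to modify the trajectory.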

2.3 Navigation—EEG feedback integration via ROS nodes

The integration between the smart wheelchair navigation and the EEG feedback was realized by creating dedicated ROS nodes. The wheelchair software is composed of ROS packages and nodes, which acquire data from the sensor sets, process the information and command the wheels accordingly. The ROS navigation stack takes information from odometry and sensor streams and outputs velocity commands to drive the smart wheelchair. As previously stated, the corrective action on the wheelchair trajectory is obtained by creating imaginary (virtual) obstacles on the map layer. The navigation stack then dynamically changes the cost map by using sensor data and point clouds. In particular, a new software package has been created that provides a link between the BCI and the navigation task.

The implemented package is able to:

- subscribe and listen continuously to the robot position;
- transform the robot pose from the robot frame to the map frame;
- subscribe/listen for the trigger generated by the BCI;
- create the obstacle geometry and position it on the map;
- convert it to ROS point clouds.

Then, the point clouds are published to the ROS navigation stack, where the local and global cost map parameters are modified. The implementation of the node architecture is represented and detailed in Fig. 4.
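The geometric part of these steps, namely the frame transformation and the generation of the obstacle points, can be sketched without any ROS dependency. The function names, the disc-shaped footprint and the numerical values are assumptions made for illustration; in a ROS node the resulting tuples would still have to be packed into a point-cloud message and published.

```python
import math


def robot_to_map(point, robot_pose):
    """Express a point given in the robot frame in the map frame (2-D)."""
    px, py = point
    x, y, yaw = robot_pose
    return (x + px * math.cos(yaw) - py * math.sin(yaw),
            y + px * math.sin(yaw) + py * math.cos(yaw))


def disc_points(center, radius, resolution=0.05):
    """Sample a filled disc on a regular grid; returns a list of (x, y, z)."""
    cx, cy = center
    steps = int(math.ceil(radius / resolution))
    points = []
    for i in range(-steps, steps + 1):
        for j in range(-steps, steps + 1):
            dx, dy = i * resolution, j * resolution
            if dx * dx + dy * dy <= radius * radius:
                points.append((cx + dx, cy + dy, 0.0))
    return points


def obstacle_cloud(robot_pose, distance=1.5, radius=0.6, resolution=0.05):
    """Points of a virtual obstacle placed `distance` metres ahead of the robot."""
    center = robot_to_map((distance, 0.0), robot_pose)
    return disc_points(center, radius, resolution)
```

Sampling the disc at roughly the cost map resolution is a design choice of this sketch: it marks every cell covered by the virtual obstacle as occupied, so the planner cannot route through its interior.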

3 Preliminary setup and results

The proposed human-in-the-loop architecture, as described in Sect. 2, has been applied to a specific setup and some preliminary results are collected. In this Section, the setup of the wheelchair as well as the dataset used by the classifier for generating the BCI trigger are first reported. Then, quantitative results regarding the classification performances, as well as qualitative results showing the feedback policy, are presented.

3.1 Setup

A scheme of the system setup is reported in Fig. 5 in order to show how the different sensors and components of the system are connected to each other. Please note that the proposed approach can be generalized to other hardware as well (i.e., different BCI systems, mobile robots or robotic arms).

3.1.1 Smart wheelchair

The mobile robot used for this study is based on the Quickie Salsa \(R^2\), an electric powered wheelchair produced by Sunrise Medical. Its compact size and low seat-to-floor height (starting from 42 cm) give it flexibility and grant it easy access under tables, allowing good accessibility in an indoor scenario. The mechanical system is composed of two rear driving wheels and two front caster wheels; the latter are not actuated, but they are able to rotate around a vertical axis. The wheelchair is equipped with an internal control module, the OMNI interface device, manufactured by PG Drives Technology. This controller can receive input from different types of SIDs (Standard Input Devices) and convert it to specific output commands compatible with the R-net control system. In addition, an Arduino MEGA 2560 microcontroller, a Microstrain 3DM-GX3-25 inertial measurement unit, two Sicod F3-1200-824-BZ-K-CV-01 encoders, a Hokuyo URG-04LX laser scanner and a Logitech C270 webcam complete the smart wheelchair equipment. The encoders, the inertial measurement unit and the OMNI are connected to the microcontroller, while the microcontroller itself and the other sensors are connected via USB to a computer running ROS. Signals from the Sicod and Microstrain devices are converted by the Arduino and sent to the ROS localization module. The information provided by the Hokuyo laser scanner is used by the mapping module and by the path planning module for obstacle avoidance. Once a waypoint is chosen by the user, the path planning module creates the predefined path: this can then be modified by a trigger coming from the BCI signal, as described before. Participants can receive continuous visual feedback on the trajectory planned by the path planning system.

3.1.2 Dataset description

The dataset described in Chavarriaga and Millan (2010) was used to carry out the preliminary tests of the proposed framework for assisted vehicles. Six subjects (1 female and 5 male, age 27.83 ± 2.23 years, mean ± standard deviation) received training before the acquisition and performed two recording sessions (session 1 and session 2) separated by several weeks. Each session consisted of 10 blocks of 3 min each; each block was composed of approximately 50 trials, for a total of about 500 trials per session, and each trial was about 2000 ms long. In each trial, the user sent no command to the agent and only assessed whether an autonomous agent performed the task properly, the task being a cursor reaching a target on a computer screen. Specifically, at the beginning of each trial the user was asked to focus on the center of the screen, and during the trial to follow the movement of the cursor, knowing the goal of the task. ErrPs were thus elicited by monitoring the behavior of the agent. The dataset is composed of EEG stimulus-locked recordings elicited by a moving cursor (green square) and a randomly positioned target (red square) on the screen. Trials in which the agent's cursor did not reach the target position were considered errors, while trials in which the cursor reached the target position were considered correct. After correct trials, the target was moved to a new random position. The agent error probability was set to 0.20. All trials were windowed (0–500 ms). In this paper, Non-Target (NT) trials are those in which the cursor successfully reaches the final target, whereas Target (T) trials are those in which the cursor does not reach the target position, i.e., those in which an ErrP is evoked.

3.2 Results

Quantitative results on the classification performance and qualitative results showing the feedback policy are presented in the following sections.

3.2.1 BCI results

As described in Sect. 3.1.2, the dataset in Chavarriaga and Millan (2010) consists of two recording sessions separated by several weeks. The single-trial classification of ErrPs was assessed by using the first session (about 500 trials for each subject) to train the spatial filters and the BLDA classifier, and the second session (about 500 trials for each subject) to test the algorithm. During the acquisition, 64 electrodes were placed according to the standard International 10/20 system and EEG data were recorded with a Biosemi ActiveTwo system at a sampling rate of 512 Hz. The data were downsampled to 256 Hz and a subset of eight electrodes, i.e., Fz, Cz, P3, Pz, P4, PO7, PO8 and Oz, was considered for the analysis. An off-line forward–backward bandpass filter between 1 and 10 Hz (second-order Butterworth) and the spatial filter described in Sect. 2.2.2 were applied. The ErrP morphology of the considered dataset is reported in both Chavarriaga and Millan (2010) and Ferracuti et al. (2020). In those works, the authors display the grand average of the recorded signal and the scalp localization of the neural activation related to each condition of the task, from channels Cz and FCz, which are the channels most involved in ErrP detection, also considering different spatial filters. The main findings are that the grand average ErrP morphology obtained in Chavarriaga and Millan (2010) is consistent with that obtained in Ferracuti et al. (2020), and that the ErrP waveform shows a small positive peak near 200 ms after the feedback is delivered, followed by a negative deflection around 260 ms and another positive peak around 300 ms. Finally, strong ErrP stability between session 1 and session 2 was observed for all the methods tested, an aspect that is essential for BCI applications.
Table 1 shows the classification accuracy and Area Under the Curve (AUC) on the training data (i.e., first session) for the six subjects of the dataset in Chavarriaga and Millan (2010), whereas Table 2 shows the classification accuracy and AUC on the testing data (i.e., second session). The reported results refer to the case of \(\theta =0.4\) and \(\alpha =0.1\) for the spatial filter, since this setting gives the best results in terms of AUC.
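
The temporal preprocessing described in this section (channel selection, downsampling from 512 to 256 Hz, 1–10 Hz forward–backward Butterworth filtering and 0–500 ms windowing) can be sketched in Python with NumPy and SciPy as follows. This is only an illustrative sketch and not the code used in the study: the function names `preprocess` and `epoch`, the array layout and the synthetic input are assumptions, the spatial filter of Sect. 2.2.2 is not included, and "second order" is interpreted here as the order argument passed to the filter design routine.

```python
import numpy as np
from scipy.signal import butter, filtfilt, resample_poly

FS_RAW = 512  # Hz, acquisition rate reported in the text
FS = 256      # Hz, rate after downsampling
CHANNELS = ["Fz", "Cz", "P3", "Pz", "P4", "PO7", "PO8", "Oz"]


def preprocess(raw, ch_names):
    """Select the eight channels, downsample and bandpass filter.

    raw: array of shape (n_channels, n_samples) sampled at FS_RAW.
    ch_names: list of channel labels, one per row of raw (assumed layout).
    """
    picks = [ch_names.index(ch) for ch in CHANNELS]
    x = raw[picks]
    # Polyphase resampling applies an anti-aliasing filter before decimation.
    x = resample_poly(x, up=1, down=FS_RAW // FS, axis=1)
    # Assumption: order argument 2; for a bandpass design SciPy returns
    # a filter of order 2 * N, and filtfilt runs it forward and backward.
    b, a = butter(2, [1.0, 10.0], btype="bandpass", fs=FS)
    return filtfilt(b, a, x, axis=1)


def epoch(x, onsets, t_min=0.0, t_max=0.5):
    """Cut stimulus-locked windows (0-500 ms by default).

    onsets: sample indices of the feedback events at rate FS.
    Returns an array of shape (n_trials, n_channels, n_window_samples).
    """
    start = int(round(t_min * FS))
    stop = int(round(t_max * FS))
    return np.stack([x[:, o + start:o + stop] for o in onsets])


# Synthetic 10 s recording with hypothetical channel labels and event onsets.
rng = np.random.default_rng(0)
names = ["Fp1", "Fz", "Cz", "P3", "Pz", "P4", "PO7", "PO8", "Oz", "FCz"]
raw = rng.standard_normal((len(names), 10 * FS_RAW))
filtered = preprocess(raw, names)
trials = epoch(filtered, onsets=[256, 512, 1024])
print(filtered.shape, trials.shape)
```

At 256 Hz, the 0–500 ms window corresponds to 128 samples per channel, so each trial is represented by an 8 × 128 array before spatial filtering.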

Finally, Table 3 shows the overall performances in terms of accuracy, sensitivity, specificity and F1-score.
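
For reference, the four metrics can be computed from the entries of a binary confusion matrix as in the following Python sketch, where Target (ErrP) trials are taken as the positive class. The function name `binary_metrics` and the counts in the usage line are hypothetical and chosen only for illustration; they are not the results reported in Table 3.

```python
def binary_metrics(tp, fn, tn, fp):
    """Accuracy, sensitivity, specificity and F1-score from confusion counts.

    tp: Target (ErrP) trials classified as Target
    fn: Target trials classified as Non-Target
    tn: Non-Target trials classified as Non-Target
    fp: Non-Target trials classified as Target
    """
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    sensitivity = tp / (tp + fn)   # true positive rate (recall)
    specificity = tn / (tn + fp)   # true negative rate
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
    }


# Hypothetical counts for a session of 500 trials with 100 Target trials.
print(binary_metrics(tp=80, fn=20, tn=360, fp=40))
```

Because Target trials are the minority class (the agent error probability is 0.20), accuracy alone can be misleading, which is why sensitivity, specificity and F1-score are reported alongside it.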

3.2.2 Simulation results

The system has been preliminarily tested by simulating the smart wheelchair detailed in Sect. 3.1.1, together with its sensor set, in the open-source 3D robotic simulator Gazebo, and by using the output of the classifier described in Sect. 2.2.2 as a trigger for the human-in-the-loop feedback. Note that the nodes and topics used for testing the wheelchair simulated in Gazebo are the same as those developed for the real system. The ErrP signals were correctly processed and recognized by the classifier, and a message was written to a ROS node that interacts with the navigation system of the smart wheelchair according to the policy described in Fig. 3. In detail, when the ErrP signal was triggered, a cylinder of the same size as the wheelchair was virtually created within the map available for path planning, and the path planning step was repeated to validate the obstacle avoidance capabilities. The resulting trajectories, before and after the introduction of the virtual object triggered by the ErrP signals, are reported in Fig. 6.
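
The insertion of the virtual obstacle can be illustrated with a minimal Python sketch that marks the footprint of a cylinder as occupied in a 2D occupancy grid. It is not the ROS node developed for the framework: the function name `add_virtual_cylinder`, the grid size, the resolution, the obstacle position and the 0.5 m radius are all assumptions made for illustration, and only the value 100 for occupied cells follows the convention of the ROS `nav_msgs/OccupancyGrid` message.

```python
import numpy as np

OCCUPIED = 100  # occupied-cell value in the nav_msgs/OccupancyGrid convention


def add_virtual_cylinder(grid, resolution, origin_xy, center_xy, radius):
    """Return a copy of grid with a disc of the given radius marked occupied.

    grid: 2D array indexed as [row (y), column (x)].
    resolution: cell size in metres.
    origin_xy: world coordinates of the corner of cell (0, 0).
    center_xy: world coordinates of the cylinder axis.
    radius: cylinder radius in metres.
    """
    out = grid.copy()
    rows, cols = np.indices(grid.shape)
    # World coordinates of every cell centre.
    x = origin_xy[0] + (cols + 0.5) * resolution
    y = origin_xy[1] + (rows + 0.5) * resolution
    inside = (x - center_xy[0]) ** 2 + (y - center_xy[1]) ** 2 <= radius ** 2
    out[inside] = OCCUPIED
    return out


# Hypothetical 4 m x 4 m free map at 0.1 m resolution, obstacle at its centre.
free_map = np.zeros((40, 40), dtype=np.int8)
updated = add_virtual_cylinder(free_map, 0.1, (0.0, 0.0), (2.0, 2.0), 0.5)
print(int((updated == OCCUPIED).sum()), "cells marked as occupied")
```

After the grid has been updated, the planner is run again on the new map, so that the replanned trajectory bends around the marked region.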

4 Conclusions

The study investigates the use of EEG signals in a closed-loop system, proposing a human-in-the-loop approach for path planning correction of assistive mobile robots. In particular, this study supports the possibility of real-time feedback between the smart wheelchair and the BCI acquisition system, allowing the user to actively participate in the control of the planned trajectory and to avoid factors in the environment that may negatively affect user safety. This kind of interaction promotes user intervention in robot collaborative tasks: the user not only chooses where to go or which object to take, but can also monitor whether the task is correctly carried out and provide feedback accordingly. This approach could be a desirable solution for a user's everyday life, especially for users who have limited physical capabilities to control the wheelchair. The presence of the user in the closed loop promotes her/his involvement in the human-robot interaction, allowing direct participation in and control of the task execution. Overall, the current study suggests that the adoption of the proposed human-in-the-loop approach in autonomous robot development is a fruitful research direction, in which human intervention can drastically improve human safety and environmental security.

So far, only the BCI trigger has been developed and tested in a ROS simulated scenario, but the whole system architecture has been developed, including the ROS node that interfaces the BCI system with the smart wheelchair, as described in Sect. 2. Even if the results are at a preliminary stage, and in simulation only, the system is able to recognize the EEG signals and send feedback to the wheelchair, which can be used to modify its path. Future work includes the following aspects:

- Perform a trial with people in order to validate the classification performance on EEG signals acquired via BCI while participants watch videos realized using the wheelchair simulated in Gazebo. In detail, by using simulated obstacles in a first-person view of the wheelchair within the 3D simulator, it could be possible to create a visual experience very close to the real one;
- Define the policy to recalculate the path and avoid obstacles when the trigger is activated. The major problem is currently that the distance between the wheelchair and the obstacle, at the moment of detection, is not known. As such, we are investigating two possible solutions:
  - perform a set of trials, in order to obtain an average estimate of the distance at which a ground obstacle, whose size is smaller than the wheelchair, is typically detected by the user;
  - do not consider the distance between obstacle and wheelchair, and create a long virtual obstacle along the line of sight of the wheelchair to modify the trajectory iteratively;
- Perform a trial with people in order to experimentally validate the classification performance on EEG signals acquired via BCI by using a real wheelchair, with the aim of reducing the time required to recognize the object along its trajectory;
- The last step will be the experimental test of the whole human-in-the-loop navigation architecture with different subjects and different obstacles.

References

Alzahab NA, Apollonio L, Di Iorio A, Alshalak M, Iarlori S, Ferracuti F, Monteriù A, Porcaro C (2021) Hybrid deep learning (hDL)-based brain-computer interface (BCI) systems: a systematic review. Brain Sci 11(1)

Behncke J, Schirrmeister RT, Burgard W, Ball T (2018) The signature of robot action success in EEG signals of a human observer: Decoding and visualization using deep convolutional neural networks. In: 2018 6th International conference on brain-computer interface (BCI), pp 1–6

Bonci A, Longhi S, Monteriù A, Vaccarini M (2005) Navigation system for a smart wheelchair. J Zhejiang Univ Sci A 6:110–117

Cavanini L, Cimini G, Ferracuti F, Freddi A, Ippoliti G, Monteriù A, Verdini F (2017) A QR-code localization system for mobile robots: application to smart wheelchairs. In: 2017 European conference on mobile robots (ECMR), pp 1–6

Chavarriaga R, Millan JDR (2010) Learning from EEG error-related potentials in noninvasive brain-computer interfaces. IEEE Trans Neural Syst Rehabil Eng 18(4):381–388

Chavarriaga R, Ferrez PW, Millán JdR (2008) To err is human: learning from error potentials in brain-computer interfaces. In: Wang R, Shen E, Gu F (eds) Advances in cognitive neurodynamics ICCN 2007. Springer, Dordrecht, pp 777–782

Ciabattoni L, Ferracuti F, Freddi A, Iarlori S, Longhi S, Monteriù A (2021) Human-in-the-loop approach to safe navigation of a smart wheelchair via brain computer interface. In: Monteriù A, Freddi A, Longhi S (eds) Ambient assisted living. Springer, Cham, pp 197–209

Falkenstein M, Hoormann J, Christ S, Hohnsbein J (2000) ERP components on reaction errors and their functional significance: a tutorial. Biol Psychol 51:87–107

Ferracuti F, Freddi A, Iarlori S, Longhi S, Peretti P (2013) Auditory paradigm for a P300 BCI system using spatial hearing. In: 2013 IEEE/RSJ international conference on intelligent robots and systems, pp 871–876

Ferracuti F, Casadei V, Marcantoni I, Iarlori S, Burattini L, Monteriù A, Porcaro C (2020) A functional source separation algorithm to enhance error-related potentials monitoring in noninvasive brain-computer interface. Comput Methods Progr Biomed 191:105419

Foresi G, Freddi A, Iarlori S, Monteriù A, Ortenzi D, Proietti Pagnotta D (2019) Human-robot cooperation via brain computer interface in assistive scenario. In: Ambient assisted living: Italian Forum 2017. Lecture notes in electrical engineering, vol 540, pp 115–131

Gougeh RA, Rezaii TY, Farzamnia A (2021) An automatic driver assistant based on intention detecting using EEG signal. In: Md Zain Z, Ahmad H, Pebrianti D, Mustafa M, Abdullah NRH, Samad R, Mat Noh M (eds) Proceedings of the 11th national technical seminar on unmanned system technology 2019, Springer Singapore, Singapore, pp 617–627

Guo Y, Hastie T, Tibshirani R (2006) Regularized linear discriminant analysis and its application in microarrays. Biostatistics 8(1):86–100

Haghani M, Bliemer MCJ, Farooq B, Kim I, Li Z, Oh C, Shahhoseini Z, MacDougall H (2020) Applications of brain imaging methods in driving behaviour research. arXiv:2007.09341

Haufe S, Treder MS, Gugler MF, Sagebaum M, Curio G, Blankertz B (2011) EEG potentials predict upcoming emergency brakings during simulated driving. J Neural Eng 8(5):056001

Haufe S, Kim J, Kim IH, Sonnleitner A, Schrauf M, Curio G, Blankertz B (2014) Electrophysiology-based detection of emergency braking intention in real-world driving. J Neural Eng 11(5):056011

Hernández LG, Mozos OM, Ferrández JM, Antelis JM (2018) EEG-based detection of braking intention under different car driving conditions. Front Neuroinform 12:29

Ippoliti G, Longhi S, Monteriù A (2005) Model-based sensor fault detection system for a smart wheelchair. In: IFAC proceedings, vol 38(1), 16th IFAC world congress, pp 269–274

Iturrate I, Antelis JM, Kubler A, Minguez J (2009) A noninvasive brain-actuated wheelchair based on a p300 neurophysiological protocol and automated navigation. IEEE Trans Rob 25(3):614–627

Iturrate I, Montesano L, Minguez J (2010) Single trial recognition of error-related potentials during observation of robot operation. In: Annual international conference of the IEEE engineering in medicine and biology, pp 4181–4184

Iturrate I, Chavarriaga R, Montesano L, Minguez J, Millan JdR (2012) Latency correction of error potentials between different experiments reduces calibration time for single-trial classification. In: Annual international conference of the IEEE engineering in medicine and biology society, pp 3288–3291

Joch M, Hegele M, Maurer H, Müller H, Maurer LK (2017) Brain negativity as an indicator of predictive error processing: the contribution of visual action effect monitoring. J Neurophysiol 118(1):486–495

Khaliliardali Z, Chavarriaga R, Gheorghe LA, Millán JdR (2015) Action prediction based on anticipatory brain potentials during simulated driving. J Neural Eng 12(6)

Khaliliardali Z, Chavarriaga R, Zhang H, Gheorghe LA, Perdikis S, Millán JDR (2019) Real-time detection of driver’s movement intention in response to traffic lights. bioRxiv

Kim IH, Kim JW, Haufe S, Lee SW (2014) Detection of braking intention in diverse situations during simulated driving based on EEG feature combination. J Neural Eng 12(1):016001

Kumar A, Gao L, Pirogova E, Fang Q (2019) A review of error-related potential-based brain-computer interfaces for motor impaired people. IEEE Access 7:142451–142466

Lee S, Kim J, Lee S (2017) Detecting driver’s braking intention using recurrent convolutional neural networks based EEG analysis. In: 2017 4th IAPR Asian conference on pattern recognition (ACPR), pp 840–845

MacKay DJ (1991) Bayesian interpolation. Neural Comput 4:415–447

Mao X, Li M, Li W, Niu L, Xian B, Zeng M, Chen G (2017) Review article progress in EEG-based brain robot interaction systems. Comput Intell Neurosci 2017:1–25

Nguyen TH, Chung WY (2019) Detection of driver braking intention using EEG signals during simulated driving. Sensors 19(13)

Nordlund P, Gustafsson F (2001) Sequential Monte Carlo filtering techniques applied to integrated navigation systems. In: Proceedings of the 2001 American control conference. (Cat. No.01CH37148), vol 6, pp 4375–4380

Ogren P, Leonard NE (2005) A convergent dynamic window approach to obstacle avoidance. IEEE Trans Rob 21(2):188–195

Omedes J, Iturrate I, Chavarriaga R, Montesano L (2015) Asynchronous decoding of error potentials during the monitoring of a reaching task. In: 2015 IEEE international conference on systems, man, and cybernetics, pp 3116–3121

Perrin X, Chavarriaga R, Colas F, Siegwart R, Millan JdR (2010) Brain-coupled interaction for semi-autonomous navigation of an assistive robot. Robot Autonom Syst 58:1246–1255

Pires G, Nunes U, Castelo-Branco M (2011) Statistical spatial filtering for a P300-based BCI: tests in able-bodied, and patients with cerebral palsy and amyotrophic lateral sclerosis. J Neurosci Methods 195(2):270–281

Salazar-Gomez AF, DelPreto J, Gil S, Guenther FH, Rus D (2017) Correcting robot mistakes in real time using EEG signals. In: 2017 IEEE international conference on robotics and automation (ICRA), pp 6570–6577

Siciliano B, Khatib O (2016) Springer handbook of robotics. Springer, Berlin

Spüler M, Niethammer C (2015) Error-related potentials during continuous feedback: using EEG to detect errors of different type and severity. Front Hum Neurosci 9

Teng T, Bi L (2017) A novel EEG-based detection method of emergency situations for assistive vehicles. In: 2017 Seventh international conference on information science and technology (ICIST), pp 335–339

Teng T, Bi L, Fan X (2015) Using EEG to recognize emergency situations for brain-controlled vehicles. In: 2015 IEEE intelligent vehicles symposium (IV), pp 1305–1309

Teng T, Bi L, Liu Y (2018) EEG-based detection of driver emergency braking intention for brain-controlled vehicles. IEEE Trans Intell Transp Syst 19(6):1766–1773

Vecchiato G, Vecchio MD, Ascari L, Antopolskiy S, Deon F, Kubin L, Ambeck-Madsen J, Rizzolatti G, Avanzini P (2019) Electroencephalographic time-frequency patterns of braking and acceleration movement preparation in car driving simulation. Brain Res 1716:16–26

Wan EA, Van Der Merwe R (2000) The unscented Kalman filter for nonlinear estimation. In: Proceedings of the IEEE 2000 adaptive systems for signal processing, communications, and control symposium (Cat. No. 00EX373), IEEE, pp 153–158

Zhang H, Chavarriaga R, Gheorghe L, Millán J (2013) Inferring driver’s turning direction through detection of error related brain activity. In: 2013 35th Annual international conference of the IEEE engineering in medicine and biology society (EMBC), pp 2196–2199

Zhang H, Chavarriaga R, Khaliliardali Z, Gheorghe L, Iturrate I, Millán JdR (2015) EEG-based decoding of error-related brain activity in a real-world driving task. J Neural Eng 12(6):066028

Acknowledgements

The authors would like to thank Daniele Polucci for his support in the data analysis, and Omer Karameldeen Ibrahim Mohamed for his support in the ROS programming.

Funding

Open access funding provided by Università Politecnica delle Marche within the CRUI-CARE Agreement.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ferracuti, F., Freddi, A., Iarlori, S. et al. Augmenting robot intelligence via EEG signals to avoid trajectory planning mistakes of a smart wheelchair. J Ambient Intell Human Comput 14, 223–235 (2023). https://doi.org/10.1007/s12652-021-03286-7
