Abstract

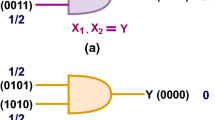

Recently, Deep Neural Networks (DNNs) have played a major role in revolutionizing Artificial Intelligence (AI). These methods have made unprecedented progress in several recognition and detection tasks, achieving accuracy close to, or even better than, human perception. However, their complex architectures require high computational resources, which restricts their use in embedded devices with limited area and power. This paper considers Stochastic Computing (SC) as a novel computing paradigm that provides a significantly lower hardware footprint with high scalability. SC operates on random bit-streams in which a signal value is encoded as the probability that a bit in the stream is one. With this representation, SC allows basic arithmetic operations, including addition and multiplication, to be implemented with simple logic. Hence, SC has the potential to implement parallel and scalable DNNs with a reduced hardware footprint. A modified SC neuron architecture is proposed for the training and implementation of DNNs. Our experimental results show that classification accuracy on the MNIST dataset improves by 9.47% compared to the conventional binary computing approach.
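
The bit-stream encoding described in the abstract can be illustrated with a small software simulation. The sketch below is not the paper's modified neuron architecture. It assumes the common unipolar SC format (values in [0, 1]) and the textbook gate-level constructions: an AND gate for multiplication and a multiplexer for scaled addition. All function names and the stream length are hypothetical choices made for illustration.

```python
import random

def to_stream(p, n, rng):
    """Encode a value p in [0, 1] as an n-bit unipolar stochastic bit-stream."""
    return [1 if rng.random() < p else 0 for _ in range(n)]

def value(stream):
    """Decode a bit-stream: the fraction of ones estimates the encoded value."""
    return sum(stream) / len(stream)

def sc_multiply(a, b):
    """Unipolar multiplication: bitwise AND of two independent streams."""
    return [x & y for x, y in zip(a, b)]

def sc_scaled_add(a, b, select):
    """Scaled addition: a 2-to-1 multiplexer whose select stream encodes 0.5
    passes a bit from a or b, so the output encodes (a + b) / 2."""
    return [x if s else y for x, y, s in zip(a, b, select)]

rng = random.Random(0)   # fixed seed so the run is repeatable
n = 4096                 # assumed stream length; longer streams reduce error

a = to_stream(0.5, n, rng)
b = to_stream(0.4, n, rng)
sel = to_stream(0.5, n, rng)

product = value(sc_multiply(a, b))          # estimates 0.5 * 0.4
half_sum = value(sc_scaled_add(a, b, sel))  # estimates (0.5 + 0.4) / 2

print(f"product  ~ {product:.3f}")
print(f"half sum ~ {half_sum:.3f}")
```

The results are estimates rather than exact values: their error shrinks as the stream length grows, which is the accuracy versus latency trade-off inherent to SC. The multiplexer output is scaled by one half so that the sum stays within the representable range [0, 1].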

Cite this article

Bodiwala, S., Nanavati, N. An efficient stochastic computing based deep neural network accelerator with optimized activation functions. Int. j. inf. tecnol. 13, 1179–1192 (2021). https://doi.org/10.1007/s41870-021-00682-2

DOI: https://doi.org/10.1007/s41870-021-00682-2